Use a queue that acts as a buffer between a task and a service it invokes in order to smooth intermittent heavy loads that can cause the service to fail or the task to time out. This can help to minimize the impact of peaks in demand on availability and responsiveness for both the task and the service.

Context and problem

Many solutions in the cloud involve running tasks that invoke services. In this environment, if a service is subjected to intermittent heavy loads, it can cause performance or reliability issues.

A service could be part of the same solution as the tasks that use it, or it could be a third-party service providing access to frequently used resources such as a cache or a storage service. If the same service is used by a number of tasks running concurrently, it can be difficult to predict the volume of requests to the service at any time.

A service might experience peaks in demand that cause it to overload and be unable to respond to requests in a timely manner. Flooding a service with a large number of concurrent requests can also result in the service failing if it's unable to handle the contention these requests cause.

Solution

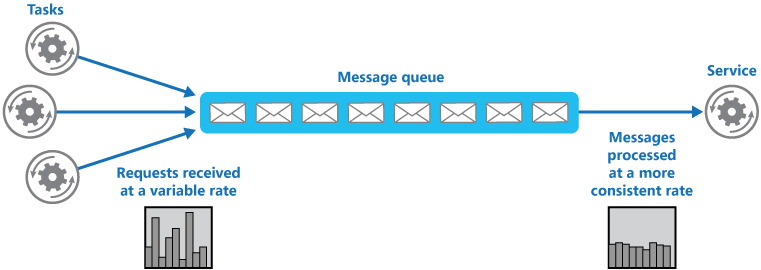

Refactor the solution and introduce a queue between the task and the service. The task and the service run asynchronously. The task posts a message containing the data required by the service to a queue. The queue acts as a buffer, storing the message until it's retrieved by the service. The service retrieves the messages from the queue and processes them. Requests from a number of tasks, which can be generated at a highly variable rate, can be passed to the service through the same message queue. The figure above shows a queue being used to level the load on a service.
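The following Python sketch models this flow with the standard library only. It's a minimal, in-process illustration under stated assumptions: `queue.Queue` stands in for a durable message queue, threads stand in for the tasks and the service, and the names `task`, `service`, `run`, and `STOP` are hypothetical. Several tasks post messages at an irregular rate, and a single service thread retrieves and processes them in order.

```python
import queue
import random
import threading
import time

STOP = object()  # hypothetical sentinel telling the service to shut down


def task(messages, task_id, count, rng):
    """Post `count` messages at an irregular rate; the task never calls the service directly."""
    for seq in range(count):
        messages.put({"task": task_id, "seq": seq})
        time.sleep(rng.random() / 1000)  # simulate a variable request rate


def service(messages, processed):
    """Retrieve messages one at a time and process them at the service's own pace."""
    while True:
        msg = messages.get()
        if msg is STOP:
            return
        processed.append((msg["task"], msg["seq"]))  # stand-in for real work


def run(num_tasks=3, per_task=50):
    messages = queue.Queue()  # in-process stand-in for a durable message queue
    processed = []
    consumer = threading.Thread(target=service, args=(messages, processed))
    consumer.start()
    producers = [
        threading.Thread(target=task, args=(messages, t, per_task, random.Random(t)))
        for t in range(num_tasks)
    ]
    for p in producers:
        p.start()
    for p in producers:
        p.join()
    messages.put(STOP)  # enqueued after every task message, so it is read last
    consumer.join()
    return processed
```

Because the tasks only call `put`, their progress doesn't depend on how quickly the service drains the queue, and each task's messages still reach the service in the order they were posted.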

The queue decouples the tasks from the service, and the service can handle the messages at its own pace regardless of the volume of requests from concurrent tasks. Additionally, there's no delay to a task if the service isn't available at the time it posts a message to the queue.
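To make the decoupling concrete, this sketch has tasks post requests while no service is running, and the service works through the backlog later. It assumes an unbounded in-memory `queue.Queue` as a stand-in for a real queue, and `pending`, `post_request`, and `drain` are hypothetical names.

```python
import queue

pending = queue.Queue()  # unbounded, so put() never blocks


def post_request(payload):
    """Task side: enqueue and return immediately, whether or not the service is running."""
    pending.put(payload)


def drain(handler):
    """Service side, once it becomes available: work through the backlog in arrival order."""
    handled = 0
    while True:
        try:
            item = pending.get_nowait()
        except queue.Empty:
            return handled
        handler(item)
        handled += 1
```

With a bounded queue (for example `queue.Queue(maxsize=...)`) or a broker that enforces capacity limits, posting can block or fail once the queue is full, so queue capacity also needs to be sized for the expected peaks.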

This pattern provides the following benefits:

It can help to maximize availability because delays arising in services won't have an immediate and direct impact on the application, which can continue to post messages to the queue even when the service isn't available or isn't currently processing messages.

It can help to maximize scalability because both the number of queues and the number of services can be varied to meet demand.

It can help to control costs because the number of service instances deployed only has to be adequate to meet the average load rather than the peak load.

Some services implement throttling when demand reaches a threshold beyond which the system could fail. Throttling can reduce the functionality available. You can implement load leveling with these services to ensure that this threshold isn't reached.
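The cost and throttling points can be checked with a small discrete-time model. The hypothetical `level_load` function below assumes a service that handles at most `capacity` messages per tick and a queue that holds everything else. The arrival numbers in the usage note are illustrative, not measurements.

```python
def level_load(arrivals, capacity):
    """Simulate a queue in front of a service that handles at most `capacity` messages per tick.

    arrivals[i] is the number of requests posted during tick i.
    Returns (messages processed per tick, largest backlog seen, backlog left at the end).
    """
    backlog = 0
    peak_backlog = 0
    processed = []
    for arrived in arrivals:
        backlog += arrived  # the queue absorbs the burst
        peak_backlog = max(peak_backlog, backlog)
        done = min(backlog, capacity)  # the service never exceeds its capacity
        backlog -= done
        processed.append(done)
    return processed, peak_backlog, backlog
```

For arrivals of `[8, 0, 0, 0, 8, 0, 0, 0]` (a peak of 8 per tick, an average of 2 per tick), `level_load(arrivals, 2)` returns `([2, 2, 2, 2, 2, 2, 2, 2], 8, 0)`. Capacity sized for the average load clears the backlog, and the service never sees more than 2 requests per tick, which keeps it below any throttling threshold above that rate. If capacity falls below the long-run average, the backlog keeps growing. With a capacity of 1, the same input leaves 8 messages queued.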

Issues and considerations

Consider the following points when deciding how to implement this pattern:

It's necessary to implement application logic that controls the rate at which services handle messages to avoid overwhelming the target resource. Avoid passing spikes in demand to the next stage of the system. Test the system under load to ensure that it provides the required leveling, and adjust the number of queues and the number of service instances that handle messages to achieve this.
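A token bucket is one common way to implement this rate-control logic. The pattern itself doesn't prescribe it, so treat the sketch below as an assumption. `TokenBucket` and `forward_pending` are hypothetical names, and the current time is passed in explicitly so the behavior is deterministic. Real code would typically pass `time.monotonic()`. Messages that can't be forwarded yet stay in the queue instead of being pushed to the target resource.

```python
import queue


class TokenBucket:
    """Allows on average `rate` operations per second, with bursts of up to `burst`."""

    def __init__(self, rate, burst, now=0.0):
        self.rate = rate
        self.burst = burst
        self.tokens = float(burst)
        self.last = now

    def try_acquire(self, now):
        # Refill in proportion to the elapsed time, capped at the burst size.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def forward_pending(q: queue.Queue, bucket: TokenBucket, now, handler):
    """Forward queued messages to `handler` only while the bucket has tokens.

    Anything not forwarded stays queued, so a spike is spread out over time
    instead of reaching the target resource all at once. Assumes a single consumer.
    """
    forwarded = 0
    while not q.empty() and bucket.try_acquire(now):
        handler(q.get_nowait())
        forwarded += 1
    return forwarded
```

With `rate=1.0` and `burst=2`, five queued messages are forwarded two at a time: two at t=0, none on an immediate retry, and two more at t=3, which leaves one message queued. With several consumers, each would need its own share of the overall rate, or a shared limiter.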

Message queues are a one-way communication mechanism. If a task expects a reply from a service, it might be necessary to implement a mechanism that the service can use to send a response. For more information, see the Asynchronous Messaging Primer.
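A common way to add a reply channel is to include a correlation ID and a reply-to address in each request. This sketch assumes in-process queues and uses hypothetical names (`request_queue`, `service_loop`, `start_service`, `call_via_queue`). The reply-to address is simply a per-request `queue.Queue`. With a real broker, it would typically be the name of a reply queue. The correlation ID isn't strictly needed with a private reply queue, but it lets the same message shape work when many requests share one reply queue.

```python
import queue
import threading
import uuid

request_queue = queue.Queue()  # in-process stand-in for the one-way request queue


def service_loop():
    """Service side: handle each request and post the result to its reply_to queue."""
    while True:
        request = request_queue.get()
        if request is None:  # shutdown signal
            return
        result = request["body"].upper()  # stand-in for the real work
        request["reply_to"].put(
            {"correlation_id": request["correlation_id"], "result": result}
        )


def start_service():
    worker = threading.Thread(target=service_loop, daemon=True)
    worker.start()
    return worker


def call_via_queue(body, timeout=5.0):
    """Task side: post a request, then wait on a private reply queue for the response."""
    reply_queue = queue.Queue()
    correlation_id = str(uuid.uuid4())
    request_queue.put(
        {"correlation_id": correlation_id, "body": body, "reply_to": reply_queue}
    )
    reply = reply_queue.get(timeout=timeout)  # raises queue.Empty if nothing arrives in time
    if reply["correlation_id"] != correlation_id:
        raise RuntimeError("reply does not match this request")
    return reply["result"]
```

Waiting for the reply adds the queueing delay back onto the caller, which is why this pattern suits work that can tolerate that latency.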

Be careful if you apply autoscaling to services that are listening for requests on the queue. This can result in increased contention for any resources that these services share and diminish the effectiveness of using the queue to level the load.
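One way to apply this caution is to cap the number of consumer instances at what the shared resource can serve. The rule below is a hypothetical sketch, not an Azure autoscale setting. It assumes you know each instance's request rate against the shared resource and the total rate that resource tolerates.

```python
import math


def desired_instances(backlog, per_instance_rate, drain_target_s, resource_limit, min_instances=1):
    """Hypothetical scale-out rule for queue consumers.

    backlog:           messages currently waiting in the queue
    per_instance_rate: messages/second one consumer instance sends to the shared resource
    drain_target_s:    how quickly we would like to clear the backlog, in seconds
    resource_limit:    calls/second the shared resource can tolerate in total
    """
    wanted = math.ceil(backlog / (per_instance_rate * drain_target_s))
    # Never run more consumers than the shared resource can serve together;
    # beyond this point extra instances only add contention.
    ceiling = max(min_instances, resource_limit // per_instance_rate)
    return max(min_instances, min(wanted, ceiling))
```

Take 1,000 queued messages, 10 messages per second per instance, a 10-second drain target, and a shared resource that tolerates 50 calls per second. The backlog alone would ask for 10 instances, but the rule caps the count at 5. The remaining backlog stays in the queue, which is where load leveling wants it.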

When to use this pattern

This pattern is useful to any application that uses services that are subject to overloading.

This pattern isn't useful if the application expects a response from the service with minimal latency.

Example

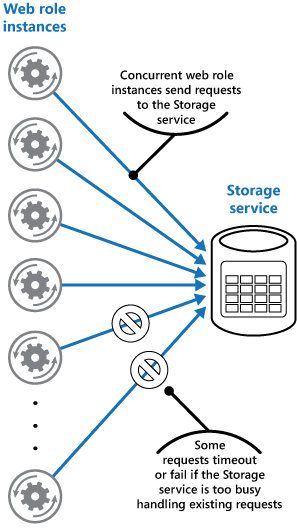

A Microsoft Azure web role stores data using a separate storage service. If a large number of instances of the web role run concurrently, it's possible that the storage service will be unable to respond to requests quickly enough to prevent these requests from timing out or failing. The figure above shows a service being overwhelmed by a large number of concurrent requests from instances of a web role.

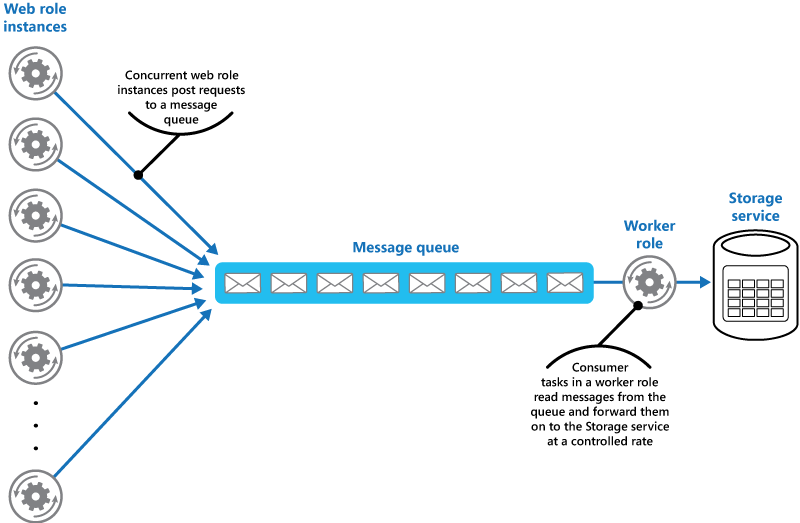

To resolve this, you can use a queue to level the load between the web role instances and the storage service. However, the storage service is designed to accept synchronous requests and can't be easily modified to read messages and manage throughput. You can introduce a worker role to act as a proxy service that receives requests from the queue and forwards them to the storage service. The application logic in the worker role can control the rate at which it passes requests to the storage service to prevent the storage service from being overwhelmed. The figure above illustrates using a queue and a worker role to level the load between instances of the web role and the service.
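The worker role's forwarding logic can be sketched as follows. This is a minimal model under stated assumptions. A thread plays the worker role, `queue.Queue` plays the Azure queue, and `storage_write` is a hypothetical stand-in for the synchronous storage call (not a real Azure Storage API). The worker starts each call at least `min_interval_s` after the previous one finished, so the storage service sees a steady rate no matter how many web role instances post requests.

```python
import queue
import threading
import time


def storage_write(key, value, store):
    """Hypothetical stand-in for the synchronous storage service call."""
    store[key] = value


def proxy_worker(requests, store, min_interval_s, call_times):
    """Worker-role-style proxy: read requests from the queue and forward them to the
    synchronous storage call, waiting min_interval_s after each call finishes."""
    next_allowed = time.monotonic()
    while True:
        req = requests.get()
        if req is None:  # shutdown signal
            return
        delay = next_allowed - time.monotonic()
        if delay > 0:
            time.sleep(delay)
        call_times.append(time.monotonic())
        storage_write(req["key"], req["value"], store)
        next_allowed = time.monotonic() + min_interval_s


def run_proxy(items, min_interval_s):
    """Hypothetical driver: web role instances would post these; here they are enqueued directly."""
    requests = queue.Queue()
    for key, value in items:
        requests.put({"key": key, "value": value})
    requests.put(None)
    store, call_times = {}, []
    worker = threading.Thread(
        target=proxy_worker, args=(requests, store, min_interval_s, call_times)
    )
    worker.start()
    worker.join()
    return store, call_times
```

In this design, the storage service is unchanged and still receives ordinary synchronous calls. Only the proxy knows about the queue and the pacing.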

Related patterns and guidance

The following patterns and guidance might also be relevant when implementing this pattern:

Asynchronous Messaging Primer. Message queues are inherently asynchronous. It might be necessary to redesign the application logic in a task if it's adapted from communicating directly with a service to using a message queue. Similarly, it might be necessary to refactor a service to accept requests from a message queue. Alternatively, it might be possible to implement a proxy service, as described in the example.

Competing Consumers pattern. It might be possible to run multiple instances of a service, each acting as a message consumer from the load-leveling queue. You can use this approach to adjust the rate at which messages are received and passed to a service.
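Here is a minimal sketch of competing consumers. It assumes an in-process `queue.Queue` and uses hypothetical names. Several consumer threads read from the same queue, and each message is handled by exactly one of them. Changing `num_consumers` is the knob that adjusts how quickly messages are drained and passed on.

```python
import queue
import threading


def competing_consumers(messages, num_consumers):
    """Run num_consumers threads against one shared queue; each message goes to exactly one."""
    q = queue.Queue()
    for m in messages:
        q.put(m)
    handled = {cid: [] for cid in range(num_consumers)}  # each list is written by one thread

    def consumer(cid):
        while True:
            try:
                m = q.get_nowait()
            except queue.Empty:
                return  # simplification: a long-running consumer would block on get()
            handled[cid].append(m)

    threads = [threading.Thread(target=consumer, args=(cid,)) for cid in range(num_consumers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return handled
```

For simplicity, the queue is filled up front, and each consumer exits when it finds the queue empty. How the messages are split between consumers varies from run to run, but none is lost or duplicated.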

Throttling pattern. A simple way to implement throttling with a service is to use queue-based load leveling and route all requests to the service through a message queue. The service can then process requests at a rate that ensures the resources it requires aren't exhausted and that reduces the amount of contention that could occur.

Queue Service Concepts. Information about choosing a messaging and queuing mechanism in Azure applications.
