Using gpac to Mux MP4

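Build environment: Ubuntu 16.04, 64-bit

Cross compiler: arm-hisiv500-linux-gcc

In my other post "Using mp4v2 to Mux MP4" (《使用mp4v2封装MP4》), I found that mp4v2 only supports muxing H264 into MP4, so here gpac is used to mux H265.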

Contents

- 1. Cross-compiling gpac
- 2. Sample code
  - 2.1 Writing the H264 NALU data
  - 2.2 Writing the H265 NALU data
  - 2.3 Writing the data area
- 3. Repairing an MP4 file that was not closed properly

1. Cross-compiling gpac

Download a suitable version of the gpac source code; I used the 0.7.0 release.

```sh
./configure --prefix=/home/jerry/work/gpac/gpac.install \
    --cc=arm-hisiv500-linux-gcc --cxx=arm-hisiv500-linux-g++ \
    --extra-cflags=-I/home/jerry/work/gpac/gpac/extra_lib/include/zlib \
    --extra-ldflags=-L/home/jerry/work/gpac/gpac/extra_lib/lib/gcc \
    --use-zlib=local
make
```

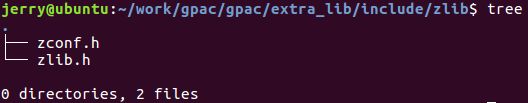

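The --prefix option sets an intermediate install directory. Building gpac depends on zlib: --extra-cflags points to the zlib header directory, and --extra-ldflags points to the directory containing libz.a. Simply put the required headers and the cross-compiled libz.a into these directories. For how to cross-compile zlib, see my other post "OpenSSH Porting Notes" (《openssh移植记录》).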

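What MP4Box and the other executables can do is easy to look up online. libgpac_static.a is the static library we need; for the headers, copy the entire gpac directory under include to the development board. libz.a must be copied as well (its headers are not needed).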

2. Sample code

2.1 Writing the H264 NALU data

```cpp
int AF_MP4Writer::WriteH264Nalu(unsigned char **ppNaluData, int *pNaluLength)
{
    // Video track with a timescale of 1000, i.e. timestamps in milliseconds
    m_nTrackID = gf_isom_new_track(m_pFile, 0, GF_ISOM_MEDIA_VISUAL, 1000);
    gf_isom_set_track_enabled(m_pFile, m_nTrackID, 1);

    GF_AVCConfig *pstAVCConfig = gf_odf_avc_cfg_new();
    gf_isom_avc_config_new(m_pFile, m_nTrackID, pstAVCConfig, NULL, NULL, &m_nStreamIndex);
    gf_isom_set_visual_info(m_pFile, m_nTrackID, m_nStreamIndex, m_nWidth, m_nHeight);

    // ppNaluData[i] holds a NAL unit WITHOUT the start code: byte 0 is the NAL header,
    // and bytes 1..3 of the SPS are profile_idc, the constraint flags and level_idc
    pstAVCConfig->configurationVersion = 1;
    pstAVCConfig->AVCProfileIndication = ppNaluData[AF_NALU_SPS][1];
    pstAVCConfig->profile_compatibility = ppNaluData[AF_NALU_SPS][2];
    pstAVCConfig->AVCLevelIndication = ppNaluData[AF_NALU_SPS][3];

    GF_AVCConfigSlot stAVCConfig[AF_NALU_MAX];
    memset(stAVCConfig, 0, sizeof(stAVCConfig));
    for (int i = 0; i < AF_NALU_MAX; i++)
    {
        if (i == AF_NALU_VPS)   // H264 has no VPS
            continue;
        stAVCConfig[i].size = pNaluLength[i];
        stAVCConfig[i].data = (char *)ppNaluData[i];
        if (i == AF_NALU_SPS)
            gf_list_add(pstAVCConfig->sequenceParameterSets, &stAVCConfig[i]);
        else if (i == AF_NALU_PPS)
            gf_list_add(pstAVCConfig->pictureParameterSets, &stAVCConfig[i]);
    }

    // gpac stores its own copy of the configuration in the sample description
    gf_isom_avc_config_update(m_pFile, m_nTrackID, m_nStreamIndex, pstAVCConfig);

    // The slots live on the stack and their data belongs to the caller: empty the lists
    // (without freeing the items) so gf_odf_avc_cfg_del frees only the lists themselves
    gf_list_reset(pstAVCConfig->sequenceParameterSets);
    gf_list_reset(pstAVCConfig->pictureParameterSets);
    gf_odf_avc_cfg_del(pstAVCConfig);
    pstAVCConfig = NULL;

    return 0;
}
```
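To see where the SPS bytes 1..3 end up: gpac writes this configuration into the avcC box as an AVCDecoderConfigurationRecord (ISO/IEC 14496-15). The sketch below builds that record by hand from one SPS and one PPS, both without start codes, purely to illustrate the byte layout. It is not gpac's code, BuildAvcC is a hypothetical name, and it omits the extra chroma/bit-depth fields that the specification adds for High profiles (profile_idc 100, 110, 122, 144).

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical helper: builds a minimal AVCDecoderConfigurationRecord (avcC payload)
// from one SPS and one PPS, both WITHOUT start codes (byte 0 is the NAL header).
// The extra fields required for High profiles (100, 110, 122, 144) are omitted.
std::vector<uint8_t> BuildAvcC(const std::vector<uint8_t>& sps,
                               const std::vector<uint8_t>& pps)
{
    assert(sps.size() >= 4);
    std::vector<uint8_t> out;
    out.push_back(1);            // configurationVersion
    out.push_back(sps[1]);       // AVCProfileIndication  = profile_idc
    out.push_back(sps[2]);       // profile_compatibility = constraint_set flags
    out.push_back(sps[3]);       // AVCLevelIndication    = level_idc
    out.push_back(0xFC | 3);     // '111111' reserved + lengthSizeMinusOne = 3 (4-byte lengths)
    out.push_back(0xE0 | 1);     // '111' reserved + numOfSequenceParameterSets = 1
    out.push_back(static_cast<uint8_t>(sps.size() >> 8));    // 16-bit SPS length
    out.push_back(static_cast<uint8_t>(sps.size() & 0xFF));
    out.insert(out.end(), sps.begin(), sps.end());
    out.push_back(1);            // numOfPictureParameterSets
    out.push_back(static_cast<uint8_t>(pps.size() >> 8));    // 16-bit PPS length
    out.push_back(static_cast<uint8_t>(pps.size() & 0xFF));
    out.insert(out.end(), pps.begin(), pps.end());
    return out;
}
```

For example, an SPS beginning 67 42 C0 1E (Constrained Baseline, level 3.0) produces a record that starts 01 42 C0 1E FF E1.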

2.2 Writing the H265 NALU data

```cpp
int AF_MP4Writer::WriteH265Nalu(unsigned char **ppNaluData, int *pNaluLength)
{
    m_nTrackID = gf_isom_new_track(m_pFile, 0, GF_ISOM_MEDIA_VISUAL, 1000);
    gf_isom_set_track_enabled(m_pFile, m_nTrackID, 1);

    GF_HEVCConfig *pstHEVCConfig = gf_odf_hevc_cfg_new();
    pstHEVCConfig->nal_unit_size = 4;   // samples carry 4-byte NAL length prefixes
    gf_isom_hevc_config_new(m_pFile, m_nTrackID, pstHEVCConfig, NULL, NULL, &m_nStreamIndex);
    gf_isom_set_nalu_extract_mode(m_pFile, m_nTrackID, GF_ISOM_NALU_EXTRACT_INSPECT);
    gf_isom_set_cts_packing(m_pFile, m_nTrackID, GF_TRUE);
    pstHEVCConfig->configurationVersion = 1;

    // Filled in by gpac's parsers (gf_media_hevc_read_*)
    HEVCState hevc;
    memset(&hevc, 0, sizeof(HEVCState));

    GF_AVCConfigSlot stAVCConfig[AF_NALU_MAX];
    memset(stAVCConfig, 0, sizeof(stAVCConfig));
    GF_HEVCParamArray stHEVCNalu[AF_NALU_MAX];
    memset(stHEVCNalu, 0, sizeof(stHEVCNalu));

    int ret = 0;
    int idx = 0;
    int spsIdx = -1;   // idx is overwritten on every iteration, so keep the SPS id separately
    for (int i = 0; i < AF_NALU_MAX; i++)
    {
        switch (i)
        {
        case AF_NALU_SPS:
            idx = gf_media_hevc_read_sps((char *)ppNaluData[i], pNaluLength[i], &hevc);
            if (idx < 0)
                break;
            hevc.sps[idx].crc = gf_crc_32((char *)ppNaluData[i], pNaluLength[i]);
            pstHEVCConfig->profile_space = hevc.sps[idx].ptl.profile_space;
            pstHEVCConfig->tier_flag = hevc.sps[idx].ptl.tier_flag;
            pstHEVCConfig->profile_idc = hevc.sps[idx].ptl.profile_idc;
            spsIdx = idx;
            break;
        case AF_NALU_PPS:
            idx = gf_media_hevc_read_pps((char *)ppNaluData[i], pNaluLength[i], &hevc);
            if (idx < 0)
                break;
            hevc.pps[idx].crc = gf_crc_32((char *)ppNaluData[i], pNaluLength[i]);
            break;
        case AF_NALU_VPS:
            idx = gf_media_hevc_read_vps((char *)ppNaluData[i], pNaluLength[i], &hevc);
            if (idx < 0)
                break;
            hevc.vps[idx].crc = gf_crc_32((char *)ppNaluData[i], pNaluLength[i]);
            pstHEVCConfig->avgFrameRate = hevc.vps[idx].rates[0].avg_pic_rate;
            pstHEVCConfig->constantFrameRate = hevc.vps[idx].rates[0].constand_pic_rate_idc;  // spelled this way in gpac
            pstHEVCConfig->numTemporalLayers = hevc.vps[idx].max_sub_layers;
            pstHEVCConfig->temporalIdNested = hevc.vps[idx].temporal_id_nesting;
            break;
        }
        if (idx < 0)
        {
            ret = -1;   // parse error: a negative id must never be used as an array index
            break;
        }

        // One parameter-set array per NAL type, added to hvcC in AF_NALU_* order
        stHEVCNalu[i].nalus = gf_list_new();
        gf_list_add(pstHEVCConfig->param_array, &stHEVCNalu[i]);
        stHEVCNalu[i].array_completeness = 1;
        stHEVCNalu[i].type = g_HEVC_NaluMap[i];   // AF_NALU_* index to HEVC NAL unit type

        stAVCConfig[i].id = idx;
        stAVCConfig[i].size = pNaluLength[i];
        stAVCConfig[i].data = (char *)ppNaluData[i];
        gf_list_add(stHEVCNalu[i].nalus, &stAVCConfig[i]);
    }

    if (ret == 0 && spsIdx >= 0)
    {
        gf_isom_set_visual_info(m_pFile, m_nTrackID, m_nStreamIndex,
                                hevc.sps[spsIdx].width, hevc.sps[spsIdx].height);
        gf_isom_hevc_config_update(m_pFile, m_nTrackID, m_nStreamIndex, pstHEVCConfig);
    }

    // Slots and arrays live on the stack and the data belongs to the caller:
    // free only the lists, then let gf_odf_hevc_cfg_del free the rest
    for (int i = 0; i < AF_NALU_MAX; i++)
    {
        if (stHEVCNalu[i].nalus)
            gf_list_del(stHEVCNalu[i].nalus);
    }
    gf_list_reset(pstHEVCConfig->param_array);
    gf_odf_hevc_cfg_del(pstHEVCConfig);
    pstHEVCConfig = NULL;

    return ret;
}
```
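Both functions depend on AF_NALU_* indices and a g_HEVC_NaluMap table that are defined elsewhere in the author's source and not shown in the post. A plausible shape, purely an assumption, is sketched below: the table maps each slot to its H.265 NAL unit type (VPS = 32, SPS = 33, PPS = 34), and a helper reads nal_unit_type from the first NAL header byte so a caller can check that each parameter set landed in the right slot. The enum order is a guess; because the param arrays are added in slot order, putting VPS first gives the usual VPS, SPS, PPS order in hvcC.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical definitions: the real ones live in the author's source.
enum
{
    AF_NALU_VPS = 0,
    AF_NALU_SPS,
    AF_NALU_PPS,
    AF_NALU_MAX
};

// H.265 NAL unit type for each slot (VPS_NUT = 32, SPS_NUT = 33, PPS_NUT = 34)
static const uint8_t g_HEVC_NaluMap[AF_NALU_MAX] = { 32, 33, 34 };

// The 16-bit HEVC NAL header is forbidden_zero_bit(1) nal_unit_type(6)
// nuh_layer_id(6) nuh_temporal_id_plus1(3), so the type is bits 1..6 of byte 0.
inline int HevcNaluType(const uint8_t* nal)
{
    return (nal[0] >> 1) & 0x3F;
}

// Returns the AF_NALU_* slot a parameter set belongs in, or -1 if it is not
// a VPS/SPS/PPS. 'nal' points at the NAL header, i.e. after the start code.
int HevcParamSetSlot(const uint8_t* nal)
{
    assert(nal != nullptr);
    int type = HevcNaluType(nal);
    for (int i = 0; i < AF_NALU_MAX; i++)
    {
        if (g_HEVC_NaluMap[i] == type)
            return i;
    }
    return -1;
}
```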

2.3 Writing the data area

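```cpp
int AF_MP4Writer::WriteFrame(unsigned char *pData, int nSize, bool bKey, long nTimeStamp)
{
    // Recording starts at the first key frame; earlier frames are dropped
    if (m_TimeStamp == -1 && bKey)
    {
        m_TimeStamp = nTimeStamp;
    }

    if (m_TimeStamp != -1)
    {
        GF_ISOSample *pISOSample = gf_isom_sample_new();
        pISOSample->IsRAP = (SAPType)bKey;
        pISOSample->dataLength = nSize;
        pISOSample->data = (char *)pData;             // borrow the caller's buffer (length-prefixed NALUs)
        pISOSample->DTS = nTimeStamp - m_TimeStamp;   // ms since the first key frame (timescale 1000)
        pISOSample->CTS_Offset = 0;                   // assumes no B-frames, so CTS == DTS

        GF_Err gferr = gf_isom_add_sample(m_pFile, m_nTrackID, m_nStreamIndex, pISOSample);
        if (gferr == GF_BAD_PARAM)   // -1, e.g. when two frames carry the same timestamp
        {
            // Retry with the DTS shifted by half a frame interval
            pISOSample->DTS = nTimeStamp - m_TimeStamp + 1000 / (m_nFps * 2);
            gf_isom_add_sample(m_pFile, m_nTrackID, m_nStreamIndex, pISOSample);
        }

        // Detach the caller's buffer so that gf_isom_sample_del does not free it
        pISOSample->data = NULL;
        pISOSample->dataLength = 0;
        gf_isom_sample_del(&pISOSample);
        pISOSample = NULL;
    }

    return 0;
}
```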
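The retry above copes with gf_isom_add_sample returning GF_BAD_PARAM, presumably because two frames carried the same millisecond timestamp, but a fixed half-frame shift can still collide with the next frame. An alternative, sketched here as an assumption rather than the author's method, is to remember the last DTS written and raise each new DTS to at least one tick above it before calling gf_isom_add_sample. MakeDtsMonotonic and its lastDts argument are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: returns a DTS strictly greater than the previous one.
// lastDts holds the DTS of the previously written sample, or -1 before the first one.
int64_t MakeDtsMonotonic(int64_t dts, int64_t& lastDts)
{
    assert(lastDts >= -1);   // -1 means "nothing written yet"
    if (lastDts >= 0 && dts <= lastDts)
        dts = lastDts + 1;   // one tick = 1 ms with the track timescale of 1000
    lastDts = dts;
    return dts;
}
```

Inside WriteFrame this would read `pISOSample->DTS = MakeDtsMonotonic(nTimeStamp - m_TimeStamp, m_nLastDts);`, with a hypothetical member m_nLastDts reset to -1 whenever a new file is opened.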

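PS:

The timestamp nTimeStamp does not have to be passed in; it can be obtained inside the function:

```cpp
int AF_MP4Writer::WriteFrame(unsigned char *pData, int nSize, bool bKey, long nTimeStamp);

// Option 1: for live streams, simply use the system time.
// Cast before multiplying: on 32-bit ARM, tv_sec * 1000 overflows a 32-bit long,
// and m_TimeStamp must also be a 64-bit type to hold the result.
struct timeval timeNow;
gettimeofday(&timeNow, NULL);
long long nTimeStamp = (long long)timeNow.tv_sec * 1000 + timeNow.tv_usec / 1000;
```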
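gettimeofday returns wall-clock time, which jumps when NTP or a user adjusts the system clock; that can produce gaps or non-increasing DTS values in the recording. Since WriteFrame only uses differences between timestamps, a monotonic clock is a safer source. A minimal sketch with C++11 std::chrono::steady_clock, assuming the toolchain is used with C++11 enabled; NowMs and ElapsedMs are hypothetical names. In plain C, clock_gettime with CLOCK_MONOTONIC plays the same role.

```cpp
#include <cassert>
#include <chrono>
#include <cstdint>

// Milliseconds from a monotonic clock. Its epoch is unspecified (often boot time),
// which is fine because only differences such as nTimeStamp - m_TimeStamp are used.
int64_t NowMs()
{
    using namespace std::chrono;
    return duration_cast<milliseconds>(steady_clock::now().time_since_epoch()).count();
}

// Milliseconds elapsed since startMs, a value previously returned by NowMs().
int64_t ElapsedMs(int64_t startMs)
{
    int64_t now = NowMs();
    assert(now >= startMs);   // steady_clock never goes backwards
    return now - startMs;
}
```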

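```cpp
// Option 2: for fast writes such as reading from a file, derive the timestamp from the frame rate
if (m_TimeStamp == -1 && bKey)
{
    m_TimeStamp = 0;
}
// ...
pISOSample->DTS = m_TimeStamp;
// Note: 1000 / nFps is an integer division (33 instead of 33.33 at 30 fps), so the error accumulates
m_TimeStamp += 1000 / m_stEncInfo.nFps;
// ...
```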
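Accumulating 1000 / nFps in integer milliseconds loses the fraction: at 30 fps each step is 33 ms instead of 33.33 ms, so 30 frames span 990 ms and the file's timeline ends up about 1% shorter than real time. Deriving each DTS from a frame counter keeps the per-frame error under 1 ms without letting it build up. A sketch, with a hypothetical frame counter taking the place of m_TimeStamp:

```cpp
#include <cassert>
#include <cstdint>

// DTS in milliseconds (track timescale 1000) of frame number frameIndex, counted
// from the first key frame. Each value is rounded down, but errors do not accumulate.
int64_t DtsFromFrameIndex(int64_t frameIndex, int fps)
{
    assert(fps > 0);
    return frameIndex * 1000 / fps;
}
```

In WriteFrame this would be `pISOSample->DTS = DtsFromFrameIndex(m_nFrameIndex++, m_stEncInfo.nFps);` with a hypothetical m_nFrameIndex reset to 0 at the first key frame. Another option is to create the track with a timescale that divides evenly, such as 90000 in gf_isom_new_track (90000 / 30 = 3000 ticks per frame), and scale the DTS accordingly.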

PPS:

Whether a frame is a key frame (bKey) can also be determined inside the interface instead of being passed in:

```cpp
int AF_MP4Writer::WriteH264(unsigned char *pData, int nSize, bool bKey, long nTimeStamp);
int AF_MP4Writer::WriteH265(unsigned char *pData, int nSize, bool bKey, long nTimeStamp);

// Initialize bKey to false, then
// WriteH264: set it to true at   case 0x07: // SPS
// WriteH265: set it to true at   case 0x20: // VPS
```
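The case labels in those switch statements are NAL unit types read from the header byte that follows the start code. In H264 the type is the low 5 bits (SPS = 0x07, IDR slice = 5); in H265 it is bits 1..6 of the first of the two header bytes (VPS = 0x20 = 32, SPS = 33, PPS = 34, and 16..23 for IRAP pictures such as IDR and CRA). The sketch below shows both extractions and a key-frame test in the same spirit as the author's rule; unlike that rule, it also accepts an IDR/IRAP slice that arrives without parameter sets in front of it. The function names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// 'nal' points at the first byte after the 00 00 01 / 00 00 00 01 start code
inline int H264NaluType(const uint8_t* nal) { return nal[0] & 0x1F; }
inline int H265NaluType(const uint8_t* nal) { return (nal[0] >> 1) & 0x3F; }

// Hypothetical helper: true if this NAL unit starts a key frame.
bool IsKeyNalu(const uint8_t* nal, bool isH265)
{
    assert(nal != nullptr);
    if (!isH265)
    {
        int t = H264NaluType(nal);
        return t == 7 || t == 5;                  // SPS (0x07) or IDR slice
    }
    int t = H265NaluType(nal);
    return t == 32 || (t >= 16 && t <= 23);       // VPS (0x20) or IRAP slice (BLA/IDR/CRA)
}
```

Applied to every NAL unit of a frame, `bKey = bKey || IsKeyNalu(nal, isH265);` reproduces the "start false, set to true" pattern described above.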

PPPS:

Bug fix in:

```cpp
int AF_MP4Writer::WriteH264(unsigned char *pData, int nSize, bool bKey, long nTimeStamp);
int AF_MP4Writer::WriteH265(unsigned char *pData, int nSize, bool bKey, long nTimeStamp);

// lenIn -= (lenOut - (pOut - pIn));   // wrong; change it to:
lenIn -= (lenOut + (pOut - pIn));
```
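WriteH264/WriteH265 themselves are not shown in the post; judging from the fix above, they walk the Annex-B stream with pointer arithmetic to locate start codes. For comparison, here is a self-contained sketch (not the author's code) that splits an Annex-B buffer into NAL units and rewrites each one with the 4-byte big-endian length prefix that MP4 samples use, matching nal_unit_size = 4. Tracking a single offset instead of subtracting pointer differences from a remaining length avoids the kind of sign error fixed above. StartCodeLen, AppendNal and AnnexBToLengthPrefixed are hypothetical names; both 3-byte and 4-byte start codes are accepted.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Returns the length of a start code at buf[i] (3 for 00 00 01, 4 for 00 00 00 01), or 0.
static size_t StartCodeLen(const uint8_t* buf, size_t size, size_t i)
{
    if (i + 3 <= size && buf[i] == 0 && buf[i + 1] == 0 && buf[i + 2] == 1)
        return 3;
    if (i + 4 <= size && buf[i] == 0 && buf[i + 1] == 0 && buf[i + 2] == 0 && buf[i + 3] == 1)
        return 4;
    return 0;
}

// Appends one NAL unit with a 4-byte big-endian length prefix; empty units are skipped.
static void AppendNal(std::vector<uint8_t>& out, const uint8_t* nal, size_t len)
{
    if (len == 0)
        return;
    uint32_t n = static_cast<uint32_t>(len);
    out.push_back(static_cast<uint8_t>(n >> 24));
    out.push_back(static_cast<uint8_t>(n >> 16));
    out.push_back(static_cast<uint8_t>(n >> 8));
    out.push_back(static_cast<uint8_t>(n));
    out.insert(out.end(), nal, nal + len);
}

// Converts an Annex-B buffer into length-prefixed NAL units (the MP4 sample layout).
// Bytes before the first start code are ignored.
std::vector<uint8_t> AnnexBToLengthPrefixed(const uint8_t* buf, size_t size)
{
    assert(buf != nullptr || size == 0);
    std::vector<uint8_t> out;
    size_t i = 0;
    size_t nalStart = 0;
    bool inNal = false;
    while (i < size)
    {
        size_t sc = StartCodeLen(buf, size, i);
        if (sc == 0)
        {
            i++;
            continue;
        }
        if (inNal)
            AppendNal(out, buf + nalStart, i - nalStart);   // NAL ends where the next start code begins
        i += sc;
        nalStart = i;
        inNal = true;
    }
    if (inNal)
        AppendNal(out, buf + nalStart, size - nalStart);
    return out;
}
```

Whether parameter sets are also kept in-band in the samples, or only in the avcC/hvcC box, is left to the caller.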

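The sample code in this post has been tested on a Hi3519, and the recorded MP4 files play correctly in a variety of players. The source code can be downloaded via the link "Using gpac to Mux MP4: Source Code" (使用gpac封装MP4源码).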

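PPPPS: Fixed some errors and added AAC audio; the audio part has not been tested. Updated source code: "Using gpac to Mux MP4: Source Code (new)" (使用gpac封装MP4源码(新)).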

3. Repairing an MP4 file that was not closed properly

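If an MP4 file muxed with gpac is not closed properly, the length and type fields of the mdat box at the head of the file are never written, and neither is the moov box at the end (which holds the VPS, SPS, PPS and other metadata). For the MP4 file format, see the blog post "MP4 File Format Analysis and Splitting (with Source Code)" (《MP4文件格式分析及分割实现(附源码)》).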
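Every box in an MP4 file starts with a 32-bit big-endian size, counting the whole box including its header, followed by a four-character type; size 1 means a 64-bit size follows the type, and size 0 means the box extends to the end of the file (ISO/IEC 14496-12). The sketch below walks the top-level boxes of a buffer and returns the offset of the first box of a given type. Checking for both mdat and moov is one simple way to tell whether a recording was closed properly. BoxHeader, ReadBoxHeader and FindTopLevelBox are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

struct BoxHeader
{
    uint64_t size;      // total box size in bytes, header included
    char type[5];       // four-character code, NUL-terminated
    size_t headerLen;   // 8, or 16 when a 64-bit largesize follows the type
};

static uint32_t ReadBE32(const uint8_t* p)
{
    return (uint32_t(p[0]) << 24) | (uint32_t(p[1]) << 16) | (uint32_t(p[2]) << 8) | p[3];
}

// Parses the box header at buf[pos]; returns false if it does not fit or is invalid.
bool ReadBoxHeader(const uint8_t* buf, size_t len, size_t pos, BoxHeader& h)
{
    if (pos + 8 > len)
        return false;
    h.size = ReadBE32(buf + pos);
    std::memcpy(h.type, buf + pos + 4, 4);
    h.type[4] = '\0';
    h.headerLen = 8;
    if (h.size == 1)           // 64-bit largesize
    {
        if (pos + 16 > len)
            return false;
        h.size = (uint64_t(ReadBE32(buf + pos + 8)) << 32) | ReadBE32(buf + pos + 12);
        h.headerLen = 16;
    }
    else if (h.size == 0)      // box extends to the end of the file
    {
        h.size = len - pos;
    }
    return h.size >= h.headerLen;
}

// Returns the offset of the first top-level box of the given type, or -1 if absent.
long long FindTopLevelBox(const uint8_t* buf, size_t len, const char* type)
{
    assert(type != nullptr && std::strlen(type) == 4);
    size_t pos = 0;
    BoxHeader h;
    while (ReadBoxHeader(buf, len, pos, h))
    {
        if (std::memcmp(h.type, type, 4) == 0)
            return static_cast<long long>(pos);
        if (h.size > len - pos)
            break;             // box runs past the end of the data: truncated file
        pos += static_cast<size_t>(h.size);
    }
    return -1;
}
```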

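To make recovery possible, create an idx file when recording starts that stores the VPS, SPS and PPS, the codec (H264/H265), the frame rate, the timescale, and the sequence of per-frame key-frame flags. When the MP4 file is closed normally, the idx file can be deleted.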
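The post does not specify the idx format. One possible layout, purely illustrative: a fixed header (codec, frame rate, timescale), the parameter sets each preceded by a 4-byte length, then one byte per frame holding the key-frame flag, appended as each frame is written. The sketch serializes and parses such a header; RecoveryHeader, SerializeHeader and ParseHeader are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical idx layout (integers big-endian):
//   u8 codec (0 = H264, 1 = H265) | u32 fps | u32 timescale
//   u8 paramSetCount | paramSetCount x { u32 length | bytes }
//   followed by one u8 key-frame flag per frame, appended while recording
struct RecoveryHeader
{
    uint8_t codec;
    uint32_t fps;
    uint32_t timescale;
    std::vector<std::vector<uint8_t>> paramSets;   // VPS (H265 only), SPS, PPS
};

static void PutBE32(std::vector<uint8_t>& out, uint32_t v)
{
    for (int shift = 24; shift >= 0; shift -= 8)
        out.push_back(static_cast<uint8_t>(v >> shift));
}

static uint32_t GetBE32(const uint8_t* p)
{
    return (uint32_t(p[0]) << 24) | (uint32_t(p[1]) << 16) | (uint32_t(p[2]) << 8) | p[3];
}

std::vector<uint8_t> SerializeHeader(const RecoveryHeader& h)
{
    assert(h.paramSets.size() <= 255);   // the count is stored in one byte
    std::vector<uint8_t> out;
    out.push_back(h.codec);
    PutBE32(out, h.fps);
    PutBE32(out, h.timescale);
    out.push_back(static_cast<uint8_t>(h.paramSets.size()));
    for (const auto& ps : h.paramSets)
    {
        PutBE32(out, static_cast<uint32_t>(ps.size()));
        out.insert(out.end(), ps.begin(), ps.end());
    }
    return out;
}

// Parses the header. On success, flagsOffset is the position of the first key-frame flag.
bool ParseHeader(const std::vector<uint8_t>& in, RecoveryHeader& h, size_t& flagsOffset)
{
    if (in.size() < 10)
        return false;
    h.codec = in[0];
    h.fps = GetBE32(&in[1]);
    h.timescale = GetBE32(&in[5]);
    size_t count = in[9];
    size_t pos = 10;
    h.paramSets.clear();
    for (size_t i = 0; i < count; i++)
    {
        if (pos + 4 > in.size())
            return false;
        uint32_t len = GetBE32(&in[pos]);
        pos += 4;
        if (len > in.size() - pos)
            return false;   // truncated parameter set
        h.paramSets.emplace_back(in.begin() + pos, in.begin() + pos + len);
        pos += len;
    }
    flagsOffset = pos;
    return true;
}
```

While recording, the writer would append one flag byte per frame and flush the idx file regularly so that it survives the same crash that damages the MP4.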

To repair a file, read the damaged MP4 and its idx file and write their contents into a new MP4 file.

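The mdat type field may be followed by some placeholder bytes; skip them. What follows is a sequence of N records, each a frame length followed by the frame data. When rewriting, note that the length field is part of the sample: given data such as …00 00 00 10 01 01 01 01 01 01 01 01 01 01 01 01 01 01 01 01…, the rewritten sample has dataLength = 0x10 + 4 = 20 bytes, and data is all 20 bytes.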
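A sketch of that walk, assuming the offset of the first length field (after the mdat header and any placeholder bytes) is already known. Each record is a 4-byte big-endian length N followed by N bytes, and the span handed to gf_isom_add_sample covers all 4 + N bytes, as in the 0x10 + 4 = 20 example. Like the post, it treats each record as one frame; a record whose length runs past the end of the file was cut off by the crash and is dropped. SampleSpan and SplitSamples are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct SampleSpan
{
    size_t offset;   // offset of the 4-byte length field (where data starts)
    size_t size;     // 4 + N: becomes dataLength
};

// Walks length-prefixed records starting at 'start'; stops at the first
// zero-length or truncated record.
std::vector<SampleSpan> SplitSamples(const uint8_t* buf, size_t len, size_t start)
{
    assert(start <= len);
    std::vector<SampleSpan> spans;
    size_t pos = start;
    while (pos + 4 <= len)
    {
        uint32_t n = (uint32_t(buf[pos]) << 24) | (uint32_t(buf[pos + 1]) << 16) |
                     (uint32_t(buf[pos + 2]) << 8) | buf[pos + 3];
        if (n == 0 || n > len - pos - 4)
            break;   // zero length, or a frame cut off by the crash
        spans.push_back({pos, 4 + static_cast<size_t>(n)});
        pos += 4 + static_cast<size_t>(n);
    }
    return spans;
}
```

Each span, paired with the key-frame flag at the same index in the idx file, would then become one GF_ISOSample in the new file.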

Please credit the source when reposting. If you find errors or omissions, corrections are welcome.