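Logging system: ELFK

Preface

After last week's technical evaluation, we held a meeting this Monday and, based on the company's current traffic and tech stack, settled on this logging stack: elasticsearch (es) + logstash (lo) + filebeat (fi) + kibana (ki). The plan is to use the es offered by aliyun, deploy lo and fi ourselves, and use the ki that aliyun bundles for free. Because applying for ECS instances takes a while, we deployed to the test & production environment for now (a gripe: our test and production share a single k8s cluster, and it is hosted on aliyun......). It took one day (I had already deployed most of it once before) to get elfk running on kubernetes. The goal was to get it running first; optimization can come later as needed.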

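Component overview

es is a real-time, distributed, scalable search engine that supports full-text and structured search. It is commonly used to index and search large volumes of log data, and it can also search many other kinds of documents.

lo's main strength is its flexibility, which comes largely from its many plugins. Detailed documentation and a straightforward configuration format let it fit a wide range of scenarios, and there are plenty of resources online covering almost any problem.

As a member of the Beats family, fi is a lightweight log shipper. It makes up for lo's weakness (its heavier resource footprint) by collecting logs cheaply on each node and pushing them to a central lo.

ki is an analytics and visualization platform. It lets you browse and visualize the log data stored in an es cluster, such as the top-ranked entries, and build dashboards. ki is easy to use together with es and integrates most of the es APIs, which makes it a dedicated log display application.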

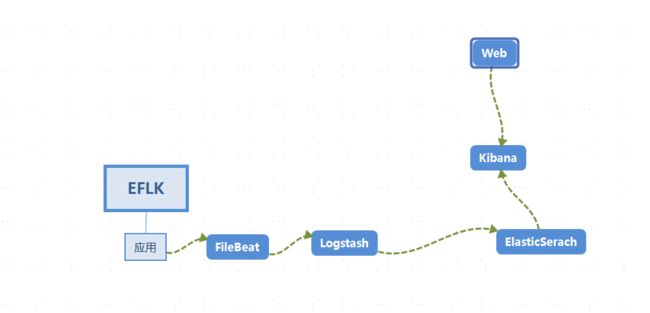

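Data collection flow diagram

Log flow: logs_data ---> fi ---> lo ---> es ---> ki.

fi collects logs_data and outputs it to lo; lo filters and modifies the events and sends them to the es datastore; ki reads from es for analysis.

Deployment

This document reproduces the logging system deployment exactly as it was done on our company's actual cluster.

Install kubectl on the local Mac to connect to the aliyun-managed k8s

On the client (any local VM will do), install the same kubectl version as the managed k8s. The binary below is the linux/amd64 build; on macOS itself, use the darwin/amd64 path instead.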

curl -LO https://storage.googleapis.com/kubernetes-release/release/v1.14.8/bin/linux/amd64/kubectl

chmod +x ./kubectl

mv ./kubectl /usr/local/bin/kubectl

Copy the kubeconfig of the aliyun-managed k8s to $HOME/.kube/config (this is a file, not a directory), and mind the file ownership and permissions.
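To make the permissions caveat concrete, here is a minimal sketch rather than an official aliyun procedure. `install_kubeconfig` is a hypothetical helper name, and the source path is whatever file you exported from the aliyun console. The only real requirement is that kubectl can read `$HOME/.kube/config` and other users cannot.

```shell
#!/bin/sh
# Hypothetical helper: copy an exported kubeconfig into place with owner-only permissions.
install_kubeconfig() {
    src="$1"                           # kubeconfig file exported from the console (assumed path)
    dest="${2:-$HOME/.kube/config}"    # default location that kubectl reads
    mkdir -p "$(dirname "$dest")" || return 1
    cp "$src" "$dest" || return 1
    chmod 600 "$dest"                  # readable and writable by the owner only
}
```

Something like `install_kubeconfig ./aliyun-kubeconfig.yaml && kubectl get nodes` should then work; the file name here is a placeholder.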

Deploy ELFK

Create a namespace (generally one namespace per project).

# cat kube-logging.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: loging

Deploy es. Find a roughly suitable manifest online, adapt it to your needs, run it, and when it fails, fix it according to the logs.

# cat elasticsearch.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  # StorageClass is cluster-scoped, so no namespace is set
  name: local-class
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer
# Supported policies: Delete, Retain
reclaimPolicy: Delete
---
kind: PersistentVolume
apiVersion: v1
metadata:
  # PersistentVolume is cluster-scoped, so no namespace is set
  name: datadir1
  labels:
    type: local
spec:
  storageClassName: local-class
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/data/data1"
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: elasticsearch
  namespace: loging
spec:
  serviceName: elasticsearch
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      containers:
      - name: elasticsearch
        image: elasticsearch:7.3.1
        resources:
          limits:
            cpu: 1000m
          requests:
            cpu: 100m
        ports:
        - containerPort: 9200
          name: rest
          protocol: TCP
        - containerPort: 9300
          name: inter-node
          protocol: TCP
        volumeMounts:
        - name: data
          mountPath: /usr/share/elasticsearch/data
        env:
        - name: "discovery.type"
          value: "single-node"
        - name: cluster.name
          value: k8s-logs
        - name: node.name
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: ES_JAVA_OPTS
          value: "-Xms512m -Xmx512m"
      initContainers:
      - name: fix-permissions
        image: busybox
        command: ["sh", "-c", "chown -R 1000:1000 /usr/share/elasticsearch/data"]
        securityContext:
          privileged: true
        volumeMounts:
        - name: data
          mountPath: /usr/share/elasticsearch/data
      - name: increase-vm-max-map
        image: busybox
        command: ["sysctl", "-w", "vm.max_map_count=262144"]
        securityContext:
          privileged: true
      - name: increase-fd-ulimit
        image: busybox
        command: ["sh", "-c", "ulimit -n 65536"]
        securityContext:
          privileged: true
  volumeClaimTemplates:
  - metadata:
      name: data
      labels:
        app: elasticsearch
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: "local-class"
      resources:
        requests:
          storage: 5Gi
---
kind: Service
apiVersion: v1
metadata:
  name: elasticsearch
  namespace: loging
  labels:
    app: elasticsearch
spec:
  selector:
    app: elasticsearch
  clusterIP: None
  ports:
    - port: 9200
      name: rest
    - port: 9300
      name: inter-node

Deploy ki. As the data collection flow diagram shows, ki only talks to es, so its configuration is relatively simple.

# cat kibana.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: kibana
  namespace: loging
  labels:
    k8s-app: kibana
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: kibana
  template:
    metadata:
      labels:
        k8s-app: kibana
    spec:
      containers:
      - name: kibana
        image: kibana:7.3.1
        resources:
          limits:
            cpu: 1
            memory: 500Mi
          requests:
            cpu: 0.5
            memory: 200Mi
        env:
          - name: ELASTICSEARCH_HOSTS
            # Note: the value is the es Service. es is stateful and sits behind a
            # headless Service, so connect through the Service name, not a pod name.
            value: http://elasticsearch:9200
        ports:
        - containerPort: 5601
          name: ui
          protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: loging
spec:
  ports:
  - port: 5601
    protocol: TCP
    targetPort: ui
  selector:
    k8s-app: kibana

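Configure the ingress. Our company uses the nginx-ingress that ships with the aliyun-managed k8s, and it is configured to force redirects to https, so kibana-ingress has to be set up for https too. The TLS secret must live in the same namespace as the Ingress, hence the -n loging.

# openssl genrsa -out tls.key 2048

# openssl req -new -x509 -key tls.key -out tls.crt -subj /C=CN/ST=Beijing/L=Beijing/O=DevOps/CN=kibana.test.realibox.com

# kubectl create secret tls kibana-ingress-secret --cert=tls.crt --key=tls.key -n loging

The kibana-ingress configuration follows. Two variants are given: one for https, one for http.

https: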

# cat kibana-ingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: kibana
  namespace: loging
spec:
  tls:
  - hosts:
    - kibana.test.realibox.com
    secretName: kibana-ingress-secret
  rules:
  - host: kibana.test.realibox.com
    http:
      paths:
      - path: /
        backend:
          serviceName: kibana
          servicePort: 5601

http:

# cat kibana-ingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: kibana
  namespace: loging
spec:
  rules:
  - host: kibana.test.realibox.com
    http:
      paths:
      - path: /
        backend:
          serviceName: kibana
          servicePort: 5601

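Deploy lo. lo filters the logs that fi collects, and different logs need different handling, so its pipeline is likely to change often and should be decoupled from the image. That is why the pipeline config is mounted from a configmap.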

# cat logstash.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: logstash
  namespace: loging
spec:
  replicas: 1
  selector:
    matchLabels:
      app: logstash
  template:
    metadata:
      labels:
        app: logstash
    spec:
      containers:
      - name: logstash
        image: elastic/logstash:7.3.1
        volumeMounts:
        - name: config
          mountPath: /opt/logstash/config/containers.conf
          subPath: containers.conf
        command:
        - "/bin/sh"
        - "-c"
        - "/opt/logstash/bin/logstash -f /opt/logstash/config/containers.conf"
      volumes:
      - name: config
        configMap:
          name: logstash-k8s-config
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: logstash
  name: logstash
  namespace: loging
spec:
  ports:
  - port: 8080
    targetPort: 8080
  selector:
    app: logstash
  type: ClusterIP

# cat logstash-config.yaml

---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: logstash
  name: logstash
  namespace: loging
spec:
  ports:
  - port: 8080
    targetPort: 8080
  selector:
    app: logstash
  type: ClusterIP
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: logstash-k8s-config
  namespace: loging
data:
  containers.conf: |
    input {
      beats {
        port => 8080 # port that filebeat connects to
      }
    }
    output {
      elasticsearch {
        hosts => ["elasticsearch:9200"] # the es Service
        index => "logstash-%{+YYYY.MM.dd}"
      }
    }

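Note: changing the configmap is like changing the image. The manifests must be re-applied before the change takes effect. As the data collection flow diagram shows, data enters lo from fi and flows on to es.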
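One detail worth spelling out: containers.conf is mounted with subPath, and a subPath mount does not receive configmap updates inside a running pod, so the pod has to be recreated after the apply. Below is a minimal sketch, assuming the file name and label used above. `reload_logstash_config` is a hypothetical helper, not part of the original deployment.

```shell
#!/bin/sh
# Hypothetical helper: re-apply the configmap, then recreate the lo pod so it re-reads the file.
reload_logstash_config() {
    manifest="${1:-logstash-config.yaml}"    # manifest holding the logstash-k8s-config configmap
    kubectl apply -f "$manifest" || return 1
    # Deleting the pod makes the Deployment start a fresh one that mounts the new config.
    kubectl -n loging delete pod -l app=logstash
}
```

Deleting the pod causes a short gap in log processing. With a single replica that seemed acceptable here, and fi should retry sending while lo is unavailable.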

Deploy fi. fi's main job is to collect the logs and hand the data to lo.

# cat filebeat.yaml

---
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-config
  namespace: loging
  labels:
    app: filebeat
data:
  filebeat.yml: |-
    filebeat.config:
      inputs:
        # Mounted `filebeat-inputs` configmap:
        path: ${path.config}/inputs.d/*.yml
        # Reload inputs configs as they change:
        reload.enabled: false
      modules:
        path: ${path.config}/modules.d/*.yml
        # Reload module configs as they change:
        reload.enabled: false

    # To enable hints based autodiscover, remove `filebeat.config.inputs` configuration and uncomment this:
    #filebeat.autodiscover:
    #  providers:
    #    - type: kubernetes
    #      hints.enabled: true

    output.logstash:
      hosts: ['${LOGSTASH_HOST:logstash}:${LOGSTASH_PORT:8080}'] # flows to lo
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-inputs
  namespace: loging
  labels:
    app: filebeat
data:
  kubernetes.yml: |-
    - type: docker
      containers.ids:
      - "*"
      processors:
        - add_kubernetes_metadata:
            in_cluster: true
---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: filebeat
  namespace: loging
  labels:
    app: filebeat
spec:
  selector:
    matchLabels:
      app: filebeat
  template:
    metadata:
      labels:
        app: filebeat
    spec:
      serviceAccountName: filebeat
      terminationGracePeriodSeconds: 30
      containers:
      - name: filebeat
        image: elastic/filebeat:7.3.1
        args: [
          "-c", "/etc/filebeat.yml",
          "-e",
        ]
        env: # injected variables
        - name: LOGSTASH_HOST
          value: logstash
        - name: LOGSTASH_PORT
          value: "8080"
        securityContext:
          runAsUser: 0
          # If using Red Hat OpenShift uncomment this:
          #privileged: true
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 100Mi
        volumeMounts:
        - name: config
          mountPath: /etc/filebeat.yml
          readOnly: true
          subPath: filebeat.yml
        - name: inputs
          mountPath: /usr/share/filebeat/inputs.d
          readOnly: true
        - name: data
          mountPath: /usr/share/filebeat/data
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
      volumes:
      - name: config
        configMap:
          defaultMode: 0600
          name: filebeat-config
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
      - name: inputs
        configMap:
          defaultMode: 0600
          name: filebeat-inputs
      # data folder stores a registry of read status for all files, so we don't send everything again on a Filebeat pod restart
      - name: data
        hostPath:
          path: /var/lib/filebeat-data
          type: DirectoryOrCreate
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: filebeat
subjects:
- kind: ServiceAccount
  name: filebeat
  namespace: loging
roleRef:
  kind: ClusterRole
  name: filebeat
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: filebeat
  labels:
    app: filebeat
rules:
- apiGroups: [""] # "" indicates the core API group
  resources:
  - namespaces
  - pods
  verbs:
  - get
  - watch
  - list
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: filebeat
  namespace: loging
  labels:
    app: filebeat
---

That completes the deployment of es + lo + fi + ki on k8s. Now for a quick verification.

Verification

Check the svc, pod, and ingress information

# kubectl get svc,pods,ingress -n loging

NAME                    TYPE        CLUSTER-IP        EXTERNAL-IP   PORT(S)             AGE
service/elasticsearch   ClusterIP   None              <none>        9200/TCP,9300/TCP   151m
service/kibana          ClusterIP   xxx.168.239.2xx   <none>        5601/TCP            20h
service/logstash        ClusterIP   xxx.168.38.1xx    <none>        8080/TCP            122m

NAME                            READY   STATUS    RESTARTS   AGE
pod/elasticsearch-0             1/1     Running   0          151m
pod/filebeat-24zl7              1/1     Running   0          118m
pod/filebeat-4w7b6              1/1     Running   0          118m
pod/filebeat-m5kv4              1/1     Running   0          118m
pod/filebeat-t6x4t              1/1     Running   0          118m
pod/kibana-689f4bd647-7jrqd     1/1     Running   0          20h
pod/logstash-76bc9b5f95-qtngp   1/1     Running   0          122m

NAME                        HOSTS                      ADDRESS         PORTS     AGE
ingress.extensions/kibana   kibana.test.realibox.com   xxx.xx.xx.xxx   80, 443   19h
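Eyeballing the STATUS column works for a handful of pods. As a rough sketch of automating the same check, the filter below prints any pod whose status is not Running. `not_running_pods` is a hypothetical helper name, and it assumes the default column layout of `kubectl get pods --no-headers` (NAME, READY, STATUS, RESTARTS, AGE).

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get pods --no-headers` output on stdin
# and print the name of every pod whose STATUS column is not "Running".
not_running_pods() {
    awk '$3 != "Running" { print $1 }'
}
```

It can be used as `kubectl get pods -n loging --no-headers | not_running_pods`; empty output means every pod in the namespace reports Running.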

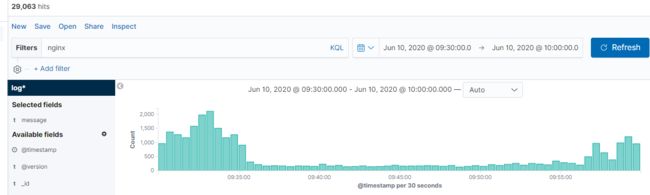

Web configuration

Configure the index
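The lo output above writes one index per day, named by `logstash-%{+YYYY.MM.dd}` and formatted from each event's `@timestamp` in UTC, so an index pattern such as `logstash-*` should match them in ki. As a small sketch (the helper name `logstash_index_for_today` is made up here), the name of today's index can be computed in shell like this:

```shell
#!/bin/sh
# Hypothetical helper: print the index name that lo would use for events stamped today (UTC).
logstash_index_for_today() {
    date -u +logstash-%Y.%m.%d
}
```

From a pod in the loging namespace, something like `curl -s "http://elasticsearch:9200/_cat/indices/$(logstash_index_for_today)?v"` can then confirm that the index exists before creating the pattern in ki.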

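Discover

That completes the basic setup. It will need continuous tuning later, but that is a story for another day.

Lessons learned

This was probably my first time personally deploying an application to the test & production environment, and it was a logging system I was quite unfamiliar with. I ran into many problems that are worth summarizing.

- How to research a technology stack;

- How to settle on a solution;

- I found almost no comparable setup online (no idea how other companies do it; nothing usable turned up), so I had to piece things together from different docs and experiment;

- Keep the labels of a component as consistent as possible;

- How to check whether the company restricts ports or forces https redirects;

- When something goes wrong in IT, always read the logs. This matters a lot: logs solve the vast majority of problems;

- Working alone, you will always overlook something. Try it yourself first, then ask friends, and improve together.

- Ship the project first and worry about the rest later. That is how it is for now: if you are 20 percent sure about something, go do it. Waiting until you are 80 percent sure takes the fun out of it.

- Self-study mostly teaches theory; hands-on operations are learned on the job.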