Collecting k8s logs with ELFK (Part 3)

This article describes how to collect k8s logs with ELK + Filebeat, where Filebeat is deployed as a sidecar. Latest ELFK version at the time of writing: 7.6.2.

k8s log collection options

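- Three log collection options:

1. Deploy a log collector on each node

The log collector is deployed as a DaemonSet and collects the logs under the /var/log and /var/lib/docker/containers directories of its own node.

2. Deploy a log collector as a sidecar

A log collection container is added to every pod that runs an application, and the two containers share the log directory through an emptyDir volume so that the log container can collect the logs.

3. Push logs directly from the application

A common example is Graylog: the application code is modified to push its logs to Elasticsearch, and the logs are then displayed in Graylog.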

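- Pros and cons of the three options:

| Option | Pros | Cons |
|---|---|---|
| 1. Log collector on each node | Only one collector per node, low resource usage, no intrusion into the application | Application logs must be written to stdout and stderr; multi-line logs are not supported |
| 2. Log collection container in each pod | Loose coupling | Every pod runs an extra log collection container, which increases resource usage |
| 3. Application pushes logs directly | No extra collection tool needed | Intrusive to the application and increases its complexity |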

Below we test option 2: attaching a log collection container to each pod (sidecar). Make sure all ELFK components use the same version.

Collecting k8s logs with a sidecar

- Hosts:

| OS | IP | Role | CPU (cores) | Memory | Hostname |
|---|---|---|---|---|---|
| CentOS 7.8 | 192.168.30.128 | master, deploy | >=2 | >=2G | master1 |
| CentOS 7.8 | 192.168.30.129 | master | >=2 | >=2G | master2 |
| CentOS 7.8 | 192.168.30.130 | node | >=2 | >=2G | node1 |
| CentOS 7.8 | 192.168.30.131 | node | >=2 | >=2G | node2 |
| CentOS 7.8 | 192.168.30.132 | node | >=2 | >=2G | node3 |
| CentOS 7.8 | 192.168.30.133 | test | >=2 | >=2G | test |

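- Set up the k8s cluster:

The setup process is omitted here; for details, see "Building a k8s cluster with kubeadm" or "Building a k8s cluster from binaries".

After the setup is complete, check the cluster: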

kubectl get nodes

NAME      STATUS   ROLES    AGE     VERSION
master1   Ready    master   4d16h   v1.14.0
master2   Ready    master   4d16h   v1.14.0
node1     Ready    <none>   4d16h   v1.14.0
node2     Ready    <none>   4d16h   v1.14.0
node3     Ready    <none>   4d16h   v1.14.0

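For convenience, the k8s cluster from the previous articles is reused here; remember to delete the k8s resource objects left over from the earlier experiments.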

- Deploy ELK with docker-compose:

For the deployment process, see "Deploying ELFK with docker-compose".

mkdir /software && cd /software

git clone https://github.com/Tobewont/elfk-docker.git

cd elfk-docker/

echo 'ELK_VERSION=7.6.2' > .env    # set the ELK version

vim elasticsearch/Dockerfile

ARG ELK_VERSION=7.6.2

# https://github.com/elastic/elasticsearch-docker
FROM docker.elastic.co/elasticsearch/elasticsearch-oss:${ELK_VERSION}
# FROM elasticsearch:${ELK_VERSION}

# Add your elasticsearch plugins setup here
# Example: RUN elasticsearch-plugin install analysis-icu

vim elasticsearch/config/elasticsearch.yml

---
## Default Elasticsearch configuration from Elasticsearch base image.
## https://github.com/elastic/elasticsearch/blob/master/distribution/docker/src/docker/config/elasticsearch.yml
#
cluster.name: "docker-cluster"
network.host: "0.0.0.0"

## X-Pack settings
## see https://www.elastic.co/guide/en/elasticsearch/reference/current/setup-xpack.html
#
#xpack.license.self_generated.type: trial    # trial is a one-month trial license; it can be changed to basic
#xpack.security.enabled: true
#xpack.monitoring.collection.enabled: true
#http.cors.enabled: true
#http.cors.allow-origin: "*"
#http.cors.allow-headers: Authorization,X-Requested-With,Content-Length,Content-Type

vim kibana/Dockerfile

ARG ELK_VERSION=7.6.2

# https://github.com/elastic/kibana-docker
FROM docker.elastic.co/kibana/kibana-oss:${ELK_VERSION}
# FROM kibana:${ELK_VERSION}

# Add your kibana plugins setup here
# Example: RUN kibana-plugin install

vim kibana/config/kibana.yml

---
## Default Kibana configuration from Kibana base image.
## https://github.com/elastic/kibana/blob/master/src/dev/build/tasks/os_packages/docker_generator/templates/kibana_yml.template.js
#
server.name: "kibana"
server.host: "0.0.0.0"
elasticsearch.hosts: [ "http://elasticsearch:9200" ]
# xpack.monitoring.ui.container.elasticsearch.enabled: true

## X-Pack security credentials
#
# elasticsearch.username: elastic
# elasticsearch.password: changeme

vim logstash/Dockerfile

ARG ELK_VERSION=7.6.2

# https://github.com/elastic/logstash-docker
FROM docker.elastic.co/logstash/logstash-oss:${ELK_VERSION}
# FROM logstash:${ELK_VERSION}

# Add your logstash plugins setup here
# Example: RUN logstash-plugin install logstash-filter-json
RUN logstash-plugin install logstash-filter-multiline

vim logstash/config/logstash.yml

---
## Default Logstash configuration from Logstash base image.
## https://github.com/elastic/logstash/blob/master/docker/data/logstash/config/logstash-full.yml
#
http.host: "0.0.0.0"
#xpack.monitoring.elasticsearch.hosts: [ "http://elasticsearch:9200" ]

## X-Pack security credentials
#
#xpack.monitoring.enabled: true
#xpack.monitoring.elasticsearch.username: elastic
#xpack.monitoring.elasticsearch.password: changeme
#xpack.monitoring.collection.interval: 10s

vim logstash/pipeline/logstash.conf    # if multi-line merging is not configured in filebeat, it can be done in logstash instead

input {
  beats {
    port => 5040
  }
}

filter {
  if [type] == "nginx_access" {
    #multiline {
    #  pattern => "^\d{1,3}.\d{1,3}.\d{1,3}.\d{1,3}"
    #  negate => true
    #  what => "previous"
    #}
    grok {
      match => [ "message", "%{IPV4:remote_addr} - (%{USERNAME:user}|-) \[%{HTTPDATE:log_timestamp}\] \"%{WORD:method} %{DATA:request} HTTP/%{NUMBER:httpversion}\" %{NUMBER:status} %{NUMBER:bytes} %{QS:referer} %{QS:agent} %{QS:xforward}" ]
    }
  }

  if [type] == "nginx_error" {
    #multiline {
    #  pattern => "^\d{1,3}.\d{1,3}.\d{1,3}.\d{1,3}"
    #  negate => true
    #  what => "previous"
    #}
    # Note: this reuses the access-log pattern. nginx error-log lines have a different layout
    # (e.g. "2020/05/20 08:16:01 [error] ..."), so they will most likely not match and are
    # tagged _grokparsefailure instead of being split into fields.
    grok {
      match => [ "message", "%{IPV4:remote_addr} - (%{USERNAME:user}|-) \[%{HTTPDATE:log_timestamp}\] \"%{WORD:method} %{DATA:request} HTTP/%{NUMBER:httpversion}\" %{NUMBER:status} %{NUMBER:bytes} %{QS:referer} %{QS:agent} %{QS:xforward}" ]
    }
  }

  if [type] == "tomcat_catalina" {
    #multiline {
    #  pattern => "^\d{1,2}-\S{3}-\d{4}\s\d{1,2}:\d{1,2}:\d{1,2}"
    #  negate => true
    #  what => "previous"
    #}
    grok {
      # The name of the first named capture was lost from the original text;
      # "log_timestamp" is an assumed name, chosen to match the nginx patterns.
      match => [ "message", "(?<log_timestamp>%{MONTHDAY}-%{MONTH}-%{YEAR} %{TIME}) %{LOGLEVEL:severity} \[%{DATA:exception_info}\] %{GREEDYDATA:message}" ]
    }
  }
}

output {
  if [type] == "nginx_access" {
    elasticsearch {
      hosts => ["elasticsearch:9200"]
      #user => "elastic"
      #password => "changeme"
      index => "nginx-access"
    }
  }

  if [type] == "nginx_error" {
    elasticsearch {
      hosts => ["elasticsearch:9200"]
      #user => "elastic"
      #password => "changeme"
      index => "nginx-error"
    }
  }

  if [type] == "tomcat_catalina" {
    elasticsearch {
      hosts => ["elasticsearch:9200"]
      #user => "elastic"
      #password => "changeme"
      index => "tomcat-catalina"
    }
  }
}
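To see what the `nginx_access` grok pattern extracts, here is a rough Python sketch that rebuilds it as a plain regular expression. The stand-ins for `IPV4`, `USERNAME`, `HTTPDATE` and `QS` are simplified approximations, not the exact grok definitions, and both sample lines are made up (the access line follows the layout the pattern expects). It also illustrates the likely problem with the `nginx_error` branch: the same pattern does not match a typical nginx error-log line.

```python
import re
from typing import Dict, Optional

# Simplified stand-ins for the grok patterns used in logstash.conf.
# They are approximations for illustration, NOT the exact grok definitions.
IPV4 = r"\d{1,3}(?:\.\d{1,3}){3}"
USERNAME = r"[a-zA-Z0-9._-]+"
HTTPDATE = r"\d{2}/\w{3}/\d{4}:\d{2}:\d{2}:\d{2} [+-]\d{4}"
QS = r'"(?:[^"\\]|\\.)*"'  # a double-quoted string, quotes included

# Same field layout as the nginx_access grok pattern
NGINX_ACCESS = re.compile(
    rf"(?P<remote_addr>{IPV4}) - (?:(?P<user>{USERNAME})|-) "
    rf"\[(?P<log_timestamp>{HTTPDATE})\] "
    rf'"(?P<method>\w+) (?P<request>.*?) HTTP/(?P<httpversion>[\d.]+)" '
    rf"(?P<status>\d+) (?P<bytes>\d+) "
    rf"(?P<referer>{QS}) (?P<agent>{QS}) (?P<xforward>{QS})"
)


def parse_nginx_access(line: str) -> Optional[Dict[str, str]]:
    """Return the extracted fields, or None where grok would report a failure."""
    m = NGINX_ACCESS.search(line)  # grok patterns are unanchored by default
    return m.groupdict() if m else None


# Hypothetical sample lines: one access-log line, one error-log line
ACCESS_LINE = ('10.244.2.1 - - [20/May/2020:08:15:32 +0000] "GET / HTTP/1.1" 200 612 '
               '"-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)" "-"')
ERROR_LINE = ('2020/05/20 08:16:01 [error] 6#6: *3 open() "/usr/share/nginx/html/index.php" '
              'failed (2: No such file or directory), client: 10.244.2.1, server: localhost, '
              'request: "GET /index.php HTTP/1.1", host: "192.168.30.130:30090"')

if __name__ == "__main__":
    print(parse_nginx_access(ACCESS_LINE))
    print(parse_nginx_access(ERROR_LINE))  # None: the access-log layout does not fit
```

Only the first line yields fields; the error line returns `None`, which in Logstash corresponds to an event kept as-is with a `_grokparsefailure` tag.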

vim docker-compose.yml

version: '3.7'

services:
  elasticsearch:
    build:
      context: elasticsearch/
      args:
        ELK_VERSION: $ELK_VERSION
    volumes:
      - type: bind
        source: ./elasticsearch/config/elasticsearch.yml
        target: /usr/share/elasticsearch/config/elasticsearch.yml
        read_only: true
      - type: volume
        source: elasticsearch
        target: /usr/share/elasticsearch/data
    ports:
      - "9200:9200"
      - "9300:9300"
    environment:
      ES_JAVA_OPTS: "-Xmx256m -Xms256m"
      ELASTIC_PASSWORD: changeme
      discovery.type: single-node
    networks:
      - elk

  logstash:
    depends_on:
      - elasticsearch
    build:
      context: logstash/
      args:
        ELK_VERSION: $ELK_VERSION
    volumes:
      - type: bind
        source: ./logstash/config/logstash.yml
        target: /usr/share/logstash/config/logstash.yml
        read_only: true
      - type: bind
        source: ./logstash/pipeline
        target: /usr/share/logstash/pipeline
        read_only: true
    ports:
      - "5040:5040"
      - "9600:9600"
    environment:
      LS_JAVA_OPTS: "-Xmx256m -Xms256m"
    networks:
      - elk

  kibana:
    depends_on:
      - elasticsearch
    build:
      context: kibana/
      args:
        ELK_VERSION: $ELK_VERSION
    volumes:
      - type: bind
        source: ./kibana/config/kibana.yml
        target: /usr/share/kibana/config/kibana.yml
        read_only: true
    ports:
      - "5601:5601"
    networks:
      - elk

volumes:
  elasticsearch:
#    driver: local
#    driver_opts:
#      type: none
#      o: bind
#      device: /home/elfk/elasticsearch/data

networks:
  elk:
    driver: bridge

For convenience, it is recommended to pull the required images on the test node in advance:

docker pull docker.elastic.co/elasticsearch/elasticsearch-oss:7.6.2
docker pull docker.elastic.co/kibana/kibana-oss:7.6.2
docker pull docker.elastic.co/logstash/logstash-oss:7.6.2

Then build and start the containers:

docker-compose up --build -d

docker-compose ps

            Name                          Command               State                       Ports
-------------------------------------------------------------------------------------------------------------------------------
elfk-docker_elasticsearch_1   /usr/local/bin/docker-entr ...   Up      0.0.0.0:9200->9200/tcp, 0.0.0.0:9300->9300/tcp
elfk-docker_kibana_1          /usr/local/bin/dumb-init - ...   Up      0.0.0.0:5601->5601/tcp
elfk-docker_logstash_1        /usr/local/bin/docker-entr ...   Up      0.0.0.0:5040->5040/tcp, 5044/tcp, 0.0.0.0:9600->9600/tcp

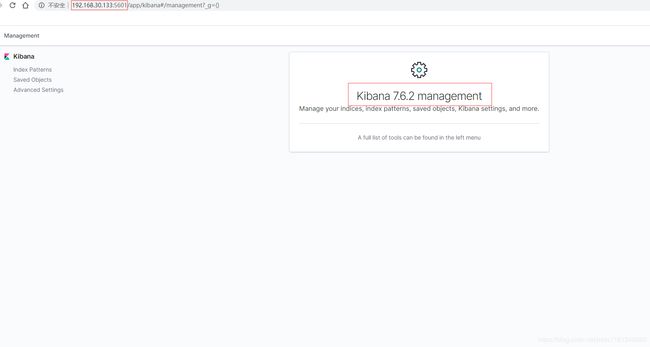

Visit the Kibana page at ip:5601:

The ELK containers are running and Kibana is accessible. Next, deploy filebeat as a sidecar to collect application logs.
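Elasticsearch can take a while to come up after `docker-compose up`. Below is a minimal polling sketch, assuming the `_cluster/health` endpoint on the exposed port 9200 with no security enabled (the X-Pack settings above are commented out). The function names are hypothetical, and `fetch` is injectable so the retry logic can be exercised without a live cluster.

```python
import json
import time
import urllib.request
from typing import Callable, Optional


def fetch_json(url: str, timeout: float = 5.0) -> dict:
    """GET a URL and decode the JSON body (standard library only)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def wait_for_es(base_url: str,
                fetch: Callable[[str], dict] = fetch_json,
                attempts: int = 30,
                delay: float = 2.0) -> str:
    """Poll <base_url>/_cluster/health until the status is green or yellow."""
    url = base_url.rstrip("/") + "/_cluster/health"
    last_error: Optional[Exception] = None
    for _ in range(attempts):
        try:
            status = fetch(url).get("status")
            # On a single node, any index with replicas keeps the cluster
            # "yellow" (replicas cannot be allocated); still usable here.
            if status in ("green", "yellow"):
                return status
        except OSError as exc:  # e.g. connection refused while ES is starting
            last_error = exc
        time.sleep(delay)
    raise TimeoutError(f"Elasticsearch at {url} not ready (last error: {last_error})")
```

For example, call `wait_for_es("http://192.168.30.133:9200")` from any host that can reach the test node; it returns the status string, or raises `TimeoutError` after roughly `attempts * delay` seconds.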

- Deploy filebeat as a sidecar to collect nginx logs:

vim filebeat-nginx.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx
  namespace: default
  labels:
    app: nginx
spec:
  selector:
    app: nginx
  ports:
  - port: 80
    nodePort: 30090
  type: NodePort

---
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-config
  namespace: default
  labels:
    app: filebeat
data:
  filebeat.yml: |-
    filebeat.config:
      inputs:
        path: ${path.config}/inputs.d/*.yml
        reload.enabled: false
      modules:
        path: ${path.config}/modules.d/*.yml
        reload.enabled: false

    filebeat.inputs:
    - type: log
      paths:
        - /logdata/access.log
      tail_files: true
      fields:
        pod_name: '${pod_name}'
        POD_IP: '${POD_IP}'
        type: nginx_access
      fields_under_root: true
      multiline.pattern: '^\d{1,3}.\d{1,3}.\d{1,3}.\d{1,3}'
      multiline.negate: true
      multiline.match: after

    - type: log
      paths:
        - /logdata/error.log
      tail_files: true
      fields:
        pod_name: '${pod_name}'
        POD_IP: '${POD_IP}'
        type: nginx_error
      fields_under_root: true
      multiline.pattern: '^\d{1,3}.\d{1,3}.\d{1,3}.\d{1,3}'
      multiline.negate: true
      multiline.match: after

    output.logstash:
      hosts: ["192.168.30.133:5040"]
      enabled: true
      worker: 1
      compression_level: 3

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  namespace: default
spec:
  replicas: 1
  minReadySeconds: 15
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      terminationGracePeriodSeconds: 30
      containers:
      - name: filebeat
        image: docker.elastic.co/beats/filebeat-oss:7.6.2
        args: [
          "-c", "/etc/filebeat/filebeat.yml",
          "-e",
        ]
        env:
        - name: POD_IP
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: status.podIP
        - name: pod_name
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.name
        securityContext:
          runAsUser: 0
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 200m
            memory: 200Mi
        volumeMounts:
        - name: config
          mountPath: /etc/filebeat/
        - name: data
          mountPath: /usr/share/filebeat/data
        - name: logdata
          mountPath: /logdata
      - name: nginx
        image: nginx:1.17.0
        ports:
        - containerPort: 80
        volumeMounts:
        - name: logdata
          mountPath: /var/log/nginx
      volumes:
      - name: data
        emptyDir: {}
      - name: logdata
        emptyDir: {}
      - name: config
        configMap:
          name: filebeat-config
          items:
          - key: filebeat.yml
            path: filebeat.yml
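The multiline settings above use `negate: true` and `match: after`: a line that matches the pattern starts a new event, and the following non-matching lines are appended to it. Here is a rough Python model of that grouping (Filebeat itself uses Go regular expressions, which behave the same for this simple pattern; `group_multiline` is a hypothetical helper). Because the dots in the pattern are unescaped, each one matches any character, so date-prefixed error-log lines such as `2020/05/20 08:16:01 [error] ...` are also treated as event starts, which is why the same pattern also works for `error.log`.

```python
import re
from typing import Iterable, List

# multiline.pattern used by both nginx inputs in the filebeat ConfigMap.
# The dots are unescaped, so each "." matches any character.
NGINX_MULTILINE = r'^\d{1,3}.\d{1,3}.\d{1,3}.\d{1,3}'


def group_multiline(lines: Iterable[str], pattern: str) -> List[str]:
    """Rough model of Filebeat multiline with negate: true, match: after.

    A line matching `pattern` starts a new event; every following line that
    does NOT match is appended to the current event. In this sketch a leading
    non-matching line simply becomes its own event.
    """
    start = re.compile(pattern)
    events: List[str] = []
    for line in lines:
        if start.search(line) or not events:
            events.append(line)          # start a new event
        else:
            events[-1] += "\n" + line    # continuation of the current event
    return events


if __name__ == "__main__":
    sample = [
        '10.244.2.1 - - [20/May/2020:08:15:32 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0" "-"',
        '    a hypothetical continuation line',
        '10.244.2.1 - - [20/May/2020:08:15:40 +0000] "GET /x HTTP/1.1" 404 153 "-" "curl/7.29.0" "-"',
    ]
    for event in group_multiline(sample, NGINX_MULTILINE):
        print(repr(event))
```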

kubectl apply -f filebeat-nginx.yaml

kubectl get pod |grep nginx

nginx-865f745bdd-mkdl6   2/2   Running   0   15s

kubectl describe pod nginx-865f745bdd-mkdl6

  Normal  Scheduled  36s  default-scheduler  Successfully assigned default/nginx-865f745bdd-mkdl6 to node2
  Normal  Pulled     34s  kubelet, node2     Container image "docker.elastic.co/beats/filebeat-oss:7.6.2" already present on machine
  Normal  Created    34s  kubelet, node2     Created container filebeat
  Normal  Started    34s  kubelet, node2     Started container filebeat
  Normal  Pulled     34s  kubelet, node2     Container image "nginx:1.17.0" already present on machine
  Normal  Created    34s  kubelet, node2     Created container nginx
  Normal  Started    34s  kubelet, node2     Started container nginx

Visit the nginx page at ip:30090 to generate logs:

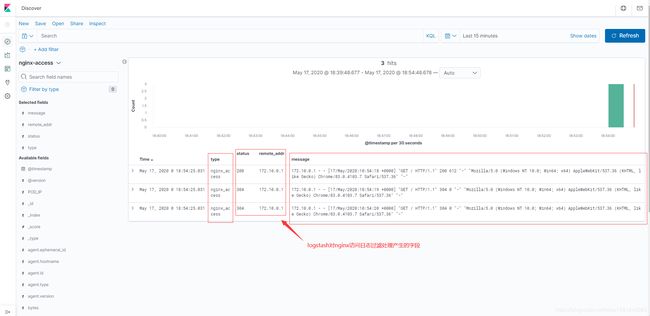

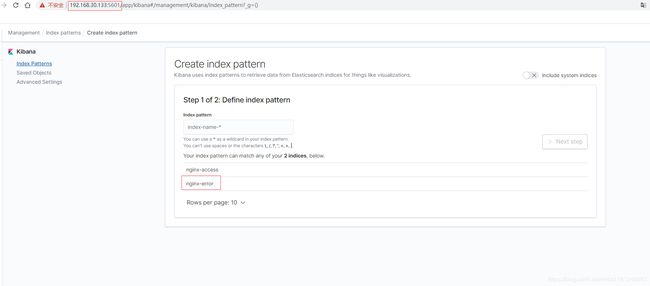

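- Create an index pattern in Kibana and view the nginx logs:

As shown, the specified index nginx-access has been created; create an index pattern with the same name to view the logs.

After being processed by Logstash, the nginx access logs now contain the corresponding fields. Next, visit a URL that does not exist (such as ip:30090/index.php) to generate nginx error logs,

then check in Kibana whether the index has been created: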

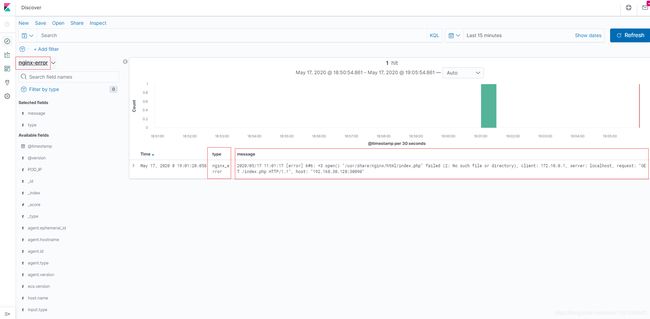

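As shown, the specified index nginx-error has been created; create an index pattern with the same name to view the logs.

View the corresponding nginx error logs.

Nginx log collection is complete.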
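Besides the Kibana UI, the new indices can be checked through the Elasticsearch `_cat/indices` API. Here is a small sketch, assuming Elasticsearch is reachable on the test node at port 9200 without authentication; `fetch_cat_indices` and `doc_counts` are hypothetical helper names.

```python
import json
import urllib.request
from typing import Dict, Iterable


def fetch_cat_indices(es_url: str, timeout: float = 5.0) -> str:
    """GET <es_url>/_cat/indices?format=json and return the raw JSON text."""
    url = es_url.rstrip("/") + "/_cat/indices?format=json"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def doc_counts(cat_indices_json: str, wanted: Iterable[str]) -> Dict[str, int]:
    """Map each wanted index that exists to its document count."""
    rows = json.loads(cat_indices_json)
    # docs.count is returned as a string and may be null for closed indices
    counts = {row["index"]: int(row.get("docs.count") or 0) for row in rows}
    return {name: counts[name] for name in wanted if name in counts}
```

For example: `doc_counts(fetch_cat_indices("http://192.168.30.133:9200"), ["nginx-access", "nginx-error"])`. An index missing from the result has not received any events yet, since Elasticsearch creates it on the first indexed document.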

- Deploy filebeat as a sidecar to collect tomcat logs:

vim filebeat-tomcat.yaml

apiVersion: v1
kind: Service
metadata:
  name: tomcat
  namespace: default
  labels:
    app: tomcat
spec:
  selector:
    app: tomcat
  ports:
  - port: 8080
    nodePort: 30100
  type: NodePort

---
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-config-tomcat
  namespace: default
  labels:
    app: filebeat
data:
  filebeat.yml: |-
    filebeat.config:
      inputs:
        path: ${path.config}/inputs.d/*.yml
        reload.enabled: false
      modules:
        path: ${path.config}/modules.d/*.yml
        reload.enabled: false

    filebeat.inputs:
    - type: log
      paths:
        - /logdata/catalina*.log
      tail_files: true
      fields:
        pod_name: '${pod_name}'
        POD_IP: '${POD_IP}'
        type: tomcat_catalina
      fields_under_root: true
      multiline.pattern: '^\d{1,2}-\S{3}-\d{4}\s\d{1,2}:\d{1,2}:\d{1,2}'
      multiline.negate: true
      multiline.match: after

    output.logstash:
      hosts: ["192.168.30.133:5040"]
      enabled: true
      worker: 1
      compression_level: 3

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tomcat
  namespace: default
spec:
  replicas: 1
  minReadySeconds: 15
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
  selector:
    matchLabels:
      app: tomcat
  template:
    metadata:
      labels:
        app: tomcat
    spec:
      terminationGracePeriodSeconds: 30
      containers:
      - name: filebeat
        image: docker.elastic.co/beats/filebeat-oss:7.6.2
        args: [
          "-c", "/etc/filebeat/filebeat.yml",
          "-e",
        ]
        env:
        - name: POD_IP
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: status.podIP
        - name: pod_name
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.name
        securityContext:
          runAsUser: 0
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 200m
            memory: 200Mi
        volumeMounts:
        - name: config
          mountPath: /etc/filebeat/
        - name: data
          mountPath: /usr/share/filebeat/data
        - name: logdata
          mountPath: /logdata
      - name: tomcat
        image: tomcat:8.0.51-alpine
        ports:
        - containerPort: 8080
        volumeMounts:
        - name: logdata
          mountPath: /usr/local/tomcat/logs
      volumes:
      - name: data
        emptyDir: {}
      - name: logdata
        emptyDir: {}
      - name: config
        configMap:
          name: filebeat-config-tomcat
          items:
          - key: filebeat.yml
            path: filebeat.yml
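The tomcat input relies on the catalina timestamp to find event starts, so stack traces stay attached to the log line that produced them. The Python sketch below applies the ConfigMap's `multiline.pattern` to some made-up catalina lines (in the `dd-MMM-yyyy HH:mm:ss.SSS` layout the pattern expects) and then extracts fields with a simplified stand-in for the `tomcat_catalina` grok. The regexes are approximations, not the exact grok definitions, and `log_timestamp` is an assumed field name.

```python
import re
from typing import Dict, List, Optional

# multiline.pattern from the filebeat-config-tomcat ConfigMap
CATALINA_START = re.compile(r'^\d{1,2}-\S{3}-\d{4}\s\d{1,2}:\d{1,2}:\d{1,2}')

# Simplified stand-in for the tomcat_catalina grok in logstash.conf,
# NOT the exact grok definitions; "log_timestamp" is an assumed field name.
CATALINA_FIELDS = re.compile(
    r'(?P<log_timestamp>\d{1,2}-\w{3}-\d{4} \d{2}:\d{2}:\d{2}(?:\.\d+)?) '
    r'(?P<severity>[A-Z]+) \[(?P<exception_info>[^\]]*)\] (?P<message>.*)'
)


def split_events(lines: List[str]) -> List[str]:
    """Group lines the way negate: true / match: after would (rough model)."""
    events: List[str] = []
    for line in lines:
        if CATALINA_START.match(line) or not events:
            events.append(line)
        else:
            events[-1] += "\n" + line   # e.g. stack-trace lines
    return events


def parse_event(event: str) -> Optional[Dict[str, str]]:
    """Extract fields from an event; '.' stops at the first newline here."""
    m = CATALINA_FIELDS.match(event)
    return m.groupdict() if m else None


# Hypothetical catalina log lines
SAMPLE = [
    "20-May-2020 08:20:11.123 INFO [main] org.apache.catalina.startup.Catalina.start Server startup in 1234 ms",
    "20-May-2020 08:21:02.456 SEVERE [http-nio-8080-exec-1] "
    "org.apache.catalina.core.StandardWrapperValve.invoke Servlet.service() threw exception",
    "java.lang.NullPointerException",
    "\tat com.example.DemoServlet.doGet(DemoServlet.java:42)",
]

if __name__ == "__main__":
    for ev in split_events(SAMPLE):
        print(parse_event(ev))
```

The four sample lines become two events, the second one carrying the exception and stack-trace lines with it.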

kubectl apply -f filebeat-tomcat.yaml

kubectl get pod |grep tomcat

tomcat-5c7b6644f4-jwmxc   2/2   Running   0   10s

kubectl describe pod tomcat-5c7b6644f4-jwmxc

  Normal  Scheduled  35s  default-scheduler  Successfully assigned default/tomcat-5c7b6644f4-jwmxc to node1
  Normal  Pulled     34s  kubelet, node1     Container image "docker.elastic.co/beats/filebeat-oss:7.6.2" already present on machine
  Normal  Created    34s  kubelet, node1     Created container filebeat
  Normal  Started    34s  kubelet, node1     Started container filebeat
  Normal  Pulled     34s  kubelet, node1     Container image "tomcat:8.0.51-alpine" already present on machine
  Normal  Created    34s  kubelet, node1     Created container tomcat
  Normal  Started    34s  kubelet, node1     Started container tomcat

Visit the tomcat page at ip:30100 to generate logs:

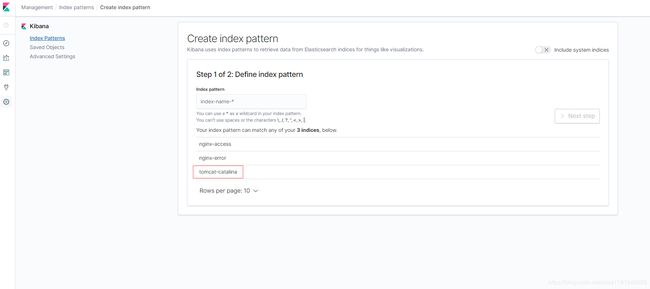

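- Create an index pattern in Kibana and view the tomcat logs:

As shown, the specified index tomcat-catalina has been created; create an index pattern with the same name to view the logs.

After being processed by Logstash, the tomcat catalina logs now contain the corresponding fields. Tomcat log collection is complete.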

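- Summary:

Deploying filebeat as a sidecar to collect k8s logs is more direct than deploying filebeat as a node-level log agent (logagent): you can point it at specific log paths and define custom indices, which also makes the logs easier to browse. The drawback is the extra resource usage, although it stays within an acceptable range.

Note that the ConfigMaps that filebeat uses for different applications should have distinct names, to avoid conflicts and accidental deletion.

In the previous article, filebeat sent the collected logs directly to Elasticsearch, and ELK was deployed inside the k8s cluster. In this article, filebeat sends the logs to Logstash, which processes them and then outputs them to Elasticsearch, and ELK is deployed separately (with docker-compose here for convenience; a traditional deployment also works).

This completes collecting k8s logs with ELK + filebeat. Personally, I find the sidecar approach more suitable than the logagent approach, because it makes filtering, processing, and viewing logs simpler and more effective. For alerting on error logs, see "ELK Cluster Deployment (Part 3)"; it is not repeated here.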