Avengers: Endgame (Avengers 4) Review Data Analysis

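As a Marvel fan, once I had seen the film I wanted to know how other viewers rated Avengers: Endgame. And as a programmer, the natural way to find out was to analyze the review data with code. Below, using the movie API provided by Maoyan (猫眼), I'll show how to analyze the reviews of Avengers: Endgame.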

1. Scraping the data

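Here we scrape the data by calling Maoyan's API with the requests library. The endpoint is http://m.maoyan.com/mmdb/comments/movie/248172.json?_v_=yes&offset=1.

- 248172 is the film's ID on Maoyan, taken directly from the Maoyan website.
- offset is the paging offset. For simplicity, think of it as the page number.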
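As an illustration, here is a minimal standard-library sketch of how one URL per offset can be built. The `_v_=yes` parameter is taken from `base_url` in the crawling code. The helper name `build_comment_url` is hypothetical and is not part of any Maoyan API.

```python
from urllib.parse import urlencode

# Endpoint pattern from the article; 248172 is the film's Maoyan id
BASE = "http://m.maoyan.com/mmdb/comments/movie/{movie_id}.json"

def build_comment_url(movie_id: int, offset: int) -> str:
    """Return the review URL for one offset (hypothetical helper)."""
    query = urlencode({"_v_": "yes", "offset": offset})
    return BASE.format(movie_id=movie_id) + "?" + query

# URLs for the first three offsets
urls = [build_comment_url(248172, i) for i in range(1, 4)]
for u in urls:
    print(u)
```

Using `urlencode` instead of string concatenation keeps the query string correctly escaped if more parameters are ever added.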

Use requests to send each request and get the JSON data returned by the endpoint:

import requests
import pandas as pd

base_url = "http://m.maoyan.com/mmdb/comments/movie/248172.json?_v_=yes&offset="

# Fetch one page of reviews; return the parsed JSON, or None on failure
def crawl_one_page_data(url):
    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.108 Safari/537.36",
    }
    try:
        response = requests.get(url, headers=headers, timeout=10)
    except requests.RequestException:
        return None
    if response.status_code == 200:
        return response.json()
    return None

# Parse one page of results, yielding one row per review
def parse(data):
    # The reviews are stored under the "cmts" key
    comments = data.get("cmts")
    if not comments:
        return
    for cm in comments:
        yield [cm.get("id"),        # review id
               cm.get("time"),      # review time
               cm.get("score"),     # review score
               cm.get("cityName"),  # reviewer's city
               cm.get("nickName"),  # reviewer's nickname
               cm.get("gender"),    # reviewer's gender: 1 = male, 2 = female
               cm.get("content")]   # review text

# Crawl pages 1..total_page_num and collect all reviews
def crawl_film_review(total_page_num=100):
    data = []
    for i in range(1, total_page_num + 1):
        url = base_url + str(i)
        crawl_data = crawl_one_page_data(url)
        if crawl_data:
            data.extend(parse(crawl_data))
    return data

columns = ["id", "time", "score", "city", "nickname", "gender", "content"]
df = pd.DataFrame(crawl_film_review(4000), columns=columns)

# Map the gender codes to labels; any other value is labeled "Unknown"
# instead of being counted as female
df["gender"] = df.gender.map({1: "Male", 2: "Female"}).fillna("Unknown")

# Remove duplicate reviews by id
df = df.drop_duplicates(subset=["id"])
df.head()

# Save the crawled data, then read it back
df.to_csv("复仇者联盟影评_1000.csv", index=False)

with open("复仇者联盟影评_1000.csv", encoding="UTF-8") as f:
    df = pd.read_csv(f)
df.head()

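Note

The file name contains Chinese characters, so the file is opened with open() and the file object is passed to pd.read_csv. The file also holds Chinese text written as UTF-8, which is the pandas default for to_csv. For that reason open() must be given encoding="UTF-8".

Without that argument, open() falls back to the system's locale encoding, which is GBK on Chinese-locale Windows. Reading can then fail or produce garbled text.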
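The standard-library sketch below shows why the encoding has to match. It writes a few rows of Chinese text to a CSV file as UTF-8, then reads them back with the same encoding. The file name and rows are illustrative only, not the article's data.

```python
import csv
import os
import tempfile

# Illustrative rows (not real review data)
rows = [["id", "city", "content"],
        ["1", "北京", "太好看了"],
        ["2", "上海", "泪目"]]

# A Chinese file name, similar to the one used in the article
path = os.path.join(tempfile.mkdtemp(), "影评示例.csv")

# Write explicitly as UTF-8 (pandas' to_csv also defaults to UTF-8)
with open(path, "w", encoding="utf-8", newline="") as f:
    csv.writer(f).writerows(rows)

# Read back with the same encoding; a different one (e.g. GBK) could raise
# UnicodeDecodeError or produce garbled text
with open(path, encoding="utf-8", newline="") as f:
    loaded = list(csv.reader(f))

print(loaded == rows)  # True
```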

2. Gender analysis

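# Uses the pyecharts 0.5.x API; pyecharts 1.x imports charts from pyecharts.charts instead
from pyecharts import Pie

# Count how many reviews come from each gender
gender_count = df.gender.value_counts().to_dict()

pie = Pie("Gender breakdown")
pie.add(name="", attr=list(gender_count.keys()), value=list(gender_count.values()), is_label_show=True)
pie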
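The pie chart is just a count per gender label, converted to shares. Here is a standard-library sketch of the same computation. The list of labels is made up and stands in for `df.gender`.

```python
from collections import Counter

# Made-up gender labels standing in for df.gender
genders = ["Male", "Female", "Male", "Unknown", "Male", "Female"]

counts = Counter(genders)  # plays the role of df.gender.value_counts()
total = sum(counts.values())

# Percentage of each label, most common first
shares = {label: round(100 * n / total, 1) for label, n in counts.most_common()}
print(shares)  # {'Male': 50.0, 'Female': 33.3, 'Unknown': 16.7}
```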

3. Score distribution

from pyecharts import Bar

# Count how often each score occurs, ordered by score
score_count = df.score.value_counts().sort_index()
score_list = score_count.index.tolist()
count_list = score_count.tolist()

bar = Bar("Score distribution", width=450, height=450)
bar.add("", score_list, count_list)
bar

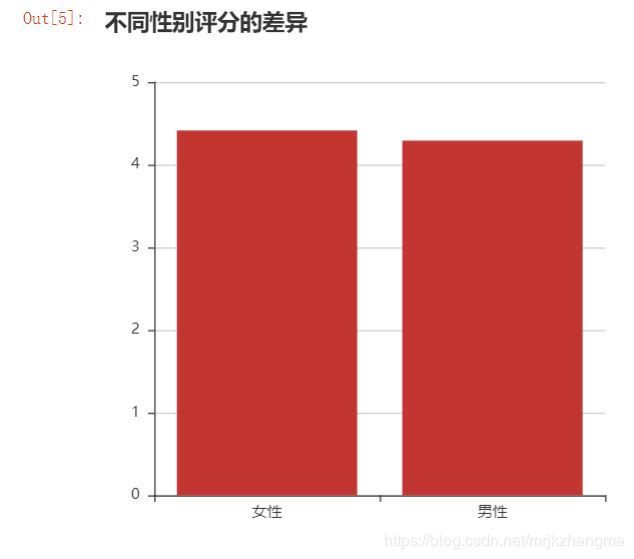
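The two lists passed to `bar.add` are the distinct scores in ascending order and their counts. Without pandas, the same lists could be built as follows. The scores are made up for illustration.

```python
from collections import Counter

# Made-up scores standing in for df.score
scores = [5, 4.5, 5, 3, 5, 4.5, 1]

# Count each score and sort by the score itself, like value_counts().sort_index()
score_count = sorted(Counter(scores).items())
score_list = [s for s, _ in score_count]
count_list = [n for _, n in score_count]

print(score_list)  # [1, 3, 4.5, 5]
print(count_list)  # [1, 1, 2, 3]
```

Sorting by the score is what `sort_index()` contributes. Without it, `value_counts()` would order the bars by how common each score is.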

4. Score differences by gender

# Mean score for each gender
sex_score_mean = df.groupby(["gender"])["score"].mean().to_dict()

bar = Bar("Mean score by gender", width=450, height=450)
bar.add("", list(sex_score_mean.keys()), list(sex_score_mean.values()), is_stack=True)
bar
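A per-group mean is a sum and a count per key. The pure-Python sketch below reproduces `groupby(...)["score"].mean()` on made-up (gender, score) pairs, which can help when checking chart values by hand.

```python
from collections import defaultdict

# Made-up (gender, score) pairs standing in for df[["gender", "score"]]
reviews = [("Male", 5), ("Female", 4.5), ("Male", 4), ("Female", 5), ("Male", 3)]

# Accumulate [sum, count] per gender
totals = defaultdict(lambda: [0.0, 0])
for gender, score in reviews:
    totals[gender][0] += score
    totals[gender][1] += 1

mean_by_gender = {g: s / n for g, (s, n) in totals.items()}
print(mean_by_gender)  # {'Male': 4.0, 'Female': 4.75}
```

pandas' `groupby` sorts its keys by default, so its dictionary may list the genders in a different order than this sketch.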

5. Score differences between tier-1 and tier-2 cities

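from pyecharts import Bar3D

# Mean score per (gender, city): Beijing and Shanghai vs. Hefei and Nanning
city_list = ["北京", "上海", "合肥", "南宁"]
gender_city_score_mean = df[df.city.isin(city_list)].groupby(["gender", "city"], as_index=False)["score"].mean()

# Encode cities and genders as integer indices; build [city index, gender index, mean score] triples
city_data, city_index = pd.factorize(gender_city_score_mean.city)
gender_data, gender_index = pd.factorize(gender_city_score_mean.gender)
data = [[int(c), int(g), float(s)] for c, g, s in zip(city_data, gender_data, gender_city_score_mean.score)]

# Assumption: the plotting call is not shown in the original; a pyecharts 0.5.x Bar3D is one way to draw these triples
bar3d = Bar3D("Score by city and gender", width=600, height=450)
bar3d.add("", city_index.tolist(), gender_index.tolist(), data, is_visualmap=True, visual_range=[0, max(d[2] for d in data)])
bar3d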

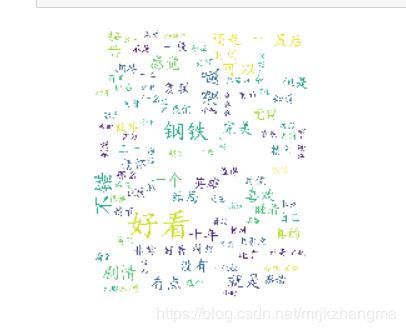
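`pd.factorize` turns each distinct city or gender into an integer code, in order of first appearance. Each row then becomes an `[x index, y index, value]` triple that a grid chart (a heatmap or 3D bar) can place. Below is a dependency-free sketch of the same idea on made-up rows. The `factorize` function here is a hypothetical stand-in written for illustration, not the pandas function.

```python
# Made-up per-(gender, city) mean scores standing in for gender_city_score_mean
rows = [("Female", "上海", 4.6), ("Female", "北京", 4.7),
        ("Male", "上海", 4.5), ("Male", "北京", 4.4)]

def factorize(values):
    """Map each value to an integer code in order of first appearance,
    returning (codes, uniques) like pd.factorize (hypothetical stand-in)."""
    uniques, codes, seen = [], [], {}
    for v in values:
        if v not in seen:
            seen[v] = len(uniques)
            uniques.append(v)
        codes.append(seen[v])
    return codes, uniques

city_codes, city_index = factorize(r[1] for r in rows)
gender_codes, gender_index = factorize(r[0] for r in rows)

# One [x index, y index, value] triple per (city, gender) cell
data = [[c, g, r[2]] for c, g, r in zip(city_codes, gender_codes, rows)]
print(city_index)    # ['上海', '北京']
print(gender_index)  # ['Female', 'Male']
print(data)          # [[0, 0, 4.6], [1, 0, 4.7], [0, 1, 4.5], [1, 1, 4.4]]
```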

6. Review word cloud

from wordcloud import WordCloud, STOPWORDS, ImageColorGenerator
import jieba
import matplotlib.pyplot as plt

# Segment all reviews with jieba and join the words with spaces
words = " ".join(jieba.cut(df.content.str.cat(sep=" ")))

# Load the background image (Groot) used as the word-cloud mask
background_image = plt.imread("格鲁特.jpg")

# Stopwords: copy the built-in set so the global STOPWORDS is not modified
stopwords = set(STOPWORDS)
stopwords.add("电影")  # "电影" = "movie"

wc = WordCloud(stopwords=stopwords,
               font_path="C:/Windows/Fonts/simkai.ttf",  # a Chinese font (KaiTi); without it Chinese renders as empty boxes
               mask=background_image, background_color="white", max_words=100)
my_wc = wc.generate_from_text(words)

# Colors taken from the background image, for the optional recolor below
image_colors = ImageColorGenerator(background_image)
plt.imshow(my_wc)
# plt.imshow(my_wc.recolor(color_func=image_colors))
plt.axis("off")
plt.show()
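Roughly speaking, `WordCloud` counts how often each word occurs after removing stopwords and keeps at most `max_words` of them. It then sizes the words by relative frequency. The standard-library sketch below shows only that counting step, on a made-up, already-segmented string. It is a simplification: the real library also tokenizes with its own regular expression, handles collocations, and lays the words out inside the mask.

```python
from collections import Counter

# Made-up text that is already segmented and space-joined, like `words` in the article
words = "电影 特效 感动 漫威 电影 感动 钢铁侠 感动 特效"

stopwords = {"电影"}  # the same extra stopword the article adds
max_words = 2         # the article uses max_words=100

# Count tokens, drop stopwords, keep the most frequent ones
freq = Counter(w for w in words.split() if w not in stopwords)
top = freq.most_common(max_words)
print(top)  # [('感动', 3), ('特效', 2)]
```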