[Spring Cloud Alibaba] [Hoxton] Seata: From Getting Started to Hands-On Practice

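1 Nacos: Getting Started, Configuration Center, Clustering

2 Sentinel: Getting Started, Flow Control, Degradation (Part 1)

3 Sentinel: Hotspot Rules, @SentinelResource, Circuit Breaking, Persistence (Part 2)

4 Seata: From Getting Started to Hands-On Practice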

1 Introduction

Official site: https://seata.io/zh-cn/

1.1 Seata

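Seata is an open-source distributed transaction solution dedicated to providing high-performance, easy-to-use distributed transaction services. It offers the AT, TCC, SAGA, and XA transaction modes, giving users a one-stop solution for distributed transactions.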

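1.2 Terminology

(1) TC - Transaction Coordinator

Maintains the state of global and branch transactions and drives the global commit or rollback.

(2) TM - Transaction Manager

Defines the scope of a global transaction: begins it, then commits it or rolls it back.

(3) RM - Resource Manager

Manages the resources that branch transactions work on, talks to the TC to register branch transactions and report their status, and drives the commit or rollback of each branch transaction.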

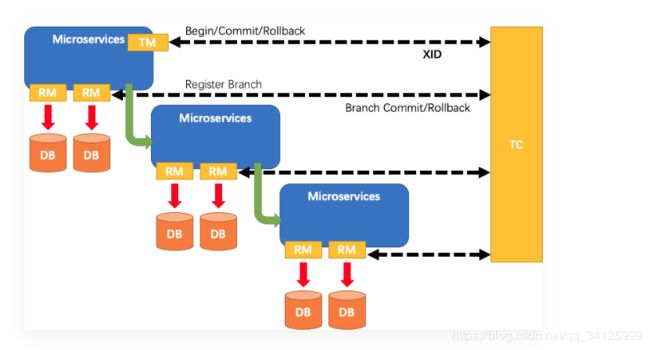

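1.3 Flow overview

(1) The TM asks the TC to begin a global transaction; the TC creates it and generates a globally unique XID.

(2) The XID is propagated through the context of the microservice call chain.

(3) Each RM registers its branch transaction with the TC, placing it under the global transaction identified by the XID.

(4) The TM asks the TC to commit or roll back the global transaction identified by the XID.

(5) The TC drives every branch transaction under the XID to complete the commit or rollback.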
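
The five steps above can be sketched with a small in-memory model. This is not Seata code: Coordinator, Branch, and RecordingBranch are hypothetical names invented for illustration, and the XID is handed over as a plain argument instead of travelling in an RPC context.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.UUID;

// Illustrative model only; none of these types are Seata classes.
class FlowSketch {

    /** A branch transaction: local work that can be committed or rolled back. */
    interface Branch {
        void commit();
        void rollback();
    }

    /** Hypothetical coordinator playing the TC role. */
    static class Coordinator {
        private final Map<String, List<Branch>> branchesByXid = new LinkedHashMap<>();

        // Step 1: the TM asks for a new global transaction and receives its XID.
        String begin() {
            String xid = UUID.randomUUID().toString();
            branchesByXid.put(xid, new ArrayList<>());
            return xid;
        }

        // Step 3: an RM registers its branch under the XID it received.
        void registerBranch(String xid, Branch branch) {
            List<Branch> branches = branchesByXid.get(xid);
            if (branches == null) {
                throw new IllegalStateException("unknown XID: " + xid);
            }
            branches.add(branch);
        }

        // Steps 4 and 5: the TM submits its decision and the TC drives every branch.
        void commit(String xid) {
            for (Branch b : finish(xid)) b.commit();
        }

        void rollback(String xid) {
            for (Branch b : finish(xid)) b.rollback();
        }

        private List<Branch> finish(String xid) {
            List<Branch> branches = branchesByXid.remove(xid);
            if (branches == null) {
                throw new IllegalStateException("unknown XID: " + xid);
            }
            return branches;
        }
    }

    /** Records what the coordinator asked the branch to do. */
    static class RecordingBranch implements Branch {
        private final String name;
        private final List<String> events;

        RecordingBranch(String name, List<String> events) {
            this.name = name;
            this.events = events;
        }

        public void commit() { events.add(name + ":commit"); }
        public void rollback() { events.add(name + ":rollback"); }
    }

    /** Runs one global transaction and returns the events seen by the branches. */
    static List<String> run(boolean businessFailed) {
        List<String> events = new ArrayList<>();
        Coordinator tc = new Coordinator();
        String xid = tc.begin();                                        // step 1
        // Step 2: in a real system the XID travels with each remote call.
        tc.registerBranch(xid, new RecordingBranch("storage", events)); // step 3
        tc.registerBranch(xid, new RecordingBranch("account", events));
        if (businessFailed) {
            tc.rollback(xid);                                           // steps 4 and 5
        } else {
            tc.commit(xid);
        }
        return events;
    }

    public static void main(String[] args) {
        System.out.println(run(false));
        System.out.println(run(true));
    }
}
```

The point of the model is that branches never decide the outcome themselves: they only register, and the coordinator applies the TM's single decision to all of them.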

2 Installation

2.1 Environment

192.168.0.38 mysql

192.168.0.39 java8 nacos seata

2.2 Extract the archive

tar -zxvf seata-server-0.9.0.tar.gz

2.3 Edit the configuration file

cd /usr/local/seata/conf

vim file.conf

#(1) 修改service模块的分组zrs_tx_group

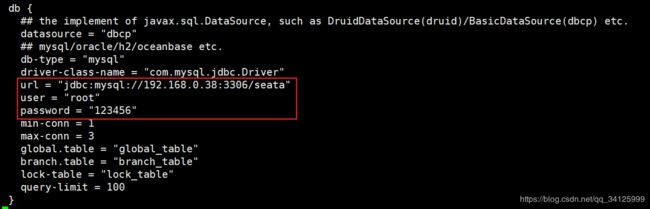

#(2) 修改store模块

vgroup_mapping.my_test_tx_group = "zrs_tx_group"

mode = "db"

url = "jdbc:mysql://192.168.0.38:3306/seata"

user = "root"

password = "123456"

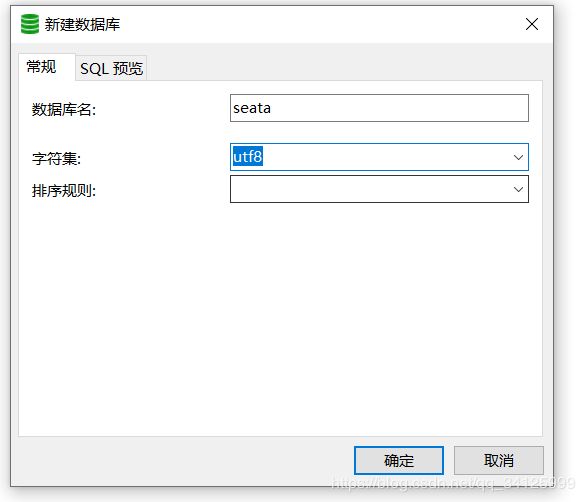

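2.4 Create the database

Create a database named seata on the MySQL host (192.168.0.38), matching the JDBC URL configured in file.conf.

2.5 Create the tables in the seata database

#SQL source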

https://github.com/seata/seata/blob/v0.9.0/server/src/main/resources/db_store.sql

-- the table to store GlobalSession data

drop table if exists `global_table`;

create table `global_table` (

`xid` varchar(128) not null,

`transaction_id` bigint,

`status` tinyint not null,

`application_id` varchar(32),

`transaction_service_group` varchar(32),

`transaction_name` varchar(128),

`timeout` int,

`begin_time` bigint,

`application_data` varchar(2000),

`gmt_create` datetime,

`gmt_modified` datetime,

primary key (`xid`),

key `idx_gmt_modified_status` (`gmt_modified`, `status`),

key `idx_transaction_id` (`transaction_id`)

);

-- the table to store BranchSession data

drop table if exists `branch_table`;

create table `branch_table` (

`branch_id` bigint not null,

`xid` varchar(128) not null,

`transaction_id` bigint ,

`resource_group_id` varchar(32),

`resource_id` varchar(256) ,

`lock_key` varchar(128) ,

`branch_type` varchar(8) ,

`status` tinyint,

`client_id` varchar(64),

`application_data` varchar(2000),

`gmt_create` datetime,

`gmt_modified` datetime,

primary key (`branch_id`),

key `idx_xid` (`xid`)

);

-- the table to store lock data

drop table if exists `lock_table`;

create table `lock_table` (

`row_key` varchar(128) not null,

`xid` varchar(96),

`transaction_id` long ,

`branch_id` long,

`resource_id` varchar(256) ,

`table_name` varchar(32) ,

`pk` varchar(36) ,

`gmt_create` datetime ,

`gmt_modified` datetime,

primary key(`row_key`)

);

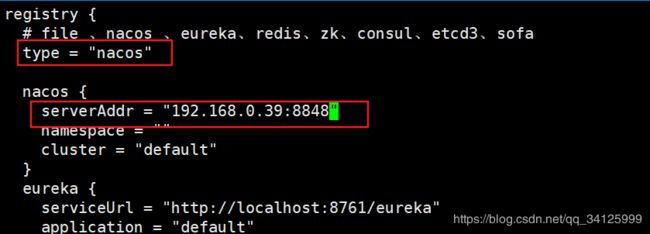

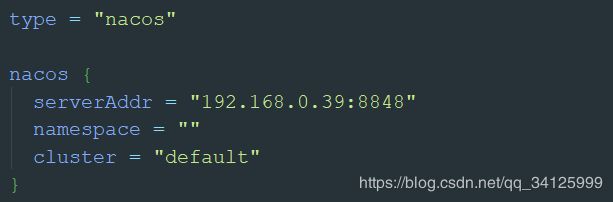

2.6 Edit the registry file

cd /usr/local/seata/conf

vim registry.conf

# file 、nacos 、eureka、redis、zk、consul、etcd3、sofa

type = "nacos"

nacos {

serverAddr = "192.168.0.39:8848"

namespace = ""

cluster = "default"

}

2.7 Startup

#Start nacos

cd /usr/local/nacos/bin

bash startup.sh -m standalone

#Start seata

cd /usr/local/seata/bin

nohup ./seata-server.sh >seata.log 2>&1 &

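3 Hands-on scenario

We will create three services here: an order service, a storage (inventory) service, and an account service.

When a user places an order, the order service creates the order, calls the storage service remotely to deduct the stock of the ordered product, then calls the account service remotely to deduct the balance of the user's account, and finally marks the order as completed in the order service.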

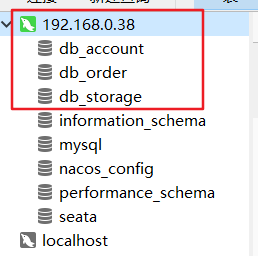
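
To see why this flow needs a global transaction, here is a minimal plain-Java sketch of the same four steps with no coordination between the services. The classes and the starting values (10 units of stock, a balance of 50) are assumptions made up for illustration; they are not the real services built later in this article.

```java
import java.math.BigDecimal;

// Illustrative only: three "services" that each commit their own change immediately.
class NoGlobalTxSketch {

    static class Order {
        int status = 0; // 0 = created, 1 = completed
    }

    static class Storage {
        int residue = 10; // assumed starting stock

        void decrease(int count) {
            if (count > residue) throw new IllegalStateException("insufficient stock");
            residue -= count; // committed locally right away
        }
    }

    static class Account {
        BigDecimal residue = new BigDecimal("50"); // assumed starting balance

        void decrease(BigDecimal money) {
            if (money.compareTo(residue) > 0) throw new IllegalStateException("insufficient balance");
            residue = residue.subtract(money); // committed locally right away
        }
    }

    /** Runs the order flow and returns the state of all three services afterwards. */
    static String run(int count, BigDecimal money) {
        Order order = new Order();          // step 1: create the order
        Storage storage = new Storage();
        Account account = new Account();
        try {
            storage.decrease(count);        // step 2: deduct stock
            account.decrease(money);        // step 3: deduct balance (may fail)
            order.status = 1;               // step 4: mark the order as completed
        } catch (IllegalStateException e) {
            // Without a global transaction nothing undoes the stock deduction.
        }
        return "order.status=" + order.status
                + ", storage.residue=" + storage.residue
                + ", account.residue=" + account.residue;
    }

    public static void main(String[] args) {
        System.out.println(run(2, new BigDecimal("30")));
        System.out.println(run(2, new BigDecimal("100")));
    }
}
```

With a request for 2 units costing 100, the account step fails after the stock has already been deducted, so the stock drops to 8 while the balance stays at 50 and the order remains at status 0. This is the kind of inconsistency Seata is meant to prevent.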

3.1 Database setup

(1) Create the databases db_account, db_order, and db_storage

(2) Create the table in db_order

CREATE TABLE `t_order` (

`id` int(11) NOT NULL AUTO_INCREMENT,

`user_id` int(11) DEFAULT NULL,

`product_id` int(11) DEFAULT NULL,

`count` int(11) DEFAULT NULL,

`money` decimal(11,0) DEFAULT NULL,

`status` int(1) NOT NULL,

PRIMARY KEY (`id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

(3) Create the table in db_storage

CREATE TABLE `t_storage` (

`id` int(11) NOT NULL AUTO_INCREMENT,

`product_id` int(11) DEFAULT NULL,

`total` int(11) DEFAULT NULL,

`used` int(11) DEFAULT NULL,

`residue` int(11) NOT NULL COMMENT 'remaining stock',

PRIMARY KEY (`id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

(4) Create the table in db_account

CREATE TABLE `t_account` (

`id` int(11) NOT NULL AUTO_INCREMENT,

`user_id` int(11) DEFAULT NULL COMMENT 'user ID',

`total` decimal(11,0) DEFAULT NULL COMMENT 'total amount',

`used` decimal(11,0) DEFAULT NULL COMMENT 'amount used',

`residue` decimal(11,0) DEFAULT NULL COMMENT 'remaining amount',

PRIMARY KEY (`id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

(5) Create the rollback log table in each of db_account, db_order, and db_storage

drop table if exists `undo_log`;

CREATE TABLE `undo_log` (

`id` bigint(20) NOT NULL AUTO_INCREMENT,

`branch_id` bigint(20) NOT NULL,

`xid` varchar(100) NOT NULL,

`context` varchar(128) NOT NULL,

`rollback_info` longblob NOT NULL,

`log_status` int(11) NOT NULL,

`log_created` datetime NOT NULL,

`log_modified` datetime NOT NULL,

`ext` varchar(100) DEFAULT NULL,

PRIMARY KEY (`id`),

UNIQUE KEY `ux_undo_log` (`xid`,`branch_id`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;
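
As a rough illustration of what undo_log is for: in AT mode, each branch stores a before image and an after image of the rows it changes alongside the business update, and a global rollback restores the before image. The sketch below models that idea with in-memory maps. UndoLogSketch and its methods are hypothetical and far simpler than Seata's real implementation, which serializes the images into the rollback_info column.

```java
import java.util.HashMap;
import java.util.Map;

// Illustrative only: an in-memory stand-in for t_storage.residue plus its undo_log.
class UndoLogSketch {

    /** One undo record: the row's value before and after the branch's update. */
    static class UndoRecord {
        final int rowId;
        final int before;
        final int after;

        UndoRecord(int rowId, int before, int after) {
            this.rowId = rowId;
            this.before = before;
            this.after = after;
        }
    }

    private final Map<Integer, Integer> residueById = new HashMap<>();
    // Keyed by (xid, branch_id), mirroring the unique key ux_undo_log.
    private final Map<String, UndoRecord> undoLog = new HashMap<>();

    void put(int id, int residue) {
        residueById.put(id, residue);
    }

    int get(int id) {
        return residueById.get(id);
    }

    // Phase one: apply the business update and write the undo record together.
    void decrease(String xid, long branchId, int id, int count) {
        int before = residueById.get(id);
        int after = before - count;
        residueById.put(id, after);
        undoLog.put(key(xid, branchId), new UndoRecord(id, before, after));
    }

    // Phase two, rollback: restore the before image and drop the undo record.
    boolean rollback(String xid, long branchId) {
        UndoRecord record = undoLog.remove(key(xid, branchId));
        if (record == null) {
            return false; // nothing recorded for this branch
        }
        residueById.put(record.rowId, record.before);
        return true;
    }

    // Phase two, commit: the data is already in place, so only the record is dropped.
    void commit(String xid, long branchId) {
        undoLog.remove(key(xid, branchId));
    }

    private static String key(String xid, long branchId) {
        return xid + ":" + branchId;
    }

    public static void main(String[] args) {
        UndoLogSketch db = new UndoLogSketch();
        db.put(1, 10);
        db.decrease("xid-1", 1L, 1, 2);
        System.out.println("after update: " + db.get(1));
        db.rollback("xid-1", 1L);
        System.out.println("after rollback: " + db.get(1));
    }
}
```

This also suggests why every business database needs its own undo_log table: the record has to be written in the same local transaction as the row it protects.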

3.2 Create the common module

(1) Entities for the 3 tables (omitted)

(2) Unified response class

@Data

@AllArgsConstructor

@NoArgsConstructor

public class ResponseResult {

private String msg;

private Integer status;

private Object data;

public static ResponseResult success(Object object){

return new ResponseResult("SUCCESS",200,object);

}

public static ResponseResult fail(String msg){

return new ResponseResult(msg, 500,null);

}

}
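
For reference, here is a minimal Lombok-free sketch of the same class together with a usage example. It only spells out the constructor and getters used in the example; the setters, equals, and toString that @Data would also generate are left out, and the wrapper class name ResponseResultSketch is made up for this illustration.

```java
// Illustrative only: a hand-written equivalent of the Lombok-based ResponseResult.
class ResponseResultSketch {

    static class ResponseResult {
        private final String msg;
        private final Integer status;
        private final Object data;

        ResponseResult(String msg, Integer status, Object data) {
            this.msg = msg;
            this.status = status;
            this.data = data;
        }

        String getMsg() { return msg; }
        Integer getStatus() { return status; }
        Object getData() { return data; }

        // Success always carries status 200 and the payload.
        static ResponseResult success(Object object) {
            return new ResponseResult("SUCCESS", 200, object);
        }

        // Failure carries status 500, the error message, and no payload.
        static ResponseResult fail(String msg) {
            return new ResponseResult(msg, 500, null);
        }
    }

    public static void main(String[] args) {
        ResponseResult ok = ResponseResult.success("order-1");
        ResponseResult failed = ResponseResult.fail("insufficient balance");
        System.out.println(ok.getStatus() + " " + ok.getMsg() + " " + ok.getData());
        System.out.println(failed.getStatus() + " " + failed.getMsg() + " " + failed.getData());
    }
}
```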

3.3 Create the order project

(1) pom

<dependencies>

<!--seata-->

<dependency>

<groupId>com.alibaba.cloud</groupId>

<artifactId>spring-cloud-starter-alibaba-seata</artifactId>

<exclusions>

<exclusion>

<artifactId>seata-all</artifactId>

<groupId>io.seata</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>io.seata</groupId>

<artifactId>seata-all</artifactId>

<version>0.9.0</version>

</dependency>

<!--spring cloud alibaba-->

<dependency>

<groupId>com.alibaba.cloud</groupId>

<artifactId>spring-cloud-starter-alibaba-nacos-discovery</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<!--openfeign-->

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-openfeign</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-actuator</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

</dependency>

<!--devtools hot reload-->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-devtools</artifactId>

<optional>true</optional>

<scope>runtime</scope>

</dependency>

<!--shared common module-->

<dependency>

<groupId>spring-cloud-alibaba</groupId>

<artifactId>common</artifactId>

<version>1.0-SNAPSHOT</version>

</dependency>

<!--database-->

<!-- mybatis -->

<dependency>

<groupId>org.mybatis.spring.boot</groupId>

<artifactId>mybatis-spring-boot-starter</artifactId>

<version>1.3.1</version>

</dependency>

<!--mapper -->

<!-- https://mvnrepository.com/artifact/tk.mybatis/mapper-spring-boot-starter-->

<dependency>

<groupId>tk.mybatis</groupId>

<artifactId>mapper-spring-boot-starter</artifactId>

<version>2.1.5</version>

</dependency>

<!--pagehelper -->

<!-- https://mvnrepository.com/artifact/com.github.pagehelper/pagehelper-spring-boot-starter-->

<dependency>

<groupId>com.github.pagehelper</groupId>

<artifactId>pagehelper-spring-boot-starter</artifactId>

<version>1.2.10</version>

</dependency>

<!-- MySQL JDBC driver -->

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

</dependency>

</dependencies>

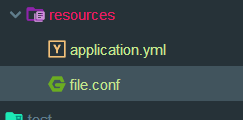

(2) application.yml

server:

port: 9006

spring:

application:

name: order

cloud:

alibaba:

seata:

#custom transaction group

tx-service-group: zrs_tx_group

nacos:

discovery:

server-addr: 192.168.0.39:8848

datasource:

url: jdbc:mysql://192.168.0.38:3306/db_order?useUnicode=true&characterEncoding=UTF-8&autoReconnect=true&useSSL=true

username: root

password: 123456

driver-class-name: com.mysql.jdbc.Driver

type: com.alibaba.druid.pool.DruidDataSource

feign:

hystrix:

enabled: true

(3) DataSourceProxyConfig

@Configuration

public class DataSourceProxyConfig {

@Bean

@ConfigurationProperties(prefix = "spring.datasource")

public DruidDataSource druidDataSource() {

return new DruidDataSource();

}

/**

* The DataSource must be wrapped in Seata's DataSourceProxy

*/

@Primary

@Bean

public DataSourceProxy dataSource(DruidDataSource druidDataSource) {

return new DataSourceProxy(druidDataSource);

}

}

(4) file.conf

transport {

# tcp udt unix-domain-socket

type = "TCP"

#NIO NATIVE

server = "NIO"

#enable heartbeat

heartbeat = true

#thread factory for netty

thread-factory {

boss-thread-prefix = "NettyBoss"

worker-thread-prefix = "NettyServerNIOWorker"

server-executor-thread-prefix = "NettyServerBizHandler"

share-boss-worker = false

client-selector-thread-prefix = "NettyClientSelector"

client-selector-thread-size = 1

client-worker-thread-prefix = "NettyClientWorkerThread"

# netty boss thread size,will not be used for UDT

boss-thread-size = 1

#auto default pin or 8

worker-thread-size = 8

}

shutdown {

# when destroy server, wait seconds

wait = 3

}

serialization = "seata"

compressor = "none"

}

service {

#vgroup->rgroup

vgroup_mapping.zrs_tx_group = "default"

#only support single node

default.grouplist = "127.0.0.1:8091"

#degrade current not support

enableDegrade = false

#disable

disable = false

#unit ms,s,m,h,d represents milliseconds, seconds, minutes, hours, days, default permanent

max.commit.retry.timeout = "-1"

max.rollback.retry.timeout = "-1"

}

client {

async.commit.buffer.limit = 10000

lock {

retry.internal = 10

retry.times = 30

}

report.retry.count = 5

tm.commit.retry.count = 1

tm.rollback.retry.count = 1

}

## transaction log store

store {

## store mode: file、db

mode = "file"

## file store

file {

dir = "sessionStore"

# branch session size , if exceeded first try compress lockkey, still exceeded throws exceptions

max-branch-session-size = 16384

# globe session size , if exceeded throws exceptions

max-global-session-size = 512

# file buffer size , if exceeded allocate new buffer

file-write-buffer-cache-size = 16384

# when recover batch read size

session.reload.read_size = 100

# async, sync

flush-disk-mode = async

}

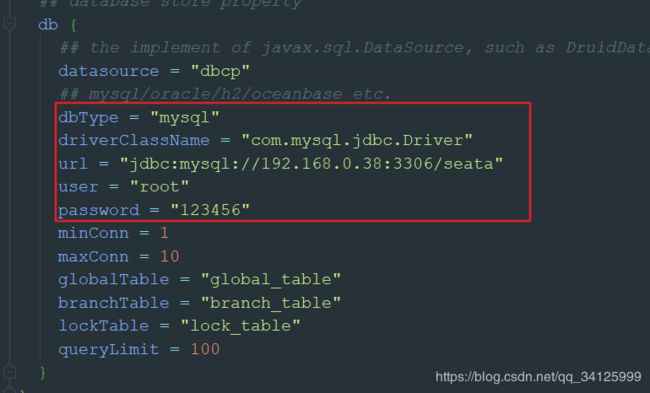

## database store

db {

## the implement of javax.sql.DataSource, such as DruidDataSource(druid)/BasicDataSource(dbcp) etc.

datasource = "dbcp"

## mysql/oracle/h2/oceanbase etc.

db-type = "mysql"

driver-class-name = "com.mysql.jdbc.Driver"

url = "jdbc:mysql://192.168.0.38:3306/seata"

user = "root"

password = "123456"

min-conn = 1

max-conn = 3

global.table = "global_table"

branch.table = "branch_table"

lock-table = "lock_table"

query-limit = 100

}

}

lock {

## the lock store mode: local、remote

mode = "remote"

local {

## store locks in user's database

}

remote {

## store locks in the seata's server

}

}

recovery {

#schedule committing retry period in milliseconds

committing-retry-period = 1000

#schedule asyn committing retry period in milliseconds

asyn-committing-retry-period = 1000

#schedule rollbacking retry period in milliseconds

rollbacking-retry-period = 1000

#schedule timeout retry period in milliseconds

timeout-retry-period = 1000

}

transaction {

undo.data.validation = true

undo.log.serialization = "jackson"

undo.log.save.days = 7

#schedule delete expired undo_log in milliseconds

undo.log.delete.period = 86400000

undo.log.table = "undo_log"

}

## metrics settings

metrics {

enabled = false

registry-type = "compact"

# multi exporters use comma divided

exporter-list = "prometheus"

exporter-prometheus-port = 9898

}

support {

## spring

spring {

# auto proxy the DataSource bean

datasource.autoproxy = false

}

}

(5) registry.conf

registry {

# file 、nacos 、eureka、redis、zk、consul、etcd3、sofa

type = "nacos"

nacos {

serverAddr = "192.168.0.39:8848"

namespace = ""

cluster = "default"

}

eureka {

serviceUrl = "http://localhost:8761/eureka"

application = "default"

weight = "1"

}

redis {

serverAddr = "localhost:6379"

db = "0"

}

zk {

cluster = "default"

serverAddr = "127.0.0.1:2181"

session.timeout = 6000

connect.timeout = 2000

}

consul {

cluster = "default"

serverAddr = "127.0.0.1:8500"

}

etcd3 {

cluster = "default"

serverAddr = "http://localhost:2379"

}

sofa {

serverAddr = "127.0.0.1:9603"

application = "default"

region = "DEFAULT_ZONE"

datacenter = "DefaultDataCenter"

cluster = "default"

group = "SEATA_GROUP"

addressWaitTime = "3000"

}

file {

name = "file.conf"

}

}

config {

# file、nacos 、apollo、zk、consul、etcd3

type = "file"

nacos {

serverAddr = "localhost"

namespace = ""

}

consul {

serverAddr = "127.0.0.1:8500"

}

apollo {

app.id = "seata-server"

apollo.meta = "http://192.168.1.204:8801"

}

zk {

serverAddr = "127.0.0.1:2181"

session.timeout = 6000

connect.timeout = 2000

}

etcd3 {

serverAddr = "http://localhost:2379"

}

file {

name = "file.conf"

}

}
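
The group and cluster names in these two files have to line up, which is easy to get wrong. As I understand it, the client takes spring.cloud.alibaba.seata.tx-service-group, reads service.vgroup_mapping.<group> from file.conf to get a cluster name, and then asks the registry for Seata servers in that cluster. The sketch below models that lookup with plain maps; VgroupLookupSketch is hypothetical, and the server address 192.168.0.39:8091 assumes Seata is listening on port 8091 on the host from section 2.1.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Illustrative only: models how the transaction group maps to server addresses.
class VgroupLookupSketch {

    /**
     * Resolves a transaction service group to server addresses.
     *
     * @param fileConf flattened file.conf entries (key to value)
     * @param registry cluster name to registered server addresses
     */
    static List<String> resolve(String txServiceGroup,
                                Map<String, String> fileConf,
                                Map<String, List<String>> registry) {
        String cluster = fileConf.get("service.vgroup_mapping." + txServiceGroup);
        if (cluster == null) {
            throw new IllegalStateException("no vgroup_mapping entry for " + txServiceGroup);
        }
        List<String> servers = registry.get(cluster);
        if (servers == null || servers.isEmpty()) {
            throw new IllegalStateException("no server registered in cluster " + cluster);
        }
        return servers;
    }

    public static void main(String[] args) {
        Map<String, String> fileConf = new HashMap<>();
        fileConf.put("service.vgroup_mapping.zrs_tx_group", "default");

        // Assumed address: the Seata host from section 2.1 on port 8091.
        Map<String, List<String>> registry =
                Collections.singletonMap("default", Arrays.asList("192.168.0.39:8091"));

        System.out.println(resolve("zrs_tx_group", fileConf, registry));
    }
}
```

If the group in application.yml has no matching vgroup_mapping key, or the mapped cluster differs from the cluster the server registered under, the lookup fails in this model, and a comparable mismatch in a real setup is worth checking first when the client cannot find a Seata server.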

(6) AccountService, StorageService

@FeignClient(value = "account")

@Service

public interface AccountService {

@PostMapping(value = "/account/decrease")

ResponseResult decrease(@RequestParam("userId") Integer userID, @RequestParam("money") BigDecimal money);

}

@FeignClient(value = "storage")

@Service

public interface StorageService {

@PostMapping(value = "/storage/decrease")

ResponseResult decrease(@RequestParam("productId") Integer productId, @RequestParam("count") Integer count);

}

(7) OrderMapper

@Mapper

public interface OrderMapper extends BaseMapper<OrderEntity> {

}

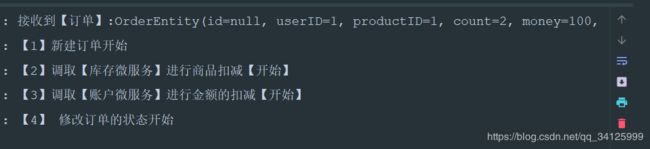

(8) Core workflow class OrderService

@Service

@Slf4j

public class OrderService {

@Autowired

private OrderMapper orderMapper;

@Autowired

private StorageService storageService;

@Autowired

private AccountService accountService;

public void create(OrderEntity order){

log.info("Received order: {}", order);

log.info("[1] Creating the order");

orderMapper.insertSelective(order);

log.info("[2] Calling the storage service to deduct stock");

storageService.decrease(order.getProductID(), order.getCount());

log.info("[3] Calling the account service to deduct the balance");

accountService.decrease(order.getUserID(), order.getMoney());

log.info("[4] Updating the order status");

order.setStatus(1);

orderMapper.updateByPrimaryKeySelective(order);

}

}

(9) Main application class

@SpringBootApplication

@MapperScan("com.order.mapper")

@EnableFeignClients

@EnableDiscoveryClient

public class OrderApplication {

public static void main(String[] args) {

SpringApplication.run(OrderApplication.class, args);

}

}

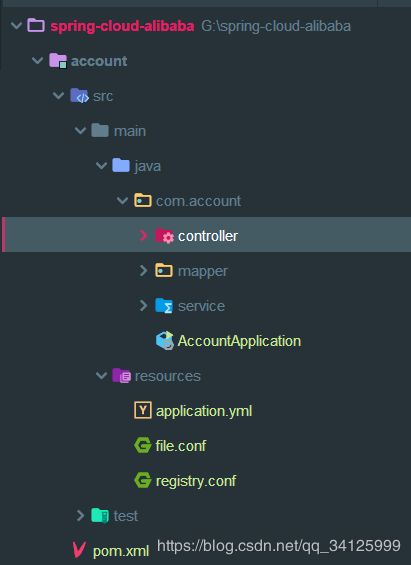

3.4 Create the storage project based on the order project

3.5 Create the account project based on the order project

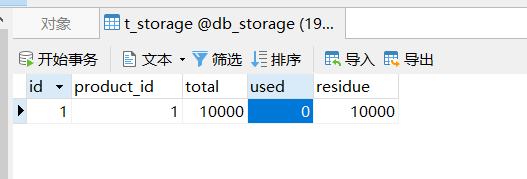

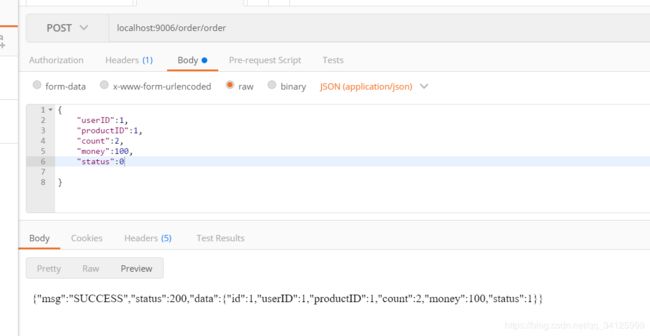

3.6 Prepare data

3.7 Testing

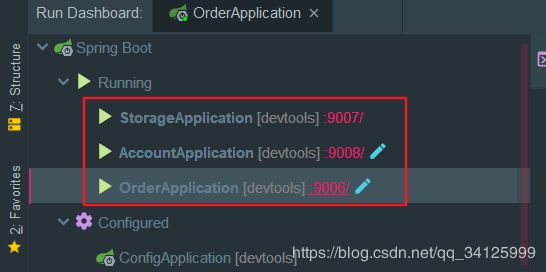

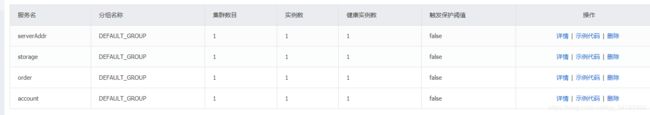

(1) Start the storage, account, and order services

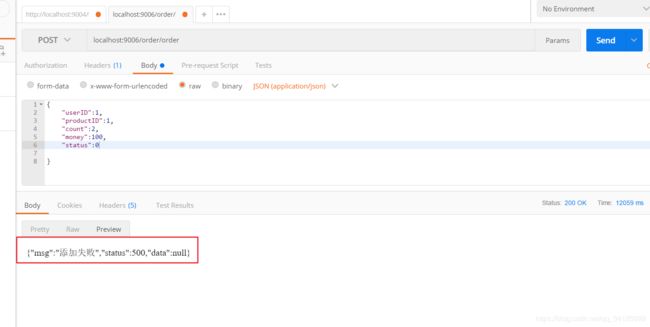

(2) Test with Postman

{

"userID":1,

"productID":1,

"count":2,

"money":100,

"status":0

}
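
If Postman is not at hand, the same request can be built from Java. This is only a sketch under several assumptions: it uses java.net.http, which requires Java 11 or later on the client side; the path /order/create is hypothetical, since the controller is not shown in this article; and the host is assumed to be localhost with the order service port 9006 from application.yml.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Illustrative only: builds the same JSON POST that the Postman test sends.
class CreateOrderRequestSketch {

    // Same payload as the Postman body above.
    static final String BODY =
            "{\"userID\":1,\"productID\":1,\"count\":2,\"money\":100,\"status\":0}";

    static HttpRequest build(String baseUrl) {
        return HttpRequest.newBuilder()
                .uri(URI.create(baseUrl + "/order/create")) // hypothetical endpoint path
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(BODY))
                .build();
    }

    public static void main(String[] args) {
        HttpRequest request = build("http://localhost:9006");
        System.out.println(request.method() + " " + request.uri());
        // To actually send it, with the order service running:
        // HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString());
    }
}
```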

3.8 Failure test

(1) Modify OrderService

(2) Test with Postman

GitHub

#Branch: Seata-release-v1.0

https://github.com/zhurongsheng666/spring-cloud-alibaba