常见的机器学习算法(五) K均值聚类算法

原理:

1. 初始化聚类中心,或者在输入数据范围内随机选择,或者使用一些现有的训练样本(推荐)

2. 直到收敛

- 将每个数据点分配到最近的聚类。点与聚类中心之间的距离是通过欧几里德距离测量得到的。

- 通过将聚类中心的当前估计值设置为属于该聚类的所有实例的平均值,来更新它们的当前估计值。

目标函数:

聚类算法的目标函数试图找到聚类中心,以便数据将划分到相应的聚类中,并使得数据与其最接近的聚类中心间的距离尽可能小。

给定一组数据X1,...,Xn和一个正数k,找到k个聚类中心C1,...,Ck并最小化目标函数:

这里:

决定了数据点

决定了数据点 是否属于类

是否属于类

表示类

表示类 的聚类中心

的聚类中心 表示欧几里得距离

表示欧几里得距离

K-Means 算法的缺点:

-

聚类的个数在开始就要设定

-

聚类的结果取决于初始设定的聚类中心

-

对异常值很敏感

-

不适合用于发现非凸聚类问题

-

该算法不能保证能够找到全局最优解,因此它往往会陷入一个局部最优解

import numpy as np

import matplotlib.pyplot as plt

from sklearn.datasets import make_blobs

import random

np.random.seed(123)

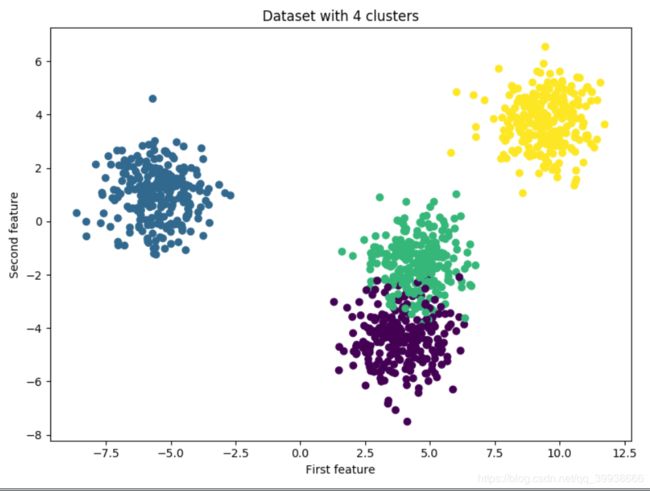

x, y = make_blobs(n_samples=1000, centers=4)

print('Shape of dataset: ', x.shape)#(1000,2)

fig = plt.figure(figsize=(8,6))

plt.scatter(x[:, 0], x[:, 1], c=y)

plt.title('Dataset with 4 clusters')

plt.xlabel('First feature')

plt.ylabel('Second feature')

plt.show()

class KMeans:

def __init__(self, n_clusters=4):

self.k = n_clusters

def fit(self, data):

n_samples, _ = data.shape

self.centers = np.array(random.sample(list(data), self.k))#从list里随机取出k个元素作为初始中心点

self.initial_centers = np.copy(self.centers)

old_assigns = None

n_iters = 0

while(True):

new_assigns = [self.classify(datapoint) for datapoint in data]#把data的各点分类到各簇

if new_assigns == old_assigns:

print('Training finished after ', n_iters,' iterations!')

return

old_assigns = new_assigns

n_iters += 1

for id_ in range(self.k):

points_idx = np.where(np.array(new_assigns) == id_)

datapoints = data[points_idx]

self.centers[id_] = datapoints.mean(axis=0)

def l2_distance(self,datapoint):

return np.sqrt(np.sum((self.centers - datapoint)**2, axis=1))

def classify(self,datapoint):

dists = self.l2_distance(datapoint)

return np.argmin(dists)#返回各数据点对应的簇类别

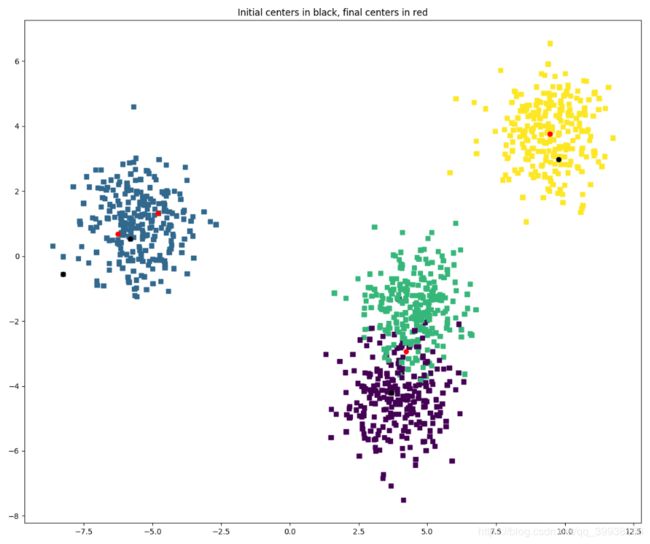

def plot_clusters(self,data):

plt.figure(figsize=(12,10))

plt.title('Initial centers in black, final centers in red')

plt.scatter(data[:,0], data[:,1], marker=',', c=y)

plt.scatter(self.centers[:,0], self.centers[:,1], c='r')#final centers in red

plt.scatter(self.initial_centers[:,0], self.initial_centers[:,1], c='k')#Initial centers in black

plt.show()

kmeans = KMeans(n_clusters=4)

kmeans.fit(x)

kmeans.plot_clusters(x)

(二) 直接调用sklearn的API

from sklearn.cluster import KMeans #kmeans聚类#

module = KMeans(n_clusters=3, random_state=0)

module.fit(x, y)

module.predict(test)完整代码:

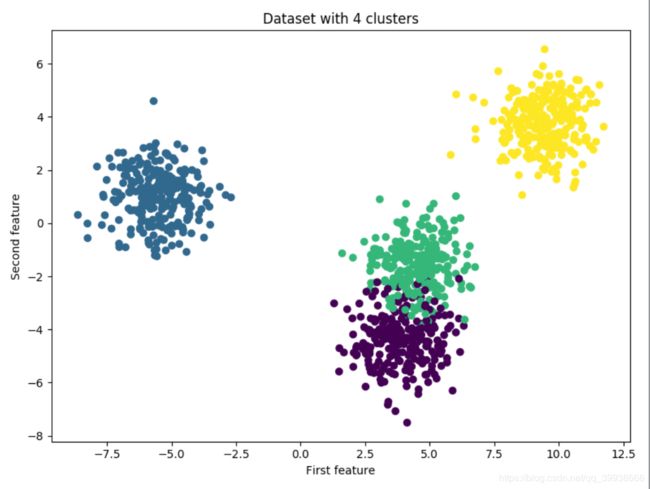

import numpy as np

import matplotlib.pyplot as plt

from sklearn.datasets import make_blobs

from sklearn.cluster import KMeans

np.random.seed(123)

x, y = make_blobs(n_samples=1000, centers=4)

fig = plt.figure(figsize=(8, 6))

plt.scatter(x[:, 0], x[:, 1], c=y)

plt.title('Dataset with 4 clusters')

plt.xlabel('First feature')

plt.ylabel('Second feature')

plt.show()

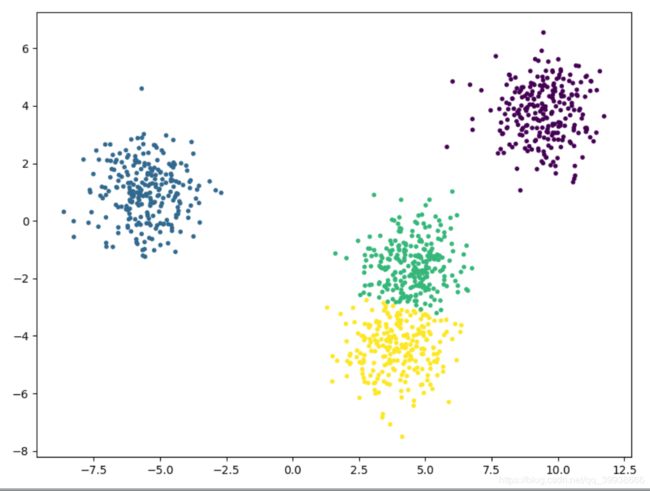

module = KMeans(n_clusters=4)

module.fit(x)

y_p = module.predict(x)#得到各点的簇类标签0-3

plt.figure(figsize=(8,6))

plt.scatter(x[:,0], x[:,1], marker='.', c=y_p)

plt.show()