k8s (10): Microservices, an early test of Istio 1.0

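Preface

I previously wrote three articles covering how Istio works, its traffic routing policies, service visualization, and monitoring:

k8s (4): Installing and testing the Istio microservice framework

k8s (5): Traffic policy control with the Istio microservice framework

k8s (6): Service visualization and monitoring with the Istio microservice framework

In the year or so since the Istio project was founded, production use cases have been rare. The official site's introduction to the new 1.0 release, however, states plainly: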

All of our core features are now ready for production use.

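Since it claims to be production-ready, let's try out version 1.0, released just last night and still piping hot.

I. Installation

The environment is still the k8s v1.9 cluster used in the earlier articles.

1. Download and install the official Istio package: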

curl -L https://git.io/getLatestIstio | sh -

mv istio-1.0.0/ /usr/local

ln -sv /usr/local/istio-1.0.0/ /usr/local/istio

cd /usr/local/istio/install/kubernetes/

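Because this cluster uses traefik to expose services externally, I made small changes to the Service named istio-ingressgateway in the official istio-demo.yaml deployment file. The changed lines are commented out rather than deleted. The section starts at roughly line 2482 of the file: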

###

vim istio-demo.yaml

'''

apiVersion: v1
kind: Service
metadata:
  name: istio-ingressgateway
  namespace: istio-system
  annotations:
  labels:
    chart: gateways-1.0.0
    release: RELEASE-NAME
    heritage: Tiller
    app: istio-ingressgateway
    istio: ingressgateway
spec:
  #type: LoadBalancer
  type: ClusterIP
  selector:
    app: istio-ingressgateway
    istio: ingressgateway
  ports:
    -
      name: http2
      #nodePort: 31380
      port: 80
      #targetPort: 80
    -
      name: https
      #nodePort: 31390
      port: 443
    -
      name: tcp
      #nodePort: 31400
      port: 31400
    -
      name: tcp-pilot-grpc-tls
      port: 15011
      #targetPort: 15011
    -
      name: tcp-citadel-grpc-tls
      port: 8060
      #targetPort: 8060
    -
      name: http2-prometheus
      port: 15030
      #targetPort: 15030
    -
      name: http2-grafana
      port: 15031
      #targetPort: 15031

'''

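After editing, run kubectl apply -f istio-demo.yaml

Some errors like the following appeared:

error: unable to recognize "install/kubernetes/istio-demo.yaml": no matches for authentication.istio.io/, Kind=Policy

What? An error on the very first step? I searched GitHub and found an issue where someone answered that running kubectl apply -f istio-demo.yaml a second time makes the errors go away. Sure enough, the second apply reported no errors. My guess is that this comes from dependencies between some of the resources. With a YAML file several thousand lines long I did not dig into the root cause and moved on.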
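The "no matches for ... Kind=Policy" message is what kubectl reports when a custom resource is submitted before the API server has finished registering its CustomResourceDefinition, which would explain why a second apply goes through. A minimal sketch of automating the retry follows. The helper name retry and the RETRY_MAX / RETRY_DELAY variables are hypothetical, chosen for illustration; they are not part of kubectl or Istio.

```shell
#!/usr/bin/env bash
# retry CMD [ARGS...]: run CMD until it succeeds.
# RETRY_MAX (default 5) caps the attempts; RETRY_DELAY (default 3) is the pause in seconds.
# Both names are illustrative assumptions, not kubectl or Istio settings.
retry() {
  local max="${RETRY_MAX:-5}" delay="${RETRY_DELAY:-3}" attempt=1
  until "$@"; do
    if [ "$attempt" -ge "$max" ]; then
      echo "giving up after $attempt attempts: $*" >&2
      return 1
    fi
    echo "attempt $attempt failed, retrying in ${delay}s" >&2
    attempt=$((attempt + 1))
    sleep "$delay"
  done
}

# Usage against a real cluster (left commented so the sketch has no side effects):
# retry kubectl apply -f istio-demo.yaml
```

Because kubectl apply is idempotent, re-running it is harmless for the resources that were already created on the first pass.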

A little later, check that the pods and svc were deployed correctly:

root@yksv001238:/usr/local/istio/install/kubernetes# kubectl get pods -n istio-system
NAME                                        READY   STATUS    RESTARTS   AGE
grafana-6995b4fbd7-kpfsz                    1/1     Running   0          34m
istio-citadel-54f4678f86-bfv72              1/1     Running   0          34m
istio-egressgateway-5d7f8fcc7b-fpfbr        1/1     Running   0          34m
istio-galley-7bd8b5f88f-gtq2x               1/1     Running   0          34m
istio-ingressgateway-6f58fdc8d7-pwwrz       1/1     Running   0          34m
istio-pilot-d99689994-rfk84                 2/2     Running   0          34m
istio-policy-766bf4bd6d-gscqs               2/2     Running   0          34m
istio-sidecar-injector-85ccf84984-m9v99     1/1     Running   0          34m
istio-statsd-prom-bridge-55965ff9c8-8fv4m   1/1     Running   0          34m
istio-telemetry-55b6b5bbc7-9ptrx            2/2     Running   0          34m
istio-tracing-77f9f94b98-5ghc5              1/1     Running   0          34m
prometheus-7456f56c96-qrfpg                 1/1     Running   0          34m
servicegraph-684c85ffb9-6ctmc               1/1     Running   0          34m

root@yksv001238:/usr/local/istio/install/kubernetes# kubectl get svc -n istio-system
NAME                       TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)   AGE
grafana                    ClusterIP   10.99.131.246    <none>        3000/TCP   34m
istio-citadel              ClusterIP   10.96.83.131     <none>        8060/TCP,9093/TCP   34m
istio-egressgateway        ClusterIP   10.111.29.59     <none>        80/TCP,443/TCP   34m
istio-galley               ClusterIP   10.101.207.154   <none>        443/TCP,9093/TCP   34m
istio-ingressgateway       ClusterIP   10.96.171.51     <none>        80/TCP,443/TCP,31400/TCP,15011/TCP,8060/TCP,15030/TCP,15031/TCP   34m
istio-pilot                ClusterIP   10.103.244.35    <none>        15010/TCP,15011/TCP,8080/TCP,9093/TCP   34m
istio-policy               ClusterIP   10.108.241.232   <none>        9091/TCP,15004/TCP,9093/TCP   34m
istio-sidecar-injector     ClusterIP   10.103.39.16     <none>        443/TCP   34m
istio-statsd-prom-bridge   ClusterIP   10.100.205.153   <none>        9102/TCP,9125/UDP   34m
istio-telemetry            ClusterIP   10.106.175.49    <none>        9091/TCP,15004/TCP,9093/TCP,42422/TCP   34m
jaeger-agent               ClusterIP   None             <none>        5775/UDP,6831/UDP,6832/UDP   34m
jaeger-collector           ClusterIP   10.107.200.30    <none>        14267/TCP,14268/TCP   34m
jaeger-query               ClusterIP   10.104.201.226   <none>        16686/TCP   34m
prometheus                 ClusterIP   10.103.110.167   <none>        9090/TCP   34m
servicegraph               ClusterIP   10.108.243.227   <none>        8088/TCP   34m
tracing                    ClusterIP   10.96.208.110    <none>        80/TCP   34m
zipkin                     ClusterIP   10.98.50.58      <none>        9411/TCP   34m

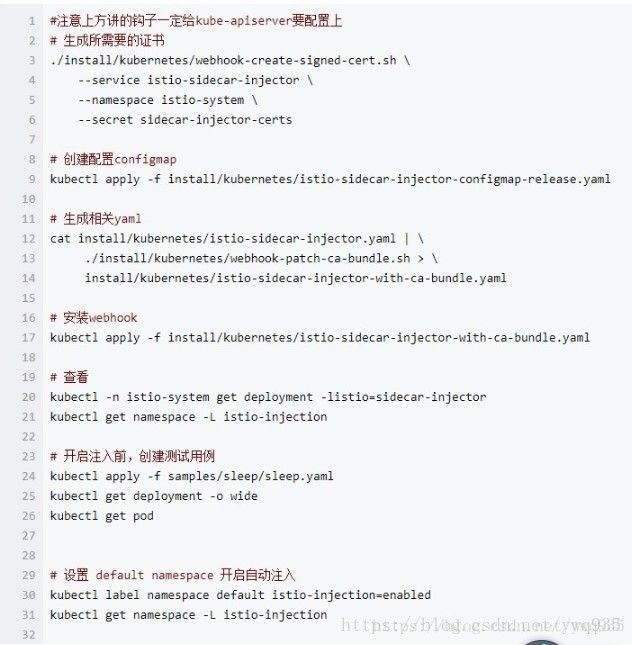

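As you can see, istio-sidecar-injector has been deployed successfully. In the earlier 0.x releases, enabling the injection hook took the somewhat tedious steps below. Now all of it is bundled into the single istio-demo.yaml file, which saves many steps.

Steps to enable injection in 0.x:

Enable automatic injection for the chosen namespace: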

kubectl label namespace default istio-injection=enabled

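II. Demo test

I'll keep using the earlier test demo. If you are not familiar with how the demo is split into services, read the previous articles first:

git clone https://github.com/yinwenqin/istio-test.git

Deploy the services: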

cd istio-test

kubectl apply -f service/go/v1/go-v1.yml

kubectl apply -f service/go/v2/go-v2.yml

kubectl apply -f service/python/v1/python-v1.yml

kubectl apply -f service/python/v2/python-v2.yml

kubectl apply -f service/js/v1/js-v1.yml

kubectl apply -f service/js/v2/js-v2.yml

kubectl apply -f service/node/v1/node-v1.yml

kubectl apply -f service/node/v2/node-v2.yml

kubectl apply -f service/lua/v1/lua-v1.yml

kubectl apply -f service/lua/v2/lua-v2.yml

Expose the services:

kubectl apply -f istio/ingress-python.yml

kubectl apply -f istio/ingress-js.yml

# Both ingresses set the host field to istio-test.will and route to different services by path

Check the deployment result:

root@yksv001238:~/istio-test/service# kubectl get pods
service-go-v1-84498949c8-4cpks       2/2   Running   1   5h
service-go-v1-84498949c8-cjnwq       2/2   Running   0   5h
service-go-v2-f7c7fb8d-46xq5         2/2   Running   0   5h
service-go-v2-f7c7fb8d-7t7fm         2/2   Running   0   5h
service-js-v1-6454464f4c-czqsh       2/2   Running   1   5h
service-js-v1-6454464f4c-v6l58       2/2   Running   0   5h
service-lua-v1-65bb7c6b8d-kk4xm      2/2   Running   0   5h
service-lua-v1-65bb7c6b8d-pbkng      2/2   Running   0   5h
service-lua-v2-864d7954f4-fd454      2/2   Running   0   5h
service-lua-v2-864d7954f4-h89h8      2/2   Running   0   5h
service-node-v1-6c87fdc44d-mf7ch     2/2   Running   0   5h
service-node-v1-6c87fdc44d-zlbsj     2/2   Running   1   5h
service-node-v2-7698dc75cd-qhckq     2/2   Running   0   5h
service-node-v2-7698dc75cd-r4qs8     2/2   Running   0   5h
service-python-v1-c5bf498b-7s47j     2/2   Running   0   5h
service-python-v1-c5bf498b-cb7wr     2/2   Running   0   5h
service-python-v2-7c8b865568-6wzhk   2/2   Running   0   5h
service-python-v2-7c8b865568-8bf7h   2/2   Running   0   5h

root@yksv001238:~/istio-test/service# kubectl describe pod service-go-v1-84498949c8-4cpks
...
Image: gcr.io/istio-release/proxy_init:1.0.0
Image: registry.cn-shanghai.aliyuncs.com/istio-test/service-go:v1

## The describe output shows two images, Istio's proxy_init and the application itself, and every pod reports 2/2 containers ready, so automatic injection succeeded!
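Reading the describe output by eye works for one pod. For many pods the same check can be scripted. A rough sketch, assuming the injected sidecar container is named istio-proxy (the name Istio's injector gives it). The helpers has_sidecar and check_pod are hypothetical names introduced here for illustration.

```shell
#!/usr/bin/env bash
# has_sidecar "NAME1 NAME2 ...": succeed if the space-separated list of
# container names contains istio-proxy (assumed sidecar container name).
has_sidecar() {
  case " $1 " in
    *" istio-proxy "*) return 0 ;;
    *)                 return 1 ;;
  esac
}

# check_pod POD: fetch the pod's container names with kubectl and test them.
# Requires access to a cluster; it is defined here but not called.
check_pod() {
  has_sidecar "$(kubectl get pod "$1" -o jsonpath='{.spec.containers[*].name}')"
}

# Usage against a real cluster:
# check_pod service-go-v1-84498949c8-4cpks && echo "sidecar injected"
```

Splitting the string test from the kubectl call keeps the matching logic easy to verify without a cluster.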

Get the ClusterIP of istio-ingressgateway:

root@yksv001238:~/istio-test/istio# kubectl get svc -n istio-system | grep istio-ingressgateway
istio-ingressgateway ClusterIP 10.96.171.51 <none> 80/TCP,443/TCP,31400/TCP,15011/TCP,8060/TCP,15030/TCP,15031/TCP 19h

Add a DNS record on the local machine that maps the ingress host to istio-ingressgateway:

10.96.171.51 istio-test.will
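If the record is kept in a hosts file (an assumption; the text above only says "DNS record"), adding it can be made idempotent so that repeated runs do not pile up duplicate lines. A small sketch with a hypothetical helper add_host_entry that takes the file path as a parameter:

```shell
#!/usr/bin/env bash
# add_host_entry FILE IP HOST: append "IP HOST" to FILE unless a line
# already maps IP to HOST. The helper name is illustrative only.
add_host_entry() {
  local file="$1" ip="$2" host="$3"
  # awk exits 0 only if some line starts with IP and lists HOST after it.
  if awk -v ip="$ip" -v host="$host" '
       $1 == ip { for (i = 2; i <= NF; i++) if ($i == host) found = 1 }
       END { exit !found }
     ' "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$host" >> "$file"
}

# Usage (needs root for /etc/hosts, so it is left commented):
# add_host_entry /etc/hosts 10.96.171.51 istio-test.will
```

Note that a ClusterIP is only reachable from machines that can route to the cluster's service network, such as the cluster nodes themselves.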

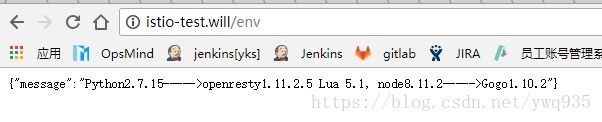

打开浏览器测试:

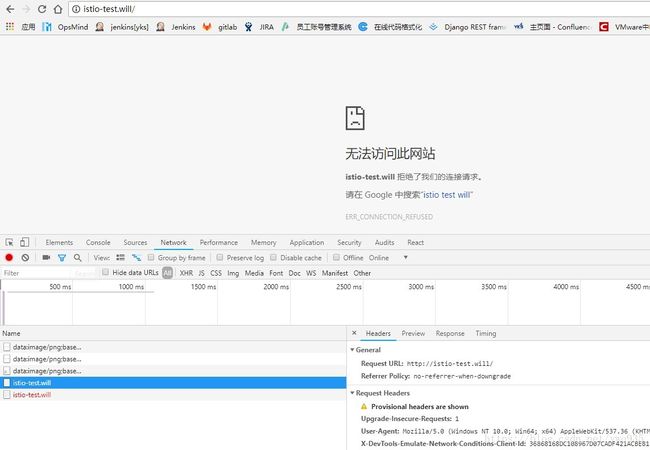

**打不开?**哭脸一张,莫不是新版资源间的交互方式有什么变化,导致此前的方式不再适用,联想到1.0版本新增了gateway这种资源,去官网查官网的用例,果然发现了不一样的地方

官网的新版bookinfo用例说明:https://istio.io/docs/examples/bookinfo/#confirm-the-app-is-running

查看官方包中用例的服务暴露文件,发现已经不再适用ingress这种资源了,现版用的是由istio申明的Gateway和VirtualService这两种资源结合工作,代替之前的ingress。

cat istio/samples/bookinfo/networking/bookinfo-gateway.yaml

apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: bookinfo-gateway
spec:
  selector:
    istio: ingressgateway # use istio default controller
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "*"
---
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: bookinfo
spec:
  hosts:
  - "*"
  gateways:
  - bookinfo-gateway
  http:
  - match:
    - uri:
        exact: /productpage
    - uri:
        exact: /login
    - uri:
        exact: /logout
    - uri:
        prefix: /api/v1/products
    route:
    - destination:
        host: productpage
        port:
          number: 9080

So the ingress definitions need to change. The original contents of ingress-js.yml and ingress-python.yml:

# service-js ingress configuration
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: ingress-js
  annotations:
    kubernetes.io/ingress.class: istio
spec:
  rules:
  - host: istio-test.will
    http:
      paths:
      - path: /.*
        backend:
          serviceName: service-js
          servicePort: 80
---
# service-python ingress configuration
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: ingress-python
  annotations:
    kubernetes.io/ingress.class: istio
spec:
  rules:
  - host: istio-test.will
    http:
      paths:
      - path: /env
        backend:
          serviceName: service-python
          servicePort: 80

After the change (a few points to watch are explained further down):

root@yksv001238:~/istio-test/istio# cat gateway-demo.yml

apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: demo-gateway
spec:
  selector:
    istio: ingressgateway # use istio default controller
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "*"
---
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: test-demo
spec:
  hosts:
  - istio-test.will
  gateways:
  - demo-gateway
  http:
  - match:
    - uri:
        exact: "/"
    route:
    - destination:
        host: service-js
        port:
          number: 80
  - match:
    - uri:
        prefix: "/env"
    route:
    - destination:
        host: service-python
        port:
          number: 80

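Apply the file and check that the resources were created:

root@yksv001238:~/istio-test/istio# kubectl apply -f gateway-demo.yml
gateway "demo-gateway" configured
virtualservice "test-demo" configured

root@yksv001238:~/istio-test/istio# kubectl get gateway
NAME           AGE
demo-gateway   2m

root@yksv001238:~/istio-test/istio# kubectl get virtualservice
NAME        AGE
test-demo   2m

As before, click the "Launch" button several times. When the front-end js service calls the back-end services, k8s spreads the traffic according to the load-balancing policy of the svc endpoints, so the back-end version numbers differ from click to click.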

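Points to note when exposing services:

1. A Gateway is best used as the shared gateway for all microservices of a project. It is recommended that all VirtualServices share this one Gateway.

2. gateway.spec.selector must carry the istio: ingressgateway label in order to attach to istio-ingressgateway, the unified entry point.

3. If several VirtualServices sit under one Gateway and their VirtualService.spec.hosts values conflict on a domain, the later one overrides the configuration of the earlier one. Services under the same domain are therefore best placed in the same VirtualService.

4. (Important) There are three ways to write the uri matching in VirtualService.spec.http:

a. No match rule: everything from the root path down, including all subpaths, is routed to the svc that destination.host points to.

b. match with exact: the path must match exactly. For example, exact: "/" above matches only the root path.

c. match with prefix: the path is matched by prefix. For example, prefix: "/env" above matches every path that starts with /env.

Additional note: when routing by path with a non-exact match, avoid letting the path pattern of one rule cover that of another. Rules are evaluated in order and the first match wins, so requests meant for the covered rule end up at the wrong service. If the service behind the covered rule is one that the front-end js calls, the result is a 304 followed by cross-origin failures under the browser's same-origin policy. Keep the path rules free of unnecessary overlap to avoid this (see the example below).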

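Example:

Change the first block of the VirtualService above slightly: comment out the exact match and use prefix instead, leaving everything else unchanged. The prefix "/" now covers all of the other subpaths, which produces the 304:

  http:
  - match:
    - uri:
        #exact: "/"
        prefix: "/"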

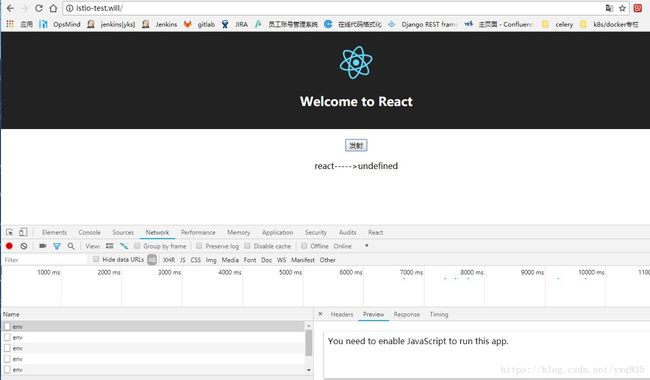

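After kubectl apply, open the browser again to see the effect:

No matter how many times the "Launch" button is clicked, the result is always undefined, and the browser reports that the js did not run. The cause is as described above: while the request goes from "/" on to "/env", a 304 occurs, after which the js call is blocked as cross-origin. So be sure to avoid unnecessary overlap in the path design.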
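The matching behaviour and the overlap problem can be modeled in a few lines of shell. This is only a sketch of the selection logic, not of how the proxy implements it: a case statement, like an ordered list of route rules, picks the first pattern that matches. The function names and the sample paths /env/version and /other are hypothetical.

```shell
#!/usr/bin/env bash
# Rules as written in gateway-demo.yml: exact "/" first, then prefix "/env".
route() {
  case "$1" in
    /)     echo "service-js" ;;       # exact: "/"
    /env*) echo "service-python" ;;   # prefix: "/env"
    *)     echo "no-route" ;;         # nothing matched
  esac
}

# Same rules after changing the first match to prefix "/".
route_overlapping() {
  case "$1" in
    /*)    echo "service-js" ;;       # prefix: "/" matches every path
    /env*) echo "service-python" ;;   # shadowed, never reached
    *)     echo "no-route" ;;
  esac
}

# Print the chosen destination for a few sample paths under both rule sets.
for p in / /env /env/version /other; do
  printf '%-14s %-16s %s\n' "$p" "$(route "$p")" "$(route_overlapping "$p")"
done
```

With the overlapping rules every path, including /env, is sent to service-js, so the front end never reaches service-python.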

That's it for this quick trial. I'll continue exploring the new traffic-policy and security-policy features later. To be continued.