Compiling Flink from source (Flink 1.9.2, Hadoop 2.7.2)

Why compile Flink from source

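According to the official Flink documentation, starting with 1.8 Flink no longer ships binary packages bundled with Hadoop. So if you want to deploy Flink on YARN, there is no longer an official ready-made package, but the project does describe how to rebuild a Hadoop-enabled Flink binary distribution yourself. Let's first sort out the approach. (P.S. This post mainly covers the steps for building Flink with Maven.)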

Preparation

From "Project Template for Java":

The only requirements are working Maven 3.0.4 (or higher) and Java 8.x installations.

From "Building Flink from Source":

Maven 3.3.x can build Flink, but will not properly shade away certain dependencies. Maven 3.2.5 creates the libraries properly. To build unit tests use Java 8u51 or above to prevent failures in unit tests that use the PowerMock runner.

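These two excerpts tell us what base environment we need: Maven 3.2.5 and JDK 8u51 or later. Maven 3.3.9 also worked for me. It was already installed and I was too lazy to switch, so I just tried it, and it built fine. Note, though, that the "Building Flink from Source" page says that with Maven 3.3.x or later you should run mvn clean install once more inside flink-dist after the main build, so that shading is applied correctly. I won't go over installing these two tools here. One more tip: if you build Flink from a network inside China, point Maven at the Aliyun repository mirror. For the exact configuration, follow the saying: "for foreign matters ask Google, for domestic matters ask Baidu." Make sure the environment variables are configured properly.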
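A few lines of bash can check these environment requirements before you start a long build. This is a minimal sketch. It assumes bash and the usual first line of `java -version` (`java version "1.8.0_NNN"` or `openjdk version "1.8.0_NNN"`). The function names and the Aliyun mirror URL `https://maven.aliyun.com/repository/public` are my own assumptions, not part of the original setup.

```shell
#!/usr/bin/env bash
# Sketch: sanity-check JDK 8u51+ and Maven, and print an Aliyun mirror entry
# for ~/.m2/settings.xml. Function names and mirror URL are assumptions.

# 'openjdk version "1.8.0_242"' -> 1.8.0_242
java_version_from_output() {
  printf '%s\n' "$1" | sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1
}

# Succeed only for JDK 8 update 51 or later ("1.8.0_51" and up).
jdk8_update_ok() {
  local ver="$1" update
  [[ "$ver" == 1.8.0_* ]] || return 1
  update="${ver#1.8.0_}"
  update="${update%%[!0-9]*}"   # drop vendor suffixes such as "-b08"
  [[ -n "$update" ]] || return 1
  (( 10#$update >= 51 ))
}

# "Apache Maven 3.3.9 (...)" -> 3.3.9
maven_version_from_output() {
  printf '%s\n' "$1" | sed -n 's/.*Apache Maven \([0-9][0-9.]*\).*/\1/p' | head -n 1
}

# Mirror only "central"; other repositories keep their own URLs.
aliyun_mirror_snippet() {
  cat <<'EOF'
<mirror>
  <id>aliyunmaven</id>
  <mirrorOf>central</mirrorOf>
  <name>Aliyun public mirror</name>
  <url>https://maven.aliyun.com/repository/public</url>
</mirror>
EOF
}

# Usage:
#   v=$(java_version_from_output "$(java -version 2>&1)")
#   jdk8_update_ok "$v" || echo "need JDK 8u51+, found $v"
#   maven_version_from_output "$(mvn -v)"
```

The mirror is limited to `central` on purpose. A `*` mirror would also redirect the other repositories the Flink build resolves from, such as `mapr-releases`, and the Aliyun mirror does not necessarily carry those artifacts.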

Build steps

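How many steps does it take to build Flink? Three: first open the fridge door, then put Flink in, and finally close the fridge door. Just kidding, but there really are three steps.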

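- Install the base environment, JDK 8u51+ and Maven 3.0.4+ (3.2.5 recommended), and configure the environment variables and the Maven repository

- Build flink-shaded (yes, this has to be built too)

- Build Flink, then package and install it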
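One script can chain the three steps so that Flink is only built after flink-shaded succeeds. This is a sketch assuming bash. `SHADED_DIR`, `FLINK_DIR` and `DRY_RUN` are my own variable names. The default directory names are guesses about where the archives were unpacked; the GitHub zip, for example, unpacks to `flink-shaded-release-7.0`.

```shell
#!/usr/bin/env bash
# Sketch of the three-step build: check environment, build flink-shaded, build Flink.
# SHADED_DIR / FLINK_DIR defaults are assumptions; DRY_RUN=1 only prints commands.

HADOOP_VERSION="${HADOOP_VERSION:-2.7.2}"
SHADED_DIR="${SHADED_DIR:-flink-shaded-7.0}"
FLINK_DIR="${FLINK_DIR:-flink-1.9.2}"

# Run a command inside a directory (in a subshell), or print it when DRY_RUN=1.
run_in() {
  local dir="$1"; shift
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    echo "(cd $dir && $*)"
  else
    (cd "$dir" && "$@")
  fi
}

step1_check_env() {
  run_in . java -version && run_in . mvn -v
}

step2_build_shaded() {
  run_in "$SHADED_DIR" mvn clean install -DskipTests -Dhadoop.version="$HADOOP_VERSION"
}

step3_build_flink() {
  run_in "$FLINK_DIR" mvn clean install -DskipTests -Drat.skip=true \
    -Pinclude-hadoop -Dhadoop.version="$HADOOP_VERSION" -Dscala-2.12
}

# Stop at the first failing step: the Flink build needs the jars installed by step 2.
build_all() {
  step1_check_env && step2_build_shaded && step3_build_flink
}

# Usage: DRY_RUN=1 build_all    (preview), then: build_all
```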

Installing the base environment

Here I'll mainly cover how to download the source packages:

https://flink.apache.org/downloads.html

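Open this link and scroll to the bottom of the page. There you will find the source release for each Flink version, as well as the flink-shaded source; download the ones you need.

You can also download the source from GitHub.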
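If you prefer to script the download, you can derive the URLs from the version numbers. This sketch assumes two URL layouts. The first is the archive.apache.org layout `dist/flink/<release>/<release>-src.tgz`. The second is GitHub's `archive/<tag>.zip` form with the tag `release-7.0`, which is the kind of download that produces a file named like `flink-shaded-release-7.0.zip` below. Both layouts are my assumptions; if a URL returns 404, fall back to the download page.

```shell
#!/usr/bin/env bash
# Sketch: build download URLs for the Flink and flink-shaded sources.
# The archive.apache.org and GitHub URL layouts are assumptions.

# apache_src_url flink-1.9.2 -> .../dist/flink/flink-1.9.2/flink-1.9.2-src.tgz
apache_src_url() {
  local release="$1"
  echo "https://archive.apache.org/dist/flink/${release}/${release}-src.tgz"
}

# github_archive_url flink-shaded release-7.0 -> .../apache/flink-shaded/archive/release-7.0.zip
github_archive_url() {
  local repo="$1" tag="$2"
  echo "https://github.com/apache/${repo}/archive/${tag}.zip"
}

# Download both archives into the current directory (needs curl and network access).
download_sources() {
  curl -fL -o flink-1.9.2-src.tgz "$(apache_src_url flink-1.9.2)" &&
    curl -fL -o flink-shaded-release-7.0.zip "$(github_archive_url flink-shaded release-7.0)"
}
```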

Building flink-shaded

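Why not just build Flink directly? Why bother building this shaded thing? As the official site describes, the Hadoop versions a Hadoop-enabled Flink can be built against directly are only 2.4.1, 2.6.5, 2.7.5 and 2.8.3, because those are the versions with pre-built flink-shaded Hadoop jars. The pre-built shaded releases also only go up to 10.0, so if you want to use shaded 11 you have to build it yourself as well. In short, for any other Hadoop version, or a Hadoop distribution such as CDH or Hortonworks, you need to build flink-shaded yourself.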
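The decision in the paragraph above boils down to a version lookup. In this sketch, the list of pre-built versions is copied from that paragraph and `shaded_strategy` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: decide whether a Hadoop version needs a self-built flink-shaded.
# PREBUILT_HADOOP holds the versions the text above lists as pre-built.

PREBUILT_HADOOP=(2.4.1 2.6.5 2.7.5 2.8.3)

# Prints "prebuilt" if an exact match exists, otherwise "build-shaded"
# (vendor versions such as CDH builds never match exactly).
shaded_strategy() {
  local wanted="$1" v
  for v in "${PREBUILT_HADOOP[@]}"; do
    if [[ "$v" == "$wanted" ]]; then
      echo prebuilt
      return
    fi
  done
  echo build-shaded
}

# Usage: shaded_strategy 2.7.2    # this post's case: prints build-shaded
```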

- Unpack the flink-shaded archive

unzip flink-shaded-release-7.0.zip

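- Add the missing dependency

The official instructions have a pitfall too. First, let's be clear about our goal: we want Flink to dance on Hadoop. For versions after 1.8 that means building a binary package for the Hadoop version we need ourselves. However, the official flink-shaded source is missing one dependency: commons-cli.

Add the following dependency to the dependencyManagement section of flink-shaded-7.0/flink-shaded-hadoop-2-uber/pom.xml. Otherwise, starting a YARN session or submitting a job directly to YARN will later fail with a NoSuchMethodError. The cause is that Hadoop 2.x pulls in commons-cli 1.2 transitively. Flink's YARN CLI calls Option.builder(), which was only added in commons-cli 1.3. Declaring version 1.3.1 under dependencyManagement overrides the transitive version.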

<dependency>
    <groupId>commons-cli</groupId>
    <artifactId>commons-cli</artifactId>
    <version>1.3.1</version>
</dependency>

The error message:

java.lang.NoSuchMethodError: org.apache.commons.cli.Option.builder(Ljava/lang/String;)Lorg/apache/commons/cli/Option$Builder;

at org.apache.flink.yarn.cli.FlinkYarnSessionCli.<init>(FlinkYarnSessionCli.java:197)

at org.apache.flink.yarn.cli.FlinkYarnSessionCli.<init>(FlinkYarnSessionCli.java:173)

at org.apache.flink.yarn.cli.FlinkYarnSessionCli.main(FlinkYarnSessionCli.java:836)
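Before rebuilding, it is cheap to confirm that the commons-cli pin actually sits inside `<dependencyManagement>` and not just anywhere in the pom. This is a rough awk sketch. It assumes one tag per line, as Maven poms usually are, and it is a text check rather than a real XML parser. The helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print the commons-cli version declared inside <dependencyManagement>
# of a pom.xml, or nothing if there is none. Assumes one XML tag per line.

managed_commons_cli_version() {
  local pom="$1"
  awk '
    /<dependencyManagement>/   { in_dm = 1 }
    /<\/dependencyManagement>/ { in_dm = 0 }
    in_dm && /<artifactId>commons-cli<\/artifactId>/ { found = 1; next }
    /<\/dependency>/           { found = 0 }   # stay within one <dependency>
    in_dm && found && /<version>/ {
      v = $0
      sub(/.*<version>/, "", v)
      sub(/<\/version>.*/, "", v)
      print v
      exit
    }
  ' "$pom"
}

# Usage: managed_commons_cli_version flink-shaded-7.0/flink-shaded-hadoop-2-uber/pom.xml
#        (expected to print 1.3.1 after the edit above)
```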

- Build against the Hadoop version you need

Change into the unpacked directory and build with mvn:

mvn clean install -DskipTests -Dhadoop.version=2.7.2

Then comes a not-too-long wait. When you see the following output, you have finished half of the work of building Flink:

[INFO] Reactor Summary:

[INFO]

[INFO] flink-shaded 7.0 ................................... SUCCESS [ 3.483 s]

[INFO] flink-shaded-force-shading 7.0 ..................... SUCCESS [ 0.597 s]

[INFO] flink-shaded-asm-6 6.2.1-7.0 ....................... SUCCESS [ 0.627 s]

[INFO] flink-shaded-guava-18 18.0-7.0 ..................... SUCCESS [ 0.994 s]

[INFO] flink-shaded-netty-4 4.1.32.Final-7.0 .............. SUCCESS [ 3.469 s]

[INFO] flink-shaded-netty-tcnative-dynamic 2.0.25.Final-7.0 SUCCESS [ 0.572 s]

[INFO] flink-shaded-jackson-parent 2.9.8-7.0 .............. SUCCESS [ 0.035 s]

[INFO] flink-shaded-jackson-2 2.9.8-7.0 ................... SUCCESS [ 1.479 s]

[INFO] flink-shaded-jackson-module-jsonSchema-2 2.9.8-7.0 . SUCCESS [ 0.857 s]

[INFO] flink-shaded-hadoop-2 2.7.2-7.0 .................... SUCCESS [ 9.132 s]

[INFO] flink-shaded-hadoop-2-uber 2.7.2-7.0 ............... SUCCESS [ 17.173 s]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 38.612 s

[INFO] Finished at: 2020-03-12T15:14:42+08:00

[INFO] ------------------------------------------------------------------------
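`mvn install` puts the result into the local Maven repository, and the Flink build below picks it up from there. As far as I can tell from the Flink 1.9 build, the uber jar is resolved with the version `${hadoop.version}-${flink.shaded.version}`. Here that is `2.7.2-7.0`, matching the Reactor Summary above. The sketch below checks that the jar is in place. The default path `~/.m2/repository` is an assumption (it differs if you changed `localRepository`), and `M2_REPO` is my own variable name.

```shell
#!/usr/bin/env bash
# Sketch: locate the flink-shaded-hadoop-2-uber jar installed by `mvn install`.
# Defaults to ~/.m2/repository unless M2_REPO (my own variable) or a 3rd argument is given.

# uber_jar_path <hadoop-version> <shaded-version> [repo-root]
uber_jar_path() {
  local hadoop="$1" shaded="$2" repo="${3:-${M2_REPO:-$HOME/.m2/repository}}"
  local version="${hadoop}-${shaded}"
  echo "${repo}/org/apache/flink/flink-shaded-hadoop-2-uber/${version}/flink-shaded-hadoop-2-uber-${version}.jar"
}

# Succeeds if the jar exists in the local repository.
check_uber_jar() {
  local jar
  jar="$(uber_jar_path "$@")"
  if [[ -f "$jar" ]]; then
    echo "found: $jar"
  else
    echo "missing: $jar (re-run the flink-shaded build)" >&2
    return 1
  fi
}

# Usage: check_uber_jar 2.7.2 7.0
```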

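Building Flink

This step finishes the Flink build. It's simple: unpack the Flink source (I used 1.9.2), change into the unpacked directory and run the following command: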

mvn clean install -DskipTests -Drat.skip=true -Pinclude-hadoop -Dhadoop.version=2.7.2 -Dscala-2.12

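Here is what the parameters mean:

-DskipTests: skip the unit tests (you know why)

-Drat.skip=true: skip the Apache RAT license-header check, which speeds up the build

-Pinclude-hadoop: required for the Hadoop-enabled build; it bundles the shaded Hadoop jar into the distribution's lib/ directory

-Dhadoop.version=2.7.2: the Hadoop version; it must match the version used for the flink-shaded build

-Dscala-2.12: build for Scala 2.12 (Flink 1.9 defaults to Scala 2.11)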
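The flag list can be generated from the two inputs that actually vary, the Hadoop version and the Scala version. This sketch assumes, as in the Flink 1.9 build, that Scala 2.11 is the default and `-Dscala-2.12` switches to 2.12. `flink_build_cmd` is a hypothetical name, and it only prints the command.

```shell
#!/usr/bin/env bash
# Sketch: assemble the Flink 1.9 build command from the Hadoop and Scala versions.
# Assumes Scala 2.11 is the default and 2.12 is enabled with -Dscala-2.12.

# flink_build_cmd <hadoop-version> [scala-binary-version]
flink_build_cmd() {
  local hadoop="$1" scala="${2:-2.11}"
  # -DskipTests: no unit tests; -Drat.skip=true: no license-header check;
  # -Pinclude-hadoop: bundle the shaded Hadoop uber jar into lib/
  local args=(mvn clean install -DskipTests -Drat.skip=true -Pinclude-hadoop "-Dhadoop.version=${hadoop}")
  case "$scala" in
    2.11) ;;                                  # default, no extra flag
    2.12) args+=(-Dscala-2.12) ;;
    *) echo "unsupported Scala version: $scala" >&2; return 1 ;;
  esac
  echo "${args[*]}"
}

# Usage: flink_build_cmd 2.7.2 2.12   # prints the same command used above
```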

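This time the wait is long. While Maven downloads dependencies it may need two CA certificates; without them the build fails with an error like the following: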

Downloading: https://repo.hortonworks.com/content/repositories/jetty-hadoop/com/microsoft/azure/azure-mgmt-trafficmanager/1.16.0/azure-mgmt-trafficmanager-1.16.0.pom

[ERROR] Failed to execute goal on project flink-swift-fs-hadoop: Could not resolve dependencies for project org.apache.flink:flink-swift-fs-hadoop:jar:1.9.1: The following artifacts could not be resolved: org.apache.hadoop:hadoop-client:jar:2.8.1, org.apache.hadoop:hadoop-common:jar:2.8.1, org.mortbay.jetty:jetty-sslengine:jar:6.1.26, commons-beanutils:commons-beanutils:jar:1.8.3, com.google.code.gson:gson:jar:2.2.4, org.apache.hadoop:hadoop-auth:jar:2.8.1, com.nimbusds:nimbus-jose-jwt:jar:3.9, net.jcip:jcip-annotations:jar:1.0, net.minidev:json-smart:jar:1.1.1, org.apache.htrace:htrace-core4:jar:4.0.1-incubating, org.apache.hadoop:hadoop-hdfs:jar:2.8.1, org.apache.hadoop:hadoop-hdfs-client:jar:2.8.1, com.squareup.okhttp:okhttp:jar:2.4.0, com.squareup.okio:okio:jar:1.4.0, org.apache.hadoop:hadoop-annotations:jar:2.8.1, org.apache.hadoop:hadoop-openstack:jar:2.8.1: Could not transfer artifact org.apache.hadoop:hadoop-client:jar:2.8.1 from/to mapr-releases (https://repository.mapr.com/maven/): sun.security.validator.ValidatorException: PKIX path building failed: sun.security.provider.certpath.SunCertPathBuilderException: unable to find valid certification path to requested target -> [Help 1]

[ERROR]

[ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.

[ERROR] Re-run Maven using the -X switch to enable full debug logging.

[ERROR]

[ERROR] For more information about the errors and possible solutions, please read the following articles:

[ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/DependencyResolutionException

[ERROR]

[ERROR] After correcting the problems, you can resume the build with the command

[ERROR] mvn -rf :flink-swift-fs-hadoop

No CA certificate, so the download fails? Then install one!

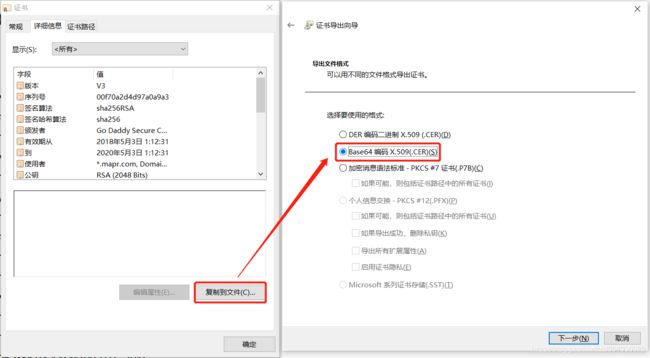

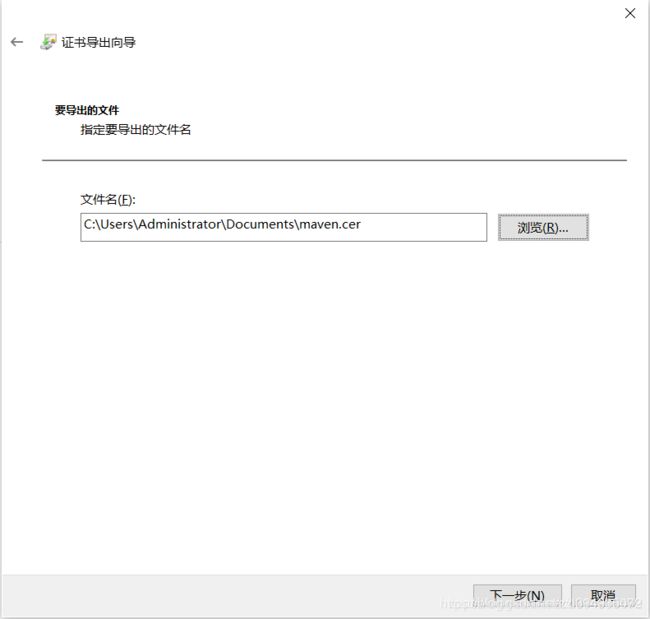

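First, obtain the CA certificate (the images are borrowed from another post; credit to the original author).

Upload the downloaded CA certificate to the CentOS machine.

Install the certificate:

Note: the certificate must go into the keystore under ${JAVA_HOME}/jre/lib/security/, that is, the cacerts file there.

When asked for the keystore password, enter changeit (the JDK default); when asked whether to trust the certificate, answer Y.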
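The certificate steps can also be done from the shell instead of a browser. This sketch makes three assumptions. `openssl` and `keytool` are on the PATH. The certificate to trust is the one served by `repository.mapr.com`, the host in the error above. `mapr-repo` is an arbitrary alias of my choosing. On JDK 8 the keystore is `${JAVA_HOME}/jre/lib/security/cacerts`; `cacerts_path` falls back to `${JAVA_HOME}/lib/security/cacerts` for layouts without `jre/`. The `-noprompt` flag replaces answering Y.

```shell
#!/usr/bin/env bash
# Sketch: fetch a repository's TLS certificate and import it into the JDK truststore.
# Host, alias and file names are illustrative assumptions.

# Truststore path: jre/lib/security/cacerts on JDK 8, lib/security/cacerts otherwise.
cacerts_path() {
  local java_home="${1:-$JAVA_HOME}"
  if [[ -f "$java_home/jre/lib/security/cacerts" ]]; then
    echo "$java_home/jre/lib/security/cacerts"
  else
    echo "$java_home/lib/security/cacerts"
  fi
}

# Save the certificate presented by <host>:443 as PEM (needs network access).
fetch_cert() {
  local host="$1" out="$2"
  openssl s_client -connect "${host}:443" -servername "$host" </dev/null 2>/dev/null |
    openssl x509 -outform PEM >"$out"
}

# Import <cert-file> under <alias>; "changeit" is the JDK's default store password.
import_cert() {
  local cert="$1" alias="$2"
  keytool -importcert -noprompt -alias "$alias" -file "$cert" \
    -keystore "$(cacerts_path)" -storepass changeit
}

# Usage (usually as root, since cacerts lives under JAVA_HOME):
#   fetch_cert repository.mapr.com mapr.pem
#   import_cert mapr.pem mapr-repo
```

This trusts the server's own certificate rather than the CA that issued it. That is enough to unblock the download, but it is a narrower fix than importing the CA certificate as described above.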

After another long wait, once every module has built successfully you will see:

[INFO] Reactor Summary for flink 1.9.2:

[INFO]

[INFO] force-shading ...................................... SUCCESS [ 1.281 s]

[INFO] flink .............................................. SUCCESS [ 1.829 s]

[INFO] flink-annotations .................................. SUCCESS [ 1.280 s]

[INFO] flink-shaded-curator ............................... SUCCESS [ 1.501 s]

[INFO] flink-metrics ...................................... SUCCESS [ 0.188 s]

[INFO] flink-metrics-core ................................. SUCCESS [ 0.736 s]

[INFO] flink-test-utils-parent ............................ SUCCESS [ 0.157 s]

[INFO] flink-test-utils-junit ............................. SUCCESS [ 0.928 s]

[INFO] flink-core ......................................... SUCCESS [ 33.771 s]

[INFO] flink-java ......................................... SUCCESS [ 4.827 s]

[INFO] flink-queryable-state .............................. SUCCESS [ 0.100 s]

[INFO] flink-queryable-state-client-java .................. SUCCESS [ 0.643 s]

[INFO] flink-filesystems .................................. SUCCESS [ 0.082 s]

[INFO] flink-hadoop-fs .................................... SUCCESS [ 1.168 s]

[INFO] flink-runtime ...................................... SUCCESS [01:58 min]

[INFO] flink-scala ........................................ SUCCESS [ 29.960 s]

[INFO] flink-mapr-fs ...................................... SUCCESS [ 0.394 s]

[INFO] flink-filesystems :: flink-fs-hadoop-shaded ........ SUCCESS [ 2.916 s]

[INFO] flink-s3-fs-base ................................... SUCCESS [ 5.740 s]

[INFO] flink-s3-fs-hadoop ................................. SUCCESS [ 8.235 s]

[INFO] flink-s3-fs-presto ................................. SUCCESS [ 12.510 s]

[INFO] flink-swift-fs-hadoop .............................. SUCCESS [ 13.618 s]

[INFO] flink-oss-fs-hadoop ................................ SUCCESS [ 4.483 s]

[INFO] flink-azure-fs-hadoop .............................. SUCCESS [ 5.729 s]

[INFO] flink-optimizer .................................... SUCCESS [ 11.589 s]

[INFO] flink-clients ...................................... SUCCESS [ 1.179 s]

[INFO] flink-streaming-java ............................... SUCCESS [ 8.882 s]

[INFO] flink-test-utils ................................... SUCCESS [ 1.453 s]

[INFO] flink-runtime-web .................................. SUCCESS [02:06 min]

[INFO] flink-examples ..................................... SUCCESS [ 0.112 s]

[INFO] flink-examples-batch ............................... SUCCESS [ 10.577 s]

[INFO] flink-connectors ................................... SUCCESS [ 0.096 s]

[INFO] flink-hadoop-compatibility ......................... SUCCESS [ 4.225 s]

[INFO] flink-state-backends ............................... SUCCESS [ 0.070 s]

[INFO] flink-statebackend-rocksdb ......................... SUCCESS [ 1.102 s]

[INFO] flink-tests ........................................ SUCCESS [ 28.820 s]

[INFO] flink-streaming-scala .............................. SUCCESS [ 26.143 s]

[INFO] flink-table ........................................ SUCCESS [ 0.054 s]

[INFO] flink-table-common ................................. SUCCESS [ 1.771 s]

[INFO] flink-table-api-java ............................... SUCCESS [ 1.416 s]

[INFO] flink-table-api-java-bridge ........................ SUCCESS [ 0.570 s]

[INFO] flink-table-api-scala .............................. SUCCESS [ 5.054 s]

[INFO] flink-table-api-scala-bridge ....................... SUCCESS [ 7.626 s]

[INFO] flink-sql-parser ................................... SUCCESS [ 3.073 s]

[INFO] flink-libraries .................................... SUCCESS [ 0.056 s]

[INFO] flink-cep .......................................... SUCCESS [ 2.437 s]

[INFO] flink-table-planner ................................ SUCCESS [01:37 min]

[INFO] flink-orc .......................................... SUCCESS [ 0.579 s]

[INFO] flink-jdbc ......................................... SUCCESS [ 0.546 s]

[INFO] flink-table-runtime-blink .......................... SUCCESS [ 3.868 s]

[INFO] flink-table-planner-blink .......................... SUCCESS [02:02 min]

[INFO] flink-hbase ........................................ SUCCESS [ 1.725 s]

[INFO] flink-hcatalog ..................................... SUCCESS [ 4.158 s]

[INFO] flink-metrics-jmx .................................. SUCCESS [ 0.272 s]

[INFO] flink-connector-kafka-base ......................... SUCCESS [ 1.671 s]

[INFO] flink-connector-kafka-0.9 .......................... SUCCESS [ 0.768 s]

[INFO] flink-connector-kafka-0.10 ......................... SUCCESS [ 0.547 s]

[INFO] flink-connector-kafka-0.11 ......................... SUCCESS [ 0.692 s]

[INFO] flink-formats ...................................... SUCCESS [ 0.054 s]

[INFO] flink-json ......................................... SUCCESS [ 0.332 s]

[INFO] flink-connector-elasticsearch-base ................. SUCCESS [ 0.810 s]

[INFO] flink-connector-elasticsearch2 ..................... SUCCESS [ 8.239 s]

[INFO] flink-connector-elasticsearch5 ..................... SUCCESS [ 9.715 s]

[INFO] flink-connector-elasticsearch6 ..................... SUCCESS [ 0.747 s]

[INFO] flink-csv .......................................... SUCCESS [ 0.251 s]

[INFO] flink-connector-hive ............................... SUCCESS [ 1.942 s]

[INFO] flink-connector-rabbitmq ........................... SUCCESS [ 0.332 s]

[INFO] flink-connector-twitter ............................ SUCCESS [ 1.304 s]

[INFO] flink-connector-nifi ............................... SUCCESS [ 0.349 s]

[INFO] flink-connector-cassandra .......................... SUCCESS [ 2.205 s]

[INFO] flink-avro ......................................... SUCCESS [ 1.565 s]

[INFO] flink-connector-filesystem ......................... SUCCESS [ 0.861 s]

[INFO] flink-connector-kafka .............................. SUCCESS [ 0.973 s]

[INFO] flink-connector-gcp-pubsub ......................... SUCCESS [ 0.582 s]

[INFO] flink-sql-connector-elasticsearch6 ................. SUCCESS [ 4.583 s]

[INFO] flink-sql-connector-kafka-0.9 ...................... SUCCESS [ 0.256 s]

[INFO] flink-sql-connector-kafka-0.10 ..................... SUCCESS [ 0.312 s]

[INFO] flink-sql-connector-kafka-0.11 ..................... SUCCESS [ 0.413 s]

[INFO] flink-sql-connector-kafka .......................... SUCCESS [ 0.641 s]

[INFO] flink-connector-kafka-0.8 .......................... SUCCESS [ 0.700 s]

[INFO] flink-avro-confluent-registry ...................... SUCCESS [ 0.843 s]

[INFO] flink-parquet ...................................... SUCCESS [ 0.726 s]

[INFO] flink-sequence-file ................................ SUCCESS [ 0.247 s]

[INFO] flink-examples-streaming ........................... SUCCESS [ 10.970 s]

[INFO] flink-examples-table ............................... SUCCESS [ 5.554 s]

[INFO] flink-examples-build-helper ........................ SUCCESS [ 0.069 s]

[INFO] flink-examples-streaming-twitter ................... SUCCESS [ 0.464 s]

[INFO] flink-examples-streaming-state-machine ............. SUCCESS [ 0.298 s]

[INFO] flink-examples-streaming-gcp-pubsub ................ SUCCESS [ 3.640 s]

[INFO] flink-container .................................... SUCCESS [ 0.304 s]

[INFO] flink-queryable-state-runtime ...................... SUCCESS [ 0.555 s]

[INFO] flink-end-to-end-tests ............................. SUCCESS [ 0.056 s]

[INFO] flink-cli-test ..................................... SUCCESS [ 0.151 s]

[INFO] flink-parent-child-classloading-test-program ....... SUCCESS [ 0.154 s]

[INFO] flink-parent-child-classloading-test-lib-package ... SUCCESS [ 0.098 s]

[INFO] flink-dataset-allround-test ........................ SUCCESS [ 0.153 s]

[INFO] flink-dataset-fine-grained-recovery-test ........... SUCCESS [ 0.141 s]

[INFO] flink-datastream-allround-test ..................... SUCCESS [ 1.119 s]

[INFO] flink-batch-sql-test ............................... SUCCESS [ 0.136 s]

[INFO] flink-stream-sql-test .............................. SUCCESS [ 0.183 s]

[INFO] flink-bucketing-sink-test .......................... SUCCESS [ 0.233 s]

[INFO] flink-distributed-cache-via-blob ................... SUCCESS [ 0.138 s]

[INFO] flink-high-parallelism-iterations-test ............. SUCCESS [ 5.724 s]

[INFO] flink-stream-stateful-job-upgrade-test ............. SUCCESS [ 0.636 s]

[INFO] flink-queryable-state-test ......................... SUCCESS [ 1.688 s]

[INFO] flink-local-recovery-and-allocation-test ........... SUCCESS [ 0.221 s]

[INFO] flink-elasticsearch2-test .......................... SUCCESS [ 3.299 s]

[INFO] flink-elasticsearch5-test .......................... SUCCESS [ 3.969 s]

[INFO] flink-elasticsearch6-test .......................... SUCCESS [ 2.275 s]

[INFO] flink-quickstart ................................... SUCCESS [ 0.781 s]

[INFO] flink-quickstart-java .............................. SUCCESS [ 0.350 s]

[INFO] flink-quickstart-scala ............................. SUCCESS [ 0.145 s]

[INFO] flink-quickstart-test .............................. SUCCESS [ 0.323 s]

[INFO] flink-confluent-schema-registry .................... SUCCESS [ 1.202 s]

[INFO] flink-stream-state-ttl-test ........................ SUCCESS [ 3.172 s]

[INFO] flink-sql-client-test .............................. SUCCESS [ 0.671 s]

[INFO] flink-streaming-file-sink-test ..................... SUCCESS [ 0.221 s]

[INFO] flink-state-evolution-test ......................... SUCCESS [ 0.822 s]

[INFO] flink-e2e-test-utils ............................... SUCCESS [ 6.170 s]

[INFO] flink-mesos ........................................ SUCCESS [ 17.591 s]

[INFO] flink-yarn ......................................... SUCCESS [ 0.915 s]

[INFO] flink-gelly ........................................ SUCCESS [ 2.601 s]

[INFO] flink-gelly-scala .................................. SUCCESS [ 13.500 s]

[INFO] flink-gelly-examples ............................... SUCCESS [ 7.711 s]

[INFO] flink-metrics-dropwizard ........................... SUCCESS [ 0.193 s]

[INFO] flink-metrics-graphite ............................. SUCCESS [ 0.123 s]

[INFO] flink-metrics-influxdb ............................. SUCCESS [ 0.594 s]

[INFO] flink-metrics-prometheus ........................... SUCCESS [ 0.371 s]

[INFO] flink-metrics-statsd ............................... SUCCESS [ 0.179 s]

[INFO] flink-metrics-datadog .............................. SUCCESS [ 0.237 s]

[INFO] flink-metrics-slf4j ................................ SUCCESS [ 0.157 s]

[INFO] flink-cep-scala .................................... SUCCESS [ 9.399 s]

[INFO] flink-table-uber ................................... SUCCESS [ 1.794 s]

[INFO] flink-table-uber-blink ............................. SUCCESS [ 2.050 s]

[INFO] flink-sql-client ................................... SUCCESS [ 1.090 s]

[INFO] flink-state-processor-api .......................... SUCCESS [ 0.598 s]

[INFO] flink-python ....................................... SUCCESS [ 0.621 s]

[INFO] flink-scala-shell .................................. SUCCESS [ 8.821 s]

[INFO] flink-dist ......................................... SUCCESS [ 10.765 s]

[INFO] flink-end-to-end-tests-common ...................... SUCCESS [ 0.285 s]

[INFO] flink-metrics-availability-test .................... SUCCESS [ 0.153 s]

[INFO] flink-metrics-reporter-prometheus-test ............. SUCCESS [ 0.132 s]

[INFO] flink-heavy-deployment-stress-test ................. SUCCESS [ 6.045 s]

[INFO] flink-connector-gcp-pubsub-emulator-tests .......... SUCCESS [ 0.516 s]

[INFO] flink-streaming-kafka-test-base .................... SUCCESS [ 0.192 s]

[INFO] flink-streaming-kafka-test ......................... SUCCESS [ 5.923 s]

[INFO] flink-streaming-kafka011-test ...................... SUCCESS [ 5.786 s]

[INFO] flink-streaming-kafka010-test ...................... SUCCESS [ 5.000 s]

[INFO] flink-plugins-test ................................. SUCCESS [ 0.095 s]

[INFO] flink-tpch-test .................................... SUCCESS [ 1.210 s]

[INFO] flink-contrib ...................................... SUCCESS [ 0.052 s]

[INFO] flink-connector-wikiedits .......................... SUCCESS [ 0.258 s]

[INFO] flink-yarn-tests ................................... SUCCESS [ 2.919 s]

[INFO] flink-fs-tests ..................................... SUCCESS [ 0.330 s]

[INFO] flink-docs ......................................... SUCCESS [ 0.531 s]

[INFO] flink-ml-parent .................................... SUCCESS [ 0.052 s]

[INFO] flink-ml-api ....................................... SUCCESS [ 0.284 s]

[INFO] flink-ml-lib ....................................... SUCCESS [ 0.164 s]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 15:33 min

[INFO] Finished at: 2020-03-12T17:41:19+08:00

[INFO] -----------------------------------------------------------------------

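Most of the problems during the build come from missing dependencies, or dependencies that cannot be downloaded.

com.mapr.hadoop:maprfs:5.2.1-mapr not found

Once the build is running, various errors show up. The first one I hit was that com.mapr.hadoop:maprfs:5.2.1-mapr, a dependency of the flink-mapr-fs module, could not be found.

Download URL: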

https://repository.mapr.com/nexus/content/groups/mapr-public/com/mapr/hadoop/maprfs/5.2.1-mapr/maprfs-5.2.1-mapr.jar

Then install it into the local Maven repository:

mvn install:install-file -DgroupId=com.mapr.hadoop -DartifactId=maprfs -Dversion=5.2.1-mapr -Dpackaging=jar -Dfile=maprfs-5.2.1-mapr.jar

kafka-schema-registry-client-3.3.1.jar not found

Download URL:

http://packages.confluent.io/maven/io/confluent/kafka-schema-registry-client/3.3.1/kafka-schema-registry-client-3.3.1.jar

Then install it into the local Maven repository:

mvn install:install-file -DgroupId=io.confluent -DartifactId=kafka-schema-registry-client -Dversion=3.3.1 -Dpackaging=jar -Dfile=kafka-schema-registry-client-3.3.1.jar
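Both fixes follow the same pattern: download the jar, then run `mvn install:install-file`. That makes them easy to wrap in one helper. In this sketch, `install_jar`, its `group:artifact:version` argument and the `DRY_RUN` switch are my own. With `DRY_RUN=1` it only prints the commands; for the maprfs coordinates it reproduces the install command shown above.

```shell
#!/usr/bin/env bash
# Sketch: download a jar and install it into the local Maven repository.
# The helper name, its "group:artifact:version" argument and DRY_RUN are illustrative.

run_or_print() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then echo "$*"; else "$@"; fi
}

# install_jar <group:artifact:version> <download-url>
install_jar() {
  local gav="$1" url="$2" group artifact version extra jar
  IFS=: read -r group artifact version extra <<<"$gav"
  if [[ -z "$group" || -z "$artifact" || -z "$version" || -n "$extra" ]]; then
    echo "expected group:artifact:version, got: $gav" >&2
    return 1
  fi
  jar="${artifact}-${version}.jar"
  run_or_print curl -fL -o "$jar" "$url" &&
    run_or_print mvn install:install-file -DgroupId="$group" -DartifactId="$artifact" \
      -Dversion="$version" -Dpackaging=jar -Dfile="$jar"
}

# Usage:
#   install_jar io.confluent:kafka-schema-registry-client:3.3.1 \
#     http://packages.confluent.io/maven/io/confluent/kafka-schema-registry-client/3.3.1/kafka-schema-registry-client-3.3.1.jar
```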

After this long wait the build is finally complete. The output is in the following directory (relative to the source root):

./flink-dist/target/flink-1.9.2-bin/flink-1.9.2

Now take this and go run Flink on YARN!
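Before you copy the directory to the cluster, a quick check that the Hadoop jar really made it into `lib/` can save a confusing failure later. This sketch assumes the jar names follow `flink-dist_<scala>-<flink>.jar` and `flink-shaded-hadoop-2-uber-<hadoop>-<shaded>.jar`; the latter is what `-Pinclude-hadoop` bundles. `check_dist` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: check that a built distribution has the expected jars and the YARN session script.
# Jar name patterns are assumptions based on the Flink 1.9 layout.

# check_dist <dist-dir> <flink-version> <scala-version> <hadoop-version> <shaded-version>
check_dist() {
  local dir="$1" flink="$2" scala="$3" hadoop="$4" shaded="$5" missing=0 jar
  for jar in "flink-dist_${scala}-${flink}.jar" \
             "flink-shaded-hadoop-2-uber-${hadoop}-${shaded}.jar"; do
    if [[ ! -f "$dir/lib/$jar" ]]; then
      echo "missing lib/$jar" >&2
      missing=1
    fi
  done
  if [[ ! -x "$dir/bin/yarn-session.sh" ]]; then
    echo "missing bin/yarn-session.sh" >&2
    missing=1
  fi
  return "$missing"
}

# Usage:
#   check_dist ./flink-dist/target/flink-1.9.2-bin/flink-1.9.2 1.9.2 2.12 2.7.2 7.0
```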