linux--kubernetes (Scheduling)

Kubernetes Scheduling

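- The scheduler uses the Kubernetes watch mechanism to discover newly created Pods that have not yet been assigned to a node. It places each unscheduled Pod it finds on a suitable node.

- kube-scheduler is the default scheduler of a Kubernetes cluster and is part of the cluster's control plane. It is designed so that, if you want or need to, you can write your own scheduling component and use it in place of kube-scheduler.

- Factors considered when making a scheduling decision include: individual and collective resource requests, hardware/software/policy constraints, affinity and anti-affinity requirements, data locality, interference between workloads, and so on.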

https://kubernetes.io/zh/docs/concepts/scheduling-eviction/kube-scheduler/
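As a minimal sketch of how a Pod would opt into a replacement scheduler: the `spec.schedulerName` field names the scheduler that should place the Pod. The scheduler name `my-scheduler` and the Pod name are hypothetical, and a scheduler registered under that name is assumed to be running in the cluster. If it is not, the Pod simply stays Pending.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-custom-sched        # hypothetical name, not from the original notes
spec:
  schedulerName: my-scheduler     # hypothetical; when omitted, default-scheduler is used
  containers:
  - name: nginx
    image: nginx
```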

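nodeName

- nodeName is the simplest form of node selection constraint, but it is generally not recommended.

- If nodeName is specified in the PodSpec, it takes precedence over every other node selection method.

- Limitations of selecting a node with nodeName:

1. If the named node does not exist, the Pod will not run.

2. If the named node does not have the resources to accommodate the Pod, the Pod fails.

3. Node names in cloud environments are not always predictable or stable.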

    [kubeadm@server2 scheduler]$ cat pod.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
      nodeName: server3   # bind the Pod to server3

The Pod can only run on server3:

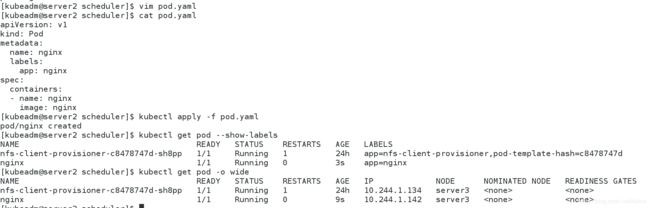

nodeSelector

- nodeSelector is the simplest recommended form of node selection constraint.

- Add a label to the chosen node:

    kubectl label nodes <node name> disktype=ssd

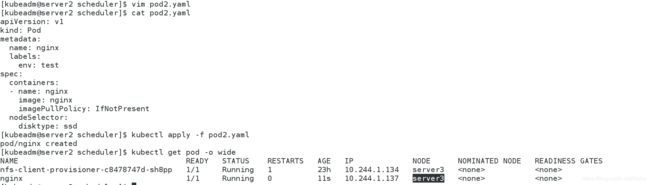

    [kubeadm@server2 scheduler]$ cat pod2.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
      labels:
        env: test
    spec:
      containers:
      - name: nginx
        image: nginx
        imagePullPolicy: IfNotPresent
      nodeSelector:
        disktype: ssd

Add the label to server3.
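A nodeSelector may list more than one label, in which case a node has to carry all of them to be eligible. The sketch below assumes the `disktype=ssd` label from above plus the well-known `kubernetes.io/os` label that the kubelet sets on each node; the Pod name is hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-multi-label     # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx
  nodeSelector:
    disktype: ssd             # custom label added with kubectl label
    kubernetes.io/os: linux   # well-known label; both entries must match
```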

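Affinity and Anti-Affinity

Node affinity

- Put simply, node affinity uses label rules to constrain where a Pod may be scheduled.

requiredDuringSchedulingIgnoredDuringExecution: must be satisfied

preferredDuringSchedulingIgnoredDuringExecution: satisfied if possible

- IgnoredDuringExecution means that if a node's labels change while the Pod is running, so that the affinity rule is no longer satisfied, the Pod keeps running on that node.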

https://kubernetes.io/zh/docs/concepts/configuration/assign-pod-node.

Required (hard) rule:

    [kubeadm@server2 scheduler]$ cat pod2.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: node-affinity
    spec:
      containers:
      - name: nginx
        image: nginx
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: disktype
                operator: In
                values:
                - ssd

Add a label to server4.

Then update the manifest so that either value is accepted:

    [kubeadm@server2 scheduler]$ cat pod2.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: node-affinity
    spec:
      containers:
      - name: nginx
        image: nginx
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: disktype
                operator: In
                values:
                - ssd
                - sata

Preferred (soft) rule, combined with a required rule that excludes server3:

    apiVersion: v1
    kind: Pod
    metadata:
      name: node-affinity
    spec:
      containers:
      - name: nginx
        image: nginx
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/hostname
                operator: NotIn
                values:
                - server3
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 1
            preference:
              matchExpressions:
              - key: disktype
                operator: In
                values:
                - ssd

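server3 carries the label disktype=ssd.

The Pod runs on server4 and is not scheduled to server3.

Summary: a node excluded by a required rule is never chosen, even if it satisfies a preferred rule.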
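Several preferred terms can be combined, each with a weight between 1 and 100; the scheduler adds up the weights of the terms a node matches and favors the node with the higher total. The following is a sketch only, reusing the `disktype` label values `ssd` and `sata` from the manifests above; the Pod name and the weights 80 and 20 are hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: node-affinity-weighted   # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx
  affinity:
    nodeAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 80               # strongly prefer ssd nodes
        preference:
          matchExpressions:
          - key: disktype
            operator: In
            values:
            - ssd
      - weight: 20               # weaker preference for sata nodes
        preference:
          matchExpressions:
          - key: disktype
            operator: In
            values:
            - sata
```

With no required rule present, a node that matches neither term is still eligible; it just scores lower.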

Pod affinity and anti-affinity

Pod affinity:

First add a label to the nginx Pod. The mysql Pod below must then run on the same node as a Pod labeled app=nginx:

    apiVersion: v1
    kind: Pod
    metadata:
      name: mysql
      labels:
        app: mysql
    spec:
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - nginx
            topologyKey: kubernetes.io/hostname
      containers:
      - name: mysql
        image: mysql:5.7
        env:
        - name: "MYSQL_ROOT_PASSWORD"
          value: "westos"

Pod anti-affinity:

    apiVersion: v1
    kind: Pod
    metadata:
      name: mysql
      labels:
        app: mysql
    spec:
      affinity:
        podAntiAffinity:   # the only difference from the affinity example
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - nginx
            topologyKey: kubernetes.io/hostname
      containers:
      - name: mysql
        image: mysql:5.7
        env:
        - name: "MYSQL_ROOT_PASSWORD"
          value: "westos"

Pod affinity and anti-affinity also come in hard (required) and soft (preferred) variants.
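For illustration, a soft version of the anti-affinity rule might look like the sketch below. Compared with the required form, each entry gains a `weight` and wraps the selector in `podAffinityTerm`. The Pod name and the weight value are hypothetical; the labels, image, and password are reused from the mysql manifests above.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: mysql-soft-anti          # hypothetical name
  labels:
    app: mysql
spec:
  affinity:
    podAntiAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 100              # 1-100; higher means a stronger preference
        podAffinityTerm:
          labelSelector:
            matchExpressions:
            - key: app
              operator: In
              values:
              - nginx
          topologyKey: kubernetes.io/hostname
  containers:
  - name: mysql
    image: mysql:5.7
    env:
    - name: "MYSQL_ROOT_PASSWORD"
      value: "westos"
```

With this rule the scheduler tries to avoid nodes that run an app=nginx Pod, but still places the Pod there if no other node fits.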

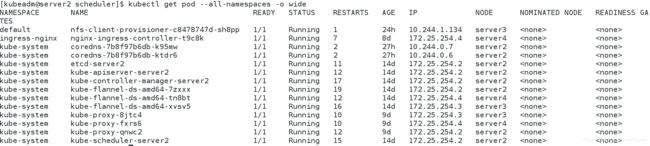

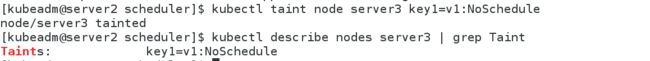

Taints

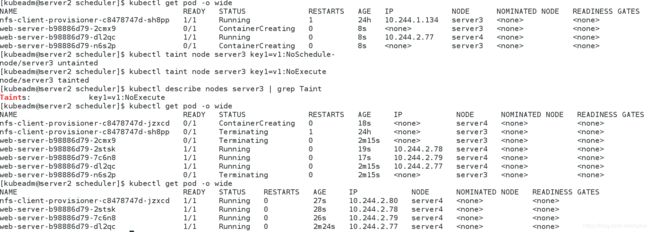

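- A taint is a mark on a node that refuses to have Pods scheduled onto it, and can even evict Pods that are already running.

- Where there are taints there are tolerations: a Pod with a toleration for a node's taint can still be scheduled to that node.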

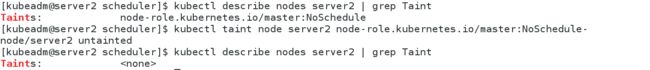

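    kubectl taint nodes nodename key=value:NoSchedule    # add a taint
    kubectl describe nodes nodename | grep Taint         # query taints
    kubectl taint nodes nodename key:NoSchedule-         # remove the taint

- NoSchedule: Pods are not scheduled to the tainted node.

- PreferNoSchedule: the soft version of NoSchedule.

- NoExecute: once the taint takes effect, Pods already running on the node are evicted unless they carry a matching toleration.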
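A toleration for a NoExecute taint can additionally set `tolerationSeconds`, which limits how long the Pod stays on the node after the taint appears. The sketch below assumes a taint `key1=v1:NoExecute`; the Pod name and the 3600-second value are hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-tolerate-noexecute   # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx
  tolerations:
  - key: "key1"
    operator: "Equal"
    value: "v1"
    effect: "NoExecute"
    tolerationSeconds: 3600        # evicted one hour after the taint is added
```

Without `tolerationSeconds`, a matching toleration lets the Pod stay on the node indefinitely.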

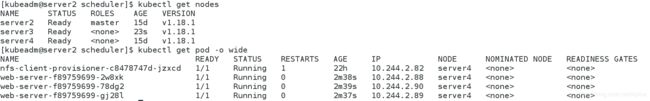

server2 does not take part in scheduling because it carries a taint.

Create a Deployment controller:

    [kubeadm@server2 scheduler]$ cat deployment.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: web-server
    spec:
      selector:
        matchLabels:
          app: nginx
      replicas: 3
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx

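server2 did not take part in scheduling.

How to let server2 take part in scheduling:

Remove the taint from server2 and schedule again.

Even then, server2 already runs many Pods, so it usually still receives none of the new ones; it only starts to receive Pods when the number of replicas is large enough.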
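An alternative to removing the taint is to let the workload tolerate it. The sketch below assumes that server2 is the kubeadm control-plane node and carries the taint `node-role.kubernetes.io/master:NoSchedule` (older kubeadm releases) or `node-role.kubernetes.io/control-plane:NoSchedule` (newer releases); the notes do not show which one applies, so both are listed and should be checked against the output of `kubectl describe nodes`.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-server
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 3
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
      tolerations:
      # Assumed taint keys; keep only the one present on the node.
      - key: "node-role.kubernetes.io/master"
        operator: "Exists"
        effect: "NoSchedule"
      - key: "node-role.kubernetes.io/control-plane"
        operator: "Exists"
        effect: "NoSchedule"
```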

Tolerations

Add a taint to server3 (the manifest below tolerates key1=v1:NoSchedule):

    [kubeadm@server2 scheduler]$ cat deployment.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: web-server
    spec:
      selector:
        matchLabels:
          app: nginx
      replicas: 3
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx
          tolerations:
          - key: "key1"
            operator: "Equal"
            value: "v1"
            effect: "NoSchedule"

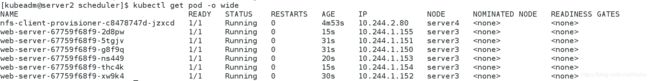

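server3 takes part in scheduling.

Add a NoExecute (evicting) taint to server3: the Pods running on server3 are evicted and rescheduled to server4.

Change the toleration effect in the manifest:

    effect: "NoExecute"

server3 can take part in scheduling again, and all Pods are scheduled to server3 (because it is under less load).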

Tolerate all taints:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: web-server
    spec:
      selector:
        matchLabels:
          app: nginx
      replicas: 6
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx
          tolerations:
          - operator: "Exists"

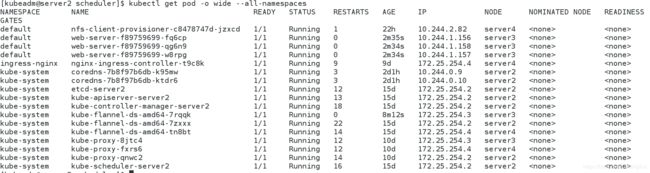
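Between matching one exact taint and tolerating everything there are two narrower uses of the `Exists` operator, sketched below as a Pod spec. The Pod name is hypothetical and `key1` is the taint key used in the earlier Deployment.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-tolerate-some   # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx
  tolerations:
  # Any taint whose key is key1, whatever its value or effect.
  - key: "key1"
    operator: "Exists"
  # Any taint with effect NoSchedule, whatever its key.
  - operator: "Exists"
    effect: "NoSchedule"
```

A bare `operator: "Exists"` with neither key nor effect, as in the Deployment above, is the widest form and matches every taint.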

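Other commands that affect Pod scheduling are cordon, drain, and delete. After any of them, newly created Pods are no longer scheduled to the node, but the three differ in how disruptive they are.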

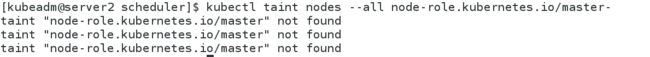

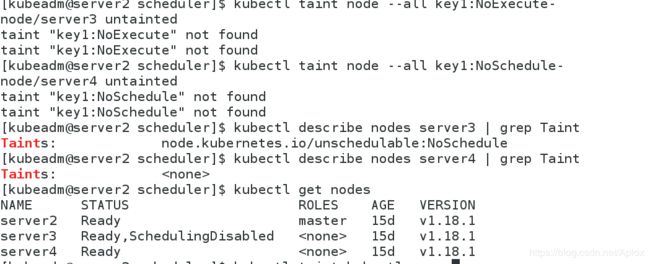

Remove the taints from all nodes:

This is useful when the cluster has just been created.

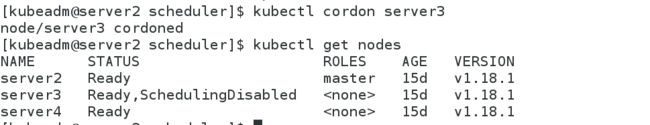

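cordon: stop scheduling to a node:

Pods already on the node keep running; new Pods are no longer scheduled to it.

Resume scheduling (kubectl uncordon):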

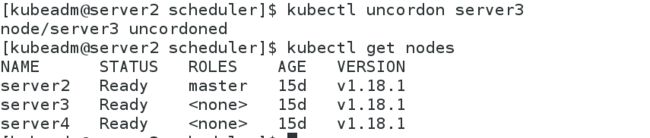
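Under the hood, cordon and uncordon toggle a single field on the Node object. The fragment below is a sketch of what `kubectl get node server3 -o yaml` would be expected to show while the node is cordoned; it is trimmed to the relevant field and is not meant to be applied as-is.

```yaml
apiVersion: v1
kind: Node
metadata:
  name: server3
spec:
  unschedulable: true   # set by kubectl cordon; cleared again by kubectl uncordon
```

This is why `kubectl get nodes` reports the status SchedulingDisabled for a cordoned node.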

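drain: evict a node:

- drain first marks the node SchedulingDisabled (the same as cordon) and then evicts the Pods on it; Pods managed by a controller are recreated on other nodes.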

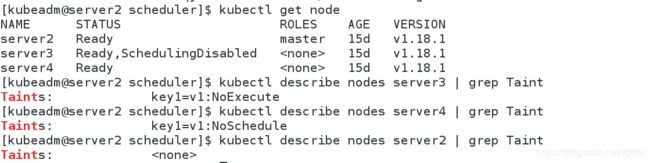

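- At this point both server3 and server4 carry taints.

- Remove the taints from server3 and server4.

- The cluster then balances the Pods across the nodes by itself.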

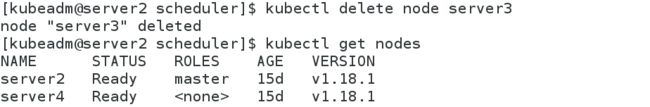

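delete: delete a node:

- The most drastic of the three operations.

- The node is removed from the master, which loses control of the node and therefore of the Pods on it. To bring the node back, restart the kubelet service on that node.

Deleting the node:

- After kubelet is restarted on server3, the node comes back online in the cluster automatically.

- The officially recommended practice is to drain the node before deleting it, skipping DaemonSet-managed Pods:

    kubectl drain <node name> --delete-local-data --force --ignore-daemonsets   # newer kubectl releases name the first flag --delete-emptydir-data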
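kubectl drain removes Pods through the eviction API, which honors PodDisruptionBudgets. As a hedged sketch, a budget like the following would keep drain from taking the nginx Deployment below two running replicas at any moment. The object name and the `minAvailable` value are hypothetical; `policy/v1` assumes a reasonably recent cluster (older ones use `policy/v1beta1`).

```yaml
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: web-server-pdb     # hypothetical name
spec:
  minAvailable: 2          # hypothetical; evictions that would go below this are refused
  selector:
    matchLabels:
      app: nginx           # matches the Pods of the web-server Deployment
```

While the budget cannot be satisfied, drain keeps retrying the eviction instead of deleting the Pod outright.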