OpenStack + Ceph hands-on lab: a full walkthrough you can follow step by step!

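Contents

- Preface
- Part 1: Ceph fundamentals
- 1.1: Overview
- 1.2: Key concepts
- 1.3: Fully decentralized architecture
- Part 2: Deploying the Ceph cluster
- 2.1: Preparing the environment for Ceph
- 2.2: Building the Ceph cluster
- 2.3: Installing the Ceph cluster management page
- Part 3: Integrating Ceph with OpenStack
- 3.1: Preparing the environment for the Ceph and OpenStack integration
- 3.2: On the compute nodes running nova-compute, add the temporary key file to libvirt, then delete it
- 3.2.1: On node c1
- 3.2.2: On node c2
- 3.3: Integrating Ceph with Glance
- 3.4: Integrating Ceph with Cinder
- 3.5: Integrating Ceph with Nova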

Preface

Part 1: Ceph fundamentals

1.1: Overview

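- Ceph is a distributed storage system that is reliable, rebalances automatically, and recovers automatically. Its main strength is computed placement: whenever a piece of data is stored, Ceph calculates where it should go and spreads data as evenly as possible. There is no traditional single point of failure, and the cluster scales horizontally.

- Ceph provides object storage (RADOSGW), block storage (RBD), and a file system service (CephFS). Its object storage can back applications such as network drives (ownCloud). Its block storage can back IaaS platforms, including today's mainstream ones such as OpenStack, CloudStack, ZStack, and Eucalyptus, as well as KVM.

- A cluster needs at least one monitor and two OSD daemons. Running the Ceph file system client additionally requires an MDS (Metadata Server).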

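1.2: Key concepts

- What is an OSD?

  - An OSD stores data and handles replication, recovery, backfilling, and rebalancing. It also checks the heartbeats of the other OSD daemons and reports that monitoring information to the monitors.

- What is a Monitor?

  - A monitor tracks the state of the whole cluster and maintains the cluster maps (the OSD map, the PG map, the CRUSH map, and so on). When the cluster keeps 2 replicas, at least 2 OSDs are needed before it can reach a healthy state.

- ceph-mgr

  - Ceph Manager Daemon, ceph-mgr for short. Its main job is to take over and extend part of the monitor's functionality, reducing the load on the monitors so that the Ceph storage system can be managed better.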

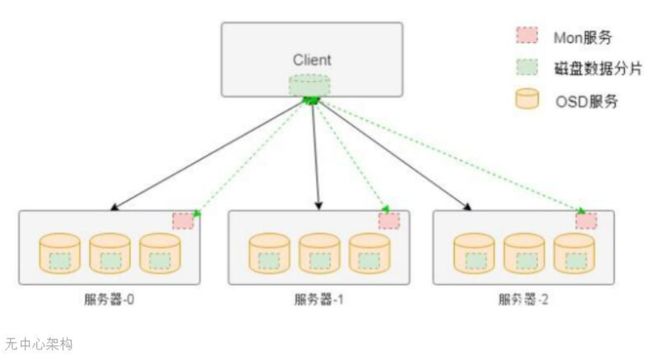

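1.3: Fully decentralized architecture

- Fully decentralized architecture, variant one: computed placement (Ceph)

- The figure above shows the architecture of a Ceph storage system. Unlike HDFS, this architecture has no central node. The client computes where to write its data from a device mapping, so it can talk to the storage nodes directly and avoid the performance bottleneck of a central node.

- The core components of a Ceph storage system are the Mon, OSD, and MDS services. Block storage needs only the Mon service, the OSD service, and the client software. The Mon service maintains the mapping between the storage system's hardware and its logical layout, chiefly which servers and disks are online, and it runs as a cluster of its own to stay available. The OSD service manages disks and performs the actual reads and writes; usually one OSD service corresponds to one disk.

- A client accesses storage roughly as follows. After starting, it first pulls the storage layout from the Mon service. It then uses that layout, together with information such as the name of the data being written, to compute the expected location of the data (including the specific physical server and disk). Finally it communicates directly with that location to read or write the data.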
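The client-side calculation described above can be sketched in a few lines of shell. This is a deliberately simplified illustration, not Ceph's real algorithm: Ceph uses its own hash function and the CRUSH algorithm, while the sketch assumes a plain `cksum` hash and a round-robin rule. The function names `object_to_pg` and `pg_to_osds` are hypothetical.

```shell
#!/bin/sh
# Simplified illustration only: real Ceph hashes object names with its own
# hash function and maps placement groups to OSDs with CRUSH.

# Map an object name to a placement-group index in [0, pg_num).
object_to_pg() (
    name=$1 pg_num=$2
    hash=$(printf '%s' "$name" | cksum | cut -d' ' -f1)
    echo $(( hash % pg_num ))
)

# Map a PG index to `replicas` distinct OSD ids using a hypothetical
# round-robin rule (requires replicas <= osd_count).
pg_to_osds() (
    pg=$1 osd_count=$2 replicas=$3
    i=0 out=""
    while [ "$i" -lt "$replicas" ]; do
        out="$out $(( (pg + i) % osd_count ))"
        i=$(( i + 1 ))
    done
    echo "${out# }"
)

# Every client that knows the layout (64 PGs, 3 OSDs, 2 replicas) computes
# the same answer without asking a central node.
pg=$(object_to_pg "volume-demo" 64)
echo "object volume-demo -> pg $pg -> osds $(pg_to_osds "$pg" 3 2)"
```

The point of the sketch is that the location is a pure function of the object name and the layout, which is why no lookup service sits on the data path.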

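Part 2: Deploying the Ceph cluster

- This lab reuses the earlier multi-node OpenStack environment; for details see the blog post https://blog.csdn.net/CN_TangZheng/article/details/104543185

- Packages are installed from a local repository. Because the lab host is limited, memory is split 7/7/7 across the three nodes; with 32 GB of host memory, an 8/8/8 split is recommended.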

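2.1: Preparing the environment for Ceph

- Before deploying Ceph, clean out all storage-related data:

  - 1. If OpenStack has instances, delete them (from the dashboard).

  - 2. If images have been uploaded to OpenStack, delete them (from the dashboard).

  - 3. If OpenStack has Cinder volumes, delete them (from the dashboard).

- Stop the iptables firewall on all three nodes

      '//Same on all three nodes; only the control node is shown'
      [root@ct ~]# systemctl stop iptables
      [root@ct ~]# systemctl disable iptables
      Removed symlink /etc/systemd/system/basic.target.wants/iptables.service.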

检查节点的免交互、主机名、hosts、关闭防火墙等

-

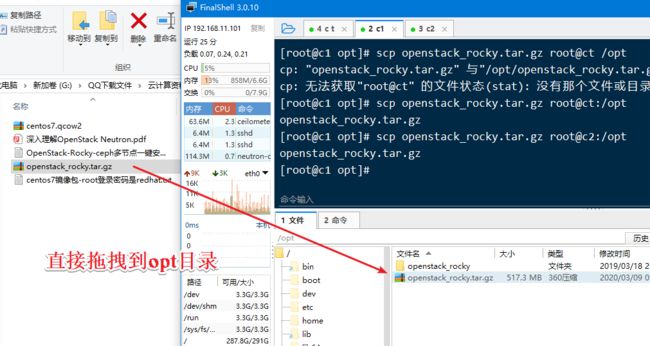

三个节点本地源配置(仅展示控制节点的操作)

[root@ct opt]# tar zxvf openstack_rocky.tar.gz '//解压上传的包' [root@ct opt]# ls openstack_rocky openstack_rocky.tar.gz [root@ct opt]# cd /etc/yum.repos.d [root@ct yum.repos.d]# vi local.repo [openstack] name=openstack baseurl=file:///opt/openstack_rocky '//刚解压的文件夹名称和之前的名称一样,所以不需要修改' gpgcheck=0 enabled=1 [mnt] name=mnt baseurl=file:///mnt gpgcheck=0 enabled=1 [root@ct yum.repos.d]# yum clean all '//清除缓存' 已加载插件:fastestmirror 正在清理软件源: mnt openstack Cleaning up list of fastest mirrors [root@ct yum.repos.d]# yum makecache '//创建缓存'
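Because the same repository file is needed on all three nodes, it may be easier to generate it than to type it into `vi` three times. The following is a minimal sketch under that assumption; the function name `write_local_repo` is hypothetical, and the demo writes to a scratch file rather than `/etc/yum.repos.d/local.repo`.

```shell
#!/bin/sh
# Write the two local repositories used in this lab to the given file.
# On a real node the target would be /etc/yum.repos.d/local.repo.
write_local_repo() (
    dest=$1
    cat > "$dest" <<'EOF'
[openstack]
name=openstack
baseurl=file:///opt/openstack_rocky
gpgcheck=0
enabled=1

[mnt]
name=mnt
baseurl=file:///mnt
gpgcheck=0
enabled=1
EOF
)

# Demo against a scratch file so nothing under /etc is touched.
demo_file=$(mktemp)
write_local_repo "$demo_file"
cat "$demo_file"
```

After writing the real file, `yum clean all` and `yum makecache` are still needed, exactly as in the transcript above.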

2.2: Building the Ceph cluster

- Install python-setuptools and the ceph package on all three nodes

      [root@ct yum.repos.d]# yum -y install python-setuptools
      [root@ct yum.repos.d]# yum -y install ceph

- On the control node, create the Ceph configuration directory and install ceph-deploy

      [root@ct yum.repos.d]# mkdir -p /etc/ceph
      [root@ct yum.repos.d]# yum -y install ceph-deploy

- On the control node, create the three mons

      [root@ct yum.repos.d]# cd /etc/ceph
      [root@ct ceph]# ceph-deploy new ct c1 c2
      [root@ct ceph]# more /etc/ceph/ceph.conf
      [global]
      fsid = 8c9d2d27-492b-48a4-beb6-7de453cf45d6
      mon_initial_members = ct, c1, c2
      mon_host = 192.168.11.100,192.168.11.101,192.168.11.102
      auth_cluster_required = cephx
      auth_service_required = cephx
      auth_client_required = cephx

- On the control node, initialize the mons and gather the keys (for all three nodes)

      [root@ct ceph]# ceph-deploy mon create-initial
      [root@ct ceph]# ls
      ceph.bootstrap-mds.keyring  ceph.bootstrap-rgw.keyring  ceph-deploy-ceph.log
      ceph.bootstrap-mgr.keyring  ceph.client.admin.keyring   ceph.mon.keyring
      ceph.bootstrap-osd.keyring  ceph.conf                   rbdmap

- On the control node, create the OSDs

      [root@ct ceph]# ceph-deploy osd create --data /dev/sdb ct
      [root@ct ceph]# ceph-deploy osd create --data /dev/sdb c1
      [root@ct ceph]# ceph-deploy osd create --data /dev/sdb c2

- Use ceph-deploy to push the configuration file and the admin key to ct, c1, and c2

      [root@ct ceph]# ceph-deploy admin ct c1 c2

- Add permissions on the keyring on each of ct, c1, and c2

      [root@ct ceph]# chmod +x /etc/ceph/ceph.client.admin.keyring
      [root@c1 ceph]# chmod +x /etc/ceph/ceph.client.admin.keyring
      [root@c2 ceph]# chmod +x /etc/ceph/ceph.client.admin.keyring

创建mgr管理服务

[root@ct ceph]# ceph-deploy mgr create ct c1 c2 -

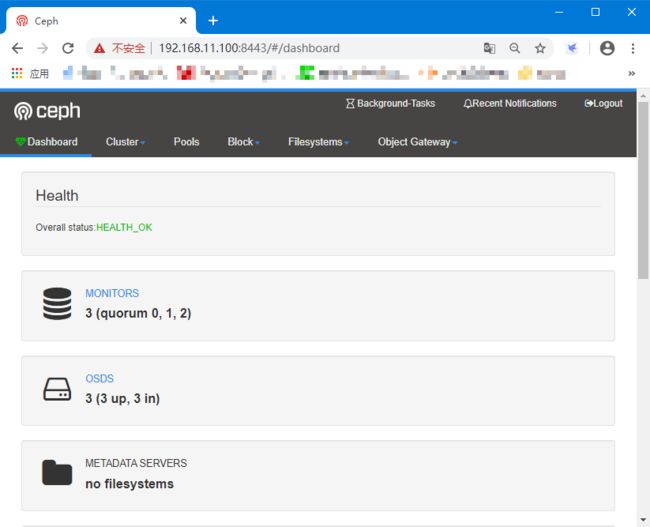

查看ceph集群状态

[root@ct ceph]# ceph -s cluster: id: 8c9d2d27-492b-48a4-beb6-7de453cf45d6 health: HEALTH_OK '//健康状态OK' services: mon: 3 daemons, quorum ct,c1,c2 mgr: ct(active), standbys: c1, c2 osd: 3 osds: 3 up, 3 in data: pools: 0 pools, 0 pgs objects: 0 objects, 0 B usage: 3.0 GiB used, 3.0 TiB / 3.0 TiB avail pgs: -

- Create the three pools that OpenStack will use (volumes, vms, images); 64 is the number of PGs

      [root@ct ceph]# ceph osd pool create volumes 64
      pool 'volumes' created
      [root@ct ceph]# ceph osd pool create vms 64
      pool 'vms' created
      [root@ct ceph]# ceph osd pool create images 64
      pool 'images' created

- Check the Ceph status

      [root@ct ceph]# ceph mon stat        '//check the mon status'
      e1: 3 mons at {c1=192.168.11.101:6789/0,c2=192.168.11.102:6789/0,ct=192.168.11.100:6789/0}, election epoch 4, leader 0 ct, quorum 0,1,2 ct,c1,c2
      [root@ct ceph]# ceph osd status        '//check the OSD status'
      +----+------+-------+-------+--------+---------+--------+---------+-----------+
      | id | host |  used | avail | wr ops | wr data | rd ops | rd data |   state   |
      +----+------+-------+-------+--------+---------+--------+---------+-----------+
      | 0  |  ct  | 1026M | 1022G |    0   |    0    |    0   |    0    | exists,up |
      | 1  |  c1  | 1026M | 1022G |    0   |    0    |    0   |    0    | exists,up |
      | 2  |  c2  | 1026M | 1022G |    0   |    0    |    0   |    0    | exists,up |
      +----+------+-------+-------+--------+---------+--------+---------+-----------+
      [root@ct ceph]# ceph osd lspools        '//list the pools that were created'
      1 volumes
      2 vms
      3 images
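The pools above are each created with 64 PGs. For readers wondering how such a number is chosen, the sketch below applies a commonly cited rule of thumb: aim for roughly 100 PGs per OSD, divide by the replica count, and round up to a power of two. The target of 100, the default of 3 replicas, and the function names `next_pow2` and `suggest_total_pgs` are assumptions made for illustration; the result is a cluster-wide total to share among pools, not a per-pool value.

```shell
#!/bin/sh
# Round a positive integer up to the next power of two.
next_pow2() (
    n=$1 p=1
    while [ "$p" -lt "$n" ]; do
        p=$(( p * 2 ))
    done
    echo "$p"
)

# Rule-of-thumb total PG count for a cluster:
#   (number of OSDs * target PGs per OSD) / replica count, rounded up to 2^k.
# The third argument (target PGs per OSD) defaults to 100.
suggest_total_pgs() (
    osds=$1 replicas=$2 per_osd=${3:-100}
    next_pow2 $(( osds * per_osd / replicas ))
)

# This lab: 3 OSDs, assuming the default of 3 replicas.
echo "suggested total PGs: $(suggest_total_pgs 3 3)"
```

For a small lab cluster the exact figure matters little; the rule becomes more relevant as OSDs are added.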

2.3:CEPH集群管理页面安装

-

查看CEPH状态 ,状态不要出现错误error

-

启用dashboard模块

[root@ct ceph]# ceph mgr module enable dashboard -

创建https证书

[root@ct ceph]# ceph dashboard create-self-signed-cert Self-signed certificate created -

查看mgr服务

[root@ct ceph]# ceph mgr services { "dashboard": "https://ct:8443/" } -

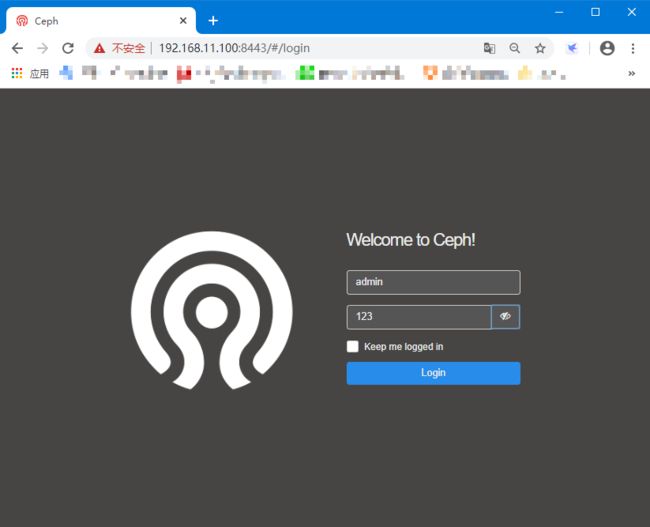

设置账号和密码

[root@ct ceph]# ceph dashboard set-login-credentials admin 123 '//admin是账号 123是密码这个可以改' Username and password updated -

在浏览器中打开ceph网页,https://192.168.11.100:8443

Part 3: Integrating Ceph with OpenStack

3.1: Preparing the environment for the Ceph and OpenStack integration

- On the control node, create client.cinder and set its capabilities

      [root@ct ceph]# ceph auth get-or-create client.cinder mon 'allow r' osd 'allow class-read object_prefix rbd_children,allow rwx pool=volumes,allow rwx pool=vms,allow rx pool=images'
      [client.cinder]
              key = AQBQ7GRett8kAhAA4Q2fFNQybe0RJaEubK8eFQ==

- On the control node, create client.glance and set its capabilities

      [root@ct ceph]# ceph auth get-or-create client.glance mon 'allow r' osd 'allow class-read object_prefix rbd_children,allow rwx pool=images'
      [client.glance]
              key = AQB37GRe64mXGxAApD5AVQ4Js7++tZQvVz1RgA==

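- Deliver the client.glance key to the node that uses it. Glance itself runs on the control node, so the key does not need to be sent to any other node; it only has to be written to a keyring file there

      [root@ct ceph]# ceph auth get-or-create client.glance | tee /etc/ceph/ceph.client.glance.keyring        '//write the keyring file before changing its owner'
      [root@ct ceph]# chown glance.glance /etc/ceph/ceph.client.glance.keyring        '//set the owner and group'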

- Deliver the client.cinder key to the node that uses it. Cinder is also installed on the control node by default, so the key does not need to be sent to any other node

      [root@ct ceph]# ceph auth get-or-create client.cinder | tee /etc/ceph/ceph.client.cinder.keyring
      [client.cinder]
              key = AQBQ7GRett8kAhAA4Q2fFNQybe0RJaEubK8eFQ==
      [root@ct ceph]# chown cinder.cinder /etc/ceph/ceph.client.cinder.keyring

- The client.cinder key must also be sent to the compute nodes, which call it

      '//The compute nodes must store the client.cinder key in libvirt, so run the following'
      [root@ct ceph]# ceph auth get-key client.cinder |ssh c1 tee client.cinder.key
      AQBQ7GRett8kAhAA4Q2fFNQybe0RJaEubK8eFQ==
      [root@ct ceph]# ceph auth get-key client.cinder |ssh c2 tee client.cinder.key
      AQBQ7GRett8kAhAA4Q2fFNQybe0RJaEubK8eFQ==
      [root@c1 ceph]# ls ~        '//on c1, check that the key arrived'
      anaconda-ks.cfg  client.cinder.key
      [root@c2 ceph]# ls ~        '//on c2, check that the key arrived'
      anaconda-ks.cfg  client.cinder.key

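3.2: On the compute nodes running nova-compute, add the temporary key file to libvirt, then delete it

- Configure the libvirt secret

- KVM virtual machines need librbd to access the Ceph cluster

- librbd in turn needs account authentication to access Ceph

- So libvirt must be given the account credentials here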

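3.2.1: On node c1

- Generate a UUID

      [root@c1 ceph]# uuidgen
      f4cd0fff-b0e4-4699-88a8-72149e9865d7        '//copy this UUID; it is used in every later step'

- Create a secret file with the following content, making sure to use the unique UUID generated in the previous step

      [root@c1 ceph]# cd /root
      [root@c1 ~]# cat >secret.xml <<EOF
      > <secret ephemeral='no' private='no'>
      > <uuid>f4cd0fff-b0e4-4699-88a8-72149e9865d7</uuid>
      > <usage type='ceph'>
      > <name>client.cinder secret</name>
      > </usage>
      > </secret>
      > EOF
      [root@c1 ~]# ls
      anaconda-ks.cfg  client.cinder.key  secret.xml

- Define the secret and save it. Later steps use this secret

      [root@c1 ~]# virsh secret-define --file secret.xml
      Secret f4cd0fff-b0e4-4699-88a8-72149e9865d7 created

- Set the secret value and delete the temporary files. Deleting the files is optional; it just keeps the system clean

      [root@c1 ~]# virsh secret-set-value --secret f4cd0fff-b0e4-4699-88a8-72149e9865d7 --base64 $(cat client.cinder.key) && rm -rf client.cinder.key secret.xml
      Secret value set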

3.2.2: On node c2

- Create the secret file, reusing the UUID generated on c1

      [root@c2 ceph]# cd /root
      [root@c2 ~]# cat >secret.xml <<EOF
      > <secret ephemeral='no' private='no'>
      > <uuid>f4cd0fff-b0e4-4699-88a8-72149e9865d7</uuid>
      > <usage type='ceph'>
      > <name>client.cinder secret</name>
      > </usage>
      > </secret>
      > EOF
      [root@c2 ~]# ls
      anaconda-ks.cfg  client.cinder.key  secret.xml

- Define the secret and save it

      [root@c2 ~]# virsh secret-define --file secret.xml
      Secret f4cd0fff-b0e4-4699-88a8-72149e9865d7 created

- Set the secret value and delete the temporary files

      [root@c2 ~]# virsh secret-set-value --secret f4cd0fff-b0e4-4699-88a8-72149e9865d7 --base64 $(cat client.cinder.key) && rm -rf client.cinder.key secret.xml
      Secret value set

- On the control node, enable an application tag on each pool (this walkthrough uses the tag `mon`; for pools used through RBD, the conventional tag is `rbd`)

      [root@ct ceph]# ceph osd pool application enable vms mon
      enabled application 'mon' on pool 'vms'
      [root@ct ceph]# ceph osd pool application enable images mon
      enabled application 'mon' on pool 'images'
      [root@ct ceph]# ceph osd pool application enable volumes mon
      enabled application 'mon' on pool 'volumes'
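Both compute nodes repeat the same define-and-set sequence with one shared UUID, so the steps in 3.2.1 and 3.2.2 can be wrapped in a small helper. The sketch below is one possible way to do that, not the author's method: the function names `render_secret_xml` and `register_cinder_secret` are hypothetical, and only the XML rendering is exercised here because `virsh` exists only on the compute nodes.

```shell
#!/bin/sh
# Print a libvirt secret definition for the given UUID.
render_secret_xml() (
    uuid=$1
    cat <<EOF
<secret ephemeral='no' private='no'>
  <uuid>${uuid}</uuid>
  <usage type='ceph'>
    <name>client.cinder secret</name>
  </usage>
</secret>
EOF
)

# Hypothetical wrapper for a compute node that has libvirt installed and the
# key file copied over in 3.1: define the secret, set its value, clean up.
register_cinder_secret() (
    uuid=$1 key_file=$2
    xml=$(mktemp) || exit 1
    render_secret_xml "$uuid" > "$xml"
    virsh secret-define --file "$xml" &&
        virsh secret-set-value --secret "$uuid" --base64 "$(cat "$key_file")"
    status=$?
    rm -f "$xml"
    exit "$status"
)

# Only render here; register_cinder_secret is meant to be run on c1 and c2,
# for example: register_cinder_secret <uuid> ~/client.cinder.key
render_secret_xml "f4cd0fff-b0e4-4699-88a8-72149e9865d7"
```

Passing the UUID as an argument keeps it in one place, which matters because Cinder and Nova must later reference exactly the same value.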

3.3:Ceph对接Glance

-

登录到glance 所在的节点(控制节点) 然后修改

-

备份配置文件

[root@ct ceph]# cp /etc/glance/glance-api.conf /etc/glance/glance-api.conf.bak -

修改对接配置文件

[root@ct ceph]# vi /etc/glance/glance-api.conf 2054 stores=rbd '//2054行修改为rbd格式(存储的类型格式)' 2108 default_store=rbd '//修改默认存储格式' 2442 #filesystem_store_datadir=/var/lib/glance/images/ '//注释掉镜像本地存储' 2605 rbd_store_chunk_size = 8 '//取消注释' 2626 rbd_store_pool = images '//取消注释' 2645 rbd_store_user =glance '//取消注释,指定用户' 2664 rbd_store_ceph_conf = /etc/ceph/ceph.conf '//取消注释,指定ceph路径' -

查找glance用户,对接上面

[root@ct ceph]# source /root/keystonerc_admin [root@ct ceph(keystone_admin)]# openstack user list |grep glance | e2ee9c40f2f646d19041c28b031449d8 | glance | -

重启OpenStack-glance-api服务

[root@ct ceph(keystone_admin)]# systemctl restart openstack-glance-api -

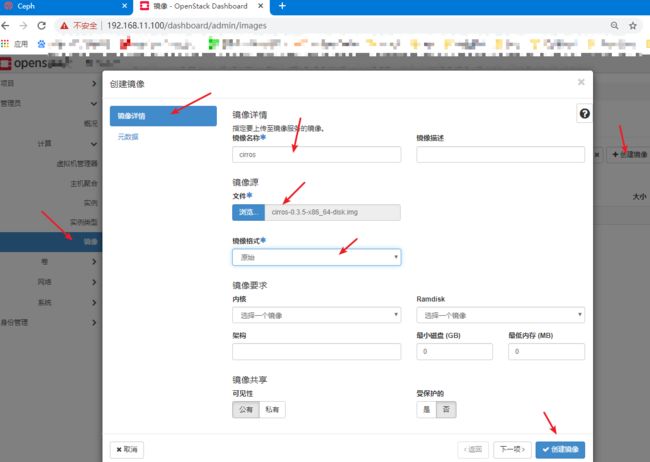

上传镜像做测试

[root@ct ceph(keystone_admin)]# ceph df '//查看镜像大小' GLOBAL: SIZE AVAIL RAW USED %RAW USED 3.0 TiB 3.0 TiB 3.0 GiB 0.10 POOLS: NAME ID USED %USED MAX AVAIL OBJECTS volumes 1 0 B 0 972 GiB 0 vms 2 0 B 0 972 GiB 0 images 3 13 MiB '//上传13M成功' 0 972 GiB 8 [root@ct ceph(keystone_admin)]# rbd ls images '//查看镜像' 0ab61b48-4e57-4df3-a029-af6843ff4fa5 -

确认本地还有没有镜像

[root@ct ceph(keystone_admin)]# ls /var/lib/glance/images '//发现下面没有镜像是正常的'
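With seven scattered edits in a file of several thousand lines, it is easy to leave one setting commented out. The sketch below is a small check for that, assuming the settings listed in this section; the function names `has_setting` and `check_glance_rbd` are hypothetical, and the demo runs against a scratch file rather than the real `/etc/glance/glance-api.conf`.

```shell
#!/bin/sh
# Succeed if the file has an uncommented "key = value" line
# (spaces around "=" are optional).
has_setting() (
    file=$1 key=$2 value=$3
    grep -Eq "^[[:space:]]*${key}[[:space:]]*=[[:space:]]*${value}[[:space:]]*$" "$file"
)

# Check the RBD-related Glance settings from this section and report
# every one that is missing or set to something else.
check_glance_rbd() (
    conf=$1 missing=0
    for pair in "stores rbd" "default_store rbd" "rbd_store_pool images" \
                "rbd_store_user glance" "rbd_store_ceph_conf /etc/ceph/ceph.conf"; do
        # Each pair is "key value"; split it on the first space.
        if ! has_setting "$conf" "${pair%% *}" "${pair#* }"; then
            echo "missing or different: ${pair%% *}"
            missing=1
        fi
    done
    exit "$missing"
)

# Demo with a scratch file containing the edited lines shown above.
sample=$(mktemp)
printf '%s\n' 'stores=rbd' 'default_store=rbd' 'rbd_store_chunk_size = 8' \
    'rbd_store_pool = images' 'rbd_store_user =glance' \
    'rbd_store_ceph_conf = /etc/ceph/ceph.conf' > "$sample"
check_glance_rbd "$sample" && echo "glance rbd settings look complete"
```

On the control node the same check would be `check_glance_rbd /etc/glance/glance-api.conf`, run before restarting the service.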

3.4:ceph与cinder对接

-

备份cinder.conf配置文件并修改

[root@ct ceph(keystone_admin)]# cp /etc/cinder/cinder.conf /etc/cinder/cinder.conf.bak [root@ct ceph(keystone_admin)]# vi /etc/cinder/cinder.conf 409 enabled_backends=ceph '//409行修改为ceph格式' 5261 #[lvm] 5262 #volume_backend_name=lvm 5263 #volume_driver=cinder.volume.drivers.lvm.LVMVolumeDriver 5264 #iscsi_ip_address=192.168.11.100 5265 #iscsi_helper=lioadm 5266 #volume_group=cinder-volumes 5267 #volumes_dir=/var/lib/cinder/volumes 5268 5269 [ceph] '//尾行添加ceph段落' 5270 default_volume_type= ceph 5271 glance_api_version = 2 5272 volume_driver = cinder.volume.drivers.rbd.RBDDriver 5273 volume_backend_name = ceph 5274 rbd_pool = volumes 5275 rbd_ceph_conf = /etc/ceph/ceph.conf 5276 rbd_flatten_volume_from_snapshot = false 5277 rbd_max_clone_depth = 5 5278 rbd_store_chunk_size = 4 5279 rados_connect_timeout = -1 5280 rbd_user = cinder 5281 rbd_secret_uuid = f4cd0fff-b0e4-4699-88a8-72149e9865d7 '//使用之前的UUID' -

重启cinder服务

[root@ct ceph(keystone_admin)]# systemctl restart openstack-cinder-volume -

查看cinder卷的类型有几个

[root@ct ceph(keystone_admin)]# cinder type-list +--------------------------------------+-------+-------------+-----------+ | ID | Name | Description | Is_Public | +--------------------------------------+-------+-------------+-----------+ | c33f5b0a-b74e-47ab-92f0-162be0bafa1f | iscsi | - | True | +--------------------------------------+-------+-------------+-----------+ -

命令行创建cinder 的ceph存储后端相应的type

[root@ct ceph(keystone_admin)]# cinder type-create ceph '//创建ceph类型' +--------------------------------------+------+-------------+-----------+ | ID | Name | Description | Is_Public | +--------------------------------------+------+-------------+-----------+ | e1137ee4-0a45-4a21-9c16-55dfb7e3d737 | ceph | - | True | +--------------------------------------+------+-------------+-----------+ [root@ct ceph(keystone_admin)]# cinder type-list '//查看类型' +--------------------------------------+-------+-------------+-----------+ | ID | Name | Description | Is_Public | +--------------------------------------+-------+-------------+-----------+ | c33f5b0a-b74e-47ab-92f0-162be0bafa1f | iscsi | - | True | | e1137ee4-0a45-4a21-9c16-55dfb7e3d737 | ceph | - | True | +--------------------------------------+-------+-------------+-----------+ [root@ct ceph(keystone_admin)]# cinder type-key ceph set volume_backend_name=ceph '//设置后端的存储类型 volume_backend_name=ceph一定要顶格写不能有空格' -

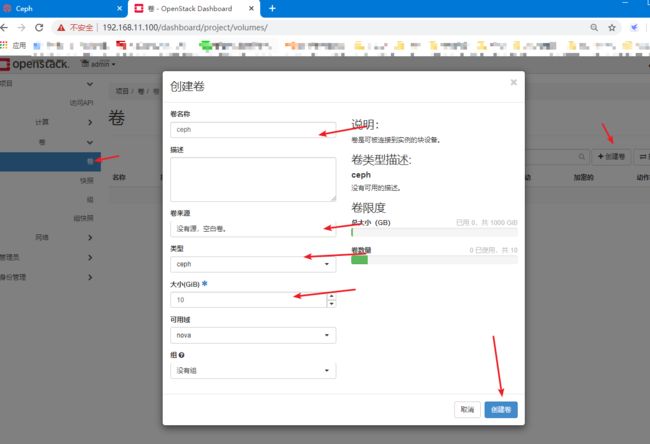

创建卷

-

查看创建的卷

[root@ct ceph(keystone_admin)]# ceph osd lspools 1 volumes 2 vms 3 images [root@ct ceph(keystone_admin)]# rbd ls volumes volume-47728c44-85db-4257-a723-2ab8c5249f5d -

开启cinder服务

[root@ct ceph(keystone_admin)]# systemctl start openstack-cinder-api.service openstack-cinder-scheduler.service [root@ct ceph(keystone_admin)]# systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service
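The `rbd_secret_uuid` in the `[ceph]` section has to be the same UUID that was defined in libvirt in section 3.2, and a mismatch is easy to miss by eye. The sketch below shows one way to read a value out of an INI-style file and compare it; the `ini_get` helper is hypothetical, written with plain `awk`, and the demo uses a scratch file instead of the real `/etc/cinder/cinder.conf`.

```shell
#!/bin/sh
# Print the value of KEY inside [SECTION] of an INI-style file.
# The first match wins; comment lines starting with # or ; are ignored.
ini_get() (
    file=$1 section=$2 key=$3
    awk -v section="[$section]" -v key="$key" '
        function trim(s) { gsub(/^[ \t]+|[ \t]+$/, "", s); return s }
        /^[ \t]*[#;]/ { next }
        /^[ \t]*\[/   { in_section = (trim($0) == section); next }
        in_section {
            pos = index($0, "=")
            if (pos > 0 && trim(substr($0, 1, pos - 1)) == key) {
                print trim(substr($0, pos + 1))
                exit
            }
        }
    ' "$file"
)

# Demo with a scratch file that mirrors part of the edited cinder.conf.
sample=$(mktemp)
cat > "$sample" <<'EOF'
[DEFAULT]
enabled_backends=ceph

[ceph]
volume_driver = cinder.volume.drivers.rbd.RBDDriver
rbd_pool = volumes
rbd_user = cinder
rbd_secret_uuid = f4cd0fff-b0e4-4699-88a8-72149e9865d7
EOF

expected_uuid="f4cd0fff-b0e4-4699-88a8-72149e9865d7"
if [ "$(ini_get "$sample" ceph rbd_secret_uuid)" = "$expected_uuid" ]; then
    echo "rbd_secret_uuid matches the libvirt secret"
else
    echo "rbd_secret_uuid differs from the libvirt secret"
fi
```

The same helper can be pointed at `nova.conf` on the compute nodes, where `rbd_secret_uuid` must carry the identical value.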

3.5: Integrating Ceph with Nova

- Back up the configuration file (nodes c1 and c2)

      [root@c1 ~]# cp /etc/nova/nova.conf /etc/nova/nova.conf.bak
      [root@c2 ~]# cp /etc/nova/nova.conf /etc/nova/nova.conf.bak

- Edit the configuration file (nodes c1 and c2; only c1 is shown)

      [root@c1 ~]# vi /etc/nova/nova.conf
      7072 images_type=rbd        '//line 7072: uncomment and change to rbd'
      7096 images_rbd_pool=vms        '//line 7096: uncomment and set to vms, the pool declared in Ceph'
      7099 images_rbd_ceph_conf =/etc/ceph/ceph.conf        '//line 7099: uncomment and add the path to the Ceph configuration file'
      7256 rbd_user=cinder        '//line 7256: uncomment and set to cinder'
      7261 rbd_secret_uuid=f4cd0fff-b0e4-4699-88a8-72149e9865d7        '//line 7261: uncomment and add the UUID'
      6932 disk_cachemodes ="network=writeback"        '//line 6932: uncomment and set the disk cache mode to "network=writeback"'
      live_migration_flag="VIR_MIGRATE_UNDEFINE_SOURCE,VIR_MIGRATE_PEER2PEER,VIR_MIGRATE_LIVE, VIR_MIGRATE_PERSIST_DEST,VIR_MIGRATE_TUNNELLED"        '//add this whole line near the live_migration settings; it controls live migration'
      hw_disk_discard=unmap        '//uncomment and set to unmap'

- Install libvirt (nodes c1 and c2)

      [root@c1 ~]# yum -y install libvirt
      [root@c2 ~]# yum -y install libvirt

- Edit the Ceph configuration on the compute nodes (nodes c1 and c2; only c1 is shown)

      [root@c1 ~]# vi /etc/ceph/ceph.conf        '//append at the end of the file'
      [client]
      rbd cache=true
      rbd cache writethrough until flush=true
      admin socket = /var/run/ceph/guests/$cluster-$type.$id.$pid.$cctid.asok
      log file = /var/log/qemu/qemu-guest-$pid.log
      rbd concurrent management ops = 20

- Create the socket and log directories and set their permissions

      [root@c1 ~]# mkdir -p /var/run/ceph/guests/ /var/log/qemu/
      [root@c1 ~]# chmod 777 -R /var/run/ceph/guests/ /var/log/qemu/

- Copy the key under /etc/ceph/ on the control node to the two compute nodes

      [root@ct ceph(keystone_admin)]# cd /etc/ceph
      [root@ct ceph(keystone_admin)]# scp ceph.client.cinder.keyring root@c1:/etc/ceph
      ceph.client.cinder.keyring                    100%   64    83.5KB/s   00:00
      [root@ct ceph(keystone_admin)]# scp ceph.client.cinder.keyring root@c2:/etc/ceph
      ceph.client.cinder.keyring                    100%   64    52.2KB/s   00:00

- Restart the services on the compute nodes

      [root@c1 ~]# systemctl restart libvirtd
      [root@c1 ~]# systemctl enable libvirtd
      [root@c1 ~]# systemctl restart openstack-nova-compute        '//do the same on c2'

The lab is complete. Thanks for reading! You can now create an instance to test it yourself.