NLPer must-know: Chinese named entity recognition (NER) with BERT + BiLSTM + CRF

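1. Because the training data comes with annotated tags, NER can be cast as a per-character multi-class classification (sequence labeling) problem.

The training set used here is annotated with the BIO scheme.

Three entity types are annotated: person, location and organization.

That gives 7 tag classes in total: B-PER, I-PER, B-LOC, I-LOC, B-ORG, I-ORG, O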
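To make the BIO scheme concrete, here is a minimal sketch that turns character-level entity spans into the 7 tags and then into ids. The tag order in `TAG2ID` and the helper `spans_to_bio` are illustrative assumptions, not the project's own `tag2id` or code.

```python
# Hypothetical tag-id order, following the list above; the project's tag2id may differ
TAGS = ['B-PER', 'I-PER', 'B-LOC', 'I-LOC', 'B-ORG', 'I-ORG', 'O']
TAG2ID = {tag: i for i, tag in enumerate(TAGS)}


def spans_to_bio(length, spans):
    """BIO tags for a sentence of `length` characters.

    spans: list of (entity_type, start, end) with `end` exclusive.
    The first character of an entity gets B-, the rest get I-, everything else O.
    """
    tags = ['O'] * length
    for ent_type, start, end in spans:
        tags[start] = 'B-' + ent_type
        for i in range(start + 1, end):
            tags[i] = 'I-' + ent_type
    return tags


sent = '张三在北京工作'
tags = spans_to_bio(len(sent), [('PER', 0, 2), ('LOC', 3, 5)])
tag_ids = [TAG2ID[t] for t in tags]
```

For this sentence, 张三 becomes B-PER, I-PER and 北京 becomes B-LOC, I-LOC, while the remaining characters are O.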

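2. For the text vector representation, if you use vectors from the pre-trained BERT model:

Install the Python packages for the BERT server (bert-serving-server) and client (bert-serving-client), developed by Dr. Han Xiao of Tencent

Download Google's BERT-Base Chinese pre-trained model

Start bert-serving-server, then call bert-serving-client to encode every character in the character dictionary

If you do not use BERT, you can instead randomly initialize a NumPy matrix as the initial vector representation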
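The following minimal NumPy sketch shows the random-initialization path and what the embedding layer later does with the matrix: it looks up one row per character id. The vocabulary size, the ids and the helper name `random_char_embedding` are hypothetical. With BERT the same lookup applies, but the matrix comes from `BertClient().encode(...)`, and its width is fixed by the model (768 for BERT-Base).

```python
import numpy as np


def random_char_embedding(vocab_size, embedding_dim, seed=0):
    """Random-uniform init in [-0.25, 0.25), cast to float32 as the TF graph expects."""
    rng = np.random.RandomState(seed)
    return rng.uniform(-0.25, 0.25, (vocab_size, embedding_dim)).astype(np.float32)


# Hypothetical ids: two sentences padded to length 4 with id 0
ids = np.array([[2, 3, 4, 0],
                [5, 2, 0, 0]])
matrix = random_char_embedding(vocab_size=6, embedding_dim=8)
vectors = matrix[ids]   # row lookup, which is what tf.nn.embedding_lookup computes: shape (2, 4, 8)
```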

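3. A BiLSTM learns features over the character sequence. Because it runs in both directions, it captures the left and right context of every position.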
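The sketch below shows what "bidirectional" means at the array level. It runs a plain tanh RNN forward and backward over one padded sentence and concatenates the two state sequences. The tanh RNN is a simplification standing in for the LSTM cell. As with `sequence_length` in `tf.nn.bidirectional_dynamic_rnn`, the backward pass starts at the last real character rather than at the padding, and padded positions output zeros. Function names and sizes are illustrative.

```python
import numpy as np


def rnn_pass(x, length, Wx, Wh, b):
    """Simple tanh RNN over the first `length` steps of x (T, D); padded steps stay zero."""
    T, H = x.shape[0], Wh.shape[0]
    out = np.zeros((T, H))
    h = np.zeros(H)
    for t in range(length):
        h = np.tanh(x[t] @ Wx + h @ Wh + b)
        out[t] = h
    return out


def birnn(x, length, fw_params, bw_params):
    """Concatenate a forward pass with a backward pass that starts at the last real token."""
    out_fw = rnn_pass(x, length, *fw_params)
    rev = x.copy()
    rev[:length] = x[:length][::-1]                      # reverse only the valid part, like tf.reverse_sequence
    out_bw = rnn_pass(rev, length, *bw_params)
    out_bw[:length] = out_bw[:length][::-1].copy()      # restore the original time order
    return np.concatenate([out_fw, out_bw], axis=-1)    # (T, 2H), like tf.concat([out_fw, out_bw], -1)
```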

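Main steps for building the BiLSTM model with TensorFlow 1.14:

Build a static computation graph: input layer (placeholders for the input data) → embedding layer (BERT or random) → BiLSTM layer (computes the outputs) → softmax_or_crf layer (computes the tag scores) → loss layer (defines the loss function and computes the loss) → optimizer layer (defines how the loss is minimized: Adam/RMSProp/…) → variable initialization layer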
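The optimizer layer in the code clips each gradient element-wise with `tf.clip_by_value` and then applies Adam. Below is a NumPy sketch of one such step using the textbook Adam update. TensorFlow's `AdamOptimizer` computes the same update but folds the bias correction into the step size, so results can differ slightly in how epsilon enters. The hyperparameter values are common defaults, assumed here.

```python
import numpy as np


def clip_by_value(grad, clip):
    """Element-wise clipping to [-clip, clip], as tf.clip_by_value does."""
    return np.clip(grad, -clip, clip)


def adam_step(param, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One textbook Adam update (Kingma & Ba); t starts at 1. Returns new (param, m, v)."""
    m = beta1 * m + (1 - beta1) * grad          # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad ** 2     # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)                # bias correction for the zero init
    v_hat = v / (1 - beta2 ** t)
    return param - lr * m_hat / (np.sqrt(v_hat) + eps), m, v
```

On the first step, `m_hat / sqrt(v_hat)` is roughly the sign of the gradient. Each nonzero-gradient parameter therefore moves by about `lr`, no matter how large the raw gradient was.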

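Here, the softmax_or_crf layer performs the final classification with either softmax or a CRF.

With softmax as the last layer, each position picks the tag with the highest softmax probability among the N tags. The tag chosen at the previous position is not taken into account.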
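A minimal NumPy sketch of the softmax path follows. Decoding is a per-position argmax, and the cross-entropy loss is averaged over real (unpadded) characters only, which is what `tf.sequence_mask` plus `tf.boolean_mask` achieve in the loss layer. The shapes and toy values are assumptions for illustration.

```python
import numpy as np


def softmax(logits):
    z = logits - logits.max(axis=-1, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def softmax_decode_and_loss(logits, labels, lengths):
    """logits: (B, T, N); labels: (B, T) ints; lengths: (B,). Padded steps are ignored."""
    probs = softmax(logits)
    pred = probs.argmax(axis=-1)                                   # each position decided independently
    mask = np.arange(logits.shape[1])[None, :] < lengths[:, None]  # like tf.sequence_mask
    nll = -np.log(np.take_along_axis(probs, labels[..., None], axis=-1)[..., 0])
    return pred, nll[mask].mean()                                  # mean over real tokens only


logits = np.array([[[2.0, 0.0], [0.0, 3.0], [5.0, 0.0]]])
labels = np.array([[0, 1, 1]])                  # the last position is padding
pred, loss = softmax_decode_and_loss(logits, labels, np.array([2]))
```

The wrong-looking label at the padded third position does not affect the loss, because the mask removes it.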

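With a CRF as the last layer, each position also takes into account the transition score from the previous position's tag to its own tag when choosing among the N tags. At decoding time, the Viterbi algorithm combines each position's tag scores with the tag-to-tag transition scores to find the best tag sequence for the whole sentence.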
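The CRF pieces can be checked on a toy problem. The sketch assumes the same scoring as `tf.contrib.crf`. A path's score is the sum of per-position emission scores (the BiLSTM outputs) and tag-to-tag transition scores, with no separate start or end transitions. `log_partition` is the forward algorithm, i.e. the normalizer in `crf_log_likelihood`, whose log-likelihood equals `path_score - log_partition`. `viterbi` finds the best path. Names are illustrative.

```python
import numpy as np


def path_score(emissions, transitions, tags):
    """Score of one tag path: emission scores plus transitions between consecutive tags."""
    score = emissions[0, tags[0]]
    for t in range(1, len(tags)):
        score += transitions[tags[t - 1], tags[t]] + emissions[t, tags[t]]
    return score


def log_partition(emissions, transitions):
    """log(sum over all N**T paths of exp(path_score)), via the forward algorithm."""
    alpha = emissions[0].copy()                                          # (N,)
    for t in range(1, len(emissions)):
        scores = alpha[:, None] + transitions + emissions[t][None, :]   # (N_prev, N_cur)
        m = scores.max(axis=0)
        alpha = m + np.log(np.exp(scores - m).sum(axis=0))               # stable log-sum-exp over previous tag
    m = alpha.max()
    return m + np.log(np.exp(alpha - m).sum())


def viterbi(emissions, transitions):
    """Highest-scoring tag path and its score (dynamic programming with back-pointers)."""
    T, N = emissions.shape
    score = emissions[0].copy()
    backptr = np.zeros((T, N), dtype=int)
    for t in range(1, T):
        cand = score[:, None] + transitions        # (N_prev, N_cur)
        backptr[t] = cand.argmax(axis=0)           # best previous tag for each current tag
        score = cand.max(axis=0) + emissions[t]
    best = [int(score.argmax())]
    for t in range(T - 1, 0, -1):
        best.append(int(backptr[t][best[-1]]))
    return best[::-1], float(score.max())
```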

| Dataset | Characters | Sentences | PER | LOC | ORG |
|---|---|---|---|---|---|
| Train | 1000043 | 20864 | 8144 | 16571 | 9277 |
| Dev | 112187 | 2318 | 884 | 1951 | 984 |
| Test | 223832 | 4636 | 1864 | 3658 | 6185 |

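The dataset is Chinese. Thanks to the blog post "命名实体识别训练集汇总(一直更新)" (a continuously updated roundup of NER training sets) for the corpus link.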

epoch=20

loss:

Code:


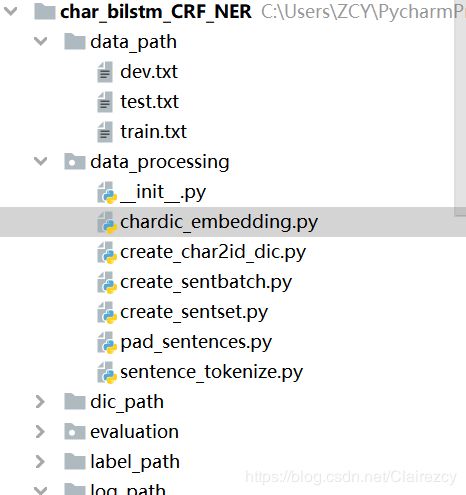

1. data_processing: the data processing module:

Key point: generating the character vectors

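`chardic_embedding` builds its matrix from `list(char_dic.keys())`, so row *i* holds the vector of the *i*-th key. That only lines up with `tf.nn.embedding_lookup` if each key's id equals its position. The `create_char2id_dic` module is not shown. Below is a hypothetical dictionary builder that satisfies this property, with assumed `<PAD>`/`<UNK>` entries, plus a character-to-id tokenizer.

```python
from collections import Counter


def build_char2id(sentences, min_count=1):
    """Hypothetical char -> id dictionary; ids 0 and 1 are reserved for padding and unknown chars.

    Keys are inserted in id order, so list(char2id.keys())[i] has id i.
    """
    counts = Counter(ch for sent in sentences for ch in sent)
    char2id = {'<PAD>': 0, '<UNK>': 1}
    for ch, c in sorted(counts.items(), key=lambda kv: (-kv[1], kv[0])):  # frequent chars first
        if c >= min_count:
            char2id[ch] = len(char2id)
    return char2id


def tokenize(sentence, char2id):
    """Map each character to its id, falling back to <UNK> for unseen characters."""
    return [char2id.get(ch, char2id['<UNK>']) for ch in sentence]


d = build_char2id(['北京欢迎你', '北京'])
```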

chardic_embedding.py:

```python
from run.parameters_set import args
import numpy as np
from data_processing.create_char2id_dic import char_dic
from bert_serving.client import BertClient

# Local copy of Google's BERT-Base Chinese model (used as model_dir when starting bert-serving-server)
bert_root = 'C:\\Users\\ZCY\\Anaconda3\\NLP_BERT\\chinese_L-12_H-768_A-12\\'
bert_config_file = bert_root + 'bert_config.json'  # not used below


def chardic_embedding():
    """Return one embedding row per character in char_dic (row i = character with id i)."""
    embedding_dim = args.embedding_dim
    if args.char_embedding_init == 'random':
        embedding_matrix = np.random.uniform(-0.25, 0.25, (len(char_dic), embedding_dim))
        embedding_matrix = np.float32(embedding_matrix)
    elif args.char_embedding_init == 'BERT':
        # Needs a running bert-serving-server. BERT-Base gives 768-d and BERT-Large 1024-d vectors;
        # the size is fixed by the model (args.embedding_dim is ignored). To change it, add a hidden layer.
        embedding_matrix = BertClient().encode(list(char_dic.keys()))
    else:
        raise ValueError("char_embedding_init must be 'random' or 'BERT', got %r" % args.char_embedding_init)
    return embedding_matrix
```

2. Model module (BiLSTM + softmax/CRF):

```python
import tensorflow as tf
from tensorflow.contrib.rnn import LSTMCell
from tensorflow.contrib.crf import crf_log_likelihood
from tensorflow.contrib.layers import xavier_initializer
from data_processing.create_sentset import tag2id
from data_processing.create_char2id_dic import char_dic
from data_processing.chardic_embedding import chardic_embedding
from run.parameters_set import model_path


class Bilstm_CRF(object):
    def __init__(self, args):
        self.hidden_dim = args.hidden_dim
        self.epoch_num = args.epochs
        self.optimizer = args.optimizer
        self.learning_rate = args.learning_rate
        self.gradient_clipping = args.gradient_clipping
        self.dropout_rate = args.dropout  # used as the dropout *keep* probability, as in the original call
        self.CRF_SOFTMAX = args.CRF_SOFTMAX
        self.embedding = chardic_embedding()
        self.char_dic = char_dic
        self.tag2id = tag2id
        self.num_tags = len(tag2id)
        self.model_path = model_path

    def computing_graph(self):
        self.input_placeholder()
        self.embedding_layer()
        self.bilstm_layer()
        self.softmax_or_crf()
        self.loss_operation()
        self.optimize_operation()
        self.init_operation()

    def input_placeholder(self):
        self.sent_token_pad_set = tf.placeholder(tf.int32, shape=[None, None], name='padded_tokenized_sentences_dataset')
        self.tag_label_pad_set = tf.placeholder(tf.int32, shape=[None, None], name='padded_labeled_tags_dataset')
        self.sent_original_length = tf.placeholder(tf.int32, shape=[None], name='original_sentence_length')
        # Defaults to the training keep probability; feed 1.0 when predicting/validating
        # (e.g. in utils.predict) so dropout is switched off at inference time
        self.dropout_keep_prob = tf.placeholder_with_default(float(self.dropout_rate), shape=[], name='dropout_keep_prob')

    def embedding_layer(self):
        with tf.variable_scope('char_embedding'):
            chardic_embedding_variable = tf.Variable(self.embedding, dtype=tf.float32, trainable=True, name='char_embedding_dic')
            char_embedding_variable = tf.nn.embedding_lookup(params=chardic_embedding_variable, ids=self.sent_token_pad_set, name='char_embedding')
        self.char_embedding = tf.nn.dropout(char_embedding_variable, rate=1.0 - self.dropout_keep_prob)

    def bilstm_layer(self):
        cell_forward = LSTMCell(self.hidden_dim)
        cell_backward = LSTMCell(self.hidden_dim)
        # sequence_length keeps padding out of both directions
        (out_fw, out_bw), _ = tf.nn.bidirectional_dynamic_rnn(cell_forward, cell_backward,
                                                              inputs=self.char_embedding,
                                                              sequence_length=self.sent_original_length,
                                                              dtype=tf.float32)
        output = tf.concat([out_fw, out_bw], axis=-1)  # [batch, max_len, 2*hidden_dim]
        output = tf.nn.dropout(output, rate=1.0 - self.dropout_keep_prob)
        max_len = tf.shape(output)[1]
        output = tf.reshape(output, shape=[-1, 2 * self.hidden_dim])
        with tf.variable_scope('Denselayer_prameters'):
            W = tf.get_variable(name='W', shape=[2 * self.hidden_dim, self.num_tags],
                                initializer=xavier_initializer(), dtype=tf.float32)
            b = tf.get_variable(name='b', shape=[self.num_tags], initializer=tf.zeros_initializer(), dtype=tf.float32)
            pred = tf.matmul(output, W) + b
        # Unnormalized tag scores (softmax logits / CRF emission scores): [batch, max_len, num_tags]
        self.pred_tag_label = tf.reshape(pred, shape=[-1, max_len, self.num_tags])

    def loss_operation(self):
        if self.CRF_SOFTMAX == 'CRF':
            # Per-sentence log-likelihoods plus the learned [num_tags, num_tags] transition matrix
            self.CRF_log_likelihood, self.CRF_transition_matrix = crf_log_likelihood(
                inputs=self.pred_tag_label,
                tag_indices=self.tag_label_pad_set,
                sequence_lengths=self.sent_original_length)
            self.loss = -tf.reduce_mean(self.CRF_log_likelihood)
        else:
            loss = tf.nn.sparse_softmax_cross_entropy_with_logits(labels=self.tag_label_pad_set, logits=self.pred_tag_label)
            # maxlen must equal the padded width, otherwise boolean_mask fails when the
            # longest sentence in a batch is shorter than the padding length
            mask = tf.sequence_mask(lengths=self.sent_original_length, maxlen=tf.shape(self.tag_label_pad_set)[1])
            loss = tf.boolean_mask(loss, mask)
            self.loss = tf.reduce_mean(loss)

    def softmax_or_crf(self):
        # The CRF branch adds nothing here: its tags are decoded with Viterbi
        # (e.g. tf.contrib.crf.viterbi_decode) from the logits and CRF_transition_matrix
        if self.CRF_SOFTMAX != 'CRF':
            self.softmax_labels = tf.cast(tf.argmax(self.pred_tag_label, axis=-1), tf.int32)

    def optimize_operation(self):
        with tf.variable_scope('optimizer'):
            if self.optimizer == 'Adam':  # Adam/RMSProp/Adagrad/Momentum/SGD
                optim = tf.train.AdamOptimizer(learning_rate=self.learning_rate)
            elif self.optimizer == 'RMSProp':
                optim = tf.train.RMSPropOptimizer(learning_rate=self.learning_rate)
            elif self.optimizer == 'Adagrad':
                optim = tf.train.AdagradOptimizer(learning_rate=self.learning_rate)
            elif self.optimizer == 'Momentum':
                optim = tf.train.MomentumOptimizer(learning_rate=self.learning_rate, momentum=0.9)  # momentum value assumed
            else:  # 'SGD'
                optim = tf.train.GradientDescentOptimizer(learning_rate=self.learning_rate)
            grads_and_vars = optim.compute_gradients(self.loss)
            # Element-wise clipping to [-gradient_clipping, gradient_clipping]; skip variables without a gradient
            grads_and_vars_clipped = [(tf.clip_by_value(grad, -self.gradient_clipping, self.gradient_clipping), var)
                                      for grad, var in grads_and_vars if grad is not None]
            self.optimize_op = optim.apply_gradients(grads_and_vars_clipped)

    def init_operation(self):
        self.init_op = tf.global_variables_initializer()
```

3. utils: helper modules for training

Key point: the training and validation procedure for each epoch
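`run_one_epoch` relies on `pad_sentences` and `create_sent_batch` from `data_processing`, and their code is not shown. The sketch below illustrates what such helpers need to do. They pad ids and tags to a common length, keep the true lengths (fed to `sent_original_length`), and cut the data into ordered batches. The names `pad_to_max_len` and `iter_batches`, the padding id 0 and the `batch_size` argument are all assumptions.

```python
def pad_to_max_len(token_tag_pairs, pad_id=0, pad_tag=0):
    """Hypothetical: pad ids and tags to the longest sentence; return (sents, tags, lengths).

    Padded tag values never reach the loss: the model masks positions beyond each length.
    """
    max_len = max(len(sent) for sent, _ in token_tag_pairs)
    sents, tags, lengths = [], [], []
    for sent, tag in token_tag_pairs:
        n = len(sent)
        sents.append(sent + [pad_id] * (max_len - n))
        tags.append(tag + [pad_tag] * (max_len - n))
        lengths.append(n)
    return sents, tags, lengths


def iter_batches(dataset, batch_size):
    """Hypothetical: yield (sents, tags, lengths) batches in order; the last batch may be smaller."""
    for i in range(0, len(dataset), batch_size):
        chunk = dataset[i:i + batch_size]
        yield [s for s, _, _ in chunk], [t for _, t, _ in chunk], [n for _, _, n in chunk]


pairs = [([5, 6, 7], [1, 2, 0]), ([8], [3])]
sents, tags, lengths = pad_to_max_len(pairs)
batches = list(iter_batches(list(zip(sents, tags, lengths)), batch_size=1))
```

The batch count matches the ceiling division used for `train_num_batches`: `(2 + 1 - 1) // 1 == 2`.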

run_one_epoch.py

```python
import os
import sys
import time

from data_processing.create_sentbatch import create_sent_batch
from data_processing.create_sentset import tag2id
from data_processing.pad_sentences import pad_sentences
from data_processing.sentence_tokenize import sentence_tokenize
from run.parameters_set import args, model_path
from utils.create_feed_dict import create_feed_dict
from utils.keep_log import keep_log
from utils.predict import predict
from evaluation.evaluate import evaluate


def run_one_epoch(model, sess, trainset, devset, epoch, saver):
    starttime = time.strftime('%Y-%m-%d %H:%M:%S', time.localtime())
    # Map characters and tags to ids
    train_sentset_token = [(sentence_tokenize(sent_list), [tag2id[tag] for tag in tag_list])
                           for (sent_list, tag_list) in trainset]
    dev_sentset = [sent_list for (sent_list, _) in devset]
    dev_sentset_token = [(sentence_tokenize(sent_list), [tag2id[tag] for tag in tag_list])
                         for (sent_list, tag_list) in devset]
    # Pad to a common length and keep the original sentence lengths
    train_sentset_pad, train_tagset_pad, train_sent_length_list = pad_sentences(train_sentset_token)
    dev_sentset_pad, dev_tagset_pad, dev_sent_length_list = pad_sentences(dev_sentset_token)

    train_dataset_pad = list(zip(train_sentset_pad, train_tagset_pad, train_sent_length_list))
    train_sent_batches = create_sent_batch(train_dataset_pad)
    train_num_batches = (len(train_dataset_pad) + args.batch_size - 1) // args.batch_size  # ceiling division

    dev_dataset_pad = list(zip(dev_sentset_pad, dev_tagset_pad, dev_sent_length_list))
    dev_sent_batches = create_sent_batch(dev_dataset_pad)

    for step, (sent_list, tag_list, length_list) in enumerate(train_sent_batches):
        feed_dict = create_feed_dict(model, sent_list, length_list, tag_list)
        # Run the update op together with the loss; fetching model.loss alone never trains the model
        _, train_loss = sess.run([model.optimize_op, model.loss], feed_dict=feed_dict)
        if step % 300 == 0 or step + 1 == train_num_batches:
            sys.stdout.write('{} batch in {} batches\n'.format(step + 1, train_num_batches))
            keep_log().info('{} epoch: {} step:{} loss:{}'.format(starttime, epoch, step, train_loss))
        if step + 1 == train_num_batches:
            saver.save(sess, os.path.join(model_path, 'model'))

    keep_log().info('------------------validation--------------')
    # Predictions are matched to dev_tagset_pad by position, which assumes
    # create_sent_batch keeps the dev set in its original order
    dev_pred_label_list = []
    for sent_list, tag_list, length_list in dev_sent_batches:
        pred_label_batch = predict(model, sess, sent_list, length_list)
        dev_pred_label_list.extend(pred_label_batch)
    evaluate(dev_sentset, dev_tagset_pad, dev_pred_label_list)
```

4. evaluate: the model evaluation module:

Key point: evaluation is done with the Perl script conlleval_rev.pl:

conlleval.py

```python
import os

from run.parameters_set import label_toeval_path, perl_metric_path


def conlleval(char_label_pred_set):
    # conlleval_rev.pl is expected in the parent of the current working directory
    conlleval_perl_path = os.path.join(os.path.abspath('..'), 'conlleval_rev.pl')
    label_file = os.path.join(label_toeval_path, 'label_toeval')
    metric_file = os.path.join(perl_metric_path, 'per_metric')

    # One "char gold_tag pred_tag" line per character
    line_toeval = []
    for char, tag, tag_pred in char_label_pred_set:
        # Kept from the original code: the outside tag 'O' is written as '0'
        tag = '0' if tag == 'O' else tag
        tag_pred = '0' if tag_pred == 'O' else tag_pred
        line_toeval.append('{} {} {}\n'.format(char, tag, tag_pred))
    line_toeval.append('\n')  # a blank line marks the end of a sentence for the scorer
    with open(label_file, 'w', encoding='utf-8') as fw:
        fw.writelines(line_toeval)

    # Run the Perl scorer; paths are quoted in case they contain spaces
    os.system('perl "{}" < "{}" > "{}"'.format(conlleval_perl_path, label_file, metric_file))
    with open(metric_file, encoding='utf-8') as fr:
        perl_metrics = [line.strip() for line in fr]
    return perl_metrics
```
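If Perl is not available, overall entity-level scores can be approximated in pure Python. An entity counts as correct only when its type, start and end all match. This is a simplified sketch, not a reimplementation of conlleval_rev.pl. It reports micro-averaged scores only, and its handling of ill-formed tag sequences (an `I-` tag with no matching `B-`) is an assumption.

```python
def bio_spans(tags):
    """(type, start, end) spans from BIO tags, end exclusive; a stray I- tag starts a new entity."""
    spans, cur, start = [], None, 0
    for i, tag in enumerate(list(tags) + ['O']):   # sentinel 'O' closes a trailing entity
        if tag == 'O' or tag.startswith('B-') or tag[2:] != cur:
            if cur is not None:
                spans.append((cur, start, i))
            cur, start = (None, i) if tag == 'O' else (tag[2:], i)
    return spans


def entity_prf(gold_sents, pred_sents):
    """Micro precision/recall/F1 over exact-match entities, summed over all sentences."""
    correct = n_gold = n_pred = 0
    for gold, pred in zip(gold_sents, pred_sents):
        g, p = set(bio_spans(gold)), set(bio_spans(pred))
        correct += len(g & p)
        n_gold += len(g)
        n_pred += len(p)
    precision = correct / n_pred if n_pred else 0.0
    recall = correct / n_gold if n_gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


gold = [['B-PER', 'I-PER', 'O', 'B-LOC', 'I-LOC'], ['B-ORG', 'I-ORG', 'O']]
pred = [['B-PER', 'I-PER', 'O', 'B-LOC', 'O'], ['B-ORG', 'I-ORG', 'O']]
p, r, f = entity_prf(gold, pred)
```

Here the truncated LOC prediction counts as a miss. Two of the three entities match, so precision, recall and F1 are all 2/3.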