ResNeSt: The Strongest Improved Version of ResNet

Paper: https://hangzhang.org/files/resnest.pdf

GitHub: https://github.com/zhanghang1989/ResNeSt

1. First, the authors: all heavyweights in the field. Many thanks to them for such a strong network, and for open-sourcing it.

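2. Next, the network's performance

It delivers gains in image classification, object detection and image segmentation, which points to very strong generalization.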

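In image classification on ImageNet, ResNeSt outperforms its predecessors ResNet, ResNeXt and SENet, as well as EfficientNet. Faster-RCNN with a ResNeSt-50 backbone reaches a 3.08% higher mAP on MS-COCO than with ResNet-50. DeeplabV3 with a ResNeSt-50 backbone reaches a 3.02% higher mIoU on ADE20K than with ResNet-50. The gains are substantial.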

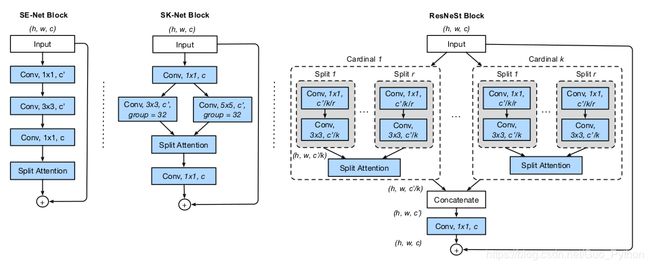

3. Straight to the key part: the Split-Attention module

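From left to right are the block structures of SENet, SKNet and ResNeSt. At its core, Split-Attention is an attention mechanism over feature-map splits. The features are divided into several splits, and attention weights computed from their fused global context decide how the splits are combined.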
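To make the idea concrete, here is a minimal PyTorch sketch of Split-Attention for a single cardinal group (cardinality = 1). It is a simplified illustration, not the official `SplAtConv2d`. The class name `SplitAttentionSketch` is hypothetical. The choices `reduction=4` and a minimum of 32 hidden channels are assumptions, picked so the layer sizes mirror the printed ResNeSt structure (for example `fc1` 64 to 32 and `fc2` 32 to 128). The module works in four steps:

- One grouped 3x3 conv produces all `radix` splits.
- The splits are summed and globally pooled.
- Two 1x1 convs produce per-split, per-channel scores.
- A softmax across the splits weights them back together.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class SplitAttentionSketch(nn.Module):
    """Hypothetical, simplified Split-Attention conv for one cardinal group."""

    def __init__(self, in_channels, channels, radix=2, reduction=4):
        super().__init__()
        self.radix = radix
        self.channels = channels
        inter = max(channels * radix // reduction, 32)  # assumed hidden width rule
        # One grouped 3x3 conv computes all `radix` splits at once.
        self.conv = nn.Conv2d(in_channels, channels * radix, 3, padding=1,
                              groups=radix, bias=False)
        self.bn0 = nn.BatchNorm2d(channels * radix)
        # Two 1x1 convs play the role of the fully connected layers.
        self.fc1 = nn.Conv2d(channels, inter, 1)
        self.bn1 = nn.BatchNorm2d(inter)
        self.fc2 = nn.Conv2d(inter, channels * radix, 1)

    def forward(self, x):
        b = x.size(0)
        x = F.relu(self.bn0(self.conv(x)))
        splits = torch.split(x, self.channels, dim=1)      # radix tensors (B, C, H, W)
        gap = F.adaptive_avg_pool2d(sum(splits), 1)          # fuse splits, global pool
        attn = self.fc2(F.relu(self.bn1(self.fc1(gap))))     # (B, C * radix, 1, 1)
        if self.radix > 1:
            # Softmax across the radix axis, independently for every channel.
            attn = F.softmax(attn.view(b, self.radix, self.channels), dim=1)
        else:
            # A single split degenerates to an SE-style sigmoid gate.
            attn = torch.sigmoid(attn).view(b, 1, self.channels)
        attn = attn.view(b, self.radix, self.channels, 1, 1)
        # Attention-weighted sum of the splits.
        return sum(attn[:, i] * splits[i] for i in range(self.radix))


block = SplitAttentionSketch(64, 64, radix=2).eval()
print(block(torch.randn(2, 64, 8, 8)).shape)  # torch.Size([2, 64, 8, 8])
```

The example calls `.eval()` so the BatchNorm inside the attention branch uses running statistics; in training mode it needs a batch size greater than 1.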

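The implementation details of each cardinal group. A tip: reading the code makes it much clearer! Doesn't it look like several SENets combined? Ha ha...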
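The "several SENets" intuition shows up in how the gating weights are normalized. The pure-Python sketch below (hypothetical function `r_softmax`) handles one cardinal group:

- With a single split (`radix == 1`), each channel gets an independent sigmoid gate, as in SENet.
- With several splits, each channel's scores are softmax-normalized across the splits, so the split weights for that channel sum to 1.

The list-of-lists layout is an assumption made for readability.

```python
import math


def r_softmax(logits, radix):
    """Gating weights for one cardinal group.

    logits[r][c] is the attention score of split r for channel c.
    Returns weights with the same [radix][channels] layout.
    """
    if radix == 1:
        # Single split: SE-style sigmoid gate per channel.
        return [[1.0 / (1.0 + math.exp(-z)) for z in logits[0]]]
    channels = len(logits[0])
    weights = [[0.0] * channels for _ in range(radix)]
    for c in range(channels):
        m = max(logits[r][c] for r in range(radix))  # subtract max for stability
        exps = [math.exp(logits[r][c] - m) for r in range(radix)]
        total = sum(exps)
        for r in range(radix):
            weights[r][c] = exps[r] / total
    return weights


w = r_softmax([[0.0, 2.0], [0.0, -2.0]], radix=2)
print(w[0][0], w[1][0])               # 0.5 0.5
print(r_softmax([[0.0]], radix=1))    # [[0.5]]
```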

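4. The open-source models start at ResNeSt50. So how do you get a ResNeSt18? Here is my answer.

Add the function below to the official model code and you can call ResNeSt18 directly. Simple, right? Note that it uses Bottleneck blocks (three convs each). Under the usual ResNet layer counting, the `[2, 2, 2, 2]` layout therefore gives a 26-layer network; the name "ResNeSt18" only borrows ResNet18's blocks-per-stage layout.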

```python
def resnest18(pretrained=False, root='~/.encoding/models', **kwargs):
    model = ResNet(Bottleneck, [2, 2, 2, 2],
                   radix=2, groups=1, bottleneck_width=64,
                   deep_stem=True, stem_width=32, avg_down=True,
                   avd=True, avd_first=False, **kwargs)
    if pretrained:
        # No official ResNeSt18 weights exist, so load the ResNeSt50 weights
        # and copy only the entries whose names and shapes match this model.
        weight = torch.hub.load_state_dict_from_url(
            resnest_model_urls['resnest50'], progress=True, check_hash=True)
        model_dict = model.state_dict()
        for k, v in weight.items():
            if k in model_dict and v.shape == model_dict[k].shape:
                model_dict[k] = v
        model.load_state_dict(model_dict)
    return model
```

5. Test it
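Before running it, it helps to know how much of ResNeSt50 the name-matching loop actually reuses. The pure-Python sketch below uses a hypothetical helper, `reused_blocks`. It assumes PyTorch's standard `layer{stage}.{block}` key prefixes, as seen in the printed structure.

- **Copied:** the stem, the final `fc`, and blocks with the same index in each stage, since they share names and shapes.
- **Discarded:** the remaining ResNeSt50 blocks.

As a result, the "pretrained" ResNeSt18 starts from only part of ResNeSt50 and will most likely need fine-tuning.

```python
def reused_blocks(src_layers, dst_layers):
    """Split the source network's residual blocks into reused and dropped ones.

    src_layers / dst_layers: blocks per stage, e.g. [3, 4, 6, 3] for ResNeSt50.
    A block is reused when the destination stage also has that block index.
    """
    kept, dropped = [], []
    for stage, (n_src, n_dst) in enumerate(zip(src_layers, dst_layers), start=1):
        for i in range(n_src):
            prefix = f"layer{stage}.{i}"
            (kept if i < n_dst else dropped).append(prefix)
    return kept, dropped


kept, dropped = reused_blocks([3, 4, 6, 3], [2, 2, 2, 2])
print(len(kept), len(dropped))  # 8 8
print(dropped)
```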

The complete code looks like this:

```python
##+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++
## Created by: Hang Zhang
## Copyright (c) 2020
##
## LICENSE file in the root directory of this source tree
##+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++
"""ResNeSt models"""

import torch

from resnet import ResNet, Bottleneck

__all__ = ['resnest18', 'resnest50', 'resnest101', 'resnest200', 'resnest269']

_url_format = 'https://hangzh.s3.amazonaws.com/encoding/models/{}-{}.pth'

_model_sha256 = {name: checksum for checksum, name in [
    ('528c19ca', 'resnest50'),
    ('22405ba7', 'resnest101'),
    ('75117900', 'resnest200'),
    ('0cc87c48', 'resnest269'),
]}


def short_hash(name):
    if name not in _model_sha256:
        raise ValueError('Pretrained model for {name} is not available.'.format(name=name))
    return _model_sha256[name][:8]


resnest_model_urls = {name: _url_format.format(name, short_hash(name))
                      for name in _model_sha256.keys()}


# Added by the blogger (not part of the official code)
def resnest18(pretrained=False, root='~/.encoding/models', **kwargs):
    model = ResNet(Bottleneck, [2, 2, 2, 2],
                   radix=2, groups=1, bottleneck_width=64,
                   deep_stem=True, stem_width=32, avg_down=True,
                   avd=True, avd_first=False, **kwargs)
    if pretrained:
        # No official ResNeSt18 weights exist, so load the ResNeSt50 weights
        # and copy only the entries whose names and shapes match this model.
        weight = torch.hub.load_state_dict_from_url(
            resnest_model_urls['resnest50'], progress=True, check_hash=True)
        model_dict = model.state_dict()
        for k, v in weight.items():
            if k in model_dict and v.shape == model_dict[k].shape:
                model_dict[k] = v
        model.load_state_dict(model_dict)
    return model


def resnest50(pretrained=False, root='~/.encoding/models', **kwargs):
    model = ResNet(Bottleneck, [3, 4, 6, 3],
                   radix=2, groups=1, bottleneck_width=64,
                   deep_stem=True, stem_width=32, avg_down=True,
                   avd=True, avd_first=False, **kwargs)
    if pretrained:
        model.load_state_dict(torch.hub.load_state_dict_from_url(
            resnest_model_urls['resnest50'], progress=True, check_hash=True))
    return model


def resnest101(pretrained=False, root='~/.encoding/models', **kwargs):
    model = ResNet(Bottleneck, [3, 4, 23, 3],
                   radix=2, groups=1, bottleneck_width=64,
                   deep_stem=True, stem_width=64, avg_down=True,
                   avd=True, avd_first=False, **kwargs)
    if pretrained:
        model.load_state_dict(torch.hub.load_state_dict_from_url(
            resnest_model_urls['resnest101'], progress=True, check_hash=True))
    return model


def resnest200(pretrained=False, root='~/.encoding/models', **kwargs):
    model = ResNet(Bottleneck, [3, 24, 36, 3],
                   radix=2, groups=1, bottleneck_width=64,
                   deep_stem=True, stem_width=64, avg_down=True,
                   avd=True, avd_first=False, **kwargs)
    if pretrained:
        model.load_state_dict(torch.hub.load_state_dict_from_url(
            resnest_model_urls['resnest200'], progress=True, check_hash=True))
    return model


def resnest269(pretrained=False, root='~/.encoding/models', **kwargs):
    model = ResNet(Bottleneck, [3, 30, 48, 8],
                   radix=2, groups=1, bottleneck_width=64,
                   deep_stem=True, stem_width=64, avg_down=True,
                   avd=True, avd_first=False, **kwargs)
    if pretrained:
        model.load_state_dict(torch.hub.load_state_dict_from_url(
            resnest_model_urls['resnest269'], progress=True, check_hash=True))
    return model


if __name__ == "__main__":
    net = resnest18(pretrained=True)
    print(net)
```

Run it. This loads the pretrained weights (partially, from ResNeSt50), and the ResNeSt18 network structure is printed as follows:

```
ResNet(
  (conv1): Sequential(
    (0): Conv2d(3, 32, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
    (1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU(inplace=True)
    (3): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
    (4): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (5): ReLU(inplace=True)
    (6): Conv2d(32, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
  )
  (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (relu): ReLU(inplace=True)
  (maxpool): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)
  (layer1): Sequential(
    (0): Bottleneck(
      (conv1): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (conv2): SplAtConv2d(
        (conv): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=2, bias=False)
        (bn0): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(inplace=True)
        (fc1): Conv2d(64, 32, kernel_size=(1, 1), stride=(1, 1))
        (bn1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (fc2): Conv2d(32, 128, kernel_size=(1, 1), stride=(1, 1))
      )
      (conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (downsample): Sequential(
        (0): AvgPool2d(kernel_size=1, stride=1, padding=0)
        (1): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)
        (2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
    )
    (1): Bottleneck(
      (conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (conv2): SplAtConv2d(
        (conv): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=2, bias=False)
        (bn0): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(inplace=True)
        (fc1): Conv2d(64, 32, kernel_size=(1, 1), stride=(1, 1))
        (bn1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (fc2): Conv2d(32, 128, kernel_size=(1, 1), stride=(1, 1))
      )
      (conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
    )
  )
  (layer2): Sequential(
    (0): Bottleneck(
      (conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (avd_layer): AvgPool2d(kernel_size=3, stride=2, padding=1)
      (conv2): SplAtConv2d(
        (conv): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=2, bias=False)
        (bn0): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(inplace=True)
        (fc1): Conv2d(128, 64, kernel_size=(1, 1), stride=(1, 1))
        (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (fc2): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1))
      )
      (conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (downsample): Sequential(
        (0): AvgPool2d(kernel_size=2, stride=2, padding=0)
        (1): Conv2d(256, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)
        (2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
    )
    (1): Bottleneck(
      (conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (conv2): SplAtConv2d(
        (conv): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=2, bias=False)
        (bn0): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(inplace=True)
        (fc1): Conv2d(128, 64, kernel_size=(1, 1), stride=(1, 1))
        (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (fc2): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1))
      )
      (conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
    )
  )
  (layer3): Sequential(
    (0): Bottleneck(
      (conv1): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (avd_layer): AvgPool2d(kernel_size=3, stride=2, padding=1)
      (conv2): SplAtConv2d(
        (conv): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=2, bias=False)
        (bn0): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(inplace=True)
        (fc1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1))
        (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (fc2): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1))
      )
      (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (downsample): Sequential(
        (0): AvgPool2d(kernel_size=2, stride=2, padding=0)
        (1): Conv2d(512, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)
        (2): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
    )
    (1): Bottleneck(
      (conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (conv2): SplAtConv2d(
        (conv): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=2, bias=False)
        (bn0): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(inplace=True)
        (fc1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1))
        (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (fc2): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1))
      )
      (conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
    )
  )
  (layer4): Sequential(
    (0): Bottleneck(
      (conv1): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (avd_layer): AvgPool2d(kernel_size=3, stride=2, padding=1)
      (conv2): SplAtConv2d(
        (conv): Conv2d(512, 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=2, bias=False)
        (bn0): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(inplace=True)
        (fc1): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1))
        (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (fc2): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1))
      )
      (conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (downsample): Sequential(
        (0): AvgPool2d(kernel_size=2, stride=2, padding=0)
        (1): Conv2d(1024, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)
        (2): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
    )
    (1): Bottleneck(
      (conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (conv2): SplAtConv2d(
        (conv): Conv2d(512, 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=2, bias=False)
        (bn0): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(inplace=True)
        (fc1): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1))
        (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (fc2): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1))
      )
      (conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn3): Bat​chNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
    )
  )
  (avgpool): GlobalAvgPool2d()
  (fc): Linear(in_features=2048, out_features=1000, bias=True)
)
```
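The printed structure also makes the layer count easy to check. The sketch below uses a hypothetical helper, `count_weighted_layers`. It assumes the usual ResNet naming convention: the deep stem (three 3x3 convs) counts as one layer, every conv inside the residual blocks counts, and the final fully connected layer counts as one.

- This convention reproduces 50, 101, 200 and 269 for the official ResNeSt stage layouts.
- It gives 26 for the `[2, 2, 2, 2]` Bottleneck layout used for "ResNeSt18" above.

```python
def count_weighted_layers(blocks_per_stage, convs_per_block=3):
    """Stem (counted once) + convs in all residual blocks + final FC layer.

    Bottleneck blocks have 3 convs (1x1, 3x3, 1x1); a BasicBlock would have 2.
    """
    return 1 + convs_per_block * sum(blocks_per_stage) + 1


print(count_weighted_layers([3, 4, 6, 3]))                      # 50 (ResNeSt50)
print(count_weighted_layers([2, 2, 2, 2]))                      # 26 (the "ResNeSt18" above)
print(count_weighted_layers([2, 2, 2, 2], convs_per_block=2))   # 18 (ResNet18 with BasicBlock)
```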

It's that simple, so go swap out your ResNet! If you liked this post, please give it a like...

Related: https://www.cnblogs.com/xiximayou/p/12728644.html

机器之心 (Synced)