Flume: Custom Interceptor, Custom Source Reading from MySQL, Custom Sink

Contents

- Custom Interceptor

- Custom Source

- Custom Sink


Custom Interceptor

Scenario: Flume collects a server's local logs, and each type of log must be sent to a different analysis system.

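In real-world development, one server may produce many types of logs, and each type may need to go to a different analysis system. This calls for the Multiplexing structure of the Flume topology. Multiplexing looks at the value of a particular key in the event's Header and sends the event to the Channel mapped to that value. We therefore write a custom Interceptor that sets that Header key to a different value for each type of event.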
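The routing rule amounts to a lookup from one header value to a list of channels. The sketch below is a simplified, hypothetical model of that lookup. The class name MultiplexingRouter is made up, and this is not Flume's MultiplexingChannelSelector. Its three fields stand in for the `selector.header`, `selector.mapping.*` and `selector.default` settings of a multiplexing selector.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical, simplified model of multiplexing routing (not Flume's own selector class).
class MultiplexingRouter {
    private final String headerName;                 // like selector.header = isHello
    private final Map<String, List<String>> mapping; // like selector.mapping.hello = c1
    private final List<String> defaultChannels;      // like selector.default (may be empty)

    MultiplexingRouter(String headerName, Map<String, List<String>> mapping,
                       List<String> defaultChannels) {
        this.headerName = headerName;
        this.mapping = mapping;
        this.defaultChannels = defaultChannels;
    }

    // Returns the channels an event with these headers would be sent to.
    List<String> route(Map<String, String> headers) {
        String value = headers.get(headerName);
        List<String> channels = (value == null) ? null : mapping.get(value);
        return (channels != null) ? channels : defaultChannels;
    }

    public static void main(String[] args) {
        Map<String, List<String>> mapping = new HashMap<>();
        mapping.put("hello", Collections.singletonList("c1"));
        mapping.put("other", Collections.singletonList("c2"));
        MultiplexingRouter router =
                new MultiplexingRouter("isHello", mapping, Collections.<String>emptyList());

        System.out.println(router.route(Collections.singletonMap("isHello", "hello"))); // [c1]
        System.out.println(router.route(Collections.singletonMap("isHello", "other"))); // [c2]
        System.out.println(router.route(Collections.<String, String>emptyMap()));       // []
    }
}
```

In this model, an event whose header is missing or has no mapping falls back to the default list, which is empty here.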

Besides sending different types of logs to different places, an interceptor can also filter out specified events.
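Flume's Interceptor contract supports filtering directly. `intercept(Event)` may return null to drop an event, and the batch version leaves dropped events out of the list it returns. The Flume-free sketch below follows that contract. TextEvent and KeywordFilter are hypothetical stand-ins for `org.apache.flume.Event` and a real Interceptor implementation, and the keyword rule is only an example.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for org.apache.flume.Event: just a byte[] body.
class TextEvent {
    final byte[] body;

    TextEvent(String text) {
        this.body = text.getBytes(StandardCharsets.UTF_8);
    }

    String text() {
        return new String(body, StandardCharsets.UTF_8);
    }
}

// Hypothetical filter following the Interceptor contract: null means "drop this event".
class KeywordFilter {
    private final String keyword;

    KeywordFilter(String keyword) {
        this.keyword = keyword;
    }

    // Keep the event only if its body contains the keyword.
    TextEvent intercept(TextEvent event) {
        return event.text().contains(keyword) ? event : null;
    }

    // Batch version: dropped events are left out, so the output is never longer than the input.
    List<TextEvent> intercept(List<TextEvent> events) {
        List<TextEvent> kept = new ArrayList<>();
        for (TextEvent event : events) {
            TextEvent out = intercept(event);
            if (out != null) {
                kept.add(out);
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        List<TextEvent> batch = new ArrayList<>();
        batch.add(new TextEvent("ERROR disk full"));
        batch.add(new TextEvent("INFO service started"));
        for (TextEvent event : new KeywordFilter("ERROR").intercept(batch)) {
            System.out.println(event.text()); // ERROR disk full
        }
    }
}
```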

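In the example below, Flume1 listens on a port and uses a custom interceptor. If the received data contains the string "hello", the event is passed to Flume2. Otherwise the body is replaced with "good morning" and the event is passed to Flume3.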

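- Add the dependency:

```xml
<dependency>
    <groupId>org.apache.flume</groupId>
    <artifactId>flume-ng-core</artifactId>
    <version>1.9.0</version>
</dependency>
```

- Define a class that implements the Interceptor interface, and do not forget the nested Builder class. Then package it as a jar and put the jar in the lib directory under the Flume installation.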

```java
package flume;

import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

import org.apache.flume.Context;
import org.apache.flume.Event;
import org.apache.flume.interceptor.Interceptor;

public class DIYInterceptor implements Interceptor {

    // Reused result buffer for the batch version of intercept()
    private List<Event> list;

    @Override
    public void initialize() {
        list = new ArrayList<Event>();
    }

    @Override
    public Event intercept(Event event) {
        Map<String, String> header = event.getHeaders();
        String body = new String(event.getBody(), StandardCharsets.UTF_8);
        if (body.contains("hello")) {
            // Routed to c1 by selector.mapping.hello
            header.put("isHello", "hello");
        } else {
            // Routed to c2 by selector.mapping.other, with the body rewritten
            header.put("isHello", "other");
            event.setBody("good morning".getBytes(StandardCharsets.UTF_8));
        }
        event.setHeaders(header);
        return event;
    }

    @Override
    public List<Event> intercept(List<Event> events) {
        list.clear();
        for (Event event : events) {
            list.add(intercept(event));
        }
        return list;
    }

    @Override
    public void close() {}

    // Flume creates the interceptor through this Builder (type = flume.DIYInterceptor$Builder)
    public static class Builder implements Interceptor.Builder {
        @Override
        public void configure(Context context) {}

        @Override
        public Interceptor build() {
            return new DIYInterceptor();
        }
    }
}
```

- Write the configuration files flume1.conf, flume2.conf and flume3.conf.

In flume1, the source type is netcat and it listens on a port. The channel selector type is multiplexing. If the event's isHello header was set to "hello", channel c1 is selected; otherwise c2 is selected. Note that the interceptor type must be the fully qualified class name plus the nested Builder class. The sinks are of type avro and connect to the other two agents.

```properties
# Name the components on this agent
a1.sources = r1
a1.channels = c1 c2
a1.sinks = k1 k2

# Describe/configure the source
a1.sources.r1.type = netcat
a1.sources.r1.bind = master
a1.sources.r1.port = 33333

# Describe the channel selector
a1.sources.r1.selector.type = multiplexing
a1.sources.r1.selector.header = isHello
a1.sources.r1.selector.mapping.hello = c1
a1.sources.r1.selector.mapping.other = c2

# Describe the interceptor
a1.sources.r1.interceptors = i1
a1.sources.r1.interceptors.i1.type = flume.DIYInterceptor$Builder

# Describe the sink
a1.sinks.k1.type = avro
a1.sinks.k1.hostname = master
a1.sinks.k1.port = 44444
a1.sinks.k2.type = avro
a1.sinks.k2.hostname = master
a1.sinks.k2.port = 55555

# Use a channel which buffers events in memory
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100
a1.channels.c2.type = memory
a1.channels.c2.capacity = 1000
a1.channels.c2.transactionCapacity = 100

# Bind the source and sink to the channel
a1.sources.r1.channels = c1 c2
a1.sinks.k1.channel = c1
a1.sinks.k2.channel = c2
```

Below are the configuration files for flume2 and flume3.

```properties
# Name the components on this agent
a2.sources = r1
a2.channels = c1
a2.sinks = k1

# Describe/configure the source
a2.sources.r1.type = avro
a2.sources.r1.bind = master
a2.sources.r1.port = 44444

# Describe the sink
a2.sinks.k1.type = logger

# Use a channel which buffers events in memory
a2.channels.c1.type = memory
a2.channels.c1.capacity = 1000
a2.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel
a2.sources.r1.channels = c1
a2.sinks.k1.channel = c1
```

The flume2 and flume3 configuration files are similar. Both print the data to the console.

```properties
# Name the components on this agent
a3.sources = r1
a3.channels = c1
a3.sinks = k1

# Describe/configure the source
a3.sources.r1.type = avro
a3.sources.r1.bind = master
a3.sources.r1.port = 55555

# Describe the sink
a3.sinks.k1.type = logger

# Use a channel which buffers events in memory
a3.channels.c1.type = memory
a3.channels.c1.capacity = 1000
a3.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel
a3.sources.r1.channels = c1
a3.sinks.k1.channel = c1
```

- Start flume1, flume2 and flume3. Start flume2 and flume3 first, because their avro sources are the servers that flume1's avro sinks connect to. Run each command in its own terminal:

```shell
bin/flume-ng agent -c conf -f flume2.conf -n a2 -Dflume.root.logger=INFO,console
bin/flume-ng agent -c conf -f flume3.conf -n a3 -Dflume.root.logger=INFO,console
bin/flume-ng agent -c conf -f flume1.conf -n a1 -Dflume.root.logger=INFO,console
```

- Open a new terminal, run `nc master 33333`, and type some messages.
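If nc is not installed, the same manual test can be driven from Java. The sketch below is a minimal line-oriented TCP client. The class NetcatClient is hypothetical. It assumes the netcat source keeps its default `ack-every-event = true`, under which the source answers each newline-terminated event with one "OK" line.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.Writer;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for `nc master 33333`: send each message, read one reply line per message.
class NetcatClient {
    static List<String> send(String host, int port, List<String> messages) throws IOException {
        List<String> replies = new ArrayList<>();
        try (Socket socket = new Socket(host, port);
             Writer out = new OutputStreamWriter(socket.getOutputStream(), StandardCharsets.UTF_8);
             BufferedReader in = new BufferedReader(
                     new InputStreamReader(socket.getInputStream(), StandardCharsets.UTF_8))) {
            for (String message : messages) {
                out.write(message + "\n"); // '\n' ends one event for the netcat source
                out.flush();
                replies.add(in.readLine()); // expected "OK" with the default ack setting
            }
        }
        return replies;
    }

    public static void main(String[] args) throws IOException {
        List<String> messages = new ArrayList<>();
        messages.add("hello");
        messages.add("how are you");
        System.out.println(send("master", 33333, messages));
    }
}
```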

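In the screenshot, the top-left window is flume1, the top-right is flume2, the bottom-left is flume3, and the bottom-right is the input window. Data containing "hello" is passed to flume2. Anything else is changed to "good morning" and passed to flume3.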
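flume1's configuration names `flume.DIYInterceptor$Builder` rather than the interceptor class itself. Flume instantiates the Builder from that class name, calls its `configure(Context)`, and then calls `build()`. The `$` is how the JVM's binary class name marks a nested class. The sketch below models that loading step without Flume. DemoInterceptor, UpperCaseInterceptor and BuilderLoader are hypothetical, and `java.util.Properties` stands in for Flume's Context.

```java
import java.util.Locale;
import java.util.Properties;

// Hypothetical, Flume-free model of an interceptor interface with a nested Builder.
interface DemoInterceptor {
    String intercept(String body);

    interface Builder {
        void configure(Properties context); // Properties stands in for Flume's Context
        DemoInterceptor build();
    }
}

class UpperCaseInterceptor implements DemoInterceptor {
    @Override
    public String intercept(String body) {
        return body.toUpperCase(Locale.ROOT);
    }

    // public static nested class with a no-arg constructor, so it can be created by name
    public static class Builder implements DemoInterceptor.Builder {
        @Override
        public void configure(Properties context) { }

        @Override
        public DemoInterceptor build() {
            return new UpperCaseInterceptor();
        }
    }
}

class BuilderLoader {
    // builderClassName uses the binary name Outer$Builder, like flume.DIYInterceptor$Builder
    static DemoInterceptor load(String builderClassName, Properties context) throws Exception {
        Class<?> clazz = Class.forName(builderClassName);
        DemoInterceptor.Builder builder =
                (DemoInterceptor.Builder) clazz.getDeclaredConstructor().newInstance();
        builder.configure(context);
        return builder.build();
    }

    public static void main(String[] args) throws Exception {
        String type = UpperCaseInterceptor.class.getName() + "$Builder";
        DemoInterceptor interceptor = load(type, new Properties());
        System.out.println(interceptor.intercept("hello")); // HELLO
    }
}
```

This is also why the Builder must be a public static nested class. A non-static inner class cannot be created reflectively through a no-argument constructor, because it needs an instance of the outer class.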

Reference blog post: "Custom Flume interceptors: implementing several functions"

Custom Source

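A Source is the component that receives data into a Flume Agent. Source components can handle log data of many types and formats, including Avro, Thrift, Exec, JMS, Spooling Directory, NetCat, Sequence Generator, Syslog, HTTP and legacy. Flume already provides many source types, but sometimes they do not meet the needs of real-world development. In that case we write a custom source for the requirement.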

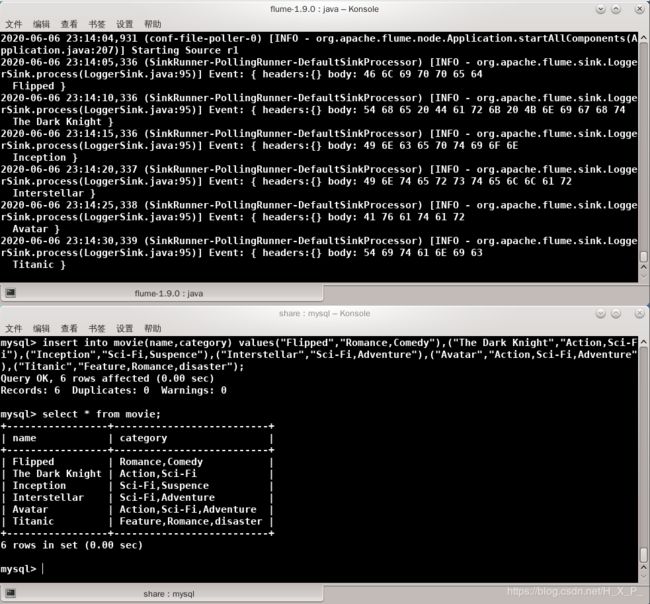

Below, we define a custom source that reads the data in the movie table of the study database in MySQL.

- Log in to MySQL and create the database and table:

```sql
create database if not exists study;
use study;
create table if not exists movie(
    name varchar(100),
    category varchar(100));
```

- Insert data:

```sql
insert into movie(name, category)
values ('Flipped', 'Romance,Comedy'),
       ('The Dark Knight', 'Action,Sci-Fi'),
       ('Inception', 'Sci-Fi,Suspense'),
       ('Interstellar', 'Sci-Fi,Adventure'),
       ('Avatar', 'Action,Sci-Fi,Adventure'),
       ('Titanic', 'Feature,Romance,disaster');
```

- Write a JDBCUtil class that connects to the database and runs a query to fetch the data:

```java
package source;

import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.ResultSetMetaData;
import java.sql.SQLException;
import java.util.ArrayList;
import java.util.List;

public class JDBCUtil {

    public static Connection getConnection(String url, String user, String password, String driverClass)
            throws ClassNotFoundException, SQLException {
        // Load the JDBC driver, then open the connection
        Class.forName(driverClass);
        return DriverManager.getConnection(url, user, password);
    }

    /**
     * Runs the query and returns one string per row (columns joined with a tab),
     * or null if the query fails.
     */
    public static List<String> getData(String url, String user, String password, String driverClass, String sql) {
        // try-with-resources closes ResultSet, PreparedStatement and Connection, in that order
        try (Connection con = getConnection(url, user, password, driverClass);
             // Precompile the SQL statement
             PreparedStatement ps = con.prepareStatement(sql);
             // Execute the query and get the result set
             ResultSet resultSet = ps.executeQuery()) {
            // Get the number of columns from the result set metadata
            ResultSetMetaData rsmd = resultSet.getMetaData();
            int columnCount = rsmd.getColumnCount();
            List<String> list = new ArrayList<String>();
            // Iterate over the rows
            while (resultSet.next()) {
                StringBuilder row = new StringBuilder();
                for (int i = 1; i <= columnCount; i++) {
                    if (i > 1) {
                        row.append('\t');
                    }
                    row.append(resultSet.getString(i));
                }
                list.add(row.toString());
            }
            return list;
        } catch (SQLException | ClassNotFoundException e) {
            e.printStackTrace();
        }
        return null;
    }
}
```

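- Write a class that extends AbstractSource and implements the Configurable and PollableSource interfaces. `configure(Context context)` reads settings from the configuration file. `process()` is called repeatedly in a loop. `getChannelProcessor().processEvent(event)` passes an event to the Channel. Then package the class and put the jar in the lib directory under the Flume installation.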

```java
package source;

import java.nio.charset.StandardCharsets;
import java.util.List;

import org.apache.flume.Context;
import org.apache.flume.Event;
import org.apache.flume.EventDeliveryException;
import org.apache.flume.PollableSource;
import org.apache.flume.conf.Configurable;
import org.apache.flume.event.EventBuilder;
import org.apache.flume.source.AbstractSource;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

public class DIYSource extends AbstractSource implements Configurable, PollableSource {

    private static final Logger logger = LoggerFactory.getLogger(DIYSource.class);

    private String url;
    private String user;
    private String password;
    private String driverClass;

    @Override
    public void configure(Context context) {
        // Read the JDBC settings from the configuration file (a1.sources.r1.mysql.*)
        url = context.getString("mysql.url");
        user = context.getString("mysql.user");
        password = context.getString("mysql.password");
        driverClass = context.getString("mysql.driverClass");
    }

    @Override
    public Status process() throws EventDeliveryException {
        // Fetch the data (note: every call re-reads the whole table)
        String sql = "select name from movie";
        List<String> result = JDBCUtil.getData(url, user, password, driverClass, sql);
        if (result == null || result.isEmpty()) {
            return Status.BACKOFF;
        }
        try {
            for (String row : result) {
                // A new event per row: the memory channel keeps a reference to each event,
                // so one shared Event object must not be reused and mutated
                Event event = EventBuilder.withBody(row.getBytes(StandardCharsets.UTF_8));
                // Pass the event to the Channel
                getChannelProcessor().processEvent(event);
                // Slow down so the output is easy to follow in the console
                Thread.sleep(5000);
            }
            return Status.READY;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return Status.BACKOFF;
        } catch (Exception e) {
            logger.error("Failed to pass events to the channel", e);
            return Status.BACKOFF;
        }
    }

    @Override
    public long getBackOffSleepIncrement() {
        // Extra sleep (ms) added for each consecutive BACKOFF
        return 1000;
    }

    @Override
    public long getMaxBackOffSleepInterval() {
        // Upper bound (ms) on the backoff sleep
        return 5000;
    }
}
```

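- Create the configuration file mysql-flume-logger.conf. The source type is the fully qualified name of the class written above. The source also needs the four JDBC connection settings: URL, user, password and driver class. The sink type is logger; to send the data elsewhere, use another sink type. The MySQL JDBC driver jar must also be in Flume's lib directory, because DIYSource loads the driver class at runtime. Use `com.mysql.cj.jdbc.Driver` instead when using Connector/J 8.x.

```properties
# Name the components on this agent
a1.sources = r1
a1.channels = c1
a1.sinks = k1

# Describe/configure the source
a1.sources.r1.type = source.DIYSource
a1.sources.r1.mysql.url = jdbc:mysql://master:3306/study?useSSL=false
a1.sources.r1.mysql.user = root
a1.sources.r1.mysql.password = 123456
a1.sources.r1.mysql.driverClass = com.mysql.jdbc.Driver

# Describe the sink
a1.sinks.k1.type = logger
# maxBytesToLog is a logger sink setting: maximum number of body bytes printed per event
a1.sinks.k1.maxBytesToLog = 256

# Use a channel which buffers events in memory
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

- Start Flume:

```shell
bin/flume-ng agent -c conf -f mysql-flume-logger.conf -n a1 -Dflume.root.logger=INFO,console
```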
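DIYSource's `process()` returns READY or BACKOFF, and the two backoff methods decide how long the source stays idle after a BACKOFF. The sketch below assumes Flume's PollableSourceRunner sleeps `min(consecutiveBackoffs × getBackOffSleepIncrement(), getMaxBackOffSleepInterval())` milliseconds after each BACKOFF and resets the counter after READY. It reproduces only that arithmetic. BackoffModel is hypothetical, and the increment of 1000 and cap of 5000 are example values.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of the sleep a polling runner applies after each process() result.
class BackoffModel {
    enum Status { READY, BACKOFF }

    // Sleep after the n-th consecutive BACKOFF: n * increment, capped at max.
    static long sleepMillis(long consecutiveBackoffs, long increment, long max) {
        return Math.min(consecutiveBackoffs * increment, max);
    }

    // Sleep applied after each status in the sequence (0 after READY).
    static List<Long> schedule(List<Status> statuses, long increment, long max) {
        List<Long> sleeps = new ArrayList<>();
        long consecutive = 0;
        for (Status status : statuses) {
            if (status == Status.BACKOFF) {
                consecutive++;
                sleeps.add(sleepMillis(consecutive, increment, max));
            } else {
                consecutive = 0; // a successful poll resets the backoff
                sleeps.add(0L);
            }
        }
        return sleeps;
    }

    public static void main(String[] args) {
        List<Status> statuses = new ArrayList<>();
        for (int i = 0; i < 7; i++) {
            statuses.add(Status.BACKOFF);
        }
        statuses.add(Status.READY);
        statuses.add(Status.BACKOFF);
        System.out.println(schedule(statuses, 1000, 5000));
        // [1000, 2000, 3000, 4000, 5000, 5000, 5000, 0, 1000]
    }
}
```

If both methods return 0, every sleep is 0 ms. A source whose query keeps failing or returning no rows is then retried against MySQL in a tight loop.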

Custom Sink

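A Sink continuously polls the Channel for events and writes them in batches to a storage or indexing system, or sends them to another Flume agent. The Channel then removes them in batches.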

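Sinks are fully transactional. Before removing a batch of data from the Channel, each Sink starts a transaction with the Channel. Once the batch of events has been written successfully to the storage system or the next Flume agent, the Sink commits the transaction through the Channel. After the commit, the Channel deletes those events from its internal buffer.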
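The take/commit/rollback cycle can be modeled with two queues. Events taken inside an open transaction are held aside. A commit discards them for good, and a rollback puts them back at the head of the channel in their original order. TxChannel below is a hypothetical, single-threaded model of these semantics. It is not Flume's MemoryChannel, which also enforces `capacity` and `transactionCapacity`.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical single-threaded model of channel transactions (not Flume's MemoryChannel).
class TxChannel {
    private final Deque<String> queue = new ArrayDeque<>(); // events still in the channel
    private final Deque<String> taken = new ArrayDeque<>(); // taken in the open transaction

    // A source puts an event into the channel.
    void put(String event) {
        queue.addLast(event);
    }

    // A sink takes the next event inside its transaction; null when the channel is empty.
    String take() {
        String event = queue.pollFirst();
        if (event != null) {
            taken.addLast(event);
        }
        return event;
    }

    // Commit: the taken events are removed for good.
    void commit() {
        taken.clear();
    }

    // Rollback: return the taken events to the head of the queue, keeping their order.
    void rollback() {
        while (!taken.isEmpty()) {
            queue.addFirst(taken.pollLast());
        }
    }

    List<String> contents() {
        return new ArrayList<>(queue);
    }

    public static void main(String[] args) {
        TxChannel channel = new TxChannel();
        channel.put("e1");
        channel.put("e2");
        channel.put("e3");

        channel.take();
        channel.take();
        channel.rollback();                     // the write failed
        System.out.println(channel.contents()); // [e1, e2, e3]

        channel.take();
        channel.commit();                       // the write succeeded
        System.out.println(channel.contents()); // [e2, e3]
    }
}
```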

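Sink destinations include HDFS, logger, Avro, Thrift, IPC, file, null, HBase, Solr and custom sinks. Flume already provides many sink types, but sometimes they do not meet the needs of real-world development. In that case we write a custom Sink for the requirement.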

Below, we define a custom sink that writes data to a local file.

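- Write a class that extends AbstractSink and implements the Configurable interface. Then package it and put the jar in the lib directory under the Flume installation.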

```java
package sink;

import java.io.FileOutputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

import org.apache.flume.Channel;
import org.apache.flume.Context;
import org.apache.flume.Event;
import org.apache.flume.EventDeliveryException;
import org.apache.flume.Transaction;
import org.apache.flume.conf.Configurable;
import org.apache.flume.sink.AbstractSink;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

public class DIYSink extends AbstractSink implements Configurable {

    private static final Logger logger = LoggerFactory.getLogger(DIYSink.class);

    private String path;

    @Override
    public void configure(Context context) {
        // Output file, from a1.sinks.k1.path
        path = context.getString("path");
    }

    @Override
    public Status process() throws EventDeliveryException {
        // Get the channel and start a transaction
        Channel channel = getChannel();
        Transaction transaction = channel.getTransaction();
        transaction.begin();
        try {
            // Take one event; null means the channel is currently empty
            Event event = channel.take();
            if (event == null) {
                transaction.commit();
                return Status.BACKOFF;
            }
            // The second argument true means append to the end of the file
            try (OutputStream fos = new FileOutputStream(path, true)) {
                logger.info(new String(event.getBody(), StandardCharsets.UTF_8));
                fos.write(event.getBody());
                fos.write('\n');
            }
            // Commit only after the write succeeded; the channel then drops the event
            transaction.commit();
            return Status.READY;
        } catch (Exception e) {
            // Roll back so the event stays in the channel and is retried later
            transaction.rollback();
            logger.error("Failed to write event to " + path, e);
            return Status.BACKOFF;
        } finally {
            // Close the transaction
            transaction.close();
        }
    }
}
```

- Create the configuration file netcat-flume-file.conf. The sink type is the fully qualified name of DIYSink, and `path` is the file that events are appended to.

```properties
# Name the components on this agent
a1.sources = r1
a1.channels = c1
a1.sinks = k1

# Describe/configure the source
a1.sources.r1.type = netcat
a1.sources.r1.bind = master
a1.sources.r1.port = 33333

# Describe the sink
a1.sinks.k1.type = sink.DIYSink
a1.sinks.k1.path = /opt/flume-1.9.0/file.txt

# Use a channel which buffers events in memory
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```
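DIYSink takes one event per `process()` call and reopens the file for each one. A sink that takes a batch of events in one transaction could instead write the whole batch with a single open/close, and commit only after the write succeeds. The helper below sketches just the file-writing part with java.nio. BatchFileWriter and appendBatch are hypothetical names. In a sink, the helper would sit between a loop of `channel.take()` calls and `transaction.commit()`.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardOpenOption;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: append a batch of event bodies to a file, one per line.
class BatchFileWriter {
    static void appendBatch(Path file, List<byte[]> bodies) throws IOException {
        // CREATE + APPEND: create the file if missing, otherwise add to its end
        try (OutputStream out = Files.newOutputStream(file,
                StandardOpenOption.CREATE, StandardOpenOption.APPEND)) {
            for (byte[] body : bodies) {
                out.write(body);
                out.write('\n');
            }
        } // opened and closed once per batch, not once per event
    }

    public static void main(String[] args) throws IOException {
        Path file = Paths.get("file.txt"); // example path; a sink would read it from its config
        List<byte[]> batch = new ArrayList<>();
        batch.add("hello".getBytes(StandardCharsets.UTF_8));
        batch.add("good morning".getBytes(StandardCharsets.UTF_8));
        appendBatch(file, batch);
        System.out.println(Files.readAllLines(file, StandardCharsets.UTF_8));
    }
}
```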