PyTorch Basics: DenseNet

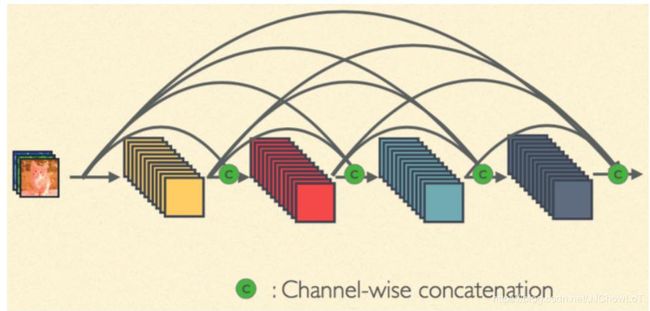

Key point 0: the structure of a dense block

Key point 1: defining `dense_block`

Key point 2: defining the body of DenseNet

Key point 3: `add_module`

Key point 0

DenseNet is built by chaining several of these blocks in series.
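For reference, the dense connectivity pattern can be written out in the notation commonly used for DenseNet. The symbols $x_\ell$, $H_\ell$, $k_0$ and $L$ are introduced here and do not come from the figure. Layer $\ell$ of a block receives the channel-wise concatenation of the block input $x_0$ and all earlier outputs. With growth rate $k$, each layer adds $k$ channels:

$$
x_\ell = H_\ell\left([x_0, x_1, \ldots, x_{\ell-1}]\right), \qquad
C_{\text{in}}(H_\ell) = k_0 + (\ell - 1)\,k, \qquad
C_{\text{out}}(\text{block}) = k_0 + L\,k
$$

In this post's code, $H_\ell$ corresponds to `conv_block`, $L$ to `num_layers`, and $k$ to `growth_rate`. This is why `dense_block` keeps a running `channel += growth_rate`.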

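```python
import torch
import numpy as np  # data_tf below uses the np alias
from torch import nn
from torch.autograd import Variable  # legacy wrapper; plain tensors behave the same since PyTorch 0.4
from torchvision.datasets import CIFAR10
```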

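Define `conv_block`, a BatchNorm, ReLU, 3x3 convolution unit:

```python
def conv_block(in_channel, out_channel):
    # Pre-activation ordering: BatchNorm -> ReLU -> 3x3 conv.
    # padding=1 keeps H and W unchanged, so outputs can be concatenated with inputs.
    layer = nn.Sequential(
        nn.BatchNorm2d(in_channel),
        nn.ReLU(True),
        nn.Conv2d(in_channel, out_channel, 3, padding=1, bias=False)
    )
    return layer
```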
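The following is a quick shape check of `conv_block`. The block is repeated so the snippet runs on its own, and the batch size and channel counts are arbitrary. The channel count changes from `in_channel` to `out_channel`, while the spatial size stays the same.

```python
import torch
from torch import nn


def conv_block(in_channel, out_channel):
    # same BN -> ReLU -> 3x3 conv unit as above
    return nn.Sequential(
        nn.BatchNorm2d(in_channel),
        nn.ReLU(True),
        nn.Conv2d(in_channel, out_channel, 3, padding=1, bias=False),
    )


x = torch.randn(2, 16, 8, 8)      # (N, C, H, W)
y = conv_block(16, 32)(x)
print(y.shape)  # torch.Size([2, 32, 8, 8])
```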

Key point 1: defining `dense_block`

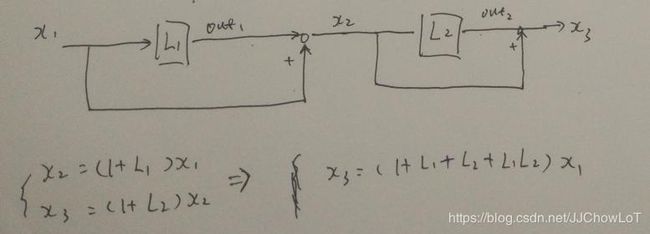

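At first glance, the for loop defined here does not seem to implement the DenseNet structure.

To work out what is really going on, I borrowed the block-diagram idea from control theory.

The figure below is the block diagram of the for loop: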

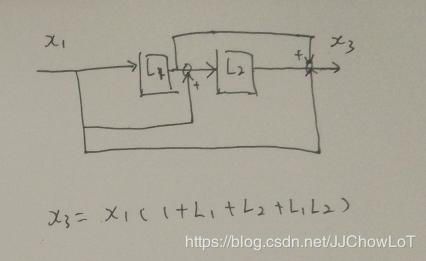

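The figure below shows the structure DenseNet is supposed to have.

As you can see, the two have the same transfer function, so the for loop is correct after all. Impressive! The reason is that each iteration concatenates the new output onto `x`. As a result, `x` always holds the block input plus every earlier layer's output, and that accumulated tensor is exactly what the next layer receives.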
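The block-diagram argument can also be checked numerically. This sketch is an illustration, not the post's code. It uses plain `nn.Conv2d` layers in place of `conv_block`, an assumption made only to keep it small. It compares the loop from `dense_block.forward` against an explicit version in which layer ℓ is fed the concatenation of the block input and every earlier output. Because the loop puts each new output first, the explicit version concatenates in reverse order. That ordering only permutes channels; it does not change which features each layer sees.

```python
import torch
from torch import nn

torch.manual_seed(0)

in_ch, growth, num_layers = 4, 3, 3
# Layer i receives in_ch + i * growth channels, exactly as in dense_block.__init__
layers = nn.ModuleList([
    nn.Conv2d(in_ch + i * growth, growth, 3, padding=1) for i in range(num_layers)
])
x0 = torch.randn(1, in_ch, 5, 5)

# Version 1: the for loop from dense_block.forward
x = x0
for layer in layers:
    out = layer(x)
    x = torch.cat((out, x), dim=1)
loop_result = x

# Version 2: explicit dense connectivity, layer l sees [x0, x1, ..., x_{l-1}]
features = [x0]
for layer in layers:
    # newest output first, matching the loop's concatenation order
    inp = torch.cat(features[::-1], dim=1)
    features.append(layer(inp))
explicit_result = torch.cat(features[::-1], dim=1)

print(loop_result.shape)                             # torch.Size([1, 13, 5, 5])
print(torch.allclose(loop_result, explicit_result))  # True
```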

```python
class dense_block(nn.Module):
    def __init__(self, in_channel, growth_rate, num_layers):
        super(dense_block, self).__init__()
        block = []
        channel = in_channel
        for i in range(num_layers):
            # layer i sees the block input plus i earlier outputs of growth_rate channels each
            block.append(conv_block(channel, growth_rate))
            channel += growth_rate
        self.net = nn.Sequential(*block)

    def forward(self, x):
        for layer in self.net:
            out = layer(x)
            # tensors are (N, C, H, W): dim=0 is the batch, dim=1 the channels,
            # so this stacks the new feature maps onto everything accumulated so far
            x = torch.cat((out, x), dim=1)
        return x
```

Key point 2

The way `block2` is defined here is neat. A for loop inside `__init__` assembles a very large network.
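The channel bookkeeping in that loop is easy to replay by hand. The following sketch uses a hypothetical helper, `densenet_channels`, that mirrors the loop's arithmetic with the defaults `growth_rate=32` and `block_layers=[6, 12, 24, 16]`. Like the code below, it assumes each transition halves the channel count.

```python
def densenet_channels(init_channels=64, growth_rate=32, block_layers=(6, 12, 24, 16)):
    """Replay the channel arithmetic of the block2 loop without building any layers."""
    channels = init_channels
    history = []
    for i, layers in enumerate(block_layers):
        channels += layers * growth_rate      # output of the dense_block
        history.append(("dense_block", channels))
        if i != len(block_layers) - 1:
            channels //= 2                    # transition halves the channels
            history.append(("transition", channels))
    return channels, history


final, steps = densenet_channels()
for name, c in steps:
    print(f"{name:12s} -> {c}")
print("classifier in_features:", final)  # 1024
```

The final value is what `channels` holds when `nn.Linear(channels, num_classes)` is built.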

Key point 3

`add_module` is a way to register child modules (children). It is especially handy inside a loop.
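The following is a minimal illustration of `add_module` on an `nn.Sequential`; the layer sizes and names are arbitrary. Each call registers a named child. Because `Sequential` runs its children in registration order, modules added later also run later in `forward`.

```python
import torch
from torch import nn

seq = nn.Sequential(nn.Linear(4, 8))     # first child is named "0"
seq.add_module('relu', nn.ReLU())        # appended as child "relu"
for i in range(2):
    # names built in a loop; each call registers one more child, in order
    seq.add_module(f'fc{i}', nn.Linear(8, 8))

names = [name for name, _ in seq.named_children()]
print(names)                        # ['0', 'relu', 'fc0', 'fc1']
print(seq.relu)                     # ReLU()
out = seq(torch.randn(2, 4))
print(out.shape)                    # torch.Size([2, 8])
```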

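```python
# transition is used below but never defined in the original post. This is an assumed
# definition following the usual DenseNet transition layer:
# BN -> ReLU -> 1x1 conv to reduce channels -> 2x2 average pooling to halve H and W.
def transition(in_channel, out_channel):
    trans_layer = nn.Sequential(
        nn.BatchNorm2d(in_channel),
        nn.ReLU(True),
        nn.Conv2d(in_channel, out_channel, 1),
        nn.AvgPool2d(2, 2)
    )
    return trans_layer


class densenet(nn.Module):
    def __init__(self, in_channel, num_classes, growth_rate=32, block_layers=[6, 12, 24, 16]):
        super(densenet, self).__init__()
        # Stem: 7x7 stride-2 conv + 3x3 stride-2 max pool (a 96x96 input becomes 24x24)
        self.block1 = nn.Sequential(
            nn.Conv2d(in_channel, 64, 7, 2, 3),
            nn.BatchNorm2d(64),
            nn.ReLU(True),
            nn.MaxPool2d(3, 2, padding=1)
        )

        channels = 64
        block = []
        for i, layers in enumerate(block_layers):
            block.append(dense_block(channels, growth_rate, layers))
            channels += layers * growth_rate
            if i != len(block_layers) - 1:
                # every dense block except the last is followed by a transition that halves the channels
                block.append(transition(channels, channels // 2))
                channels = channels // 2

        self.block2 = nn.Sequential(*block)
        self.block2.add_module('bn', nn.BatchNorm2d(channels))  # add_module appends a named child
        self.block2.add_module('relu', nn.ReLU(True))
        self.block2.add_module('avg_pool', nn.AvgPool2d(3))

        self.classifier = nn.Linear(channels, num_classes)

    def forward(self, x):
        x = self.block1(x)
        x = self.block2(x)
        x = x.view(x.shape[0], -1)  # flatten (N, C, 1, 1) -> (N, C)
        x = self.classifier(x)
        return x
```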

The rest is routine, so no commentary:

```python
test_net = densenet(3, 10)
test_x = torch.zeros(1, 3, 96, 96)  # a plain tensor; wrapping it in Variable is no longer needed
test_y = test_net(test_x)
print('output: {}'.format(test_y.shape))  # output: torch.Size([1, 10])
```
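Why is the output `[1, 10]`, and why is the input 96x96? `Conv2d`, `MaxPool2d` and `AvgPool2d` all use the output-size formula floor((size + 2*padding - kernel) / stride) + 1, with default dilation and `ceil_mode=False`. `conv_block` keeps the spatial size. The transition layer, which the post does not show, is assumed here to end in a 2x2 average pool with stride 2. Under those assumptions, the spatial size can be traced with a hypothetical helper:

```python
def out_size(size, kernel, stride=1, padding=0):
    # floor((size + 2*padding - kernel) / stride) + 1
    return (size + 2 * padding - kernel) // stride + 1


def final_map_size(h):
    h = out_size(h, 7, 2, 3)    # block1: 7x7 conv, stride 2, padding 3
    h = out_size(h, 3, 2, 1)    # block1: 3x3 max pool, stride 2, padding 1
    for _ in range(3):          # three transitions, each assumed to end in a 2x2 avg pool
        h = out_size(h, 2, 2)
    return out_size(h, 3, 3)    # final AvgPool2d(3): stride defaults to the kernel size


print(final_map_size(96))  # 1
print(final_map_size(32))  # 0
```

With a 96x96 input, the last feature map is 3x3. `AvgPool2d(3)` then reduces it to 1x1, so the flattened vector has exactly `channels` entries. With raw 32x32 CIFAR-10 images, the map is already 1x1 before the final pool, and the formula gives 0, so that pooling step would fail. This is presumably why `data_tf` resizes images to 96x96.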

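```python
import numpy as np


def data_tf(x):
    # x is a PIL image; resample=2 selects PIL's bilinear filter
    x = x.resize((96, 96), 2)
    x = np.array(x, dtype='float32') / 255  # HWC, values in [0, 1]
    x = (x - 0.5) / 0.5                     # normalize to [-1, 1]
    x = x.transpose((2, 0, 1))              # HWC -> CHW, the layout PyTorch expects
    x = torch.from_numpy(x)
    return x
```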
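The following is a quick check of what `data_tf` does to pixel values and memory layout. It uses a random `uint8` array in place of the resized PIL image, an assumption made so the example needs no image file.

```python
import numpy as np
import torch

# Fake 96x96 RGB image in HWC uint8 layout, standing in for the resized PIL image
img = np.random.randint(0, 256, size=(96, 96, 3), dtype=np.uint8)

x = np.array(img, dtype='float32') / 255   # [0, 255] -> [0, 1]
x = (x - 0.5) / 0.5                        # [0, 1]   -> [-1, 1]
x = x.transpose((2, 0, 1))                 # HWC -> CHW
t = torch.from_numpy(x)

print(t.shape, t.dtype)                    # torch.Size([3, 96, 96]) torch.float32

# The two extreme pixel values land exactly on the ends of the range
ends = (np.array([0, 255], dtype='float32') / 255 - 0.5) / 0.5
print(ends)                                # [-1.  1.]
```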

```python
from torch.utils.data import DataLoader
from jc_utils import train  # the author's own training helper, not part of PyTorch

# download=True fetches CIFAR-10 into ./data on the first run
train_set = CIFAR10('./data', train=True, transform=data_tf, download=True)
train_data = DataLoader(train_set, batch_size=64, shuffle=True)
test_set = CIFAR10('./data', train=False, transform=data_tf, download=True)
test_data = DataLoader(test_set, batch_size=128, shuffle=False)

net = densenet(3, 10)
optimizer = torch.optim.SGD(net.parameters(), lr=0.01)
criterion = nn.CrossEntropyLoss()

train(net, train_data, test_data, 20, optimizer, criterion)
```
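`jc_utils` is the author's own module and its code is not shown. The exact behavior of `train` (device handling, logging, any learning-rate schedule) is therefore unknown. The following is a minimal sketch of what a function with that call shape typically does. It assumes the arguments are (network, training loader, evaluation loader, number of epochs, optimizer, loss). `train_sketch` is a hypothetical name and not the author's implementation. The sketch switches between `net.train()` and `net.eval()`, which matters here because DenseNet uses BatchNorm.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset


def train_sketch(net, train_data, valid_data, num_epochs, optimizer, criterion):
    """Hypothetical stand-in for jc_utils.train (CPU only, no scheduler)."""
    history = []
    for epoch in range(num_epochs):
        net.train()                       # BatchNorm uses batch statistics
        total_loss = 0.0
        for images, labels in train_data:
            optimizer.zero_grad()
            loss = criterion(net(images), labels)
            loss.backward()
            optimizer.step()
            total_loss += loss.item()

        net.eval()                        # BatchNorm uses running statistics
        correct, seen = 0, 0
        with torch.no_grad():
            for images, labels in valid_data:
                pred = net(images).argmax(dim=1)
                correct += (pred == labels).sum().item()
                seen += labels.numel()
        avg_loss, acc = total_loss / len(train_data), correct / seen
        history.append((avg_loss, acc))
        print(f"epoch {epoch}: train loss {avg_loss:.4f}, valid acc {acc:.3f}")
    return history


# Tiny synthetic run so the sketch executes quickly; a linear model stands in for densenet
torch.manual_seed(0)
data = TensorDataset(torch.randn(64, 10), torch.randint(0, 3, (64,)))
loader = DataLoader(data, batch_size=16, shuffle=True)
model = nn.Linear(10, 3)
opt = torch.optim.SGD(model.parameters(), lr=0.01)
hist = train_sketch(model, loader, loader, 2, opt, nn.CrossEntropyLoss())
```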