实操:搭建ceph群集并进行扩容恢复故障验证

文章目录

- 一:基本环境

- 二:IP配置,修改主机名

- 三:配置其他基本环境

- 3.1 配置host文件

- 3.2 关闭防火墙和核心防护(三个节点都做)

- 3.3 节点之间配置ssh免交互

- 3.4 配置yum在线源(三个节点都做)

- 3.5 配置NTP时间服务

- 3.5.1 ceph00搭建ntpd服务

- 3.5.2 然后两外两个节点去同步ceph00(操作一致)

- 3.5.3 为ntpdate创建计划性任务

- 四:集群搭建

- 4.1 在ceph00上安装ceph-deploy

- 4.2 相关组件包安装完毕,接下来在ceph00的/etc/ceph上创建mon

- 4.3 初始化mon并收集密钥

- 4.4 创建osd

- 4.5 查看osd分配情况

- 4.6 在ceph00,将配置文件和admin密钥下发到ceph00 ceph01

- 4.7 给两个节点的ceph.client.admin.keyring执行权限

- 4.8 创建mgr

- 五:ceph扩容测试

- 5.1 首先先增加mon

- 5.2 然后创建osd

- 六:osd数据恢复

- 6.1 查看当前状态

- 6.2 模拟故障ceph osd out osd.2

- 6.3 然后到ceph02节点重启osd

- 6.4 恢复OSD到集群中

- 6.5 依据uuid创建

- 6.6 增加权限

- 6.7 查看ceph状态

- 6.8 为了保险,可以每个节点都重启一下

- 七:优化ceph内部通信网段

- 八:创建pool

一:基本环境

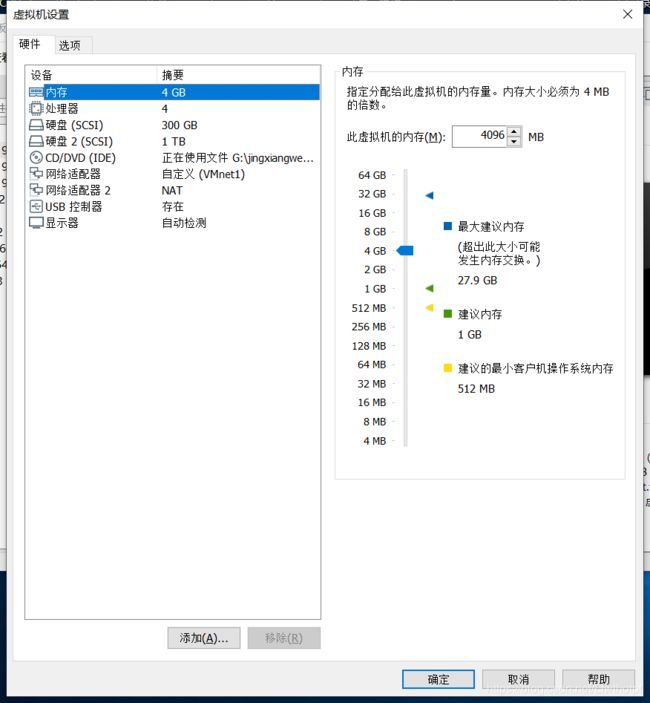

服务器版本:centos 7 1908版

三台服务器,每台服务器配置如下

二:IP配置,修改主机名

[root@ct ~]# hostnamectl set-hostname ceph00

[root@ct ~]# su

[root@ceph00 ~]# ip addr

2: eth0: mtu 1500 qdisc

inet 192.168.254.20/24 brd 192.168.254.255 scope global eth0

3: eth1: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

inet 192.168.247.20/24 brd 192.168.247.255 scope global eth1

[root@comp1 ~]# hostnamectl set-hostname ceph01

[root@comp1 ~]# su

[root@ceph1 network-scripts]# ip addr

2: eth0: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

inet 192.168.254.21/24 brd 192.168.254.255 scope global eth0

3: eth1: mtu 1500 qdisc

inet 192.168.247.21/24 brd 192.168.247.255 scope global eth1

[root@comp2 ~]# hostnamectl set-hostname ceph02

[root@comp2 ~]# su

[root@ceph02 ~]# ip addr

2: eth0: mtu 1500 qdisc

inet 192.168.254.22/24 brd 192.168.254.255 scope global eth0

3: eth1: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

inet 192.168.247.22/24 brd 192.168.247.255 scope global eth1

vmnet1:192.168.254.0/24

net:192.168.247.0/24

ceph00:192.168.254.20 192.168.247.20

ceph01:192.168.254.21 192.168.247.21

ceph02:192.168.254.22 192.168.247.22

三:配置其他基本环境

3.1 配置host文件

[root@ceph00 ~]# vi /etc/hosts

192.168.254.20 ceph00

192.168.254.21 ceph01

192.168.254.22 ceph02

[root@ceph00 ~]# scp -p /etc/hosts [email protected]:/etc/

hosts 100% 224 294.2KB/s 00:00

[root@ceph00 ~]# scp -p /etc/hosts [email protected]:/etc/

hosts 100% 224 158.9KB/s 00:00

3.2 关闭防火墙和核心防护(三个节点都做)

[root@ceph00 ~]# systemctl stop firewalld

[root@ceph00 ~]# systemctl disable firewalld

[root@ceph00 ~]# setenforce 0

setenforce: SELinux is disabled

[root@ceph00 ~]# sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

3.3 节点之间配置ssh免交互

[root@ceph00 ~]# ssh-keygen -t rsa

[root@ceph00 ~]# ssh-copy-id ceph01

[root@ceph00 ~]# ssh-copy-id ceph02

[root@ceph01 ~]# ssh-keygen -t rsa

[root@ceph01 ~]# ssh-copy-id ceph00

[root@ceph01 ~]# ssh-copy-id ceph02

[root@ceph02 ~]# ssh-keygen -t rsa

[root@ceph02 ~]# ssh-copy-id ceph00

[root@ceph02 ~]# ssh-copy-id ceph01

3.4 配置yum在线源(三个节点都做)

[root@ceph00 ~]# vi /etc/yum.conf

keepcache=1 //单独在ceph00节点开启yum缓存,用来做离线包

[root@ceph00 ~]# yum install wget curl -y //安装wget和curl方便在线下载repo

[root@ceph00 ~]# cd /etc/yum.repos.d/

[root@ceph00 yum.repos.d]# ls

CentOS-Base.repo CentOS-Debuginfo.repo CentOS-Media.repo CentOS-Vault.repo

CentOS-CR.repo CentOS-fasttrack.repo CentOS-Sources.repo

[root@ceph00 yum.repos.d]# mkdir bak

[root@ceph00 yum.repos.d]# mv * bak

mv: cannot move ‘bak’ to a subdirectory of itself, ‘bak/bak’

[root@ceph00 yum.repos.d]# ls

bak

获取阿里云的repo

[root@ceph00 yum.repos.d]# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

//获取Centos-7.repo

--2020-03-30 20:42:13-- http://mirrors.aliyun.com/repo/Centos-7.repo

Resolving mirrors.aliyun.com (mirrors.aliyun.com)... 180.97.251.225, 218.94.207.205, 180.122.76.241, ...

Connecting to mirrors.aliyun.com (mirrors.aliyun.com)|180.97.251.225|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 2523 (2.5K) [application/octet-stream]

Saving to: ‘/etc/yum.repos.d/CentOS-Base.repo’

100%[=======================================================================>] 2,523 --.-K/s in 0s

2020-03-30 20:42:14 (717 MB/s) - ‘/etc/yum.repos.d/CentOS-Base.repo’ saved [2523/2523]

[root@ceph00 yum.repos.d]# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

//获取epel-7.repo

--2020-03-30 20:43:09-- http://mirrors.aliyun.com/repo/epel-7.repo

Resolving mirrors.aliyun.com (mirrors.aliyun.com)... 218.94.207.212, 180.97.251.228, 180.122.76.244, ...

Connecting to mirrors.aliyun.com (mirrors.aliyun.com)|218.94.207.212|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 664 [application/octet-stream]

Saving to: ‘/etc/yum.repos.d/epel.repo’

100%[=======================================================================>] 664 --.-K/s in 0s

2020-03-30 20:43:09 (108 MB/s) - ‘/etc/yum.repos.d/epel.repo’ saved [664/664]

[root@ceph00 yum.repos.d]# ls

bak CentOS-Base.repo epel.repo

[root@ceph00 yum.repos.d]# vi /etc/yum.repos.d/ceph.repo

//编辑ceph.repo

[ceph]

name=Ceph packages for

baseurl=https://mirrors.aliyun.com/ceph/rpm-mimic/el7/$basearch

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

priority=1

[ceph-noarch]

name=Ceph noarch packages

baseurl=https://mirrors.aliyun.com/ceph/rpm-mimic/el7/noarch/

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

priority=1

[ceph-source]

name=Ceph source packages

baseurl=https://mirrors.aliyun.com/ceph/rpm-mimic/el7/SRPMS/

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

priority=1

[root@ceph00 yum.repos.d]# yum update -y //更新

3.5 配置NTP时间服务

思路:ceph00同步阿里云,另外两个节点同步ceph00

3.5.1 ceph00搭建ntpd服务

[root@ceph00 yum.repos.d]# yum install ntpdate ntp -y

[root@ceph00 yum.repos.d]# ntpdate ntp1.aliyun.com

30 Mar 20:53:00 ntpdate[63952]: step time server 120.25.115.20 offset -2.117958 sec

[root@ceph00 yum.repos.d]# clock -w

//把当前的系统时间写入到CMOS中,以防开机重启时时间同步失效

[root@ceph00 yum.repos.d]# vi /etc/ntp.conf

//原有内容全部删除,写入下面参数

driftfile /var/lib/ntp/drift

restrict default nomodify

restrict 127.0.0.1

restrict ::1

restrict 192.168.254.0 mask 255.255.255.0 nomodify notrap fudge 127.127.1.0 stratum 10

server 127.127.1.0

includerfile /etc/ntp/crypto/pw

keys /etc/ntp/keys

disable monitor

[root@ceph00 yum.repos.d]# systemctl start ntpd

[root@ceph00 yum.repos.d]# systemctl enable ntpd

3.5.2 然后两外两个节点去同步ceph00(操作一致)

[root@ceph01 yum.repos.d]# yum install -y ntpdate

[root@ceph01 yum.repos.d]# ntpdate ceph00

30 Mar 21:01:26 ntpdate[79612]: step time server 192.168.254.20 offset -2.117961 sec

3.5.3 为ntpdate创建计划性任务

[root@ceph01 yum.repos.d]# crontab -e

*/10 * * * * /usr/sbin/ntpdate ceph00 >> /var/log/ntpdate.log

[root@ceph01 yum.repos.d]# crontab -l

*/10 * * * * /usr/sbin/ntpdate ceph00 >> /var/log/ntpdate.log

[root@ceph01 yum.repos.d]# systemctl restart crond

[root@ceph01 yum.repos.d]# systemctl enable crond

ceph集群环境整体搭建完毕

接下来开始正式进行集群搭建

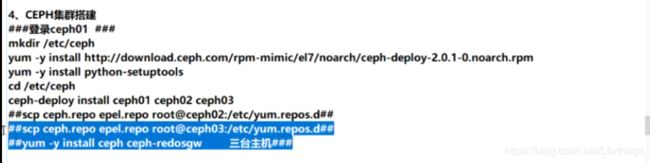

四:集群搭建

若是没有ceph.repo源,也可以先安装ceph-deploy,使用这个部署工具去在线安装ceph源

这里我们直接安装即可

4.1 在ceph00上安装ceph-deploy

[root@ceph00 yum.repos.d]# mkdir /etc/ceph //三个节点都做

[root@ceph00 yum.repos.d]# cd /etc/ceph/

[root@ceph00 ceph]# ls

[root@ceph00 ceph]#

[root@ceph00 ceph]# yum install -y python-setuptools ceph-deploy

[root@ceph00 ceph]# yum install -y ceph //三个节点都做

4.2 相关组件包安装完毕,接下来在ceph00的/etc/ceph上创建mon

备注:这里先只创建两个,另外一个做扩容操作验证

[root@ceph00 ceph]# pwd

/etc/ceph

[root@ceph00 ceph]# ceph-deploy new ceph00 ceph01

//产生ceph.conf ceph-deploy-ceph.log ceph.mon.keyring 三个文件

[root@ceph00 ceph]# ls

ceph.conf ceph-deploy-ceph.log ceph.mon.keyring rbdmap

[root@ceph01 yum.repos.d]# cd /etc/ceph //抽空看一下ceph01节点,这个rbdmap是安装ceph产生的

[root@ceph01 ceph]# ls

rbdmap

[root@ceph02 yum.repos.d]# cd /etc/ceph/

You have new mail in /var/spool/mail/root

[root@ceph02 ceph]# ls

rbdmap

[root@ceph00 ceph]# cat ceph.conf

[global]

fsid = eb1a67de-c665-4305-836d-4ace17bf145c

mon_initial_members = ceph00, ceph01

mon_host = 192.168.254.20,192.168.254.21

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

4.3 初始化mon并收集密钥

[root@ceph00 ceph]# pwd

/etc/ceph

[root@ceph00 ceph]# ceph-deploy mon create-initial

[root@ceph00 ceph]# ls //查看因为此条命令新增的文件

ceph.bootstrap-mds.keyring ceph.bootstrap-rgw.keyring ceph-deploy-ceph.log

ceph.bootstrap-mgr.keyring ceph.client.admin.keyring ceph.mon.keyring

ceph.bootstrap-osd.keyring ceph.conf rbdmap

[root@ceph01 ceph]# ls

ceph.conf rbdmap tmp5u17Ke

[root@ceph00 ceph]# ceph -s //查看状态

cluster:

id: eb1a67de-c665-4305-836d-4ace17bf145c

health: HEALTH_OK

services:

mon: 2 daemons, quorum ceph00,ceph01

mgr: no daemons active

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

4.4 创建osd

[root@ceph00 ceph]# pwd

/etc/ceph

You have new mail in /var/spool/mail/root

[root@ceph00 ceph]# ceph-deploy osd create --data /dev/sdb ceph00

[root@ceph00 ceph]# ceph-deploy osd create --data /dev/sdb ceph01

[root@ceph00 ceph]# ceph -s //查看状态

cluster:

id: eb1a67de-c665-4305-836d-4ace17bf145c

health: HEALTH_WARN

no active mgr

services:

mon: 2 daemons, quorum ceph00,ceph01

mgr: no daemons active

osd: 2 osds: 2 up, 2 in //显示两个osdup

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

4.5 查看osd分配情况

[root@ceph00 ceph]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 1.99799 root default

-3 0.99899 host ceph00

0 hdd 0.99899 osd.0 up 1.00000 1.00000

-5 0.99899 host ceph01

1 hdd 0.99899 osd.1 up 1.00000 1.00000

[root@ceph00 ceph]# ceph osd stat

2 osds: 2 up, 2 in; epoch: e9

4.6 在ceph00,将配置文件和admin密钥下发到ceph00 ceph01

[root@ceph00 ceph]# ls

ceph.bootstrap-mds.keyring ceph.bootstrap-rgw.keyring ceph-deploy-ceph.log

ceph.bootstrap-mgr.keyring ceph.client.admin.keyring ceph.mon.keyring

ceph.bootstrap-osd.keyring ceph.conf rbdmap

[root@ceph01 ceph]# ls

ceph.conf rbdmap tmp5u17Ke

[root@ceph00 ceph]# ceph-deploy admin ceph00 ceph01

[root@ceph00 ceph]# ls

ceph.bootstrap-mds.keyring ceph.bootstrap-rgw.keyring ceph-deploy-ceph.log

ceph.bootstrap-mgr.keyring ceph.client.admin.keyring ceph.mon.keyring

ceph.bootstrap-osd.keyring ceph.conf rbdmap

[root@ceph01 ceph]# ls

ceph.client.admin.keyring ceph.conf rbdmap tmp5u17Ke

4.7 给两个节点的ceph.client.admin.keyring执行权限

[root@ceph00 ceph]# chmod +x /etc/ceph/ceph.client.admin.keyring

[root@ceph00 ceph]# ceph -s

cluster:

id: eb1a67de-c665-4305-836d-4ace17bf145c

health: HEALTH_WARN

no active mgr

services:

mon: 2 daemons, quorum ceph00,ceph01

mgr: no daemons active

osd: 2 osds: 2 up, 2 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 2.0 GiB used, 2.0 TiB / 2.0 TiB avail //得等一会才能出现

pgs:

4.8 创建mgr

[root@ceph00 ceph]# ceph-deploy mgr create ceph00 ceph01

[root@ceph00 ceph]# ceph -s

cluster:

id: eb1a67de-c665-4305-836d-4ace17bf145c

health: HEALTH_WARN

OSD count 2 < osd_pool_default_size 3 //反馈osd节点少,接下来扩容

services:

mon: 2 daemons, quorum ceph00,ceph01

mgr: ceph00(active), standbys: ceph01

osd: 2 osds: 2 up, 2 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 2.0 GiB used, 2.0 TiB / 2.0 TiB avail

pgs:

五:ceph扩容测试

5.1 首先先增加mon

[root@ceph00 ceph]# pwd

/etc/ceph

[root@ceph00 ceph]# ceph-deploy mon add ceph02 //创建ceph02的mon。显示有报错

[ceph02][ERROR ] admin_socket: exception getting command descriptions: [Errno 2] No such file or directory

[ceph02][ERROR ] admin_socket: exception getting command descriptions: [Errno 2] No such file or directory

[root@ceph02 ceph]# ls

ceph.client.admin.keyring ceph.conf rbdmap tmp3nyuhx

[root@ceph00 ceph]# ceph -s

cluster:

id: eb1a67de-c665-4305-836d-4ace17bf145c

health: HEALTH_WARN

OSD count 2 < osd_pool_default_size 3

services:

mon: 2 daemons, quorum ceph00,ceph01

mgr: ceph00(active), standbys: ceph01

osd: 2 osds: 2 up, 2 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 2.0 GiB used, 2.0 TiB / 2.0 TiB avail

pgs:

5.2 然后创建osd

[root@ceph00 ceph]# ceph-deploy osd create --data /dev/sdb ceph02

[root@ceph00 ceph]# ceph -s

//此时健康状态正常,但ceph02的mon并没有显示开启,修改配置文件

cluster:

id: eb1a67de-c665-4305-836d-4ace17bf145c

health: HEALTH_OK

services:

mon: 2 daemons, quorum ceph00,ceph01

mgr: ceph00(active), standbys: ceph01

osd: 3 osds: 3 up, 3 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 3.0 TiB / 3.0 TiB avail

pgs:

[root@ceph00 ceph]# vi ceph.conf //添加ceph02参数

You have new mail in /var/spool/mail/root

mon_initial_members = ceph00, ceph01, ceph02

mon_host = 192.168.254.20,192.168.254.21,192.168.254.22

auth_cluster_required = cephx

[root@ceph00 ceph]# ceph-deploy --overwrite-conf admin ceph01 ceph02 //重写配置文件

[root@ceph01 ceph]# cat ceph.conf //到ceph01节点验证查看

[global]

fsid = eb1a67de-c665-4305-836d-4ace17bf145c

mon_initial_members = ceph00, ceph01, ceph02

mon_host = 192.168.254.20,192.168.254.21,192.168.254.22

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

[root@ceph00 ceph]# ceph-deploy --overwrite-conf config push ceph00 ceph01 ceph02

[root@ceph00 ceph]# systemctl restart ceph-mon.target

//三个节点都重启服务

[root@ceph00 ceph]# ceph -s

cluster:

id: eb1a67de-c665-4305-836d-4ace17bf145c

health: HEALTH_OK

services:

mon: 2 daemons, quorum ceph00,ceph01

mgr: ceph00(active), standbys: ceph01

osd: 3 osds: 3 up, 3 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 3.0 TiB / 3.0 TiB avail

pgs:

[root@ceph00 ceph]# ceph-deploy mgr create ceph02

备注:如果不知道重启mon服务,可以通过如下命令查询

systemctl list-unit-files | grep mon

[root@ceph02 ceph]# chmod +x ceph.client.admin.keyring

六:osd数据恢复

6.1 查看当前状态

[root@ceph00 ceph]# ceph -s

cluster:

id: eb1a67de-c665-4305-836d-4ace17bf145c

health: HEALTH_OK

services:

mon: 2 daemons, quorum ceph00,ceph01

mgr: ceph00(active), standbys: ceph01, ceph02

osd: 3 osds: 3 up, 3 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 3.0 TiB / 3.0 TiB avail

pgs:

[root@ceph00 ceph]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 2.99698 root default

-3 0.99899 host ceph00

0 hdd 0.99899 osd.0 up 1.00000 1.00000

-5 0.99899 host ceph01

1 hdd 0.99899 osd.1 up 1.00000 1.00000

-7 0.99899 host ceph02

2 hdd 0.99899 osd.2 up 1.00000 1.00000

6.2 模拟故障ceph osd out osd.2

[root@ceph00 ceph]# ceph osd out osd.2 //移除osd.2

marked out osd.2.

[root@ceph00 ceph]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 2.99698 root default

-3 0.99899 host ceph00

0 hdd 0.99899 osd.0 up 1.00000 1.00000

-5 0.99899 host ceph01

1 hdd 0.99899 osd.1 up 1.00000 1.00000

-7 0.99899 host ceph02

2 hdd 0.99899 osd.2 up 0 1.00000

[root@ceph00 ceph]# ceph osd crush remove osd.2 //删除osd.2

removed item id 2 name 'osd.2' from crush map

[root@ceph00 ceph]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 1.99799 root default

-3 0.99899 host ceph00

0 hdd 0.99899 osd.0 up 1.00000 1.00000

-5 0.99899 host ceph01

1 hdd 0.99899 osd.1 up 1.00000 1.00000

-7 0 host ceph02

2 0 osd.2 up 0 1.00000

[root@ceph00 ceph]# ceph auth del osd.2 //删除osd.2的认证

updated

[root@ceph00 ceph]# ceph osd tree //此时osd.2没有权重

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 1.99799 root default

-3 0.99899 host ceph00

0 hdd 0.99899 osd.0 up 1.00000 1.00000

-5 0.99899 host ceph01

1 hdd 0.99899 osd.1 up 1.00000 1.00000

-7 0 host ceph02

2 0 osd.2 up 0 1.00000

[root@ceph00 ceph]# ceph osd rm osd.2 //彻底删除osd.2

Error EBUSY: osd.2 is still up; must be down before removal.

[root@ceph00 ceph]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 1.99799 root default

-3 0.99899 host ceph00

0 hdd 0.99899 osd.0 up 1.00000 1.00000

-5 0.99899 host ceph01

1 hdd 0.99899 osd.1 up 1.00000 1.00000

-7 0 host ceph02

2 0 osd.2 up 0 1.00000

6.3 然后到ceph02节点重启osd

[root@ceph02 ceph]# systemctl restart ceph-osd.target

You have new mail in /var/spool/mail/root

[root@ceph02 ceph]# ceph -s

cluster:

id: eb1a67de-c665-4305-836d-4ace17bf145c

health: HEALTH_OK

services:

mon: 2 daemons, quorum ceph00,ceph01

mgr: ceph00(active), standbys: ceph01, ceph02

osd: 3 osds: 2 up, 2 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 2.0 GiB used, 2.0 TiB / 2.0 TiB avail

pgs:

[root@ceph02 ceph]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 1.99799 root default

-3 0.99899 host ceph00

0 hdd 0.99899 osd.0 up 1.00000 1.00000

-5 0.99899 host ceph01

1 hdd 0.99899 osd.1 up 1.00000 1.00000

-7 0 host ceph02

2 0 osd.2 down 0 1.00000

[root@ceph02 ceph]# ceph osd stat

3 osds: 2 up, 2 in; epoch: e16

6.4 恢复OSD到集群中

[root@ceph02 ceph]# df -Th

Filesystem Type Size Used Avail Use% Mounted on

devtmpfs devtmpfs 1.9G 0 1.9G 0% /dev

tmpfs tmpfs 1.9G 0 1.9G 0% /dev/shm

tmpfs tmpfs 1.9G 12M 1.9G 1% /run

tmpfs tmpfs 1.9G 0 1.9G 0% /sys/fs/cgroup

/dev/sda3 xfs 291G 2.0G 290G 1% /

/dev/sr0 iso9660 4.4G 4.4G 0 100% /centosjxy

/dev/sda1 xfs 1014M 169M 846M 17% /boot

tmpfs tmpfs 378M 0 378M 0% /run/user/0

tmpfs tmpfs 1.9G 52K 1.9G 1% /var/lib/ceph/osd/ceph-2

[root@ceph02 ceph]# cd /var/lib/ceph/osd/ceph-2/

[root@ceph02 ceph-2]# ls

activate.monmap bluefs fsid kv_backend mkfs_done ready type

block ceph_fsid keyring magic osd_key require_osd_release whoami

[root@ceph02 ceph-2]# more fsid

4cbf41d0-5309-4b7c-a392-59cbc92e12d3 //uuid

6.5 依据uuid创建

[root@ceph02 ceph-2]# ceph osd create 4cbf41d0-5309-4b7c-a392-59cbc92e12d3

2 //确定为osd.2

6.6 增加权限

[root@ceph02 ceph-2]# ceph auth add osd.2 osd 'allow *' mon 'allow rwx' -i /var/lib/ceph/osd/ceph-2/keyring

added key for osd.2

[root@ceph02 ceph-2]# ceph osd crush add 2 0.99899 host=ceph02

set item id 2 name 'osd.2' weight 0.99899 at location {host=ceph02} to crush map

[root@ceph02 ceph-2]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 2.99696 root default

-3 0.99899 host ceph00

0 hdd 0.99899 osd.0 up 1.00000 1.00000

-5 0.99899 host ceph01

1 hdd 0.99899 osd.1 up 1.00000 1.00000

-7 0.99898 host ceph02

2 hdd 0.99898 osd.2 up 0 1.00000

[root@ceph02 ceph-2]# ceph osd in osd.2

marked in osd.2.

[root@ceph02 ceph-2]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 2.99696 root default

-3 0.99899 host ceph00

0 hdd 0.99899 osd.0 up 1.00000 1.00000

-5 0.99899 host ceph01

1 hdd 0.99899 osd.1 up 1.00000 1.00000

-7 0.99898 host ceph02

2 hdd 0.99898 osd.2 up 1.00000 1.00000

6.7 查看ceph状态

[root@ceph02 ceph-2]# ceph -s

cluster:

id: eb1a67de-c665-4305-836d-4ace17bf145c

health: HEALTH_OK

services:

mon: 2 daemons, quorum ceph00,ceph01

mgr: ceph00(active), standbys: ceph01, ceph02

osd: 3 osds: 3 up, 3 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 3.0 TiB / 3.0 TiB avail

pgs:

6.8 为了保险,可以每个节点都重启一下

[root@ceph02 ceph-2]# systemctl restart ceph-osd.target

[root@ceph02 ceph-2]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 2.99696 root default

-3 0.99899 host ceph00

0 hdd 0.99899 osd.0 up 1.00000 1.00000

-5 0.99899 host ceph01

1 hdd 0.99899 osd.1 up 1.00000 1.00000

-7 0.99898 host ceph02

2 hdd 0.99898 osd.2 up 1.00000 1.00000

七:优化ceph内部通信网段

[root@ceph00 ceph]# vi /etc/ceph/ceph.conf

public network = 192.168.254.0/24 //添加

[root@ceph00 ceph]# ceph-deploy --overwrite-conf admin ceph01 ceph02

[root@ceph00 ceph]# systemctl restart ceph-mon.target

[root@ceph00 ceph]# systemctl restart ceph-osd.target

[root@ceph00 ceph]# systemctl restart ceph-mgr.target

[root@ceph02 ceph-2]# ceph -s

cluster:

id: eb1a67de-c665-4305-836d-4ace17bf145c

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph00,ceph01,ceph02

mgr: ceph00(active), standbys: ceph02, ceph01

osd: 3 osds: 3 up, 3 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 3.0 TiB / 3.0 TiB avail

pgs:

备注:查看ceph命令

ceph --help

ceph osd --help

八:创建pool

[root@ceph00 ceph]# ceph osd pool create cinder 64

pool 'cinder' created

[root@ceph00 ceph]# ceph osd pool create nova 64

pool 'nova' created

[root@ceph00 ceph]# ceph osd pool create glance 64

pool 'glance' created

[root@ceph00 ceph]# ceph osd pool ls

cinder

nova

glance

[root@ceph00 ceph]# ceph osd pool rm cinder cinder --yes-i-really-really-mean-it

Error EPERM: pool deletion is disabled; you must first set the mon_allow_pool_delete config option to true before you can destroy a pool

[root@ceph00 ceph]# vi /etc/ceph/ceph.conf

mon_allow_pool_delete = true

[root@ceph00 ceph]# systemctl restart ceph-mon.target

[root@ceph00 ceph]# ceph osd pool rm cinder cinder --yes-i-really-really-mean-it

pool 'cinder' removed

[root@ceph00 ceph]# ceph osd pool ls

nova

glance

[root@ceph00 ceph]# ceph osd pool rename nova nova999

pool 'nova' renamed to 'nova999'

[root@ceph00 ceph]# ceph osd pool ls

nova999

glance