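Hands-on: Building a Ceph Cluster + OpenStack Rocky

Contents

- Part 1: Ceph base environment

- Part 2: Building the Ceph cluster

- Part 3: Installation steps

- 3.1 Disable the firewall on every node

- 3.2 Install python-setuptools on every node

- 3.3 Create the Ceph configuration directory on the OpenStack controller node

- 3.4 Install the ceph-deploy tool on the controller node

- 3.5 Install the Ceph packages on all three nodes

- 3.6 Use ceph-deploy to create three mons (from /etc/ceph on the controller node)

- 3.7 Initialize the mons and gather the keys (all three nodes; from /etc/ceph on the controller node)

- 3.8 Create the OSDs (from /etc/ceph on the controller node)

- 3.9 Use ceph-deploy to push the configuration file and admin keyring to ct, comp1 and comp2

- 3.10 Make the admin keyring readable on each node

- 3.11 Check the Ceph cluster status

- 3.12 Create the mgr service (from /etc/ceph on the controller node)

- 3.13 Create the three pools used by OpenStack (volumes, vms, images); 64 is the number of PGs per pool

- 3.14 Check the Ceph status

- Part 4: Installing the Ceph management dashboard

- 4.1 Check the Ceph status (make sure no errors are reported)

- 4.2 Enable the dashboard module

- 4.3 Create an HTTPS certificate

- 4.4 Check the mgr services

- 4.5 Open the Ceph dashboard in a browser

- 4.6 Create a username and password

- 4.7 Log in

- Part 5: Preparing Ceph for the OpenStack integration

- 5.1 Create client.cinder on the controller node and set its capabilities

- 5.2 Create client.glance on the controller node and set its capabilities

- 5.3 Write out the glance keyring; glance runs on the controller node, so it does not need to be sent to other nodes

- 5.4 Do the same for client.cinder; cinder is also installed on the controller by default, so it does not need to be copied elsewhere (if cinder runs on another node, run the first command there)

- 5.5 Also send the client.cinder key to the compute nodes

- 5.6 On each compute node running nova-compute, add the temporary key file to libvirt, then delete it

- 5.6.1 On comp1

- 5.6.2 On comp2

- 5.7 Tag the pools with the rbd application

- Part 6: Integrating Ceph with Glance

- 6.1 Back up the configuration file

- 6.2 Modify the parameters

- 6.3 Look up the glance user to match the settings above

- 6.4 Restart the openstack-glance-api service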
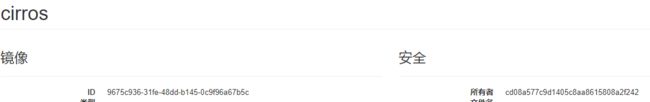

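- 6.5 Upload an image as a test

- 6.6 Check from the ct node

- 6.7 Confirm that no images remain in the old local directory

- 6.8 Summary of common commands; remember to enable every service at boot

- Part 7: Integrating Ceph with Cinder

- 7.1 Back up cinder.conf

- 7.2 Modify the configuration file

- 7.3 Restart the cinder service

- 7.4 List the cinder volume types

- 7.5 Create the cinder volume type for the Ceph backend from the command line

- 7.6 Set the backend name for the volume type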

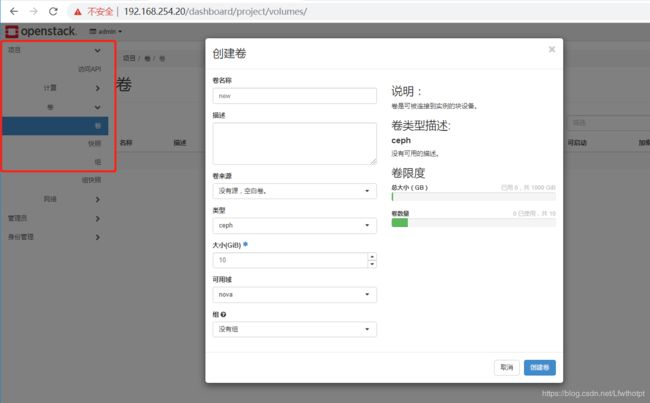

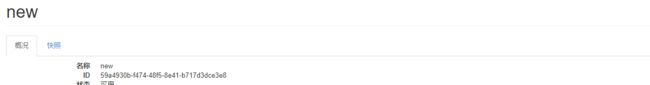

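- 7.7 Create a volume

- 7.8 Check the created volume

- 7.9 Enable at boot

- Part 8: Integrating Ceph with Nova

- 8.1 Back up the configuration file (on both compute nodes)

- 8.2 Modify the configuration file

- 8.3 Install libvirt (on both compute nodes)

- 8.4 Edit ceph.conf on the compute nodes (on both compute nodes)

- 8.5 Distribute the keyring from /etc/ceph/ on the controller node

- 8.6 Restart services on the compute nodes

- 8.7 Test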

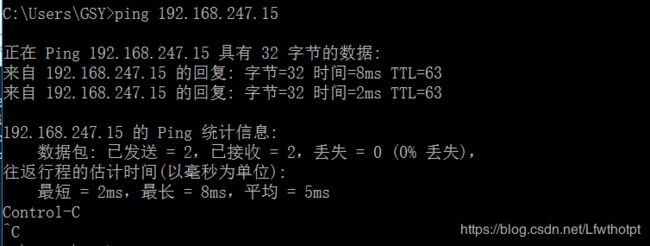

- Part 9: Testing live migration

- 9.1 Use a raw-format image (convert the image first)

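Preface:

Ceph: an open-source distributed storage system. It mainly provides object storage, block device storage, and file system services. Ceph's core components are Ceph OSDs, Monitors, Managers, and MDSs. A Ceph storage cluster needs at least one Ceph Monitor, one Ceph Manager, and one Ceph OSD (object storage daemon). A Ceph Metadata Server is also required when running Ceph File System clients.

Ceph OSDs: The Ceph OSD daemon (ceph-osd) stores data and handles data replication, recovery, backfilling, and rebalancing. It also provides monitoring information to the Ceph Monitors by checking the heartbeats of other OSD daemons. Redundancy and high availability normally require at least 3 Ceph OSDs. When a Ceph storage cluster is set to keep 2 replicas, at least 2 OSD daemons are needed for the cluster to reach the active+clean state (Ceph keeps 3 replicas by default, but you can change the replica count).

Monitors: The Ceph Monitor (ceph-mon) maintains the maps that describe the cluster state, including the monitor map, the OSD map, the placement group (PG) map, and the CRUSH map. Ceph keeps a history of every state change in the Monitors, OSDs, and PGs (each version is called an epoch). Monitors are also responsible for managing authentication between daemons and clients. Redundancy and high availability normally require at least three monitors.

Managers: The Ceph Manager daemon (ceph-mgr) tracks runtime metrics and the current state of the Ceph cluster, including storage utilization, current performance metrics, and system load. It also hosts Python-based modules that manage and expose Ceph cluster information, including the web-based Ceph Manager Dashboard and a REST API. High availability normally requires at least two managers.

MDSs: The Ceph Metadata Server (MDS) stores metadata for the Ceph File System (Ceph Block Devices and Ceph Object Storage do not use an MDS). Metadata servers let users of the POSIX file system run basic commands such as ls and find without placing a load on the Ceph storage cluster.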

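Part 1: Ceph base environment

(configured on top of an existing OpenStack Rocky deployment)

1. Controller node (ct)

CPU: 2 cores, 2 threads, CPU virtualization enabled

Memory: 6 GB; disks: 300 GB + 1024 GB (the 1024 GB disk serves as Ceph block storage)

NICs: vm1 - 192.168.254.10, nat - 192.168.247.200

OS: CentOS 7.5 (1804), minimal install

2. Compute node 1 (comp1)

CPU: 2 cores, 2 threads, CPU virtualization enabled

Memory: 8 GB; disks: 300 GB + 1024 GB (the 1024 GB disk serves as Ceph block storage)

NIC: vm1 - 192.168.254.11

OS: CentOS 7.5 (1804), minimal install

3. Compute node 2 (comp2)

CPU: 2 cores, 2 threads, CPU virtualization enabled

Memory: 8 GB; disks: 300 GB + 1024 GB (the 1024 GB disk serves as Ceph block storage)

NIC: vm1 - 192.168.254.12

OS: CentOS 7.5 (1804), minimal install

Everything is installed with YUM.

Note: before deploying Ceph, all existing storage-related data must be cleaned up:

instances, images, and cinder volumes must all be deleted from the dashboard.

Disable the firewall on all three nodes: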

systemctl stop iptables

systemctl disable iptables

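Part 2: Building the Ceph cluster

Ceph needs passwordless SSH between the nodes. It was already set up for the earlier OpenStack deployment, so it is not repeated here.

Ceph overview:

Data is distributed across all the nodes instead of being stored on a single node.

OpenStack + Ceph is currently the mainstream combination.

###Main uses###

Its main functions are object storage, block storage, and a file system.

Ceph----Cinder ● block storage: provides data disks for cloud instances

Ceph----Swift ● object storage: a network drive for storing data

Ceph----Glance ● stores images

Ceph----Nova ● stores instance disks

Ceph----file system: exposes Ceph storage to other services, similar to NFS or Samba

#####Ceph cluster characteristics####

Ceph is a distributed cluster system that needs at least one monitor and 2 OSD daemons.

Running Ceph File System clients also requires an MDS (Metadata Server).

###What is an OSD####

An OSD stores data and handles replication, recovery, backfilling, and rebalancing. It also checks the heartbeats of other OSD daemons and reports monitoring information to the monitors.

###What is a Monitor####

A monitor tracks the state of the whole cluster and maintains the cluster maps (OSD map, PG map, CRUSH map, and so on). Note that when the cluster keeps 2 replicas, at least 2 OSDs are needed before it can reach a healthy state.

●●●Passwordless SSH must be set up between the nodes●●●

●●●Set the hostnames and /etc/hosts, and disable the firewall●●●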

Part 3: Installation steps

3.1 Disable the firewall on every node

[root@ct ~(keystone_admin)]# systemctl stop iptables

[root@ct ~(keystone_admin)]# systemctl disable iptables

Removed symlink /etc/systemd/system/basic.target.wants/iptables.service.

[root@ct ~(keystone_admin)]# systemctl status iptables

3.2 Install python-setuptools on every node

[root@ct ~(keystone_admin)]# yum install python-setuptools -y

//Before installing, upload the local package repository first

[root@ct ~(keystone_admin)]# cd /opt

[root@ct opt(keystone_admin)]# ls

openstack_rocky openstack_rocky.tar.gz

[root@ct opt(keystone_admin)]# rm -rf openstack_rocky*

[root@ct opt(keystone_admin)]# ls

//Uploaded here with the FinalShell tool

[root@ct opt(keystone_admin)]# ll

total 1048512

-rw-r--r--. 1 root root 538306858 Mar 8 19:55 openstack_rocky.tar.gz

[root@ct opt(keystone_admin)]# tar xzvf openstack_rocky.tar.gz

[root@ct opt(keystone_admin)]# yum makecache

[root@ct opt(keystone_admin)]# yum -y install python-setuptools

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

Package python-setuptools-0.9.8-7.el7.noarch is obsoleted by python2-setuptools-38.4.0-3.el7.noarch which is already installed

Nothing to do

3.3 Create the Ceph configuration directory on the OpenStack controller node

[root@ct yum.repos.d(keystone_admin)]# cd ~

[root@ct ~(keystone_admin)]# mkdir -p /etc/ceph

3.4 Install the ceph-deploy tool on the controller node

[root@ct ~(keystone_admin)]# yum -y install ceph-deploy

3.5 Install the Ceph packages on all three nodes

[root@ct ~(keystone_admin)]# yum -y install ceph
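Steps 3.1, 3.2 and 3.5 must be repeated on every node, but the transcript only shows ct. Because passwordless SSH is already in place, the steps can be driven from ct in a loop. The following is a minimal sketch. It assumes the hostnames ct, comp1 and comp2 resolve and that root can SSH to each of them, including ct itself. `on_all_nodes` is a hypothetical helper, not part of ceph-deploy.

```shell
# Hostnames used in this walkthrough; adjust for your environment.
NODES="ct comp1 comp2"

# on_all_nodes CMD: run one command string as root on every node over SSH.
# Stops at the first node where the command fails.
on_all_nodes() {
  local node
  for node in $NODES; do
    echo "=== $node ==="
    ssh "root@$node" "$1" || { echo "failed on $node" >&2; return 1; }
  done
}
```

For example, `on_all_nodes 'systemctl stop iptables; systemctl disable iptables'` disables the firewall on all nodes, and `on_all_nodes 'yum -y install python-setuptools ceph'` installs the packages. Stopping at the first failure makes it obvious which node still needs attention.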

3.6 Use ceph-deploy to create three mons (run from /etc/ceph on the controller node)

[root@ct ~(keystone_admin)]# cd /etc/ceph

[root@ct ceph(keystone_admin)]# ceph-deploy new ct comp1 comp2   # create the cluster with three mon nodes, given by hostname

[root@ct ceph(keystone_admin)]# cat /etc/ceph/ceph.conf   # view the configuration file generated on ct

[global]

//global options

fsid = 15200f4f-1a57-46c5-848f-9b8af9747e54

mon_initial_members = ct, comp1, comp2

//the mon members

mon_host = 192.168.254.20,192.168.254.21,192.168.254.22

//IP addresses of the mon hosts

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

3.7 Initialize the mons and gather the keys (all three nodes; run from /etc/ceph on the controller node)

[root@ct ceph(keystone_admin)]# cd /etc/ceph

[root@ct ceph(keystone_admin)]# ceph-deploy mon create-initial   # deploy the initial mons with ceph-deploy and gather the keys

[root@ct ceph(keystone_admin)]# ls

ceph.bootstrap-mds.keyring ceph.bootstrap-osd.keyring ceph.client.admin.keyring ceph-deploy-ceph.log rbdmap

ceph.bootstrap-mgr.keyring ceph.bootstrap-rgw.keyring ceph.conf ceph.mon.keyring

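3.8 Create the OSDs (from /etc/ceph on the controller node)

Create one OSD per node by specifying the node and the data disk (/dev/sdb here, the disk reserved for Ceph). The pools created in 3.13 are then used as RBD block storage.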

[root@ct ceph(keystone_admin)]# cd /etc/ceph

[root@ct ceph(keystone_admin)]# ceph-deploy osd create --data /dev/sdb ct

[root@ct ceph(keystone_admin)]# ceph-deploy osd create --data /dev/sdb comp1

[root@ct ceph(keystone_admin)]# ceph-deploy osd create --data /dev/sdb comp2

3.9 Use ceph-deploy to push the configuration file and the admin keyring to ct, comp1 and comp2

[root@ct ceph(keystone_admin)]# ceph-deploy admin ct comp1 comp2

3.10 Make the admin keyring readable on each node

[root@ct ceph(keystone_admin)]# chmod +r /etc/ceph/ceph.client.admin.keyring

3.11 Check the Ceph cluster status

The health status is HEALTH_WARN because no mgr is running yet.

[root@ct ceph(keystone_admin)]# ceph -s

cluster:

id: 15200f4f-1a57-46c5-848f-9b8af9747e54

health: HEALTH_WARN

no active mgr

services:

mon: 3 daemons, quorum ct,comp1,comp2

mgr: no daemons active

osd: 3 osds: 3 up, 3 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

[root@ct ceph(keystone_admin)]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 3.00000 root default

-5 1.00000 host comp1

1 hdd 1.00000 osd.1 up 1.00000 1.00000

-7 1.00000 host comp2

2 hdd 1.00000 osd.2 up 1.00000 1.00000

-3 1.00000 host ct

0 hdd 1.00000 osd.0 up 1.00000 1.00000

3.12 Create the mgr service (from /etc/ceph on the controller node)

After this, the health status changes to HEALTH_OK.

[root@ct ceph(keystone_admin)]# ceph-deploy mgr create ct comp1 comp2

[root@ct ceph(keystone_admin)]# ceph -s

cluster:

id: 15200f4f-1a57-46c5-848f-9b8af9747e54

health: HEALTH_OK

services:

mon: 3 daemons, quorum ct,comp1,comp2

mgr: ct(active), standbys: comp1, comp2

osd: 3 osds: 3 up, 3 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 3.0 TiB / 3.0 TiB avail

pgs:
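In 3.11 the cluster reports HEALTH_WARN until a mgr exists. When these steps are scripted, it helps to wait for HEALTH_OK before creating pools. The following is a minimal sketch. It assumes the admin keyring from 3.9/3.10 is readable, so that `ceph health` works on the node. The helper name and the 5-second poll interval are arbitrary choices.

```shell
# wait_for_health_ok [TIMEOUT]: poll `ceph health` every 5 s until it reports
# HEALTH_OK; give up after TIMEOUT seconds (default 300).
wait_for_health_ok() {
  local timeout=${1:-300} waited=0 status
  while :; do
    status=$(ceph health 2>/dev/null)
    case $status in
      HEALTH_OK*) echo "$status"; return 0 ;;
    esac
    if [ "$waited" -ge "$timeout" ]; then
      echo "not healthy after ${timeout}s: ${status:-no answer from ceph}" >&2
      return 1
    fi
    sleep 5
    waited=$((waited + 5))
  done
}
```

For example: `wait_for_health_ok 600 && ceph osd pool create volumes 64`.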

3.13 Create the three pools used by OpenStack (volumes, vms, images); 64 is the number of placement groups (pg_num) for each pool

[root@ct ceph(keystone_admin)]# ceph osd pool create volumes 64

pool 'volumes' created


[root@ct ceph(keystone_admin)]# ceph osd pool create vms 64

pool 'vms' created

[root@ct ceph(keystone_admin)]# ceph osd pool create images 64

pool 'images' created

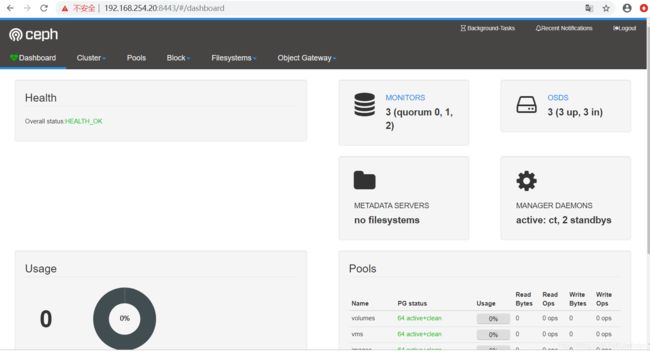
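Why 64? A commonly cited rule of thumb from older Ceph documentation targets roughly 100 PGs per OSD. Under that rule, total PGs ≈ (number of OSDs × 100) / replica count, rounded up to a power of two, and the total is then shared among the pools. The sketch below only does that arithmetic; treat it as a starting point, not a sizing tool. With 3 OSDs and the default 3 replicas, the rule gives 128 PGs in total. The 3 × 64 = 192 PGs created here match the "3 pools, 192 pgs" line in 4.1. That means each OSD holds 192 PG replicas, a number worth comparing against your release's `mon_max_pg_per_osd` limit.

```shell
# next_pow2 N: smallest power of two that is >= N.
next_pow2() {
  local n=$1 p=1
  while [ "$p" -lt "$n" ]; do p=$((p * 2)); done
  echo "$p"
}

# pg_total OSDS SIZE: rule-of-thumb PG total for the whole cluster,
# ceil(OSDS * 100 / SIZE) rounded up to a power of two.
pg_total() {
  local osds=$1 size=$2
  next_pow2 $(( (osds * 100 + size - 1) / size ))
}

# pgs_per_osd POOLS PG_NUM SIZE OSDS: PG replicas each OSD ends up holding,
# assuming the PGs are spread evenly.
pgs_per_osd() {
  local pools=$1 pg_num=$2 size=$3 osds=$4
  echo $(( pools * pg_num * size / osds ))
}
```

`pg_total 3 3` prints 128 and `pgs_per_osd 3 64 3 3` prints 192.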

3.14 Check the Ceph status

[root@ct ceph(keystone_admin)]# ceph mon stat

e1: 3 mons at {comp1=192.168.254.21:6789/0,comp2=192.168.254.22:6789/0,ct=192.168.254.20:6789/0}, election epoch 12, leader 0 ct, quorum 0,1,2 ct,comp1,comp2

[root@ct ceph(keystone_admin)]# ceph osd status

+----+-------+-------+-------+--------+---------+--------+---------+-----------+

| id | host | used | avail | wr ops | wr data | rd ops | rd data | state |

+----+-------+-------+-------+--------+---------+--------+---------+-----------+

| 0 | ct | 1026M | 1022G | 0 | 0 | 0 | 0 | exists,up |

| 1 | comp1 | 1026M | 1022G | 0 | 0 | 0 | 0 | exists,up |

| 2 | comp2 | 1026M | 1022G | 0 | 0 | 0 | 0 | exists,up |

+----+-------+-------+-------+--------+---------+--------+---------+-----------+

[root@ct ceph(keystone_admin)]# ceph osd lspools

1 volumes

2 vms

3 images

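The Ceph cluster is now built. Next, install the management dashboard.

Part 4: Installing the Ceph management dashboard

4.1 Check the Ceph status (make sure no errors are reported)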

[root@ct ceph(keystone_admin)]# ceph -s

cluster:

id: 15200f4f-1a57-46c5-848f-9b8af9747e54

health: HEALTH_OK

services:

mon: 3 daemons, quorum ct,comp1,comp2

mgr: ct(active), standbys: comp1, comp2

osd: 3 osds: 3 up, 3 in

data:

pools: 3 pools, 192 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 3.0 TiB / 3.0 TiB avail

pgs: 192 active+clean

4.2 Enable the dashboard module

[root@ct ceph(keystone_admin)]# ceph mgr module enable dashboard

4.3 Create a self-signed HTTPS certificate

[root@ct ceph(keystone_admin)]# ceph dashboard create-self-signed-cert

Self-signed certificate created

4.4 Check the mgr services

[root@ct ceph(keystone_admin)]# ceph mgr services

{

"dashboard": "https://ct:8443/"

}

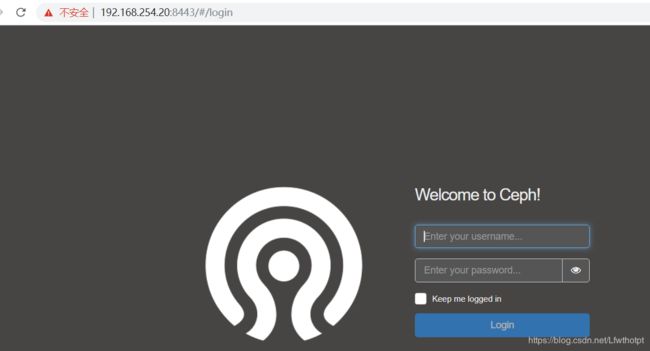

4.5 Open the Ceph dashboard (https://ct:8443/) in a browser

4.6 Create a username and password

[root@ct ceph(keystone_admin)]# ceph dashboard set-login-credentials admin 123

Username and password updated


4.7 Log in

Part 5: Preparing Ceph for the OpenStack integration

5.1 On the controller node, create client.cinder and set its capabilities

[root@ct ceph(keystone_admin)]# ceph auth get-or-create client.cinder mon 'allow r' osd 'allow class-read object_prefix rbd_children,allow rwx pool=volumes,allow rwx pool=vms,allow rx pool=images'

[client.cinder]

key = AQAk7mReJugbHRAATQw4YZ2VwBs9P0qpnW4WEQ==


5.2 On the controller node, create client.glance and set its capabilities

[root@ct ceph(keystone_admin)]# ceph auth get-or-create client.glance mon 'allow r' osd 'allow class-read object_prefix rbd_children,allow rwx pool=images'

[client.glance]

key = AQBo7mRe5PMgOhAAeFsRcPdBpHsdQ+MTdAo9mQ==

5.3 Write out the glance keyring. Glance is installed on the controller node itself, so the keyring does not need to be sent to any other node

[root@ct ceph(keystone_admin)]# ceph auth get-or-create client.glance |tee /etc/ceph/ceph.client.glance.keyring

[client.glance]

key = AQBo7mRe5PMgOhAAeFsRcPdBpHsdQ+MTdAo9mQ==


[root@ct ceph(keystone_admin)]# chown glance.glance /etc/ceph/ceph.client.glance.keyring

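5.4 Do the same for client.cinder. Cinder is also installed on the controller by default, so its keyring does not need to be copied to other nodes; if cinder runs on another node, run the first command below on that node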

[root@ct ceph(keystone_admin)]# ceph auth get-or-create client.cinder | tee /etc/ceph/ceph.client.cinder.keyring

[client.cinder]

key = AQAk7mReJugbHRAATQw4YZ2VwBs9P0qpnW4WEQ==

[root@ct ceph(keystone_admin)]# chown cinder.cinder /etc/ceph/ceph.client.cinder.keyring

5.5 The client.cinder key must also be sent to the compute nodes

The compute nodes store the client.cinder user's key in libvirt, so run the following:

[root@ct ceph(keystone_admin)]# ceph auth get-key client.cinder |ssh comp1 tee client.cinder.key

AQAk7mReJugbHRAATQw4YZ2VwBs9P0qpnW4WEQ==

[root@ct ceph(keystone_admin)]#

[root@ct ceph(keystone_admin)]# ceph auth get-key client.cinder |ssh comp2 tee client.cinder.key

AQAk7mReJugbHRAATQw4YZ2VwBs9P0qpnW4WEQ==

[root@ct ceph(keystone_admin)]#

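5.6 On each compute node running nova-compute, add the temporary key file to libvirt, then delete it

Configure the libvirt secret

KVM virtual machines access the Ceph cluster through librbd.

librbd in turn needs an authenticated Ceph account to access Ceph,

so libvirt has to be given that account's credentials here.

5.6.1 On comp1

⑴ Generate a UUID; the same UUID is used on both comp1 and comp2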

[root@comp1 ceph]# uuidgen

05af6de3-ddb0-49a1-add9-255f14dd9a48

⑵ Create a secret file with the following content, making sure to use the UUID generated in the previous step

[root@comp1 ceph]# cd /root

[root@comp1 ~]# ls

anaconda-ks.cfg client.cinder.key

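[root@comp1 ~]# cat > secret.xml <<EOF

<secret ephemeral='no' private='no'>

<uuid>05af6de3-ddb0-49a1-add9-255f14dd9a48</uuid>

<usage type='ceph'>

<name>client.cinder secret</name>

</usage>

</secret>

EOF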

⑶ Define the secret and save it; the following steps use this secret

[root@comp1 ~]# virsh secret-define --file secret.xml

Secret 05af6de3-ddb0-49a1-add9-255f14dd9a48 created

[root@comp1 ~]# ls

anaconda-ks.cfg client.cinder.key secret.xml

⑷ Set the secret value and delete the temporary files

[root@comp1 ~]# virsh secret-set-value --secret 05af6de3-ddb0-49a1-add9-255f14dd9a48 --base64 $(cat client.cinder.key) && rm -rf client.cinder.key secret.xml

Secret value set

[root@comp1 ~]# ls

anaconda-ks.cfg

5.6.2 On comp2

[root@comp2 ceph]# cd /root

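[root@comp2 ~]# cat > secret.xml <<EOF

<secret ephemeral='no' private='no'>

<uuid>05af6de3-ddb0-49a1-add9-255f14dd9a48</uuid>

<usage type='ceph'>

<name>client.cinder secret</name>

</usage>

</secret>

EOF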

[root@comp2 ~]# ls

anaconda-ks.cfg client.cinder.key secret.xml

[root@comp2 ~]# virsh secret-define --file secret.xml

Secret 05af6de3-ddb0-49a1-add9-255f14dd9a48 created

[root@comp2 ~]# ls

anaconda-ks.cfg client.cinder.key secret.xml

[root@comp2 ~]# virsh secret-set-value --secret 05af6de3-ddb0-49a1-add9-255f14dd9a48 --base64 $(cat client.cinder.key) && rm -rf client.cinder.key secret.xml

Secret value set

[root@comp2 ~]# ls

anaconda-ks.cfg
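Steps ⑵ to ⑷ are identical on comp1 and comp2 apart from the prompt, so they can be wrapped in a function. The following is a minimal sketch. It assumes it is run as root on a compute node with libvirtd running and with the key file from 5.5 in /root. The function names are hypothetical. Generate the UUID only once (step ⑴) and reuse it, because cinder.conf and nova.conf later refer to it as rbd_secret_uuid.

```shell
# secret_xml UUID: print the libvirt secret definition for client.cinder.
secret_xml() {
  cat <<EOF
<secret ephemeral='no' private='no'>
  <uuid>$1</uuid>
  <usage type='ceph'>
    <name>client.cinder secret</name>
  </usage>
</secret>
EOF
}

# define_cinder_secret UUID [KEYFILE]: define the secret, load the base64 key
# into it, then delete the key file and the temporary XML file.
define_cinder_secret() {
  local uuid=$1 keyfile=${2:-/root/client.cinder.key} xml rc
  [ -s "$keyfile" ] || { echo "missing key file $keyfile" >&2; return 1; }
  xml=$(mktemp) || return 1
  secret_xml "$uuid" > "$xml"
  virsh secret-define --file "$xml" &&
    virsh secret-set-value --secret "$uuid" --base64 "$(cat "$keyfile")" &&
    rm -f "$keyfile"
  rc=$?
  rm -f "$xml"
  return "$rc"
}
```

On each compute node, run `define_cinder_secret 05af6de3-ddb0-49a1-add9-255f14dd9a48`; `virsh secret-list` should then list that UUID. The key file is only deleted once libvirt has accepted the value, so a failed run can simply be retried.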

5.7 Tag the pools with the rbd application

Until each pool has an application enabled, ceph -s reports a HEALTH_WARN about it.

[root@ct ceph(keystone_admin)]# ceph osd pool application enable vms rbd

[root@ct ceph(keystone_admin)]# ceph osd pool application enable images rbd

[root@ct ceph(keystone_admin)]# ceph osd pool application enable volumes rbd

Part 6: Integrating Ceph with Glance

Log in to ct, the node where glance runs, and make the changes there.

6.1 Back up the configuration file

[root@ct ceph(keystone_admin)]# cd /etc/glance/

[root@ct glance(keystone_admin)]# ls

glance-api.conf glance-image-import.conf glance-scrubber.conf metadefs rootwrap.conf schema-image.json

glance-cache.conf glance-registry.conf glance-swift.conf policy.json rootwrap.d

[root@ct glance(keystone_admin)]# cp /etc/glance/glance-api.conf /etc/glance/glance-api.conf.bak

6.2 Modify the parameters

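[root@ct glance(keystone_admin)]# vi /etc/glance/glance-api.conf

//All of the following options are in the [glance_store] section:

stores=rbd

//Change the store type to rbd

default_store=rbd

//Change the default store to rbd

#filesystem_store_datadir=/var/lib/glance/images/

//Comment out the local filesystem store

rbd_store_chunk_size = 8

rbd_store_pool = images

//Uncomment

rbd_store_user = glance

//Uncomment; this is the Ceph user client.glance created in 5.2

rbd_store_ceph_conf = /etc/ceph/ceph.conf

//Uncomment and point it at the Ceph configuration file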
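The stock glance-api.conf contains every option commented out, so an edit made on the wrong line is easy to miss. The following sketch is a quick check after editing. It prints only the uncommented assignments for the given keys and reports any key that is still unset. It does not check which section a line is in; it assumes each option appears once, under [glance_store]. The function name is hypothetical.

```shell
# show_opts FILE KEY...: print the uncommented "KEY = value" line(s) for each
# KEY. Keys that are still commented out or absent are reported on stderr.
show_opts() {
  local file=$1 key
  shift
  for key in "$@"; do
    grep -E "^[[:space:]]*${key}[[:space:]]*=" "$file" ||
      echo "not set: $key" >&2
  done
}
```

For example, `show_opts /etc/glance/glance-api.conf stores default_store rbd_store_pool rbd_store_user rbd_store_ceph_conf filesystem_store_datadir` should print the five rbd-related lines and report `filesystem_store_datadir` as not set.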

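6.3 Look up the glance user to match the settings above (note that rbd_store_user = glance refers to the Ceph user client.glance created in 5.2; the Keystone user listed here only shares the name)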

[root@ct glance(keystone_admin)]# source ~/keystonerc_admin

[root@ct glance(keystone_admin)]# openstack user list |grep glance

| a23b8bc722db436aad006075702315ee | glance |

6.4 Restart the openstack-glance-api service

[root@ct glance(keystone_admin)]# systemctl restart openstack-glance-api

You can follow the log files:

tail -f /var/log/glance/api.log

tail -f /var/log/glance/registry.log

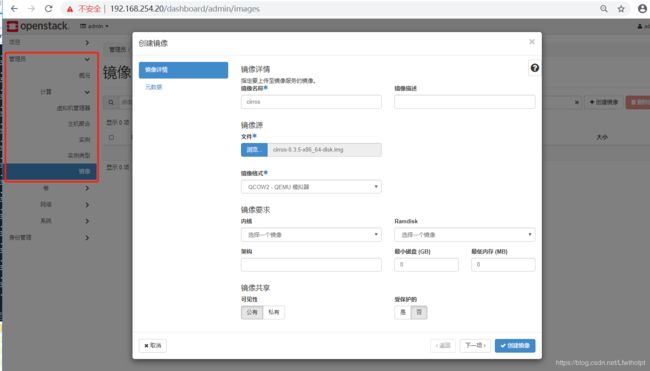

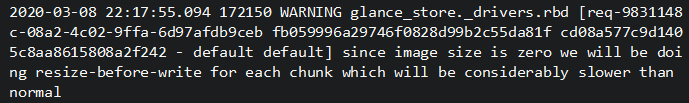

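6.5 Upload an image as a test

Wait for the upload to finish.

The upload now shows up as an entry in the log file.

Refresh the page.

6.6 Check from the ct node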

[root@ct ~]# ceph df

GLOBAL:

SIZE AVAIL RAW USED %RAW USED

3.0 TiB 3.0 TiB 3.0 GiB 0.10

POOLS:

NAME ID USED %USED MAX AVAIL OBJECTS

volumes 1 0 B 0 972 GiB 0

vms 2 0 B 0 972 GiB 0

images 3 13 MiB 0 972 GiB 8

[root@ct ~]# rbd ls images

9675c936-31fe-48dd-b145-0c9f96a67b5c

6.7 Confirm that no images remain in the old local directory

[root@ct ~]# cd /var/lib/glance/images

[root@ct images]# ls

[root@ct images]#

6.8 Summary of common commands; remember to enable every service at boot

systemctl stop ceph-mon.target

systemctl restart ceph-mon.target

systemctl status ceph-mon.target

systemctl enable ceph-mon.target

systemctl stop ceph-mgr.target

systemctl restart ceph-mgr.target

systemctl status ceph-mgr.target

systemctl enable ceph-mgr.target

systemctl restart ceph-osd.target

systemctl status ceph-osd.target

systemctl enable ceph-osd.target

ceph osd pool application enable vms rbd

ceph osd pool application enable images rbd

ceph osd pool application enable volumes rbd
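The systemctl lines above cover a single node. Every node in this cluster runs a mon, a mgr and an OSD, so the enable step has to happen on ct, comp1 and comp2. The following is a minimal sketch to run on each node. The function name is hypothetical, and the function only handles the three targets listed above.

```shell
# enable_ceph_targets: enable the mon/mgr/osd systemd targets at boot on this
# node and warn about any target that is not currently active.
enable_ceph_targets() {
  local t rc=0
  for t in ceph-mon.target ceph-mgr.target ceph-osd.target; do
    systemctl enable "$t" || rc=1
    if ! systemctl is-active --quiet "$t"; then
      echo "$t is not active on $(hostname)" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

A non-zero return value means at least one target failed to enable or is not running on that node.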

Part 7: Integrating Ceph with Cinder

7.1 Back up cinder.conf

[root@ct images]# cd /etc/cinder/


[root@ct cinder]# ls

api-paste.ini cinder.conf resource_filters.json rootwrap.conf rootwrap.d volumes

[root@ct cinder]# cp /etc/cinder/cinder.conf /etc/cinder/cinder.conf.bak

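7.2 Modify the configuration file

LVM and Ceph can be used together as backends.

If enabled_backends lists two backend types (ceph and lvm), put glance_api_version=2 in the [DEFAULT] section.

If only one backend type is used, such as ceph, put glance_api_version=2 in the [ceph] section.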

[root@ct cinder]# vi /etc/cinder/cinder.conf

enabled_backends=ceph

//In [DEFAULT]: use only the ceph backend

//Add a [ceph] section with the following options, and comment out the lvm options:

[ceph]

default_volume_type = ceph

glance_api_version = 2

volume_driver = cinder.volume.drivers.rbd.RBDDriver

volume_backend_name = ceph

rbd_pool = volumes

rbd_ceph_conf = /etc/ceph/ceph.conf

rbd_flatten_volume_from_snapshot = false

rbd_max_clone_depth = 5

rbd_store_chunk_size = 4

rados_connect_timeout = -1

rbd_user = cinder

rbd_secret_uuid = 05af6de3-ddb0-49a1-add9-255f14dd9a48

//Use the UUID configured on the compute nodes in 5.6
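rbd_secret_uuid here, the libvirt secret defined in 5.6, and rbd_secret_uuid in nova.conf (8.2) must all be the same UUID. A small reader for INI-style files makes that easy to check. The following is a hypothetical helper written with awk. It assumes simple `key = value` lines with no line continuations.

```shell
# ini_get FILE SECTION KEY: print the value of KEY inside [SECTION] of an
# INI-style file, ignoring commented-out lines and other sections.
ini_get() {
  awk -v sec="[$2]" -v key="$3" '
    /^[ \t]*\[/ { insec = ($1 == sec); next }
    insec && $0 ~ ("^[ \t]*" key "[ \t]*=") {
      sub(/^[^=]*=[ \t]*/, "")
      sub(/[ \t\r]+$/, "")
      print
      exit
    }' "$1"
}
```

On ct, `ini_get /etc/cinder/cinder.conf ceph rbd_secret_uuid` should print the UUID from 5.6. Reading the value by section avoids matching the commented-out copies of the same option that the stock file contains.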

7.3 Restart the cinder service

[root@ct cinder]# systemctl restart openstack-cinder-volume

[root@ct cinder]# systemctl status openstack-cinder-volume

You can check the cinder log file (/var/log/cinder/volume.log).

7.4 List the cinder volume types

[root@ct cinder]# source /root/keystonerc_admin

[root@ct cinder(keystone_admin)]# cinder type-list

+--------------------------------------+-------+-------------+-----------+

| ID | Name | Description | Is_Public |

+--------------------------------------+-------+-------------+-----------+

| e8fc3f97-7266-47a4-a65c-63db757ad5f6 | iscsi | - | True |

+--------------------------------------+-------+-------------+-----------+

7.5 Create the cinder volume type for the Ceph backend from the command line

[root@ct cinder(keystone_admin)]# cinder type-create ceph

+--------------------------------------+------+-------------+-----------+

| ID | Name | Description | Is_Public |

+--------------------------------------+------+-------------+-----------+

| 7e9f8c14-673c-4ab0-a305-c598884f54dd | ceph | - | True |

+--------------------------------------+------+-------------+-----------+

[root@ct cinder(keystone_admin)]# cinder type-list

+--------------------------------------+-------+-------------+-----------+

| ID | Name | Description | Is_Public |

+--------------------------------------+-------+-------------+-----------+

| 7e9f8c14-673c-4ab0-a305-c598884f54dd | ceph | - | True |

| e8fc3f97-7266-47a4-a65c-63db757ad5f6 | iscsi | - | True |

+--------------------------------------+-------+-------------+-----------+

7.6 Set the backend name for the volume type

[root@ct cinder(keystone_admin)]# cinder type-key ceph set volume_backend_name=ceph

7.7 Create a volume

Create a volume (for example from the dashboard), then check the log.
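The volume in 7.7 is created from the dashboard. The same check can be done from the command line once the admin credentials are loaded (source /root/keystonerc_admin). The following is a minimal sketch. The volume name and the roughly 30-second wait are arbitrary choices. The `volume-<id>` image naming is taken from the `rbd ls volumes` output in 7.8.

```shell
# ceph_volume_smoke_test [NAME]: create a 1 GB volume of type "ceph" and wait
# up to ~30 s for a matching volume-<id> image to appear in the volumes pool.
ceph_volume_smoke_test() {
  local name=${1:-ceph-test-vol} id i
  id=$(openstack volume create --type ceph --size 1 -f value -c id "$name") || return 1
  for i in 1 2 3 4 5 6; do
    if rbd ls volumes | grep -qx "volume-$id"; then
      echo "found volume-$id in pool volumes"
      return 0
    fi
    sleep 5
  done
  echo "volume-$id not found in pool volumes; check /var/log/cinder/volume.log" >&2
  return 1
}
```

The test volume can be removed afterwards with `openstack volume delete ceph-test-vol`.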

7.8 Check the created volume

[root@ct cinder(keystone_admin)]# ceph -s

cluster:

id: 15200f4f-1a57-46c5-848f-9b8af9747e54

health: HEALTH_OK

services:

mon: 3 daemons, quorum ct,comp1,comp2

mgr: ct(active), standbys: comp2, comp1

osd: 3 osds: 3 up, 3 in

data:

pools: 3 pools, 192 pgs

objects: 13 objects, 13 MiB

usage: 3.1 GiB used, 3.0 TiB / 3.0 TiB avail

pgs: 192 active+clean

[root@ct cinder(keystone_admin)]# ceph osd lspools

1 volumes

2 vms

3 images

[root@ct cinder(keystone_admin)]# rbd ls volumes

volume-59a4930b-f474-48f5-8e41-b717d3dce3e8

[root@ct cinder(keystone_admin)]# ceph osd status

+----+-------+-------+-------+--------+---------+--------+---------+-----------+

| id | host | used | avail | wr ops | wr data | rd ops | rd data | state |

+----+-------+-------+-------+--------+---------+--------+---------+-----------+

| 0 | ct | 1041M | 1022G | 0 | 0 | 0 | 0 | exists,up |

| 1 | comp1 | 1041M | 1022G | 0 | 0 | 0 | 0 | exists,up |

| 2 | comp2 | 1041M | 1022G | 0 | 0 | 0 | 0 | exists,up |

+----+-------+-------+-------+--------+---------+--------+---------+-----------+

[root@ct cinder(keystone_admin)]# tail -f /var/log/cinder/volume.log

7.9 Enable at boot

[root@ct cinder(keystone_admin)]# systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service

[root@ct cinder(keystone_admin)]# systemctl start openstack-cinder-api.service openstack-cinder-scheduler.service

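Part 8: Integrating Ceph with Nova

Note: delete all instances in OpenStack before the integration.

8.1 Back up the configuration file (on both compute nodes)

[root@comp1 ~]# cp /etc/nova/nova.conf /etc/nova/nova.conf.bak

8.2 Modify the configuration file

Edit it on one compute node, then copy it to the other with scp.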

[root@comp1 ~]# vi /etc/nova/nova.conf

//All of the following options are in the [libvirt] section:

inject_password=False

inject_key=False

inject_partition=-2

live_migration_flag="VIR_MIGRATE_UNDEFINE_SOURCE,VIR_MIGRATE_PEER2PEER,VIR_MIGRATE_LIVE,VIR_MIGRATE_PERSIST_DEST,VIR_MIGRATE_TUNNELLED"

//Add the live-migration flags next to the other live_migration options

disk_cachemodes = "network=writeback"

//Add the "network=writeback" disk cache mode

images_type=rbd

//Set the image type to rbd

images_rbd_pool=vms

//Use vms, the pool created in Ceph

images_rbd_ceph_conf = /etc/ceph/ceph.conf

//Add the Ceph configuration file path

hw_disk_discard=unmap

//Add unmap

rbd_user=cinder

//Add the cinder user

rbd_secret_uuid=05af6de3-ddb0-49a1-add9-255f14dd9a48

//Add the UUID value
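Before nova-compute is restarted in 8.6, it is worth confirming on each compute node that the UUID in nova.conf matches a secret libvirt actually holds. Otherwise the problem tends to surface only when an instance is started. The following is a minimal sketch. It assumes the option appears once, written as `rbd_secret_uuid=...`. The function name is hypothetical.

```shell
# check_rbd_secret [NOVA_CONF]: confirm that the rbd_secret_uuid set in
# nova.conf exists as a libvirt secret on this compute node.
check_rbd_secret() {
  local conf=${1:-/etc/nova/nova.conf} uuid
  uuid=$(sed -n 's/^[[:space:]]*rbd_secret_uuid[[:space:]]*=[[:space:]]*//p' "$conf" |
    tail -n 1 | tr -d '[:space:]')
  if [ -z "$uuid" ]; then
    echo "rbd_secret_uuid is not set in $conf" >&2
    return 1
  fi
  if virsh secret-list | grep -qi -- "$uuid"; then
    echo "ok: libvirt has secret $uuid"
  else
    echo "no libvirt secret $uuid on $(hostname); repeat step 5.6 here" >&2
    return 1
  fi
}
```

Run `check_rbd_secret` on comp1 and again on comp2 after nova.conf has been copied over.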

[root@comp1 ~]# scp /etc/nova/nova.conf root@comp2:/etc/nova/

nova.conf 100% 382KB 15.5MB/s 00:00

[root@comp1 ~]#

8.3 Install libvirt (on both compute nodes)

[root@comp1 ~]# yum -y install libvirt

8.4 Edit ceph.conf on the compute nodes (on both compute nodes)

[root@comp1 ~]# vi /etc/ceph/ceph.conf

//Add:

[client]

rbd cache=true

rbd cache writethrough until flush=true

admin socket = /var/run/ceph/guests/$cluster-$type.$id.$pid.$cctid.asok

log file = /var/log/qemu/qemu-guest-$pid.log

rbd concurrent management ops = 20

[root@comp2 ~]# mkdir -p /var/run/ceph/guests/ /var/log/qemu/

[root@comp2 ~]# chmod -R 777 /var/run/ceph/guests/ /var/log/qemu/

Note: nova.conf on the compute nodes was already modified in 8.2, so it does not need to be changed again.
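Appending the [client] section by hand on two nodes invites typos, and running the same append twice duplicates the section. The following is a minimal idempotent sketch. It assumes it runs as root on each compute node. The function name is hypothetical. The directories created in 8.4 are still needed. If ceph.conf is later pushed from ct again with `ceph-deploy --overwrite-conf admin`, this file is replaced and the section has to be added again.

```shell
# add_rbd_client_section [CONF]: append the [client] settings from 8.4 to
# ceph.conf unless the file already has a [client] section.
add_rbd_client_section() {
  local conf=${1:-/etc/ceph/ceph.conf}
  if grep -q '^\[client\]' "$conf"; then
    echo "$conf already has a [client] section; edit it by hand" >&2
    return 0
  fi
  # Quoted 'EOF' keeps $cluster, $type, $pid, ... literal for Ceph to expand.
  cat >> "$conf" <<'EOF'

[client]
rbd cache=true
rbd cache writethrough until flush=true
admin socket = /var/run/ceph/guests/$cluster-$type.$id.$pid.$cctid.asok
log file = /var/log/qemu/qemu-guest-$pid.log
rbd concurrent management ops = 20
EOF
}
```

Running it a second time leaves the file unchanged and only prints a notice.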

8.5 Distribute the cinder keyring from /etc/ceph/ on the controller node

[root@ct cinder(keystone_admin)]# cd /etc/ceph


[root@ct ceph(keystone_admin)]# scp ceph.client.cinder.keyring root@comp1:/etc/ceph

ceph.client.cinder.keyring 100% 64 2.7KB/s 00:00

[root@ct ceph(keystone_admin)]# scp ceph.client.cinder.keyring root@comp2:/etc/ceph

ceph.client.cinder.keyring 100% 64 19.4KB/s 00:00

[root@ct ceph(keystone_admin)]#

8.6 Restart services on the compute nodes

[root@comp1 ~]# systemctl restart libvirtd

[root@comp1 ~]# systemctl enable libvirtd

[root@comp1 ~]# systemctl restart openstack-nova-compute

[root@comp1 ~]#

tail -f /var/log/nova/nova-compute.log

8.7 Test

Launch an instance, then check that its disk was created in the vms pool:

[root@ct ~]# rbd ls vms

cc4b9c21-e5a6-4563-ac15-067fd021e317_disk

Part 9: Testing live migration

Upload centos7.qcow2 to the /opt directory.

[root@ct ~]# cd /opt

[root@ct opt]# ls

centos7.qcow2 openstack_rocky openstack_rocky.tar.gz

9.1 A raw-format image is needed, so convert the image first

openstack image create --file <image file> --disk-format qcow2 --container-format bare --public <image name>

[root@ct opt]# openstack image create --file centos7.qcow2 --disk-format qcow2 --container-format bare --public centos7

Missing value auth-url required for auth plugin password


[root@ct opt]# source ~/keystonerc_admin

[root@ct opt(keystone_admin)]# openstack image create --file centos7.qcow2 --disk-format qcow2 --container-format bare --public centos7

+------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| checksum | 380360f5896cdd63aa578cd003276144 |

| container_format | bare |

| created_at | 2020-03-08T16:01:25Z |

| disk_format | qcow2 |

| file | /v2/images/20158b49-bc2f-4fb6-98e3-657dfce722c7/file |

| id | 20158b49-bc2f-4fb6-98e3-657dfce722c7 |

| min_disk | 0 |

| min_ram | 0 |

| name | centos7 |

| owner | cd08a577c9d1405c8aa8615808a2f242 |

| properties | os_hash_algo='sha512', os_hash_value='a98c961e29390a344793c66aa115ab2d5a34877fb1207111dc71f96182545d673e58d8e6401883914ba6bebc279a03ea538ae0edbfbf70bd77596293e8e40306', os_hidden='False' |

| protected | False |

| schema | /v2/schemas/image |

| size | 620691456 |

| status | active |

| tags | |

| updated_at | 2020-03-08T16:01:42Z |

| virtual_size | None |

| visibility | public |

+------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

[root@ct opt(keystone_admin)]# rbd ls images

20158b49-bc2f-4fb6-98e3-657dfce722c7

9675c936-31fe-48dd-b145-0c9f96a67b5c

[root@ct opt(keystone_admin)]#
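The transcript above uploads the image as qcow2, even though 9.1 calls for raw. Ceph's OpenStack integration docs note that QCOW2 is not supported for hosting virtual machine disks in Ceph, so images used to boot instances from Ceph should be raw. The following is a minimal sketch of the conversion plus upload. It assumes qemu-img is installed and the admin credentials are sourced. The function name is hypothetical. The raw file is as large as the image's full virtual disk size.

```shell
# upload_raw_image QCOW2_FILE NAME: convert a qcow2 image to raw (same path,
# extension replaced by .raw) and register it in Glance as a public image.
upload_raw_image() {
  local src=$1 name=$2 raw
  raw="${src%.*}.raw"
  qemu-img convert -f qcow2 -O raw "$src" "$raw" || return 1
  openstack image create --file "$raw" --disk-format raw \
    --container-format bare --public "$name"
}
```

For example, `upload_raw_image /opt/centos7.qcow2 centos7-raw`; afterwards `rbd ls images` should show one more image ID.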

Create a router.

[root@ct opt(keystone_admin)]# ceph -s

cluster:

id: 15200f4f-1a57-46c5-848f-9b8af9747e54

health: HEALTH_OK

services:

mon: 3 daemons, quorum ct,comp1,comp2

mgr: ct(active), standbys: comp2, comp1

osd: 3 osds: 3 up, 3 in

data:

pools: 3 pools, 192 pgs

objects: 406 objects, 1.8 GiB

usage: 8.3 GiB used, 3.0 TiB / 3.0 TiB avail

pgs: 192 active+clean

io:

client: 1.8 MiB/s rd, 146 KiB/s wr, 35 op/s rd, 7 op/s wr

Add security group rules.

Live-migrate the instance.