Using an Elasticsearch query to compute the success rate of network requests

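In a previous post (https://blog.csdn.net/QYHuiiQ/article/details/89843141) we used Logstash to parse an Apache log, split each line into separate fields, and store them in Elasticsearch. This time the goal is to compute the access success rate of each URL per time interval (for example, every 3 minutes).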

1. Under /usr/local/wyh/elk-kafka/apache-log, create a log file named apachelog.txt containing the following Apache log lines:

192.168.184.128 - - [11/Apr/2019:16:47:37 -0400] Second:18 "POST /index/article?writer=wyh&articleid=jsd_kojb_91.43.68_2548_33_87_3849 HTTP/1.1" 200 658 "-" "Java/1.8.0_51"

192.168.184.128 - - [14/May/2019:18:47:37 -0400] Second:28 "POST /index/writer?writer=wyh&articleid=jsd_kojb_91.43.68_2548_33_87_3049 HTTP/1.1" 200 658 "-" "Java/1.8.0_51"

192.168.184.128 - - [12/May/2019:16:47:37 -0400] Second:180 "POST /index/article?writer=wyh&articleid=jsd_kojb_91.43.68_2548_33_89_3849 HTTP/1.1" 503 658 "-" "Java/1.8.0_51"

192.168.184.129 - - [14/May/2019:18:27:37 -0400] Second:36 "POST /index/login?writer=wyh&articleid=jsd_kojb_91.43.68_2548_33_87_4276 HTTP/1.1" 200 658 "-" "Java/1.8.0_51"

192.168.184.129 - - [15/May/2019:19:47:37 -0400] Second:35 "POST /index/article?writer=wyh&articleid=jsd_kojb_91.43.68_2548_33_87_3841 HTTP/1.1" 200 658 "-" "Java/1.8.0_51"

192.168.184.129 - - [17/May/2019:19:27:37 -0400] Second:15 "POST /index/login?writer=wyh&articleid=jsd_kojb_91.43.68_2548_31_87_3841 HTTP/1.1" 200 658 "-" "Java/1.8.0_51"

2. Start Elasticsearch.

3. Start Kibana.

4. In the config folder, create the Logstash configuration file wyh-apache-log.conf:

input{

file{

path => "/usr/local/wyh/elk-kafka/apache-log/apachelog.txt"

type => "apachelog"

start_position => "beginning"

}

}

filter{

grok{

patterns_dir => "/usr/local/wyh/elk-kafka/logstash-6.6.0/custom/patterns"

match => {

"message" => "%{IP:client_address} - - \[%{HTTPDATE:timestamp}\] Second:%{NUMBER:second} \"%{WORD:http_method} %{URIPATHPARAM:url}\?writer=%{WORD:writer}&articleid=%{ARTICLEID:articleid} HTTP/%{NUMBER:http_version}\" %{NUMBER:http_code} %{NUMBER:bytes} \"(?:%{URI:http_referer}|-)\" %{QS:java_version}"

}

remove_field => "message"

}

date{ --使用date插件转换日期格式

match => ["timestamp", "dd/MMM/yyyy:HH:mm:ss Z"] --timestamp是在上面的match中的自己指定的字段显示名称,后面的是对日期格式化,注意这里是3个MMM,如果写的是两个M,在kibana中不会显示@request_timestamp这个字段,后面的Z表示时区

target => "@request_timestamp" --将上面的时间格式化后放到这个字段中,相当于在这里是新建的字段,注意这里要在字段前面加个@,否则即使这里改成了date类型,到了es中还是会显示text类型

}

}

output{

elasticsearch{

hosts => ["192.168.184.128:9200"]

index => "wyh-apache-log"

}

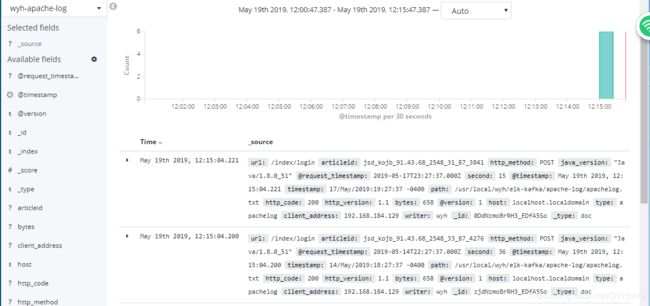

}5.启动logstash,在kibana中查看索引:

[root@localhost logstash-6.6.0]# ./bin/logstash -f ./config/wyh-apache-log.conf

6. In Kibana, open Dev Tools from the left-hand menu and write a query that computes the success rate of each URL per time interval:

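GET /wyh-apache-log/_search
{
  "size": 0,
  "aggs": {
    "count_minute": {
      "date_histogram": {
        "field": "@request_timestamp",
        "interval": "4d",
        "format": "yyyy/MM/dd HH:mm:ss Z",
        "min_doc_count": 0
      },
      "aggs": {
        "url_count": {
          "terms": {
            "field": "url.keyword",
            "size": 1000
          },
          "aggs": {
            "request_success_count": {
              "filter": {
                "range": {
                  "http_code": {
                    "gte": 200,
                    "lt": 300
                  }
                }
              }
            },
            "success_rate": {
              "bucket_script": {
                "buckets_path": {
                  "success_count": "request_success_count>_count",
                  "total_count": "_count"
                },
                "script": "params.success_count / params.total_count * 100"
              }
            },
            "failure_rate": {
              "bucket_script": {
                "buckets_path": {
                  "succ_rate": "success_rate"
                },
                "script": "100 - params.succ_rate"
              }
            }
          }
        }
      }
    }
  }
}

Notes on the query:
The field used by date_histogram ("@request_timestamp") must be of type date.
"interval" is the width of each time bucket and can be set freely; here it is 4 days ("4d"), and a value such as "3m" would give 3-minute buckets.
"format" controls how the bucket key is rendered as a date string; Z is the time zone.
The terms aggregation on "url.keyword" groups the documents in each time bucket by URL.
"request_success_count" counts, for each URL, the documents whose http_code starts with 2 (success), i.e. 200 <= http_code < 300.
"success_rate" divides that count by the total number of documents for the URL, and "failure_rate" is 100 minus the success rate.

7. The result after running the query: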

{
  "took" : 44,
  "timed_out" : false,
  "_shards" : {
    "total" : 5,
    "successful" : 5,
    "skipped" : 0,
    "failed" : 0
  },
  "hits" : {
    "total" : 6,
    "max_score" : 0.0,
    "hits" : [ ]
  },
  "aggregations" : {
    "count_minute" : {
      "buckets" : [
        {
          "key_as_string" : "2019/04/10 00:00:00 +0000",
          "key" : 1554854400000,
          "doc_count" : 1,
          "url_count" : {
            "doc_count_error_upper_bound" : 0,
            "sum_other_doc_count" : 0,
            "buckets" : [
              {
                "key" : "/index/article",
                "doc_count" : 1,
                "request_success_count" : {
                  "doc_count" : 1
                },
                "success_rate" : {
                  "value" : 100.0
                },
                "failure_rate" : {
                  "value" : 0.0
                }
              }
            ]
          }
        },
        {
          "key_as_string" : "2019/04/14 00:00:00 +0000",
          "key" : 1555200000000,
          "doc_count" : 0,
          "url_count" : {
            "doc_count_error_upper_bound" : 0,
            "sum_other_doc_count" : 0,
            "buckets" : [ ]
          }
        },
        {
          "key_as_string" : "2019/04/18 00:00:00 +0000",
          "key" : 1555545600000,
          "doc_count" : 0,
          "url_count" : {
            "doc_count_error_upper_bound" : 0,
            "sum_other_doc_count" : 0,
            "buckets" : [ ]
          }
        },
        {
          "key_as_string" : "2019/04/22 00:00:00 +0000",
          "key" : 1555891200000,
          "doc_count" : 0,
          "url_count" : {
            "doc_count_error_upper_bound" : 0,
            "sum_other_doc_count" : 0,
            "buckets" : [ ]
          }
        },
        {
          "key_as_string" : "2019/04/26 00:00:00 +0000",
          "key" : 1556236800000,
          "doc_count" : 0,
          "url_count" : {
            "doc_count_error_upper_bound" : 0,
            "sum_other_doc_count" : 0,
            "buckets" : [ ]
          }
        },
        {
          "key_as_string" : "2019/04/30 00:00:00 +0000",
          "key" : 1556582400000,
          "doc_count" : 0,
          "url_count" : {
            "doc_count_error_upper_bound" : 0,
            "sum_other_doc_count" : 0,
            "buckets" : [ ]
          }
        },
        {
          "key_as_string" : "2019/05/04 00:00:00 +0000",
          "key" : 1556928000000,
          "doc_count" : 0,
          "url_count" : {
            "doc_count_error_upper_bound" : 0,
            "sum_other_doc_count" : 0,
            "buckets" : [ ]
          }
        },
        {
          "key_as_string" : "2019/05/08 00:00:00 +0000",
          "key" : 1557273600000,
          "doc_count" : 0,
          "url_count" : {
            "doc_count_error_upper_bound" : 0,
            "sum_other_doc_count" : 0,
            "buckets" : [ ]
          }
        },
        {
          "key_as_string" : "2019/05/12 00:00:00 +0000",
          "key" : 1557619200000,
          "doc_count" : 4,
          "url_count" : {
            "doc_count_error_upper_bound" : 0,
            "sum_other_doc_count" : 0,
            "buckets" : [
              {
                "key" : "/index/article",
                "doc_count" : 2,
                "request_success_count" : {
                  "doc_count" : 1
                },
                "success_rate" : {
                  "value" : 50.0   // one log entry for this URL returned 200
                },
                "failure_rate" : {
                  "value" : 50.0   // one log entry for this URL returned 503
                }
              },
              {
                "key" : "/index/login",
                "doc_count" : 1,
                "request_success_count" : {
                  "doc_count" : 1
                },
                "success_rate" : {
                  "value" : 100.0
                },
                "failure_rate" : {
                  "value" : 0.0
                }
              },
              {
                "key" : "/index/writer",
                "doc_count" : 1,
                "request_success_count" : {
                  "doc_count" : 1
                },
                "success_rate" : {
                  "value" : 100.0
                },
                "failure_rate" : {
                  "value" : 0.0
                }
              }
            ]
          }
        },
        {
          "key_as_string" : "2019/05/16 00:00:00 +0000",
          "key" : 1557964800000,
          "doc_count" : 1,
          "url_count" : {
            "doc_count_error_upper_bound" : 0,
            "sum_other_doc_count" : 0,
            "buckets" : [
              {
                "key" : "/index/login",
                "doc_count" : 1,
                "request_success_count" : {
                  "doc_count" : 1
                },
                "success_rate" : {
                  "value" : 100.0
                },
                "failure_rate" : {
                  "value" : 0.0
                }
              }
            ]
          }
        }
      ]
    }
  }
}
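As a sanity check, the same numbers can be reproduced outside Elasticsearch. The following is a minimal Python sketch, not part of the original setup: the tuples in SAMPLE are the timestamp, URL path, and status code copied by hand from the six lines of apachelog.txt, and the names bucket_start and success_rates are made up for illustration. It assumes that a "4d" bucket starts at a multiple of four days counted from the Unix epoch in UTC, which is consistent with the bucket keys in the result above, and that month abbreviations are parsed under an English locale.

```python
from collections import defaultdict
from datetime import datetime, timezone

# (timestamp, url, http_code) copied by hand from the six sample log lines.
SAMPLE = [
    ("11/Apr/2019:16:47:37 -0400", "/index/article", 200),
    ("14/May/2019:18:47:37 -0400", "/index/writer", 200),
    ("12/May/2019:16:47:37 -0400", "/index/article", 503),
    ("14/May/2019:18:27:37 -0400", "/index/login", 200),
    ("15/May/2019:19:47:37 -0400", "/index/article", 200),
    ("17/May/2019:19:27:37 -0400", "/index/login", 200),
]

INTERVAL_SECONDS = 4 * 24 * 60 * 60  # the "4d" interval used in the query


def bucket_start(raw_timestamp):
    """Parse an Apache timestamp and round it down to the start of its bucket (UTC)."""
    # Python counterpart of the Logstash pattern dd/MMM/yyyy:HH:mm:ss Z.
    parsed = datetime.strptime(raw_timestamp, "%d/%b/%Y:%H:%M:%S %z")
    epoch = int(parsed.timestamp())
    floored = epoch - epoch % INTERVAL_SECONDS
    return datetime.fromtimestamp(floored, tz=timezone.utc)


def success_rates(records):
    """Return {(bucket_start, url): success rate in percent}."""
    counts = defaultdict(lambda: [0, 0])  # (bucket, url) -> [successes, total]
    for raw_timestamp, url, code in records:
        key = (bucket_start(raw_timestamp), url)
        counts[key][1] += 1
        if 200 <= code < 300:  # same condition as request_success_count
            counts[key][0] += 1
    # Same arithmetic as the success_rate bucket_script.
    return {key: ok / total * 100 for key, (ok, total) in counts.items()}


if __name__ == "__main__":
    for (bucket, url), rate in sorted(success_rates(SAMPLE).items()):
        # failure_rate is derived exactly as in the query: 100 - success_rate
        print(bucket.strftime("%Y/%m/%d"), url, rate, 100 - rate)
```

Only non-empty buckets appear here; the empty buckets in the Elasticsearch response come from "min_doc_count": 0.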

This gives the expected result: request statistics for each URL at a chosen time interval.
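For reporting, the nested response is usually easier to work with as flat rows, one per time bucket and URL. Below is a minimal Python sketch under the assumption that the response body has already been loaded into a dict (for example with json.loads). The function name flatten_rates is hypothetical, and RESPONSE is a trimmed copy of the result shown earlier, keeping only one empty and one non-empty bucket.

```python
def flatten_rates(response):
    """Turn the nested date_histogram/terms buckets into a list of flat rows."""
    rows = []
    for time_bucket in response["aggregations"]["count_minute"]["buckets"]:
        # Empty time buckets have no URL buckets and therefore produce no rows.
        for url_bucket in time_bucket["url_count"]["buckets"]:
            rows.append({
                "bucket": time_bucket["key_as_string"],
                "url": url_bucket["key"],
                "total": url_bucket["doc_count"],
                "success_rate": url_bucket["success_rate"]["value"],
                "failure_rate": url_bucket["failure_rate"]["value"],
            })
    return rows


# Trimmed copy of the response from step 7 (illustration only).
RESPONSE = {
    "aggregations": {
        "count_minute": {
            "buckets": [
                {
                    "key_as_string": "2019/04/14 00:00:00 +0000",
                    "key": 1555200000000,
                    "doc_count": 0,
                    "url_count": {"buckets": []},
                },
                {
                    "key_as_string": "2019/05/16 00:00:00 +0000",
                    "key": 1557964800000,
                    "doc_count": 1,
                    "url_count": {
                        "buckets": [
                            {
                                "key": "/index/login",
                                "doc_count": 1,
                                "request_success_count": {"doc_count": 1},
                                "success_rate": {"value": 100.0},
                                "failure_rate": {"value": 0.0},
                            }
                        ]
                    },
                },
            ]
        }
    }
}

if __name__ == "__main__":
    for row in flatten_rates(RESPONSE):
        print(row)
```

The aggregation names count_minute and url_count are the ones chosen in the query, so they need to be adjusted if the query uses different names.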