Advanced Python: Multiprocessing (MultiProcess)

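A process is one execution of a program on a given set of data. It is the basic unit the operating system uses for resource allocation and scheduling, and a foundation of operating system design. Processes do not share memory by default. Each process has its own address space, so data has to be passed between processes explicitly, for example through a queue or through shared memory objects such as `mp.Value` and `mp.Array`.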
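A minimal sketch of the "no shared memory by default" point follows. A child process changes a global variable, but the parent's copy is unaffected because each process has its own memory. The names `counter`, `increment` and `demo_no_sharing` are illustrative and do not come from the original examples.

```python
import multiprocessing as mp

counter = 0  # module-level global; every process ends up with its own copy


def increment():
    # Runs in the child process and changes only the child's copy
    global counter
    counter += 100


def demo_no_sharing():
    p = mp.Process(target=increment)
    p.start()
    p.join()
    # The child's change is not visible here
    return counter


if __name__ == "__main__":
    print("parent sees counter =", demo_no_sharing())
```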

Example 1

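```python
import multiprocessing as mp


def job(q):
    # CPU-bound work; the result is sent back to the parent via the queue
    res = 0
    for i in range(1000):
        res += i + i**2 + i**3
    q.put(res)


if __name__ == '__main__':
    q = mp.Queue()  # process-safe queue shared by both children
    p1 = mp.Process(target=job, args=(q,))
    p2 = mp.Process(target=job, args=(q,))
    p1.start()
    p2.start()
    # Read the results before join(): a child that has put data on a queue
    # may not exit until that data has been consumed
    res1 = q.get()
    res2 = q.get()
    p1.join()
    p2.join()
    print(res1 + res2)
```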

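Each process puts its result on the queue, and the main process reads the results back from it. Creating a process, starting it, and blocking the main process until it finishes (`join()`) work essentially the same way as with threads.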
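The parallel with threads can be made explicit. This is a sketch, and the helper `run_two` is hypothetical. It accepts either `threading.Thread` or `multiprocessing.Process` as the worker class, since both take `target`/`args` and provide `start()` and `join()`.

```python
import multiprocessing as mp
import queue
import threading


def job(q):
    # Same computation as Example 1
    res = 0
    for i in range(1000):
        res += i + i**2 + i**3
    q.put(res)


def run_two(worker_cls, q):
    # worker_cls is threading.Thread or multiprocessing.Process:
    # both are constructed, started and joined the same way
    workers = [worker_cls(target=job, args=(q,)) for _ in range(2)]
    for w in workers:
        w.start()
    results = [q.get() for _ in workers]  # drain the queue before joining
    for w in workers:
        w.join()
    return sum(results)


if __name__ == "__main__":
    print("threads:  ", run_two(threading.Thread, queue.Queue()))
    print("processes:", run_two(mp.Process, mp.Queue()))
```

Only the worker class and the queue type differ between the two calls. A `queue.Queue` works only between threads of one process, so the process version needs `multiprocessing.Queue`.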

Example 2

```python
import multiprocessing as mp
import threading as td
import time


def job(q):
    res = 0
    for i in range(1000000):
        res += i + i ** 2 + i ** 3
    q.put(res)


def multiCore():
    # Two processes: they can run in parallel on separate CPU cores
    q = mp.Queue()
    p1 = mp.Process(target=job, args=(q,))
    p2 = mp.Process(target=job, args=(q,))
    p1.start()
    p2.start()
    p1.join()
    p2.join()
    res1 = q.get()
    res2 = q.get()
    print('multiCore:', res1 + res2)


def normal():
    # The same total amount of work, done serially in the main process
    res = 0
    for _ in range(2):
        for i in range(1000000):
            res += i + i ** 2 + i ** 3
    print("normal: ", res)


def multiThread():
    # Two threads in one process: the GIL lets only one execute Python code at a time
    q = mp.Queue()
    t1 = td.Thread(target=job, args=(q,))
    t2 = td.Thread(target=job, args=(q,))
    t1.start()
    t2.start()
    t1.join()
    t2.join()
    res1 = q.get()
    res2 = q.get()
    print('multiThread:', res2 + res1)


if __name__ == '__main__':
    st = time.time()
    normal()
    st1 = time.time()
    print('normal time:', st1 - st)
    multiThread()
    st2 = time.time()
    print('multithread time:', st2 - st1)
    multiCore()
    print('multicore time:', time.time() - st2)
```

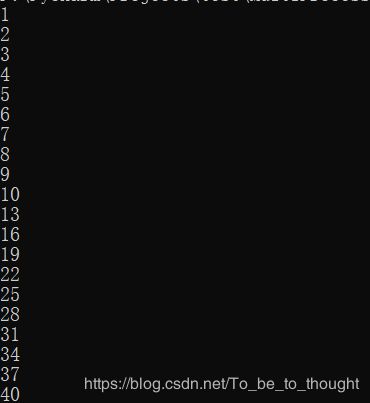

输出如下:

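The multiprocess version is clearly faster than the plain serial version (about 3 times faster in the run shown). The multithreaded version is even slower than the serial one. The cause is the GIL (Global Interpreter Lock): in CPython only one thread can execute Python bytecode at a time. The two threads therefore cannot run this CPU-bound loop in parallel and only add switching overhead.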

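Note: for CPU-bound tasks, multiprocessing is faster, because the processes really run in parallel. For I/O-bound tasks, multithreading can improve efficiency effectively, because a thread that is blocked waiting on I/O releases the GIL and lets other threads run.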
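The I/O-bound case can be sketched as follows, with `time.sleep` standing in for real disk or network waits. That substitution is an assumption, and `fake_io`, `serial` and `threaded` are illustrative names. While a thread sleeps it does not hold the GIL, so the sleeps of several threads overlap.

```python
import threading
import time


def fake_io(seconds):
    # Stand-in for a blocking I/O call; the GIL is released while sleeping
    time.sleep(seconds)


def serial(n, seconds):
    start = time.perf_counter()
    for _ in range(n):
        fake_io(seconds)
    return time.perf_counter() - start


def threaded(n, seconds):
    start = time.perf_counter()
    threads = [threading.Thread(target=fake_io, args=(seconds,)) for _ in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start


if __name__ == "__main__":
    print("serial:   %.2fs" % serial(4, 0.2))
    print("threaded: %.2fs" % threaded(4, 0.2))
```

The serial run takes at least 4 × 0.2 s. The threaded run should take only a little more than a single 0.2 s wait, which is the opposite of the CPU-bound result in Example 2.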

Example 3

```python
import multiprocessing as mp
import time


def job(x):
    return x**2


def multiCore(n):
    # Pool of n worker processes; "with" shuts the pool down when done,
    # so worker processes don't pile up across loop iterations
    with mp.Pool(processes=n) as pool:
        res = pool.map(job, range(1000000))
        # print(res)
        # res = pool.apply_async(job, (2,))
        # print(res.get())
        # multi_res = [pool.apply_async(job, (j,)) for j in range(1000000)]
        # print([res.get() for res in multi_res])


if __name__ == '__main__':
    for i in range(1, 7):
        s_time = time.time()
        multiCore(i)
        print("Time with %d worker processes: %s" % (i, time.time() - s_time))
```
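The loop above tries pools of 1 to 6 processes. For CPU-bound work, adding workers beyond the number of available CPUs generally brings no further speedup. Below is a small sketch; the helper `squares` is hypothetical. Leaving `processes` as `None` lets `Pool` choose a default based on the CPUs Python reports. `pool.map` returns results in input order, just like the built-in `map`.

```python
import multiprocessing as mp
import os


def job(x):
    return x**2


def squares(data, n=None):
    # n=None: Pool picks its default worker count from the available CPUs
    with mp.Pool(processes=n) as pool:
        # map chunks the input, sends chunks to the workers,
        # and reassembles the results in input order
        return pool.map(job, data)


if __name__ == "__main__":
    print("logical CPUs:", os.cpu_count())
    print(squares(range(10)))
```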

Example 3b: Sharing Resources Between Processes

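```python
import multiprocessing as mp
import time

# Shared-memory objects: typecode 'd' is a C double, 'i' a C signed int.
# (Not used below; they only show how a shared Value and Array are created.)
value = mp.Value('d', 1)
array = mp.Array('i', [1, 2, 3, 4])


def job(v, num, lock):
    # Hold the lock for the whole loop so the two processes don't interleave
    with lock:
        for _ in range(10):
            time.sleep(0.1)
            v.value += num  # v lives in shared memory, so both processes see the update
            print(v.value)


def multiCore():
    lock = mp.Lock()
    v = mp.Value('i', 0)  # shared int, initial value 0
    p1 = mp.Process(target=job, args=(v, 1, lock))
    p2 = mp.Process(target=job, args=(v, 3, lock))
    p1.start()
    p2.start()
    p1.join()
    p2.join()


if __name__ == '__main__':
    multiCore()
```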
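In the example above, a single `mp.Lock` guards the whole loop, so one process finishes all of its updates before the other starts. The lock is needed because `v.value += num` is a read followed by a write, and two unsynchronized processes could overwrite each other's update.

By default, `mp.Value` and `mp.Array` are also created with their own recursive lock, available through `get_lock()`. The sketch below uses that built-in lock for each update instead of passing a separate `Lock`. The names `add_many` and `run` are illustrative.

```python
import multiprocessing as mp


def add_many(counter, arr, times):
    for _ in range(times):
        # Lock only around each read-modify-write on the shared int
        with counter.get_lock():
            counter.value += 1
    # Update every array element under the array's own lock
    with arr.get_lock():
        for i in range(len(arr)):
            arr[i] += 1


def run(workers=4, times=1000):
    counter = mp.Value("i", 0)         # shared C int
    arr = mp.Array("i", [1, 2, 3, 4])  # shared C int array
    procs = [mp.Process(target=add_many, args=(counter, arr, times))
             for _ in range(workers)]
    for p in procs:
        p.start()
    for p in procs:
        p.join()
    return counter.value, arr[:]  # slicing a shared Array returns a list


if __name__ == "__main__":
    print(run())
```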

Example 4

```python
import multiprocessing as mp
import time


def job(x):
    return x**2


def multiCore1():
    with mp.Pool() as pool:
        # apply() blocks until each result is back, so tasks run one at a time
        res = [pool.apply(job, (j,)) for j in range(1000000)]
        # print(res)


def multiCore2():
    with mp.Pool() as pool:
        # apply_async() returns an AsyncResult immediately; get() waits for it
        multi_res = [pool.apply_async(job, (j,)) for j in range(1000000)]
        res = [r.get() for r in multi_res]


if __name__ == '__main__':
    s_time = time.time()
    multiCore1()
    print("apply run time:", time.time() - s_time)
    s_time = time.time()
    multiCore2()
    print("apply_async run time:", time.time() - s_time)
```

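`apply` is blocking: it waits for each result before returning, so a loop of `apply` calls hands tasks to the pool one at a time and gets no parallelism. `apply_async` returns an `AsyncResult` immediately, so all tasks can be queued and processed by the workers in parallel before `get()` collects the results. This is why `apply` is slower than `apply_async`.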
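The blocking difference is easier to see with tasks that take noticeable time. This sketch assumes a hypothetical `slow_square` that sleeps 0.2 s to simulate work. It compares the two calls on a pool of 4 workers.

```python
import multiprocessing as mp
import time


def slow_square(x):
    time.sleep(0.2)  # simulated work so the blocking difference is visible
    return x * x


def with_apply(pool, data):
    # apply() blocks until each result is ready: tasks run one after another
    return [pool.apply(slow_square, (x,)) for x in data]


def with_apply_async(pool, data):
    # apply_async() returns an AsyncResult at once, so every task is queued
    # and the workers run them in parallel; get() then waits for each result
    handles = [pool.apply_async(slow_square, (x,)) for x in data]
    return [h.get() for h in handles]


if __name__ == "__main__":
    data = range(4)
    with mp.Pool(processes=4) as pool:
        for fn in (with_apply, with_apply_async):
            start = time.perf_counter()
            result = fn(pool, data)
            print(fn.__name__, result, "%.2fs" % (time.perf_counter() - start))
```

With 4 workers and 4 tasks, the `apply_async` version should take roughly the time of one task. The `apply` version takes about the sum of all four.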