Deploying Elasticsearch, Logstash, Kibana and Filebeat 6.5.4 with docker-compose

Deploying the ES cluster

Elasticsearch can be deployed from its Docker image, which is based on centos:7. All image versions are listed at www.docker.elastic.co.

System configuration

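- Create an elasticsearch user. Inside the container, Elasticsearch runs as the user elasticsearch (uid:gid 1000:1000 in the official 6.x image). Creating a matching user on the host, with the same uid, lets you give it ownership of the files and directories mounted as volumes; otherwise the container fails with permission errors.

- Set the maximum number of open files to at least 65536.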

CentOS configuration:

```
root@sit-server:~# tail /etc/security/limits.conf
* hard nofile 1024000
* soft nofile 1024000
* soft memlock unlimited
* hard memlock unlimited
```

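On Ubuntu, first uncomment the following line in /etc/pam.d/su (remove the leading #) so that limits.conf also applies to su sessions, then apply the same configuration as on CentOS:

```
# session required pam_limits.so
```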

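- Disable swap

```
root@sit-server:~# swapoff -a
```

swapoff -a only lasts until the next reboot; to keep swap off permanently, also comment out the swap entries in /etc/fstab.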

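- Virtual memory

Elasticsearch uses an mmapfs directory by default to store its indices. The operating system's default limit on mmap counts is likely too low, which can lead to out-of-memory exceptions. On Linux, raise it in /etc/sysctl.conf and reload the file so it takes effect without a reboot:

```
$ grep vm.max_map_count /etc/sysctl.conf
vm.max_map_count=262144
$ sudo sysctl -p
```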

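- Set the maximum number of threads to at least 4096. ulimit -u only changes the current shell; the nproc entries in limits.conf make the setting persistent.

```
root@sit-server:~# ulimit -u 4096
root@sit-server:~# tail /etc/security/limits.conf
* hard nproc 100000
* soft nproc 100000
```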
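The host settings above can be checked in one go before starting the containers. A minimal sketch, assuming bash on Linux with /proc mounted; `check_min` and `es_preflight` are hypothetical helper names, and the thresholds are the ones listed above (memlock is expected to be unlimited).

```bash
#!/bin/bash
# Hypothetical pre-flight check for the Elasticsearch host settings above.

# check_min NAME ACTUAL REQUIRED: succeed if ACTUAL >= REQUIRED ("unlimited" always passes)
check_min() {
  local name=$1 actual=$2 required=$3
  if [ "$actual" = "unlimited" ] || [ "$actual" -ge "$required" ]; then
    echo "OK   $name=$actual (>= $required)"
    return 0
  fi
  echo "FAIL $name=$actual (need >= $required)"
  return 1
}

# es_preflight: check the limits of the current session and the kernel settings
es_preflight() {
  local rc=0
  check_min nofile "$(ulimit -n)" 65536 || rc=1
  check_min nproc "$(ulimit -u)" 4096 || rc=1
  check_min vm.max_map_count "$(cat /proc/sys/vm/max_map_count)" 262144 || rc=1
  if [ "$(ulimit -l)" = "unlimited" ]; then
    echo "OK   memlock=unlimited"
  else
    echo "FAIL memlock=$(ulimit -l) (need unlimited)"
    rc=1
  fi
  # /proc/swaps always has a header line; any further line is an active swap area
  if [ "$(wc -l < /proc/swaps)" -gt 1 ]; then
    echo "FAIL swap is still enabled"
    rc=1
  else
    echo "OK   swap is disabled"
  fi
  return "$rc"
}
```

Run es_preflight in a fresh login shell, since limits.conf only applies to new sessions. Containers typically inherit their limits from the Docker daemon rather than from limits.conf (which is why the compose file below sets memlock explicitly), so it is also worth checking inside a running container, e.g. `docker exec es1 bash -c 'ulimit -n; ulimit -u; ulimit -l'`.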

docker-compose file:

```yaml
version: '2.2'

networks:
  esnet:
    driver: bridge

services:
  es1:
    image: docker.elastic.co/elasticsearch/elasticsearch:6.5.4
    container_name: es1
    hostname: es1
    volumes:
      - /app/esdata/es1:/usr/share/elasticsearch/data  # data volume (the host directory must be owned by the elasticsearch user)
      - /home/elasticsearch/elk/esconfig/es1.yml:/usr/share/elasticsearch/config/elasticsearch.yml  # configuration file
      - /etc/localtime:/etc/localtime  # keep the container's time zone in sync with the host
    environment:
      - TZ=Asia/Shanghai  # no quotes here: in list form they would become part of the value
      - "ES_JAVA_OPTS=-Xms1g -Xmx1g"  # heap size, usually up to half of physical RAM; this host has 8 GB and runs 3 ES nodes, so each gets 1g
    ulimits:  # unlimited memlock so the heap can be locked in RAM (bootstrap.memory_lock)
      memlock:
        soft: -1
        hard: -1
    ports:
      - "9201:9201"
      - "9301:9301"
    networks:
      - esnet

  es2:
    image: docker.elastic.co/elasticsearch/elasticsearch:6.5.4
    container_name: es2
    hostname: es2
    volumes:
      - /app/esdata/es2:/usr/share/elasticsearch/data
      - /home/elasticsearch/elk/esconfig/es2.yml:/usr/share/elasticsearch/config/elasticsearch.yml
      - /etc/localtime:/etc/localtime
    environment:
      - TZ=Asia/Shanghai
      - "ES_JAVA_OPTS=-Xms1g -Xmx1g"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    ports:
      - "9202:9202"
      - "9302:9302"
    networks:
      - esnet

  es3:
    image: docker.elastic.co/elasticsearch/elasticsearch:6.5.4
    container_name: es3
    hostname: es3
    volumes:
      - /app/esdata/es3:/usr/share/elasticsearch/data
      - /home/elasticsearch/elk/esconfig/es3.yml:/usr/share/elasticsearch/config/elasticsearch.yml
      - /etc/localtime:/etc/localtime
    environment:
      - TZ=Asia/Shanghai
      - "ES_JAVA_OPTS=-Xms1g -Xmx1g"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    ports:
      - "9203:9203"
      - "9303:9303"
    networks:
      - esnet
```
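The compose file bind-mounts /app/esdata/es1 to es3 as data directories, and these host directories must belong to the user Elasticsearch runs as. A minimal sketch for preparing them, assuming the official 6.x image's uid:gid of 1000:1000; `prepare_es_dirs` is a hypothetical helper name.

```bash
#!/bin/bash
# Hypothetical helper: create the host directories bind-mounted as /usr/share/elasticsearch/data.
# Assumption: the official 6.x image runs Elasticsearch as uid:gid 1000:1000.

# prepare_es_dirs BASE COUNT: create BASE/es1 .. BASE/esCOUNT and hand them to uid 1000
prepare_es_dirs() {
  local base=$1 count=$2 i=1
  while [ "$i" -le "$count" ]; do
    mkdir -p "$base/es$i" || return 1
    if [ "$(id -u)" -eq 0 ]; then
      chown 1000:1000 "$base/es$i"
    else
      # changing ownership to another uid needs root; report instead of failing
      echo "as root, run: chown 1000:1000 $base/es$i" >&2
    fi
    i=$((i + 1))
  done
}
```

Run it as root, for example `prepare_es_dirs /app/esdata 3`, and check the numeric owners with `ls -ln /app/esdata`. The mounted es*.yml files only need to be readable by that user.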

- Elasticsearch configuration files:

```
root@ELK:/home/elasticsearch/elk/esconfig# pwd
/home/elasticsearch/elk/esconfig
root@ELK:/home/elasticsearch/elk/esconfig# ls
es1.yml es2.yml es3.yml jvm.options
root@ELK:/home/elasticsearch/elk/esconfig# cat *.yml|grep -v "^#"
cluster.name: "es-cluster"
node.name: es1
network.host: 0.0.0.0
http.port: 9201  # HTTP port for clients
transport.tcp.port: 9301  # TCP transport port for node-to-node communication
http.cors.enabled: true
http.cors.allow-origin: "*"
bootstrap.memory_lock: true
xpack.monitoring.collection.enabled: true  # default false; true enables collection of monitoring data
node.master: true  # whether this node is eligible to be elected master (default true); if the master fails, a new one is elected from the remaining eligible nodes
node.data: true  # whether this node stores index data (default true)
discovery.zen.ping.unicast.hosts: ["es1:9301","es2:9302","es3:9303"]
discovery.zen.minimum_master_nodes: 2  # minimum number of master-eligible nodes that must be visible to elect a master (default 1); to avoid split brain, set it to (master_eligible_nodes / 2) + 1

cluster.name: "es-cluster"
node.name: es2
network.host: 0.0.0.0
http.port: 9202
transport.tcp.port: 9302
http.cors.enabled: true
http.cors.allow-origin: "*"
bootstrap.memory_lock: true
node.master: true
node.data: true
xpack.monitoring.collection.enabled: true  # default false; true enables collection of monitoring data
discovery.zen.ping.unicast.hosts: ["es1:9301","es2:9302","es3:9303"]
discovery.zen.minimum_master_nodes: 2

cluster.name: "es-cluster"
node.name: es3
network.host: 0.0.0.0
http.port: 9203
transport.tcp.port: 9303
http.cors.enabled: true
http.cors.allow-origin: "*"
bootstrap.memory_lock: true
node.master: true
node.data: true
xpack.monitoring.collection.enabled: true  # default false; true enables collection of monitoring data
discovery.zen.ping.unicast.hosts: ["es1:9301","es2:9302","es3:9303"]
discovery.zen.minimum_master_nodes: 2
```
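The minimum_master_nodes formula uses integer division, which is easy to get off by one. A tiny sketch of the arithmetic; `min_master_nodes` is a hypothetical helper name.

```bash
#!/bin/bash
# min_master_nodes N: quorum for N master-eligible nodes, i.e. floor(N / 2) + 1
min_master_nodes() {
  echo $(( $1 / 2 + 1 ))
}

for n in 1 2 3 4 5; do
  echo "$n master-eligible node(s) -> discovery.zen.minimum_master_nodes: $(min_master_nodes "$n")"
done
```

For the three master-eligible nodes here it gives 2, matching the configuration. With only two master-eligible nodes the quorum is also 2, so losing either node stops master election; that is why three is the usual minimum.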

- Verify the deployment

```
root@ELK:/home/elasticsearch/elk/logstash# curl -X GET "localhost:9201/_cluster/settings?pretty"
{
  "persistent" : {
    "xpack" : {
      "monitoring" : {
        "collection" : {
          "enabled" : "true"
        }
      }
    }
  },
  "transient" : { }
}
```
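GET _cluster/settings only lists settings applied through the cluster settings API; values set in elasticsearch.yml do not appear under persistent or transient. To confirm that all three nodes actually joined, cluster health and the node list are more direct. A minimal sketch, assuming curl on the host and the port mapping above; `json_field` and `es_wait_green` are hypothetical helper names, and `json_field` is only a sed-based extractor for flat, single-line JSON, not a real JSON parser.

```bash
#!/bin/bash
# json_field KEY: print the first string value of "KEY" in single-line JSON on stdin
json_field() {
  sed -n "s/.*\"$1\"[[:space:]]*:[[:space:]]*\"\([^\"]*\)\".*/\1/p" | head -n 1
}

# es_wait_green [URL]: wait (server side, up to 60s) for green status; succeed only if green
es_wait_green() {
  local url=${1:-http://localhost:9201}
  local status
  status=$(curl -s "$url/_cluster/health?wait_for_status=green&timeout=60s" | json_field status)
  echo "cluster status: ${status:-unreachable}"
  [ "$status" = "green" ]
}
```

Usage: `es_wait_green http://localhost:9201 && curl -s 'http://localhost:9201/_cat/nodes?v'` should list es1, es2 and es3, with `*` in the master column for the elected master.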

Deploying Logstash

- docker-compose file

```yaml
version: '2.2'

networks:
  esnet:
    driver: bridge

services:
  logstash:
    image: docker.elastic.co/logstash/logstash:6.5.4
    hostname: logstash
    # user: root
    container_name: logstash
    environment:
      - TZ=Asia/Shanghai
    volumes:
      - /home/elasticsearch/elk/logstash/logstash.yml:/usr/share/logstash/config/logstash.yml:ro  # main settings file
      # - /home/elasticsearch/elk/logstash/logstash.conf:/usr/share/logstash/config/logstash.conf
      # - /home/elasticsearch/elk/logstash/jvm.options:/usr/share/logstash/config/jvm.options
      - /home/elasticsearch/elk/logstash/pipeline/logstash.conf:/usr/share/logstash/pipeline/logstash.conf:ro  # pipeline configuration
    ports:
      - "5044:5044"
    networks:
      - esnet
```

- Configuration files

```
root@ELK:/home/elasticsearch/elk/logstash# pwd
/home/elasticsearch/elk/logstash
root@ELK:/home/elasticsearch/elk/logstash# cat logstash.yml
node.name: logstash
#log.level: info
#log.format: json
http.host: "0.0.0.0"
xpack.monitoring.elasticsearch.url: ["http://es1:9201","http://es2:9202"]
xpack.monitoring.enabled: true  # collect monitoring data
root@ELK:/home/elasticsearch/elk/logstash# cat pipeline/logstash.conf
input {
  beats {
    port => 5044
  }
}

filter {
  if [fields][log-source] == 'iot-gateway' {
    grok {
      match => { "message" => "%{COMBINEDAPACHELOG}" }  # see https://www.jianshu.com/p/ee4266b1cb85
    }
  }
  grok {
    match => { "message" => "%{LOGLEVEL:level} %{WORD} \[%{DATA:request}\]" }  # grok syntax: https://www.cnblogs.com/stozen/p/5638369.html
  }
  grok {
    match => { "message" => "%{PATH:uri}" }
  }
  date {
    match => [ "timestamp" , "dd/MMM/yyyy:HH:mm:ss Z" ]
  }
  # json {
  #   source => "message"
  # }
  geoip {
    source => "clientip"  # look up the client IP
  }
}

output {
  elasticsearch {
    hosts => ["es1:9201","es2:9202"]
    #index => "%{[@metadata][beat]}-%{[@metadata][version]}-%{+YYYY.MM.dd}"
    index => "%{[beat][hostname]}-%{[fields][log-source]}-%{+YYYY.MM.dd}"  # index named after the Beat host name and the log source, which comes from the fields Filebeat sets per log file
    #user => "elastic"
    #password => "changeme"
  }
  # stdout { codec => rubydebug }  # uncomment to print events for debugging
}
```
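The index option builds each index name from event fields. %{+YYYY.MM.dd} formats the event's @timestamp, which Logstash keeps in UTC, so with the +0800 logs here the date in the index name changes at 08:00 local time rather than at midnight. A minimal sketch reproducing the naming for a given timestamp, assuming @timestamp in the ISO-8601 UTC form seen in the search results (e.g. 2019-07-23T04:10:50.000Z); `index_name` is a hypothetical helper name.

```bash
#!/bin/bash
# index_name HOSTNAME LOG_SOURCE TIMESTAMP (hypothetical helper)
# Mimics index => "%{[beat][hostname]}-%{[fields][log-source]}-%{+YYYY.MM.dd}"
# for a UTC ISO-8601 @timestamp such as 2019-07-23T04:10:50.000Z.
index_name() {
  local day
  # keep YYYY-MM-DD and turn the dashes into dots
  day=$(printf '%s' "$3" | cut -c1-10 | sed 's/-/./g')
  echo "$1-$2-$day"
}

index_name prod-web iot-gateway 2019-07-23T04:10:50.000Z
```

The call above yields prod-web-iot-gateway-2019.07.23, the index name in the verification output. Elasticsearch index names must be lowercase, so this scheme assumes lowercase host names and log-source values.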

- Verify the deployment. prod-* matches the index names created by the Logstash output; the output below is one hit from the response.

```
root@ELK:/home/elasticsearch/elk/logstash# curl -XGET 'http://localhost:9201/prod-*/_search?pretty'
{
  "_index" : "prod-web-iot-gateway-2019.07.23",
  "_type" : "doc",
  "_id" : "KVoGHWwBx3_VG-jcXYsJ",
  "_score" : 1.0,
  "_source" : {
    "agent" : "\"curl\"",
    "@timestamp" : "2019-07-23T04:10:50.000Z",
    "prospector" : {
      "type" : "log"
    },
    "tags" : [
      "beats_input_codec_plain_applied",
      "_grokparsefailure"
    ],
    "timestamp" : "23/Jul/2019:12:10:50 +0800",
    "host" : {
      "name" : "prod-web"
    },
    "message" : "180.163.9.69 - - [23/Jul/2019:12:10:50 +0800] \"GET / HTTP/1.1\" 200 612 \"-\" \"curl\" \"-\"",
    "response" : "200",
    "@version" : "1",
    "ident" : "-",
    "offset" : 83180,
    "uri" : "/Jul/2019:12:10:50",
    "fields" : {
      "log-source" : "iot-gateway"
    },
    "verb" : "GET",
    "bytes" : "612",
    "geoip" : {
      "ip" : "180.163.9.69",
      "country_code3" : "CN",
      "continent_code" : "AS",
      "location" : {
        "lat" : 31.0456,
        "lon" : 121.3997
      },
      "country_code2" : "CN",
      "country_name" : "China",
      "region_name" : "Shanghai",
      "longitude" : 121.3997,
      "latitude" : 31.0456,
      "city_name" : "Shanghai",
      "region_code" : "31",
      "timezone" : "Asia/Shanghai"
    },
    "beat" : {
      "hostname" : "prod-web",
      "name" : "prod-web",
      "version" : "6.5.4"
    },
    "referrer" : "\"-\"",
    "source" : "/tmp/micro_services_log/iot-gateway/access.log",
    "clientip" : "180.163.9.69",
    "auth" : "-",
    "request" : "/",
    "input" : {
      "type" : "log"
    },
    "httpversion" : "1.1"
  }
}
```
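The hit above carries the _grokparsefailure tag and a uri of /Jul/2019:12:10:50. Both come from the two grok filters that run on every event: the access-log line contains no log level, so the LOGLEVEL pattern fails, and the unanchored PATH pattern matches the first slash-separated run it finds, which is inside the timestamp. If those two groks are meant only for application logs, moving them into an else branch of the iot-gateway conditional is one way to avoid this. To see how many documents are affected, the _count API accepts a query-string query. A minimal sketch with hypothetical helper names; `json_count` is a sed-based extractor for single-line JSON, not a JSON parser.

```bash
#!/bin/bash
# json_count: print the integer "count" field of an Elasticsearch _count response on stdin
json_count() {
  sed -n 's/.*"count"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p'
}

# count_tagged ES_URL INDEX TAG: number of documents in INDEX whose tags contain TAG
count_tagged() {
  curl -s "$1/$2/_count?q=tags:$3" | json_count
}
```

Usage: `count_tagged http://localhost:9201 'prod-*' _grokparsefailure` (quote the index pattern so the shell does not expand the `*`).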

Deploying Kibana

- docker-compose file:

```yaml
version: '2.2'

networks:
  esnet:
    driver: bridge

services:
  kibana:
    image: docker.elastic.co/kibana/kibana:6.5.4
    hostname: kibana
    container_name: kibana
    environment:
      - TZ=Asia/Shanghai
    volumes:
      - /home/elasticsearch/elk/kibana/kibana.yml:/usr/share/kibana/config/kibana.yml
    ports:
      - "5601:5601"  # publish the web UI to the host
    networks:
      - esnet
```

- Kibana configuration file (kibana.yml):

```yaml
server.port: 5601
server.host: "0.0.0.0"
elasticsearch.url: "http://es3:9203"
server.name: "kibana"
xpack.monitoring.ui.container.elasticsearch.enabled: true  # default false; for Elasticsearch running in containers, the Node Listing shows CPU usage based on cgroup statistics, and the Node Overview page shows cgroup CPU usage as well
xpack.monitoring.kibana.collection.enabled: true  # default true; set to false when the data is collected by Beats instead
xpack.monitoring.enabled: true  # enable the monitoring features (default true)
```
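Kibana can take a while to start after the container is up. A small polling loop avoids guessing; a minimal sketch, assuming curl is installed and Kibana's port 5601 is reachable from where it runs; `wait_for_http` is a hypothetical helper name.

```bash
#!/bin/bash
# wait_for_http URL [TRIES] [DELAY]: poll URL until it answers with HTTP 200
wait_for_http() {
  local url=$1 tries=${2:-30} delay=${3:-5} i=1 code=
  while [ "$i" -le "$tries" ]; do
    code=$(curl -s -o /dev/null -w '%{http_code}' "$url")
    if [ "$code" = "200" ]; then
      echo "$url is up"
      return 0
    fi
    # no sleep after the last attempt
    [ "$i" -lt "$tries" ] && sleep "$delay"
    i=$((i + 1))
  done
  echo "$url not ready after $tries attempts (last HTTP code: ${code:-none})" >&2
  return 1
}
```

Usage: `wait_for_http http://localhost:5601/api/status 30 5`. A 200 only means the server is answering; the JSON returned by /api/status also reports an overall state, which should be green.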

Deploying Filebeat

- docker start script:

```bash
#!/bin/bash
docker run -d --name=filebeat \
  --user=root \
  --hostname=nginx \
  --restart=on-failure \
  --net=host \
  --log-driver json-file --log-opt max-size=10m --log-opt max-file=3 \
  -v "/usr/local/filebeat/filebeat.yml:/usr/share/filebeat/filebeat.yml" \
  -v "/tmp/micro_services_log:/tmp/micro_services_log" \
  docker.elastic.co/beats/filebeat:6.5.4
```
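Because the container runs Filebeat as root, the bind-mounted filebeat.yml has to pass Filebeat's config permission check: it must be owned by root (or by the user running Filebeat) and must not be writable by group or others, otherwise Filebeat refuses to start (the check can be disabled with -strict.perms=false). A minimal sketch of the writability part, assuming a POSIX find; `beat_config_ok` is a hypothetical helper name.

```bash
#!/bin/bash
# beat_config_ok FILE: succeed if FILE exists and is not writable by group or others
beat_config_ok() {
  [ -f "$1" ] || return 1
  # -perm -020: group write bit set; -perm -002: other write bit set
  [ -z "$(find "$1" \( -perm -020 -o -perm -002 \) -print)" ]
}
```

Usage: `chown root:root /usr/local/filebeat/filebeat.yml && chmod 644 /usr/local/filebeat/filebeat.yml`, then `beat_config_ok /usr/local/filebeat/filebeat.yml && echo ok`. If the check fails, `docker logs filebeat` shows the error. Filebeat 6.x also provides a `test output` subcommand, so `docker exec filebeat filebeat test output` should report whether the Logstash endpoint is reachable.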

- Filebeat configuration file (filebeat.yml)

```yaml
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /tmp/micro_services_log/iot-gateway/*.log
  fields:
    log-source: iot-gateway  # must match the [fields][log-source] condition in the Logstash filter
  # exclude_lines: ['^[0-9]{4}-[0-9]{2}-[0-9]{2}\s[0-9]{2}:[0-9]{2}:[0-9]{2}\sINFO']

#setup.template.name: "indoormap"
#setup.template.pattern: "indoormap-"

#output.elasticsearch:
#  hosts: ["http://192.168.110.7:9201"]
#  index: "indoormap-%{[fields][log-source]}-%{+yyyy.MM.dd}"

output.logstash:
  hosts: ["192.168.110.7:5044"]
```
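To push one event through the whole chain (Filebeat, Logstash, Elasticsearch, Kibana), append a line in nginx's combined log format to a file Filebeat is watching. A minimal sketch; `emit_test_line` is a hypothetical helper, and the request path /elk-test is an arbitrary marker to search for.

```bash
#!/bin/bash
# emit_test_line FILE: append one nginx "combined"-format access-log line to FILE
emit_test_line() {
  local ts
  # C locale so the month is an English abbreviation, as "dd/MMM/yyyy" in the date filter expects
  ts=$(LC_ALL=C date '+%d/%b/%Y:%H:%M:%S %z')
  printf '127.0.0.1 - - [%s] "GET /elk-test HTTP/1.1" 200 612 "-" "curl" "-"\n' "$ts" >> "$1"
}
```

Usage: `emit_test_line /tmp/micro_services_log/iot-gateway/access.log`, then look for /elk-test in Kibana's Discover view. Because 127.0.0.1 is not a public address, the geoip filter cannot resolve it and tags the event with _geoip_lookup_failure.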

Open the Kibana page to verify the deployment:

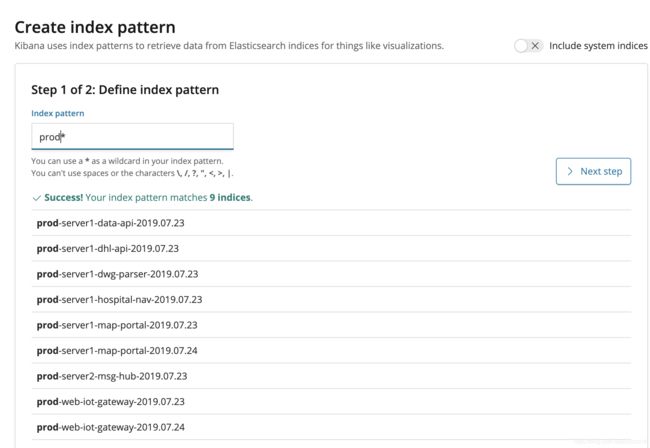

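Click Management -> Index Patterns -> Create index pattern, enter the index pattern, click Next step, select @timestamp as the time filter field, and click Create index pattern. Create index patterns that match the indices produced by the Logstash output, as shown in the figure.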
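The index pattern can also be created without the UI through Kibana's saved objects API (POST /api/saved_objects/index-pattern, which requires the kbn-xsrf header). A minimal sketch; the helper names are hypothetical, prod-* is the pattern used in the verification step, and the pattern string is inserted into the JSON without escaping, so it must not contain quotes or backslashes.

```bash
#!/bin/bash
# index_pattern_body PATTERN: JSON body for an index pattern with @timestamp as its time field
index_pattern_body() {
  printf '{"attributes":{"title":"%s","timeFieldName":"@timestamp"}}' "$1"
}

# create_index_pattern KIBANA_URL PATTERN: create the pattern through the saved objects API
create_index_pattern() {
  curl -s -X POST "$1/api/saved_objects/index-pattern" \
    -H 'kbn-xsrf: true' -H 'Content-Type: application/json' \
    -d "$(index_pattern_body "$2")"
}
```

Usage: `create_index_pattern http://localhost:5601 'prod-*'`. The response is the saved object as JSON, including the generated id.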

- Verify monitoring

- Verify the home page