Fixing the Hive error: Failed with exception Operation category READ is not supported in state standby

Problem description:

When Azkaban runs a Sqoop job that imports Oracle data into Hive, the job fails with "Failed with exception Operation category READ is not supported in state standby". The Azkaban log is as follows:

22-03-2019 15:01:14 CST sqoop INFO - Starting job sqoop at 1553238074471

...... (part of the log omitted)

22-03-2019 15:01:19 CST sqoop INFO - INFO: HADOOP_MAPRED_HOME is /u01/app/hadoop

22-03-2019 15:01:23 CST sqoop INFO - Note: /tmp/sqoop-hadoop/compile/4aa3a6c562acbf7c394308adbd2ba742/SSJ_BURIEDINFO_CALL.java uses or overrides a deprecated API.

...... (part of the log omitted)

22-03-2019 15:01:23 CST sqoop INFO - Mar 22, 2019 7:01:23 AM org.apache.sqoop.mapreduce.ImportJobBase runImport

22-03-2019 15:01:23 CST sqoop INFO - INFO: Beginning import of SSJ.BURIEDINFO_CALL

22-03-2019 15:01:23 CST sqoop INFO - Mar 22, 2019 7:01:23 AM org.apache.hadoop.conf.Configuration.deprecation warnOnceIfDeprecated

22-03-2019 15:01:23 CST sqoop INFO - INFO: mapred.job.tracker is deprecated. Instead, use mapreduce.jobtracker.address

...... (part of the log omitted)

22-03-2019 15:01:24 CST sqoop INFO - INFO: Connecting to ResourceManager at /10.200.4.117:8032

22-03-2019 15:01:26 CST sqoop INFO - Mar 22, 2019 7:01:26 AM org.apache.sqoop.mapreduce.db.DBInputFormat setTxIsolation

22-03-2019 15:01:26 CST sqoop INFO - INFO: Using read commited transaction isolation

22-03-2019 15:01:26 CST sqoop INFO - Mar 22, 2019 7:01:26 AM org.apache.hadoop.mapreduce.JobSubmitter submitJobInternal

22-03-2019 15:01:26 CST sqoop INFO - INFO: number of splits:1

22-03-2019 15:01:27 CST sqoop INFO - Mar 22, 2019 7:01:27 AM org.apache.hadoop.mapreduce.JobSubmitter printTokens

22-03-2019 15:01:27 CST sqoop INFO - INFO: Submitting tokens for job: job_1553163796336_0004

22-03-2019 15:01:27 CST sqoop INFO - Mar 22, 2019 7:01:27 AM org.apache.hadoop.yarn.client.api.impl.YarnClientImpl submitApplication

22-03-2019 15:01:27 CST sqoop INFO - INFO: Submitted application application_1553163796336_0004

22-03-2019 15:01:27 CST sqoop INFO - Mar 22, 2019 7:01:27 AM org.apache.hadoop.mapreduce.Job submit

22-03-2019 15:01:27 CST sqoop INFO - INFO: The url to track the job: http://oracle02.auditonline.prd.df.cn:8088/proxy/application_1553163796336_0004/

22-03-2019 15:01:27 CST sqoop INFO - Mar 22, 2019 7:01:27 AM org.apache.hadoop.mapreduce.Job monitorAndPrintJob

22-03-2019 15:01:27 CST sqoop INFO - INFO: Running job: job_1553163796336_0004

22-03-2019 15:01:34 CST sqoop INFO - Mar 22, 2019 7:01:34 AM org.apache.hadoop.mapreduce.Job monitorAndPrintJob

22-03-2019 15:01:34 CST sqoop INFO - INFO: Job job_1553163796336_0004 running in uber mode : false

22-03-2019 15:01:34 CST sqoop INFO - Mar 22, 2019 7:01:34 AM org.apache.hadoop.mapreduce.Job monitorAndPrintJob

22-03-2019 15:01:34 CST sqoop INFO - INFO: map 0% reduce 0%

22-03-2019 15:05:30 CST sqoop INFO - Mar 22, 2019 7:05:30 AM org.apache.hadoop.mapreduce.Job monitorAndPrintJob

22-03-2019 15:05:30 CST sqoop INFO - INFO: map 100% reduce 0%

22-03-2019 15:05:31 CST sqoop INFO - Mar 22, 2019 7:05:31 AM org.apache.hadoop.mapreduce.Job monitorAndPrintJob

22-03-2019 15:05:31 CST sqoop INFO - INFO: Job job_1553163796336_0004 completed successfully

22-03-2019 15:05:31 CST sqoop INFO - Mar 22, 2019 7:05:31 AM org.apache.hadoop.mapreduce.Job monitorAndPrintJob

22-03-2019 15:05:31 CST sqoop INFO - INFO: Counters: 30

22-03-2019 15:05:31 CST sqoop INFO - File System Counters

22-03-2019 15:05:31 CST sqoop INFO - FILE: Number of bytes read=0

22-03-2019 15:05:31 CST sqoop INFO - FILE: Number of bytes written=127688

22-03-2019 15:05:31 CST sqoop INFO - FILE: Number of read operations=0

22-03-2019 15:05:31 CST sqoop INFO - FILE: Number of large read operations=0

22-03-2019 15:05:31 CST sqoop INFO - FILE: Number of write operations=0

22-03-2019 15:05:31 CST sqoop INFO - HDFS: Number of bytes read=87

22-03-2019 15:05:31 CST sqoop INFO - HDFS: Number of bytes written=7331590541

22-03-2019 15:05:31 CST sqoop INFO - HDFS: Number of read operations=4

22-03-2019 15:05:31 CST sqoop INFO - HDFS: Number of large read operations=0

22-03-2019 15:05:31 CST sqoop INFO - HDFS: Number of write operations=2

22-03-2019 15:05:31 CST sqoop INFO - Job Counters

22-03-2019 15:05:31 CST sqoop INFO - Launched map tasks=1

22-03-2019 15:05:31 CST sqoop INFO - Other local map tasks=1

22-03-2019 15:05:31 CST sqoop INFO - Total time spent by all maps in occupied slots (ms)=233694

22-03-2019 15:05:31 CST sqoop INFO - Total time spent by all reduces in occupied slots (ms)=0

22-03-2019 15:05:31 CST sqoop INFO - Total time spent by all map tasks (ms)=233694

22-03-2019 15:05:31 CST sqoop INFO - Total vcore-milliseconds taken by all map tasks=233694

22-03-2019 15:05:31 CST sqoop INFO - Total megabyte-milliseconds taken by all map tasks=239302656

22-03-2019 15:05:31 CST sqoop INFO - Map-Reduce Framework

22-03-2019 15:05:31 CST sqoop INFO - Map input records=65351814

22-03-2019 15:05:31 CST sqoop INFO - Map output records=65351814

22-03-2019 15:05:31 CST sqoop INFO - Input split bytes=87

22-03-2019 15:05:31 CST sqoop INFO - Spilled Records=0

22-03-2019 15:05:31 CST sqoop INFO - Failed Shuffles=0

22-03-2019 15:05:31 CST sqoop INFO - Merged Map outputs=0

22-03-2019 15:05:31 CST sqoop INFO - GC time elapsed (ms)=5748

22-03-2019 15:05:31 CST sqoop INFO - CPU time spent (ms)=259570

22-03-2019 15:05:31 CST sqoop INFO - Physical memory (bytes) snapshot=281100288

22-03-2019 15:05:31 CST sqoop INFO - Virtual memory (bytes) snapshot=2138533888

22-03-2019 15:05:31 CST sqoop INFO - Total committed heap usage (bytes)=179830784

22-03-2019 15:05:31 CST sqoop INFO - File Input Format Counters

22-03-2019 15:05:31 CST sqoop INFO - Bytes Read=0

22-03-2019 15:05:31 CST sqoop INFO - File Output Format Counters

22-03-2019 15:05:31 CST sqoop INFO - Bytes Written=7331590541

22-03-2019 15:05:31 CST sqoop INFO - Mar 22, 2019 7:05:31 AM org.apache.sqoop.mapreduce.ImportJobBase runJob

22-03-2019 15:05:31 CST sqoop INFO - INFO: Transferred 6.8281 GB in 247.242 seconds (28.2798 MB/sec)

22-03-2019 15:05:31 CST sqoop INFO - Mar 22, 2019 7:05:31 AM org.apache.sqoop.mapreduce.ImportJobBase runJob

22-03-2019 15:05:31 CST sqoop INFO - INFO: Retrieved 65351814 records.

22-03-2019 15:05:31 CST sqoop INFO - Mar 22, 2019 7:05:31 AM org.apache.sqoop.mapreduce.ImportJobBase runImport

22-03-2019 15:05:31 CST sqoop INFO - INFO: Publishing Hive/Hcat import job data to Listeners for table SSJ.BURIEDINFO_CALL

22-03-2019 15:05:31 CST sqoop INFO - Mar 22, 2019 7:05:31 AM org.apache.sqoop.manager.OracleManager setSessionTimeZone

22-03-2019 15:05:31 CST sqoop INFO - INFO: Time zone has been set to GMT

22-03-2019 15:05:31 CST sqoop INFO - Mar 22, 2019 7:05:31 AM org.apache.sqoop.manager.SqlManager execute

22-03-2019 15:05:31 CST sqoop INFO - INFO: Executing SQL statement: SELECT t.* FROM SSJ.BURIEDINFO_CALL t WHERE 1=0

22-03-2019 15:05:31 CST sqoop INFO - Mar 22, 2019 7:05:31 AM org.apache.sqoop.hive.TableDefWriter getCreateTableStmt

22-03-2019 15:05:31 CST sqoop INFO - WARNING: Column CALL_TIME had to be cast to a less precise type in Hive

22-03-2019 15:05:31 CST sqoop INFO - Mar 22, 2019 7:05:31 AM org.apache.sqoop.hive.TableDefWriter getCreateTableStmt

22-03-2019 15:05:31 CST sqoop INFO - WARNING: Column CREATE_TIME had to be cast to a less precise type in Hive

22-03-2019 15:05:31 CST sqoop INFO - Mar 22, 2019 7:05:31 AM org.apache.sqoop.hive.HiveImport importTable

22-03-2019 15:05:31 CST sqoop INFO - INFO: Loading uploaded data into Hive

22-03-2019 15:05:31 CST sqoop INFO - 19/03/22 15:05:31 INFO conf.HiveConf: Found configuration file null

22-03-2019 15:05:33 CST sqoop INFO - Mar 22, 2019 7:05:33 AM org.apache.sqoop.hive.HiveImport run

22-03-2019 15:05:33 CST sqoop INFO - INFO: which: no hbase in (/usr/local/bin:/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/sbin:/usr/java/jdk1.8.0_121/bin:/u01/app/hadoop/bin:/u01/app/hadoop/sbin:/u01/app/spark-2.4.0-bin-hadoop2.6/bin:/u01/app/spark-2.4.0-bin-hadoop2.6/sbin:/u01/app/zookeeper-3.4.13/bin:/u01/app/kafka_2.12-2.1.0/bin:/u01/app/apache-hive-2.3.4-bin/bin:/u01/app/sqoop-1.4.7.bin-hadoop-2.6.0/bin:/home/hadoop/bin:/data/ogg_app)

22-03-2019 15:05:33 CST sqoop INFO - Mar 22, 2019 7:05:33 AM org.apache.sqoop.hive.HiveImport run

...... (part of the log omitted)

22-03-2019 15:05:40 CST sqoop INFO - Mar 22, 2019 7:05:40 AM org.apache.sqoop.hive.HiveImport run

22-03-2019 15:05:40 CST sqoop INFO - INFO: OK

22-03-2019 15:05:40 CST sqoop INFO - Mar 22, 2019 7:05:40 AM org.apache.sqoop.hive.HiveImport run

22-03-2019 15:05:40 CST sqoop INFO - INFO: Time taken: 1.714 seconds

22-03-2019 15:05:40 CST sqoop INFO - Mar 22, 2019 7:05:40 AM org.apache.sqoop.hive.HiveImport run

22-03-2019 15:05:40 CST sqoop INFO - INFO: Loading data to table ssj.buriedinfo_call

22-03-2019 15:05:41 CST sqoop INFO - Mar 22, 2019 7:05:41 AM org.apache.sqoop.hive.HiveImport run

22-03-2019 15:05:41 CST sqoop INFO - INFO: Failed with exception Operation category READ is not supported in state standby

22-03-2019 15:05:41 CST sqoop INFO - Mar 22, 2019 7:05:41 AM org.apache.sqoop.hive.HiveImport run

22-03-2019 15:05:41 CST sqoop INFO - INFO: at org.apache.hadoop.hdfs.server.namenode.ha.StandbyState.checkOperation(StandbyState.java:87)

22-03-2019 15:05:41 CST sqoop INFO - Mar 22, 2019 7:05:41 AM org.apache.sqoop.hive.HiveImport run

22-03-2019 15:05:41 CST sqoop INFO - INFO: at org.apache.hadoop.hdfs.server.namenode.NameNode$NameNodeHAContext.checkOperation(NameNode.java:1727)

22-03-2019 15:05:41 CST sqoop INFO - Mar 22, 2019 7:05:41 AM org.apache.sqoop.hive.HiveImport run

22-03-2019 15:05:41 CST sqoop INFO - INFO: at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkOperation(FSNamesystem.java:1352)

22-03-2019 15:05:41 CST sqoop INFO - Mar 22, 2019 7:05:41 AM org.apache.sqoop.hive.HiveImport run

22-03-2019 15:05:41 CST sqoop INFO - INFO: at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getFileInfo(FSNamesystem.java:4174)

22-03-2019 15:05:41 CST sqoop INFO - Mar 22, 2019 7:05:41 AM org.apache.sqoop.hive.HiveImport run

22-03-2019 15:05:41 CST sqoop INFO - INFO: at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.getFileInfo(NameNodeRpcServer.java:881)

22-03-2019 15:05:41 CST sqoop INFO - Mar 22, 2019 7:05:41 AM org.apache.sqoop.hive.HiveImport run

22-03-2019 15:05:41 CST sqoop INFO - INFO: at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.getFileInfo(ClientNamenodeProtocolServerSideTranslatorPB.java:821)

22-03-2019 15:05:41 CST sqoop INFO - Mar 22, 2019 7:05:41 AM org.apache.sqoop.hive.HiveImport run

22-03-2019 15:05:41 CST sqoop INFO - INFO: at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

22-03-2019 15:05:41 CST sqoop INFO - Mar 22, 2019 7:05:41 AM org.apache.sqoop.hive.HiveImport run

22-03-2019 15:05:41 CST sqoop INFO - INFO: at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:619)

22-03-2019 15:05:41 CST sqoop INFO - Mar 22, 2019 7:05:41 AM org.apache.sqoop.hive.HiveImport run

22-03-2019 15:05:41 CST sqoop INFO - INFO: at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:975)

22-03-2019 15:05:41 CST sqoop INFO - Mar 22, 2019 7:05:41 AM org.apache.sqoop.hive.HiveImport run

22-03-2019 15:05:41 CST sqoop INFO - INFO: at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2040)

22-03-2019 15:05:41 CST sqoop INFO - Mar 22, 2019 7:05:41 AM org.apache.sqoop.hive.HiveImport run

22-03-2019 15:05:41 CST sqoop INFO - INFO: at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2036)

22-03-2019 15:05:41 CST sqoop INFO - Mar 22, 2019 7:05:41 AM org.apache.sqoop.hive.HiveImport run

22-03-2019 15:05:41 CST sqoop INFO - INFO: at java.security.AccessController.doPrivileged(Native Method)

22-03-2019 15:05:41 CST sqoop INFO - Mar 22, 2019 7:05:41 AM org.apache.sqoop.hive.HiveImport run

22-03-2019 15:05:41 CST sqoop INFO - INFO: at javax.security.auth.Subject.doAs(Subject.java:422)

22-03-2019 15:05:41 CST sqoop INFO - Mar 22, 2019 7:05:41 AM org.apache.sqoop.hive.HiveImport run

22-03-2019 15:05:41 CST sqoop INFO - INFO: at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1692)

22-03-2019 15:05:41 CST sqoop INFO - Mar 22, 2019 7:05:41 AM org.apache.sqoop.hive.HiveImport run

22-03-2019 15:05:41 CST sqoop INFO - INFO: at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2034)

22-03-2019 15:05:41 CST sqoop INFO - Mar 22, 2019 7:05:41 AM org.apache.sqoop.hive.HiveImport run

22-03-2019 15:05:41 CST sqoop INFO - INFO:

22-03-2019 15:05:41 CST sqoop INFO - Mar 22, 2019 7:05:41 AM org.apache.sqoop.hive.HiveImport run

22-03-2019 15:05:41 CST sqoop INFO - INFO: FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.MoveTask. Operation category READ is not supported in state standby

22-03-2019 15:05:41 CST sqoop INFO - Mar 22, 2019 7:05:41 AM org.apache.sqoop.hive.HiveImport run

22-03-2019 15:05:41 CST sqoop INFO - INFO: at org.apache.hadoop.hdfs.server.namenode.ha.StandbyState.checkOperation(StandbyState.java:87)

...... (part of the log omitted)

22-03-2019 15:05:41 CST sqoop INFO - INFO: at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2034)

22-03-2019 15:05:41 CST sqoop INFO - Mar 22, 2019 7:05:41 AM org.apache.sqoop.hive.HiveImport run

22-03-2019 15:05:41 CST sqoop INFO - INFO:

22-03-2019 15:05:41 CST sqoop INFO - Mar 22, 2019 7:05:41 AM org.apache.sqoop.tool.ImportTool run

22-03-2019 15:05:41 CST sqoop INFO - SEVERE: Import failed: java.io.IOException: Hive exited with status 1

22-03-2019 15:05:41 CST sqoop INFO - at org.apache.sqoop.hive.HiveImport.executeExternalHiveScript(HiveImport.java:384)

22-03-2019 15:05:41 CST sqoop INFO - at org.apache.sqoop.hive.HiveImport.executeScript(HiveImport.java:337)

22-03-2019 15:05:41 CST sqoop INFO - at org.apache.sqoop.hive.HiveImport.importTable(HiveImport.java:241)

22-03-2019 15:05:41 CST sqoop INFO - at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:537)

22-03-2019 15:05:41 CST sqoop INFO - at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:628)

22-03-2019 15:05:41 CST sqoop INFO - at org.apache.sqoop.Sqoop.run(Sqoop.java:147)

22-03-2019 15:05:41 CST sqoop INFO - at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

22-03-2019 15:05:41 CST sqoop INFO - at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183)

22-03-2019 15:05:41 CST sqoop INFO - at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234)

22-03-2019 15:05:41 CST sqoop INFO - at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243)

22-03-2019 15:05:41 CST sqoop INFO - at org.apache.sqoop.Sqoop.main(Sqoop.java:252)

22-03-2019 15:05:41 CST sqoop INFO -

22-03-2019 15:05:41 CST sqoop INFO - Process completed unsuccessfully in 266 seconds.

22-03-2019 15:05:41 CST sqoop ERROR - Job run failed!

java.lang.RuntimeException: azkaban.jobExecutor.utils.process.ProcessFailureException

at azkaban.jobExecutor.ProcessJob.run(ProcessJob.java:304)

at azkaban.execapp.JobRunner.runJob(JobRunner.java:784)

at azkaban.execapp.JobRunner.doRun(JobRunner.java:600)

at azkaban.execapp.JobRunner.run(JobRunner.java:561)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Caused by: azkaban.jobExecutor.utils.process.ProcessFailureException

at azkaban.jobExecutor.utils.process.AzkabanProcess.run(AzkabanProcess.java:130)

at azkaban.jobExecutor.ProcessJob.run(ProcessJob.java:296)

... 8 more

22-03-2019 15:05:41 CST sqoop ERROR - azkaban.jobExecutor.utils.process.ProcessFailureException cause: azkaban.jobExecutor.utils.process.ProcessFailureException

22-03-2019 15:05:41 CST sqoop INFO - Finishing job sqoop at 1553238341300 with status FAILED

Focus on the following lines:

22-03-2019 15:05:40 CST sqoop INFO - Mar 22, 2019 7:05:40 AM org.apache.sqoop.hive.HiveImport run

22-03-2019 15:05:40 CST sqoop INFO - INFO: OK

22-03-2019 15:05:40 CST sqoop INFO - Mar 22, 2019 7:05:40 AM org.apache.sqoop.hive.HiveImport run

22-03-2019 15:05:40 CST sqoop INFO - INFO: Time taken: 1.714 seconds

22-03-2019 15:05:40 CST sqoop INFO - Mar 22, 2019 7:05:40 AM org.apache.sqoop.hive.HiveImport run

22-03-2019 15:05:40 CST sqoop INFO - INFO: Loading data to table ssj.buriedinfo_call

22-03-2019 15:05:41 CST sqoop INFO - Mar 22, 2019 7:05:41 AM org.apache.sqoop.hive.HiveImport run

22-03-2019 15:05:41 CST sqoop INFO - INFO: Failed with exception Operation category READ is not supported in state standby

As the log shows, the error occurs while the data is being loaded into the Hive table.
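With a log this long, it helps to jump straight to the first failure instead of scrolling. The sketch below assumes the Azkaban job log has been saved to a local file (the name `sqoop.log` is hypothetical) and prints the first line that carries one of the failure markers seen above, together with its line number. The function name is made up for illustration.

```shell
# Print the first failure line (prefixed with its line number) from a saved job log.
# The patterns are the failure markers that appear in the log above.
first_failure() {
    grep -n -E 'Failed with exception|FAILED:|SEVERE:' "$1" | head -n 1
}

# Usage (sqoop.log is a hypothetical local copy of the Azkaban job log):
# first_failure sqoop.log
```

Reading a few lines before the reported line number then shows which stage was running when the job failed.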

Solution:

My cluster is laid out as follows:

| Server | NameNode | DataNode | Hive |
|---|---|---|---|
| 117 (nn1) | Yes (standby) | | Yes |
| 116 (nn2) | Yes (active) | Yes | |
| 156 | | Yes | |

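There are three servers. 117 (nn1) and 116 (nn2) form the active/standby NameNode pair (117 is standby, 116 is active), and 116 and 156 are DataNodes.

In other words, 116 is both a NameNode and a DataNode and is in the active state, 117 is standby, and Hive is installed on 117. After swapping the states of 117 and 116, so that 117 is active and 116 is standby, the problem was solved.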

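Thoughts:

The data flow of a Sqoop import into Hive is:

1. The Oracle table is imported into the current user's HDFS home directory (effectively as temporary files);

2. The temporary files are moved into the HDFS directory that Hive uses for the table (the log above shows this step failing in Hive's MoveTask);

3. The temporary directory is deleted.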
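The three steps above leave a visible trace in HDFS, so the stage a failed import reached can be read off the two directories. The following is a minimal sketch, assuming the `hdfs` client is on the PATH; the function name and the messages are made up for illustration, and both paths are passed in by the caller.

```shell
# Report how far a Sqoop Hive import got, judging by two HDFS directories.
# `hdfs dfs -test -d PATH` exits with 0 when PATH is an existing directory.
import_stage() {
    staging_dir=$1      # temporary directory under the user's HDFS home
    table_dir=$2        # HDFS directory of the Hive table
    if hdfs dfs -test -d "$staging_dir"; then
        echo "staging directory $staging_dir exists, load into Hive has not completed"
    elif hdfs dfs -test -d "$table_dir"; then
        echo "staging directory $staging_dir is gone, table directory $table_dir exists"
    else
        echo "neither $staging_dir nor $table_dir exists"
    fi
}

# Usage with the paths from this post:
# import_stage /user/hadoop/SSJ.BURIEDINFO_CALL /user/hive/warehouse/ssj.db/buriedinfo_call
```

Note that the second message only says the staging directory is gone; whether the table directory holds fresh data still has to be checked by listing it.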

Let's verify this flow. First check the temporary directory in HDFS; it contains the newly generated files:

hdfs dfs -ls /user/hadoop/SSJ.BURIEDINFO_CALL

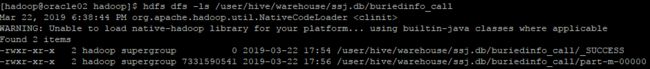

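Then check the Hive table directory in HDFS; no new file has been generated (the data file in the screenshot below is from the previous day and was not regenerated):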

hdfs dfs -ls /user/hive/warehouse/ssj.db/buriedinfo_call

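Next, swap the states of 117 and 116: 117 (nn1) goes from standby to active, and 116 (nn2) from active to standby. A few more words on switching state between the two NameNodes. Many articles online suggest the command hdfs haadmin -transitionToActive --forcemanual nn1. When I ran it, I got the following prompt: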

[hadoop@oracle02 logs]$ hdfs haadmin -transitionToActive --forcemanual nn1

You have specified the forcemanual flag. This flag is dangerous, as it can induce a split-brain scenario that WILL CORRUPT your HDFS namespace, possibly irrecoverably.

It is recommended not to use this flag, but instead to shut down the cluster and disable automatic failover if you prefer to manually manage your HA state.

You may abort safely by answering 'n' or hitting ^C now.

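The message says that the forcemanual flag is dangerous because it can cause a split-brain scenario and irrecoverable corruption of the HDFS namespace. To manage NameNode state by hand, it recommends shutting down the cluster and disabling automatic failover. The setting lives in hdfs-site.xml; mine is set to true: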

dfs.ha.automatic-failover.enabled

true
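Rather than reading hdfs-site.xml by eye, the value the client configuration actually resolves to can be printed with `hdfs getconf -confKey`. A small sketch, assuming the `hdfs` client is on the PATH; the function name is made up for illustration.

```shell
# Report whether automatic failover is enabled according to the local Hadoop configuration.
auto_failover_status() {
    value=$(hdfs getconf -confKey dfs.ha.automatic-failover.enabled)
    if [ "$value" = "true" ]; then
        echo "automatic failover is enabled"
    else
        echo "automatic failover is not enabled (value: $value)"
    fi
}

# Usage:
# auto_failover_status
```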

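Because I want the cluster to keep failing over automatically, I did not change the automatic failover setting and took a different approach: kill the NameNode process on 116, so that 117 goes from standby to active, then start the NameNode process on 116 again, which comes back as standby.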

On 116, run:

jps  # find the NameNode process ID

kill -9 <NameNode process ID>

hadoop-daemon.sh start namenode
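Killing the active NameNode with kill -9 works but is abrupt. A gentler option, which was not tried in this post and is therefore only a suggestion, is `hdfs haadmin -failover <from> <to>`. According to the Hadoop HA documentation, this command can still be used when automatic failover is configured and then performs a coordinated failover. The wrapper below is a sketch; its name is hypothetical, and it skips the failover when the target is already active.

```shell
# Ask HDFS to fail over from one NameNode to another, unless the target
# already reports "active". Untested on the cluster described in this post.
failover_to() {
    from=$1   # NameNode ID that is currently active, e.g. nn2
    to=$2     # NameNode ID that should become active, e.g. nn1
    if [ "$(hdfs haadmin -getServiceState "$to")" = "active" ]; then
        echo "$to is already active"
        return 0
    fi
    hdfs haadmin -failover "$from" "$to"
}

# Usage for the switch described here (nn2 on 116 is active, nn1 on 117 is standby):
# failover_to nn2 nn1
```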

Then check the state of both NameNodes:

hdfs haadmin -getServiceState nn1

hdfs haadmin -getServiceState nn2

117 (nn1) is now active and 116 (nn2) is now standby.
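The two status checks can be folded into one helper that prints whichever NameNode is active. This is a sketch under the assumption that the NameNode IDs (nn1 and nn2 here) are passed as arguments; the function name is made up for illustration.

```shell
# Print the ID of the first NameNode that reports "active".
# Returns 1 and writes a message to stderr if none of them does.
active_namenode() {
    for nn in "$@"; do
        state=$(hdfs haadmin -getServiceState "$nn" 2>/dev/null)
        if [ "$state" = "active" ]; then
            echo "$nn"
            return 0
        fi
    done
    echo "no active NameNode found" >&2
    return 1
}

# Usage:
# active_namenode nn1 nn2
```

A check like this could run at the start of the Azkaban job, so that a NameNode in an unexpected state is noticed before several minutes are spent on the import.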

Finally, delete the temporary directory in HDFS:

hdfs dfs -rm -r /user/hadoop/SSJ.BURIEDINFO_CALL

Now look at the HDFS temporary directory and the Hive table directory once more.

HDFS temporary directory:

hdfs dfs -ls /user/hadoop

hdfs dfs -ls /user

The original temporary directory /user/hadoop/SSJ.BURIEDINFO_CALL is gone.

Then check the Hive table directory in HDFS:

hdfs dfs -ls /user/hive/warehouse/ssj.db/buriedinfo_call

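A new file has been generated. In other words, once the data has been moved from the HDFS temporary directory into the Hive table directory, the temporary directory is deleted.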
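Whether the table directory really received a new file can be checked without comparing listings by hand. The sketch below relies on the usual `hdfs dfs -ls` output layout, in which fields 6, 7 and 8 are the modification date, the time and the path. The function name is hypothetical, and paths containing spaces are not handled.

```shell
# Print the most recently modified entry of an HDFS directory as "date time path".
# The "Found N items" header has fewer than 8 fields and is skipped.
newest_hdfs_file() {
    hdfs dfs -ls "$1" | awk 'NF >= 8 { print $6, $7, $8 }' | sort | tail -n 1
}

# Usage with the table directory from this post:
# newest_hdfs_file /user/hive/warehouse/ssj.db/buriedinfo_call
```

If the printed date matches the day of the import, the load step reached the Hive table directory.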

Done.