Optimizing the ELK log management system with a Filebeat + Kafka + ZooKeeper cluster

Note: package download URLs

Filebeat download: https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.5.1-linux-x86_64.tar.gz

Kafka download: https://mirrors.cnnic.cn/apache/kafka/2.4.1/kafka_2.13-2.4.1.tgz

ZooKeeper download: http://archive.apache.org/dist/zookeeper/zookeeper-3.4.9/zookeeper-3.4.9.tar.gz

For installing and deploying the other ELK services, see: https://blog.csdn.net/baidu_38432732/article/details/103878589

1. Install Filebeat (deploy it on every client server whose logs need to be collected)

[root@master-node elk]# tar -xf updates/filebeat-7.5.1-linux-x86_64.tar.gz

[root@master-node elk]# mv filebeat-7.5.1-linux-x86_64/ filebeat

[root@master-node elk]# cd filebeat/

2. Install ZooKeeper

[root@server-mid elk]# tar -xf zookeeper-3.4.9.tar.gz

[root@server-mid elk]# mv zookeeper-3.4.9 zookeeper

[root@server-mid elk]# cd zookeeper/bin

Edit zoo.cfg so that its effective contents are as follows:

[root@server-mid bin]# grep -v ^# ../conf/zoo.cfg

tickTime=10000

initLimit=10

syncLimit=5

dataDir=/tmp/zookeeper

clientPort=2181

[root@server-mid bin]# ./zkServer.sh start

3. Deploy Kafka

1) Unpack the package

[root@master-node elk]# tar -xf kafka_2.13-2.4.1.tgz

[root@master-node elk]# mv kafka_2.13-2.4.1 kfk

2) Edit the configuration file (192.168.0.194 is the address of this Kafka host, and 192.168.0.197 is the ZooKeeper address)

[root@master-node ~]# grep -v ^# /usr/local/elk/kfk/config/server.properties |grep -v ^$

broker.id=0

host.name=192.168.0.194

listeners=PLAINTEXT://192.168.0.194:19092

advertised.listeners=PLAINTEXT://192.168.0.194:19092

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=1024000

socket.receive.buffer.bytes=1024000

socket.request.max.bytes=1048576000

log.dirs=/tmp/kafka-logs

num.partitions=2

num.recovery.threads.per.data.dir=1

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

port = 19092

zookeeper.connect=192.168.0.197:2181

zookeeper.connection.timeout.ms=6000000

group.initial.rebalance.delay.ms=0

3) Start the Kafka service

[root@master-node ~]# /usr/local/elk/kfk/bin/kafka-server-start.sh -daemon /usr/local/elk/kfk/config/server.properties

Stop command: /usr/local/elk/kfk/bin/kafka-server-stop.sh
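Because kafka-server-start.sh -daemon returns immediately, the broker may not be listening yet when the next command runs. Below is a minimal sketch of a wait loop, assuming bash (it relies on bash's /dev/tcp feature). The function name wait_for_port and the timeout values are illustrative choices and not part of Kafka.

```shell
#!/usr/bin/env bash
# Wait until a TCP port accepts connections, or give up after a timeout.
# wait_for_port is a hypothetical helper; it uses bash's built-in /dev/tcp
# pseudo-device, so no extra tools are required.
wait_for_port() {
  local host=$1 port=$2 timeout=${3:-30}
  local deadline=$((SECONDS + timeout))
  while (( SECONDS < deadline )); do
    # Try to open a connection in a subshell; the descriptor closes when it exits.
    if (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; then
      return 0
    fi
    sleep 1
  done
  return 1
}

# Usage with the listener address from server.properties:
#   wait_for_port 192.168.0.194 19092 60 && echo "Kafka is accepting connections"
```

An open port only shows that the listener is up, so it is still worth checking the Kafka server log if later steps fail.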

Set up Kafka as a systemd service and enable it at boot

[root@data-node1 system]# vim /lib/systemd/system/kafka.service

[Unit]

Description=kafka

After=network.target

[Service]

Type=simple

StandardOutput=syslog

StandardError=syslog

SyslogIdentifier=kafka

User=root

WorkingDirectory=/usr/local/elk/kfk

ExecStart=/usr/local/elk/kfk/bin/kafka-server-start.sh /usr/local/elk/kfk/config/server.properties

KillMode=process

TimeoutStopSec=60

Restart=on-failure

RestartSec=5

RemainAfterExit=no

[Install]

WantedBy=multi-user.target

Further reading:

Creating a Kafka topic

/usr/local/elk/kfk/bin/kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic test

/usr/local/elk/kfk/bin/kafka-topics.sh --list --zookeeper localhost:2181

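/usr/local/elk/kfk/bin/kafka-console-producer.sh --broker-list localhost:19092 --topic test

/usr/local/elk/kfk/bin/kafka-console-consumer.sh --bootstrap-server localhost:19092 --topic test --from-beginning

4. Write the Filebeat configuration file with the contents below. The values of the multiline options must not have trailing spaces and must not be followed by comments, because Filebeat treats the comment as part of the value. Do not start Filebeat yet: Logstash should be started first, and Filebeat after it.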

[root@manage-host ~]# vim /usr/local/elk/filebeat/filebeat_logstash.yml

filebeat.inputs:

- type: log

  enabled: true

  reload.enabled: false

  paths:

    - /var/log/messages

  # Regex that marks the first line of a log event.

  multiline.pattern: '^\w{3} [0-9]{2} [0-9]{2}:[0-9]{2}:[0-9]{2}'

  # true: lines that do NOT match the pattern are treated as continuation lines.

  multiline.negate: true

  # after: continuation lines are appended after the preceding matching line.

  multiline.match: after

  fields:  # this key must be named fields

    # Acts as a tag. The field name is up to you and you can add several; it is used later in conditionals and in the index setting. One fields block tags the logs of one project.

    log_topics: system

- type: log

  enabled: true

  reload.enabled: false

  paths:

    - /home/deploy/nginx/logs/access.log

  multiline.pattern: '^(((25[0-5]|2[0-4]\d|1\d{2}|[1-9]?\d)\.){3}(25[0-5]|2[0-4]\d|1\d{2}|[1-9]?\d))'

  multiline.negate: true

  multiline.match: after

  fields:

    log_topics: nginx_access

- type: log

  enabled: true

  reload.enabled: false

  paths:

    - /home/deploy/tomcat8_manage1/logs/catalina-*.out

    - /home/deploy/tomcat8_manage2/logs/catalina-*.out

  multiline.pattern: '^\['

  multiline.negate: true

  multiline.match: after

  fields:

    log_topics: manage

- type: log

  reload.enabled: false

  enabled: true

  paths:

    - /home/deploy/tomcat8_coin/logs/catalina-*.out

  multiline.pattern: '^\['

  multiline.negate: true

  multiline.match: after

  fields:

    log_topics: coin

output.kafka:

  enabled: true

  hosts: ["192.168.0.194:19092"]  # Kafka broker address and port

  topic: '%{[fields][log_topics]}'

  partition.round_robin:

    reachable_only: false

  compression: gzip

  max_message_bytes: 1000000

  required_acks: 1
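Trailing spaces and trailing comments on the multiline options are easy to miss, so a small check can flag them before Filebeat is started. This is a heuristic sketch only: the function name check_multiline_lines is made up for illustration, and it would also flag a line whose quoted regex happens to contain a space followed by #.

```shell
# Print every "multiline.*" line that ends in whitespace or carries a trailing
# "# comment" (with its line number). Returns 0 when no such line is found.
# check_multiline_lines is a hypothetical helper, not a Filebeat command.
check_multiline_lines() {
  local file=$1
  if grep -nE '^[[:space:]]*multiline\.[a-z_]+:.*([[:space:]]+#.*|[[:space:]]+)$' "$file"; then
    return 1   # grep already printed the offending lines
  fi
  return 0
}

# Usage:
#   check_multiline_lines /usr/local/elk/filebeat/filebeat_logstash.yml \
#     && echo "multiline options look clean"
```

Filebeat also ships its own syntax check, which can be run as ./filebeat test config -c /usr/local/elk/filebeat/filebeat_logstash.yml, though that validates the file as a whole and does not look for this specific problem.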

Set up Filebeat as a systemd service and enable it at boot

[root@data-node1 system]# vim /lib/systemd/system/filebeat.service

[Unit]

Description=Filebeat

After=network.target

[Service]

Type=simple

StandardOutput=syslog

StandardError=syslog

SyslogIdentifier=filebeat

User=root

WorkingDirectory=/usr/local/elk/filebeat/

ExecStart=/usr/local/elk/filebeat/filebeat -e -c /usr/local/elk/filebeat/filebeat_logstash.yml

KillMode=process

TimeoutStopSec=60

Restart=on-failure

RestartSec=5

RemainAfterExit=no

[Install]

WantedBy=multi-user.target

Start the service and check its status

[root@data-node1 system]# systemctl enable filebeat

Created symlink from /etc/systemd/system/multi-user.target.wants/filebeat.service to /usr/lib/systemd/system/filebeat.service.

[root@data-node1 system]# systemctl start filebeat

[root@manage-host system]# systemctl status filebeat.service

● filebeat.service - Filebeat

Loaded: loaded (/usr/lib/systemd/system/filebeat.service; enabled; vendor preset: disabled)

Active: active (running) since Thu 2020-04-09 17:23:16 CST; 2h 59min ago

Main PID: 19494 (filebeat)

Tasks: 22

Memory: 31.7M

CGroup: /system.slice/filebeat.service

└─19494 /usr/local/elk/filebeat/filebeat -e -c /usr/local/elk/filebeat/filebeat_logstash.yml

Apr 09 20:17:46 manage-host filebeat[19494]: 2020-04-09T20:17:46.399+0800 INFO [monitoring] log/log.go:145 Non-zero metrics in the last 30s {"monitorin...

Apr 09 20:18:16 manage-host filebeat[19494]: 2020-04-09T20:18:16.401+0800 INFO [monitoring] log/log.go:145 Non-zero metrics in the last 30s {"monitorin...

Apr 09 20:18:46 manage-host filebeat[19494]: 2020-04-09T20:18:46.402+0800 INFO [monitoring] log/log.go:145 Non-zero metrics in the last 30s {"monitorin...

Apr 09 20:19:16 manage-host filebeat[19494]: 2020-04-09T20:19:16.402+0800 INFO [monitoring] log/log.go:145 Non-zero metrics in the last 30s {"monitorin...

Apr 09 20:19:46 manage-host filebeat[19494]: 2020-04-09T20:19:46.400+0800 INFO [monitoring] log/log.go:145 Non-zero metrics in the last 30s {"monitorin...

Apr 09 20:20:16 manage-host filebeat[19494]: 2020-04-09T20:20:16.399+0800 INFO [monitoring] log/log.go:145 Non-zero metrics in the last 30s {"monitorin...

Apr 09 20:20:46 manage-host filebeat[19494]: 2020-04-09T20:20:46.402+0800 INFO [monitoring] log/log.go:145 Non-zero metrics in the last 30s {"monitorin...

Apr 09 20:21:16 manage-host filebeat[19494]: 2020-04-09T20:21:16.399+0800 INFO [monitoring] log/log.go:145 Non-zero metrics in the last 30s {"monitorin...

Apr 09 20:21:46 manage-host filebeat[19494]: 2020-04-09T20:21:46.402+0800 INFO [monitoring] log/log.go:145 Non-zero metrics in the last 30s {"monitorin...

Apr 09 20:22:16 manage-host filebeat[19494]: 2020-04-09T20:22:16.403+0800 INFO [monitoring] log/log.go:145 Non-zero metrics in the last 30s {"monitorin...

Hint: Some lines were ellipsized, use -l to show in full.

5. Write the Logstash configuration file

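input {

  kafka {

    topics => ["manage","coin","nginx_access","system"]  # the log_topics values set under fields in the Filebeat configuration above

    bootstrap_servers => "192.168.0.194:19092"  # Kafka broker address

    auto_offset_reset => "latest"  # start consuming from the latest offset

    decorate_events => true  # adds the current topic, offset, group, partition and so on to the event under [@metadata][kafka]

    codec => "json"  # the data arriving from Kafka is JSON

  }

}

output {

  elasticsearch {  # send events to the Elasticsearch servers

    hosts => ["192.168.0.117:9200","192.168.0.140:9200","192.168.0.156:9200","192.168.0.197:9200"]  # Elasticsearch listen addresses and ports

    index => "%{[@metadata][kafka][topic]}_log-%{+YYYY.MM.dd}"  # index name pattern

  }

}

6. Start Logstash and Filebeat (in a producer-consumer model the consumer should be started before the producer, so here Logstash is started first and Filebeat second)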

1) Start Logstash

[root@master-node bin]# /usr/local/elk/logstash/logstash -f /usr/local/elk/logstash/bin/output_kfk.conf &

2) Start Filebeat

[root@master-node bin]# /usr/local/elk/filebeat/filebeat -e -c /usr/local/elk/filebeat/filebeat_logstash.yml &

7. Check message consumption in Kafka

1) List the consumer groups (the query below shows that the consumer group is logstash)

[root@master-node ~]# /usr/local/elk/kfk/bin/kafka-consumer-groups.sh --bootstrap-server 192.168.0.194:19092 --list

logstash

2) Describe that consumer group to see how far consumption has progressed

[root@master-node ~]# /usr/local/elk/kfk/bin/kafka-consumer-groups.sh --bootstrap-server 192.168.0.194:19092 --describe --group logstash

GROUP     TOPIC   PARTITION  CURRENT-OFFSET  LOG-END-OFFSET  LAG  CONSUMER-ID                                      HOST            CLIENT-ID

logstash  coin    1          117358          117358          0    logstash-0-9213015f-04f2-456a-8f54-7062cb171b9e  /192.168.0.194  logstash-0

logstash  manage  0          2169033         2169033         0    logstash-0-9213015f-04f2-456a-8f54-7062cb171b9e  /192.168.0.194  logstash-0

logstash  manage  1          2169030         2169030         0    logstash-0-9213015f-04f2-456a-8f54-7062cb171b9e  /192.168.0.194  logstash-0

logstash  coin    0          117362          117362          0    logstash-0-9213015f-04f2-456a-8f54-7062cb171b9e  /192.168.0.194  logstash-0

Note: meaning of each column in the describe output

GROUP: consumer group

TOPIC: topic

PARTITION: partition

CURRENT-OFFSET: offset consumed so far (how many messages have been consumed)

LOG-END-OFFSET: latest offset in the partition (how many messages there are in total)

LAG: messages not yet consumed

CONSUMER-ID: consumer ID

HOST: client host

CLIENT-ID: client ID

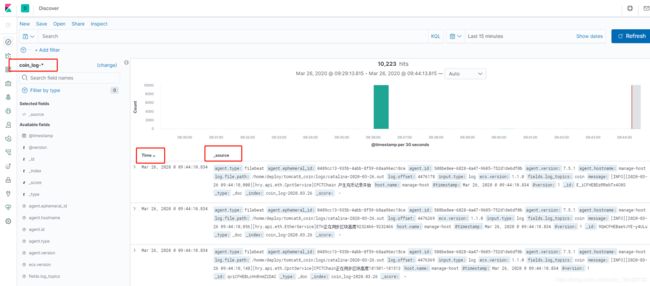
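With many partitions it can be easier to look at the total lag per topic than at individual rows. The sketch below sums the LAG column from the describe output; the function name lag_per_topic is an illustrative choice, and it assumes the column order shown in the header above (TOPIC in column 2, LAG in column 6).

```shell
# Sum the LAG column per topic from `kafka-consumer-groups.sh --describe`
# output read on stdin. lag_per_topic is a hypothetical helper name.
lag_per_topic() {
  awk '
    $1 == "GROUP" { next }                        # skip the header row
    NF >= 6 && $6 ~ /^[0-9]+$/ { lag[$2] += $6 }  # ignore rows whose LAG is "-"
    END { for (t in lag) print t, lag[t] }
  ' | sort
}

# Usage:
#   /usr/local/elk/kfk/bin/kafka-consumer-groups.sh \
#     --bootstrap-server 192.168.0.194:19092 --describe --group logstash | lag_per_topic
```

A lag that keeps growing for one topic suggests that Logstash is not keeping up with what Filebeat produces for it.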

8、至此我们的部署工作和配置工作已完成,现在我们做的工作就是在kibana上查询我们对应的index的日志数据

1)看我们显示的各自字段的json数据如下

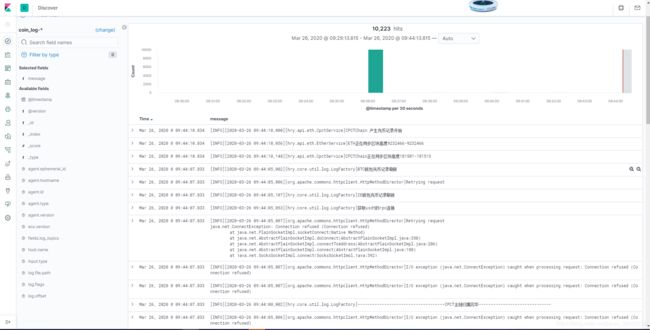

2)查看我们最原始的日志数据

A、勾选我们的message的选项

B、查看我们的原始日志数据
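If an index does not show up in Kibana, it can help to work out the name Logstash should have created and ask Elasticsearch for it directly. The sketch below builds that name from the index pattern in the Logstash output section; expected_index is a made-up helper name, and the date defaults to today in UTC because Logstash formats the event timestamp in UTC.

```shell
# Build the index name produced by the Logstash pattern
# "%{[@metadata][kafka][topic]}_log-%{+YYYY.MM.dd}" for a given topic.
# expected_index is a hypothetical helper; the second argument overrides the date.
expected_index() {
  local topic=$1 day=${2:-$(date -u +%Y.%m.%d)}
  printf '%s_log-%s\n' "$topic" "$day"
}

# Usage (Elasticsearch address taken from the Logstash output section):
#   for topic in manage coin nginx_access system; do
#     curl -s "http://192.168.0.117:9200/_cat/indices/$(expected_index "$topic")?v"
#   done
```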

1. ZooKeeper and Kafka can each be deployed as a cluster.

2. If the data volume is large, scale out the Elasticsearch servers horizontally.
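As a rough sketch of the first point, the helpers below generate the extra lines a ZooKeeper ensemble needs in zoo.cfg and the matching zookeeper.connect value for Kafka. The function names and the host names zk1, zk2 and zk3 are hypothetical; 2888 and 3888 are the conventional peer and leader-election ports, and 2181 is the clientPort used earlier in this post. Each ZooKeeper node also needs a myid file in its dataDir containing its own server number, and each Kafka broker needs a unique broker.id.

```shell
# Emit the "server.N=host:2888:3888" lines for zoo.cfg, numbering hosts from 1.
# zk_server_lines and zk_connect are hypothetical helper names.
zk_server_lines() {
  local id=1 host
  for host in "$@"; do
    printf 'server.%d=%s:2888:3888\n' "$id" "$host"
    id=$((id + 1))
  done
}

# Build the comma-separated zookeeper.connect value for server.properties.
zk_connect() {
  local out="" host
  for host in "$@"; do
    out="${out:+$out,}${host}:2181"
  done
  printf '%s\n' "$out"
}

# Usage (zk1..zk3 are placeholder host names):
#   zk_server_lines zk1 zk2 zk3 >> /usr/local/elk/zookeeper/conf/zoo.cfg
#   echo "zookeeper.connect=$(zk_connect zk1 zk2 zk3)"
```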