Frameworks and Applications on Kubernetes, Part 3: Deploying Ceph as Persistent Storage for Kubernetes Pods

I. Introduction

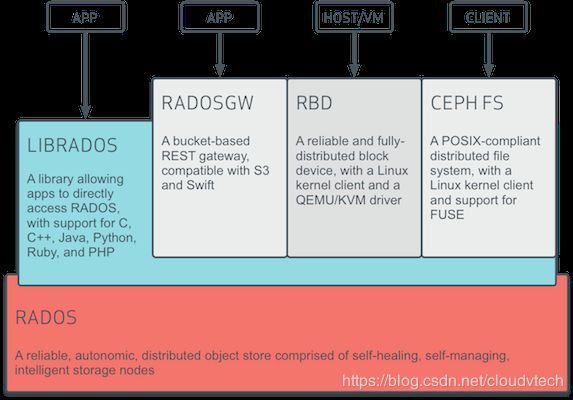

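Ceph is an industry-standard unified storage framework. It supports a variety of underlying storage media and provides block storage, shared file system storage and object storage, and it is a future direction for standardized storage integration in Kubernetes. This article deploys Ceph with Helm and provides RBD-backed PVCs to Kubernetes pods.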

Reposted from https://blog.csdn.net/cloudvtech

II. Pre-installation Preparation

2.1 Get the code and package it as a chart

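# serve the local chart repository (Helm 2) in the background and register it
helm serve &
helm repo add local http://localhost:8879/charts
git clone https://github.com/ceph/ceph-helm
cd ceph-helm/ceph
# package the charts; the result is ceph/ceph-0.1.0.tgz
make
ls ceph/ceph-0.1.0.tgz

2.2 Install dependencies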

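# Ceph client tools (provides the rbd command used to map RBD images on the node)
yum install -y ceph-common

# the nodes do not resolve names through the k8s internal DNS, and the ceph-mon pods run on the host network,
# so map the ceph-mon service name to a mon node IP; use >> to append (> would overwrite /etc/hosts)
echo "172.2.2.11 ceph-mon.ceph.svc.cluster.local" >> /etc/hosts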
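If the node preparation is re-run, a plain append adds a duplicate line every time. The following is a minimal sketch of an idempotent version of the same step in Python. The helper name ensure_hosts_entry is hypothetical, and the demo writes to a temporary file rather than the real /etc/hosts.

```python
import os
import tempfile


def ensure_hosts_entry(hosts_path: str, ip: str, hostname: str) -> bool:
    """Append "ip hostname" to hosts_path unless hostname is already mapped.

    Returns True if a line was appended, False if the entry already existed.
    Opening with mode "a" is the Python counterpart of the shell's >>;
    mode "w" would act like > and discard the existing entries.
    """
    with open(hosts_path, "r", encoding="utf-8") as f:
        content = f.read()
    for line in content.splitlines():
        # ignore comments; fields[0] is the IP, the rest are names/aliases
        fields = line.split("#", 1)[0].split()
        if len(fields) >= 2 and hostname in fields[1:]:
            return False
    # keep the new entry on its own line even if the file lacks a final newline
    prefix = "" if content == "" or content.endswith("\n") else "\n"
    with open(hosts_path, "a", encoding="utf-8") as f:
        f.write(f"{prefix}{ip} {hostname}\n")
    return True


if __name__ == "__main__":
    # demo on a temporary file instead of the real /etc/hosts
    with tempfile.NamedTemporaryFile("w", delete=False, suffix=".hosts") as tmp:
        tmp.write("127.0.0.1 localhost\n")
    name = "ceph-mon.ceph.svc.cluster.local"
    print(ensure_hosts_entry(tmp.name, "172.2.2.11", name))  # True: entry appended
    print(ensure_hosts_entry(tmp.name, "172.2.2.11", name))  # False: already present
    os.remove(tmp.name)
```

The file is only ever opened for appending, so existing entries such as localhost are never rewritten.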

2.3 Ceph configuration

ceph-overrides.yaml

network:
  public: 172.2.0.0/16
  cluster: 172.2.0.0/16

osd_devices:
  - name: dev-sdf
    device: /dev/sdf
    zap: "1"

storageclass:
  name: ceph-rbd
  pool: rbd
  user_id: admin

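Notes on the configuration above:

- 172.2.0.0/16 is the IP range of the k8s nodes.

- /dev/sdf is the disk used for Ceph storage. Each of the three k8s nodes has such a device. If the device name differs, the node labels below must change accordingly.

ls -l /dev/sdf
brw-rw---- 1 root disk 8, 80 Oct 11 12:03 /dev/sdf

- user_id: admin: if the user_id under storageclass is not admin, you must create that user manually in the Ceph cluster and create the corresponding secret in Kubernetes.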
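A quick way to sanity-check network.public and network.cluster is to confirm that every node IP falls inside the configured CIDR. The sketch below hard-codes the node IPs 172.2.2.11 to 172.2.2.13, which appear in the pod listing later in this article. The function name nodes_outside is hypothetical.

```python
import ipaddress

# network.public / network.cluster from ceph-overrides.yaml
PUBLIC_NETWORK = ipaddress.ip_network("172.2.0.0/16")
CLUSTER_NETWORK = ipaddress.ip_network("172.2.0.0/16")

# node IPs as shown in the `kubectl get pod -o wide` output later in this article
NODE_IPS = {
    "k8s-node-01": "172.2.2.11",
    "k8s-node-02": "172.2.2.12",
    "k8s-node-03": "172.2.2.13",
}


def nodes_outside(network, node_ips):
    """Return the names of nodes whose IP does not fall inside `network`."""
    return [name for name, ip in node_ips.items()
            if ipaddress.ip_address(ip) not in network]


if __name__ == "__main__":
    for label, net in (("public", PUBLIC_NETWORK), ("cluster", CLUSTER_NETWORK)):
        outside = nodes_outside(net, NODE_IPS)
        print(f"{label} {net}: " + ("all nodes inside" if not outside else f"outside: {outside}"))
```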

2.4 Configure the namespace and node labels

NS

kubectl create namespace ceph

RBAC

kubectl create -f ~/ceph-helm/ceph/rbac.yaml

LABEL

kubectl label node k8s-node-01 ceph-mon=enabled ceph-mgr=enabled ceph-osd=enabled ceph-osd-device-dev-sdf=enabled

kubectl label node k8s-node-02 ceph-mon=enabled ceph-osd=enabled ceph-osd-device-dev-sdf=enabled

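kubectl label node k8s-node-03 ceph-mon=enabled ceph-osd=enabled ceph-osd-device-dev-sdf=enabled

Notes:

The ceph-osd-device-dev-sdf label is derived from the name of the osd_devices entry in ceph-overrides.yaml (the entry named dev-sdf gives the label ceph-osd-device-dev-sdf). If a node has several OSD disks, it needs one such label per disk.

ceph-mgr can only run as a single replica, so only k8s-node-01 is labeled ceph-mgr=enabled.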
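The label naming rule can be expressed as a small generator: one ceph-ROLE=enabled label per role, plus one ceph-osd-device- label per osd_devices entry on OSD nodes. In this sketch, label_command is a hypothetical helper. The role assignment mirrors the commands above, and the second disk dev-sdg used in the test is hypothetical.

```python
# role assignment as in the kubectl label commands above (ceph-mgr on one node only)
NODE_ROLES = {
    "k8s-node-01": ["mon", "mgr", "osd"],
    "k8s-node-02": ["mon", "osd"],
    "k8s-node-03": ["mon", "osd"],
}
# names of the osd_devices entries in ceph-overrides.yaml
OSD_DEVICE_NAMES = ["dev-sdf"]


def label_command(node, roles, osd_device_names):
    """Build the `kubectl label node` command for one node (hypothetical helper)."""
    labels = [f"ceph-{role}=enabled" for role in roles]
    if "osd" in roles:
        # one ceph-osd-device-<name> label per osd_devices entry
        labels += [f"ceph-osd-device-{name}=enabled" for name in osd_device_names]
    return " ".join(["kubectl", "label", "node", node] + labels)


if __name__ == "__main__":
    for node, roles in NODE_ROLES.items():
        print(label_command(node, roles, OSD_DEVICE_NAMES))
```

For the three nodes, this prints the same three label commands used above.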


III. Install Ceph

3.1 Modify the values file

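The change moves the host directories for OSD and mon data from /var/lib/ceph-helm to /var/lib/ceph:

diff --git a/ceph/ceph/values.yaml b/ceph/ceph/values.yaml
index 5831c53..617f53b 100644
--- a/ceph/ceph/values.yaml
+++ b/ceph/ceph/values.yaml
@@ -254,8 +254,8 @@ ceph:
     mgr: true
   storage:
     # will have $NAMESPACE/{osd,mon} appended
-    osd_directory: /var/lib/ceph-helm
-    mon_directory: /var/lib/ceph-helm
+    osd_directory: /var/lib/ceph
+    mon_directory: /var/lib/ceph
     # use /var/log for fluentd to collect ceph log
     # mon_log: /var/log/ceph/mon
     # osd_log: /var/log/ceph/osd

Because helm install below uses the packaged ceph/ceph-0.1.0.tgz, re-run make after editing values.yaml so that the package includes this change.

3.2 Deploy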

helm install --name=ceph ceph/ceph-0.1.0.tgz --namespace=ceph -f ceph-overrides.yaml

LAST DEPLOYED: Thu Oct 11 04:05:45 2018

NAMESPACE: ceph

STATUS: DEPLOYED

RESOURCES:

==> v1beta1/DaemonSet

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

ceph-mon 3 3 3 3 3 ceph-mon=enabled 1h

ceph-osd-dev-sdf 3 3 3 3 3 ceph-osd-device-dev-sdf=enabled,ceph-osd=enabled 1h

==> v1beta1/Deployment

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

ceph-mds 1 1 1 0 1h

ceph-mgr 1 1 1 1 1h

ceph-mon-check 1 1 1 1 1h

ceph-rbd-provisioner 2 2 2 2 1h

ceph-rgw 1 1 1 0 1h

==> v1/Job

NAME DESIRED SUCCESSFUL AGE

ceph-mon-keyring-generator 1 1 1h

ceph-rgw-keyring-generator 1 1 1h

ceph-osd-keyring-generator 1 1 1h

ceph-mds-keyring-generator 1 1 1h

ceph-mgr-keyring-generator 1 1 1h

ceph-namespace-client-key-generator 1 1 1h

ceph-storage-keys-generator 1 1 1h

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

ceph-mon-565l5 3/3 Running 0 1h

ceph-mon-ctr8c 3/3 Running 0 1h

ceph-mon-rgs5p 3/3 Running 0 1h

ceph-osd-dev-sdf-jqk86 1/1 Running 0 1h

ceph-osd-dev-sdf-m5vc6 1/1 Running 0 1h

ceph-osd-dev-sdf-pn6kt 1/1 Running 0 1h

ceph-mds-666578c5f5-cw2fr 0/1 Pending 0 1h

ceph-mgr-69c4b4d4bb-2hvtr 1/1 Running 1 1h

ceph-mon-check-59499b664d-6jtrp 1/1 Running 0 1h

ceph-rbd-provisioner-5bc57f5f64-2wg9w 1/1 Running 0 1h

ceph-rbd-provisioner-5bc57f5f64-bmsmd 1/1 Running 0 1h

ceph-rgw-58c67497fb-llm5f 0/1 Pending 0 1h

ceph-mon-keyring-generator-862pg 0/1 Completed 0 1h

ceph-rgw-keyring-generator-jvbcb 0/1 Completed 0 1h

ceph-osd-keyring-generator-slms7 0/1 Completed 0 1h

ceph-mds-keyring-generator-c8jzj 0/1 Completed 0 1h

ceph-mgr-keyring-generator-5wrl7 0/1 Completed 0 1h

ceph-namespace-client-key-generator-w2w7f 0/1 Completed 0 1h

ceph-storage-keys-generator-vzs5m 0/1 Completed 0 1h

==> v1/Secret

NAME TYPE DATA AGE

ceph-keystone-user-rgw Opaque 7 1h

==> v1/ConfigMap

NAME DATA AGE

ceph-bin-clients 2 1h

ceph-bin 26 1h

ceph-etc 1 1h

ceph-templates 5 1h

==> v1/StorageClass

NAME PROVISIONER AGE

ceph-rbd ceph.com/rbd 1h

==> v1/Service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ceph-mon ClusterIP None <none> 6789/TCP 1h

ceph-rgw ClusterIP 10.96.22.221 <none> 8088/TCP 1h

3.3 Inspect the deployment

[root@k8s-master-01 ceph-helm]# kubectl get pod -n ceph -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

ceph-mds-666578c5f5-cw2fr 0/1 Pending 0 1h

ceph-mds-keyring-generator-c8jzj 0/1 Completed 0 1h 10.244.89.137 k8s-node-03

ceph-mgr-69c4b4d4bb-2hvtr 1/1 Running 1 1h 172.2.2.11 k8s-node-01

ceph-mgr-keyring-generator-5wrl7 0/1 Completed 0 1h 10.244.89.172 k8s-node-03

ceph-mon-565l5 3/3 Running 0 1h 172.2.2.11 k8s-node-01

ceph-mon-check-59499b664d-6jtrp 1/1 Running 0 1h 10.244.44.233 k8s-node-02

ceph-mon-ctr8c 3/3 Running 0 1h 172.2.2.12 k8s-node-02

ceph-mon-keyring-generator-862pg 0/1 Completed 0 1h 10.244.154.203 k8s-node-01

ceph-mon-rgs5p 3/3 Running 0 1h 172.2.2.13 k8s-node-03

ceph-namespace-client-key-generator-w2w7f 0/1 Completed 0 1h 10.244.44.206 k8s-node-02

ceph-osd-dev-sdf-jqk86 1/1 Running 0 1h 172.2.2.12 k8s-node-02

ceph-osd-dev-sdf-m5vc6 1/1 Running 0 1h 172.2.2.11 k8s-node-01

ceph-osd-dev-sdf-pn6kt 1/1 Running 0 1h 172.2.2.13 k8s-node-03

ceph-osd-keyring-generator-slms7 0/1 Completed 0 1h 10.244.154.198 k8s-node-01

ceph-rbd-provisioner-5bc57f5f64-2wg9w 1/1 Running 0 1h 10.244.44.205 k8s-node-02

ceph-rbd-provisioner-5bc57f5f64-bmsmd 1/1 Running 0 1h 10.244.89.133 k8s-node-03

ceph-rgw-58c67497fb-llm5f 0/1 Pending 0 1h

ceph-rgw-keyring-generator-jvbcb 0/1 Completed 0 1h 10.244.44.193 k8s-node-02

ceph-storage-keys-generator-vzs5m 0/1 Completed 0 1h 10.244.154.236 k8s-node-01

Note:

The MDS and RGW pods are Pending because no node is labeled ceph-mds=enabled or ceph-rgw=enabled.
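The Pending state can be modeled with nodeSelector's exact-match rule: a pod can only be scheduled on a node whose labels include every key=value pair of its selector. This is a simplified sketch that ignores taints, resource requests and affinity. The mds/rgw selectors are assumed from the note above, and the mon/osd selectors are taken from the NODE SELECTOR column of the helm output. The function name matching_nodes is hypothetical.

```python
# node labels as applied in section 2.4; no node carries ceph-mds or ceph-rgw
NODE_LABELS = {
    "k8s-node-01": {"ceph-mon": "enabled", "ceph-mgr": "enabled",
                    "ceph-osd": "enabled", "ceph-osd-device-dev-sdf": "enabled"},
    "k8s-node-02": {"ceph-mon": "enabled",
                    "ceph-osd": "enabled", "ceph-osd-device-dev-sdf": "enabled"},
    "k8s-node-03": {"ceph-mon": "enabled",
                    "ceph-osd": "enabled", "ceph-osd-device-dev-sdf": "enabled"},
}

# nodeSelectors: mon/osd from the NODE SELECTOR column of the helm output,
# mds/rgw assumed from the note above
SELECTORS = {
    "ceph-mon": {"ceph-mon": "enabled"},
    "ceph-osd-dev-sdf": {"ceph-osd": "enabled", "ceph-osd-device-dev-sdf": "enabled"},
    "ceph-mds": {"ceph-mds": "enabled"},
    "ceph-rgw": {"ceph-rgw": "enabled"},
}


def matching_nodes(selector, node_labels):
    """Nodes whose labels contain every key=value pair of the nodeSelector."""
    return sorted(node for node, labels in node_labels.items()
                  if all(labels.get(k) == v for k, v in selector.items()))


if __name__ == "__main__":
    for workload, selector in SELECTORS.items():
        nodes = matching_nodes(selector, NODE_LABELS)
        print(f"{workload}: {nodes if nodes else 'no matching node -> Pending'}")
```

Labeling a node with ceph-mds=enabled or ceph-rgw=enabled would give the corresponding pod a candidate node.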

Get the StorageClass

kubectl get sc

NAME PROVISIONER AGE

ceph-rbd ceph.com/rbd 2h

nfs-client cluster.local/quiet-toucan-nfs-client-provisioner 20h

Check the cluster status from inside ceph-mon

kubectl exec -it ceph-mon-ctr8c -n ceph -c ceph-mon -- ceph -s

  cluster:
    id:     676af649-2604-42ac-be5f-8cf37a712880
    health: HEALTH_WARN
            clock skew detected on mon.k8s-node-02, mon.k8s-node-03

  services:
    mon: 3 daemons, quorum k8s-node-01,k8s-node-02,k8s-node-03
    mgr: k8s-node-01(active)
    osd: 3 osds: 3 up, 3 in

  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0 bytes
    usage:   322 MB used, 32825 GB / 32826 GB avail
    pgs:

3.4 Configure the key file

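kubectl exec ceph-mon-ctr8c -n ceph -c ceph-mon -- grep key /etc/ceph/ceph.client.admin.keyring | awk '{printf "%s", $NF}' | base64

QVFBNXk3NWJBQUFBQUJBQXVicjRvNzVJa2xkN1g3YU5oQ1BGUHc9PQ0=

Note: do not pass -t (or -it) to kubectl exec here. With a TTY, the remote side ends each line with \r\n. The sample output above was captured that way: it decodes to the key followed by a carriage return (\r), which would then be stored in the secret.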
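The secret needs the base64 of the bare key string only. The following is a minimal Python sketch of the same extraction, assuming a keyring laid out as a [client.admin] section with an indented key = ... line. secret_value_from_keyring is a hypothetical name. The key used in the demo is the one obtained by decoding the sample output above.

```python
import base64


def secret_value_from_keyring(keyring_text: str) -> str:
    """Return the base64 of the `key = ...` value in a Ceph keyring file.

    str.splitlines() and str.split() both treat a carriage return as
    whitespace, so a trailing CR picked up through a TTY is dropped.
    """
    for line in keyring_text.splitlines():
        fields = line.split()
        if fields and fields[0] == "key":
            # same field the shell pipeline takes with awk's $NF
            return base64.b64encode(fields[-1].encode("ascii")).decode("ascii")
    raise ValueError("no 'key' line found in keyring")


if __name__ == "__main__":
    # keyring text as it might arrive through a TTY (CRLF line endings)
    keyring = "[client.admin]\r\n\tkey = AQA5y75bAAAAABAAubr4o75Ikld7X7aNhCPFPw==\r\n"
    print(secret_value_from_keyring(keyring))
```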

Edit the user secret present in the ceph namespace:

kubectl edit secret pvc-ceph-client-key -n ceph

Replace the value of key with your own base64 value and save.

We are going to create a pod that consumes an RBD image in the default namespace. Copy the user secret from the ceph namespace to default:

kubectl -n ceph get secrets/pvc-ceph-client-key -o json | jq '.metadata.namespace = "default"' | kubectl create -f -

secret/pvc-ceph-client-key created
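The jq filter only rewrites metadata.namespace. For readers without jq, here is a Python sketch of the same transformation. As an extra precaution, which is an assumption and not part of the original pipeline, it also drops server-assigned metadata such as resourceVersion and uid. The script name copy_to_namespace.py is hypothetical.

```python
import json
import sys

# metadata fields assigned by the API server; dropping them is a precaution
# (assumption) so the copy is treated as a brand-new object
SERVER_MANAGED_FIELDS = ("resourceVersion", "uid", "creationTimestamp", "selfLink")


def copy_to_namespace(object_json: str, namespace: str) -> str:
    """Return the object's JSON with metadata.namespace replaced (like the jq filter)."""
    obj = json.loads(object_json)
    metadata = obj.setdefault("metadata", {})
    metadata["namespace"] = namespace
    for field in SERVER_MANAGED_FIELDS:
        metadata.pop(field, None)
    return json.dumps(obj, indent=2)


if __name__ == "__main__" and len(sys.argv) > 1:
    # usage: kubectl -n ceph get secrets/pvc-ceph-client-key -o json \
    #          | python3 copy_to_namespace.py default | kubectl create -f -
    print(copy_to_namespace(sys.stdin.read(), sys.argv[1]))
```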

Configure the RBD pool

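# create a pool named rbd with 128 placement groups
kubectl -n ceph exec -ti ceph-mon-565l5 -c ceph-mon -- ceph osd pool create rbd 128
pool 'rbd' created
# initialize the pool for use by RBD
kubectl -n ceph exec -ti ceph-mon-565l5 -c ceph-mon -- rbd pool init rbd
# switch the CRUSH tunables profile to hammer, typically so that older kernel RBD clients on the nodes can still map images
kubectl -n ceph exec -ti ceph-mon-565l5 -c ceph-mon -- ceph osd crush tunables hammer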
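The pg count of 128 is consistent with the classic rule of thumb from the Ceph placement-group documentation. The rule takes total PGs as (OSDs × 100) / replica count and rounds up to a power of two. Here there are 3 OSDs, and the replica count is assumed to be 3 (Ceph's default pool size). That gives 100, which rounds up to 128. A small calculator sketch follows; suggested_pg_count is a hypothetical name.

```python
def suggested_pg_count(num_osds: int, pool_size: int = 3, target_pgs_per_osd: int = 100) -> int:
    """Rule of thumb: (OSDs * target PGs per OSD) / replica count,
    rounded up to the next power of two."""
    if num_osds < 1 or pool_size < 1:
        raise ValueError("num_osds and pool_size must be positive")
    raw = num_osds * target_pgs_per_osd / pool_size
    pg_num = 1
    while pg_num < raw:
        pg_num *= 2
    return pg_num


if __name__ == "__main__":
    # 3 OSDs (one /dev/sdf per node), assuming the default replica count of 3
    print(suggested_pg_count(3))  # 128
```

This is only a starting point for a single pool. When several pools share the same OSDs, the PG budget has to be split between them.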

IV. Using Ceph RBD as the PVC for Pods

4.1 Create the PVC

pvc-rbd.yaml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: ceph-pvc
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 20Gi
  storageClassName: ceph-rbd

kubectl create -f pvc-rbd.yaml

Check the RBD image that backs the PVC inside Ceph

kubectl get pv | grep ceph

pvc-4a8085ca-cd0d-11e8-9135-fa163ebda1b8 20Gi RWO Delete Bound default/ceph-pvc ceph-rbd 1h

kubectl -n ceph exec -ti ceph-mon-565l5 -c ceph-mon -- rbd ls

kubernetes-dynamic-pvc-5c8953c2-cd0d-11e8-bb17-8a18da79c673

kubectl -n ceph exec -ti ceph-mon-565l5 -c ceph-mon -- rbd info kubernetes-dynamic-pvc-5c8953c2-cd0d-11e8-bb17-8a18da79c673

rbd image 'kubernetes-dynamic-pvc-5c8953c2-cd0d-11e8-bb17-8a18da79c673':
    size 20480 MB in 5120 objects
    order 22 (4096 kB objects)
    block_name_prefix: rbd_data.12f3238e1f29
    format: 2
    features: layering
    flags:
    create_timestamp: Thu Oct 11 04:19:23 2018

4.2 Create a pod that uses this PVC

pod-with-rbd.yaml

kind: Pod
apiVersion: v1
metadata:
  name: ceph-rbd-pod
spec:
  containers:
  - name: busybox
    image: busybox
    command:
    - sleep
    - "3600"
    volumeMounts:
    - mountPath: "/mnt/rbd"
      name: vol1
  volumes:
  - name: vol1
    persistentVolumeClaim:
      claimName: ceph-pvc

kubectl create -f pod-with-rbd.yaml

4.3 Check the result

[root@k8s-master-01 test-pv]# kubectl get pvc | grep ceph

ceph-pvc Bound pvc-4a8085ca-cd0d-11e8-9135-fa163ebda1b8 20Gi RWO ceph-rbd 1h

kubectl describe pod ceph-rbd-pod

…

Volumes:
  vol1:
    Type:       PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)
    ClaimName:  ceph-pvc
    ReadOnly:   false
  default-token-khs2h:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-khs2h
    Optional:    false

…

[root@k8s-master-01 test-pv]# kubectl exec -it ceph-rbd-pod -- sh

/ # mount | grep rbd

/dev/rbd0 on /mnt/rbd type ext4 (rw,relatime,stripe=1024,data=ordered)

/ # df -h | grep rbd

/dev/rbd0 19.6G 44.0M 19.5G 0% /mnt/rbd

4.4 Related logs

[root@k8s-master-01 ceph-helm]# kubectl -n ceph logs ceph-mon-565l5 -c cluster-log-tailer

tail: can't open '/var/log/ceph/ceph.log': No such file or directory

2018-10-11 04:02:24.250362 mon.k8s-node-01 unknown.0 - 0 : [INF] mkfs 676af649-2604-42ac-be5f-8cf37a712880

2018-10-11 04:02:14.222918 mon.k8s-node-01 mon.0 172.2.2.11:6789/0 1 : cluster [INF] mon.k8s-node-01 calling monitor election

tail: /var/log/ceph/ceph.log has appeared; following end of new file

2018-10-11 04:02:19.227301 mon.k8s-node-01 mon.0 172.2.2.11:6789/0 2 : cluster [INF] mon.k8s-node-01 is new leader, mons k8s-node-01,k8s-node-02 in quorum (ranks 0,1)

2018-10-11 04:02:19.235731 mon.k8s-node-01 mon.0 172.2.2.11:6789/0 3 : cluster [WRN] message from mon.1 was stamped 236.528097s in the future, clocks not synchronized

2018-10-11 04:02:19.238536 mon.k8s-node-01 mon.0 172.2.2.11:6789/0 4 : cluster [INF] mon.k8s-node-01 calling monitor election

2018-10-11 04:02:24.241970 mon.k8s-node-01 mon.0 172.2.2.11:6789/0 5 : cluster [INF] mon.k8s-node-01 is new leader, mons k8s-node-01,k8s-node-02 in quorum (ranks 0,1)

2018-10-11 04:02:24.251072 mon.k8s-node-01 mon.0 172.2.2.11:6789/0 7 : cluster [WRN] mon.1 172.2.2.12:6789/0 clock skew 236.521s > max 0.05s

2018-10-11 04:02:24.251235 mon.k8s-node-01 mon.0 172.2.2.11:6789/0 8 : cluster [WRN] message from mon.1 was stamped 236.529044s in the future, clocks not synchronized

2018-10-11 04:02:24.252357 mon.k8s-node-01 mon.0 172.2.2.11:6789/0 9 : cluster [INF] pgmap 0 pgs: ; 0 bytes data, 0 kB used, 0 kB / 0 kB avail

2018-10-11 04:02:24.253631 mon.k8s-node-01 mon.0 172.2.2.11:6789/0 10 : cluster [INF] HEALTH_ERR; no osds; 3 mons down, quorum

2018-10-11 04:02:24.253670 mon.k8s-node-01 mon.0 172.2.2.11:6789/0 11 : cluster [INF] mon.k8s-node-01 calling monitor election

2018-10-11 04:02:24.258318 mon.k8s-node-01 mon.0 172.2.2.11:6789/0 12 : cluster [INF] mon.k8s-node-01 is new leader, mons k8s-node-01,k8s-node-02,k8s-node-03 in quorum (ranks 0,1,2)

2018-10-11 04:02:24.261000 mon.k8s-node-01 mon.0 172.2.2.11:6789/0 13 : cluster [WRN] mon.1 172.2.2.12:6789/0 clock skew 236.53s > max 0.05s

2018-10-11 04:02:24.263522 mon.k8s-node-01 mon.0 172.2.2.11:6789/0 14 : cluster [WRN] mon.2 172.2.2.13:6789/0 clock skew 177.015s > max 0.05s

2018-10-11 04:02:24.310959 mon.k8s-node-01 mon.0 172.2.2.11:6789/0 19 : cluster [WRN] Health check failed: clock skew detected on mon.k8s-node-02, mon.k8s-node-03 (MON_CLOCK_SKEW)

2018-10-11 04:02:24.391378 mon.k8s-node-01 mon.0 172.2.2.11:6789/0 20 : cluster [WRN] overall HEALTH_WARN clock skew detected on mon.k8s-node-02, mon.k8s-node-03

2018-10-11 04:02:47.259003 mon.k8s-node-01 mon.0 172.2.2.11:6789/0 34 : cluster [INF] Activating manager daemon k8s-node-01

2018-10-11 04:02:47.822055 mon.k8s-node-01 mon.0 172.2.2.11:6789/0 41 : cluster [INF] Manager daemon k8s-node-01 is now available

2018-10-11 04:02:49.345659 mon.k8s-node-01 mon.0 172.2.2.11:6789/0 44 : cluster [WRN] message from mon.1 was stamped 236.531430s in the future, clocks not synchronized

2018-10-11 04:02:54.264590 mon.k8s-node-01 mon.0 172.2.2.11:6789/0 46 : cluster [WRN] mon.2 172.2.2.13:6789/0 clock skew 177.019s > max 0.05s

2018-10-11 04:02:54.264691 mon.k8s-node-01 mon.0 172.2.2.11:6789/0 47 : cluster [WRN] mon.1 172.2.2.12:6789/0 clock skew 236.53s > max 0.05s

2018-10-11 04:03:33.379645 mon.k8s-node-01 mon.0 172.2.2.11:6789/0 82 : cluster [INF] osd.1 172.2.2.11:6801/53205 boot

2018-10-11 04:03:47.829164 mon.k8s-node-01 mon.0 172.2.2.11:6789/0 108 : cluster [INF] osd.0 172.2.2.13:6800/151522 boot

2018-10-11 04:03:54.265810 mon.k8s-node-01 mon.0 172.2.2.11:6789/0 114 : cluster [WRN] mon.2 172.2.2.13:6789/0 clock skew 177.019s > max 0.05s

2018-10-11 04:03:54.265891 mon.k8s-node-01 mon.0 172.2.2.11:6789/0 115 : cluster [WRN] mon.1 172.2.2.12:6789/0 clock skew 236.531s > max 0.05s

2018-10-11 04:04:07.363697 mon.k8s-node-01 mon.0 172.2.2.11:6789/0 129 : cluster [INF] osd.2 172.2.2.12:6800/39966 boot

2018-10-11 04:04:55.365117 mon.k8s-node-01 mon.0 172.2.2.11:6789/0 143 : cluster [WRN] message from mon.1 was stamped 236.532211s in the future, clocks not synchronized

2018-10-11 04:05:24.266925 mon.k8s-node-01 mon.0 172.2.2.11:6789/0 151 : cluster [WRN] mon.2 172.2.2.13:6789/0 clock skew 177.019s > max 0.05s

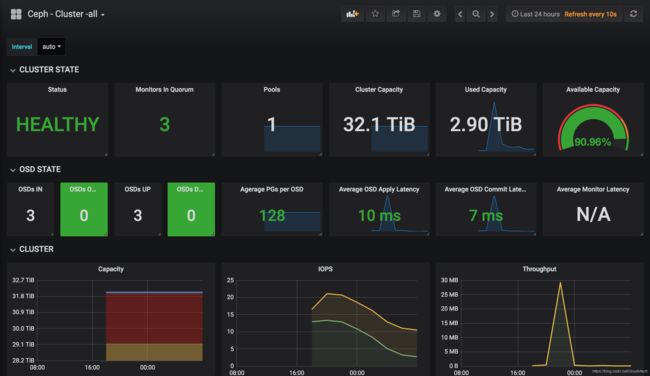

2018-10-11 04:05:24.267013 mon.k8s-node-01 mon.0 172.2.2.11:6789/0 152 : cluster [WRN] mon.1 172.2.2.12:6789/0 clock skew 236.531s > max 0.05s

V. Grafana monitoring

Add monitoring

Log into an OSD pod and run:

ceph mgr module enable prometheus
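Once the module is enabled, the endpoint can be checked before wiring up Prometheus. The sketch below parses the Prometheus text format and fetches a URL when one is given on the command line. Several details are assumptions to verify against your Ceph version: the URL (for example http://172.2.2.11:9283/metrics, since ceph-mgr runs on k8s-node-01 in this setup), the metric name ceph_health_status, and the script name. Parsing is simplified: escaping rules are not handled and timestamps are ignored.

```python
import sys
import urllib.request


def parse_samples(exposition_text: str) -> dict:
    """Parse Prometheus text-format sample lines into {series: value}.

    Simplified: skips comment lines (# HELP / # TYPE), does not handle
    escaped characters inside label values, and ignores optional timestamps.
    """
    samples = {}
    for raw_line in exposition_text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        if "}" in line:
            # series with labels, e.g. ceph_osd_up{ceph_daemon="osd.0"} 1.0
            series, rest = line.rsplit("}", 1)
            series += "}"
        else:
            series, rest = line.split(None, 1)
        samples[series] = float(rest.split()[0])
    return samples


def fetch_metrics(url: str, timeout: float = 5.0) -> str:
    """Download the raw metrics text from the given URL."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


if __name__ == "__main__" and len(sys.argv) > 1:
    # e.g. python3 check_ceph_metrics.py http://172.2.2.11:9283/metrics (hypothetical script name)
    metrics = parse_samples(fetch_metrics(sys.argv[1]))
    print("ceph_health_status =", metrics.get("ceph_health_status"))
```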

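Edit the ceph-mon service as shown below, adding a metrics port for the ceph-mgr prometheus module (9283 is the module's default port). Because ceph-mgr runs on the host network of k8s-node-01, only the endpoint on that node is expected to serve metrics on 9283.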

apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: ceph
  name: ceph-mon
  namespace: ceph
spec:
  clusterIP: None
  ports:
  - name: tcp
    port: 6789
    protocol: TCP
    targetPort: 6789
  - name: metrics
    port: 9283
    protocol: TCP
    targetPort: 9283
  selector:
    application: ceph
    component: mon
    release_group: ceph
  sessionAffinity: None
  type: ClusterIP

Add a ServiceMonitor via ServiceMonitor-ceph.yaml:

apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: ceph
  namespace: monitoring
  labels:
    prometheus: kube-prometheus
spec:
  jobLabel: k8s-ceph
  endpoints:
  - port: metrics
    interval: 10s
  selector:
    matchLabels:
      k8s-app: ceph
  namespaceSelector:
    matchNames:
    - ceph

Grafana dashboard for Ceph