I. Preface

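Compared with ovs-subnet mode, ovs-multitenant mode assigns a VXLAN VNID to each project. Packets sent by a pod are tagged with the VNID of its project, and only pods in the same project, which carry the same VNID, can receive them. This gives OpenShift's multi-tenant mode coarse-grained, project-level network isolation.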

In this mode the SDN process has to watch for OpenShift project creation and deletion events and configure a separate VXLAN VNID for each project so that their traffic is isolated.
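The isolation rule described above can be illustrated with a minimal Go sketch. It is not OpenShift code: the `canDeliver` function and the sample project names are hypothetical, and the rule is modeled as a plain VNID equality check. Treating VNID 0 as a global value that can reach every project is an assumption, based on the reg0=0 and reg1=0 flows of table 80 quoted later in this article.

```go
package main

import "fmt"

// canDeliver models the multitenant rule: a packet is accepted only when
// source and destination share a VNID. Treating VNID 0 as "global" is an
// assumption taken from the reg0=0 / reg1=0 flows in table 80.
func canDeliver(srcVNID, dstVNID uint32) bool {
	if srcVNID == 0 || dstVNID == 0 {
		return true
	}
	return srcVNID == dstVNID
}

func main() {
	// Hypothetical project-to-VNID assignments, for illustration only.
	vnids := map[string]uint32{"default": 0, "team-a": 11, "team-b": 12}

	fmt.Println("team-a -> team-a:", canDeliver(vnids["team-a"], vnids["team-a"]))
	fmt.Println("team-a -> team-b:", canDeliver(vnids["team-a"], vnids["team-b"]))
	fmt.Println("default -> team-b:", canDeliver(vnids["default"], vnids["team-b"]))
}
```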

Reposted from https://blog.csdn.net/cloudvtech

II. Code analysis: selecting the SDN network mode

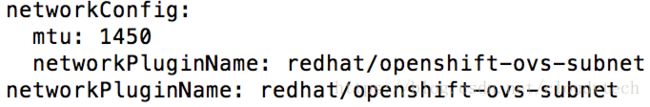

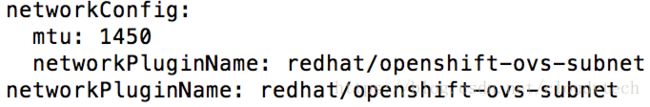

/etc/origin/node/node-config.yaml

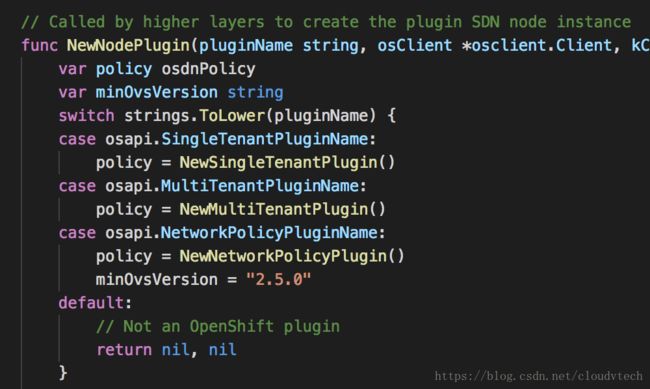

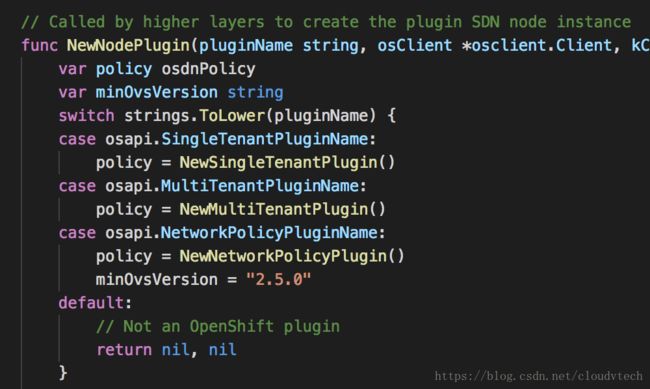

There are three SDN types (also called SDN policies), defined in origin/pkg/sdn/api/plugin.go
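As a rough illustration of how the plugin name from node-config.yaml selects a mode, here is a small Go sketch. Only the "redhat/openshift-ovs-multitenant" string is confirmed by this article. The other two strings, the constant names and the two helper functions are assumptions written for this example and may differ from what plugin.go actually contains.

```go
package main

import (
	"fmt"
	"strings"
)

// Plugin name strings. Only the multitenant one appears in this article;
// the other two values and all constant names are assumptions.
const (
	singleTenantPluginName  = "redhat/openshift-ovs-subnet"
	multiTenantPluginName   = "redhat/openshift-ovs-multitenant"
	networkPolicyPluginName = "redhat/openshift-ovs-networkpolicy"
)

// isOpenShiftSDN reports whether the configured name is one of the SDN types.
func isOpenShiftSDN(networkPluginName string) bool {
	switch strings.ToLower(networkPluginName) {
	case singleTenantPluginName, multiTenantPluginName, networkPolicyPluginName:
		return true
	}
	return false
}

// isMultitenant reports whether the configured name selects multitenant mode.
func isMultitenant(networkPluginName string) bool {
	return strings.ToLower(networkPluginName) == multiTenantPluginName
}

func main() {
	name := "redhat/openshift-ovs-multitenant" // value read from node-config.yaml
	fmt.Println("openshift sdn:", isOpenShiftSDN(name))
	fmt.Println("multitenant:  ", isMultitenant(name))
}
```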


III. Code analysis: read the configuration and initialize the SDN CNI plugin

origin/pkg/cmd/server/kubernetes/node_config.go

sdnPlugin, err := sdnplugin.NewNodePlugin(options.NetworkConfig.NetworkPluginName, originClient, kubeClient, options.NodeName, options.NodeIP, iptablesSyncPeriod, options.NetworkConfig.MTU)

origin/pkg/sdn/plugin/node.go


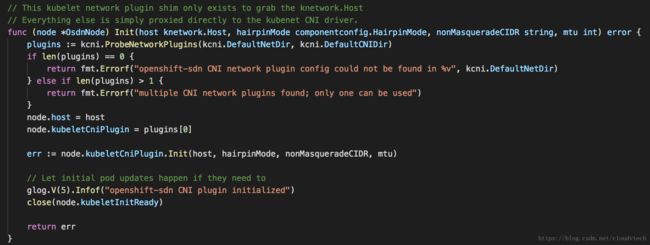

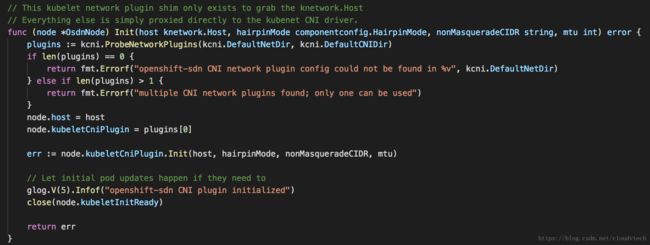

IV. Code analysis: kubelet calls the plugin initialization function

origin/pkg/sdn/plugin/plugin.go @ func (node *OsdnNode) Init(host knetwork.Host, hairpinMode componentconfig.HairpinMode, nonMasqueradeCIDR string, mtu int) error {

origin/vendor/k8s.io/kubernetes/pkg/kubelet/network/plugins.go @ func (plugin *NoopNetworkPlugin) Init(host Host, hairpinMode componentconfig.HairpinMode, nonMasqueradeCIDR string, mtu int) error {

Nov 28 06:45:46 ic-node8 origin-node: I1128 06:45:46.933879 1724 plugin.go:30] openshift-sdn CNI plugin initialized

origin/vendor/k8s.io/kubernetes/pkg/kubelet/network/plugins.go @ func InitNetworkPlugin(plugins []NetworkPlugin, networkPluginName string, host Host, hairpinMode componentconfig.HairpinMode, nonMasqueradeCIDR string, mtu int) (NetworkPlugin, error) {

Nov 28 06:45:46 ic-node8 origin-node: I1128 06:45:46.934221 1724 plugins.go:181] Loaded network plugin "cni"


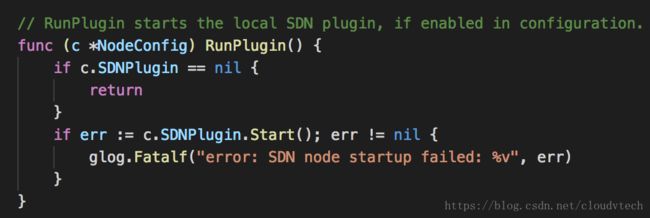

V. Code analysis: running OpenShift SDN in "redhat/openshift-ovs-multitenant" mode

1 start_node.go @ StartNode

if components.Enabled(ComponentPlugins) {

config.RunPlugin()

}

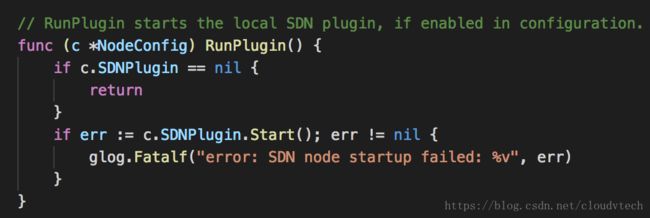

2 origin/pkg/cmd/server/kubernetes/node.go @ RunPlugin, whose receiver is NodeConfig

the SDNPlugin instance it uses is the one held by NodeConfig

hence the Start function it calls is the one in origin/pkg/sdn/plugin/node.go

the whole flow goes:

a. Run() @ start_node.go

b. StartNode() @ start_node.go

c. RunNode @ start_node.go

d. StartNode() @ start_node.go

e. config.RunPlugin() @ start_node.go

f. RunPlugin @ origin/pkg/cmd/server/kubernetes/node.go

g. Start @ origin/pkg/sdn/plugin/node.go

3 origin/pkg/sdn/plugin/node.go @ func (node *OsdnNode) Start() error {

3.1 get network info (ClusterNetwork CIDR and ServiceNetwork CIDR) from OpenShift (etcd)

3.2 get local subnet from OpenShift (etcd)

3.3 initialize and setup IPTables

3.3.1 newNodeIPTables instance

3.3.2 set up iptables (node_iptables.go @ Setup and syncIPTableRules; whenever iptables is reloaded, an SDN-defined reload function is called, in this case syncIPTableRules)

3.3.2.1 syncIPTableRules

3.3.2.1.1 getStaticNodeIPTablesRules

3.3.2.1.2 EnsureRule

3.3.2.2 add IPTables reload hook function (syncIPTableRules)
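The pattern in step 3.3 (sync the static rules once, then register the same sync function as a reload hook) can be sketched in Go as follows. This is a simplified model and not the code in node_iptables.go: `fakeIPTables` is a hypothetical in-memory stand-in for the real iptables wrapper, and the single rule returned by `staticRules` is a made-up placeholder.

```go
package main

import "fmt"

// rule is a simplified iptables rule.
type rule struct {
	table string
	chain string
	args  []string
}

// fakeIPTables is a hypothetical in-memory stand-in for the iptables wrapper.
type fakeIPTables struct {
	rules       map[string]bool
	reloadFuncs []func()
}

func newFakeIPTables() *fakeIPTables {
	return &fakeIPTables{rules: map[string]bool{}}
}

// ensureRule installs the rule if it is missing and reports whether it existed.
func (f *fakeIPTables) ensureRule(r rule) bool {
	key := fmt.Sprintf("%s/%s/%v", r.table, r.chain, r.args)
	existed := f.rules[key]
	f.rules[key] = true
	return existed
}

// addReloadFunc registers a hook that runs after every reload.
func (f *fakeIPTables) addReloadFunc(fn func()) {
	f.reloadFuncs = append(f.reloadFuncs, fn)
}

// reload simulates an external flush of all rules followed by the hooks.
func (f *fakeIPTables) reload() {
	f.rules = map[string]bool{}
	for _, fn := range f.reloadFuncs {
		fn()
	}
}

// staticRules returns a placeholder rule set (not the real SDN rules).
func staticRules() []rule {
	return []rule{
		{table: "filter", chain: "EXAMPLE-CHAIN", args: []string{"-j", "ACCEPT"}},
	}
}

// syncRules ensures every static rule is present.
func syncRules(ipt *fakeIPTables) {
	for _, r := range staticRules() {
		ipt.ensureRule(r)
	}
}

// setup mirrors steps 3.3.2.1 and 3.3.2.2: sync once, then hook into reloads.
func setup(ipt *fakeIPTables) {
	syncRules(ipt)
	ipt.addReloadFunc(func() { syncRules(ipt) })
}

func main() {
	ipt := newFakeIPTables()
	setup(ipt)
	ipt.reload()
	fmt.Println("rules after reload:", len(ipt.rules))
}
```

Registering the sync function as a hook means the rules survive an external flush without the SDN process having to poll for it.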

4 setup SDN

4.1 create bridge: controller.go @ err = plugin.ovs.AddBridge("fail-mode=secure", "protocols=OpenFlow13") --> origin/pkg/util/ovs/ovs.go @ func (ovsif *Interface) AddBridge(properties ...string) error {

4.2 add port vxlan0

4.3 add port tun0

4.4 setup open flow table

4.5 set up network device

4.6 add version to flow table

4.7 delete route

5 start subnet change handling task loop

5.1 start the goroutine (go utilwait.Forever(node.watchSubnets, 0))

5.2 create an event queue and repopulate the event queue every 30 minutes

5.3 upon event of k8s host node changes, the process function will be triggered

5.3.1 on add/sync event

5.3.1.1 add host subnet rules in flow table

5.3.2 on delete event

5.3.3 update multicast rules @ updateVXLANMulticastRules
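The event handling in step 5.3 can be modeled with a short Go sketch. All types here (`eventType`, `hostSubnet`, `subnetWatcher`) are hypothetical, and the set of remote subnets stands in for the real flow-table updates. Skipping events for the local node is an assumption of this sketch and is not stated in the outline above.

```go
package main

import "fmt"

// eventType is a simplified stand-in for the event queue's delta types.
type eventType string

const (
	eventAdded   eventType = "Added"
	eventSync    eventType = "Sync"
	eventDeleted eventType = "Deleted"
)

// hostSubnet is a reduced version of a host subnet record.
type hostSubnet struct {
	UID    string
	HostIP string
	Subnet string
}

// subnetWatcher tracks known host subnets and the remote subnets that
// currently have flow rules installed (a set stands in for the flow table).
type subnetWatcher struct {
	localIP string
	subnets map[string]hostSubnet
	flows   map[string]bool
}

func newSubnetWatcher(localIP string) *subnetWatcher {
	return &subnetWatcher{
		localIP: localIP,
		subnets: map[string]hostSubnet{},
		flows:   map[string]bool{},
	}
}

// process handles one event, following steps 5.3.1 and 5.3.2.
func (w *subnetWatcher) process(t eventType, hs hostSubnet) {
	if hs.HostIP == w.localIP {
		return // assumption: the local node needs no remote-subnet rules
	}
	switch t {
	case eventAdded, eventSync:
		if old, ok := w.subnets[hs.UID]; ok {
			if old == hs {
				return // unchanged on resync, nothing to do
			}
			delete(w.flows, old.Subnet) // drop rules for the stale subnet
		}
		w.flows[hs.Subnet] = true
		w.subnets[hs.UID] = hs
	case eventDeleted:
		delete(w.subnets, hs.UID)
		delete(w.flows, hs.Subnet)
	}
}

func main() {
	w := newSubnetWatcher("10.0.0.1")
	w.process(eventAdded, hostSubnet{UID: "n2", HostIP: "10.0.0.2", Subnet: "10.128.1.0/24"})
	w.process(eventSync, hostSubnet{UID: "n2", HostIP: "10.0.0.2", Subnet: "10.128.1.0/24"})
	fmt.Println("remote subnets with rules:", len(w.flows))
}
```

Because the queue is repopulated periodically, the add/sync branch has to be idempotent, which is why an unchanged record returns early.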


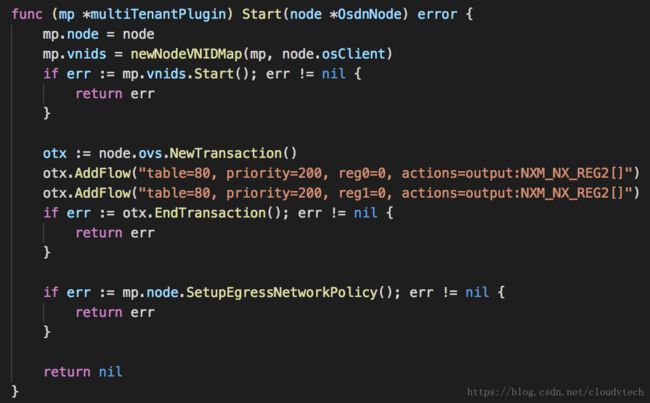

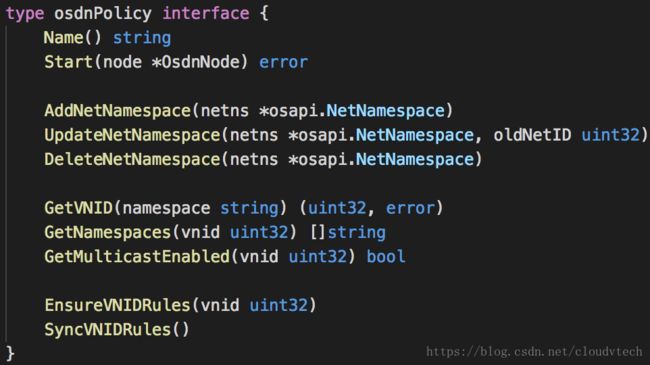

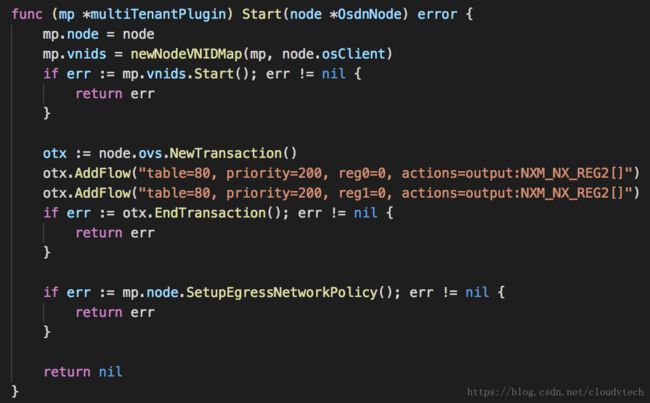

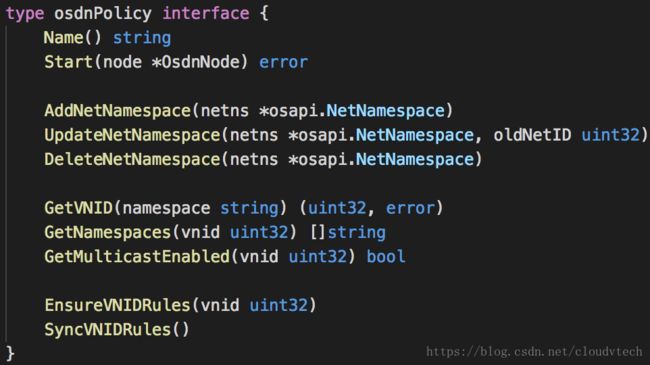

6 multi tenant SDN policy

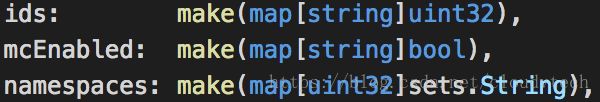

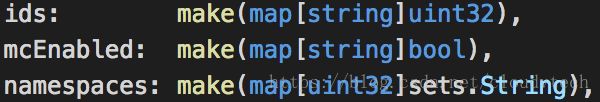

6.1 create a node-level management instance for the VXLAN ID to namespace map (the VNID map; VNID = Virtual Network ID)

6.2 start the instance

6.2.1 populate the map with the current namespaces and their VXLAN IDs

nets, err := vmap.osClient.NetNamespaces().List(kapi.ListOptions{})

6.2.2 start a goroutine that handles namespace changes (i.e. tenant changes) (go utilwait.Forever(vmap.watchNetNamespaces, 0))

basically, a namespace change means a VXLAN ID change, i.e. an OpenShift VNID change

6.2.2.1 start an event queue for OpenShift platform resource change notifications

6.2.2.2 handle add/update event

6.2.2.2.1 get current VNID map

6.2.2.2.2 check if macvlan is enabled

6.2.2.2.3 update VNID map

6.2.2.2.4 update SDN policy/type for namespace (e.g.: origin/pkg/sdn/plugin/multitenant.go, AddNetNamespace)

6.2.2.2.4.1 get pods in namespace

6.2.2.2.4.2 get services in namespace

6.2.2.2.4.3 update OF rules for the existing/old pods in the namespace

6.2.2.2.4.4 update OF rules for the old services in the namespace

6.2.2.2.4.5 update namespace references in egress firewall rules

6.2.2.2.4.6 update local multicast rules

6.2.2.3 handle delete event
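The node-level VNID map and its add/update/delete handling from steps 6.1 and 6.2 can be sketched as a small mutex-protected map. The type and method names below are hypothetical and much simpler than the real implementation. The `onChange` callback marks the point where the policy update (for example AddNetNamespace) would be triggered.

```go
package main

import (
	"fmt"
	"sync"
)

// vnidMap is a hypothetical, simplified namespace-to-VNID map.
type vnidMap struct {
	lock sync.Mutex
	ids  map[string]uint32
}

func newVNIDMap() *vnidMap {
	return &vnidMap{ids: map[string]uint32{}}
}

// getVNID looks up the VNID of a namespace.
func (m *vnidMap) getVNID(namespace string) (uint32, bool) {
	m.lock.Lock()
	defer m.lock.Unlock()
	id, ok := m.ids[namespace]
	return id, ok
}

// handleAddOrUpdate stores the new VNID and calls onChange only when the
// namespace is new or its VNID actually changed.
func (m *vnidMap) handleAddOrUpdate(namespace string, id uint32, onChange func(ns string, oldID, newID uint32)) {
	m.lock.Lock()
	oldID, found := m.ids[namespace]
	m.ids[namespace] = id
	m.lock.Unlock()

	if !found || oldID != id {
		onChange(namespace, oldID, id)
	}
}

// handleDelete removes the namespace and returns the VNID it had.
func (m *vnidMap) handleDelete(namespace string) (uint32, bool) {
	m.lock.Lock()
	defer m.lock.Unlock()
	id, found := m.ids[namespace]
	delete(m.ids, namespace)
	return id, found
}

func main() {
	m := newVNIDMap()
	report := func(ns string, oldID, newID uint32) {
		fmt.Printf("namespace %s: VNID %d -> %d, update pod and service rules\n", ns, oldID, newID)
	}
	m.handleAddOrUpdate("team-a", 11, report)
	m.handleAddOrUpdate("team-a", 11, report) // resync, no callback
	m.handleAddOrUpdate("team-a", 12, report) // VNID changed
	id, _ := m.handleDelete("team-a")
	fmt.Println("deleted team-a with VNID", id)
}
```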

6.3 change open flow table

otx.AddFlow("table=80, priority=200, reg0=0, actions=output:NXM_NX_REG2[]")

otx.AddFlow("table=80, priority=200, reg1=0, actions=output:NXM_NX_REG2[]")

6.4 set up egress policy

6.4.1 get policies from OpenShift ( policies, err := plugin.osClient.EgressNetworkPolicies(kapi.NamespaceAll).List(kapi.ListOptions{}))

6.4.2 store the policies in the osdn policy object

6.4.3 update flow table according to egress policy changes

6.4.4 start a goroutine that watches for egress policy changes (go utilwait.Forever(plugin.watchEgressNetworkPolicies, 0)) and runs updateEgressNetworkPolicyRules when there are changes

7 start a goroutine for K8S service changes

7.1 start the goroutine (go kwait.Forever(node.watchServices, 0))

7.2 create an event queue and repopulate the event queue every 30 minutes

7.3 upon event of k8s service changes, the process function will be triggered

7.3.1 on add/sync event

7.3.1.1 get vnid from map

7.3.1.2 add rules to flow table to enable VNID

(otx.AddFlow("table=80, priority=100, reg0=%d, reg1=%d, actions=output:NXM_NX_REG2[]", vnid, vnid)) @ origin/pkg/sdn/plugin/multitenant.go

7.3.2 on delete event
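The table 80 flows quoted in 6.3 and 7.3.1.2 can be put together in a short Go sketch that lists what the table would contain for a given set of active VNIDs. The flow strings are copied from this article. The `table80Flows` function and the sample VNIDs are hypothetical, and the sketch only builds strings without talking to Open vSwitch.

```go
package main

import (
	"fmt"
	"sort"
)

// table80Flows returns the two VNID 0 flows from 6.3 followed by one
// per-VNID flow (7.3.1.2) for every active non-zero VNID, in ascending order.
func table80Flows(vnids []uint32) []string {
	flows := []string{
		"table=80, priority=200, reg0=0, actions=output:NXM_NX_REG2[]",
		"table=80, priority=200, reg1=0, actions=output:NXM_NX_REG2[]",
	}

	// Sort a copy so the caller's slice is not modified.
	sorted := append([]uint32(nil), vnids...)
	sort.Slice(sorted, func(i, j int) bool { return sorted[i] < sorted[j] })

	for _, vnid := range sorted {
		if vnid == 0 {
			continue // already covered by the two priority=200 flows
		}
		flows = append(flows, fmt.Sprintf(
			"table=80, priority=100, reg0=%d, reg1=%d, actions=output:NXM_NX_REG2[]",
			vnid, vnid))
	}
	return flows
}

func main() {
	// Sample VNIDs, for illustration only.
	for _, flow := range table80Flows([]uint32{12, 11}) {
		fmt.Println(flow)
	}
}
```

Each per-VNID flow matches only when reg0 and reg1 hold the same VNID, which is how traffic between different projects ends up without a matching output rule.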

8 start POD manager

8.1 get the IPAM configuration used by the CNI host-local IPAM plugin

8.2 start the CNI request processing goroutine (go m.processCNIRequests())

8.2.1 process the request (CNI ADD/UPDATE/DELETE)

8.2.1.1 setup/update/teardown is defined at pod_linux.go

8.2.1.2 process add request

8.2.1.2.1 get pod configuration (GetVNID, Get(req.PodName), wantsMacvlan(pod), getBandwidth(pod))

8.2.1.2.2 Run CNI IPAM allocation for the container and return the allocated IP address (/opt/cni/bin/host-local)

8.2.1.2.3 open hostport (m.hostportHandler.OpenPodHostportsAndSync)

8.2.1.2.4 setup veth

8.2.1.2.5 configure interface

8.2.1.2.6 enable macvlan if needed

8.2.1.2.7 run SDN script (openshift-sdn-ovs)

8.2.1.2.8 get ofport

8.2.1.2.9 make OF table accept the new tenant

(otx.AddFlow("table=80, priority=100, reg0=%d, reg1=%d, actions=output:NXM_NX_REG2[]", vnid, vnid))

8.2.1.3 process update request

8.2.1.3.1 get namespace

8.2.1.3.2 get pod configuration

8.2.1.3.3 get veth info

8.2.1.3.4 exec SDN script

8.2.1.4 process teardown request

8.2.1.4.1 get veth info

8.2.1.4.2 exec SDN script

8.2.1.4.3 Run CNI IPAM release for the container

8.2.1.4.4 sync hostport changes

8.3 start CNI server with handleCNIRequest as handler function

The container runtime sends pod configuration requests to the CNI server,

and each request is processed as shown in 8.2.1
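The interplay between the CNI server handler (8.3) and the request processing goroutine (8.2) can be sketched with a channel that serializes all pod operations. Everything in this example is hypothetical: the `podManager` here is a toy model that only records which pods are set up, and the command strings are assumed names. The real setup, update and teardown logic lives in pod_linux.go.

```go
package main

import (
	"errors"
	"fmt"
)

// cniCommand names the operation requested; the string values are assumed.
type cniCommand string

const (
	cniAdd    cniCommand = "ADD"
	cniUpdate cniCommand = "UPDATE"
	cniDel    cniCommand = "DEL"
)

// podRequest carries one request plus a channel for its result.
type podRequest struct {
	Command      cniCommand
	PodNamespace string
	PodName      string
	Result       chan error
}

// podManager is a toy model: it only tracks which pods are set up.
type podManager struct {
	requests chan *podRequest
	running  map[string]bool // touched only by processRequests
}

func newPodManager() *podManager {
	return &podManager{
		requests: make(chan *podRequest, 20),
		running:  map[string]bool{},
	}
}

// processRequests handles requests one at a time, so pod setup and
// teardown never run concurrently.
func (m *podManager) processRequests() {
	for req := range m.requests {
		key := req.PodNamespace + "/" + req.PodName
		var err error
		switch req.Command {
		case cniAdd:
			m.running[key] = true // real code: IPAM, veth, SDN script, OF rules
		case cniUpdate:
			if !m.running[key] {
				err = errors.New("pod is not set up: " + key)
			}
		case cniDel:
			delete(m.running, key) // real code: SDN script, IPAM release
		default:
			err = fmt.Errorf("unknown CNI command %q", req.Command)
		}
		req.Result <- err
	}
}

// handleCNIRequest is what the CNI server's handler would call: it queues
// the request and blocks until the processing goroutine answers.
func (m *podManager) handleCNIRequest(cmd cniCommand, namespace, name string) error {
	req := &podRequest{Command: cmd, PodNamespace: namespace, PodName: name, Result: make(chan error, 1)}
	m.requests <- req
	return <-req.Result
}

func main() {
	m := newPodManager()
	go m.processRequests()

	fmt.Println("add:   ", m.handleCNIRequest(cniAdd, "team-a", "web-1"))
	fmt.Println("update:", m.handleCNIRequest(cniUpdate, "team-a", "web-1"))
	fmt.Println("delete:", m.handleCNIRequest(cniDel, "team-a", "web-1"))
}
```

Funneling every request through one goroutine avoids locking around the pod state, at the cost of handling pods strictly one after another.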

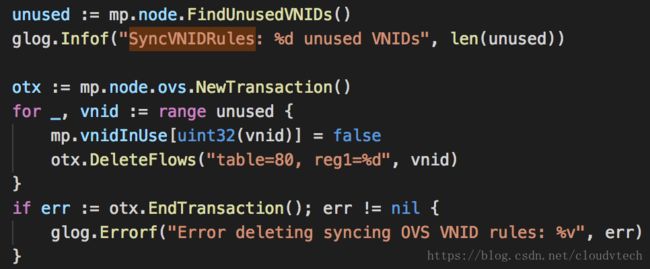

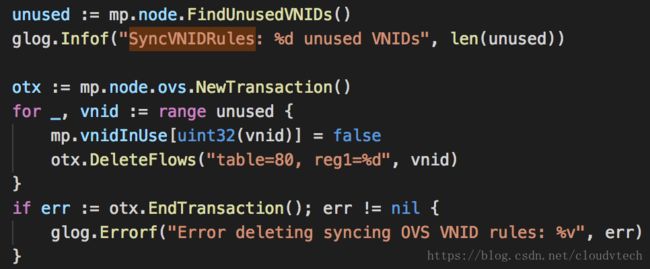

9 start the VNID sync goroutine (go kwait.Forever(node.policy.SyncVNIDRules, time.Hour))

clean up unused VNIDs
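The hourly cleanup in step 9 boils down to comparing the VNIDs that have flows installed with the VNIDs still in use on the node. A minimal Go sketch of that comparison follows. The function `unusedVNIDs` and its inputs are hypothetical, and always keeping VNID 0 is an assumption of this sketch. How SyncVNIDRules actually gathers both sets is not shown in this article.

```go
package main

import (
	"fmt"
	"sort"
)

// unusedVNIDs returns, in ascending order, the installed VNIDs that are no
// longer in use. VNID 0 is always kept (assumption: it is the global VNID).
func unusedVNIDs(installed []uint32, inUse map[uint32]bool) []uint32 {
	var unused []uint32
	for _, vnid := range installed {
		if vnid == 0 || inUse[vnid] {
			continue
		}
		unused = append(unused, vnid)
	}
	sort.Slice(unused, func(i, j int) bool { return unused[i] < unused[j] })
	return unused
}

func main() {
	installed := []uint32{0, 13, 11, 12}  // VNIDs with flows in the table
	inUse := map[uint32]bool{12: true}    // VNIDs of local pods and services
	for _, vnid := range unusedVNIDs(installed, inUse) {
		fmt.Println("remove table 80 flow for VNID", vnid)
	}
}
```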
