Learning web crawling, part 1: A first try with Scrapy, crawling job postings from Yingjiesheng (应届生求职网)

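I had written crawlers with BeautifulSoup before. Recently my job required me to publish some employment information, so I tried the Scrapy framework. After spending an evening reading up on it online, I understood Scrapy well enough to crawl data, save it as JSON and CSV, and write it into MySQL. Many details still need further study, but a framework really does save effort. Here is the process: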

I. Installing Scrapy

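Linux would have been more convenient, but the Ubuntu on my machine already hosts a lot of Hadoop-related setup that I did not want to clutter, so I set things up on Windows 7 instead. I already had Anaconda with Python 3.6 installed, so I ran `pip install scrapy` from the command line. It installed many dependencies and then complained that Microsoft Visual C++ 2015 was missing. After I downloaded and installed that, it still reported that Twisted failed to install. Rather than dig further, I downloaded a prebuilt Twisted wheel (.whl) file, installed it with pip, and Scrapy then installed successfully.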

II. Writing the code to crawl Yingjiesheng

I mainly followed the official Scrapy documentation (Chinese translation), http://scrapy-chs.readthedocs.io/zh_CN/latest/intro/overview.html, and also consulted many blog posts.

1. Create the project:

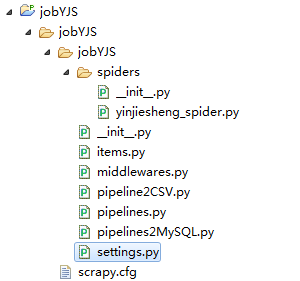

cmd > scrapy startproject jobYJS

cmd > cd ./jobYJS

cmd > scrapy genspider yinjiesheng_spider s.yingjiesheng.com生成的源代码目录如下:

2. Writing items.py

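```python
# -*- coding: utf-8 -*-
import scrapy


class JobyjsItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    title = scrapy.Field()       # text of the posting's title link
    companyUrl = scrapy.Field()  # href of the posting's title link
    pubDate = scrapy.Field()     # publication date shown in the result list
```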

3. Writing yinjiesheng_spider.py

```python
# -*- coding: utf-8 -*-
import scrapy

from jobYJS.items import JobyjsItem


class YinjieshengSpiderSpider(scrapy.Spider):
    name = 'yinjiesheng_spider'
    allowed_domains = ['s.yingjiesheng.com']
    # word=计算机 ("computer") is the search keyword; start= is the result offset
    base_url = 'http://s.yingjiesheng.com/search.php?word=计算机&area=2124&sort=score&start='
    pageToCrawl = 10  # number of result pages to crawl
    offset = 0
    start_urls = [base_url + str(offset)]

    def parse(self, response):
        li_list = response.xpath("//ul[contains(@class,'searchResult')]/li")
        for li in li_list:
            item = JobyjsItem()
            # extract_first() returns None instead of raising IndexError when a node is missing
            item['title'] = li.xpath("./div/h3[contains(@class,'title')]/a/text()").extract_first()
            item['companyUrl'] = li.xpath("./div/h3[contains(@class,'title')]/a/@href").extract_first()
            # a single string (not a list) so the CSV and MySQL pipelines can store it directly
            item['pubDate'] = li.xpath("./div/p/span[contains(@class,'r date')]/text()").extract_first()
            yield item

        # the offset advances 10 results per page; stop after pageToCrawl pages
        # (offsets 0 .. (pageToCrawl - 1) * 10)
        if self.offset + 10 < self.pageToCrawl * 10:
            self.offset += 10
            url = self.base_url + str(self.offset)
            yield scrapy.Request(url, callback=self.parse)
```
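Instead of mutating `self.offset` inside `parse`, all result-page URLs can also be built up front and assigned to `start_urls`. A minimal sketch, assuming (as the spider above does) that each page advances the `start` offset by 10; `build_page_urls` is a hypothetical helper, not part of Scrapy:

```python
def build_page_urls(base_url, pages_to_crawl, per_page=10):
    """Return one search URL per result page, with start=0, 10, 20, ..."""
    # exactly pages_to_crawl offsets: 0, per_page, 2 * per_page, ...
    return [base_url + str(page * per_page) for page in range(pages_to_crawl)]


if __name__ == '__main__':
    base_url = 'http://s.yingjiesheng.com/search.php?word=计算机&area=2124&sort=score&start='
    for url in build_page_urls(base_url, 3):
        print(url)
```

In the spider this could replace the offset bookkeeping, e.g. `start_urls = build_page_urls(base_url, pageToCrawl)` in the class body, leaving `parse` to yield items only. The pages are then scheduled independently rather than one after another, so a single failed page no longer ends the chain of requests.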

4. pipelines.py, which saves the results as JSON:

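```python
# -*- coding: utf-8 -*-
import json
import time


class JobyjsPipeline(object):
    def __init__(self):
        today = time.strftime('%Y-%m-%d', time.localtime(time.time()))
        # explicit UTF-8: with ensure_ascii=False the Chinese text is written as-is,
        # and the Windows default encoding (e.g. GBK) may not be what you want
        self.f = open('yinjiesheng_job' + today + '.json', 'a', encoding='utf-8')

    def process_item(self, item, spider):
        content = json.dumps(dict(item), ensure_ascii=False)
        # one JSON object per line (JSON Lines); without the newline the objects run together
        self.f.write(content + '\n')
        return item

    def close_spider(self, spider):
        self.f.close()
```

5. pipeline2CSV.py, which saves the results as CSV: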

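```python
# -*- coding: utf-8 -*-
import csv
import time


class JobyjsPipeline(object):
    def open_spider(self, spider):
        today = time.strftime('%Y-%m-%d', time.localtime(time.time()))
        filename = today + '.csv'
        # open the file once per crawl instead of once per item;
        # newline='' prevents the csv module from writing blank rows on Windows
        self.csvfile = open(filename, 'a', newline='', encoding='utf-8')
        self.writer = csv.writer(self.csvfile)

    def process_item(self, item, spider):
        self.writer.writerow((item['title'], item['companyUrl'], item['pubDate']))
        return item

    def close_spider(self, spider):
        self.csvfile.close()
```

6. pipelines2MySQL.py, which stores the results in MySQL: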

```python
# -*- coding: utf-8 -*-
import pymysql


def dbHandle():
    # connect straight to the "crawl" database instead of running "USE crawl" for every item
    conn = pymysql.connect(
        host="localhost",
        user="root",
        password="123456",
        database="crawl",
        charset="utf8",
    )
    return conn


class JobyjsPipeline(object):
    def open_spider(self, spider):
        # one connection for the whole crawl rather than a new (never closed) one per item
        self.dbObject = dbHandle()

    def process_item(self, item, spider):
        sql = "INSERT INTO yinjiesheng(title,companyUrl,pubDate) VALUES(%s,%s,%s)"
        try:
            with self.dbObject.cursor() as cursor:
                cursor.execute(sql, (item['title'], item['companyUrl'], item['pubDate']))
            self.dbObject.commit()
        except Exception as e:
            spider.logger.error("MySQL insert failed: %s", e)
            self.dbObject.rollback()
        return item

    def close_spider(self, spider):
        self.dbObject.close()
```
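The "one connection per crawl" pattern can be tried locally without a MySQL server. A minimal sketch that swaps in Python's built-in `sqlite3` as a stand-in for MySQL (an assumption for local experiments only; note that sqlite3 uses `?` placeholders where pymysql uses `%s`). `SqliteJobPipeline` is a hypothetical name, and the three TEXT columns are an assumed schema, since the post does not give column types:

```python
import sqlite3


class SqliteJobPipeline(object):
    """Stand-in for the MySQL pipeline: one connection per crawl, parameterized inserts."""

    def __init__(self, db_path='crawl.db'):
        self.db_path = db_path

    def open_spider(self, spider):
        self.conn = sqlite3.connect(self.db_path)
        # assumed schema: three text columns matching the item fields
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS yinjiesheng ("
            "title TEXT, companyUrl TEXT, pubDate TEXT)"
        )

    def process_item(self, item, spider):
        sql = "INSERT INTO yinjiesheng(title, companyUrl, pubDate) VALUES (?, ?, ?)"
        try:
            self.conn.execute(sql, (item['title'], item['companyUrl'], item['pubDate']))
            self.conn.commit()
        except sqlite3.Error as e:
            print('insert failed:', e)
            self.conn.rollback()
        return item  # return the item so later pipelines still receive it

    def close_spider(self, spider):
        self.conn.close()


if __name__ == '__main__':
    # made-up sample item, for illustration only
    pipeline = SqliteJobPipeline(':memory:')
    pipeline.open_spider(None)
    pipeline.process_item({'title': 'Java developer',
                           'companyUrl': 'http://example.com/job/1',
                           'pubDate': '2017-06-01'}, None)
    print(pipeline.conn.execute('SELECT COUNT(*) FROM yinjiesheng').fetchone()[0])  # prints 1
    pipeline.close_spider(None)
```

Moving back to MySQL only changes the connect call and the placeholder style; the open/process/close structure stays the same.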

7. settings.py

```python
BOT_NAME = 'jobYJS'

SPIDER_MODULES = ['jobYJS.spiders']
NEWSPIDER_MODULE = 'jobYJS.spiders'

# lower numbers run first: JSON (300), then CSV (400), then MySQL (500)
ITEM_PIPELINES = {
    'jobYJS.pipelines.JobyjsPipeline': 300,
    'jobYJS.pipeline2CSV.JobyjsPipeline': 400,
    'jobYJS.pipelines2MySQL.JobyjsPipeline': 500,
}
```

III. Test run

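Beforehand, I created a `crawl` database in MySQL and, inside it, a `yinjiesheng` table with three fields (title, companyUrl, pubDate). Then start the crawl: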

```
cmd> scrapy crawl yinjiesheng_spider
```
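After the crawl, a quick way to check the JSON output is to load it back. A minimal sketch, assuming the JSON pipeline writes one UTF-8 object per line (JSON Lines); `load_jobs` is a hypothetical helper, and the file name pattern `yinjiesheng_job<date>.json` follows the JSON pipeline above:

```python
import json
import os
import time


def load_jobs(path):
    """Read a JSON Lines file (one job dict per line) into a list of dicts."""
    jobs = []
    with open(path, encoding='utf-8') as f:
        for line in f:
            line = line.strip()
            if line:  # skip blank lines
                jobs.append(json.loads(line))
    return jobs


if __name__ == '__main__':
    path = 'yinjiesheng_job' + time.strftime('%Y-%m-%d') + '.json'
    if os.path.exists(path):
        jobs = load_jobs(path)
        print(len(jobs), 'jobs loaded')
        for job in jobs[:5]:
            print(job.get('pubDate'), job.get('title'))
    else:
        print('no output file found:', path)
```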