Kafka on Mac: Environment Setup and Java Code Test

I. Environment Setup

1. Installation: on macOS, install Kafka with Homebrew. ZooKeeper is installed automatically as a dependency.

brew install kafka

Downloading and installing takes a while.

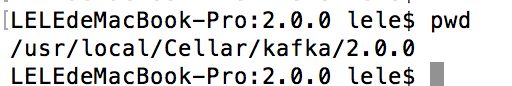

2. Installation directory: /usr/local/Cellar/kafka/2.0.0 (2.0.0 is the installed version; substitute your own version number).

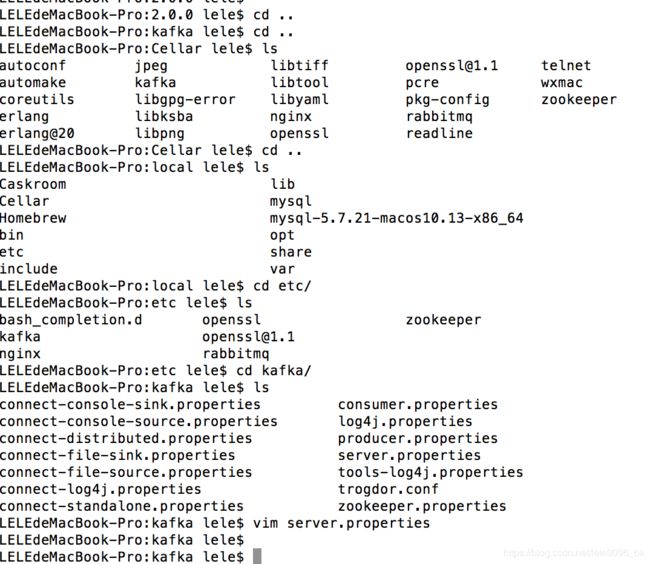

Configuration files:

/usr/local/etc/kafka/zookeeper.properties
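Before starting the broker it can help to see which address it will listen on. These are ordinary `.properties` files, so plain `java.util.Properties` can read them. This is a minimal sketch: the class name `BrokerConfigPeek` is hypothetical, and the keys `listeners`, `advertised.listeners` and `zookeeper.connect` are standard broker settings that may be commented out (and therefore reported as not set) in your copy of the file.

```java
import java.io.IOException;
import java.io.Reader;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Properties;

class BrokerConfigPeek {

    // Broker keys worth checking when clients cannot connect; absent keys fall back to broker defaults.
    static final String[] KEYS = {"listeners", "advertised.listeners", "zookeeper.connect"};

    // Parses a .properties source; comment lines starting with '#' are skipped by Properties.load.
    static Properties load(Reader reader) throws IOException {
        Properties props = new Properties();
        props.load(reader);
        return props;
    }

    // One "key=value" line per key, or "key=<not set>" when the key is absent or commented out.
    static String describe(Properties props) {
        StringBuilder sb = new StringBuilder();
        for (String key : KEYS) {
            sb.append(key).append('=')
              .append(props.getProperty(key, "<not set>"))
              .append('\n');
        }
        return sb.toString();
    }

    public static void main(String[] args) throws IOException {
        // Assumed Homebrew path from the text above; pass another path as the first argument if needed.
        Path path = Paths.get(args.length > 0 ? args[0] : "/usr/local/etc/kafka/server.properties");
        try (Reader reader = Files.newBufferedReader(path)) {
            System.out.print(describe(load(reader)));
        }
    }
}
```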

3. Start ZooKeeper. First change into the Kafka installation directory; all of the following commands are run from /usr/local/Cellar/kafka/2.0.0.

cd /usr/local/Cellar/kafka/2.0.0

Start command:

./bin/zookeeper-server-start /usr/local/etc/kafka/zookeeper.properties &

4. Once ZooKeeper is running, start Kafka:

./bin/kafka-server-start /usr/local/etc/kafka/server.properties &

5. Create the topic MY_TEST_TOPIC:

./bin/kafka-topics --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic MY_TEST_TOPIC

6. List the topics that have been created:

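./bin/kafka-topics --list --zookeeper localhost:2181

(Kafka 2.2 and later also accept --bootstrap-server localhost:9092 in place of --zookeeper localhost:2181 for both kafka-topics commands; Kafka 3.0 removed the --zookeeper option.)

7. Test with the console producer and consumer that ship with Kafka.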

Producer:

./bin/kafka-console-producer --broker-list localhost:9092 --topic MY_TEST_TOPIC

The terminal shows a ">" prompt; type the messages you want to send.

8. Consumer: open a second terminal and change into /usr/local/Cellar/kafka/2.0.0:

./bin/kafka-console-consumer --bootstrap-server localhost:9092 --topic MY_TEST_TOPIC --from-beginning

Now whatever you type in the producer terminal appears in the consumer terminal in real time.
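The `--from-beginning` flag matters because a topic is an append-only log and every consumer reads from an offset. The toy in-memory model below is not Kafka's implementation (`ToyTopicLog`, `poll` and its `fromBeginning` switch are hypothetical names); it only illustrates the idea that a consumer group with no committed offset starts either at the earliest record or at the end of the log, roughly what Kafka's `auto.offset.reset` values `earliest` and `latest` select.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class ToyTopicLog {

    private final List<String> records = new ArrayList<>();          // append-only "partition"
    private final Map<String, Integer> committed = new HashMap<>();   // group id -> next offset to read

    // Producers only ever append; a record's offset is its index in the list.
    void append(String value) {
        records.add(value);
    }

    // Returns everything from the group's position to the end of the log, then commits the new position.
    // With no committed offset, fromBeginning picks offset 0 ("earliest"), otherwise the log end ("latest").
    List<String> poll(String groupId, boolean fromBeginning) {
        int start = committed.computeIfAbsent(groupId, g -> fromBeginning ? 0 : records.size());
        List<String> batch = new ArrayList<>(records.subList(start, records.size()));
        committed.put(groupId, records.size());
        return batch;
    }
}
```

Because the Kafka consumer's default `auto.offset.reset` is `latest`, a brand-new group roughly behaves like `poll(group, false)` here and only sees records written after it joins, which fits the advice in step 6 of Part II to start the consumer before the producer.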

II. Java Code Test

Development environment: a Spring Boot Maven project created in IntelliJ IDEA.

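1. Add the dependencies to the pom file:

org.springframework.boot:spring-boot-starter-test (scope: test)

org.springframework.cloud:spring-cloud-dependencies:Dalston.SR1 (type: pom, scope: import; declared inside dependencyManagement)

The Kafka client classes used below (org.apache.kafka.clients.*) also require org.apache.kafka:kafka-clients, and JacksonUtil requires com.fasterxml.jackson.core:jackson-databind.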

2. Write the configuration class ConfigureAPI

package com.chargedot.kafkademo.config;

public class ConfigureAPI {
    public final static String Group_ID = "test";              // consumer group id
    public final static String TOPIC = "MY_TEST_TOPIC";
    public final static String MY_TOPIC = "DWD-ERROR-REQ";
    public final static int BUFFER_SIZE = 64 * 1024;
    public final static int TIMEOUT = 20000;
    public final static int INTERVAL = 10000;
    public final static String BROKER_LIST = "127.0.0.1:9092";
    public final static int GET_MEG_INTERVAL = 1000;           // poll timeout in ms, used by JConsumer
}

3. Write the producer JProducer

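import com.chargedot.kafkademo.config.ConfigureAPI;
import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.Producer;
import org.apache.kafka.clients.producer.ProducerRecord;

import java.util.Properties;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

public class JProducer implements Runnable {

    private final Producer<String, String> producer;

    public JProducer() {
        Properties props = new Properties();
        props.put("bootstrap.servers", ConfigureAPI.BROKER_LIST);
        props.put("acks", "all");               // wait for all in-sync replicas to acknowledge
        props.put("retries", 0);                // do not retry failed sends
        props.put("batch.size", 16384);         // max bytes per partition batch
        props.put("linger.ms", 1);              // wait up to 1 ms to fill a batch
        props.put("buffer.memory", 33554432);   // 32 MB send buffer
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        // The original also set "request.required.acks", an old Scala-producer setting that
        // KafkaProducer ignores (it only logs an unknown-config warning); "acks" above is what applies.
        producer = new KafkaProducer<>(props);
    }

    @Override
    public void run() {
        try {
            String data = "hello world";
            // send() is asynchronous: broker-side failures surface via the returned Future, not this catch
            producer.send(new ProducerRecord<>(ConfigureAPI.TOPIC, data));
            System.out.println("send data = " + data);
        } catch (Exception e) {
            System.out.println("exception = " + e.getMessage());
        }
        // The producer is shared by every scheduled run, so it is not closed here.
    }

    public static void main(String[] args) {
        ScheduledExecutorService pool = Executors.newScheduledThreadPool(5);
        // pool.schedule(new JProducer(), 2, TimeUnit.SECONDS);
        // Send a message every 5 seconds, starting after 1 second
        pool.scheduleAtFixedRate(new JProducer(), 1, 5, TimeUnit.SECONDS);
    }
}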
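The `main` method above relies on `ScheduledExecutorService.scheduleAtFixedRate(task, initialDelay, period, unit)`, which re-runs the same `Runnable`, and therefore reuses the one producer, every period. A minimal JDK-only sketch of that pattern follows; the class `FixedRateSender` and its counter are hypothetical stand-ins for `producer.send(...)`, so the example runs without a broker. It also shows how to stop the schedule, which the original `main` never does.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class FixedRateSender {

    // Runs `sends` scheduled "sends", one every periodMillis, then cancels the schedule.
    // Returns how many sends actually ran.
    static int sendTimes(int sends, long periodMillis) throws InterruptedException {
        AtomicInteger sent = new AtomicInteger();
        CountDownLatch done = new CountDownLatch(sends);
        ScheduledExecutorService pool = Executors.newScheduledThreadPool(1);

        Runnable task = () -> {
            try {
                // Stand-in for producer.send(new ProducerRecord<>(TOPIC, data));
                // the guard keeps an extra run between countdown and cancel from counting.
                if (sent.get() < sends) {
                    sent.incrementAndGet();
                    done.countDown();
                }
            } catch (RuntimeException e) {
                // An exception escaping run() would suppress all later executions of the task.
                System.out.println("exception = " + e.getMessage());
            }
        };

        ScheduledFuture<?> handle = pool.scheduleAtFixedRate(task, 0, periodMillis, TimeUnit.MILLISECONDS);
        done.await(10, TimeUnit.SECONDS);   // wait for the expected number of sends
        handle.cancel(false);               // stop rescheduling; a run in progress may finish
        pool.shutdown();
        pool.awaitTermination(1, TimeUnit.SECONDS);
        return sent.get();
    }
}
```

Catching inside `run()`, as `JProducer` does, matters because the `scheduleAtFixedRate` contract suppresses all subsequent executions once one execution throws. In the Kafka version, `producer.close()` after stopping the pool would also block until previously sent records complete.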

4. Write the consumer JConsumer

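import com.chargedot.kafkademo.config.ConfigureAPI;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;

import java.time.Duration;
import java.util.Arrays;
import java.util.Map;
import java.util.Properties;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class JConsumer implements Runnable {

    private final KafkaConsumer<String, String> kafkaConsumer;

    JConsumer() {
        Properties props = new Properties();
        props.put("bootstrap.servers", ConfigureAPI.BROKER_LIST);
        props.put("group.id", ConfigureAPI.Group_ID);
        props.put("enable.auto.commit", true);        // commit offsets automatically...
        props.put("auto.commit.interval.ms", 1000);   // ...every second
        props.put("session.timeout.ms", 30000);
        props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        kafkaConsumer = new KafkaConsumer<>(props);
        // Subscribe to both topics defined in ConfigureAPI
        kafkaConsumer.subscribe(Arrays.asList(ConfigureAPI.TOPIC, ConfigureAPI.MY_TOPIC));
    }

    @Override
    public void run() {
        while (true) {
            System.out.println("run loop: receiving messages");
            // poll(Duration) replaces poll(long), deprecated since Kafka 2.0
            ConsumerRecords<String, String> consumerRecords =
                    kafkaConsumer.poll(Duration.ofMillis(ConfigureAPI.GET_MEG_INTERVAL));
            for (ConsumerRecord<String, String> record : consumerRecords) {
                Object recordValue = record.value();
                if (recordValue instanceof Map) {
                    System.out.println("record is a Map");
                } else if (recordValue instanceof String) {
                    // With StringDeserializer the value is always a String, expected to hold JSON
                    System.out.println("record is a String");
                    String jsonStr = (String) recordValue;
                    // JSON string --> Map (null if the text is not valid JSON, e.g. "hello world")
                    Map<?, ?> msgMap = JacksonUtil.json2Bean(jsonStr, Map.class);
                    System.out.println("msgMap = " + msgMap);
                }
                showMessage("Received message key = " + record.key() + ", value = " + record.value());
            }
        }
    }

    public void showMessage(String msg) {
        System.out.println(msg);
    }

    public static void main(String[] args) {
        ExecutorService pool = Executors.newCachedThreadPool();
        pool.execute(new JConsumer());
        // shutdown() only stops new tasks from being accepted; the running consumer loop continues
        pool.shutdown();
    }
}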
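`JConsumer.run()` loops forever and never closes the consumer. A common alternative is a loop guarded by a flag that another thread can clear. The JDK-only sketch below shows the shape of such a loop; `StoppableConsumerLoop` is hypothetical, and a `BlockingQueue` stands in for the topic, with `queue.poll(timeout)` playing the role of `kafkaConsumer.poll(...)`. With the real client, a poll that is blocked can be interrupted from another thread with `KafkaConsumer.wakeup()`, which makes `poll` throw `WakeupException`, and `close()` then belongs in a `finally` block.

```java
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicBoolean;

class StoppableConsumerLoop implements Runnable {

    final BlockingQueue<String> topic = new LinkedBlockingQueue<>();  // hypothetical stand-in for the topic
    final List<String> received = new CopyOnWriteArrayList<>();      // values the loop has handled
    private final AtomicBoolean running = new AtomicBoolean(true);

    @Override
    public void run() {
        try {
            while (running.get()) {
                // Like poll(Duration): wait up to the timeout, then loop to re-check the flag.
                String value = topic.poll(100, TimeUnit.MILLISECONDS);
                if (value != null) {
                    received.add(value);
                    System.out.println("Received message value = " + value);
                }
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();  // keep the interrupt status and exit the loop
        } finally {
            // With Kafka, kafkaConsumer.close() goes here.
            System.out.println("consumer loop stopped");
        }
    }

    // Called from another thread (for example a shutdown hook) to end the loop.
    void shutdown() {
        running.set(false);
    }
}
```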

5. Utility class: JacksonUtil

import com.fasterxml.jackson.core.type.TypeReference;
import com.fasterxml.jackson.databind.DeserializationFeature;
import com.fasterxml.jackson.databind.JavaType;
import com.fasterxml.jackson.databind.ObjectMapper;

import java.io.IOException;
import java.util.List;
import java.util.Map;

/**
 * @Description: JSON utility class
 */
public class JacksonUtil {

    private static ObjectMapper mapper;

    public static synchronized ObjectMapper getMapperInstance(boolean createNew) {
        if (createNew) {
            return new ObjectMapper();
        } else if (mapper == null) {
            mapper = new ObjectMapper();
        }
        return mapper;
    }

    /**
     * @param json   JSON string
     * @param class1 target class
     * @param <T>    target type
     * @return bean, or null if parsing fails
     */
    public static <T> T json2Bean(String json, Class<T> class1) {
        try {
            return getMapperInstance(false).readValue(json, class1);
        } catch (IOException e) {  // also covers JsonParseException and JsonMappingException
            e.printStackTrace();
        }
        return null;
    }

    /**
     * @param bean
     * @return json
     */
    public static String bean2Json(Object bean) {
        try {
            return getMapperInstance(false).writeValueAsString(bean);
        } catch (IOException e) {  // also covers JsonGenerationException and JsonMappingException
            e.printStackTrace();
        }
        return null;
    }

    /**
     * @param json
     * @param class1 element class
     * @param <T>    element type
     * @return List
     */
    public static <T> List<T> json2List(String json, Class<T> class1) {
        try {
            ObjectMapper m = getMapperInstance(false);
            JavaType javaType = m.getTypeFactory().constructParametricType(List.class, class1);
            // For a Map type: m.getTypeFactory().constructParametricType(HashMap.class, String.class, Bean.class);
            return m.readValue(json, javaType);
        } catch (Exception e) {
            e.printStackTrace();
        }
        return null;
    }

    /**
     * @param list
     * @return json
     */
    public static String list2Json(List<?> list) {
        try {
            // getMapperInstance avoids a NullPointerException when this is the first call
            return getMapperInstance(false).writeValueAsString(list);
        } catch (Exception e) {
            e.printStackTrace();
        }
        return null;
    }

    /**
     * @param json
     * @param type e.g. new TypeReference<Map<String, Object>>() {}
     * @return Map
     */
    public static <K, V> Map<K, V> json2Map(String json, TypeReference<Map<K, V>> type) {
        try {
            ObjectMapper m = getMapperInstance(false);
            m.configure(DeserializationFeature.FAIL_ON_UNKNOWN_PROPERTIES, false);
            return m.readValue(json, type);
        } catch (Exception e) {
            e.printStackTrace();
        }
        return null;
    }

    /**
     * @param map
     * @return json
     */
    public static String map2Json(Map<?, ?> map) {
        try {
            return getMapperInstance(false).writeValueAsString(map);
        } catch (Exception e) {
            e.printStackTrace();
        }
        return null;
    }
}
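`getMapperInstance` takes a lock on every call just to create one shared mapper lazily. A common JDK alternative is the initialization-on-demand holder idiom: the JVM initializes the nested holder class, and therefore the instance, exactly once on first access, with no explicit locking. The sketch uses a hypothetical `ExpensiveMapper` placeholder so it runs without Jackson on the classpath; in `JacksonUtil` the field would hold an `ObjectMapper`, which Jackson documents as thread-safe provided all configuration happens before any read or write call.

```java
import java.util.concurrent.atomic.AtomicInteger;

class LazyMapperHolder {

    // Hypothetical placeholder for an expensive, thread-safe object such as ObjectMapper.
    static final class ExpensiveMapper {
        static final AtomicInteger CREATED = new AtomicInteger();

        ExpensiveMapper() {
            CREATED.incrementAndGet();  // counts constructions so the laziness is observable
        }
    }

    // Holder is not initialized until getInstance() first reads Holder.INSTANCE;
    // the JVM runs class initialization once and makes it safe across threads.
    private static final class Holder {
        static final ExpensiveMapper INSTANCE = new ExpensiveMapper();
    }

    static ExpensiveMapper getInstance() {
        return Holder.INSTANCE;
    }
}
```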

6. Start the consumer first, then the producer. The messages sent by the producer then appear in the consumer's console.

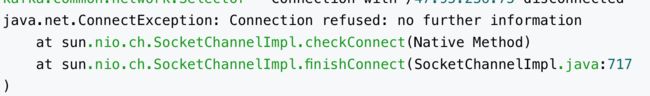

7. Startup error: the command-line clients connect fine, but the Java code fails with: Connection refused: no further information

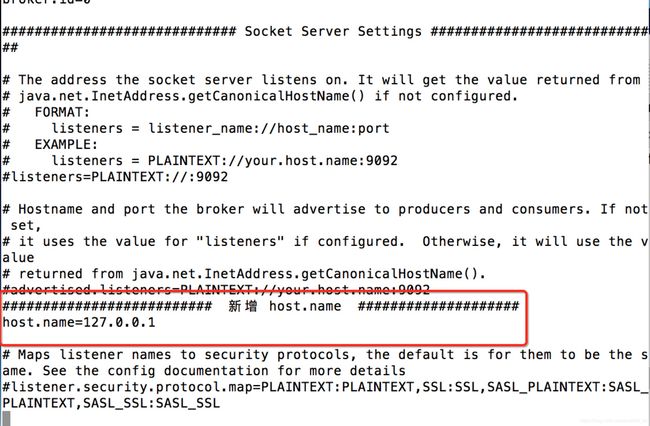

Fix: edit the Kafka server's configuration file:

cd /usr/local/etc/kafka/

1. Open server.properties in vim.

2. Modify the following content in the file:

3. Restart the Kafka service; the Java client then connects successfully.
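`Connection refused` means nothing accepted a TCP connection at the address the client tried. A quick JDK-only check can tell whether anything is listening on the broker address from `ConfigureAPI.BROKER_LIST` (127.0.0.1:9092). This is a minimal sketch with a hypothetical helper `PortCheck`. A successful connect only shows that the port is open: a Kafka client connects to the bootstrap address first and then to the address the broker advertises, so the second connection can still fail.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

class PortCheck {

    // Returns true if a TCP connection to host:port is accepted within timeoutMillis.
    static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // ConnectException ("Connection refused"), SocketTimeoutException, UnknownHostException, ...
            return false;
        }
    }

    public static void main(String[] args) {
        // Same address as ConfigureAPI.BROKER_LIST.
        System.out.println("127.0.0.1:9092 reachable: " + isReachable("127.0.0.1", 9092, 2000));
    }
}
```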