Building an ELK Log Analysis Platform

Lab environment

- RHEL 6.5

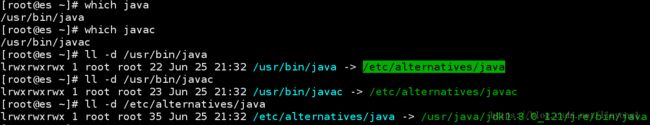

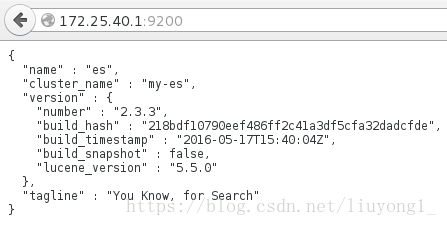

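Install ES (requires a Java environment):

rpm -ivh jdk-8u121-linux-x64.rpm elasticsearch-2.3.3.rpm

Note: Java is installed directly from its rpm package. To list the files the ES package contains:

rpm -qpl elasticsearch-2.3.3.rpm

Initial ES configuration: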

/etc/elasticsearch/elasticsearch.yml

cluster.name: my-es # cluster name

node.name: es # node name

bootstrap.mlockall: true # lock process memory so it is not swapped out

network.host: 172.25.40.1 # this host's IP

http.port: 9200 # HTTP port

Start ES:

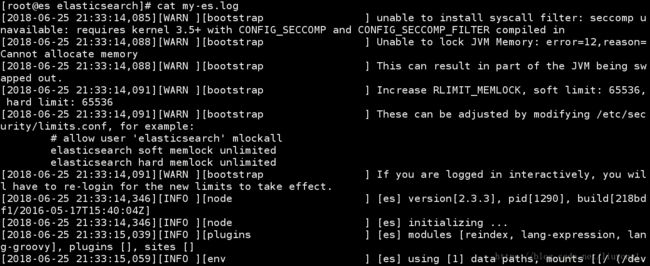

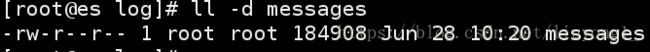

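/etc/init.d/elasticsearch start

Check the log:

cat /var/log/elasticsearch/my-es.log

Allow the elasticsearch user to lock memory (needed for bootstrap.mlockall):

vim /etc/security/limits.conf

elasticsearch soft memlock unlimited

elasticsearch hard memlock unlimited

Then restart:

/etc/init.d/elasticsearch restart

Check the startup information: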

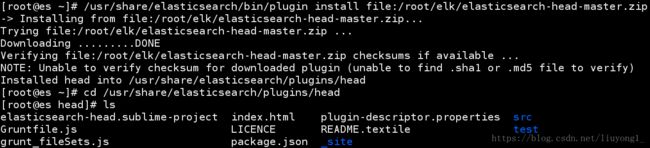

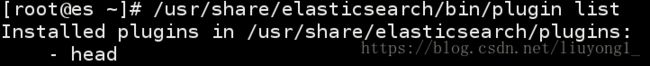

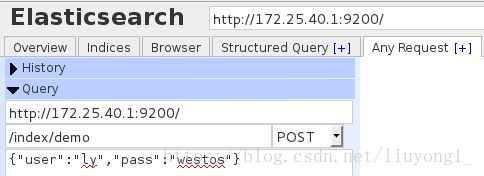

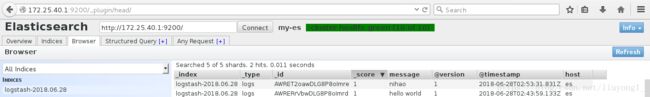
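Whether the memory lock actually took effect can also be checked from the shell. The sketch below assumes the node answers on 172.25.40.1:9200 and that the nodes info API (`_nodes/process`) reports an `mlockall` field, as it does in ES 2.x. The function name `mlockall_enabled` is made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: reads nodes-info JSON on stdin and succeeds only if
# at least one node reports mlockall: true and none reports mlockall: false.
mlockall_enabled() {
    json=$(cat)
    # At least one node must report mlockall: true ...
    printf '%s\n' "$json" | grep -Eq '"mlockall"[[:space:]]*:[[:space:]]*true' || return 1
    # ... and no node may report mlockall: false.
    ! printf '%s\n' "$json" | grep -Eq '"mlockall"[[:space:]]*:[[:space:]]*false'
}

# Usage (assumes the ES node from this article is reachable):
#   curl -s 'http://172.25.40.1:9200/_nodes/process?pretty' | mlockall_enabled \
#       && echo "memory is locked" || echo "memory is NOT locked"
```

If the check fails after the restart, the memlock lines in /etc/security/limits.conf are the first thing to re-check.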

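Next, install the elasticsearch-head-master.zip plugin, which lets you monitor ES directly, using ES's built-in plugin command:

/usr/share/elasticsearch/bin/plugin install file:/root/elk/elasticsearch-head-master.zip

Next, set up distributed storage.

Install the packages on the other two machines:

rpm -ivh elasticsearch-2.3.3.rpm jdk-8u121-linux-x64.rpm

Then edit the configuration files further.

On server2 and server3: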

vim /etc/elasticsearch/elasticsearch.yml

cluster.name: my-es

node.name: server2 # server3 on the third machine

bootstrap.mlockall: true

network.host: 172.25.40.2 # 172.25.40.3 on server3

http.port: 9200

discovery.zen.ping.unicast.hosts: ["es", "server2", "server3"]

vim /etc/security/limits.conf

elasticsearch soft memlock unlimited

elasticsearch hard memlock unlimited

On es:

vim /etc/elasticsearch/elasticsearch.yml

discovery.zen.ping.unicast.hosts: ["es", "server2", "server3"]

Note: in the cluster view, the star marks the master node.

继续配置实现纯master:

/etc/elasticsearch/elasticsearch.yml:

node.name: es

node.master: true

node.data: false

node.name: server2

node.master: false

node.data: true

node.name: server3

node.master: false

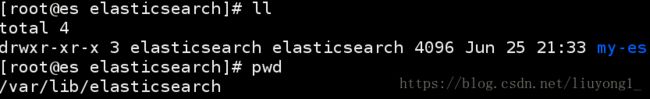

node.data: true默认数据目录:/var/lib/elasticsearch/ ,可在配置文件 # path.data: /path/to/data

配置默认目录,注意权限问题

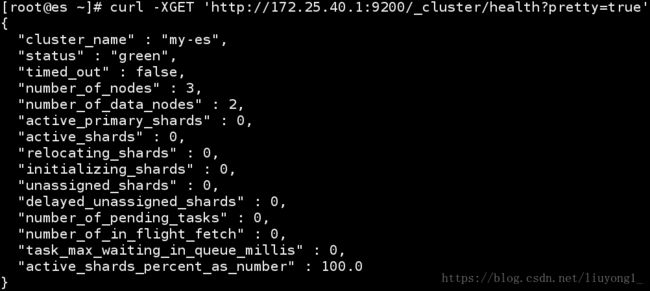
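With one dedicated master and two data nodes, the cluster health API should report three nodes, two of them data nodes. The following is a minimal sketch for pulling single fields out of that JSON with sed. `health_field` is a hypothetical helper name, and the parsing only handles flat string or number fields, which is an assumption that holds for the top-level health output.

```shell
#!/bin/sh
# Hypothetical helper: print the value of one top-level field from the
# cluster health JSON read on stdin (flat string/number fields only).
health_field() {
    tr -d '\n' | sed -nE 's/.*"'"$1"'"[[:space:]]*:[[:space:]]*"?([^",}[:space:]]+)"?.*/\1/p'
}

# Usage (assumes the ES node from this article is reachable):
#   health=$(curl -s 'http://172.25.40.1:9200/_cluster/health')
#   printf '%s' "$health" | health_field status
#   printf '%s' "$health" | health_field number_of_nodes
#   printf '%s' "$health" | health_field number_of_data_nodes
```

A node count below three usually points at the discovery.zen.ping.unicast.hosts list or at name resolution for es, server2 and server3.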

Use curl to view cluster information:

curl -XGET 'http://172.25.40.1:9200/_cluster/health?pretty=true'

Use curl to delete a previously added index:

curl -XDELETE 'http://172.25.40.1:9200/index'

Install Logstash, which is dedicated to data collection:

rpm -ivh logstash-2.3.3-1.noarch.rpm

It is installed under /opt/logstash.

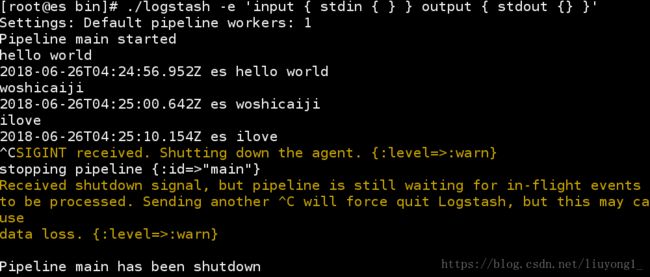

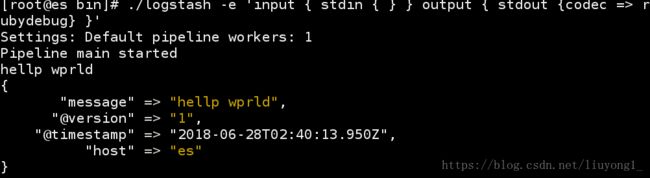

1)简单实现终端输入终端输出的数据采集

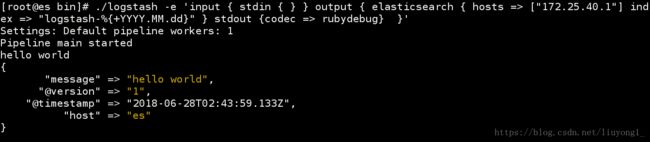

./logstash -e 'input { stdin { } } output { stdout {} }'./logstash -e 'input { stdin { } } output { stdout {codec => rubydebug} }'3)将输出导入es中并显示在终端

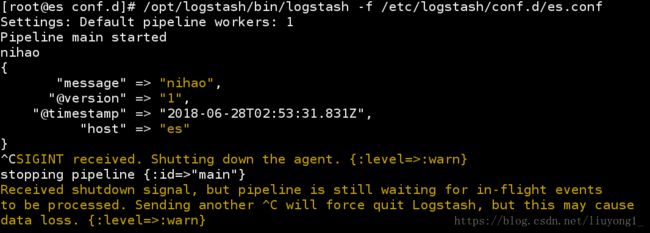

./logstash -e 'input { stdin { } } output { elasticsearch { hosts => ["172.25.55.1"] index => "logstash-%{+YYYY.MM.dd}" } stdout {codec => rubydebug} }'使用文件的形式来更轻易实现数据采集:

在/etc/logstash/conf.d下书写.conf文件

1)简单实现

/etc/logstash/conf.d:

vim es.conf

input {

stdin {}

}

output {

elasticsearch {

hosts => ["172.25.40.1"]

index => "logstash-%{+YYYY.MM.dd}" }

stdout {

codec => rubydebug

}

}

vim es.conf

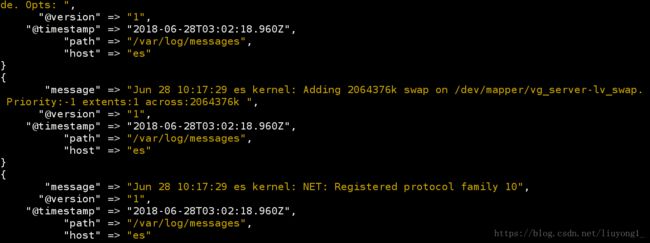

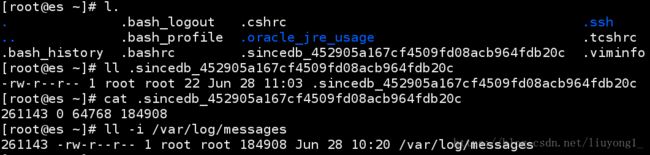

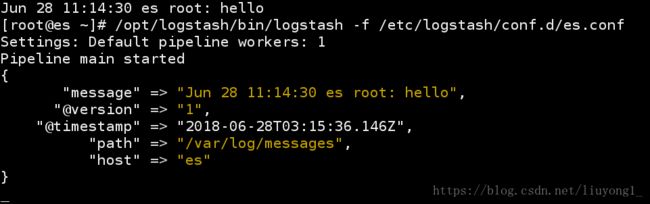

input {

file {

path => "/var/log/messages"

start_position => "beginning"

}

}

output {

elasticsearch {

hosts => ["172.25.40.1"]

index => "messages-%{+YYYY.MM.dd}" }

stdout {

codec => rubydebug

}

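}

/opt/logstash/bin/logstash -f /etc/logstash/conf.d/es.conf

Note that Logstash keeps a record file in the home directory (.sincedb_*) that stores how far it has read in each monitored file. If the whole log has already been loaded, running the command again produces no output. To load the log again, delete this file; deleting the index in the web interface alone is not enough.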
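The record files can be located and removed from the shell. This is a minimal sketch, assuming Logstash was started by the current user so the files sit directly in that user's home directory. The helper names `list_sincedb` and `reset_sincedb` are made up for illustration.

```shell
#!/bin/sh
# List the sincedb files kept in a directory
# (defaults to the home directory of the current user).
list_sincedb() {
    find "${1:-$HOME}" -maxdepth 1 -type f -name '.sincedb*'
}

# Delete them so the next run re-reads the monitored files from the beginning.
# Stop Logstash first; a running instance may write its position back.
reset_sincedb() {
    list_sincedb "$1" | while IFS= read -r f; do
        rm -f -- "$f"
    done
}

# Usage:
#   list_sincedb          # show the files under $HOME
#   reset_sincedb         # remove them, then start Logstash again
```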

3) Monitoring a file with filtering for special cases

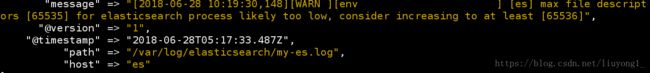

vim es.conf

input {

file {

path => "/var/log/elasticsearch/my-es.log"

start_position => "beginning"

}

}

filter {

multiline {

pattern => "^\["

negate => true

what => "previous"

}

}

output {

elasticsearch {

hosts => ["172.25.40.1"]

index => "es-%{+YYYY.MM.dd}" }

stdout {

codec => rubydebug

}

}

sysctl -a | grep file

vim /etc/security/limits.conf

elasticsearch - nofile 65536

4) The filtering can instead be applied at the input stage, so that not every event has to pass through a separate filter
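Both multiline variants in this section use the same three settings: pattern "^\[", negate => true and what => "previous". Their effect can be imitated with a few lines of awk. This is only an illustration of the merging rule, not how Logstash implements it, and the function name `merge_multiline` is hypothetical.

```shell
#!/bin/sh
# Emulate pattern => "^\[", negate => true, what => "previous":
# a line that does NOT start with "[" is appended to the previous event.
# Joined lines are separated by " | " here so each event prints on one line
# (Logstash itself keeps the newline inside the event).
merge_multiline() {
    awk '
        /^\[/ { if (have) print buf; buf = $0; have = 1; next }
              { buf = have ? (buf " | " $0) : $0; have = 1 }
        END   { if (have) print buf }
    '
}

# Usage:
#   merge_multiline < /var/log/elasticsearch/my-es.log | head
```

A Java stack trace in my-es.log therefore ends up in the same event as the bracketed log line that precedes it.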

vim es.conf

input {

file {

path => "/var/log/elasticsearch/my-es.log"

start_position => "beginning"

codec => multiline {

pattern => "^\["

negate => true

what => "previous"

}

}

}

output {

elasticsearch {

hosts => ["172.25.40.1"]

index => "es-%{+YYYY.MM.dd}" }

stdout {

codec => rubydebug

}

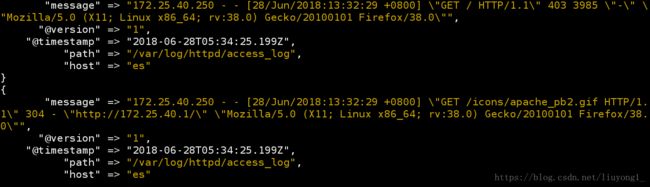

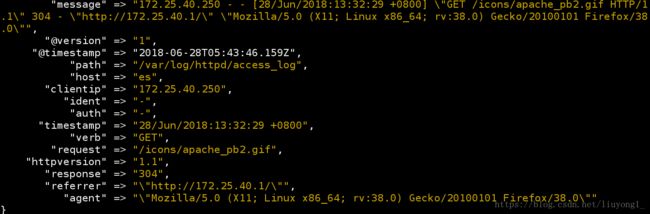

}4)监控apache服务日志

安装并访问80端口以产生日志信息

yum install -y httpd

/etc/init.d/httpd start

curl 172.25.50.1:80vim es.conf

input {

file {

path => "/var/log/httpd/access_log"

start_position => "beginning"

}

}

output {

elasticsearch {

hosts => ["172.25.40.1"]

index => "apache-%{+YYYY.MM.dd}" }

stdout {

codec => rubydebug

}

}

vim es.conf

input {

file {

path => "/var/log/httpd/access_log"

start_position => "beginning"

}

}

filter {

grok {

match => { "message" => "%{COMBINEDAPACHELOG}" }

}

}

output {

elasticsearch {

hosts => ["172.25.40.1"]

index => "apache-%{+YYYY.MM.dd}" }

stdout {

codec => rubydebug

}

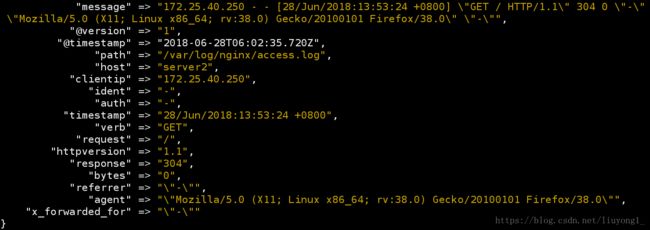

}rpm -ivh nginx-1.8.0-1.el6.ngx.x86_64.rpm

[root@server2 ~]# nginx

chmod 644 /var/log/nginx/access.log rpm -ivh logstash-2.3.3-1.noarch.rpm

vim /etc/logstash/conf.d/es.conf

input {

file {

path => "/var/log/nginx/access.log"

start_position => "beginning"

}

}

filter {

grok {

match => { "message" => "%{COMBINEDAPACHELOG} %{QS:x_forwarded_for}" }

}

}

output {

elasticsearch {

hosts => ["172.25.40.1"]

index => "nginx-%{+YYYY.MM.dd}" }

stdout {

codec => rubydebug

}

}

Note: for the available patterns, see /opt/logstash/vendor/bundle/jruby/1.9/gems/logstash-patterns-core-2.0.5/patterns/grok-patterns.
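To get a feel for what the COMBINEDAPACHELOG pattern extracts, the sketch below pulls a few of the same fields (clientip, verb, request, response, bytes) out of an access-log line with a hand-written sed expression. The regular expression is a simplified stand-in written for this example, not the grok pattern itself, and `parse_combined` is a hypothetical name.

```shell
#!/bin/sh
# Print "clientip verb request response bytes" for each combined-format
# access-log line on stdin; lines that do not match are dropped
# (Logstash would instead tag such events with _grokparsefailure).
parse_combined() {
    sed -nE 's/^([^ ]+) [^ ]+ [^ ]+ \[[^]]+\] "([A-Z]+) ([^ ]+) [^"]*" ([0-9]{3}) ([0-9]+|-) .*$/\1 \2 \3 \4 \5/p'
}

# Usage:
#   parse_combined < /var/log/nginx/access.log | tail
```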

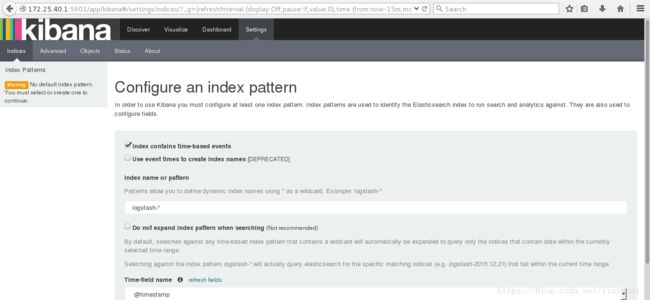

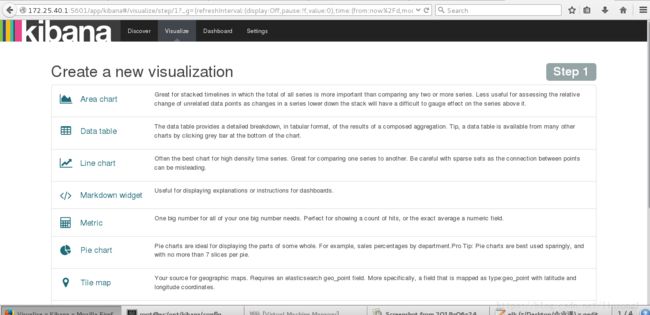

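Install Kibana, which is dedicated to data monitoring and visualization:

rpm -ivh kibana-4.5.1-1.x86_64.rpm

Basic Kibana configuration:

/opt/kibana/config/kibana.yml

elasticsearch.url: "http://172.25.40.1:9200" # location of ES

kibana.index: ".kibana" # index Kibana uses in ES

Start Kibana:

/etc/init.d/kibana start

As a simple example, add the nginx index collected earlier to Kibana.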


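The monitored data can then be rendered as various kinds of visualizations.

Once saved, they can all be placed on a single dashboard.

Note: the ab command can be used here for load testing.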

ab -c 1 -n 100 http://172.25.40.2/index.html
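After a load-test run, one way to check that nothing was lost on the way into ES is to compare the number of ApacheBench requests in the access log with the document count of the nginx indices. The sketch below assumes the access log records the user agent (ab identifies itself as ApacheBench) and that the indices follow the nginx-* naming used in this article. `count_ab_requests` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: count access-log lines produced by ApacheBench.
count_ab_requests() {
    grep -c 'ApacheBench' "$1"
}

# Usage (run on server2, then query ES from any host):
#   ab -c 1 -n 100 http://172.25.40.2/index.html
#   count_ab_requests /var/log/nginx/access.log
#   curl -s 'http://172.25.40.1:9200/nginx-*/_count?pretty'
```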

Next comes an optimization: syncing the data in real time. The basic design is nginx -> logstash -> redis -> logstash -> es -> kibana.

On es:

vim es.conf

input {

redis {

host => "172.25.40.3"

port => 6379

data_type => "list"

key => "logstash:redis"

}

}

filter {

grok {

match => { "message" => "%{COMBINEDAPACHELOG} %{QS:x_forwarded_for}" }

}

}

output {

elasticsearch {

hosts => ["172.25.40.1"]

index => "nginx-%{+YYYY.MM.dd}" }

}

/etc/init.d/logstash start

On server2:

vim es.conf

input {

file {

path => "/var/log/nginx/access.log"

start_position => "beginning"

}

}

output {

redis {

host => ["172.25.40.3"]

data_type => "list"

key => "logstash:redis"

}

}

/etc/init.d/logstash start

On server3, simply install Redis and start it:

tar zxf redis-3.0.6.tar.gz

cd redis-3.0.6

yum install -y make

yum install -y gcc

make && make install

cd utils/

./install_server.sh

Running the load-test command now shows the data being synced and refreshed in real time.
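While the load test runs, the length of the Redis list shows whether the Logstash instance on es keeps up with the one on server2: the list grows when events are pushed faster than they are consumed and drains back to 0 afterwards. This is a minimal sketch using redis-cli with the host and key from the configs above. `queue_depth` is a hypothetical wrapper, and the `REDIS_CLI` variable exists only so the client binary can be swapped out.

```shell
#!/bin/sh
# Hypothetical wrapper: print the number of events waiting in the Redis list.
# $1 = Redis host, $2 = list key (defaults to the key used in es.conf).
queue_depth() {
    "${REDIS_CLI:-redis-cli}" -h "$1" llen "${2:-logstash:redis}"
}

# Usage (assumes redis-cli was installed by "make install" on this host):
#   queue_depth 172.25.40.3
#   watch -n 1 'redis-cli -h 172.25.40.3 llen logstash:redis'
```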