Tomcat Coyote module: request processing flow

The walkthrough uses HTTP/2 with NIO as the I/O mode as its example.

Sequence diagram

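- When the Connector starts, it starts the EndPoint instance it holds (NioEndpoint). The EndPoint accepts requests on the listening port through its Acceptor, which calls serverSock.accept() in a loop until the endpoint is stopped.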

```java
protected class Acceptor extends AbstractEndpoint.Acceptor {

    @Override
    public void run() {

        int errorDelay = 0;

        // Loop until we receive a shutdown command
        while (running) {

            // Loop if endpoint is paused
            while (paused && running) {
                state = AcceptorState.PAUSED;
                try {
                    Thread.sleep(50);
                } catch (InterruptedException e) {
                    // Ignore
                }
            }

            if (!running) {
                break;
            }
            state = AcceptorState.RUNNING;

            try {
                //if we have reached max connections, wait
                countUpOrAwaitConnection();

                SocketChannel socket = null;
                try {
                    // Accept the next incoming connection from the server
                    // socket
                    socket = serverSock.accept();
                } catch (IOException ioe) {
                    // We didn't get a socket
                    countDownConnection();
                    if (running) {
                        // Introduce delay if necessary
                        errorDelay = handleExceptionWithDelay(errorDelay);
                        // re-throw
                        throw ioe;
                    } else {
                        break;
                    }
                }
                // Successful accept, reset the error delay
                errorDelay = 0;

                // Configure the socket
                if (running && !paused) {
                    // setSocketOptions() will hand the socket off to
                    // an appropriate processor if successful
                    if (!setSocketOptions(socket)) {
                        closeSocket(socket);
                    }
                } else {
                    closeSocket(socket);
                }
            } catch (Throwable t) {
                ExceptionUtils.handleThrowable(t);
                log.error(sm.getString("endpoint.accept.fail"), t);
            }
        }
        state = AcceptorState.ENDED;
    }

    private void closeSocket(SocketChannel socket) {
        countDownConnection();
        try {
            socket.socket().close();
        } catch (IOException ioe) {
            if (log.isDebugEnabled()) {
                log.debug(sm.getString("endpoint.err.close"), ioe);
            }
        }
        try {
            socket.close();
        } catch (IOException ioe) {
            if (log.isDebugEnabled()) {
                log.debug(sm.getString("endpoint.err.close"), ioe);
            }
        }
    }
}
```
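The countUpOrAwaitConnection()/countDownConnection() pair is what keeps the number of open connections under the connector's connection limit. As a rough illustration only, the sketch below models that counter with a java.util.concurrent.Semaphore. Tomcat itself uses its own LimitLatch class, and ConnectionLimiter and its methods are hypothetical names.

```java
import java.util.concurrent.Semaphore;

// Hypothetical stand-in for the endpoint's connection counter
// (Tomcat uses its own LimitLatch; a Semaphore is used here for illustration).
class ConnectionLimiter {
    private final int maxConnections;
    private final Semaphore permits;

    ConnectionLimiter(int maxConnections) {
        this.maxConnections = maxConnections;
        this.permits = new Semaphore(maxConnections);
    }

    // Counterpart of countUpOrAwaitConnection(): blocks the acceptor while the limit is reached.
    void countUpOrAwait() throws InterruptedException {
        permits.acquire();
    }

    // Non-blocking variant for demonstration: returns false instead of waiting.
    boolean tryCountUp() {
        return permits.tryAcquire();
    }

    // Counterpart of countDownConnection(): a failed accept() or a closed socket frees a slot.
    void countDown() {
        permits.release();
    }

    int openConnections() {
        return maxConnections - permits.availablePermits();
    }
}
```

An acceptor built on this would call countUpOrAwait() before accept() and countDown() on every path that gives a socket up, which is what the IOException branch and closeSocket() do in the Acceptor code.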

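- Once a connection has been accepted and the endpoint is still running (and not paused), setSocketOptions() switches the channel to non-blocking mode, wraps it in an NioChannel and registers it with a Poller. Registration queues a PollerEvent through addEvent(), which wakes up the selector if the Poller is blocked in select().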

```java
protected boolean setSocketOptions(SocketChannel socket) {
    // Process the connection
    try {
        //disable blocking, APR style, we are gonna be polling it
        socket.configureBlocking(false);
        Socket sock = socket.socket();
        socketProperties.setProperties(sock);

        NioChannel channel = nioChannels.pop();
        if (channel == null) {
            SocketBufferHandler bufhandler = new SocketBufferHandler(
                    socketProperties.getAppReadBufSize(),
                    socketProperties.getAppWriteBufSize(),
                    socketProperties.getDirectBuffer());
            if (isSSLEnabled()) {
                channel = new SecureNioChannel(socket, bufhandler, selectorPool, this);
            } else {
                channel = new NioChannel(socket, bufhandler);
            }
        } else {
            channel.setIOChannel(socket);
            channel.reset();
        }
        getPoller0().register(channel);
    } catch (Throwable t) {
        ExceptionUtils.handleThrowable(t);
        try {
            log.error("",t);
        } catch (Throwable tt) {
            ExceptionUtils.handleThrowable(tt);
        }
        // Tell to close the socket
        return false;
    }
    return true;
}

private void addEvent(PollerEvent event) {
    events.offer(event);
    if ( wakeupCounter.incrementAndGet() == 0 ) selector.wakeup();
}
```
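addEvent() only calls selector.wakeup() when incrementAndGet() returns 0, that is, when the Poller's run() loop had set the counter to -1 just before a blocking select(). The single-threaded model below isolates that handshake. PollerEventsSketch is a hypothetical class, and a counter of simulated wakeups stands in for a real Selector.

```java
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical model of the Poller's event queue and wakeupCounter handshake.
class PollerEventsSketch {
    private final Queue<Runnable> events = new ConcurrentLinkedQueue<>();
    private final AtomicLong wakeupCounter = new AtomicLong(0);
    // Counts simulated selector.wakeup() calls.
    final AtomicInteger wakeups = new AtomicInteger();

    // Acceptor side, mirrors addEvent(): queue the event, wake the poller only if it is blocked.
    void addEvent(Runnable event) {
        events.offer(event);
        if (wakeupCounter.incrementAndGet() == 0) {
            wakeups.incrementAndGet(); // selector.wakeup() in the real code
        }
    }

    // Poller side, just before selecting: mark "about to block" with -1.
    // Returns true when events arrived since the last select, i.e. use selectNow().
    boolean beforeSelect() {
        return wakeupCounter.getAndSet(-1) > 0;
    }

    // Poller side, after select()/selectNow() returns.
    void afterSelect() {
        wakeupCounter.set(0);
    }

    // Poller side, mirrors events(): run the queued registrations.
    int drainEvents() {
        int count = 0;
        Runnable r;
        while ((r = events.poll()) != null) {
            r.run();
            count++;
        }
        return count;
    }
}
```

The design avoids calling wakeup() for every registration: while the poller is awake the counter is non-negative, so producers only enqueue, and the poller picks the events up with a non-blocking selectNow().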

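- Poller implements Runnable. Its run() method polls socket readiness with the selector and calls processKey() for each ready key; processKey() turns readable and writable keys into OPEN_READ and OPEN_WRITE events (read before write) and passes them to processSocket().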

```java
@Override
public void run() {
    // Loop until destroy() is called
    while (true) {

        boolean hasEvents = false;

        try {
            if (!close) {
                hasEvents = events();
                if (wakeupCounter.getAndSet(-1) > 0) {
                    //if we are here, means we have other stuff to do
                    //do a non blocking select
                    keyCount = selector.selectNow();
                } else {
                    keyCount = selector.select(selectorTimeout);
                }
                wakeupCounter.set(0);
            }
            if (close) {
                events();
                timeout(0, false);
                try {
                    selector.close();
                } catch (IOException ioe) {
                    log.error(sm.getString("endpoint.nio.selectorCloseFail"), ioe);
                }
                break;
            }
        } catch (Throwable x) {
            ExceptionUtils.handleThrowable(x);
            log.error("",x);
            continue;
        }
        //either we timed out or we woke up, process events first
        if ( keyCount == 0 ) hasEvents = (hasEvents | events());

        Iterator<SelectionKey> iterator =
            keyCount > 0 ? selector.selectedKeys().iterator() : null;
        // Walk through the collection of ready keys and dispatch
        // any active event.
        while (iterator != null && iterator.hasNext()) {
            SelectionKey sk = iterator.next();
            NioSocketWrapper attachment = (NioSocketWrapper)sk.attachment();
            // Attachment may be null if another thread has called
            // cancelledKey()
            if (attachment == null) {
                iterator.remove();
            } else {
                iterator.remove();
                processKey(sk, attachment);
            }
        }//while

        //process timeouts
        timeout(keyCount,hasEvents);
    }//while

    getStopLatch().countDown();
}

protected void processKey(SelectionKey sk, NioSocketWrapper attachment) {
    try {
        if ( close ) {
            cancelledKey(sk);
        } else if ( sk.isValid() && attachment != null ) {
            if (sk.isReadable() || sk.isWritable() ) {
                if ( attachment.getSendfileData() != null ) {
                    processSendfile(sk,attachment, false);
                } else {
                    unreg(sk, attachment, sk.readyOps());
                    boolean closeSocket = false;
                    // Read goes before write
                    if (sk.isReadable()) {
                        if (!processSocket(attachment, SocketEvent.OPEN_READ, true)) {
                            closeSocket = true;
                        }
                    }
                    if (!closeSocket && sk.isWritable()) {
                        if (!processSocket(attachment, SocketEvent.OPEN_WRITE, true)) {
                            closeSocket = true;
                        }
                    }
                    if (closeSocket) {
                        cancelledKey(sk);
                    }
                }
            }
        } else {
            //invalid key
            cancelledKey(sk);
        }
    } catch ( CancelledKeyException ckx ) {
        cancelledKey(sk);
    } catch (Throwable t) {
        ExceptionUtils.handleThrowable(t);
        log.error("",t);
    }
}
```
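The select, selectedKeys(), iterator.remove(), dispatch pattern in run() and processKey() is plain java.nio. The sketch below reproduces one round of it on a java.nio.channels.Pipe, so it needs no network socket: it selects once, removes each key from the selected set, clears the ready operations from the key's interest set (which is what unreg() is there for in processKey()), and reads the data. PollerLoopSketch and pollOnce() are hypothetical names, and the 64-byte buffer assumes a short message.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.charset.StandardCharsets;
import java.util.Iterator;

// Hypothetical, single-round version of the Poller's select/dispatch loop, run over a Pipe.
class PollerLoopSketch {

    static String pollOnce(String message, long timeoutMillis) {
        try (Selector selector = Selector.open()) {
            Pipe pipe = Pipe.open();
            try (Pipe.SourceChannel source = pipe.source(); Pipe.SinkChannel sink = pipe.sink()) {
                source.configureBlocking(false);            // like setSocketOptions()
                source.register(selector, SelectionKey.OP_READ);
                sink.write(ByteBuffer.wrap(message.getBytes(StandardCharsets.UTF_8)));

                StringBuilder received = new StringBuilder();
                int keyCount = selector.select(timeoutMillis);
                Iterator<SelectionKey> iterator =
                        keyCount > 0 ? selector.selectedKeys().iterator() : null;
                while (iterator != null && iterator.hasNext()) {
                    SelectionKey sk = iterator.next();
                    iterator.remove();                      // the selected set is never cleared automatically
                    if (sk.isValid() && sk.isReadable()) {
                        // Stop selecting the ready ops while this key is being processed.
                        sk.interestOps(sk.interestOps() & ~sk.readyOps());
                        ByteBuffer buf = ByteBuffer.allocate(64);
                        ((Pipe.SourceChannel) sk.channel()).read(buf);
                        buf.flip();
                        received.append(StandardCharsets.UTF_8.decode(buf));
                    }
                }
                return received.toString();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

For example, pollOnce("hello", 1000) returns "hello". Clearing the interest ops matters once the work is handed to another thread: without it, the next select() would report the same key again while a worker is still reading it.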

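- processSocket() lives in AbstractEndpoint, the parent class of NioEndpoint. It wraps the given SocketWrapper and SocketEvent in a reusable SocketProcessor (a Runnable task taken from processorCache, or created by createSocketProcessor() when the cache is empty, not a thread of its own) and hands it to the executor's thread pool; when dispatch is false or there is no executor, it runs on the calling thread. Each EndPoint supplies its own concrete SocketProcessor by extending SocketProcessorBase.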

```java
public boolean processSocket(SocketWrapperBase<S> socketWrapper,
        SocketEvent event, boolean dispatch) {
    try {
        if (socketWrapper == null) {
            return false;
        }
        SocketProcessorBase<S> sc = processorCache.pop();
        if (sc == null) {
            sc = createSocketProcessor(socketWrapper, event);
        } else {
            sc.reset(socketWrapper, event);
        }
        Executor executor = getExecutor();
        if (dispatch && executor != null) {
            executor.execute(sc);
        } else {
            sc.run();
        }
    } catch (RejectedExecutionException ree) {
        getLog().warn(sm.getString("endpoint.executor.fail", socketWrapper) , ree);
        return false;
    } catch (Throwable t) {
        ExceptionUtils.handleThrowable(t);
        // This means we got an OOM or similar creating a thread, or that
        // the pool and its queue are full
        getLog().error(sm.getString("endpoint.process.fail"), t);
        return false;
    }
    return true;
}

public abstract class SocketProcessorBase<S> implements Runnable {

    protected SocketWrapperBase<S> socketWrapper;
    protected SocketEvent event;

    public SocketProcessorBase(SocketWrapperBase<S> socketWrapper, SocketEvent event) {
        reset(socketWrapper, event);
    }

    public void reset(SocketWrapperBase<S> socketWrapper, SocketEvent event) {
        Objects.requireNonNull(event);
        this.socketWrapper = socketWrapper;
        this.event = event;
    }

    @Override
    public final void run() {
        synchronized (socketWrapper) {
            // It is possible that processing may be triggered for read and
            // write at the same time. The sync above makes sure that processing
            // does not occur in parallel. The test below ensures that if the
            // first event to be processed results in the socket being closed,
            // the subsequent events are not processed.
            if (socketWrapper.isClosed()) {
                return;
            }
            doRun();
        }
    }

    protected abstract void doRun();
}
```
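The combination of processorCache, reset() and a synchronized run() can be reduced to a small model: one Runnable object is reused for many (socket, event) pairs, events for the same socket never run in parallel, and events for a closed socket are skipped. The sketch below is hypothetical (ProcessorCacheSketch, Wrapper and Task are illustrative names; a ConcurrentLinkedDeque replaces Tomcat's own stack class), and, unlike the real code, it returns the task to the cache even when it skipped a closed socket.

```java
import java.util.Deque;
import java.util.List;
import java.util.concurrent.ConcurrentLinkedDeque;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.Executor;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical, simplified model of processSocket() + SocketProcessorBase.
class ProcessorCacheSketch {

    // Stands in for SocketWrapperBase.
    static final class Wrapper {
        final String name;
        volatile boolean closed;

        Wrapper(String name) {
            this.name = name;
        }
    }

    final Deque<Task> processorCache = new ConcurrentLinkedDeque<>();
    final AtomicInteger created = new AtomicInteger();
    final List<String> handled = new CopyOnWriteArrayList<>();

    // Stands in for SocketProcessor: a reusable Runnable, not a thread.
    final class Task implements Runnable {
        private Wrapper wrapper;
        private String event;

        void reset(Wrapper wrapper, String event) {
            this.wrapper = wrapper;
            this.event = event;
        }

        @Override
        public void run() {
            try {
                synchronized (wrapper) {           // one event at a time per socket
                    if (!wrapper.closed) {         // skip events for an already closed socket
                        handled.add(wrapper.name + ":" + event);
                    }
                }
            } finally {
                wrapper = null;                    // drop references, then recycle
                event = null;
                processorCache.push(this);
            }
        }
    }

    // Mirrors processSocket(): reuse a cached task if possible, then run it on the executor or inline.
    boolean processSocket(Wrapper wrapper, String event, Executor executor) {
        if (wrapper == null) {
            return false;
        }
        Task task = processorCache.poll();
        if (task == null) {
            task = new Task();
            created.incrementAndGet();
        }
        task.reset(wrapper, event);
        if (executor != null) {
            executor.execute(task);
        } else {
            task.run();
        }
        return true;
    }
}
```

Recycling the task object avoids allocating a new Runnable for every readiness event, which is the point of processorCache on a busy connector.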

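- NioEndpoint's implementation of SocketProcessor first checks the state of the socket, in particular whether the TLS handshake has completed, and then hands the socket to the ConnectionHandler via getHandler().process().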

```java
protected class SocketProcessor extends SocketProcessorBase<NioChannel> {

    public SocketProcessor(SocketWrapperBase<NioChannel> socketWrapper, SocketEvent event) {
        super(socketWrapper, event);
    }

    @Override
    protected void doRun() {
        NioChannel socket = socketWrapper.getSocket();
        SelectionKey key = socket.getIOChannel().keyFor(socket.getPoller().getSelector());

        try {
            int handshake = -1;

            try {
                if (key != null) {
                    if (socket.isHandshakeComplete()) {
                        // No TLS handshaking required. Let the handler
                        // process this socket / event combination.
                        handshake = 0;
                    } else if (event == SocketEvent.STOP || event == SocketEvent.DISCONNECT ||
                            event == SocketEvent.ERROR) {
                        // Unable to complete the TLS handshake. Treat it as
                        // if the handshake failed.
                        handshake = -1;
                    } else {
                        handshake = socket.handshake(key.isReadable(), key.isWritable());
                        // The handshake process reads/writes from/to the
                        // socket. status may therefore be OPEN_WRITE once
                        // the handshake completes. However, the handshake
                        // happens when the socket is opened so the status
                        // must always be OPEN_READ after it completes. It
                        // is OK to always set this as it is only used if
                        // the handshake completes.
                        event = SocketEvent.OPEN_READ;
                    }
                }
            } catch (IOException x) {
                handshake = -1;
                if (log.isDebugEnabled()) log.debug("Error during SSL handshake",x);
            } catch (CancelledKeyException ckx) {
                handshake = -1;
            }
            if (handshake == 0) {
                SocketState state = SocketState.OPEN;
                // Process the request from this socket
                if (event == null) {
                    state = getHandler().process(socketWrapper, SocketEvent.OPEN_READ);
                } else {
                    state = getHandler().process(socketWrapper, event);
                }
                if (state == SocketState.CLOSED) {
                    close(socket, key);
                }
            } else if (handshake == -1 ) {
                getHandler().process(socketWrapper, SocketEvent.CONNECT_FAIL);
                close(socket, key);
            } else if (handshake == SelectionKey.OP_READ){
                socketWrapper.registerReadInterest();
            } else if (handshake == SelectionKey.OP_WRITE){
                socketWrapper.registerWriteInterest();
            }
        } catch (CancelledKeyException cx) {
            socket.getPoller().cancelledKey(key);
        } catch (VirtualMachineError vme) {
            ExceptionUtils.handleThrowable(vme);
        } catch (Throwable t) {
            log.error("", t);
            socket.getPoller().cancelledKey(key);
        } finally {
            socketWrapper = null;
            event = null;
            //return to cache
            if (running && !paused) {
                processorCache.push(this);
            }
        }
    }
}
```
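doRun() reduces the handshake step to a single int and then branches on it. The hypothetical decision table below restates those branches on their own; HandshakeDispatchSketch and its Action values are illustrative names, and only the SelectionKey constants are real java.nio API.

```java
import java.nio.channels.SelectionKey;

// Hypothetical summary of how SocketProcessor.doRun() acts on the handshake result.
class HandshakeDispatchSketch {

    enum Action { PROCESS, FAIL_AND_CLOSE, WAIT_FOR_READ, WAIT_FOR_WRITE, NONE }

    static Action afterHandshake(int handshake) {
        if (handshake == 0) {
            return Action.PROCESS;          // getHandler().process(socketWrapper, event)
        } else if (handshake == -1) {
            return Action.FAIL_AND_CLOSE;   // process(..., CONNECT_FAIL), then close(socket, key)
        } else if (handshake == SelectionKey.OP_READ) {
            return Action.WAIT_FOR_READ;    // registerReadInterest(): resume when readable again
        } else if (handshake == SelectionKey.OP_WRITE) {
            return Action.WAIT_FOR_WRITE;   // registerWriteInterest(): resume when writable again
        }
        return Action.NONE;                 // any other value: nothing is done in this pass
    }
}
```

A plain (non-TLS) connection is always "handshake complete", so it goes straight to the PROCESS branch.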

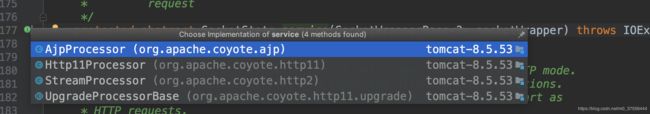

- ConnectionHandler is an inner class of AbstractProtocol. It selects a suitable Processor for each socket connection, and protocol upgrades are also carried out in ConnectionHandler.

```java
@Override
public SocketState process(SocketWrapperBase<S> wrapper, SocketEvent status) {
    if (getLog().isDebugEnabled()) {
        getLog().debug(sm.getString("abstractConnectionHandler.process",
                wrapper.getSocket(), status));
    }
    if (wrapper == null) {
        // Nothing to do. Socket has been closed.
        return SocketState.CLOSED;
    }

    S socket = wrapper.getSocket();

    Processor processor = connections.get(socket);
    if (getLog().isDebugEnabled()) {
        getLog().debug(sm.getString("abstractConnectionHandler.connectionsGet",
                processor, socket));
    }

    // Timeouts are calculated on a dedicated thread and then
    // dispatched. Because of delays in the dispatch process, the
    // timeout may no longer be required. Check here and avoid
    // unnecessary processing.
    if (SocketEvent.TIMEOUT == status &&
            (processor == null ||
            !processor.isAsync() && !processor.isUpgrade() ||
            processor.isAsync() && !processor.checkAsyncTimeoutGeneration())) {
        // This is effectively a NO-OP
        return SocketState.OPEN;
    }

    if (processor != null) {
        // Make sure an async timeout doesn't fire
        getProtocol().removeWaitingProcessor(processor);
    } else if (status == SocketEvent.DISCONNECT || status == SocketEvent.ERROR) {
        // Nothing to do. Endpoint requested a close and there is no
        // longer a processor associated with this socket.
        return SocketState.CLOSED;
    }

    ContainerThreadMarker.set();

    try {
        if (processor == null) {
            String negotiatedProtocol = wrapper.getNegotiatedProtocol();
            // OpenSSL typically returns null whereas JSSE typically
            // returns "" when no protocol is negotiated
            if (negotiatedProtocol != null && negotiatedProtocol.length() > 0) {
                UpgradeProtocol upgradeProtocol = getProtocol().getNegotiatedProtocol(negotiatedProtocol);
                if (upgradeProtocol != null) {
                    processor = upgradeProtocol.getProcessor(wrapper, getProtocol().getAdapter());
                    if (getLog().isDebugEnabled()) {
                        getLog().debug(sm.getString("abstractConnectionHandler.processorCreate", processor));
                    }
                } else if (negotiatedProtocol.equals("http/1.1")) {
                    // Explicitly negotiated the default protocol.
                    // Obtain a processor below.
                } else {
                    // TODO:
                    // OpenSSL 1.0.2's ALPN callback doesn't support
                    // failing the handshake with an error if no
                    // protocol can be negotiated. Therefore, we need to
                    // fail the connection here. Once this is fixed,
                    // replace the code below with the commented out
                    // block.
                    if (getLog().isDebugEnabled()) {
                        getLog().debug(sm.getString("abstractConnectionHandler.negotiatedProcessor.fail",
                                negotiatedProtocol));
                    }
                    return SocketState.CLOSED;
                    /*
                     * To replace the code above once OpenSSL 1.1.0 is
                     * used.
                    // Failed to create processor. This is a bug.
                    throw new IllegalStateException(sm.getString(
                            "abstractConnectionHandler.negotiatedProcessor.fail",
                            negotiatedProtocol));
                    */
                }
            }
        }
        if (processor == null) {
            processor = recycledProcessors.pop();
            if (getLog().isDebugEnabled()) {
                getLog().debug(sm.getString("abstractConnectionHandler.processorPop", processor));
            }
        }
        if (processor == null) {
            processor = getProtocol().createProcessor();
            register(processor);
            if (getLog().isDebugEnabled()) {
                getLog().debug(sm.getString("abstractConnectionHandler.processorCreate", processor));
            }
        }

        processor.setSslSupport(
                wrapper.getSslSupport(getProtocol().getClientCertProvider()));

        // Associate the processor with the connection
        connections.put(socket, processor);

        SocketState state = SocketState.CLOSED;
        do {
            state = processor.process(wrapper, status);

            if (state == SocketState.UPGRADING) {
                // Get the HTTP upgrade handler
                UpgradeToken upgradeToken = processor.getUpgradeToken();
                // Retrieve leftover input
                ByteBuffer leftOverInput = processor.getLeftoverInput();
                if (upgradeToken == null) {
                    // Assume direct HTTP/2 connection
                    UpgradeProtocol upgradeProtocol = getProtocol().getUpgradeProtocol("h2c");
                    if (upgradeProtocol != null) {
                        processor = upgradeProtocol.getProcessor(
                                wrapper, getProtocol().getAdapter());
                        wrapper.unRead(leftOverInput);
                        // Associate with the processor with the connection
                        connections.put(socket, processor);
                    } else {
                        if (getLog().isDebugEnabled()) {
                            getLog().debug(sm.getString(
                                    "abstractConnectionHandler.negotiatedProcessor.fail",
                                    "h2c"));
                        }
                        return SocketState.CLOSED;
                    }
                } else {
                    HttpUpgradeHandler httpUpgradeHandler = upgradeToken.getHttpUpgradeHandler();
                    // Release the Http11 processor to be re-used
                    release(processor);
                    // Create the upgrade processor
                    processor = getProtocol().createUpgradeProcessor(wrapper, upgradeToken);
                    if (getLog().isDebugEnabled()) {
                        getLog().debug(sm.getString("abstractConnectionHandler.upgradeCreate",
                                processor, wrapper));
                    }
                    wrapper.unRead(leftOverInput);
                    // Mark the connection as upgraded
                    wrapper.setUpgraded(true);
                    // Associate with the processor with the connection
                    connections.put(socket, processor);
                    // Initialise the upgrade handler (which may trigger
                    // some IO using the new protocol which is why the lines
                    // above are necessary)
                    // This cast should be safe. If it fails the error
                    // handling for the surrounding try/catch will deal with
                    // it.
                    if (upgradeToken.getInstanceManager() == null) {
                        httpUpgradeHandler.init((WebConnection) processor);
                    } else {
                        ClassLoader oldCL = upgradeToken.getContextBind().bind(false, null);
                        try {
                            httpUpgradeHandler.init((WebConnection) processor);
                        } finally {
                            upgradeToken.getContextBind().unbind(false, oldCL);
                        }
                    }
                }
            }
        } while ( state == SocketState.UPGRADING);

        if (state == SocketState.LONG) {
            // In the middle of processing a request/response. Keep the
            // socket associated with the processor. Exact requirements
            // depend on type of long poll
            longPoll(wrapper, processor);
            if (processor.isAsync()) {
                getProtocol().addWaitingProcessor(processor);
            }
        } else if (state == SocketState.OPEN) {
            // In keep-alive but between requests. OK to recycle
            // processor. Continue to poll for the next request.
            connections.remove(socket);
            release(processor);
            wrapper.registerReadInterest();
        } else if (state == SocketState.SENDFILE) {
            // Sendfile in progress. If it fails, the socket will be
            // closed. If it works, the socket either be added to the
            // poller (or equivalent) to await more data or processed
            // if there are any pipe-lined requests remaining.
        } else if (state == SocketState.UPGRADED) {
            // Don't add sockets back to the poller if this was a
            // non-blocking write otherwise the poller may trigger
            // multiple read events which may lead to thread starvation
            // in the connector. The write() method will add this socket
            // to the poller if necessary.
            if (status != SocketEvent.OPEN_WRITE) {
                longPoll(wrapper, processor);
                getProtocol().addWaitingProcessor(processor);
            }
        } else if (state == SocketState.SUSPENDED) {
            // Don't add sockets back to the poller.
            // The resumeProcessing() method will add this socket
            // to the poller.
        } else {
            // Connection closed. OK to recycle the processor.
            // Processors handling upgrades require additional clean-up
            // before release.
            connections.remove(socket);
            if (processor.isUpgrade()) {
                UpgradeToken upgradeToken = processor.getUpgradeToken();
                HttpUpgradeHandler httpUpgradeHandler = upgradeToken.getHttpUpgradeHandler();
                InstanceManager instanceManager = upgradeToken.getInstanceManager();
                if (instanceManager == null) {
                    httpUpgradeHandler.destroy();
                } else {
                    ClassLoader oldCL = upgradeToken.getContextBind().bind(false, null);
                    try {
                        httpUpgradeHandler.destroy();
                    } finally {
                        try {
                            instanceManager.destroyInstance(httpUpgradeHandler);
                        } catch (Throwable e) {
                            ExceptionUtils.handleThrowable(e);
                            getLog().error(sm.getString("abstractConnectionHandler.error"), e);
                        }
                        upgradeToken.getContextBind().unbind(false, oldCL);
                    }
                }
            }
            release(processor);
        }
        return state;
    } catch(java.net.SocketException e) {
        // SocketExceptions are normal
        getLog().debug(sm.getString(
                "abstractConnectionHandler.socketexception.debug"), e);
    } catch (java.io.IOException e) {
        // IOExceptions are normal
        getLog().debug(sm.getString(
                "abstractConnectionHandler.ioexception.debug"), e);
    } catch (ProtocolException e) {
        // Protocol exceptions normally mean the client sent invalid or
        // incomplete data.
        getLog().debug(sm.getString(
                "abstractConnectionHandler.protocolexception.debug"), e);
    }
    // Future developers: if you discover any other
    // rare-but-nonfatal exceptions, catch them here, and log as
    // above.
    catch (OutOfMemoryError oome) {
        // Try and handle this here to give Tomcat a chance to close the
        // connection and prevent clients waiting until they time out.
        // Worst case, it isn't recoverable and the attempt at logging
        // will trigger another OOME.
        getLog().error(sm.getString("abstractConnectionHandler.oome"), oome);
    } catch (Throwable e) {
        ExceptionUtils.handleThrowable(e);
        // any other exception or error is odd. Here we log it
        // with "ERROR" level, so it will show up even on
        // less-than-verbose logs.
        getLog().error(sm.getString("abstractConnectionHandler.error"), e);
    } finally {
        ContainerThreadMarker.clear();
    }

    // Make sure socket/processor is removed from the list of current
    // connections
    connections.remove(socket);
    release(processor);
    return SocketState.CLOSED;
}
```
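Stripped of logging, ALPN negotiation and the upgrade loop, ConnectionHandler.process() follows a simple lookup order (processor already bound to the connection, then a recycled one, then a new one) and decides from the returned SocketState whether to keep that binding. The single-threaded sketch below models only that part; ConnectionHandlerSketch, its reduced SocketState enum and the String "socket" key are hypothetical simplifications (the real code uses concurrent collections and Tomcat's own types).

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;
import java.util.function.Supplier;

// Hypothetical, single-threaded model of how ConnectionHandler.process() picks and releases processors.
class ConnectionHandlerSketch {

    enum SocketState { OPEN, CLOSED, LONG }

    interface Processor {
        SocketState process(String socket);
    }

    final Map<String, Processor> connections = new HashMap<>();
    final Deque<Processor> recycledProcessors = new ArrayDeque<>();
    private final Supplier<Processor> factory;   // stands in for getProtocol().createProcessor()
    int created;

    ConnectionHandlerSketch(Supplier<Processor> factory) {
        this.factory = factory;
    }

    SocketState process(String socket) {
        Processor processor = connections.get(socket);     // 1. processor already bound to this connection
        if (processor == null) {
            processor = recycledProcessors.poll();          // 2. otherwise reuse a recycled one
        }
        if (processor == null) {
            processor = factory.get();                      // 3. otherwise create a new one
            created++;
        }
        connections.put(socket, processor);

        SocketState state = processor.process(socket);
        if (state != SocketState.LONG) {
            // OPEN (keep-alive, between requests) or CLOSED: unbind and recycle.
            // The real code also calls wrapper.registerReadInterest() for OPEN.
            connections.remove(socket);
            recycledProcessors.push(processor);
        }
        // LONG: mid-request, keep the socket bound to the same processor.
        return state;
    }
}
```

The binding in connections is what lets a request that arrives in several TCP reads (state LONG) continue on the same processor, while keep-alive connections idle between requests do not hold a processor at all.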

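- Processor.process() is implemented in AbstractProcessorLight. For an OPEN_READ event on a processor that is neither async nor upgraded, it calls service(), which each concrete protocol processor implements; a processor created by a protocol upgrade (for example to HTTP/2) reports isUpgrade() and is driven through dispatch() instead.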

```java
@Override
public SocketState process(SocketWrapperBase<?> socketWrapper, SocketEvent status)
        throws IOException {

    SocketState state = SocketState.CLOSED;
    Iterator<DispatchType> dispatches = null;
    do {
        if (dispatches != null) {
            DispatchType nextDispatch = dispatches.next();
            if (getLog().isDebugEnabled()) {
                getLog().debug("Processing dispatch type: [" + nextDispatch + "]");
            }
            state = dispatch(nextDispatch.getSocketStatus());
            if (!dispatches.hasNext()) {
                state = checkForPipelinedData(state, socketWrapper);
            }
        } else if (status == SocketEvent.DISCONNECT) {
            // Do nothing here, just wait for it to get recycled
        } else if (isAsync() || isUpgrade() || state == SocketState.ASYNC_END) {
            state = dispatch(status);
            state = checkForPipelinedData(state, socketWrapper);
        } else if (status == SocketEvent.OPEN_WRITE) {
            // Extra write event likely after async, ignore
            state = SocketState.LONG;
        } else if (status == SocketEvent.OPEN_READ) {
            state = service(socketWrapper);
        } else if (status == SocketEvent.CONNECT_FAIL) {
            logAccess(socketWrapper);
        } else {
            // Default to closing the socket if the SocketEvent passed in
            // is not consistent with the current state of the Processor
            state = SocketState.CLOSED;
        }

        if (getLog().isDebugEnabled()) {
            getLog().debug("Socket: [" + socketWrapper +
                    "], Status in: [" + status +
                    "], State out: [" + state + "]");
        }

        if (isAsync()) {
            state = asyncPostProcess();
            if (getLog().isDebugEnabled()) {
                getLog().debug("Socket: [" + socketWrapper +
                        "], State after async post processing: [" + state + "]");
            }
        }

        if (dispatches == null || !dispatches.hasNext()) {
            // Only returns non-null iterator if there are
            // dispatches to process.
            dispatches = getIteratorAndClearDispatches();
        }
    } while (state == SocketState.ASYNC_END ||
            dispatches != null && state != SocketState.CLOSED);

    return state;
}
```
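On its first pass through the loop, before any pending dispatches exist and while state is still CLOSED, process() picks one branch from the incoming event and the processor's async/upgrade flags. The hypothetical table below restates just that choice; ProcessDispatchSketch and Step are illustrative names, and the local SocketEvent enum lists only the values that appear in the code above.

```java
// Hypothetical summary of the first branch AbstractProcessorLight.process() takes
// (the loop over pending dispatches and async post-processing are left out).
class ProcessDispatchSketch {

    // Only the SocketEvent values that appear in the code above.
    enum SocketEvent { OPEN_READ, OPEN_WRITE, STOP, TIMEOUT, DISCONNECT, ERROR, CONNECT_FAIL }

    enum Step { NOTHING, DISPATCH, LONG, SERVICE, LOG_ACCESS, CLOSE }

    static Step firstStep(SocketEvent status, boolean async, boolean upgrade) {
        if (status == SocketEvent.DISCONNECT) {
            return Step.NOTHING;      // wait to be recycled
        } else if (async || upgrade) {
            return Step.DISPATCH;     // dispatch(status), e.g. an upgraded HTTP/2 connection
        } else if (status == SocketEvent.OPEN_WRITE) {
            return Step.LONG;         // extra write event, ignored
        } else if (status == SocketEvent.OPEN_READ) {
            return Step.SERVICE;      // service(socketWrapper): protocol-specific parsing
        } else if (status == SocketEvent.CONNECT_FAIL) {
            return Step.LOG_ACCESS;   // logAccess(socketWrapper)
        }
        return Step.CLOSE;            // event inconsistent with the processor state: close
    }
}
```

The ordering matters: the upgrade check comes before OPEN_READ, which is why a readable HTTP/2 connection never reaches service().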

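- The concrete protocol processor turns the socket data into a coyote Request and Response and finally hands them to CoyoteAdapter, the connector between the Coyote and Catalina modules. For what happens after that, see the Catalina module's request processing flow.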

Taking Http11Processor's service() implementation as an example:

```java
@Override
public SocketState service(SocketWrapperBase<?> socketWrapper)
        throws IOException {
    RequestInfo rp = request.getRequestProcessor();
    rp.setStage(org.apache.coyote.Constants.STAGE_PARSE);

    // Setting up the I/O
    setSocketWrapper(socketWrapper);

    // Flags
    keepAlive = true;
    openSocket = false;
    readComplete = true;
    boolean keptAlive = false;
    SendfileState sendfileState = SendfileState.DONE;

    while (!getErrorState().isError() && keepAlive && !isAsync() && upgradeToken == null &&
            sendfileState == SendfileState.DONE && !endpoint.isPaused()) {

        // Parsing the request header
        try {
            if (!inputBuffer.parseRequestLine(keptAlive)) {
                if (inputBuffer.getParsingRequestLinePhase() == -1) {
                    return SocketState.UPGRADING;
                } else if (handleIncompleteRequestLineRead()) {
                    break;
                }
            }

            // Process the Protocol component of the request line
            // Need to know if this is an HTTP 0.9 request before trying to
            // parse headers.
            prepareRequestProtocol();

            if (endpoint.isPaused()) {
                // 503 - Service unavailable
                response.setStatus(503);
                setErrorState(ErrorState.CLOSE_CLEAN, null);
            } else {
                keptAlive = true;
                // Set this every time in case limit has been changed via JMX
                request.getMimeHeaders().setLimit(endpoint.getMaxHeaderCount());
                // Don't parse headers for HTTP/0.9
                if (!http09 && !inputBuffer.parseHeaders()) {
                    // We've read part of the request, don't recycle it
                    // instead associate it with the socket
                    openSocket = true;
                    readComplete = false;
                    break;
                }
                if (!disableUploadTimeout) {
                    socketWrapper.setReadTimeout(connectionUploadTimeout);
                }
            }
        } catch (IOException e) {
            if (log.isDebugEnabled()) {
                log.debug(sm.getString("http11processor.header.parse"), e);
            }
            setErrorState(ErrorState.CLOSE_CONNECTION_NOW, e);
            break;
        } catch (Throwable t) {
            ExceptionUtils.handleThrowable(t);
            UserDataHelper.Mode logMode = userDataHelper.getNextMode();
            if (logMode != null) {
                String message = sm.getString("http11processor.header.parse");
                switch (logMode) {
                    case INFO_THEN_DEBUG:
                        message += sm.getString("http11processor.fallToDebug");
                        //$FALL-THROUGH$
                    case INFO:
                        log.info(message, t);
                        break;
                    case DEBUG:
                        log.debug(message, t);
                }
            }
            // 400 - Bad Request
            response.setStatus(400);
            setErrorState(ErrorState.CLOSE_CLEAN, t);
        }

        // Has an upgrade been requested?
        if (isConnectionToken(request.getMimeHeaders(), "upgrade")) {
            // Check the protocol
            String requestedProtocol = request.getHeader("Upgrade");

            UpgradeProtocol upgradeProtocol = protocol.getUpgradeProtocol(requestedProtocol);
            if (upgradeProtocol != null) {
                if (upgradeProtocol.accept(request)) {
                    // TODO Figure out how to handle request bodies at this
                    // point.
                    response.setStatus(HttpServletResponse.SC_SWITCHING_PROTOCOLS);
                    response.setHeader("Connection", "Upgrade");
                    response.setHeader("Upgrade", requestedProtocol);
                    action(ActionCode.CLOSE, null);
                    getAdapter().log(request, response, 0);

                    InternalHttpUpgradeHandler upgradeHandler =
                            upgradeProtocol.getInternalUpgradeHandler(
                                    getAdapter(), cloneRequest(request));
                    UpgradeToken upgradeToken = new UpgradeToken(upgradeHandler, null, null);
                    action(ActionCode.UPGRADE, upgradeToken);
                    return SocketState.UPGRADING;
                }
            }
        }

        if (getErrorState().isIoAllowed()) {
            // Setting up filters, and parse some request headers
            rp.setStage(org.apache.coyote.Constants.STAGE_PREPARE);
            try {
                prepareRequest();
            } catch (Throwable t) {
                ExceptionUtils.handleThrowable(t);
                if (log.isDebugEnabled()) {
                    log.debug(sm.getString("http11processor.request.prepare"), t);
                }
                // 500 - Internal Server Error
                response.setStatus(500);
                setErrorState(ErrorState.CLOSE_CLEAN, t);
            }
        }

        if (maxKeepAliveRequests == 1) {
            keepAlive = false;
        } else if (maxKeepAliveRequests > 0 &&
                socketWrapper.decrementKeepAlive() <= 0) {
            keepAlive = false;
        }

        // Process the request in the adapter
        if (getErrorState().isIoAllowed()) {
            try {
                rp.setStage(org.apache.coyote.Constants.STAGE_SERVICE);
                getAdapter().service(request, response);
                // Handle when the response was committed before a serious
                // error occurred. Throwing a ServletException should both
                // set the status to 500 and set the errorException.
                // If we fail here, then the response is likely already
                // committed, so we can't try and set headers.
                if(keepAlive && !getErrorState().isError() && !isAsync() &&
                        statusDropsConnection(response.getStatus())) {
                    setErrorState(ErrorState.CLOSE_CLEAN, null);
                }
            } catch (InterruptedIOException e) {
                setErrorState(ErrorState.CLOSE_CONNECTION_NOW, e);
            } catch (HeadersTooLargeException e) {
                log.error(sm.getString("http11processor.request.process"), e);
                // The response should not have been committed but check it
                // anyway to be safe
                if (response.isCommitted()) {
                    setErrorState(ErrorState.CLOSE_NOW, e);
                } else {
                    response.reset();
                    response.setStatus(500);
                    setErrorState(ErrorState.CLOSE_CLEAN, e);
                    response.setHeader("Connection", "close"); // TODO: Remove
                }
            } catch (Throwable t) {
                ExceptionUtils.handleThrowable(t);
                log.error(sm.getString("http11processor.request.process"), t);
                // 500 - Internal Server Error
                response.setStatus(500);
                setErrorState(ErrorState.CLOSE_CLEAN, t);
                getAdapter().log(request, response, 0);
            }
        }

        // Finish the handling of the request
        rp.setStage(org.apache.coyote.Constants.STAGE_ENDINPUT);
        if (!isAsync()) {
            // If this is an async request then the request ends when it has
            // been completed. The AsyncContext is responsible for calling
            // endRequest() in that case.
            endRequest();
        }
        rp.setStage(org.apache.coyote.Constants.STAGE_ENDOUTPUT);

        // If there was an error, make sure the request is counted as
        // and error, and update the statistics counter
        if (getErrorState().isError()) {
            response.setStatus(500);
        }

        if (!isAsync() || getErrorState().isError()) {
            request.updateCounters();
            if (getErrorState().isIoAllowed()) {
                inputBuffer.nextRequest();
                outputBuffer.nextRequest();
            }
        }

        if (!disableUploadTimeout) {
            int soTimeout = endpoint.getConnectionTimeout();
            if(soTimeout > 0) {
                socketWrapper.setReadTimeout(soTimeout);
            } else {
                socketWrapper.setReadTimeout(0);
            }
        }

        rp.setStage(org.apache.coyote.Constants.STAGE_KEEPALIVE);

        sendfileState = processSendfile(socketWrapper);
    }

    rp.setStage(org.apache.coyote.Constants.STAGE_ENDED);

    if (getErrorState().isError() || (endpoint.isPaused() && !isAsync())) {
        return SocketState.CLOSED;
    } else if (isAsync()) {
        return SocketState.LONG;
    } else if (isUpgrade()) {
        return SocketState.UPGRADING;
    } else {
        if (sendfileState == SendfileState.PENDING) {
            return SocketState.SENDFILE;
        } else {
            if (openSocket) {
                if (readComplete) {
                    return SocketState.OPEN;
                } else {
                    return SocketState.LONG;
                }
            } else {
                return SocketState.CLOSED;
            }
        }
    }
}
```
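The "Has an upgrade been requested?" step is how a cleartext HTTP/1.1 connection becomes HTTP/2 (h2c): the client lists "upgrade" among the Connection tokens and names the target protocol in the Upgrade header. The sketch below is a hypothetical, stand-alone version of that check. H2cUpgradeSketch and its methods are illustrative names, the plain Map lookup is case-sensitive (unlike Tomcat's MimeHeaders), and the real UpgradeProtocol.accept(request) check is left out.

```java
import java.util.Map;

// Hypothetical helper modelled on the "Has an upgrade been requested?" step in service().
class H2cUpgradeSketch {

    // The Connection header may list several comma-separated tokens,
    // e.g. "Upgrade, HTTP2-Settings"; tokens compare case-insensitively.
    static boolean isConnectionToken(String connectionHeader, String token) {
        if (connectionHeader == null) {
            return false;
        }
        for (String t : connectionHeader.split(",")) {
            if (t.trim().equalsIgnoreCase(token)) {
                return true;
            }
        }
        return false;
    }

    // True when the request asks to upgrade to the given protocol (e.g. "h2c").
    // On true, service() answers 101 with "Connection: Upgrade" and "Upgrade: <protocol>"
    // and returns SocketState.UPGRADING; otherwise the request stays on HTTP/1.1.
    static boolean shouldUpgrade(Map<String, String> headers, String supportedProtocol) {
        if (!isConnectionToken(headers.get("Connection"), "upgrade")) {
            return false;
        }
        String requested = headers.get("Upgrade");
        return supportedProtocol.equals(requested);
    }
}
```

For a typical h2c request with "Connection: Upgrade, HTTP2-Settings" and "Upgrade: h2c", shouldUpgrade(headers, "h2c") is true; with "Connection: keep-alive" or "Upgrade: websocket" it is false. On the Tomcat side, the UPGRADING state returned here is what sends ConnectionHandler into its upgrade branch.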