Logstash Log Collection in Practice

I. Installing Logstash

[root@linux-node2 ~]# tar xf /usr/local/src/logstash-5.2.2.tar.gz -C /usr/local/

[root@linux-node2 ~]# mv /usr/local/logstash-5.2.2 /usr/local/logstash

II. Starting Logstash

-e: pass the pipeline configuration as a string on the command line instead of in a config file

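First, you need to understand the following basic concepts:

The basic Logstash processing flow: input-->codec-->filter-->codec-->output

1. input: where logs are collected from, i.e. the source; {stdin: standard input}

2. filter: processes events (parsing, enriching, dropping) before they are sent on

3. output: where events are sent, e.g. Elasticsearch, a Redis message queue, or another queue; {stdout: standard output}

4. codec: encodes or decodes event data (e.g. plain, json, multiline, rubydebug); the rubydebug codec prints events to the console in a readable form, which is handy for testing as you go

5. When the log volume is small, logs can be indexed per month instead of per day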
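To make the stage order concrete, here is a toy Python model of one event passing through input, codec, filter, codec and output. It is only an illustration under stated assumptions. The function names (`input_stage`, `decode`, `filter_stage`, `encode`, `output_stage`, `run_pipeline`) are hypothetical and are not part of Logstash. A list stands in for stdin and another list stands in for the output destination.

```python
"""Toy model of the Logstash flow: input -> codec -> filter -> codec -> output.

All names here are hypothetical; this is not Logstash's API, only an
illustration of the order in which each stage touches an event.
"""
import json
from datetime import datetime, timezone


def input_stage(raw_lines):
    # input: where data comes from (a list stands in for stdin)
    yield from raw_lines


def decode(line):
    # input-side codec: turn raw text into an event, roughly like the "plain" codec
    return {
        "message": line.rstrip("\n"),
        "@version": "1",
        "@timestamp": datetime.now(timezone.utc).isoformat(),
    }


def filter_stage(event):
    # filter: modify or enrich the event before it is sent out
    event["type"] = "system"
    return event


def encode(event):
    # output-side codec: serialize the event, roughly like the "json" codec
    return json.dumps(event, sort_keys=True)


def output_stage(serialized, sink):
    # output: where events go (a list stands in for stdout/Elasticsearch/Redis)
    sink.append(serialized)


def run_pipeline(raw_lines):
    sink = []
    for line in input_stage(raw_lines):
        output_stage(encode(filter_stage(decode(line))), sink)
    return sink


if __name__ == "__main__":
    for out in run_pipeline(["hello\n", "world\n"]):
        print(out)
```

In real Logstash, the codec is configured on the input or output plugin itself (for example `codec => rubydebug` on `stdout`), not as a separate stage in the config file.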

1. The rubydebug codec is commonly used to print events to the foreground for display and testing

```
[root@linux-node2 ~]# /usr/local/logstash/bin/logstash -e 'input { stdin{} } output { stdout{ codec => rubydebug} }'
Sending Logstash's logs to /usr/local/logstash/logs which is now configured via log4j2.properties
[2017-05-17T21:33:33,848][INFO ][logstash.pipeline ] Starting pipeline {"id"=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>5, "pipeline.max_inflight"=>250}
[2017-05-17T21:33:33,872][INFO ][logstash.pipeline ] Pipeline main started
The stdin plugin is now waiting for input:
[2017-05-17T21:33:33,908][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}
```

Note:

You can also use plain standard input and standard output directly, i.e.

[root@linux-node2 ~]# /usr/local/logstash/bin/logstash -e 'input { stdin{} } output { stdout{} }'

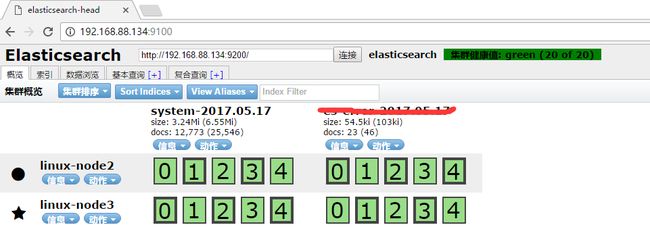

2. Send text typed on Logstash's standard input to Elasticsearch

[root@linux-node2 ~]# /usr/local/logstash/bin/logstash -e 'input { stdin{} } output { elasticsearch { hosts => ["192.168.88.134:9200"] } }'

3. Write events to Elasticsearch and also print them to the foreground for testing

[root@linux-node2 ~]# /usr/local/logstash/bin/logstash -e 'input { stdin{} } output { elasticsearch { hosts => ["192.168.88.134:9200"] } stdout{ codec => rubydebug } }'

III. Collecting logs with Logstash

1. Collect the syslog system log

[root@linux-node2 ~]# cat system.conf

```
input {
  file {
    path => "/var/log/messages"
    type => "system"
    start_position => "beginning"
  }
}

output {
  elasticsearch {
    hosts => ["192.168.88.134:9200"]
    index => "system-%{+YYYY.MM.dd}"
  }
}
```

[root@linux-node2 ~]# /usr/local/logstash/bin/logstash -f system.conf
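The `%{+YYYY.MM.dd}` part of the index name is filled in from the event's `@timestamp`, which Logstash keeps in UTC. When no `index` is set, as in the stdin-to-Elasticsearch commands in section II, the elasticsearch output falls back to `logstash-%{+YYYY.MM.dd}`. The Python sketch below uses a hypothetical helper, `index_name`, to mimic that naming. It shows that on a UTC+8 server a new daily index starts at 08:00 local time, not at midnight.

```python
from datetime import datetime, timedelta, timezone


def index_name(prefix, ts, pattern="%Y.%m.%d"):
    """Build an index name like Logstash's "<prefix>%{+YYYY.MM.dd}".

    Hypothetical helper: the date is taken from the timestamp converted
    to UTC, because that is how Logstash formats @timestamp.
    """
    return prefix + ts.astimezone(timezone.utc).strftime(pattern)


if __name__ == "__main__":
    cst = timezone(timedelta(hours=8))  # assumed server time zone (UTC+8)
    for hour in (7, 8):
        local = datetime(2017, 5, 18, hour, 0, tzinfo=cst)
        print(local.isoformat(), "->", index_name("system-", local))
```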

2. Collect the Elasticsearch log

[root@linux-node2 ~]# cat es_access.conf

```
input {
  file {
    path => "/var/log/elasticsearch/elasticsearch.log"
    type => "es-access"
    start_position => "beginning"
    stat_interval => "10"    # how often (in seconds) to check the file for new content
  }
}

output {
  if [type] == "es-access" {
    elasticsearch {
      hosts => ["192.168.88.134:9200"]
      index => "es-access-%{+YYYY.MM.dd}"
    }
  }
}
```

[root@linux-node2 ~]# /usr/local/logstash/bin/logstash -f es_access.conf
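The file input does not re-read the whole file on each check. Every `stat_interval` seconds it looks for new data and continues from the position it remembered, which it persists in its sincedb file. `start_position => "beginning"` therefore only matters for files it has never seen before. The following is a simplified Python sketch of that offset tracking. It assumes a single file that is never rotated, and `read_new_lines` is a hypothetical helper, not Logstash code.

```python
import os
import tempfile


def read_new_lines(path, offset):
    """Return (complete lines appended since `offset`, new offset).

    Hypothetical helper: a simplified stand-in for one stat_interval check.
    Starting at offset 0 corresponds to start_position => "beginning" for a
    file with no sincedb entry yet.
    """
    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()
    # Only consume complete lines; a trailing partial line waits for the next check.
    end = data.rfind(b"\n") + 1
    lines = data[:end].decode("utf-8").splitlines()
    return lines, offset + end


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        path = os.path.join(d, "elasticsearch.log")
        with open(path, "w") as f:
            f.write("first\nsecond\n")
        lines, offset = read_new_lines(path, 0)       # first check reads from the beginning
        with open(path, "a") as f:
            f.write("third\n")
        lines, offset = read_new_lines(path, offset)  # next check returns only the new line
        print(lines)
```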

3. Collect logs over TCP and output them to Redis

3.1 Write the configuration file

[root@linux-node2 ~]# cat tcp.conf

```
input {
  tcp {
    type => "tcp_port_6666"
    host => "192.168.88.134"
    port => "6666"
    mode => "server"
  }
}

output {
  redis {
    host => "192.168.88.134"
    port => "6379"
    db => "6"
    data_type => "list"
    key => "tcp_port_6666"
  }
}
```

[root@linux-node2 ~]# /usr/local/logstash/bin/logstash -f tcp.conf

3.2 Several ways to send data to port 6666:

echo "heh" |nc 192.168.88.134 6666

nc 192.168.88.134 6666 < /etc/resolv.conf

echo hehe >/dev/tcp/192.168.88.134/6666
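The `nc` and `/dev/tcp` one-liners above all do the same thing: they open a TCP connection to the Logstash `tcp` input and write newline-terminated text. A minimal Python equivalent, using a hypothetical `send_line` helper, could look like this.

```python
import socket


def send_line(host, port, text, timeout=5.0):
    """Send one newline-terminated line over TCP, like `echo "text" | nc host port`.

    Hypothetical helper for testing a Logstash tcp input.
    """
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(text.rstrip("\n").encode("utf-8") + b"\n")
```

For example, `send_line("192.168.88.134", 6666, "hehe")` targets the listener defined in tcp.conf. Each line should then show up as one event in the Redis list `tcp_port_6666` in db 6.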

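4. Collect Java logs

Elasticsearch is a Java service. When collecting its logs, you have to handle multi-line entries such as Java stack traces, which span several physical lines but belong to one event.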

[root@linux-node2 ~]# cat java.conf

```
input {
  file {
    type => "access_es"
    path => "/var/log/elasticsearch/elasticsearch.log"
    codec => multiline {
      pattern => "^\["      # a line starting with "[" begins a new event
      negate => true        # lines that do NOT match the pattern...
      what => "previous"    # ...are appended to the previous event
    }
  }
}

output {
  redis {
    host => "192.168.88.134"
    port => "6379"
    db => "6"
    data_type => "list"
    key => "access_es"
  }
}
```

[root@linux-node2 ~]# /usr/local/logstash/bin/logstash -f java.conf
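The multiline codec settings above work as follows: any line that does not start with `[` (`negate => true`) is glued onto the previous event (`what => "previous"`). The Python sketch below reproduces only that combination so the grouping is easy to check. `merge_multiline` is a hypothetical helper. Unlike the real codec, it does not wait for further input before releasing the last event. The sample log lines in the check are made up.

```python
import re


def merge_multiline(lines, pattern=r"^\[", negate=True, what="previous"):
    """Group physical lines into events the way java.conf configures the multiline codec.

    Hypothetical helper; only negate => true, what => "previous" is implemented.
    """
    if not (negate and what == "previous"):
        raise NotImplementedError("sketch only covers negate => true, what => previous")
    regex = re.compile(pattern)
    events = []
    for line in lines:
        if regex.search(line) or not events:
            events.append(line)          # a line starting with "[" begins a new event
        else:
            events[-1] += "\n" + line    # continuation line, e.g. a stack-trace frame
    return events


if __name__ == "__main__":
    sample = [  # made-up log lines
        "[2017-05-17T21:33:33,848][WARN ][o.e.example] something failed",
        "java.lang.IllegalStateException: example",
        "\tat com.example.Foo.bar(Foo.java:42)",
        "[2017-05-17T21:33:34,000][INFO ][o.e.example] next entry",
    ]
    for event in merge_multiline(sample):
        print(repr(event))
```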

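Notes:

1. Location of grok's built-in regular expression patterns

2. Alternatively, switch the Java application's log format to JSON

For Tomcat, this is done by modifying its server.xml file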

5. Collect nginx logs

5.1 Install nginx and configure it to write its access log in JSON format

```
# Add the following to the http block (adjust the log location to your environment).
# $time_iso8601 yields an ISO 8601 time that Logstash's json codec can use as @timestamp.
log_format json '{ "@timestamp": "$time_iso8601", '
                '"@fields": { '
                '"remote_addr": "$remote_addr", '
                '"remote_user": "$remote_user", '
                '"body_bytes_sent": "$body_bytes_sent", '
                '"request_time": "$request_time", '
                '"status": "$status", '
                '"request": "$request", '
                '"request_method": "$request_method", '
                '"http_referrer": "$http_referer", '
                '"http_x_forwarded_for": "$http_x_forwarded_for", '
                '"http_user_agent": "$http_user_agent" } }';

access_log /var/log/nginx/access_json.log json;
```
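The file input reads this log with `codec => "json"`, so every line must be a valid JSON object. Two things can break a line:

- A field listed twice in the log_format. A plain JSON decoder silently keeps only one of the values.
- Special characters in variables such as `$request` or `$http_user_agent`. By default nginx writes a double quote as `\x22`, which is not a valid JSON escape. nginx 1.11.8 and later offer `log_format ... escape=json`.

The sketch below parses a line and flags both problems. `parse_access_line` is a hypothetical helper, and the sample lines in the check are made up.

```python
import json


def _reject_duplicate_keys(pairs):
    # object_pairs_hook receives every key/value pair, including repeated keys
    keys = [k for k, _ in pairs]
    dupes = sorted({k for k in keys if keys.count(k) > 1})
    if dupes:
        raise ValueError(f"duplicate keys in log line: {dupes}")
    return dict(pairs)


def parse_access_line(line):
    """Parse one line of access_json.log and return its "@fields" object.

    Hypothetical helper. Raises ValueError for invalid JSON
    (json.JSONDecodeError is a subclass) or for repeated keys.
    """
    return json.loads(line, object_pairs_hook=_reject_duplicate_keys)["@fields"]
```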

5.2 Write the Logstash configuration that collects the nginx access log

[root@linux-node2 ~]# cat nginx.conf

```
input {
  file {
    type => "access_nginx"
    path => "/var/log/nginx/access_json.log"
    codec => "json"
  }
}

output {
  redis {
    host => "192.168.88.134"
    port => "6379"
    db => "6"
    data_type => "list"
    key => "access_nginx"
  }
}
```

[root@linux-node2 ~]# /usr/local/logstash/bin/logstash -f nginx.conf

6. Decoupling with a message queue

6.1 Put all the logs to be collected into one configuration file and send them to the Redis server.

[root@linux-node2 ~]# cat input_file_output_redis.conf

```
input {
  #system
  syslog {
    type => "system_rsyslog"
    host => "192.168.88.134"
    port => "514"
  }

  #java
  file {
    path => "/var/log/elasticsearch/elasticsearch.log"
    type => "error_es"
    start_position => "beginning"
    codec => multiline {
      pattern => "^\["
      negate => true
      what => "previous"
    }
  }

  #nginx
  file {
    path => "/var/log/nginx/access_json.log"
    type => "access_nginx"
    codec => "json"
    start_position => "beginning"
  }
}

output {
  # route each log type to its own Redis list
  if [type] == "system_rsyslog" {
    redis {
      host => "192.168.88.134"
      port => "6379"
      db => "6"
      data_type => "list"
      key => "system_rsyslog"
    }
  }

  if [type] == "error_es" {
    redis {
      host => "192.168.88.134"
      port => "6379"
      db => "6"
      data_type => "list"
      key => "error_es"
    }
  }

  if [type] == "access_nginx" {
    redis {
      host => "192.168.88.134"
      port => "6379"
      db => "6"
      data_type => "list"
      key => "access_nginx"
    }
  }
}
```

[root@linux-node2 ~]# /usr/local/logstash/bin/logstash -f input_file_output_redis.conf
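In this shipper configuration, each `if [type] == ...` block sends events of one type to its own Redis list. The redis output, whose default codec is json, appends each serialized event to the tail of that list. Below is a Python sketch of that routing. A dict of `deque`s stands in for Redis db 6 (an assumption of the sketch), and `route_to_lists` and `ROUTES` are hypothetical names.

```python
import json
from collections import defaultdict, deque

# type -> Redis key, mirroring the if [type] == ... blocks above (hypothetical table)
ROUTES = {
    "system_rsyslog": "system_rsyslog",
    "error_es": "error_es",
    "access_nginx": "access_nginx",
}


def route_to_lists(events, lists):
    """Append each event, JSON-encoded, to the tail of the list for its type.

    `lists` is a dict of deques standing in for Redis lists (RPUSH-like append).
    Events whose type matches no condition are not written anywhere.
    """
    for event in events:
        key = ROUTES.get(event.get("type"))
        if key is not None:
            lists[key].append(json.dumps(event))
    return lists


if __name__ == "__main__":
    queues = route_to_lists(
        [{"type": "access_nginx", "message": "GET /"}, {"type": "error_es", "message": "boom"}],
        defaultdict(deque),
    )
    print({key: len(q) for key, q in queues.items()})
```

Events whose type matches none of the conditions are not written to any list, which is also what happens with the configuration above.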

6.2 Read all the logs collected in the Redis message queue and write them into the Elasticsearch cluster.

[root@linux-node2 ~]# cat input_redis_output_es.conf

```
input {
  redis {
    type => "system_rsyslog"
    host => "192.168.88.134"
    port => "6379"
    db => "6"
    data_type => "list"
    key => "system_rsyslog"
  }

  redis {
    type => "error_es"
    host => "192.168.88.134"
    port => "6379"
    db => "6"
    data_type => "list"
    key => "error_es"
  }

  redis {
    type => "access_nginx"
    host => "192.168.88.134"
    port => "6379"
    db => "6"
    data_type => "list"
    key => "access_nginx"
  }
}

output {
  # route each log type to its own index
  if [type] == "system_rsyslog" {
    elasticsearch {
      hosts => ["192.168.88.134:9200","192.168.88.136:9200"]
      index => "system_rsyslog_%{+YYYY.MM.dd}"
    }
  }

  if [type] == "error_es" {
    elasticsearch {
      hosts => ["192.168.88.134:9200","192.168.88.136:9200"]
      index => "error_es_%{+YYYY.MM.dd}"
    }
  }

  if [type] == "access_nginx" {
    elasticsearch {
      hosts => ["192.168.88.134:9200","192.168.88.136:9200"]
      index => "access_nginx_%{+YYYY.MM.dd}"
    }
  }
}
```

[root@linux-node2 ~]# /usr/local/logstash/bin/logstash -f input_redis_output_es.conf
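On the indexer side, each redis input consumes its list from the head, so events come out in the order they were pushed. The output then picks a daily index from the event's `type` and its UTC `@timestamp`. The sketch below simulates this with `deque.popleft()`. `drain` is a hypothetical helper, and the events in the check are made up.

```python
import json
from collections import deque
from datetime import datetime, timezone


def drain(lists, keys):
    """Pop events FIFO from each list and pair them with their target index.

    Hypothetical helper. Index naming follows input_redis_output_es.conf:
    <type>_YYYY.MM.dd, with the date taken from @timestamp in UTC.
    """
    out = []
    for key in keys:
        queue = lists.get(key, deque())
        while queue:
            event = json.loads(queue.popleft())
            ts = datetime.fromisoformat(event["@timestamp"]).astimezone(timezone.utc)
            out.append((f'{event["type"]}_{ts:%Y.%m.%d}', event["message"]))
    return out
```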

7. Have Logstash write directly to Elasticsearch (suitable when the log volume is small and there is no Redis)

[root@linux-node2 ~]# cat all.conf

```
input {
  #system
  syslog {
    type => "system_rsyslog"
    host => "192.168.88.134"
    port => "514"
  }

  #java
  file {
    path => "/var/log/elasticsearch/elasticsearch.log"
    type => "error_es"
    start_position => "beginning"
    codec => multiline {
      pattern => "^\["
      negate => true
      what => "previous"
    }
  }

  #nginx
  file {
    path => "/var/log/nginx/access_json.log"
    type => "access_nginx"
    codec => "json"
    start_position => "beginning"
  }
}

output {
  # route each log type to its own index
  if [type] == "system_rsyslog" {
    elasticsearch {
      hosts => ["192.168.88.134:9200","192.168.88.136:9200"]
      index => "system_rsyslog_%{+YYYY.MM}"
    }
  }

  if [type] == "error_es" {
    elasticsearch {
      hosts => ["192.168.88.134:9200","192.168.88.136:9200"]
      index => "error_es_%{+YYYY.MM.dd}"
    }
  }

  if [type] == "access_nginx" {
    elasticsearch {
      hosts => ["192.168.88.134:9200","192.168.88.136:9200"]
      index => "access_nginx_%{+YYYY.MM.dd}"
    }
  }
}
```

[root@linux-node2 ~]# /usr/local/logstash/bin/logstash -f all.conf
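Here the system log uses a monthly index (`%{+YYYY.MM}`) while the others stay daily, following the earlier tip for small log volumes. The quick count below shows why. Over a year, a daily pattern creates 365 indices per log type, while a monthly one creates 12. The shard figures assume the Elasticsearch 5.x default of 5 primary shards per index. `count_indices` and `primary_shards` are hypothetical helpers.

```python
from datetime import date, timedelta


def count_indices(start, days, pattern):
    """Number of distinct index names one log type produces over `days` consecutive days."""
    return len({(start + timedelta(days=d)).strftime(pattern) for d in range(days)})


def primary_shards(indices, shards_per_index=5):
    # Assumption: Elasticsearch 5.x default of 5 primary shards per index.
    return indices * shards_per_index


if __name__ == "__main__":
    for label, pattern in (("daily", "%Y.%m.%d"), ("monthly", "%Y.%m")):
        n = count_indices(date(2017, 1, 1), 365, pattern)
        print(label, n, "indices,", primary_shards(n), "primary shards")
```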