Introduction to gRPC

https://docs.google.com/presentation/d/1dgI09a-_4dwBMLyqfwchvS6iXtbcISQPLAXL6gSYOcc/edit#slide=id.g1c2bc22a4a_0_0

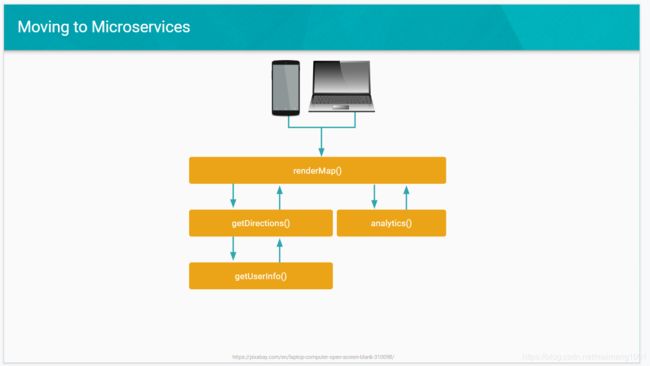

This is what your typical monolithic architecture looks like. You have a web or mobile client that directly accesses a single application. Method calls between systems are made in memory, and everything runs inside a single machine. This is easy to develop, but can be difficult to scale effectively, since everything is so tightly coupled together.

We may choose to move to a microservices architecture so that we can scale more easily, update each part in isolation, and gain other such benefits.

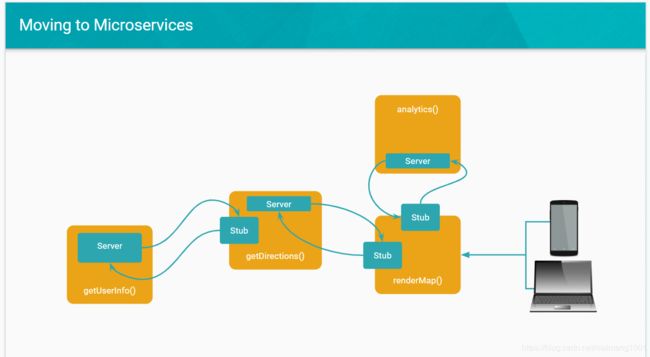

We get an architecture that looks more like this.

So while there are many strong benefits to moving to a microservices architecture, there are no silver bullets!

This means there are tradeoffs to make! (click)

Microservices give us a higher level of complexity, and there are several inherent challenges you need to take into account when moving away from a monolithic architecture.

I won’t be covering all of these topics in this talk, but gRPC gives us the tools and capabilities to combat these issues without having to roll our own solutions.

gRPC is the next generation of Stubby

gRPC is an Open Source project that comes from Google.

Google has been running a microservices architecture internally for over a decade.

Google’s internal stack relies heavily on utilising RPCs (or Remote Procedure Calls). Every application is built using many RPC services that provide different tiers of functionality.

Historically, Google has used an internal system called “Stubby” as its RPC framework to help combat some of the issues we just looked at. This gave Google a strong basis for RPC and microservices within its applications.

gRPC is the open sourcing and continued development of Stubby. This makes it available to the rest of the developer community, so you can take advantage of it for your own microservices-based applications.

So what makes gRPC so effective? Let’s look at three of its major pieces.

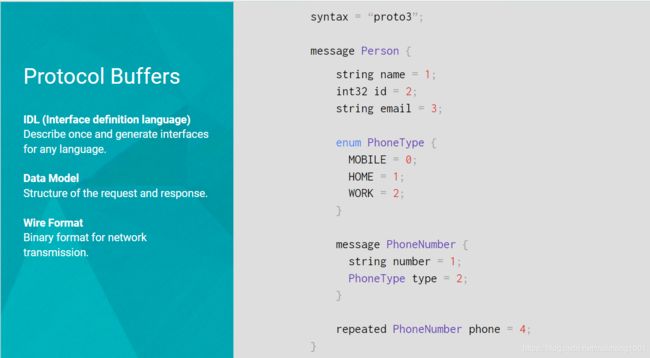

The first part of gRPC is protocol buffers, and specifically version 3 of protocol buffers.

Audience Question: Anyone used previous versions of protocol buffers?

Protocol Buffers, another open source project by Google, is a language-neutral, platform-neutral, extensible mechanism for quickly serializing structured data in a small binary packet.

By default, gRPC uses Protocol Buffers v3 for data serialisation.

When working with Protocol Buffers, we write a .proto file to define the data structures that we will be using in our RPC calls. This also tells protobuf how to serialise this data when sending it over the wire.

This results in small data packets being sent over the network, which keeps your RPC calls fast, as there is less data to transmit.

It also makes your code execute faster, as it spends less time serializing and deserializing the data that is being transmitted.
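To make the size claim concrete, here is a minimal sketch that hand-encodes a two-field Person message in the protobuf wire format and compares it with the equivalent JSON. It uses only the Python standard library rather than the real protobuf tooling, and the field numbers (`name = 1`, `id = 2`) and sample values are assumptions for illustration, not taken from the slide.

```python
import json


def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a protobuf base-128 varint."""
    if value < 0:
        raise ValueError("this sketch only handles non-negative integers")
    out = bytearray()
    while True:
        low_bits = value & 0x7F
        value >>= 7
        if value:
            out.append(low_bits | 0x80)  # high bit set: more bytes follow
        else:
            out.append(low_bits)
            return bytes(out)


def encode_tag(field_number: int, wire_type: int) -> bytes:
    """A field tag is the field number and wire type packed into one varint."""
    return encode_varint((field_number << 3) | wire_type)


def encode_person(name: str, person_id: int) -> bytes:
    # Assumed schema (hypothetical field numbers): string name = 1; int32 id = 2;
    name_bytes = name.encode("utf-8")
    return (
        encode_tag(1, 2) + encode_varint(len(name_bytes)) + name_bytes  # wire type 2: length-delimited
        + encode_tag(2, 0) + encode_varint(person_id)                   # wire type 0: varint
    )


binary = encode_person("Ada", 150)
as_json = json.dumps({"name": "Ada", "id": 150}, separators=(",", ":")).encode("utf-8")

print("protobuf bytes:", binary.hex(), f"({len(binary)} bytes)")
print("JSON bytes:    ", as_json.decode(), f"({len(as_json)} bytes)")
```

The field names never travel over the wire, only their numbers, which is a large part of why the binary form is smaller. In practice you would let the generated code do this encoding for you.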

Here you can see, we are defining a Person data structure, with a name, an id and multiple phone numbers of different types.

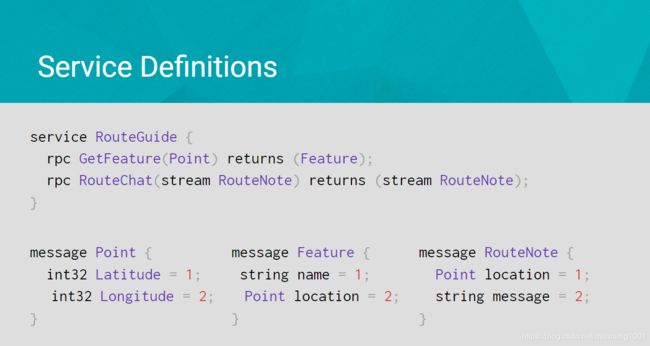

Along with the data structures, we can also define RPC functions in the services section of our .proto file.

There are several types of RPC calls available. As we can see in GetFeature, we can do the standard synchronous request/response model, but we can also do more advanced types of RPC calls, such as with RouteChat, where we can send information via bi-directional streams in a completely asynchronous way.

From these .proto files, we are able to use the gRPC tooling to generate both client-side and server-side code that handles all the technical details of the RPC invocation, data serialisation, transmission and error handling.
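The difference between those two call shapes can be sketched in plain Python, without the gRPC library at all. GetFeature and RouteChat are the names from the slide; the `Point`, `Feature` and `RouteNote` types, their fields, and the `KNOWN_FEATURES` data are hypothetical stand-ins for whatever the .proto file defines. A unary call is modelled as an ordinary function, and a bi-directional stream as a generator that consumes one stream while producing another.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass(frozen=True)
class Point:
    latitude: int
    longitude: int


@dataclass(frozen=True)
class Feature:
    name: str
    location: Point


@dataclass(frozen=True)
class RouteNote:
    location: Point
    message: str


# Hypothetical data standing in for a real feature database.
KNOWN_FEATURES = {Point(1, 2): "Hypothetical Landmark"}


def get_feature(request: Point) -> Feature:
    """Unary shape: one request in, one response out."""
    return Feature(name=KNOWN_FEATURES.get(request, ""), location=request)


def route_chat(requests: Iterable[RouteNote]) -> Iterator[RouteNote]:
    """Bi-directional streaming shape: read notes as they arrive, yield replies as we go."""
    seen = {}
    for note in requests:
        # Reply with every earlier message left at the same location.
        for earlier in seen.get(note.location, []):
            yield RouteNote(note.location, earlier)
        seen.setdefault(note.location, []).append(note.message)


here = Point(0, 0)
incoming = (RouteNote(here, text) for text in ["first", "second", "third"])
replies = list(route_chat(incoming))

print(get_feature(Point(1, 2)))
print([reply.message for reply in replies])
```

Because `route_chat` is a generator, it never needs the whole request stream up front, which is the property that makes streaming RPCs feel asynchronous. The generated gRPC code adds the networking around methods of roughly this shape.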

This means we can focus on the logic of our application rather than worry about these implementation details.
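As one small example of the detail the generated code hides, gRPC over HTTP/2 sends each serialised message with a five-byte prefix: a one-byte compression flag followed by a four-byte big-endian length. The sketch below shows only that framing step, using the standard library; the function names are hypothetical and this is not how you would call a real gRPC library.

```python
import struct

PREFIX = struct.Struct(">BI")  # 1-byte compressed flag, 4-byte big-endian length


def frame_message(payload: bytes, compressed: bool = False) -> bytes:
    """Prefix a serialised message the way gRPC does on the wire."""
    return PREFIX.pack(1 if compressed else 0, len(payload)) + payload


def unframe_messages(buffer: bytes) -> list:
    """Split a buffer of back-to-back framed messages into (compressed, payload) pairs."""
    messages = []
    offset = 0
    while offset < len(buffer):
        if len(buffer) - offset < PREFIX.size:
            raise ValueError("truncated message prefix")
        compressed_flag, length = PREFIX.unpack_from(buffer, offset)
        offset += PREFIX.size
        if len(buffer) - offset < length:
            raise ValueError("truncated message body")
        messages.append((bool(compressed_flag), buffer[offset:offset + length]))
        offset += length
    return messages


stream = frame_message(b"hello") + frame_message(b"world")
print(unframe_messages(stream))
```

The length prefix is what lets several messages share one stream, so a streaming RPC can send many messages without opening anything new.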

The next part is that gRPC uses HTTP/2.

Audience Question: So who here has used HTTP/2?

Trick question - if you’ve ever used Google.com, you’ve used HTTP/2!

So why does gRPC use HTTP/2?

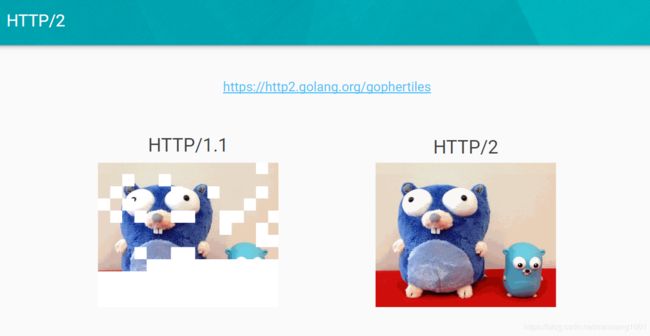

https://http2.golang.org/gophertiles

https://blog.golang.org/go-programming-language-turns-two_gophers.jpg

The first advantage HTTP/2 gives you over HTTP/1.x is speed.

Here is a demo that you can try for yourself, right now (or any time that you like).

We are downloading an image of a Gopher, which has been cut into a multitude of tiles - you can see on the left the connection to the HTTP/1.1 server. On the right we can see the same set of images displayed almost instantly. This is the power of HTTP/2.

HTTP/2 gives us multiplexing. Multiple gRPC calls can therefore share a single TCP connection, interleaved with one another, rather than opening extra connections or waiting in line behind earlier requests as HTTP/1.x typically requires. This removes a huge overhead from traditional HTTP forms of communication.

HTTP/2 also has bi-directional streaming built in. This means gRPC can send asynchronous, non-blocking data up and down, such as the RPC example we saw earlier, without having to resort to much slower techniques like HTTP long polling.
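Multiplexing works because every HTTP/2 frame carries a stream identifier in its nine-byte header, so frames from different calls can be interleaved on one connection and reassembled at the other end. Here is a simplified sketch of that idea using only DATA frames. It leaves out the connection preface, HEADERS and SETTINGS frames, and flow control that a real implementation needs, and the payloads are made up.

```python
import struct

DATA = 0x0        # frame type for DATA frames
END_STREAM = 0x1  # flag marking the last frame of a stream


def build_frame(stream_id: int, payload: bytes, flags: int = 0, frame_type: int = DATA) -> bytes:
    """Build a frame: 24-bit length, 8-bit type, 8-bit flags, 31-bit stream id, payload."""
    header = len(payload).to_bytes(3, "big")
    header += struct.pack(">BBI", frame_type, flags, stream_id & 0x7FFFFFFF)
    return header + payload


def demultiplex(connection_bytes: bytes) -> dict:
    """Reassemble each stream's DATA payload from interleaved frames."""
    streams = {}
    offset = 0
    while offset < len(connection_bytes):
        length = int.from_bytes(connection_bytes[offset:offset + 3], "big")
        frame_type, _flags, stream_id = struct.unpack_from(">BBI", connection_bytes, offset + 3)
        stream_id &= 0x7FFFFFFF  # the top bit is reserved
        offset += 9
        payload = connection_bytes[offset:offset + length]
        offset += length
        if frame_type == DATA:
            streams.setdefault(stream_id, bytearray()).extend(payload)
    return {sid: bytes(buf) for sid, buf in streams.items()}


# Two calls (client-initiated streams use odd ids) interleaved on one connection.
wire = b"".join([
    build_frame(1, b"hel"),
    build_frame(3, b"wor"),
    build_frame(1, b"lo", END_STREAM),
    build_frame(3, b"ld", END_STREAM),
])
print(demultiplex(wire))
```

Neither call has to wait for the other to finish, and no second connection is ever opened.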

A gRPC client can send data to the server and the gRPC server can send data to the client in a completely asynchronous manner.

This means that doing real time, streaming communication over a microservices architecture is exceptionally easy to implement in our applications.

Security is always a huge concern with any application, and microservices architectures are no exception. HTTP/2 and gRPC work over HTTPS, so we can use the battle-tested security of TLS to ensure that our RPC calls are also secure.
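As a rough illustration of what "HTTP/2 over TLS" involves on the client side, the sketch below builds a TLS context with Python's standard `ssl` module: certificates and hostnames are verified, TLS 1.2 is the minimum version, and the client offers `h2` through ALPN so the server can agree to speak HTTP/2. The helper name is hypothetical, and real gRPC libraries expose their own credentials APIs rather than asking you to do this by hand.

```python
import ssl


def make_h2_tls_context() -> ssl.SSLContext:
    """Build a client-side TLS context suitable for negotiating HTTP/2."""
    context = ssl.create_default_context()  # verifies certificates and hostnames by default
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # HTTP/2 over TLS needs TLS 1.2 or newer
    if ssl.HAS_ALPN:
        # Offer HTTP/2 during the TLS handshake.
        context.set_alpn_protocols(["h2"])
    return context


context = make_h2_tls_context()
print("certificates verified:", context.verify_mode == ssl.CERT_REQUIRED)
print("hostname checked:     ", context.check_hostname)
```

No connection is made here; the context only describes how a later connection would be secured.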

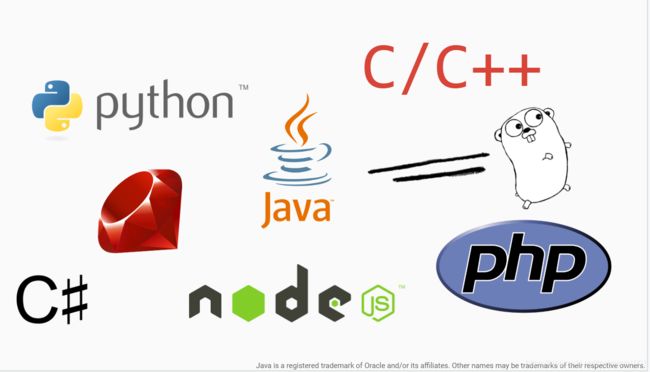

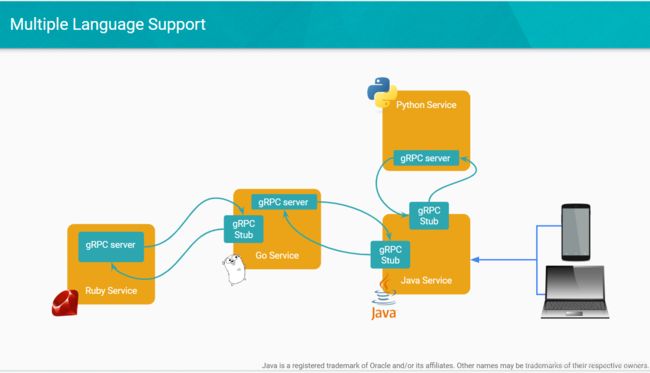

One of the big advantages of a microservices architecture is using different languages for different services. gRPC works across multiple languages and platforms. This includes not just traditional clients and servers, but mobile phones and embedded devices as well!

Currently, there is support for 10 languages:

- Go
- Ruby
- PHP
- Java / Android
- C
- C++
- C#
- Objective-C / iOS
- Python
- Node.js

The best part is that gRPC is not just a library, but tooling as well. Once you have your .proto file defined, you can generate client and server stubs for all of these languages, allowing your RPC services to use a single API no matter what language they are written in!

This means that you can choose the right tool for the job when building your microservices - you aren’t locked into just one language or platform.

gRPC will generate client and server stubs that are written idiomatically for the language you want to use, and also take care of the serialisation and deserialisation of data in a way that your language of choice will understand!

There’s no need to worry about transport protocols or how data should be sent over the wire. All this is simply handled for you, and you can focus instead on the logic of your services as you write them.

While Stubby originated at Google, gRPC is a true community effort: a wide variety of well-known companies, startups and projects use gRPC for their microservices needs.

Want to learn more?

Documentation and Code

- http://www.grpc.io/
- https://github.com/grpc
- https://github.com/grpc-ecosystem

Help and Support

- https://gitter.im/grpc/grpc
- https://groups.google.com/forum/#!forum/grpc-io

Finally, if you are interested in learning more about gRPC, there are links above for documentation, how-to guides, and sample applications.

If you need help and support there is an active Gitter chat and Google Group as well.

Thanks so much for taking the time to listen to me. I'll now open the floor to questions.