python - Testing various HTTP clients with Locust

Max.Bai

2019-08

Table of Contents

python - Testing various HTTP clients with Locust

0x00 Preface

0x01 Locust's built-in client

0x02 http.client

0x03 geventhttpclient

0x04 Urllib3

0x05 go net/http

0x06 go fasthttp

0x07 JMeter

0x08 Results

0x00 Preface

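Locust is a distributed performance testing tool written in Python. Load tests for all kinds of protocols can be scripted in Python; see the official site https://locust.io/ for details.

After using it for a while, you will notice that Locust is a good choice when you need high concurrency, but when you need high throughput it often cannot generate enough load on limited hardware.

The goal of this test is to see how various HTTP clients perform when used inside Locust.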

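The clients covered are:

python http.client

python geventhttpclient

python urllib3

go net/http

go fasthttp

java JMeter

The server under test is Nginx on a 4-CPU machine.

The client is a 4-CPU CentOS 6.5 machine.

Server-side resource usage was not recorded in the tests below, mainly because the server never reached its limit: the client hit its bottleneck first and the network was choked.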

0x01 Locust's built-in client

Locust's built-in client is requests. It is feature-rich, but its performance is only average.

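Code:

    #!/usr/bin/env python
    # coding: utf-8
    # Written against the Locust 0.x API (HttpLocust, min_wait/max_wait).
    from locust import HttpLocust, TaskSet, events, task


    class WebsiteUser(HttpLocust):
        host = "http://200.200.200.230"
        # No wait between tasks: each user sends requests back to back.
        min_wait = 0
        max_wait = 0

        class task_set(TaskSet):
            @task
            def index(self):
                # self.client is Locust's requests-based session.
                self.client.get('/')


    if __name__ == '__main__':
        user = WebsiteUser()
        user.run()

10 users, 1 slave: client CPU usage at 100% during the run

30 users, 2 slaves: client CPU usage at 100% during the run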

0x02 http.client

http.client from the Python 3 standard library is awkward to use, but its performance is noticeably better than requests.

Code:

    #!/usr/bin/env python
    # coding: utf-8
    import time
    import http.client

    from locust import HttpLocust, TaskSet, events, task


    class WebsiteUser(HttpLocust):
        host = "200.200.200.231"
        min_wait = 0
        max_wait = 0

        class task_set(TaskSet):
            def on_start(self):
                # One persistent connection per simulated user.
                self.conn = http.client.HTTPConnection("200.200.200.230")

            def teardown(self):
                self.conn.close()
                print('close')

            @task
            def index(self):
                method = "GET"
                try:
                    start = int(time.time() * 1000)
                    self.conn.request(method, '/')
                    response = self.conn.getresponse()
                    # The body must be read before the connection can be reused.
                    data = response.read()
                    res_time = int(time.time() * 1000) - start
                    # Report the result to Locust by hand, since self.client is bypassed.
                    if response.status == 200:
                        events.request_success.fire(request_type=method, name="scene", response_time=res_time, response_length=len(data))
                    else:
                        events.request_failure.fire(request_type=method, name="scene", response_time=res_time, exception="report failed:{}".format(response.status))
                except Exception as e:
                    events.request_failure.fire(request_type=method, name="scene", response_time=10 * 1000, exception="report failed:{}".format(str(e)))
                    self.interrupt()


    if __name__ == '__main__':
        user = WebsiteUser()
        user.run()

10 users, 1 slave: client CPU usage at 100% during the run

40 users, 4 slaves: client CPU usage at 100% during the run
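For readers who have not used http.client directly, here is a minimal sketch of the call sequence the script above relies on (request, getresponse, read), run outside Locust. It is only an illustration: the local `HTTPServer`, the `_Hello` handler and the `fetch_twice` function are made-up stand-ins for the Nginx server and are not part of the original test.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class _Hello(BaseHTTPRequestHandler):
    """Throwaway handler standing in for the Nginx server (assumption)."""
    protocol_version = "HTTP/1.1"  # enables keep-alive so one connection is reused

    def do_GET(self):
        body = b"hello"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo quiet


def fetch_twice():
    """Send two GETs over one persistent connection and return (status, body) pairs."""
    server = HTTPServer(("127.0.0.1", 0), _Hello)  # port 0: let the OS pick a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    conn = http.client.HTTPConnection("127.0.0.1", server.server_port)
    results = []
    try:
        for _ in range(2):
            conn.request("GET", "/")
            response = conn.getresponse()
            # The body has to be read fully before the next request is sent.
            results.append((response.status, response.read()))
    finally:
        conn.close()
        server.shutdown()
        server.server_close()
    return results


if __name__ == "__main__":
    print(fetch_twice())
```

Having to drive the connection step by step like this is probably what makes http.client feel awkward next to requests, but it also means very little work is done per request.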

0x03 geventhttpclient

Locust also provides geventhttpclient: https://github.com/locustio/geventhttpclient

This client performs really well, mainly because its low-level layer is implemented in C.

Code:

    #!/usr/bin/env python
    # coding: utf-8
    import time

    from locust import HttpLocust, TaskSet, events, task
    from locust.exception import LocustError
    from geventhttpclient import HTTPClient
    from geventhttpclient.url import URL


    class WebsiteUser(HttpLocust):
        host = "200.200.200.231"
        min_wait = 0
        max_wait = 0

        class task_set(TaskSet):
            min_wait = 0
            max_wait = 0

            def on_start(self):
                url = URL('http://200.200.200.230/')
                self.conn = HTTPClient(url.host)

            def teardown(self):
                self.conn.close()
                print('close')

            @task
            def index(self):
                method = "GET"
                try:
                    start = int(time.time() * 1000)
                    response = self.conn.get('/')
                    data = response.read()
                    res_time = int(time.time() * 1000) - start
                    # Report the result to Locust by hand, since self.client is bypassed.
                    if response.status_code == 200:
                        events.request_success.fire(request_type=method, name="scene", response_time=res_time, response_length=len(data))
                    else:
                        events.request_failure.fire(request_type=method, name="scene", response_time=res_time, exception="report failed:{}".format(response.status_code))
                except Exception as e:
                    events.request_failure.fire(request_type=method, name="scene", response_time=10 * 1000, exception="report failed:{}".format(str(e)))
                    raise LocustError


    if __name__ == '__main__':
        user = WebsiteUser()
        user.run()

10 users, 1 slave: client CPU usage at 100% during the run

30 users, 2 slaves: client CPU usage at 100% during the run

0x04 Urllib3

There is also the urllib3 package for Python 3, which provides an HTTP client as well.

Code:

    #!/usr/bin/env python
    # coding: utf-8
    import time

    from locust import HttpLocust, TaskSet, events, task
    import urllib3


    class WebsiteUser(HttpLocust):
        host = "200.200.200.231"
        min_wait = 0
        max_wait = 0

        class task_set(TaskSet):
            def on_start(self):
                # PoolManager keeps and reuses connections per host.
                self.conn = urllib3.PoolManager()

            @task
            def index(self):
                url = "http://200.200.200.230/"
                method = "GET"
                try:
                    start = int(time.time() * 1000)
                    response = self.conn.request(method, url)
                    res_time = int(time.time() * 1000) - start
                    # Report the result to Locust by hand, since self.client is bypassed.
                    if response.status == 200:
                        events.request_success.fire(request_type=method, name="scene", response_time=res_time, response_length=len(response.data))
                    else:
                        events.request_failure.fire(request_type=method, name="scene", response_time=res_time, exception="report failed:{}".format(response.status))
                except Exception as e:
                    events.request_failure.fire(request_type=method, name="scene", response_time=10 * 1000, exception="report failed:{}".format(str(e)))
                    self.interrupt()


    if __name__ == '__main__':
        user = WebsiteUser()
        user.run()

30 users, 2 slaves: client CPU usage at 100% during the run
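The three hand-written scripts above (http.client, geventhttpclient, urllib3) all repeat the same pattern: time the call, then report success or failure to Locust. As a rough sketch, that pattern could be pulled into one helper. The name `timed_call` and its callback parameters are hypothetical; in a real Locust 0.x script the callbacks would be `events.request_success.fire` and `events.request_failure.fire`. Unlike the scripts above, which report a fixed 10 seconds on an exception, this sketch reports the actual elapsed time.

```python
import time


def timed_call(call, on_success, on_failure, request_type="GET", name="scene"):
    """Run call(), measure elapsed milliseconds, and report the outcome.

    call must return a (status, body) tuple. on_success and on_failure are
    hypothetical stand-ins for Locust's request_success / request_failure hooks.
    Returns True when the request counted as a success.
    """
    start = time.perf_counter()
    try:
        status, body = call()
    except Exception as exc:
        elapsed_ms = int((time.perf_counter() - start) * 1000)
        on_failure(request_type=request_type, name=name,
                   response_time=elapsed_ms, exception=exc)
        return False
    elapsed_ms = int((time.perf_counter() - start) * 1000)
    if status == 200:
        on_success(request_type=request_type, name=name,
                   response_time=elapsed_ms, response_length=len(body))
        return True
    on_failure(request_type=request_type, name=name,
               response_time=elapsed_ms,
               exception="unexpected status: {}".format(status))
    return False


if __name__ == "__main__":
    # Stand-in callbacks that just print what Locust would receive.
    timed_call(lambda: (200, b"ok"), print_ok := (lambda **kw: print("success", kw)),
               lambda **kw: print("failure", kw))
```

With such a helper, each task body would shrink to a single call that wraps the client-specific request, which makes it easier to swap clients while keeping the reporting identical.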

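0x05 go net/http

Someone has implemented a Locust slave in Go. Its HTTP client uses net/http, and the script used here is the sample provided by that project.

https://github.com/myzhan/boomer

10 users, 1 slave: client CPU usage at 75% during the run

30 users, 3 slaves: client CPU usage at 60% during the run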

0x06 go fasthttp

fasthttp is an even faster client than net/http, and the same project provides a demo for it too.

https://github.com/myzhan/boomer

10 users, 1 slave: client CPU usage at 45% during the run

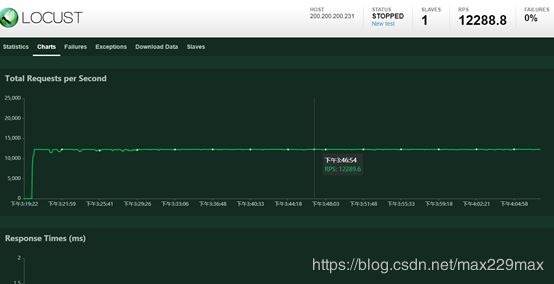

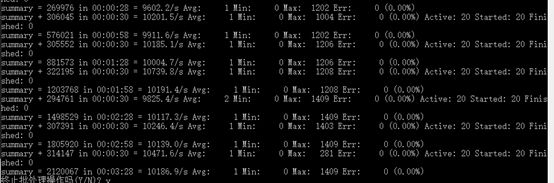

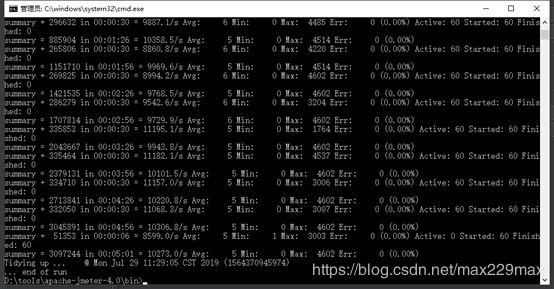

0x07 JMeter

JMeter is a Java load-testing tool. I also ran a simple test with it.

JMeter with 20 threads

JMeter with 60 threads

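0x08 Results

The comparison is fairly rough, but it gives a basic picture of how each client performs.

go fasthttp > go net/http > python geventhttpclient > python http.client > python urllib3

Of course, whether to pick a Go library or a Python one depends on how well you know each language and on the resources you have at hand.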