6. CI/CD of the Business System on K8S

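Migrating the business system to run in k8s is not enough: it should also be integrated and deployed continuously, i.e. go through a CI/CD process, and that process must be fully automated.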

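Using k8s for CI/CD has the following advantages:

1. Stable environments
2. No service interruption
3. Build once, run in multiple environments

This article implements automated integration and deployment on a k8s cluster with K8S + Gitlab + Gitlab CI.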

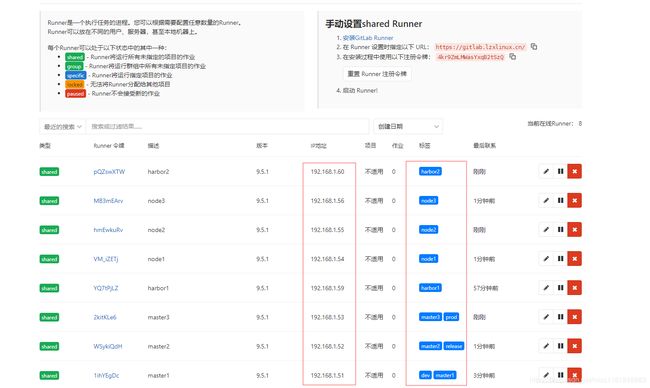

The environment is as follows:

| IP | Role | Hostname | Domain |
|---|---|---|---|
| 192.168.1.51 | k8s master1 | master1 | none |
| 192.168.1.52 | k8s master2 | master2 | none |
| 192.168.1.53 | k8s master3 | master3 | none |
| 192.168.1.54 | k8s node1 | node1 | none |
| 192.168.1.55 | k8s node2 | node2 | none |
| 192.168.1.56 | k8s node3 | node3 | none |
| 192.168.1.57 | gitlab server | gitlab | gitlab.lzxlinux.cn |
| 192.168.1.59 | harbor server | harbor1 | hub.lzxlinux.cn |
| 192.168.1.60 | harbor server | harbor2 | harbor.lzxlinux.cn |

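Setting up the Gitlab server

For setting up the Gitlab server, see Docker + Gitlab + Gitlab CI (Part 1); the setup process is omitted here.

Unless a node is specified, run each operation on all nodes. Also, delete all images of the kubernetes project in the harbor registry hub.lzxlinux.cn, keeping only the base images that the Dockerfiles need.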

- Add local DNS entries:

```
# echo '54.153.54.194 packages.gitlab.com' >> /etc/hosts
# echo '192.168.1.57 gitlab.lzxlinux.cn' >> /etc/hosts
```
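Appending with `echo ... >>` adds a duplicate line every time the step is re-run. A minimal sketch of an idempotent alternative, assuming a hypothetical helper named `add_host` and treating an entry as present when a line with the same IP already lists the hostname:

```shell
#!/usr/bin/env bash
# add_host IP NAME [FILE]: append "IP NAME" to FILE (default /etc/hosts)
# unless a line starting with that IP already lists NAME. Hypothetical helper.
add_host() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # awk exits 0 if the pair is found, 1 otherwise (2 if FILE does not exist).
  if ! awk -v ip="$ip" -v name="$name" '
      $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
      END { exit !found }' "$file" 2>/dev/null; then
    echo "$ip $name" >> "$file"
  fi
}

# On each node, as root:
#   add_host 54.153.54.194 packages.gitlab.com
#   add_host 192.168.1.57 gitlab.lzxlinux.cn
```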

- Register the runners:

The master nodes of the k8s cluster need to register runners, and so does the harbor server. The runners use the shell executor. master1 is used as the example below:

```
# curl -L https://packages.gitlab.com/install/repositories/runner/gitlab-ci-multi-runner/script.rpm.sh | sudo bash
# yum install -y gitlab-ci-multi-runner
# gitlab-ci-multi-runner status
gitlab-runner: Service is running!
# usermod -aG docker gitlab-runner
# systemctl restart docker
# gitlab-ci-multi-runner restart
# mkdir -p /etc/gitlab/ssl
# scp [email protected]:/etc/gitlab/ssl/gitlab.lzxlinux.cn.crt /etc/gitlab/ssl
# gitlab-ci-multi-runner register \
  --tls-ca-file=/etc/gitlab/ssl/gitlab.lzxlinux.cn.crt \
  --url "https://gitlab.lzxlinux.cn/" \
  --registration-token "4kr9ZmLMWasYxqB2tSzQ" \
  --name "master1" \
  --tag-list "dev, master1" \
  --run-untagged="false" \
  --locked="false" \
  --executor "shell"
# gitlab-ci-multi-runner list
Listing configured runners                          ConfigFile=/etc/gitlab-runner/config.toml
master1                                             Executor=shell Token=1ihYEgDcnaqqXuiygHhn URL=https://gitlab.lzxlinux.cn/
```
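The same registration is repeated on master2, master3 and the harbor server, changing only `--name` and `--tag-list`. Only master1's tags are given above; the tags for the other hosts below are an assumption inferred from the `tags:` entries (`dev`, `release`, `prod`, `harbor1`) in the .gitlab-ci.yml files later in this article. The sketch only prints the commands rather than running them, and `REG_TOKEN` is a placeholder for the project's registration token:

```shell
#!/usr/bin/env bash
# Print (not run) the runner registration command for each host.
# ASSUMPTION: tags for master2, master3 and harbor1 are inferred from the
# "tags:" entries in .gitlab-ci.yml; only master1's tags appear in the article.
register_cmd() {
  local name="$1" tags="$2" token="${3:-REG_TOKEN}"   # REG_TOKEN: placeholder
  # Each argument becomes one line ending in " \" (shell line continuation).
  printf '%s \\\n' \
    "gitlab-ci-multi-runner register" \
    "  --tls-ca-file=/etc/gitlab/ssl/gitlab.lzxlinux.cn.crt" \
    "  --url \"https://gitlab.lzxlinux.cn/\"" \
    "  --registration-token \"${token}\"" \
    "  --name \"${name}\"" \
    "  --tag-list \"${tags}\"" \
    "  --run-untagged=\"false\"" \
    "  --locked=\"false\""
  printf '  --executor "shell"\n'
}

declare -A RUNNER_TAGS=(
  [master1]="dev, master1"
  [master2]="release, master2"
  [master3]="prod, master3"
  [harbor1]="harbor1"
)

for host in master1 master2 master3 harbor1; do
  echo "# on ${host}:"
  register_cmd "$host" "${RUNNER_TAGS[$host]}"
done
```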

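- Grant permissions:

Deploying a project to the k8s cluster requires running `kubectl apply -f filename.yaml` as root.

Therefore, on all 3 master nodes, the gitlab-runner user must be allowed to run this command as root. Add the following line with visudo (note that this rule actually allows passwordless sudo for every command, not only kubectl):

```
# visudo
gitlab-runner ALL=(ALL) NOPASSWD: ALL
```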
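If passwordless root for every command is broader than you want, a narrower rule is possible. A minimal sketch, assuming kubectl lives at /usr/bin/kubectl (check with `command -v kubectl`), that /etc/sudoers.d is included by the main sudoers file, and that the pipeline scripts are changed from `sudo su root -c "kubectl apply -f ..."` to `sudo /usr/bin/kubectl apply -f ...`, because a kubectl-only rule no longer permits `su`. kubectl must also still find root's kubeconfig under sudo; if it does not, pass `--kubeconfig` explicitly. The helper name is hypothetical:

```shell
#!/usr/bin/env bash
# sudoers_rule USER CMD: print a sudoers line that lets USER run only CMD
# as root without a password.
sudoers_rule() {
  local user="$1" cmd="$2"
  case "$cmd" in
    /*) ;;                                             # sudoers needs an absolute path
    *) echo "not an absolute path: $cmd" >&2; return 1 ;;
  esac
  printf '%s ALL=(root) NOPASSWD: %s\n' "$user" "$cmd"
}

# Install as a drop-in after validating it (as root, on each master):
#   sudoers_rule gitlab-runner /usr/bin/kubectl > /tmp/gitlab-runner
#   visudo -cf /tmp/gitlab-runner && install -m 0440 /tmp/gitlab-runner /etc/sudoers.d/gitlab-runner
```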

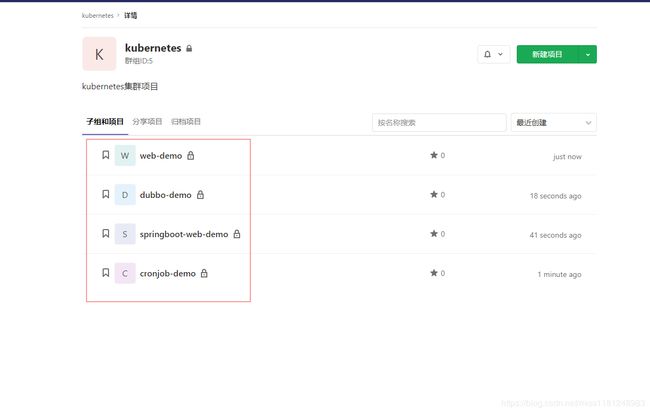

- Create the projects:

Create a group named kubernetes, and under it create 4 projects: cronjob-demo, springboot-web-demo, dubbo-demo and web-demo:

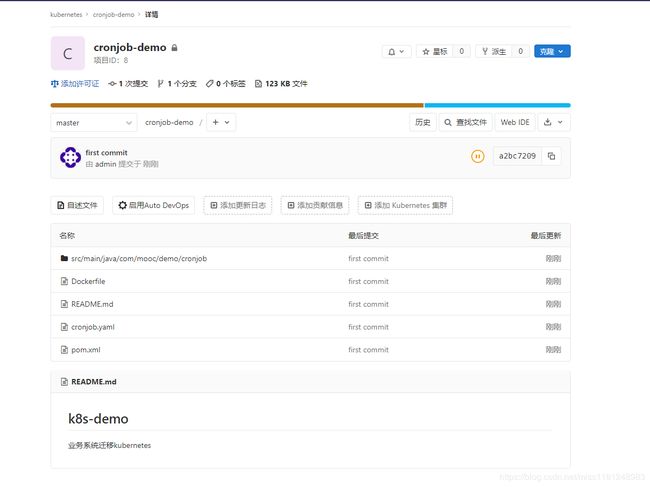

- Push the code to gitlab (harbor1):

Taking the cronjob-demo project as an example:

```
# cd /software
# git config --global user.name "admin"
# git config --global user.email "[email protected]"
# git -c http.sslVerify=false clone https://gitlab.lzxlinux.cn/kubernetes/cronjob-demo.git
# mv mooc-k8s-demo-docker/cronjob-demo/* cronjob-demo/
# cp mooc-k8s-demo-docker/README.md cronjob-demo/
# cd cronjob-demo/
# tree -L 2
.
├── cronjob.yaml
├── Dockerfile
├── pom.xml
├── README.md
└── src
    └── main

2 directories, 4 files
# git add .
# git commit -m 'first commit'
# git -c http.sslVerify=false push origin master
```

Prepare the code of the remaining projects in the same way.

- Create secrets for the harbor registries (master1):

```
# kubectl create secret docker-registry hub-secret --docker-server=hub.lzxlinux.cn --docker-username=admin --docker-password=Harbor12345
secret/hub-secret created
# kubectl create secret docker-registry harbor-secret --docker-server=harbor.lzxlinux.cn --docker-username=admin --docker-password=Harbor12345
secret/harbor-secret created
# kubectl get secret
NAME                  TYPE                                  DATA   AGE
default-token-f758k   kubernetes.io/service-account-token   3      9d
harbor-secret         kubernetes.io/dockerconfigjson        1      10s
hub-secret            kubernetes.io/dockerconfigjson        1      21s
```

With these secrets, the deployment yaml files can later reference them (imagePullSecrets) to log in to the harbor registries.
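`kubectl create secret docker-registry` stores the credentials as a `.dockerconfigjson` entry. The sketch below rebuilds that JSON by hand to show what ends up in the secret: the `auth` field is just base64 of `user:password`, so the secret is encoded, not encrypted. The function name is hypothetical, the exact set of fields kubectl writes may differ between versions, and `base64 -w0` assumes GNU coreutils:

```shell
#!/usr/bin/env bash
# dockerconfigjson SERVER USER PASS: print a .dockerconfigjson document for one registry.
dockerconfigjson() {
  local server="$1" user="$2" pass="$3" auth
  # printf avoids a trailing newline; -w0 disables base64 line wrapping.
  auth=$(printf '%s:%s' "$user" "$pass" | base64 -w0)
  printf '{"auths":{"%s":{"username":"%s","password":"%s","auth":"%s"}}}\n' \
    "$server" "$user" "$pass" "$auth"
}

# Compare with what is stored in the cluster (on master1):
#   kubectl get secret hub-secret -o jsonpath='{.data.\.dockerconfigjson}' | base64 -d
```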

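- Settings for all projects:

Settings → Repository → Protected Branches: for the master branch, set Allowed to push to No one, so that code only reaches master through merge requests.

If a project has code-style checks and unit tests, also go to Settings → General → Merge requests and enable "Pipelines must succeed"; we do not set this here.

Next, we demonstrate deploying each project to k8s in the dev (test) → release (pre-release) → production environments in turn.

Since there is only one k8s cluster here, all projects are actually deployed into the same cluster; the 3 master nodes stand in for the 3 environments, and each deploys the projects for its own environment.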

CI/CD of the Java scheduled task (CronJob)

- Set the project variables:

Settings → CI/CD → Variables:
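The variable values themselves are not reproduced here. Judging from the .gitlab-ci.yml files and outputs in this article, the pipelines read HARBOR_USER and HARBOR_PASS (all projects), TAG (the release suffix; the release resources show up as `*-release`), R_DOMAIN/P_DOMAIN (springboot-web-demo and web-demo) and R_PORT/P_PORT (dubbo-demo); this list is inferred, not copied from the settings page. An unset variable expands to an empty string in the shell runner and causes confusing failures further down (e.g. an image reference ending in `cronjob:`). A minimal fail-fast guard with a hypothetical name:

```shell
#!/usr/bin/env bash
# require_vars NAME... : fail if any named CI/CD variable is unset or empty.
require_vars() {
  local missing=0 name
  for name in "$@"; do
    # ${!name} is bash indirect expansion: the value of the variable named $name.
    if [ -z "${!name:-}" ]; then
      echo "missing CI/CD variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage inside a job's script, after defining the function:
#   require_vars TAG HARBOR_USER HARBOR_PASS
```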

- Modify the code (harbor1):

Create a new dev branch in GitLab, then clone the code to the local host.

```
# cd /home
# git config --global user.name "admin"
# git config --global user.email "[email protected]"
# git -c http.sslVerify=false clone https://gitlab.lzxlinux.cn/kubernetes/cronjob-demo.git
# cd cronjob-demo/
# git checkout dev
# ls
cronjob.yaml  Dockerfile  pom.xml  README.md  src
# vim src/main/java/com/mooc/demo/cronjob/Main.java
```

```java
package com.mooc.demo.cronjob;

import java.util.Random;

/**
 * Created by Michael on 2018/9/29.
 */
public class Main {

    public static void main(String args[]) {
        Random r = new Random();
        int time = r.nextInt(20)+10;
        System.out.println("I will working for "+time+" seconds! It's very good!");
        try{
            Thread.sleep(time*1000);
        }catch (Exception e) {
            e.printStackTrace();
        }
        System.out.println("All work is done! This is the first k8s ci/cd!");
    }
}
```

```
# vim cronjob.yaml
```

```yaml
apiVersion: batch/v1beta1
kind: CronJob
metadata:
  name: cronjob-demo
spec:
  schedule: "*/1 * * * *"
  successfulJobsHistoryLimit: 3
  suspend: false
  concurrencyPolicy: Forbid
  failedJobsHistoryLimit: 1
  jobTemplate:
    spec:
      template:
        metadata:
          labels:
            app: cronjob-demo
        spec:
          restartPolicy: Never
          containers:
          - name: cronjob-demo
            image: hub.lzxlinux.cn/kubernetes/cronjob:latest
            imagePullPolicy: Always
          imagePullSecrets:
          - name: hub-secret
```

```
# vim .gitlab-ci.yml
```

```yaml
stages:
  - login
  - build
  - dev
  - release
  - prod

docker-login:
  stage: login
  script:
    - docker login hub.lzxlinux.cn -u $HARBOR_USER -p $HARBOR_PASS
    - docker image prune -f
  tags:
    - harbor1
  only:
    - master

docker-build1:
  stage: build
  script:
    - mvn clean package
    - docker build -t hub.lzxlinux.cn/kubernetes/cronjob:latest .
    - docker push hub.lzxlinux.cn/kubernetes/cronjob:latest
  tags:
    - harbor1
  only:
    - master

docker-build2:
  stage: build
  script:
    - mvn clean package
    - docker build -t hub.lzxlinux.cn/kubernetes/cronjob:$TAG .
    - docker push hub.lzxlinux.cn/kubernetes/cronjob:$TAG
  when: manual
  tags:
    - harbor1
  only:
    - master

docker-build:
  stage: build
  script:
    - mvn clean package
    - docker build -t hub.lzxlinux.cn/kubernetes/cronjob:$CI_COMMIT_TAG .
    - docker push hub.lzxlinux.cn/kubernetes/cronjob:$CI_COMMIT_TAG
  tags:
    - harbor1
  only:
    - tags

k8s-dev:
  stage: dev
  script:
    - sudo su root -c "kubectl apply -f cronjob.yaml"
  tags:
    - dev
  only:
    - master

k8s-release:
  stage: release
  script:
    - cp -f cronjob.yaml cronjob-$TAG.yaml
    - sed -i "s#cronjob-demo#cronjob-$TAG#g" cronjob-$TAG.yaml
    - sed -i "s#hub.lzxlinux.cn/kubernetes/cronjob:latest#hub.lzxlinux.cn/kubernetes/cronjob:$TAG#g" cronjob-$TAG.yaml
    - sudo su root -c "kubectl apply -f cronjob-$TAG.yaml"
  after_script:
    - rm -f cronjob-$TAG.yaml
  when: manual
  tags:
    - release
  only:
    - master

k8s-prod:
  stage: prod
  script:
    - cp -f cronjob.yaml cronjob-$CI_COMMIT_TAG.yaml
    - VER=$(echo $CI_COMMIT_TAG |awk -F '.' '{print $1}')
    - sed -i "s#cronjob-demo#cronjob-$VER#g" cronjob-$CI_COMMIT_TAG.yaml
    - sed -i "s#hub.lzxlinux.cn/kubernetes/cronjob:latest#hub.lzxlinux.cn/kubernetes/cronjob:$CI_COMMIT_TAG#g" cronjob-$CI_COMMIT_TAG.yaml
    - sudo su root -c "kubectl apply -f cronjob-$CI_COMMIT_TAG.yaml"
  after_script:
    - rm -f cronjob-$CI_COMMIT_TAG.yaml
  tags:
    - prod
  only:
    - tags
```
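The k8s-release and k8s-prod jobs do not keep a separate manifest per environment: they copy the dev manifest and rewrite it with sed, so the release copy gets its own CronJob name, label and image tag and can run next to the dev one in the same cluster. The sketch below replays the k8s-release substitutions locally on a stripped-down stand-in for cronjob.yaml (only the lines sed touches, not a valid CronJob), assuming TAG=release, which matches the cronjob-release pods shown later, and GNU sed for `-i`:

```shell
#!/usr/bin/env bash
# Replay the k8s-release sed rewrites on a trimmed cronjob.yaml (no cluster needed).
set -eu
TAG=release                    # assumed value of the TAG project variable
cd "$(mktemp -d)"

# Stand-in containing only the lines the sed commands touch.
cat > cronjob.yaml <<'EOF'
metadata:
  name: cronjob-demo
labels:
  app: cronjob-demo
containers:
- name: cronjob-demo
  image: hub.lzxlinux.cn/kubernetes/cronjob:latest
EOF

# Same commands as the k8s-release job.
cp -f cronjob.yaml "cronjob-$TAG.yaml"
sed -i "s#cronjob-demo#cronjob-$TAG#g" "cronjob-$TAG.yaml"
sed -i "s#hub.lzxlinux.cn/kubernetes/cronjob:latest#hub.lzxlinux.cn/kubernetes/cronjob:$TAG#g" "cronjob-$TAG.yaml"
cat "cronjob-$TAG.yaml"
```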

After modifying the code, you can run the project locally to check for problems; this catches basic mistakes before merging into the master branch.

```
# mvn clean package
# cd target/
# java -cp cronjob-demo-1.0-SNAPSHOT.jar com.mooc.demo.cronjob.Main
I will working for 29 seconds! It's very good!
All work is done! This is the first k8s ci/cd!
# cd ..
# rm -rf target/
```

The project runs fine locally.

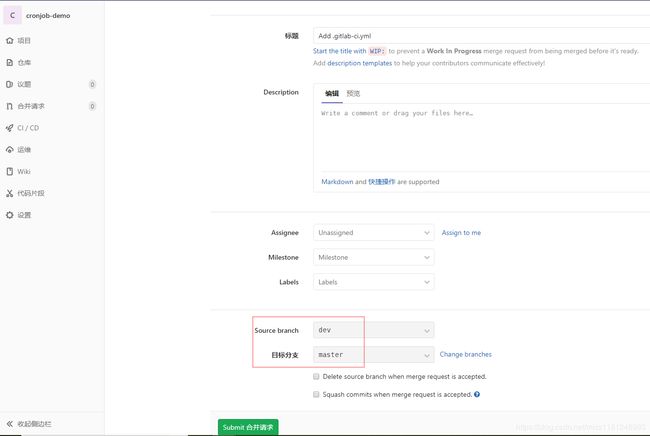

- Push the code (harbor1):

```
# git add .
# git commit -m 'Add .gitlab-ci.yml'
# git -c http.sslVerify=false push origin dev
```

Open a merge request straight away to merge dev into the master branch:

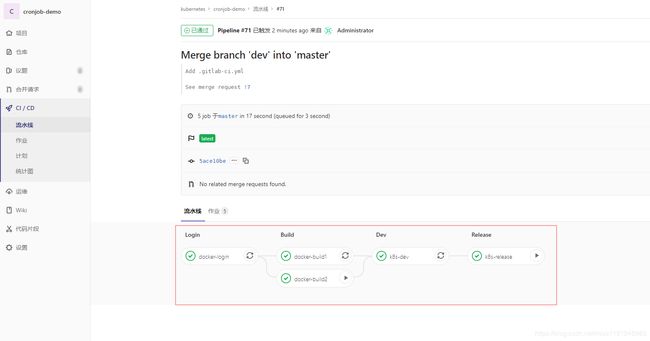

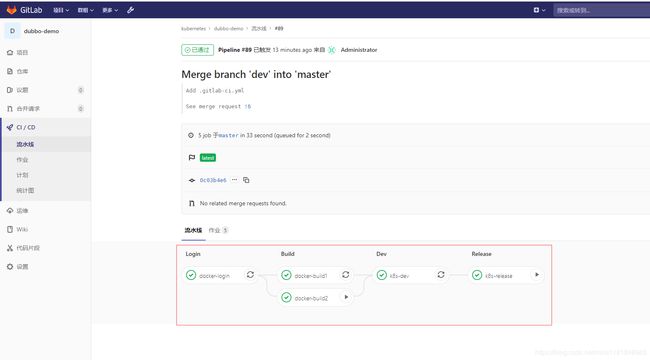

Click Merge, then open CI/CD → Pipelines:

Once the dev environment deployment finishes, check on master1:

```
# kubectl get pod |grep cronjob-demo
cronjob-demo-1574412360-9mmxx   0/1   Completed   0     2m34s
cronjob-demo-1574412420-qhlv5   0/1   Completed   0     94s
cronjob-demo-1574412480-6z587   0/1   Completed   0     34s
# kubectl logs cronjob-demo-1574412360-9mmxx
I will working for 21 seconds! It's very good!
All work is done! This is the first k8s ci/cd!
# kubectl logs cronjob-demo-1574412420-qhlv5
I will working for 24 seconds! It's very good!
All work is done! This is the first k8s ci/cd!
# kubectl logs cronjob-demo-1574412480-6z587
I will working for 27 seconds! It's very good!
All work is done! This is the first k8s ci/cd!
```

The output of the Java CronJob matches the modified code, so the deployment to the dev environment works.
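The numeric part of each pod name is the Job's scheduled time. For the cluster used here it appears to be Unix time in seconds: the three suffixes are exactly 60 apart, matching the `*/1 * * * *` schedule. Newer CronJob controllers (the v2 controller, default from Kubernetes 1.21) use minutes instead, so treat the unit as version-dependent. At most three Completed pods are kept because `successfulJobsHistoryLimit` is 3. A small sketch that decodes the suffixes, assuming seconds and GNU `date`; the function name is hypothetical:

```shell
#!/usr/bin/env bash
# job_time POD: print the scheduled time (UTC) encoded in a CronJob pod name
# such as cronjob-demo-1574412360-9mmxx (assumes the suffix is Unix seconds).
job_time() {
  local pod="$1" ts
  # Pod name layout: <cronjob>-<time>-<random>; take the second-to-last field.
  ts=$(awk -F- '{print $(NF-1)}' <<< "$pod")
  date -u -d "@${ts}" '+%Y-%m-%dT%H:%M:%SZ'
}

for pod in cronjob-demo-1574412360-9mmxx cronjob-demo-1574412420-qhlv5 cronjob-demo-1574412480-6z587; do
  echo "$pod -> $(job_time "$pod")"
done
```

For the three dev pods above this prints 2019-11-22T08:46:00Z, 08:47:00Z and 08:48:00Z, one minute apart.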

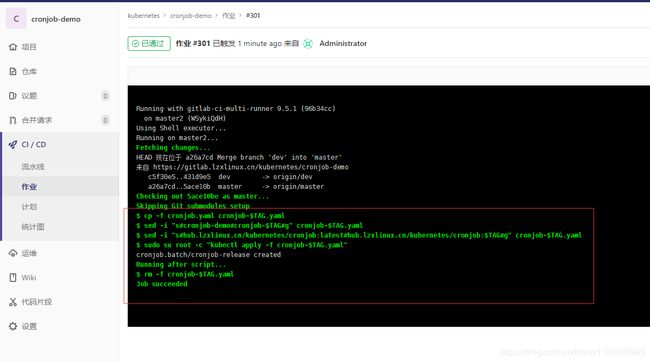

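In CI/CD → Pipelines, run the manual jobs docker-build2 and k8s-release to deploy to the release environment:

Once the release environment deployment finishes, check on master1: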

```
# kubectl get pod |grep cronjob-release
cronjob-release-1574412600-kpjbv   0/1   Completed   0     2m23s
cronjob-release-1574412660-xk8rr   0/1   Completed   0     92s
cronjob-release-1574412720-zpvj5   0/1   Completed   0     32s
# kubectl logs cronjob-release-1574412600-kpjbv
I will working for 25 seconds! It's very good!
All work is done! This is the first k8s ci/cd!
# kubectl logs cronjob-release-1574412660-xk8rr
I will working for 18 seconds! It's very good!
All work is done! This is the first k8s ci/cd!
# kubectl logs cronjob-release-1574412720-zpvj5
I will working for 24 seconds! It's very good!
All work is done! This is the first k8s ci/cd!
```

The output of the Java CronJob matches the modified code, so the deployment to the release environment works.

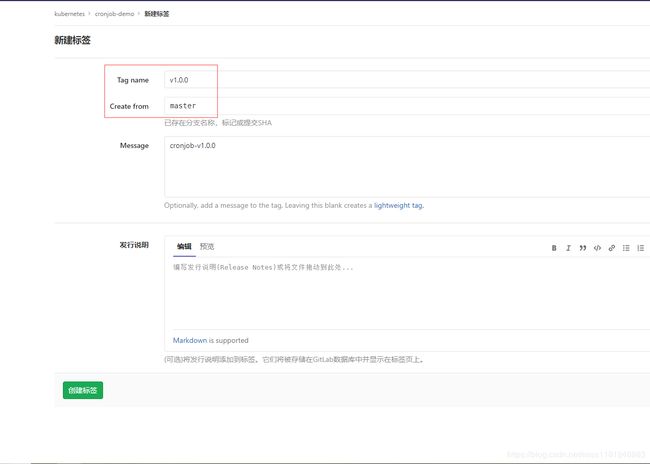

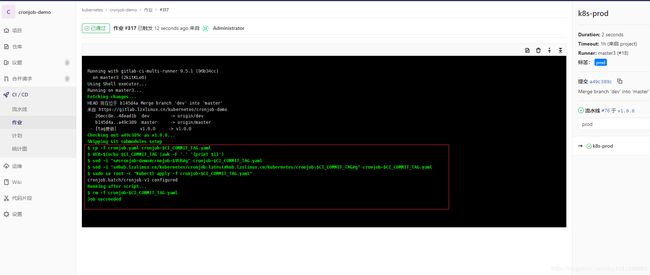

Next comes production. Production must run a stable version, so we create the tag v1.0.0:
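In k8s-prod the resource name uses only the major version: `awk -F '.' '{print $1}'` keeps everything before the first dot, so the tag v1.0.0 yields the name cronjob-v1. As a consequence, a later tag such as v1.1.0 re-applies (updates) the same cronjob-v1 object with the new image, while v2.0.0 creates a separate cronjob-v2 alongside it. The same extraction as a function (the name `major_of` is hypothetical) for quick checks:

```shell
#!/usr/bin/env bash
# Same extraction as VER=$(echo $CI_COMMIT_TAG |awk -F '.' '{print $1}') in k8s-prod:
# keep the part of the tag before the first dot.
major_of() {
  echo "$1" | awk -F '.' '{print $1}'
}

for tag in v1.0.0 v1.1.0 v2.0.0; do
  echo "$tag -> cronjob-$(major_of "$tag")"
done
```

This prints `v1.0.0 -> cronjob-v1`, `v1.1.0 -> cronjob-v1` and `v2.0.0 -> cronjob-v2`. In plain shell, `${CI_COMMIT_TAG%%.*}` gives the same result without awk.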

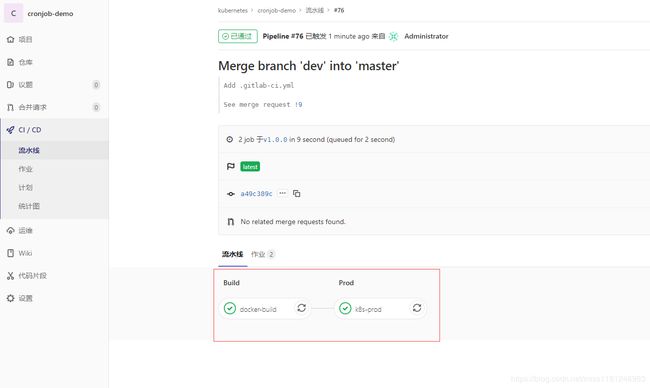

CI/CD → Pipelines:

Once the production deployment finishes, check on master1:

```
# kubectl get pod |grep cronjob-v1
cronjob-v1-1574414940-p8f9x   0/1   Completed   0     2m26s
cronjob-v1-1574415000-gglfk   0/1   Completed   0     86s
cronjob-v1-1574415060-r98tp   0/1   Completed   0     25s
# kubectl logs cronjob-v1-1574414940-p8f9x
I will working for 27 seconds! It's very good!
All work is done! This is the first k8s ci/cd!
# kubectl logs cronjob-v1-1574415000-gglfk
I will working for 17 seconds! It's very good!
All work is done! This is the first k8s ci/cd!
# kubectl logs cronjob-v1-1574415060-r98tp
I will working for 21 seconds! It's very good!
All work is done! This is the first k8s ci/cd!
```

The output of the Java CronJob matches the modified code, so the deployment to production works.

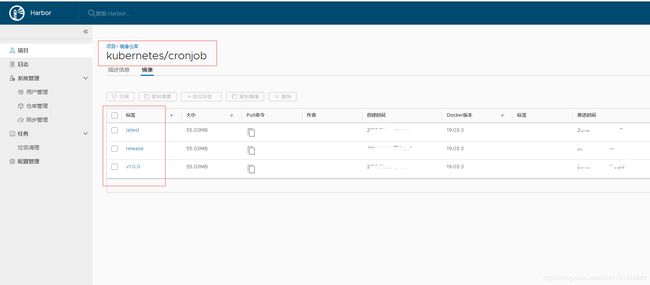

Check the harbor registry hub.lzxlinux.cn:

This completes the CI/CD of the Java CronJob project.
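Which jobs appear in a pipeline is decided by `only:` and `when:` in .gitlab-ci.yml: merges to master create docker-login, docker-build1, k8s-dev and the two manual jobs, while pushing a tag creates only docker-build and k8s-prod. Since docker-login runs only for master, tag pipelines presumably rely on the Docker credentials the shell runner on harbor1 saved during an earlier master pipeline. The awk sketch below prints that summary for a CI file; it is a hypothetical helper that assumes the layout used in this article (jobs at column 0, keys indented by 2 spaces, list items by 4, one entry under `tags:` and `only:`) and is not a general YAML parser:

```shell
#!/usr/bin/env bash
# summarize_ci FILE: one line per job with its only: value, runner tag and manual flag.
summarize_ci() {
  awk '
    /^[^ #][^:]*:$/   { job = substr($0, 1, length($0) - 1); next }  # top-level key (job)
    /^  tags:/        { sect = "tags"; next }
    /^  only:/        { sect = "only"; next }
    /^  when: manual/ { manual[job] = 1 }
    /^  [a-z_]+:/     { sect = "" }                                    # any other job key
    /^    - / && sect == "tags" { tag[job] = $2 }
    /^    - / && sect == "only" { only[job] = $2; order[++n] = job }
    END {
      for (i = 1; i <= n; i++) {
        j = order[i]
        printf "%-14s only=%-7s runner=%-8s%s\n", j, only[j], tag[j], (manual[j] ? " manual" : "")
      }
    }
  ' "$1"
}

# Usage, inside a clone of cronjob-demo:
#   summarize_ci .gitlab-ci.yml
```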

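CI/CD of the SpringBoot web service

- Set the project variables:

Settings → CI/CD → Variables:

- Modify the code (harbor1):

Create a new dev branch in GitLab, then clone the code to the local host.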

```
# cd /home
# git -c http.sslVerify=false clone https://gitlab.lzxlinux.cn/kubernetes/springboot-web-demo.git
# cd springboot-web-demo/
# git checkout dev
# ls
Dockerfile  pom.xml  README.md  springboot-web.yaml  src
# vim src/main/java/com/mooc/demo/controller/DemoController.java
```

```java
package com.mooc.demo.controller;

import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;

/**
 * Created by Michael on 2018/9/29.
 */
@RestController
public class DemoController {

    @RequestMapping("/hello")
    public String sayHello(@RequestParam String name) {
        return "Hi "+name+"! Cicd for the springboot-web-demo project in k8s!";
    }
}
```

```
# vim springboot-web.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: springboot-web-demo
spec:
  selector:
    matchLabels:
      app: springboot-web-demo
  replicas: 1
  template:
    metadata:
      labels:
        app: springboot-web-demo
    spec:
      containers:
      - name: springboot-web-demo
        image: hub.lzxlinux.cn/kubernetes/springboot-web:latest
        imagePullPolicy: Always
        ports:
        - containerPort: 8080
      imagePullSecrets:
      - name: hub-secret
---
apiVersion: v1
kind: Service
metadata:
  name: springboot-web-demo
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 8080
  selector:
    app: springboot-web-demo
  type: ClusterIP
---
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: springboot-web-demo
spec:
  rules:
  - host: springboot.lzxlinux.cn
    http:
      paths:
      - path: /
        backend:
          serviceName: springboot-web-demo
          servicePort: 80
```

```
# vim .gitlab-ci.yml
```

```yaml
stages:
  - login
  - build
  - dev
  - release
  - prod

docker-login:
  stage: login
  script:
    - docker login hub.lzxlinux.cn -u $HARBOR_USER -p $HARBOR_PASS
    - docker image prune -f
  tags:
    - harbor1
  only:
    - master

docker-build1:
  stage: build
  script:
    - mvn clean package
    - docker build -t hub.lzxlinux.cn/kubernetes/springboot-web:latest .
    - docker push hub.lzxlinux.cn/kubernetes/springboot-web:latest
  tags:
    - harbor1
  only:
    - master

docker-build2:
  stage: build
  script:
    - mvn clean package
    - docker build -t hub.lzxlinux.cn/kubernetes/springboot-web:$TAG .
    - docker push hub.lzxlinux.cn/kubernetes/springboot-web:$TAG
  when: manual
  tags:
    - harbor1
  only:
    - master

docker-build:
  stage: build
  script:
    - mvn clean package
    - docker build -t hub.lzxlinux.cn/kubernetes/springboot-web:$CI_COMMIT_TAG .
    - docker push hub.lzxlinux.cn/kubernetes/springboot-web:$CI_COMMIT_TAG
  tags:
    - harbor1
  only:
    - tags

k8s-dev:
  stage: dev
  script:
    - sudo su root -c "kubectl apply -f springboot-web.yaml"
  tags:
    - dev
  only:
    - master

k8s-release:
  stage: release
  script:
    - cp -f springboot-web.yaml springboot-web-$TAG.yaml
    - sed -i "s#springboot-web-demo#springboot-web-$TAG#g" springboot-web-$TAG.yaml
    - sed -i "s#hub.lzxlinux.cn/kubernetes/springboot-web:latest#hub.lzxlinux.cn/kubernetes/springboot-web:$TAG#g" springboot-web-$TAG.yaml
    - sed -i "s#springboot.lzxlinux.cn#$R_DOMAIN#g" springboot-web-$TAG.yaml
    - sudo su root -c "kubectl apply -f springboot-web-$TAG.yaml"
  after_script:
    - rm -f springboot-web-$TAG.yaml
  when: manual
  tags:
    - release
  only:
    - master

k8s-prod:
  stage: prod
  script:
    - cp -f springboot-web.yaml springboot-web-$CI_COMMIT_TAG.yaml
    - VER=$(echo $CI_COMMIT_TAG |awk -F '.' '{print $1}')
    - sed -i "s#springboot-web-demo#springboot-web-$VER#g" springboot-web-$CI_COMMIT_TAG.yaml
    - sed -i "s#hub.lzxlinux.cn/kubernetes/springboot-web:latest#hub.lzxlinux.cn/kubernetes/springboot-web:$CI_COMMIT_TAG#g" springboot-web-$CI_COMMIT_TAG.yaml
    - sed -i "s#springboot.lzxlinux.cn#$P_DOMAIN#g" springboot-web-$CI_COMMIT_TAG.yaml
    - sudo su root -c "kubectl apply -f springboot-web-$CI_COMMIT_TAG.yaml"
  after_script:
    - rm -f springboot-web-$CI_COMMIT_TAG.yaml
  tags:
    - prod
  only:
    - tags
```
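For springboot-web.yaml, the single substitution `s#springboot-web-demo#springboot-web-$TAG#g` renames the Deployment and its labels and selector, the Service and its selector, the Ingress, and the Ingress backend `serviceName` together, so the three objects of the release copy stay wired to each other; the host is then swapped for `$R_DOMAIN`. The check below replays the release rewrite on a slightly trimmed copy of the manifest and counts the occurrences before and after, assuming TAG=release and R_DOMAIN=springboot.release.cn (the values implied by the outputs further down) and GNU sed:

```shell
#!/usr/bin/env bash
# Replay the k8s-release rewrite of springboot-web.yaml and count renamed references.
set -eu
TAG=release                        # assumed value of TAG
R_DOMAIN=springboot.release.cn     # assumed value of R_DOMAIN
cd "$(mktemp -d)"

cat > springboot-web.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: springboot-web-demo
spec:
  selector:
    matchLabels:
      app: springboot-web-demo
  template:
    metadata:
      labels:
        app: springboot-web-demo
    spec:
      containers:
      - name: springboot-web-demo
        image: hub.lzxlinux.cn/kubernetes/springboot-web:latest
---
apiVersion: v1
kind: Service
metadata:
  name: springboot-web-demo
spec:
  selector:
    app: springboot-web-demo
---
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: springboot-web-demo
spec:
  rules:
  - host: springboot.lzxlinux.cn
    http:
      paths:
      - path: /
        backend:
          serviceName: springboot-web-demo
          servicePort: 80
EOF

# Same commands as the k8s-release job.
out="springboot-web-$TAG.yaml"
cp -f springboot-web.yaml "$out"
sed -i "s#springboot-web-demo#springboot-web-$TAG#g" "$out"
sed -i "s#hub.lzxlinux.cn/kubernetes/springboot-web:latest#hub.lzxlinux.cn/kubernetes/springboot-web:$TAG#g" "$out"
sed -i "s#springboot.lzxlinux.cn#$R_DOMAIN#g" "$out"

echo "before: $(grep -c springboot-web-demo springboot-web.yaml) lines with springboot-web-demo"
echo "after:  $(grep -c "springboot-web-$TAG" "$out") lines with springboot-web-$TAG"
```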

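After modifying the code, you can run the project locally to check for problems; this catches basic mistakes before merging into the master branch.

```
# mvn clean package
# cd target/
# java -jar springboot-web-demo-1.0-SNAPSHOT.jar    # runs in the foreground; try http://localhost:8080/hello?name=test, then stop it with Ctrl+C
# cd ..
# rm -rf target/
```

The project runs fine locally.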

- Push the code (harbor1):

```
# git add .
# git commit -m 'Add .gitlab-ci.yml'
# git -c http.sslVerify=false push origin dev
```

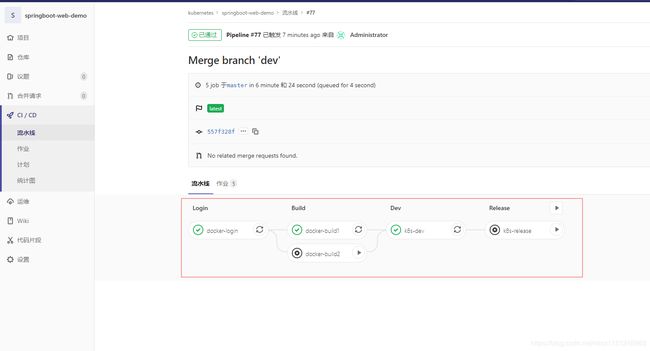

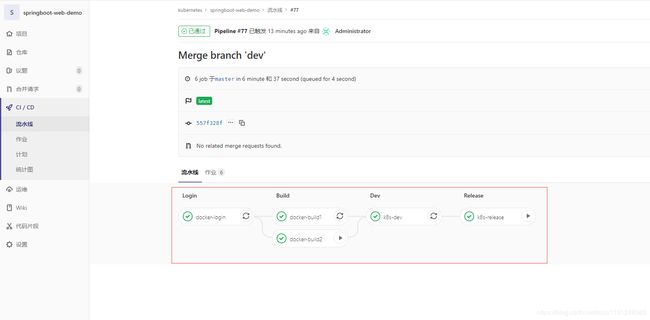

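Open a merge request straight away to merge dev into the master branch, click Merge, then open CI/CD → Pipelines:

Once the dev environment deployment finishes, check on master1:

```
# kubectl get pod -o wide |grep springboot-web-demo
springboot-web-demo-58676cb448-dvpfl   1/1   Running   0     2m15s   172.10.4.86   node1   <none>   <none>
```

The pod is running on node1. Add a local DNS entry to the hosts file on the Windows PC:

```
192.168.1.54 springboot.lzxlinux.cn
```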

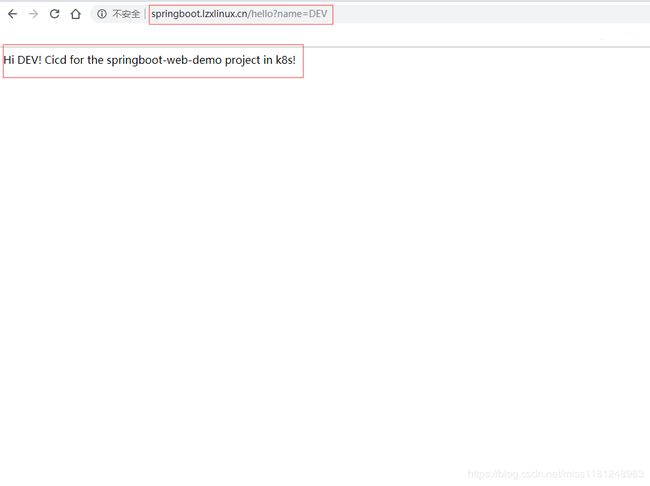

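Visit springboot.lzxlinux.cn/hello?name=DEV:

The response matches the modified code, so the deployment to the dev environment works.

In CI/CD → Pipelines, run the manual jobs docker-build2 and k8s-release to deploy to the release environment:

Once the release environment deployment finishes, check on master1:

```
# kubectl get pod -o wide |grep springboot-web-release
springboot-web-release-68fcb8b9d-6zft7   1/1   Running   0     48s   172.10.4.93   node1   <none>   <none>
```

The pod is running on node1. Add a local DNS entry to the hosts file on the Windows PC:

```
192.168.1.54 springboot.release.cn
```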

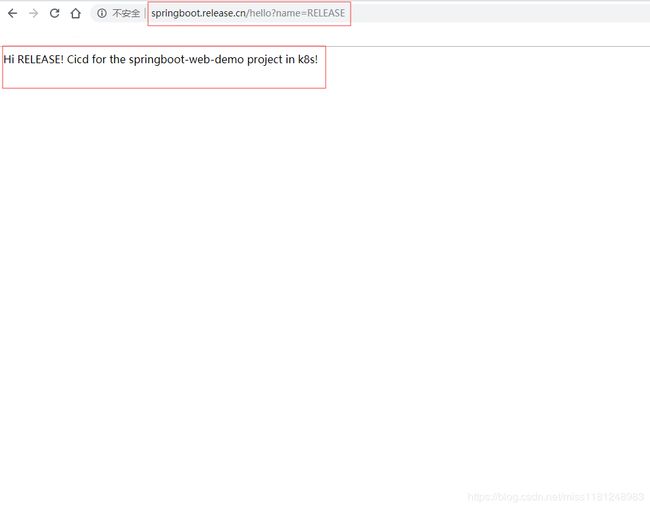

Visit springboot.release.cn/hello?name=RELEASE:

The deployment to the release environment works.

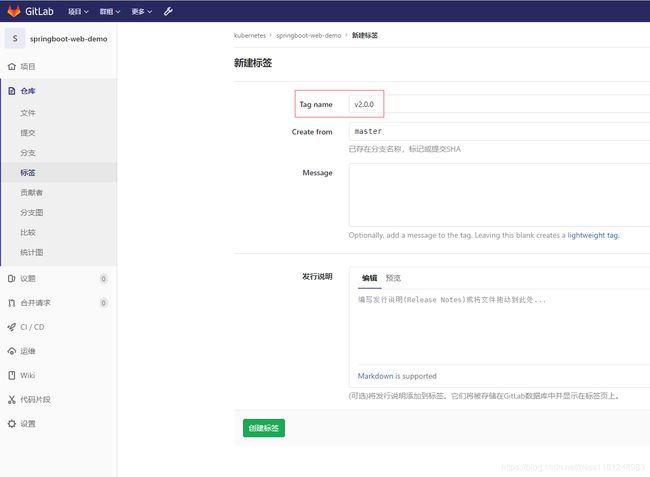

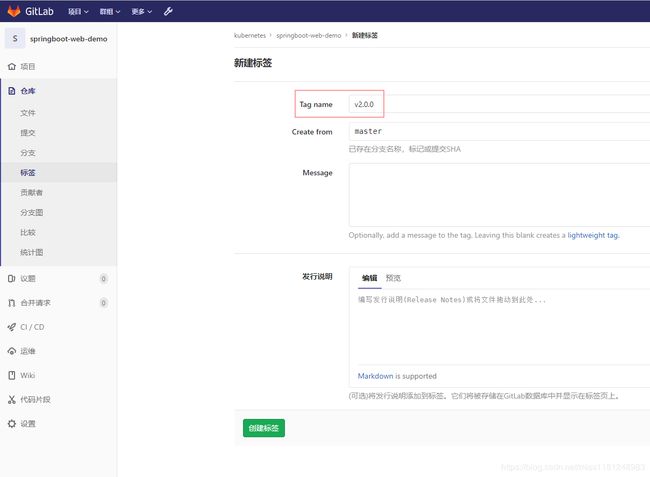

Next comes production. Production must run a stable version, so we create the tag v2.0.0.

CI/CD → Pipelines:

Once the production deployment finishes, check on master1:

```
# kubectl get pod -o wide |grep springboot-web-v2
springboot-web-v2-cf8c88dc9-6j4p4   1/1   Running   0     77s   172.10.2.91   node2   <none>   <none>
```

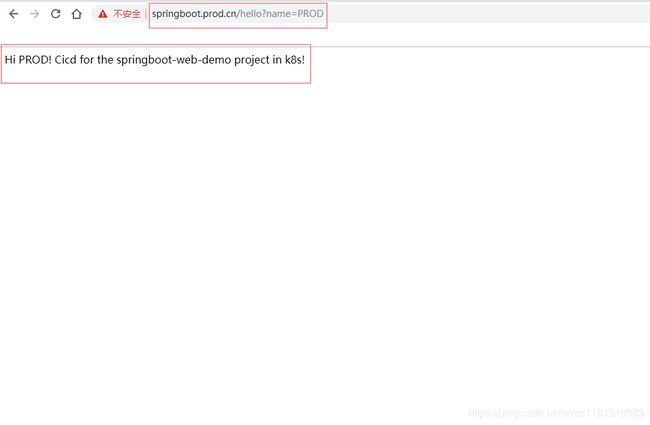

The pod is running on node2. Add a local DNS entry to the hosts file on the Windows PC:

```
192.168.1.55 springboot.prod.cn
```

Visit springboot.prod.cn/hello?name=PROD:

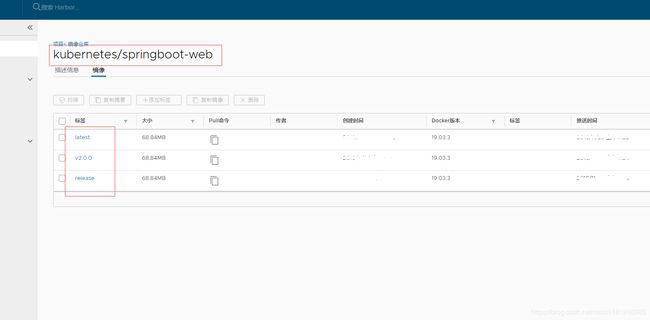

Check the harbor registry hub.lzxlinux.cn:

The deployment to production works. This completes the CI/CD of the SpringBoot web service project.

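CI/CD of the Dubbo service

- Run a zookeeper container (harbor1):

```
# docker run -d -p 2181:2181 --name zookeeper zookeeper
```

- Set the project variables:

Settings → CI/CD → Variables:

- Modify the code (harbor1):

Create a new dev branch in GitLab, then clone the code to the local host.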

```
# cd /home
# vim pom.xml
```

```xml
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>com.mooc</groupId>
    <artifactId>mooc-k8s-demo</artifactId>
    <packaging>pom</packaging>
    <version>1.0-SNAPSHOT</version>

    <modules>
        <module>dubbo-demo</module>
        <module>web-demo</module>
        <module>springboot-web-demo</module>
        <module>cronjob-demo</module>
        <module>dubbo-demo-api</module>
    </modules>

    <properties>
        <source.level>1.8</source.level>
        <target.level>1.8</target.level>
        <spring.version>4.3.16.RELEASE</spring.version>
        <dubbo.version>2.6.2</dubbo.version>
        <dubbo.rpc.version>2.6.2</dubbo.rpc.version>
        <curator.version>2.12.0</curator.version>
        <resteasy.version>3.0.19.Final</resteasy.version>
        <curator-client.version>2.12.0</curator-client.version>
        <swagger.version>1.5.19</swagger.version>
    </properties>

    <dependencyManagement>
        <dependencies>
            <dependency>
                <groupId>org.springframework</groupId>
                <artifactId>spring-framework-bom</artifactId>
                <version>${spring.version}</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
            <dependency>
                <groupId>org.springframework</groupId>
                <artifactId>spring-web</artifactId>
                <version>${spring.version}</version>
            </dependency>
            <dependency>
                <groupId>org.springframework</groupId>
                <artifactId>spring-webmvc-portlet</artifactId>
                <version>${spring.version}</version>
            </dependency>
            <dependency>
                <groupId>commons-validator</groupId>
                <artifactId>commons-validator</artifactId>
                <version>1.6</version>
            </dependency>
            <dependency>
                <groupId>com.alibaba</groupId>
                <artifactId>dubbo</artifactId>
                <version>${dubbo.version}</version>
            </dependency>
            <dependency>
                <groupId>com.alibaba</groupId>
                <artifactId>dubbo-rpc-rest</artifactId>
                <version>${dubbo.rpc.version}</version>
            </dependency>
            <dependency>
                <groupId>com.alibaba</groupId>
                <artifactId>fastjson</artifactId>
                <version>1.2.49</version>
            </dependency>
            <dependency>
                <groupId>javax</groupId>
                <artifactId>javaee-web-api</artifactId>
                <version>7.0</version>
            </dependency>
            <dependency>
                <groupId>org.apache.curator</groupId>
                <artifactId>curator-framework</artifactId>
                <version>${curator.version}</version>
            </dependency>
            <dependency>
                <groupId>javax.validation</groupId>
                <artifactId>validation-api</artifactId>
                <version>${validation-api.version}</version>
            </dependency>
            <dependency>
                <groupId>org.hibernate</groupId>
                <artifactId>hibernate-validator</artifactId>
                <version>${hibernate-validator.version}</version>
            </dependency>
            <dependency>
                <groupId>org.jboss.resteasy</groupId>
                <artifactId>resteasy-jackson-provider</artifactId>
                <version>${resteasy.version}</version>
            </dependency>
            <dependency>
                <groupId>org.apache.curator</groupId>
                <artifactId>curator-client</artifactId>
                <version>${curator-client.version}</version>
            </dependency>
            <dependency>
                <groupId>io.swagger</groupId>
                <artifactId>swagger-annotations</artifactId>
                <version>${swagger.version}</version>
            </dependency>
            <dependency>
                <groupId>io.swagger</groupId>
                <artifactId>swagger-jaxrs</artifactId>
                <version>${swagger.version}</version>
            </dependency>
        </dependencies>
    </dependencyManagement>

    <dependencies>
        <dependency>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-log4j12</artifactId>
            <version>1.7.25</version>
        </dependency>
    </dependencies>

    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
                <version>3.7.0</version>
                <configuration>
                    <source>${source.level}</source>
                    <target>${target.level}</target>
                </configuration>
            </plugin>
        </plugins>
    </build>
</project>
```

```
# git -c http.sslVerify=false clone https://gitlab.lzxlinux.cn/kubernetes/dubbo-demo.git
# cd dubbo-demo/
# git checkout dev
# ls
Dockerfile  dubbo-demo-api  dubbo.yaml  pom.xml  README.md  src
# vim src/main/java/com/mooc/demo/service/DemoServiceImpl.java
```

```java
package com.mooc.demo.service;

import com.mooc.demo.api.DemoService;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

/**
 * Created by Michael on 2018/9/25.
 */
public class DemoServiceImpl implements DemoService {

    private static final Logger log = LoggerFactory.getLogger(DemoServiceImpl.class);

    public String sayHello(String name) {
        log.debug("dubbo say hello to : {}", name);
        return "Hello "+name+"! This is my dubbo service!";
    }
}
```

```
# vim dubbo.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dubbo-demo
spec:
  selector:
    matchLabels:
      app: dubbo-demo
  replicas: 1
  template:
    metadata:
      labels:
        app: dubbo-demo
    spec:
      hostNetwork: true
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - dubbo-demo
            topologyKey: "kubernetes.io/hostname"
      containers:
      - name: dubbo-demo
        image: hub.lzxlinux.cn/kubernetes/dubbo:latest
        imagePullPolicy: Always
        ports:
        - containerPort: 20880
        env:
        - name: DUBBO_PORT
          value: "20880"
      imagePullSecrets:
      - name: hub-secret
```

```
# vim .gitlab-ci.yml
```

```yaml
stages:
  - login
  - build
  - dev
  - release
  - prod

docker-login:
  stage: login
  script:
    - docker login hub.lzxlinux.cn -u $HARBOR_USER -p $HARBOR_PASS
    - docker image prune -f
  tags:
    - harbor1
  only:
    - master

docker-build1:
  stage: build
  script:
    - cd dubbo-demo-api/ && mvn clean install && cd ..
    - mvn clean package && mkdir target/ROOT
    - mv target/dubbo-demo-1.0-SNAPSHOT-assembly.tar.gz target/ROOT/
    - cd target/ROOT/ && tar xf dubbo-demo-1.0-SNAPSHOT-assembly.tar.gz
    - cd ../.. && rm -f target/ROOT/dubbo-demo-1.0-SNAPSHOT-assembly.tar.gz
    - docker build -t hub.lzxlinux.cn/kubernetes/dubbo:latest .
    - docker push hub.lzxlinux.cn/kubernetes/dubbo:latest
  tags:
    - harbor1
  only:
    - master

docker-build2:
  stage: build
  script:
    - cd dubbo-demo-api/ && mvn clean install && cd ..
    - mvn clean package && mkdir target/ROOT
    - mv target/dubbo-demo-1.0-SNAPSHOT-assembly.tar.gz target/ROOT/
    - cd target/ROOT/ && tar xf dubbo-demo-1.0-SNAPSHOT-assembly.tar.gz
    - cd ../.. && rm -f target/ROOT/dubbo-demo-1.0-SNAPSHOT-assembly.tar.gz
    - docker build -t hub.lzxlinux.cn/kubernetes/dubbo:$TAG .
    - docker push hub.lzxlinux.cn/kubernetes/dubbo:$TAG
  when: manual
  tags:
    - harbor1
  only:
    - master

docker-build:
  stage: build
  script:
    - cd dubbo-demo-api/ && mvn clean install && cd ..
    - mvn clean package && mkdir target/ROOT
    - mv target/dubbo-demo-1.0-SNAPSHOT-assembly.tar.gz target/ROOT/
    - cd target/ROOT/ && tar xf dubbo-demo-1.0-SNAPSHOT-assembly.tar.gz
    - cd ../.. && rm -f target/ROOT/dubbo-demo-1.0-SNAPSHOT-assembly.tar.gz
    - docker build -t hub.lzxlinux.cn/kubernetes/dubbo:$CI_COMMIT_TAG .
    - docker push hub.lzxlinux.cn/kubernetes/dubbo:$CI_COMMIT_TAG
  tags:
    - harbor1
  only:
    - tags

k8s-dev:
  stage: dev
  script:
    - sudo su root -c "kubectl apply -f dubbo.yaml"
  tags:
    - dev
  only:
    - master

k8s-release:
  stage: release
  script:
    - cp -f dubbo.yaml dubbo-$TAG.yaml
    - sed -i "s#dubbo-demo#dubbo-$TAG#g" dubbo-$TAG.yaml
    - sed -i "s#hub.lzxlinux.cn/kubernetes/dubbo:latest#hub.lzxlinux.cn/kubernetes/dubbo:$TAG#g" dubbo-$TAG.yaml
    - sed -i "s#20880#$R_PORT#g" dubbo-$TAG.yaml
    - sudo su root -c "kubectl apply -f dubbo-$TAG.yaml"
  after_script:
    - rm -f dubbo-$TAG.yaml
  when: manual
  tags:
    - release
  only:
    - master

k8s-prod:
  stage: prod
  script:
    - cp -f dubbo.yaml dubbo-$CI_COMMIT_TAG.yaml
    - VER=$(echo $CI_COMMIT_TAG |awk -F '.' '{print $1}')
    - sed -i "s#dubbo-demo#dubbo-$VER#g" dubbo-$CI_COMMIT_TAG.yaml
    - sed -i "s#hub.lzxlinux.cn/kubernetes/dubbo:latest#hub.lzxlinux.cn/kubernetes/dubbo:$CI_COMMIT_TAG#g" dubbo-$CI_COMMIT_TAG.yaml
    - sed -i "s#20880#$P_PORT#g" dubbo-$CI_COMMIT_TAG.yaml
    - sudo su root -c "kubectl apply -f dubbo-$CI_COMMIT_TAG.yaml"
  after_script:
    - rm -f dubbo-$CI_COMMIT_TAG.yaml
  tags:
    - prod
  only:
    - tags
```
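Because dubbo.yaml uses `hostNetwork: true`, the Dubbo process binds its port directly on the node. The podAntiAffinity rule only keeps pods labelled `app: dubbo-demo` apart, and the sed renaming turns the release and prod labels into `app: dubbo-release` and `app: dubbo-v3`, so pods of different environments can land on the same node (in this article all three end up on node1). Each environment therefore needs its own port, which `s#20880#$R_PORT#g` provides by rewriting both `containerPort` and the `DUBBO_PORT` value, the latter presumably read by the start script in the image. A quick check on the relevant fragment of dubbo.yaml, assuming R_PORT=20881 and P_PORT=20882 (the ports seen in the outputs below) and GNU sed; `rewrite_port` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Replay the dubbo port rewrite for release and prod on the ports/env fragment of dubbo.yaml.
set -eu
cd "$(mktemp -d)"

cat > dubbo.yaml <<'EOF'
      containers:
      - name: dubbo-demo
        ports:
        - containerPort: 20880
        env:
        - name: DUBBO_PORT
          value: "20880"
EOF

rewrite_port() {   # rewrite_port NEW_PORT OUT_FILE
  cp -f dubbo.yaml "$2"
  sed -i "s#20880#$1#g" "$2"
}

rewrite_port 20881 dubbo-release.yaml   # assumed R_PORT
rewrite_port 20882 dubbo-v3.yaml        # assumed P_PORT
grep -H -e containerPort -e value dubbo-release.yaml dubbo-v3.yaml
```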

After modifying the code, you can run the project locally to check for problems; this catches basic mistakes before merging into the master branch.

```
# cd dubbo-demo-api/ && mvn clean install && cd ..
# mvn clean package && mkdir target/ROOT
# mv target/dubbo-demo-1.0-SNAPSHOT-assembly.tar.gz target/ROOT/
# cd target/ROOT/ && tar xf dubbo-demo-1.0-SNAPSHOT-assembly.tar.gz
# rm -f dubbo-demo-1.0-SNAPSHOT-assembly.tar.gz
# ./bin/start.sh
# netstat -lntp |grep 20880
tcp        0      0 0.0.0.0:20880           0.0.0.0:*               LISTEN      14518/java
# telnet 192.168.1.59 20880
dubbo>ls
com.mooc.demo.api.DemoService
dubbo>ls com.mooc.demo.api.DemoService
sayHello
dubbo>invoke com.mooc.demo.api.DemoService.sayHello('DUBBO')
"Hello DUBBO! This is my dubbo service!"
elapsed: 9 ms.
# cd ../..
# rm -rf dubbo-demo-api/target/
# rm -rf target/
```

The project runs fine locally.
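The same packaging steps appear in docker-build1, docker-build2, docker-build and the local test above: move the Maven assembly tarball into target/ROOT, unpack it there, and delete the tarball, leaving target/ROOT ready for the Dockerfile (whose contents are not shown here). A minimal sketch of those steps as one function; the name `stage_assembly` is hypothetical, and `mkdir -p` is used so a re-run does not fail on an existing ROOT directory (plain `mkdir` would):

```shell
#!/usr/bin/env bash
# stage_assembly TARGET_DIR TARBALL_NAME
# Unpack TARGET_DIR/TARBALL_NAME into TARGET_DIR/ROOT and remove the tarball,
# mirroring the dubbo-demo packaging steps after "mvn clean package".
stage_assembly() {
  local target="$1" tarball="$2"
  mkdir -p "$target/ROOT"
  mv "$target/$tarball" "$target/ROOT/"
  # Subshell: the caller's working directory is left unchanged.
  (cd "$target/ROOT" && tar xf "$tarball" && rm -f "$tarball")
}

# In a job, after "mvn clean package":
#   stage_assembly target dubbo-demo-1.0-SNAPSHOT-assembly.tar.gz
```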

- Push the code (harbor1):

```
# git add .
# git commit -m 'Add .gitlab-ci.yml'
# git -c http.sslVerify=false push origin dev
```

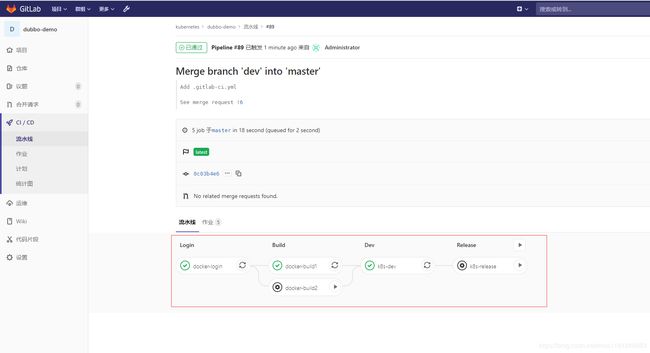

Open a merge request straight away to merge dev into the master branch, click Merge, then open CI/CD → Pipelines:

Once the dev environment deployment finishes, check on master1:

```
# kubectl get pod -o wide |grep dubbo-demo
dubbo-demo-598bff497-zddt2   1/1   Running   0     51s   192.168.1.54   node1   <none>   <none>
```

The pod is running on node1; because of hostNetwork, its IP is the node's own address 192.168.1.54. On node1:

```
# netstat -lntp |grep 20880
tcp        0      0 0.0.0.0:20880           0.0.0.0:*               LISTEN      14118/java
# telnet 192.168.1.54 20880
dubbo>ls
com.mooc.demo.api.DemoService
dubbo>ls com.mooc.demo.api.DemoService
sayHello
dubbo>invoke com.mooc.demo.api.DemoService.sayHello('K8S-CI/CD')
"Hello K8S-CI/CD! This is my dubbo service!"
elapsed: 10 ms.
```

The output matches the modified code, so the deployment to the dev environment works.

In CI/CD → Pipelines, run the manual jobs docker-build2 and k8s-release to deploy to the release environment:

Once the release environment deployment finishes, check on master1:

```
# kubectl get pod -o wide |grep dubbo-release
dubbo-release-6fbfb84dc6-klxn6   1/1   Running   0     36s   192.168.1.54   node1   <none>   <none>
```

The pod is running on node1. On node1:

```
# netstat -lntp |grep 20881
tcp        0      0 0.0.0.0:20881           0.0.0.0:*               LISTEN      31667/java
# telnet 192.168.1.54 20881
dubbo>ls
com.mooc.demo.api.DemoService
dubbo>ls com.mooc.demo.api.DemoService
sayHello
dubbo>invoke com.mooc.demo.api.DemoService.sayHello('RELEASE')
"Hello RELEASE! This is my dubbo service!"
elapsed: 11 ms.
```

The deployment to the release environment works.

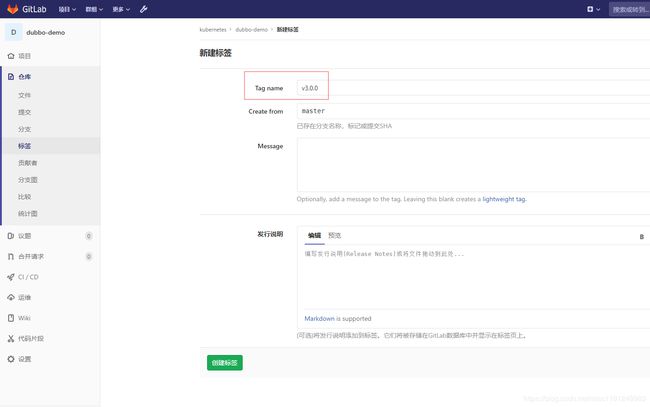

Next comes production. Production must run a stable version, so we create the tag v3.0.0:

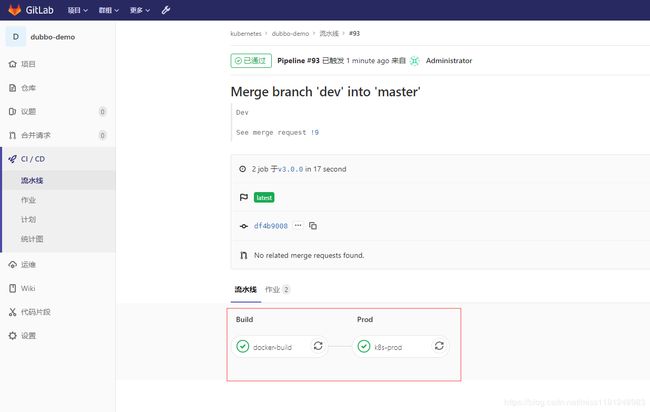

CI/CD → Pipelines:

Once the production deployment finishes, check on master1:

```
# kubectl get pod -o wide |grep dubbo-v3
dubbo-v3-6ccb54cc79-z6msm   1/1   Running   0     107s   192.168.1.54   node1   <none>   <none>
```

The pod is running on node1. On node1:

```
# netstat -lntp |grep 20882
tcp        0      0 0.0.0.0:20882           0.0.0.0:*               LISTEN      1237/java
# telnet 192.168.1.54 20882
dubbo>ls
com.mooc.demo.api.DemoService
dubbo>ls com.mooc.demo.api.DemoService
sayHello
dubbo>invoke com.mooc.demo.api.DemoService.sayHello('PROD')
"Hello PROD! This is my dubbo service!"
elapsed: 10 ms.
```

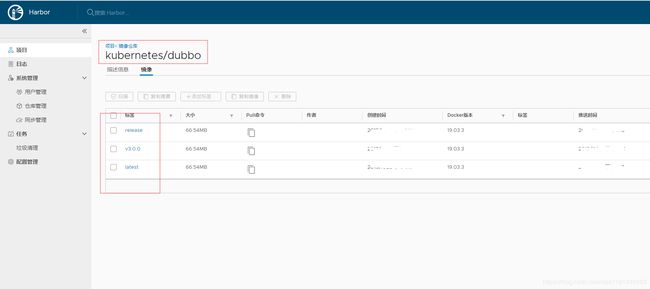

Check the harbor registry hub.lzxlinux.cn:

The deployment to production works. This completes the CI/CD of the traditional Dubbo service project.

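CI/CD of the Web service

This web service depends on the zookeeper and dubbo services set up above, so leave them running.

- Set the project variables:

Settings → CI/CD → Variables:

- Modify the code (harbor1):

Create a new dev branch in GitLab, then clone the code to the local host.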

```
# cd /home
# git -c http.sslVerify=false clone https://gitlab.lzxlinux.cn/kubernetes/web-demo.git
# cd web-demo/
# git checkout dev
# ls
Dockerfile  pom.xml  README.md  src  web.yaml
# vim src/main/java/com/mooc/demo/controller/DemoController.java
```

```java
package com.mooc.demo.controller;

import com.mooc.demo.api.DemoService;
import org.apache.commons.logging.Log;
import org.apache.commons.logging.LogFactory;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Controller;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.ResponseBody;
import org.springframework.web.bind.annotation.RestController;
import org.springframework.web.context.ContextLoader;

/**
 * Created by Michael on 2018/9/26.
 */
@Controller
public class DemoController {

    private static final Logger log = LoggerFactory.getLogger(DemoController.class);

    @Autowired
    private DemoService demoService;

    @RequestMapping("/k8s")
    @ResponseBody
    public String sayHello(@RequestParam String name) {
        log.debug("say hello to :{}", name);
        String message = demoService.sayHello(name);
        log.debug("dubbo result:{}", message);
        return message+" This is the Web Service CI/CD!";
    }
}
```

```
# vim web.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-demo
spec:
  selector:
    matchLabels:
      app: web-demo
  replicas: 1
  template:
    metadata:
      labels:
        app: web-demo
    spec:
      containers:
      - name: web-demo
        image: hub.lzxlinux.cn/kubernetes/web:latest
        imagePullPolicy: Always
        ports:
        - containerPort: 8080
      imagePullSecrets:
      - name: hub-secret
---
apiVersion: v1
kind: Service
metadata:
  name: web-demo
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 8080
  selector:
    app: web-demo
  type: ClusterIP
---
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: web-demo
spec:
  rules:
  - host: web.lzxlinux.cn
    http:
      paths:
      - path: /
        backend:
          serviceName: web-demo
          servicePort: 80
```

```
# vim .gitlab-ci.yml
```

```yaml
stages:
  - login
  - build
  - dev
  - release
  - prod

docker-login:
  stage: login
  script:
    - docker login hub.lzxlinux.cn -u $HARBOR_USER -p $HARBOR_PASS
    - docker image prune -f
  tags:
    - harbor1
  only:
    - master

docker-build1:
  stage: build
  script:
    - mvn clean package && mkdir target/ROOT
    - mv target/web-demo-1.0-SNAPSHOT.war target/ROOT/
    - cd target/ROOT/ && jar -xf web-demo-1.0-SNAPSHOT.war
    - cd ../.. && rm -f target/ROOT/web-demo-1.0-SNAPSHOT.war
    - docker build -t hub.lzxlinux.cn/kubernetes/web:latest .
    - docker push hub.lzxlinux.cn/kubernetes/web:latest
  tags:
    - harbor1
  only:
    - master

docker-build2:
  stage: build
  script:
    - mvn clean package && mkdir target/ROOT
    - mv target/web-demo-1.0-SNAPSHOT.war target/ROOT/
    - cd target/ROOT/ && jar -xf web-demo-1.0-SNAPSHOT.war
    - cd ../.. && rm -f target/ROOT/web-demo-1.0-SNAPSHOT.war
    - docker build -t hub.lzxlinux.cn/kubernetes/web:$TAG .
    - docker push hub.lzxlinux.cn/kubernetes/web:$TAG
  when: manual
  tags:
    - harbor1
  only:
    - master

docker-build:
  stage: build
  script:
    - mvn clean package && mkdir target/ROOT
    - mv target/web-demo-1.0-SNAPSHOT.war target/ROOT/
    - cd target/ROOT/ && jar -xf web-demo-1.0-SNAPSHOT.war
    - cd ../.. && rm -f target/ROOT/web-demo-1.0-SNAPSHOT.war
    - docker build -t hub.lzxlinux.cn/kubernetes/web:$CI_COMMIT_TAG .
    - docker push hub.lzxlinux.cn/kubernetes/web:$CI_COMMIT_TAG
  tags:
    - harbor1
  only:
    - tags

k8s-dev:
  stage: dev
  script:
    - sudo su root -c "kubectl apply -f web.yaml"
  tags:
    - dev
  only:
    - master

k8s-release:
  stage: release
  script:
    - cp -f web.yaml web-$TAG.yaml
    - sed -i "s#web-demo#web-$TAG#g" web-$TAG.yaml
    - sed -i "s#hub.lzxlinux.cn/kubernetes/web:latest#hub.lzxlinux.cn/kubernetes/web:$TAG#g" web-$TAG.yaml
    - sed -i "s#web.lzxlinux.cn#$R_DOMAIN#g" web-$TAG.yaml
    - sudo su root -c "kubectl apply -f web-$TAG.yaml"
  after_script:
    - rm -f web-$TAG.yaml
  when: manual
  tags:
    - release
  only:
    - master

k8s-prod:
  stage: prod
  script:
    - cp -f web.yaml web-$CI_COMMIT_TAG.yaml
    - VER=$(echo $CI_COMMIT_TAG |awk -F '.' '{print $1}')
    - sed -i "s#web-demo#web-$VER#g" web-$CI_COMMIT_TAG.yaml
    - sed -i "s#hub.lzxlinux.cn/kubernetes/web:latest#hub.lzxlinux.cn/kubernetes/web:$CI_COMMIT_TAG#g" web-$CI_COMMIT_TAG.yaml
    - sed -i "s#web.lzxlinux.cn#$P_DOMAIN#g" web-$CI_COMMIT_TAG.yaml
    - sudo su root -c "kubectl apply -f web-$CI_COMMIT_TAG.yaml"
  after_script:
    - rm -f web-$CI_COMMIT_TAG.yaml
  tags:
    - prod
  only:
    - tags
```

修改完代码后可以在本地运行项目,看是否存在问题,这样可以在merge到master分支前避免低级错误。

# mvn clean package && mkdir target/ROOT

# mv target/web-demo-1.0-SNAPSHOT.war target/ROOT/

# cd target/ROOT/ && tar xf web-demo-1.0-SNAPSHOT.war

# rm -f web-demo-1.0-SNAPSHOT.war

# cd ../..

# docker build -t web:test .

# docker run -d -p 8080:8080 --rm --name web web:test

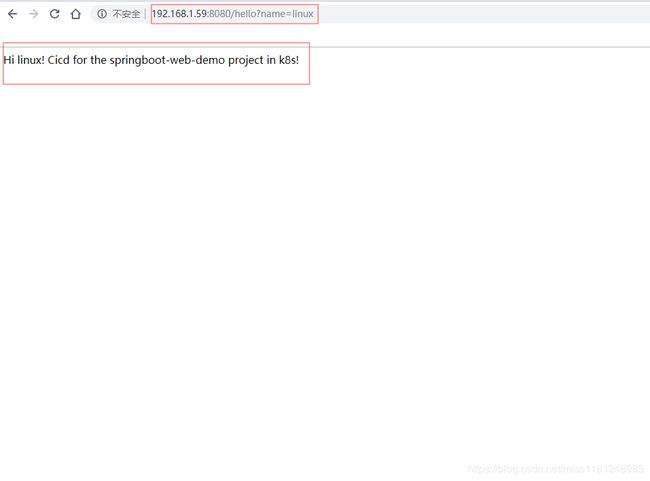

浏览器访问192.168.1.59:8080/k8s?name=WEB,

# rm -rf target/

可以看到,容器运行该项目没有问题。

- Push the code (harbor1):

```
# git add .
# git commit -m 'Add .gitlab-ci.yml'
# git -c http.sslVerify=false push origin dev
```

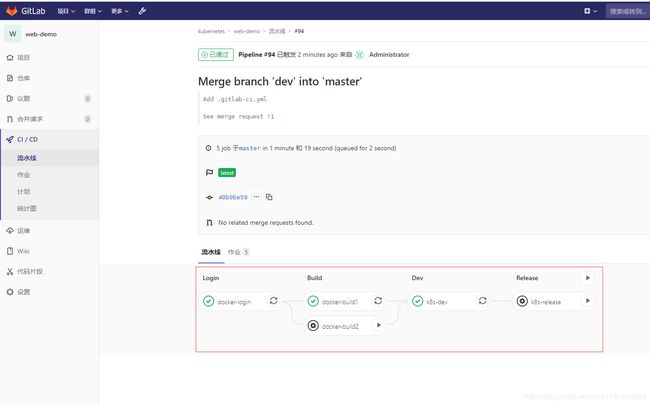

直接发起合并申请,merge到master分支,然后点击合并,CI/CD → 流水线,

测试环境项目部署完成,到master1节点查看,

# kubectl get pod -o wide |grep web-demo

web-demo-855b754fc6-h8mh6 1/1 Running 0 2m4s 172.10.5.193 node3 <none> <none>

可以看到,该pod在node3节点上运行。在Windows电脑hosts文件中添加本地dns:

192.168.1.56 web.lzxlinux.cn

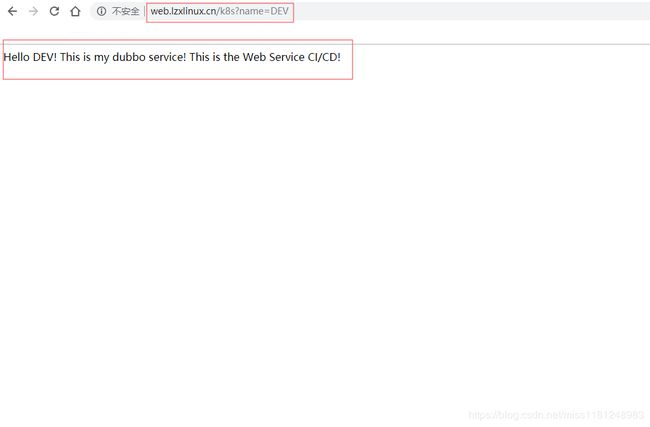

访问web.lzxlinux.cn/k8s?name=DEV,

可以看到,显示与之前修改的代码一致,测试环境下部署没有问题。

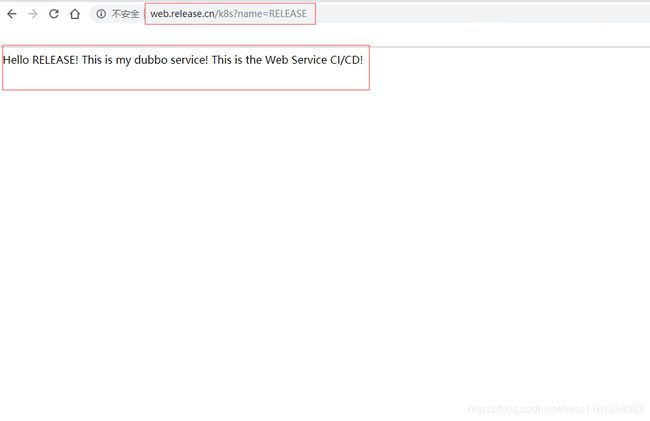

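In CI/CD → Pipelines, run the manual jobs docker-build2 and k8s-release to deploy to the release environment:

Once the release environment deployment finishes, check on master1:

```
# kubectl get pod -o wide |grep web-release
web-release-5997c954f6-s7bt9   1/1   Running   0     67s   172.10.5.207   node3   <none>   <none>
```

The pod is running on node3. Add a local DNS entry to the hosts file on the Windows PC:

```
192.168.1.56 web.release.cn
```

Visit web.release.cn/k8s?name=RELEASE:

The deployment to the release environment works.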

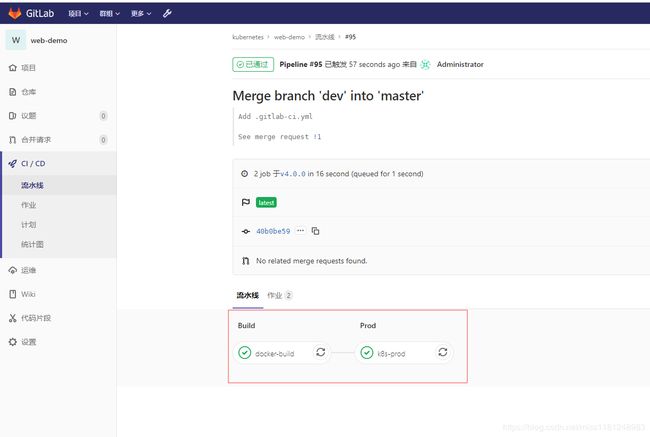

接下来需要部署到生产环境,生产环境下的项目必须是稳定版本,因此我们需要打一个tagv4.0.0。

CI/CD → 流水线,

生产环境项目部署完成,到master1节点查看,

# kubectl get pod -o wide |grep web-v4

web-v4-bc7f8fdd5-8c5nn 1/1 Running 0 83s 172.10.5.222 node3 <none> <none>

可以看到,该pod在node3节点上运行。在Windows电脑hosts文件中添加本地dns:

192.168.1.56 web.prod.cn

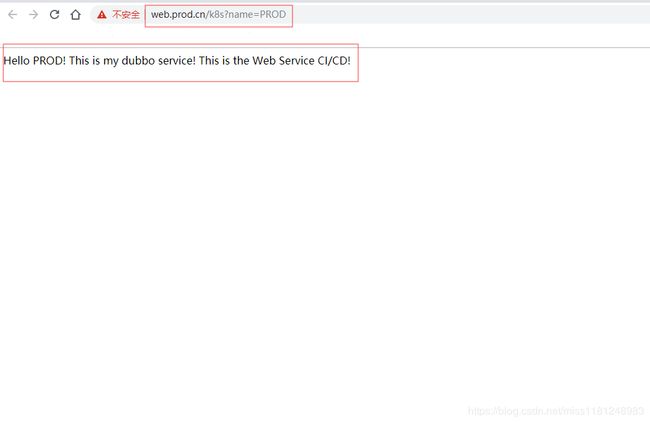

访问web.prod.cn/k8s?name=PROD(若访问报错,请先关闭springboot-web项目),

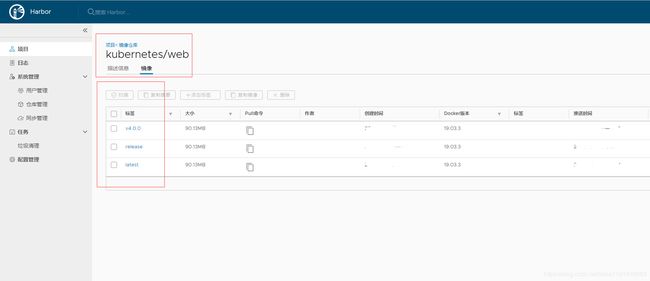

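Check the harbor registry hub.lzxlinux.cn:

The deployment to production works. This completes the CI/CD of the web service project.

This completes the k8s-based CI/CD of the whole business system.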