Exporting Millions of MySQL Rows from Spring Boot Without Running Out of Memory

Preface

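Dynamic data export is a feature that most projects need sooner or later. The basic implementation is to query the data from MySQL, load it into memory, build an Excel or CSV file from it in memory, and send that file to the front end as a stream.

For reference, see https://grokonez.com/spring-framework/spring-boot/excel-file-download-from-springboot-restapi-apache-poi-mysql. Most Spring Boot Excel downloads are implemented this way.

This approach works, but once the MySQL data set gets large (hundreds of thousands, millions, or tens of millions of rows), loading all of it into memory will sooner or later end in an OutOfMemoryError.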

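There are generally two angles from which to approach avoiding OOM.

The first is product design. Start by asking the product team the following questions: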

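- Why do we need to export this much data in the first place? Is the design reasonable?

- How will access control be handled? Are we sure that exporting millions of rows will not leak company secrets?

- If millions of rows really have to be exported, why not have the big data team or a DBA do it and deliver the result by email?

- Why does this have to go through back-end logic? Have the time and bandwidth costs been considered?

- If the export were paginated, with each button click exporting only 20,000 rows, would exporting in batches not meet the business need?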

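If the product manager just does not get it and still insists on exporting the full data set in one go, the only option left is to solve the problem technically.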

Technically, avoiding OOM comes down to one rule:

- Never load the full data set into memory at once.

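Since a full load is off the table, the goal becomes loading the data in batches. The Stream API introduced in Java 8 can hand back the rows you need one at a time: each row is written to the file as it arrives and is then released from memory, which avoids OOM.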
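The idea can be tried out without a database. The following is a minimal sketch in plain Java, assuming a lazily generated Stream as a stand-in for the database cursor; the class name StreamingExportSketch and the helpers writeRows and fakeRows are hypothetical names used only for illustration.

```java
import java.io.IOException;
import java.io.StringWriter;
import java.io.UncheckedIOException;
import java.io.Writer;
import java.util.stream.IntStream;
import java.util.stream.Stream;

final class StreamingExportSketch {

    /** Writes each row as one line and keeps no reference to it afterwards. Returns the row count. */
    static long writeRows(Stream<String> rows, Writer out) {
        long[] count = {0};
        rows.forEach(row -> {
            try {
                out.write(row);
                out.write('\n');
                count[0]++;
            } catch (IOException e) {
                // forEach cannot throw checked exceptions, so wrap and abort
                throw new UncheckedIOException(e);
            }
        });
        return count[0];
    }

    /** Stand-in for a database cursor: rows are produced lazily, one at a time. */
    static Stream<String> fakeRows(int n) {
        return IntStream.rangeClosed(1, n).mapToObj(i -> i + ",todo-" + i);
    }

    public static void main(String[] args) {
        StringWriter out = new StringWriter();
        try (Stream<String> rows = fakeRows(3)) {
            System.out.println(writeRows(rows, out) + " rows written");
        }
        System.out.print(out);
    }
}
```

Because the rows are never collected into a List, memory use depends on the size of one row rather than on the size of the result set. In a real export the Writer would be the HTTP response writer rather than a StringWriter.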

Because the data is written out row by row, Excel is not a suitable file format. The recommendation here is:

- Use CSV instead of Excel.
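One detail to watch when writing CSV by hand is that a field containing a comma, a double quote, or a line break has to be quoted, otherwise the columns shift. The following is a minimal sketch of the usual quoting convention (wrap the field in double quotes and double any embedded quote); the class name CsvLineSketch and its methods are hypothetical and not part of any code referenced in this article.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

final class CsvLineSketch {

    /** Quotes a field if it contains a comma, quote, CR or LF; embedded quotes are doubled. */
    static String escape(String field) {
        if (field == null) {
            return ""; // assumption: null is exported as an empty field
        }
        boolean needsQuotes = field.contains(",") || field.contains("\"")
                || field.contains("\n") || field.contains("\r");
        if (!needsQuotes) {
            return field;
        }
        return "\"" + field.replace("\"", "\"\"") + "\"";
    }

    /** Joins the escaped fields into one CSV line (without the trailing line break). */
    static String toLine(List<String> fields) {
        return fields.stream().map(CsvLineSketch::escape).collect(Collectors.joining(","));
    }

    public static void main(String[] args) {
        // prints: 1,"Buy milk, eggs","say ""hi"""
        System.out.println(toLine(Arrays.asList("1", "Buy milk, eggs", "say \"hi\"")));
    }
}
```

For anything beyond a simple export, an established CSV library is probably the safer choice.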

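The persistence frameworks most commonly used with Spring Boot today are JPA and MyBatis, so the sections below implement a million-row export with each of them.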

Exporting Millions of Rows with JPA

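For the approach itself, see http://knes1.github.io/blog/2015/2015-10-19-streaming-mysql-results-using-java8-streams-and-spring-data.html.

The corresponding project is at https://github.com/knes1/todo

The core annotations are shown below and go on the query method of the Repository. The method's return type is declared as Stream. A fetch size of Integer.MIN_VALUE tells the MySQL JDBC driver to return rows one at a time.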

    @QueryHints(value = @QueryHint(name = HINT_FETCH_SIZE, value = "" + Integer.MIN_VALUE))
    @Query(value = "select t from Todo t")
    Stream<Todo> streamAll();

In addition, the method that consumes the Stream must be annotated with @Transactional(readOnly = true) so that the transaction is read-only.
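At the JDBC level, the fetch-size hint above amounts to calling setFetchSize(Integer.MIN_VALUE) on a forward-only, read-only statement, which MySQL Connector/J interprets as a request to stream the result set row by row. The following is a minimal sketch in plain JDBC; the class name JdbcStreamingSketch, the todo table, and its id and title columns are assumptions made for illustration.

```java
import java.io.IOException;
import java.io.Writer;
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;

final class JdbcStreamingSketch {

    /** Writes every row of the query to the writer as "id,title" and returns the row count. */
    static long export(Connection conn, Writer out) throws SQLException, IOException {
        String sql = "SELECT id, title FROM todo"; // assumed table and columns
        try (PreparedStatement ps = conn.prepareStatement(
                sql, ResultSet.TYPE_FORWARD_ONLY, ResultSet.CONCUR_READ_ONLY)) {
            // With MySQL Connector/J this value requests row-by-row streaming
            // instead of buffering the whole result set in the client.
            ps.setFetchSize(Integer.MIN_VALUE);
            long rows = 0;
            try (ResultSet rs = ps.executeQuery()) {
                while (rs.next()) {
                    out.write(rs.getLong(1) + "," + rs.getString(2) + "\n");
                    rows++;
                }
            }
            return rows;
        }
    }
}
```

While a streaming result set is open, the connection is busy until every row has been read or the result set is closed, so the export should hold its own connection for the whole run.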

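The EntityManager also needs to be injected so that each entity can be removed from the persistence context with detach once the Stream has moved past it.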

    @RequestMapping(value = "/todos.csv", method = RequestMethod.GET)
    @Transactional(readOnly = true)
    public void exportTodosCSV(HttpServletResponse response) {
        response.addHeader("Content-Type", "application/csv");
        response.addHeader("Content-Disposition", "attachment; filename=todos.csv");
        response.setCharacterEncoding("UTF-8");
        // try-with-resources closes the Stream once the export is done
        try (Stream<Todo> todoStream = todoRepository.streamAll()) {
            PrintWriter out = response.getWriter();
            todoStream.forEach(rethrowConsumer(todo -> {
                String line = todoToCSV(todo);
                out.write(line);
                out.write("\n");
                // detach the entity so the persistence context does not hold on to every row
                entityManager.detach(todo);
            }));
            out.flush();
        } catch (IOException e) {
            log.error("Exception occurred " + e.getMessage(), e);
            throw new RuntimeException("Exception occurred while exporting results", e);
        }
    }

Exporting Millions of Rows with MyBatis

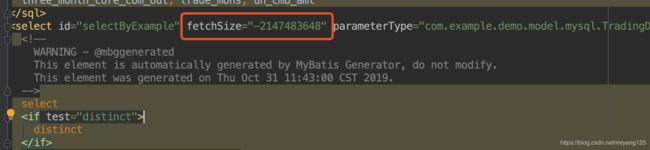

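To fetch rows one at a time with MyBatis, you need a custom ResultHandler, and the corresponding select statement in mapper.xml needs the attribute fetchSize="-2147483648".

Finally, run the query through the SqlSession and process each result as it is returned.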
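The callback style described above can be illustrated without MyBatis. The following is a minimal sketch in plain Java in which the query pushes each row to a handler instead of returning a List; RowCallbackSketch, CsvRowWriter, runQuery, and the flushEvery parameter are hypothetical names for illustration and are not MyBatis API.

```java
import java.io.IOException;
import java.io.StringWriter;
import java.io.UncheckedIOException;
import java.io.Writer;
import java.util.function.Consumer;

final class RowCallbackSketch {

    /** Per-row callback: writes one line per row and flushes every flushEvery rows. */
    static final class CsvRowWriter implements Consumer<String> {
        private final Writer out;
        private final int flushEvery;
        private long rows;

        CsvRowWriter(Writer out, int flushEvery) {
            this.out = out;
            this.flushEvery = flushEvery;
        }

        @Override
        public void accept(String csvLine) {
            try {
                out.write(csvLine);
                out.write('\n');
                if (++rows % flushEvery == 0) {
                    out.flush();
                }
            } catch (IOException e) {
                // abort the export rather than silently dropping rows
                throw new UncheckedIOException(e);
            }
        }

        long rows() {
            return rows;
        }
    }

    /** Stand-in for the query: hands rows to the handler one at a time. */
    static void runQuery(int rowCount, Consumer<String> handler) {
        for (int i = 1; i <= rowCount; i++) {
            handler.accept(i + ",APRG21246");
        }
    }

    public static void main(String[] args) {
        StringWriter out = new StringWriter();
        CsvRowWriter writer = new CsvRowWriter(out, 2);
        runQuery(3, writer);
        System.out.println(writer.rows() + " rows handled");
    }
}
```

The caller never sees the full result set; it only ever holds the row currently passed to the callback.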

The code is as follows:

The custom ResultHandler, which receives the data:

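    @Slf4j
    public class CustomResultHandler implements ResultHandler<TradingDetailsDownload> {

        private final CallbackProcesser<TradingDetailsDownload> callbackProcesser;

        public CustomResultHandler(CallbackProcesser<TradingDetailsDownload> callbackProcesser) {
            super();
            this.callbackProcesser = callbackProcesser;
        }

        // Called by MyBatis once for each row
        @Override
        public void handleResult(ResultContext<? extends TradingDetailsDownload> resultContext) {
            TradingDetailsDownload detailsDownload = resultContext.getResultObject();
            // DEBUG rather than INFO: logging every row of a million-row export floods the log
            log.debug("detailsDownload:{}", detailsDownload);
            callbackProcesser.processData(detailsDownload);
        }
    }

The callback class CallbackProcesser handles each record after it has been fetched; its only job is to write the object to the CSV output.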

    public class CallbackProcesser<E> {

        private final HttpServletResponse response;

        public CallbackProcesser(HttpServletResponse response) {
            this.response = response;
            String fileName = System.currentTimeMillis() + ".csv";
            this.response.addHeader("Content-Type", "application/csv");
            this.response.addHeader("Content-Disposition", "attachment; filename=" + fileName);
            this.response.setCharacterEncoding("UTF-8");
        }

        public void processData(E record) {
            try {
                // For CSV output, override toString() so that the fields are joined with ","
                response.getWriter().write(record.toString());
                response.getWriter().write("\n");
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

The core service that fetches the data (this is only a simple demo, so it is not split into an interface and an implementation):

    @Service
    public class TradingDetailsService {

        private final SqlSessionTemplate sqlSessionTemplate;

        public TradingDetailsService(SqlSessionTemplate sqlSessionTemplate) {
            this.sqlSessionTemplate = sqlSessionTemplate;
        }

        public void downloadAsCsv(String coreComId, HttpServletResponse httpServletResponse)
                throws IOException {
            TradingDetailsDownloadExample tradingDetailsDownloadExample = new TradingDetailsDownloadExample();
            TradingDetailsDownloadExample.Criteria criteria = tradingDetailsDownloadExample.createCriteria();
            criteria.andIdIsNotNull();
            criteria.andCoreComIdEqualTo(coreComId);
            tradingDetailsDownloadExample.setOrderByClause(" id desc");

            // Parameters read by the selectByExample statement
            HashMap<String, Object> param = new HashMap<>();
            param.put("oredCriteria", tradingDetailsDownloadExample.getOredCriteria());
            param.put("orderByClause", tradingDetailsDownloadExample.getOrderByClause());

            CustomResultHandler customResultHandler =
                    new CustomResultHandler(new CallbackProcesser<>(httpServletResponse));
            // Each row is passed to the handler as it is read instead of being collected into a List
            sqlSessionTemplate.select(
                    "com.example.demo.mapper.TradingDetailsDownloadMapper.selectByExample", param, customResultHandler);
            httpServletResponse.getWriter().flush();
            httpServletResponse.getWriter().close();
        }
    }

The controller that serves as the download entry point:

    @RestController
    @RequestMapping("down")
    public class HelloController {

        private final TradingDetailsService tradingDetailsService;

        public HelloController(TradingDetailsService tradingDetailsService) {
            this.tradingDetailsService = tradingDetailsService;
        }

        @GetMapping("download_csv")
        public void downloadAsCsv(@RequestParam("coreComId") String coreComId, HttpServletResponse response)
                throws IOException {
            tradingDetailsService.downloadAsCsv(coreComId, response);
        }
    }

The entity class is shown below. It was generated by mybatis-generator; the real class has many more fields, and only some of them are listed here:

    public class TradingDetailsDownload {
        /**
         * This field was generated by MyBatis Generator.
         * This field corresponds to the database column trading_details_download.id
         *
         * @mbggenerated Thu Oct 31 11:43:00 CST 2019
         */
        private Integer id;

        /**
         * This field was generated by MyBatis Generator.
         * This field corresponds to the database column trading_details_download.core_com_id
         *
         * @mbggenerated Thu Oct 31 11:43:00 CST 2019
         */
        private String coreComId;

        /**
         * This field was generated by MyBatis Generator.
         * This field corresponds to the database column trading_details_download.add
         *
         * @mbggenerated Thu Oct 31 11:43:00 CST 2019
         */
        private String add;

        /**
         * This field was generated by MyBatis Generator.
         * This field corresponds to the database column trading_details_download.amt_rate
         *
         * @mbggenerated Thu Oct 31 11:43:00 CST 2019
         */
        private Double amtRate;

        /**
         * This field was generated by MyBatis Generator.
         * This field corresponds to the database column trading_details_download.bank_acceptance_bill_amt
         *
         * @mbggenerated Thu Oct 31 11:43:00 CST 2019
         */
        private Double bankAcceptanceBillAmt;

        /**
         * This field was generated by MyBatis Generator.
         * This field corresponds to the database column trading_details_download.brch
         *
         * @mbggenerated Thu Oct 31 11:43:00 CST 2019
         */
        private String brch;

        /**
         * This field was generated by MyBatis Generator.
         * This field corresponds to the database column trading_details_download.city
         *
         * @mbggenerated Thu Oct 31 11:43:00 CST 2019
         */
        private String city;

        /**
         * This field was generated by MyBatis Generator.
         * This field corresponds to the database column trading_details_download.com_typ
         *
         * @mbggenerated Thu Oct 31 11:43:00 CST 2019
         */
        private String comTyp;

        /**
         * This field was generated by MyBatis Generator.
         * This field corresponds to the database column trading_details_download.conduct_financial_transactions_amt
         *
         * @mbggenerated Thu Oct 31 11:43:00 CST 2019
         */
        private Double conductFinancialTransactionsAmt;

        /**
         * This field was generated by MyBatis Generator.
         * This field corresponds to the database column trading_details_download.core_com_nam
         *
         * @mbggenerated Thu Oct 31 11:43:00 CST 2019
         */
        private String coreComNam;
    }

Write a stored procedure to insert one million rows of fake data:

    CREATE DEFINER=`root`@`%` PROCEDURE `NewProc`()
    BEGIN
        SET @num = 1;
        -- <= rather than < so that exactly 1,000,000 rows are inserted
        WHILE @num <= 1000000 DO
            INSERT INTO `trading_details_download`(`core_com_id`, `add`, `amt_rate`, `bank_acceptance_bill_amt`, `brch`, `city`, `com_typ`, `conduct_financial_transactions_amt`, `core_com_nam`, `credit_amt`, `cust_id`, `district`, `dpst`, `dpst_daily`, `following_loan`, `fpa`, `industry1`, `industry2`, `is_client`, `is_hitech`, `is_loan`, `is_new_sp_or_sa`, `is_on_board`, `is_recent_three_mons_new_sp_or_sa`, `is_sl`, `is_vip_com`, `last_year_dpst`, `last_year_dpst_daily`, `letter_of_credit_amt`, `letter_of_guarantee_amt`, `level`, `loan_amt`, `nam`, `non_performing_loan`, `province`, `recent_year_in`, `recent_year_integerims`, `recent_year_out`, `recent_year_out_tims`, `registered_capital`, `registered_date`, `role`, `scale`, `substitute_tims`, `team`, `tel`, `this_year_amt`, `this_year_eva`, `this_year_in`, `this_year_substitute_amt`, `this_year_substitute_tims`, `this_year_tims`, `three_month_core_com_in`, `three_month_core_com_out`, `trade_mons`, `un_cmb_amt`) VALUES ('APRG21246', '科兴科学园A1栋49楼', 0.1422, 3208132746360.17, '某上市企业总部', '漳州', '合伙', 8619650727872.58, '某医药技术有限公司', 6624346214937.97, '4888864469', '龙文区', 2319401156645.59, 8648101521823.08, 4026817601311.52, 2791285180576.86, '电力、热力、燃气及水生产和供应业', '大健康交易链', '是', '是', '是', '否', '是', '否', '否', '总部', 1421134076032.25, 6948196537024.35, 4534195429054.29, 220191634435.78, 2, 8460047551335.62, '北京燕京啤酒股份有限公司', 6320618506709.96, '福建', 7848516990131.7, NULL, 2512926179345.88, 760435, 1327348247660.02, '20130121', '供应商', '中型', '是', '战略客户团队', '18308778888', 7538773630340.53, 3520434718096.73, 1607690495836.96, 7458777479144.64, 216257, 855652, 6486558126565.19, 5002169133815.36, 3, 2213873926430.35);
            SET @num = @num + 1;
        END WHILE;
    END

Then run the stored procedure:

    call NewProc();

Then open http://localhost:8080/down/download_csv?coreComId=APRG21246 in a browser. At the same time, run top in a terminal or watch the java process in Task Manager: memory usage stays at a fairly low level throughout.

The test machine is modest: 8 GB of RAM and a 2.3 GHz Intel Core i5. CPU usage peaked at roughly 70%-80%.
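Besides top or Task Manager, heap usage can also be read from inside the JVM, which makes it easy to log during an export. The following is a minimal sketch using java.lang.Runtime; the class name HeapUsageSketch and its methods are hypothetical helpers and not part of the demo project.

```java
final class HeapUsageSketch {

    private static final long MB = 1024L * 1024L;

    /** Heap currently in use by the JVM, in megabytes. */
    static long usedHeapMb() {
        Runtime rt = Runtime.getRuntime();
        return (rt.totalMemory() - rt.freeMemory()) / MB;
    }

    /** Upper limit the heap may grow to (see -Xmx), in megabytes. */
    static long maxHeapMb() {
        return Runtime.getRuntime().maxMemory() / MB;
    }

    public static void main(String[] args) {
        System.out.println("heap used: " + usedHeapMb() + " MB of max " + maxHeapMb() + " MB");
    }
}
```

Calling usedHeapMb() every few thousand rows from the row callback would show whether heap usage stays flat while the export runs. Note that top reports the memory of the whole process, which also includes non-heap memory, so the two numbers will not match exactly.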

The experiment confirms that this approach avoids OOM and successfully exports the CSV, although the resulting file is still fairly large.