Remotely Monitoring Multiple Servers and Database Replication Status with Python

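My day-to-day work involves remotely monitoring several servers and checking whether their databases are in sync. Querying each machine by hand through CRT is tedious, and switching between databases to compare replication state is just as painful. Repetitive work like this is best handed to Python.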

Configuration file

A list stores the connection details for the servers and databases at each site.

config = [{
    "server_name": "Project 1",
    "server_1": {
        "name": "Server 1",
        "host": "1.1.1.1",
        "port": 2022,
        "user": "xxx",
        "passwd": "xxx"
    },
    "server_2": {
        "name": "Server 2",
        "host": "2.2.2.2",
        "port": 2022,
        "user": "xxx",
        "passwd": "xxx"
    },
    "db_1": {
        # Jump host used to reach this database
        "ssh": {
            "host": "5.5.5.5",
            "port": 2022,
            "user": "xxx",
            "passwd": "xxx"
        },
        "name": "Database 1",
        "host": "3.3.3.3",
        "port": 3306,
        "db": "db_name1",
        "user": "xxx",
        "passwd": "xxx"
    },
    "db_2": {
        "ssh": {
            "host": "5.5.5.5",
            "port": 2022,
            "user": "xxx",
            "passwd": "xxx"
        },
        "name": "Database 2",
        "host": "4.4.4.4",
        "port": 3306,
        "db": "db_name1",
        "user": "xxx",
        "passwd": "xxx"
    },
}]
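Because every project entry uses numbered keys (server_1, db_1, and so on), the entries can be grouped by key prefix instead of being listed by hand. The following is only a minimal sketch under that naming assumption; `split_entries` is a hypothetical helper and not part of the original script.

```python
def split_entries(project):
    """Group one project's entries into servers and databases by key prefix."""
    servers, databases = [], []
    for key, value in project.items():
        # "server_name" also starts with "server_", so skip non-dict values
        if not isinstance(value, dict):
            continue
        if key.startswith("server_"):
            servers.append(value)
        elif key.startswith("db_"):
            databases.append(value)
    return servers, databases
```

With this, adding a third server to a project only requires a new "server_3" entry in the config.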

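Database connection method

Key point: some of the databases can only be reached through a jump host, which requires the sshtunnel module. In my experience, once the database is connected through the SSH tunnel, the main thread blocks for as long as the tunnel stays open, and only one tunnel could be open at a time: creating several objects to open several tunnels raised errors. I hit one pitfall after another trying to wrap MySQL in a utility class the way I used to, so I wrote a single function instead. It connects to the database, executes the SQL statement immediately, then closes the connection and the SSH tunnel.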

from sshtunnel import SSHTunnelForwarder, BaseSSHTunnelForwarderError
import pymysql


def _execute(sql, label, **conn_kwargs):
    # Connect, run one statement, and always close the connection afterwards.
    conn = pymysql.connect(**conn_kwargs)
    print("Connected to database %s" % label)
    try:
        with conn.cursor() as cursor:
            cursor.execute(sql)
            return cursor.fetchall()
    finally:
        conn.close()


def query(host='', user='', passwd='', db='', port=3306, charset='utf8', ssh_info=None, sql=""):
    """Run one SQL statement and return all rows, or None if the connection or query fails."""
    common = dict(
        user=user,  # database user
        password=passwd,  # database password
        database=db,  # database name
        charset=charset
    )
    try:
        if not ssh_info:
            # Direct connection, no jump host
            return _execute(sql, host, host=host, port=port, **common)
        # The with block starts the tunnel on entry and stops it on exit,
        # so no explicit start(), stop() or close() is needed.
        with SSHTunnelForwarder(
            ssh_address_or_host=(ssh_info["host"], ssh_info["port"]),  # jump host IP and SSH port
            ssh_username=ssh_info["user"],  # jump host user
            ssh_password=ssh_info["passwd"],  # jump host password
            remote_bind_address=(host, port)  # database IP and port
        ) as server:
            # Through the tunnel the host must be 127.0.0.1 and the port the local end of the tunnel
            return _execute(sql, host, host="127.0.0.1", port=server.local_bind_port, **common)
    except (BaseSSHTunnelForwarderError, pymysql.MySQLError) as exc:
        print("Query against database %s failed: %s" % (host, exc))
        return None
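To show how one database entry from the config could be passed to the function above, here is a small sketch. `query_kwargs` is a hypothetical helper introduced only for illustration; it assumes the config keys shown earlier and treats a missing "ssh" key as "no jump host needed".

```python
def query_kwargs(db_info):
    """Map one database entry from the config onto query()'s keyword arguments."""
    return {
        "host": db_info["host"],
        "port": db_info.get("port", 3306),
        "user": db_info["user"],
        "passwd": db_info["passwd"],
        "db": db_info["db"],
        # None selects the direct-connection branch of query()
        "ssh_info": db_info.get("ssh"),
    }


# Usage (needs a reachable database, so it is left commented out):
# rows = query(sql="select 1", **query_kwargs(db_info))
```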

Monitoring logic

To connect to a server over SSH from Python you could use the ssh module, but it is no longer maintained, so I use paramiko instead.

1. Server status

CPU

Once connected, query the server status with Linux commands:

top -bn1|grep 'Cpu'| awk '{print $5}'

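This uses top, the performance monitoring command. -b runs it in batch mode and -n1 makes it print a single iteration and exit; without them, grep and awk cannot process the output properly.

grep 'Cpu' selects the CPU summary line.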


awk '{print $5}' prints the fifth column, here '89.6%id', which means the CPU is 89.6% idle, so current usage is only 10.4%.
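The position of the idle field depends on the top version: older procps releases print values such as `89.6%id`, while newer procps-ng releases print `89.6 id`, which shifts the awk columns. As a hedged alternative, the whole Cpu line can be fetched with grep alone and parsed in Python. `parse_cpu_idle` is a hypothetical helper, and the sample lines in the usage note are illustrative rather than captured from the servers in this post.

```python
import re

# A number, an optional '%', then the "id" label
_IDLE_FIELD = re.compile(r"(\d+(?:\.\d+)?)\s*%?\s*id\b")


def parse_cpu_idle(top_line):
    """Return the idle percentage from a top 'Cpu(s)' summary line as a float."""
    match = _IDLE_FIELD.search(top_line)
    if match is None:
        raise ValueError("no idle field found in: %r" % top_line)
    return float(match.group(1))
```

For example, both `Cpu(s):  9.1%us,  1.0%sy,  0.3%ni, 89.6%id,  0.0%wa` and `%Cpu(s):  9.1 us,  1.0 sy,  0.3 ni, 89.6 id,  0.0 wa` are matched by the same pattern, and usage is then 100 minus the returned value.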

Disk

df -h shows disk usage.

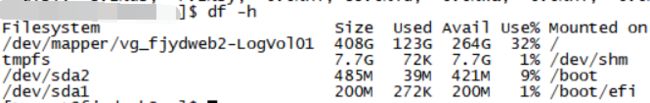

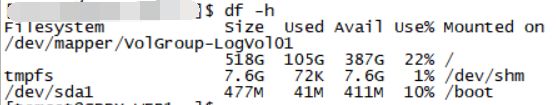

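These are the results of df -h on servers used by two different projects. On the second server the Filesystem column wraps onto its own line, so the Use% value lands in a different column than on the first server. The only option is to extract both candidate columns and let the code decide which one holds the value.

So the command becomes df -h|awk '{print $4,$5}'|grep '%'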
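Another way around the wrapped line is df's -P flag (POSIX output format), which keeps each filesystem on a single line, so the columns stay fixed. The sketch below parses `df -hP` output in Python and selects the row by mount point. `parse_df_usage` is a hypothetical helper, and picking the row by mount point is my assumption; the script in this post simply takes the first filesystem line.

```python
def parse_df_usage(df_output, mount_point="/"):
    """Return the Use% value (e.g. '22%') for mount_point from `df -hP` output."""
    for line in df_output.splitlines()[1:]:  # skip the header row
        fields = line.split()
        # POSIX layout: Filesystem, Size, Used, Avail, Use%, Mounted on
        if len(fields) >= 6 and " ".join(fields[5:]) == mount_point:
            return fields[4]
    return None  # mount point not present in the output
```

On the server side the command would then be just `df -hP`, with no awk or grep stage.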

Memory

free -m shows memory usage. top also reports free memory, but free -m directly shows the free + buffers + cache figure, which saves computing the sum by hand.

free -m |grep 'buffers/cache'|awk '{print $4}'
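Note that the "-/+ buffers/cache" line only exists in older versions of free; newer procps-ng versions drop it and add an "available" column to the Mem line instead. A sketch that handles both layouts by parsing the full `free -m` output in Python follows. `parse_available_mb` is a hypothetical helper, and the sample outputs used to check it are illustrative.

```python
def parse_available_mb(free_output):
    """Return available memory in MB from `free -m` output (old or new layout)."""
    lines = free_output.splitlines()
    # Old layout: "-/+ buffers/cache:  <used>  <free>"
    for line in lines:
        if "buffers/cache" in line:
            return int(line.split()[3])
    # New layout: the header names an "available" column on the Mem: row
    header = lines[0].split() if lines else []
    if "available" in header:
        column = 1 + header.index("available")  # +1 for the "Mem:" label
        for line in lines:
            if line.startswith("Mem:"):
                return int(line.split()[column])
    raise ValueError("unrecognised free output")
```

Dividing the result by 1024 with integer division gives whole gigabytes, as in the script's output line.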

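2. Database synchronization

Master-slave replication can normally be checked by running show master status on the master and show slave status on the slave. Our database setup is somewhat messy, though, and it is not always clear which machine is the master and which is the slave. So I use a crude approach: query the same record from the user activity log table in both databases, and if it can be matched, replication is working.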
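The comparison idea can be sketched in isolation. The example below uses sqlite3 in-memory databases purely as stand-ins so that it runs without a MySQL server; the table name `usage_log` and the helper names are hypothetical (the real table name is elided in this post). It also passes the values as query parameters rather than formatting them into the SQL string. sqlite3 uses `?` placeholders, whereas pymysql uses `%s` with the values passed as a second argument to `cursor.execute`.

```python
import sqlite3


def row_exists(conn, key):
    """Return True if the (id, userid, pageid) row is present in this database."""
    cur = conn.execute(
        "select 1 from usage_log where id=? and userid=? and pageid=?", key
    )
    return cur.fetchone() is not None


def is_in_sync(source, replica):
    """Take the newest row from source and check that replica contains it too."""
    latest = source.execute(
        "select id, userid, pageid from usage_log order by createtime desc limit 1"
    ).fetchone()
    # An empty source table gives nothing to compare, so report it as not in sync
    return latest is not None and row_exists(replica, latest)
```

One caveat with this approach: a row written a moment ago may not have replicated yet, so a single mismatch is a hint to look closer rather than proof that replication is broken.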

3. Code

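# coding=utf-8
import socket
import time

import paramiko

import mysql  # local module (mysql.py) holding the query() function from the previous section
import server_config  # local module (server_config.py) holding the config list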

def check_server(server_info_list):
    # Collect the status of every server first, then print one line per reachable server
    server_status_info = []
    for server in server_info_list:
        server_status_info.append(get_server_status(server))
    for server in server_status_info:
        if server:  # None means the connection failed
            print("Server %s: CPU idle %s%%\tdisk used %s\tavailable memory %sG"
                  % (server[0], server[1], server[2], server[3]))

def check_db(db_info_list):
    # The first database supplies a reference row; every later database must contain the same row
    sync_key = None
    for db_info in db_info_list:
        host = db_info['host']
        user = db_info['user']
        passwd = db_info['passwd']
        db = db_info['db']
        port = db_info['port']
        ssh_info = db_info.get('ssh')  # None when no jump host is configured
        if sync_key is None:
            command = "select id,userid,pageid from xxx order by createtime desc limit 500"
            sync_key = mysql.query(host=host, user=user, passwd=passwd, db=db, port=port, ssh_info=ssh_info,
                                   sql=command)
            if not sync_key:
                # None (connection failed) or an empty result: nothing to compare against
                print("Database connection failed or no rows returned")
                break
        else:
            # Look up the newest row of the first database in this one
            index_id, user_id, page_id = sync_key[0]
            command = "select * from xxx where id=%s and userid=%s and pageid=%s" % (index_id, user_id, page_id)
            res = mysql.query(host=host, user=user, passwd=passwd, db=db, port=port, ssh_info=ssh_info, sql=command)
            if res:
                print("Replication OK")
            else:
                print("Replication error")

def check(server_info):
    # Check both servers and both databases of one project
    server_name = server_info['server_name']
    server_info_list = [server_info['server_1'], server_info['server_2']]
    db_info_list = [server_info['db_1'], server_info['db_2']]
    print("\nChecking [%s]" % server_name)
    check_server(server_info_list)
    check_db(db_info_list)

def check_db_sync(db_obj_1, db_obj_2):
    # Not called below; it expects two database objects that expose a query(sql) method
    res_list = db_obj_1.query("select id,userid,pageid from xxx order by createtime desc limit 500")
    if res_list:
        index_id, user_id, page_id = res_list[0]
        res = db_obj_2.query("select * from xxx where id=%s and userid=%s and pageid=%s"
                             % (index_id, user_id, page_id))
        if res:
            print("Replication OK")
        else:
            print("Replication error")

def ssh_shell(client, command):
    # Run a command on the remote host and return its stdout as stripped text
    stdin, stdout, stderr = client.exec_command(command)
    return stdout.read().decode('utf-8').strip()

def get_server_status(server_info):
    name = server_info['name']
    ip = server_info['host']
    port = server_info['port']
    user = server_info['user']
    password = server_info['passwd']
    ssh_client = paramiko.SSHClient()
    # Accept unknown host keys automatically
    ssh_client.set_missing_host_key_policy(paramiko.AutoAddPolicy())
    try:
        ssh_client.connect(ip, int(port), user, password, timeout=10)
    except paramiko.ssh_exception.AuthenticationException:
        print("Login failed: wrong username or password")
        return
    except socket.timeout:
        print("Connection timed out; check whether a VPN is required")
        return
    try:
        # CPU idle percentage
        cpu_raw = ssh_shell(ssh_client, "top -bn1|grep 'Cpu'| awk '{print $5}'")
        cpu = cpu_raw.split("%")[0]
        # Disk usage
        hard_disk_raw = ssh_shell(ssh_client, "df -h|awk '{print $4,$5}'|grep '%'")
        # Use% sits in a different column on different servers, so it is picked out here.
        # Line 0 is the df header, line 1 the first filesystem.
        hard_disk_raw_list = hard_disk_raw.split('\n')
        hard_disk_raw_tem_list = hard_disk_raw_list[1].split(" ")
        # Take whichever field ends with "%" instead of assuming that Avail ends with "G"
        hard_disk = [field for field in hard_disk_raw_tem_list if field.endswith("%")][0]
        # Available memory: MB converted to whole GB
        free_cache_raw = ssh_shell(ssh_client, "free -m |grep 'buffers/cache'|awk '{print $4}'")
        free_cache = int(free_cache_raw) // 1024
    finally:
        # Close the SSH session even if one of the commands fails
        ssh_client.close()
    res = (name, cpu, hard_disk, free_cache,)
    return res

def run():
    while True:
        print("Monitoring time: %s" % time.asctime())
        # Get the list of project configurations
        server_config_list = server_config.config
        for obj in server_config_list:
            check(obj)
        print("Waiting for the next monitoring cycle")
        time.sleep(60 * 60)  # one hour


if __name__ == "__main__":
    run()
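Since the loop is meant to run unattended for hours, it can help to keep an unexpected error in one project from stopping the whole monitor. The sketch below shows one way a single cycle could isolate failures. `run_cycle` and its `check_fn` parameter are hypothetical names, not part of the script above; `check_fn` would be the `check` function.

```python
def run_cycle(projects, check_fn):
    """Run check_fn on every project and return the names of projects whose check raised."""
    failed = []
    for project in projects:
        name = project.get("server_name")
        try:
            check_fn(project)
        except Exception as exc:  # keep the monitoring loop alive whatever goes wrong
            print("Check failed for %s: %s" % (name, exc))
            failed.append(name)
    return failed
```

Inside `run()`, the inner for loop could then be replaced by a call such as `run_cycle(server_config.config, check)`.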