Scheduled Offline Data Synchronization with Spring Boot, DataX, and XXL-JOB in Practice

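Many real-world systems need scheduled offline data synchronization. A typical case: once the production database and the operations database have been separated, offline reconciliation is performed on the operations database.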

This article describes one way to do it: integrating DataX and XXL-JOB into a Spring Boot project to synchronize offline data on a schedule.

1. Integrate XXL-JOB

Integrating XXL-JOB requires the xxl-job-admin and xxl-job-core modules. Add the following to the main project's configuration:

    <modules>
        <module>xxl-job-admin</module>
        <module>xxl-job-core</module>
    </modules>

2. Integrate DataX

Add the following to the main project's configuration:

    <modules>
        <module>datax-integration</module>
    </modules>

Then add the required dependencies. The final version of the main project's configuration is as follows:

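    <dependencies>
        <!-- JSON processing -->
        <dependency>
            <groupId>com.alibaba</groupId>
            <artifactId>fastjson</artifactId>
            <version>1.2.56</version>
        </dependency>
        <dependency>
            <groupId>commons-configuration</groupId>
            <artifactId>commons-configuration</artifactId>
            <version>1.10</version>
        </dependency>
        <dependency>
            <groupId>commons-cli</groupId>
            <artifactId>commons-cli</artifactId>
            <version>1.3.1</version>
        </dependency>
        <dependency>
            <groupId>commons-beanutils</groupId>
            <artifactId>commons-beanutils</artifactId>
            <version>1.9.3</version>
        </dependency>
        <dependency>
            <groupId>org.apache.commons</groupId>
            <artifactId>commons-lang3</artifactId>
            <version>3.6</version>
        </dependency>
        <dependency>
            <groupId>commons-io</groupId>
            <artifactId>commons-io</artifactId>
            <version>2.6</version>
        </dependency>
        <dependency>
            <groupId>org.apache.httpcomponents</groupId>
            <artifactId>httpclient</artifactId>
            <version>4.4</version>
        </dependency>
        <dependency>
            <groupId>org.apache.httpcomponents</groupId>
            <artifactId>fluent-hc</artifactId>
            <version>4.4</version>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <!-- lombok -->
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <version>1.16.12</version>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-aop</artifactId>
        </dependency>
        <dependency>
            <groupId>mysql</groupId>
            <artifactId>mysql-connector-java</artifactId>
            <version>8.0.15</version>
        </dependency>
        <dependency>
            <groupId>com.alibaba</groupId>
            <artifactId>druid</artifactId>
            <version>1.1.18</version>
        </dependency>
        <dependency>
            <groupId>com.google.guava</groupId>
            <artifactId>guava</artifactId>
            <version>16.0.1</version>
        </dependency>
        <!-- slf4j -->
        <dependency>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-log4j12</artifactId>
            <version>1.7.28</version>
        </dependency>
    </dependencies>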

3. In the data synchronization service (the datax-integration project), add the dependencies for the data synchronization components

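    <dependencies>
        <dependency>
            <groupId>com.alibaba.datax</groupId>
            <artifactId>datax-common</artifactId>
            <version>0.0.1-SNAPSHOT</version>
            <scope>system</scope>
            <systemPath>${project.basedir}/src/main/webapp/WEB-INF/lib/datax-common-0.0.1-SNAPSHOT.jar</systemPath>
        </dependency>
        <dependency>
            <groupId>com.alibaba.datax</groupId>
            <artifactId>datax-core</artifactId>
            <version>0.0.1-SNAPSHOT</version>
            <scope>system</scope>
            <systemPath>${project.basedir}/src/main/webapp/WEB-INF/lib/datax-core-0.0.1-SNAPSHOT.jar</systemPath>
        </dependency>
        <dependency>
            <groupId>com.alibaba.datax</groupId>
            <artifactId>mysqlreader</artifactId>
            <version>0.0.1-SNAPSHOT</version>
            <scope>system</scope>
            <systemPath>${project.basedir}/src/main/webapp/WEB-INF/lib/mysqlreader-0.0.1-SNAPSHOT.jar</systemPath>
        </dependency>
        <dependency>
            <groupId>com.alibaba.datax</groupId>
            <artifactId>mysqlwriter</artifactId>
            <version>0.0.1-SNAPSHOT</version>
            <scope>system</scope>
            <systemPath>${project.basedir}/src/main/webapp/WEB-INF/lib/mysqlwriter-0.0.1-SNAPSHOT.jar</systemPath>
        </dependency>
        <dependency>
            <groupId>com.alibaba.datax</groupId>
            <artifactId>plugin-rdbms-util</artifactId>
            <version>0.0.1-SNAPSHOT</version>
            <scope>system</scope>
            <systemPath>${project.basedir}/src/main/webapp/WEB-INF/lib/plugin-rdbms-util-0.0.1-SNAPSHOT.jar</systemPath>
        </dependency>
        <!-- xxl-job-core -->
        <dependency>
            <groupId>com.xuxueli</groupId>
            <artifactId>xxl-job-core</artifactId>
            <version>1.0-SNAPSHOT</version>
        </dependency>
    </dependencies>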

4. In the datax-integration project, add the registration configuration for the distributed scheduler, so that the service registers itself with XXL-JOB on startup

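    import com.xxl.job.core.executor.impl.XxlJobSpringExecutor;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import org.springframework.beans.factory.annotation.Value;
    import org.springframework.context.annotation.Bean;
    import org.springframework.context.annotation.Configuration;

    @Configuration
    public class XxlJobConfig {

        private final Logger logger = LoggerFactory.getLogger(XxlJobConfig.class);

        // Address of the xxl-job-admin scheduling center
        @Value("${xxl.job.admin.addresses}")
        private String adminAddresses;

        // Executor identity and network settings
        @Value("${xxl.job.executor.appname}")
        private String appName;

        @Value("${xxl.job.executor.ip}")
        private String ip;

        @Value("${xxl.job.executor.port}")
        private int port;

        @Value("${xxl.job.accessToken}")
        private String accessToken;

        // Executor log location and retention
        @Value("${xxl.job.executor.logpath}")
        private String logPath;

        @Value("${xxl.job.executor.logretentiondays}")
        private int logRetentionDays;

        // The executor is started and destroyed together with the Spring context
        @Bean(initMethod = "start", destroyMethod = "destroy")
        public XxlJobSpringExecutor xxlJobExecutor() {
            logger.info(">>>>>>>>>>> xxl-job config init.");
            XxlJobSpringExecutor xxlJobSpringExecutor = new XxlJobSpringExecutor();
            xxlJobSpringExecutor.setAdminAddresses(adminAddresses);
            xxlJobSpringExecutor.setAppName(appName);
            xxlJobSpringExecutor.setIp(ip);
            xxlJobSpringExecutor.setPort(port);
            xxlJobSpringExecutor.setAccessToken(accessToken);
            xxlJobSpringExecutor.setLogPath(logPath);
            xxlJobSpringExecutor.setLogRetentionDays(logRetentionDays);
            return xxlJobSpringExecutor;
        }
    }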
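The configuration class above reads seven properties through `@Value`. The article does not show the properties file itself, so the fragment below is only a sketch of what it might look like: the keys come from the code above, while every value (admin URL, application name, port, log path, retention period) is a placeholder assumption to be replaced with the settings of your own environment.

```properties
# Placeholder values: assumptions for illustration, not taken from the article
xxl.job.admin.addresses=http://127.0.0.1:8080/xxl-job-admin
xxl.job.executor.appname=datax-integration
# Left empty here on the assumption that the executor can determine its own address
xxl.job.executor.ip=
xxl.job.executor.port=9999
xxl.job.accessToken=
xxl.job.executor.logpath=/data/applogs/xxl-job/jobhandler
xxl.job.executor.logretentiondays=30
```

Whatever value is used for `xxl.job.executor.appname` has to match the executor that is registered in the admin console in step 8.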

5. Write the data synchronization configuration file

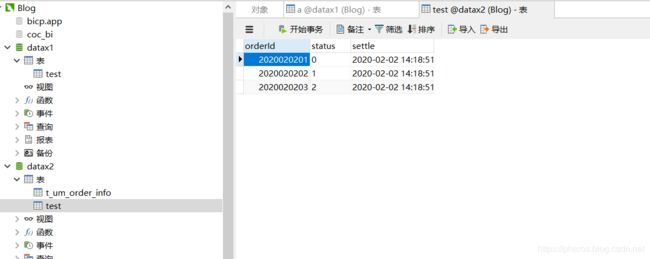

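To verify the result, this article sets up two databases, datax1 and datax2. datax1 contains a table named test that is preloaded with data; datax2 contains a table named test that is empty.

The DataX configuration file is as follows: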

    {
      "job": {
        "setting": {
          "speed": {
            "channel": 3
          },
          "errorLimit": {
            "record": 0,
            "percentage": 0.02
          }
        },
        "content": [
          {
            "reader": {
              "name": "mysqlreader",
              "parameter": {
                "username": "root",
                "password": "****",
                "column": [
                  "orderId",
                  "status",
                  "settle"
                ],
                "splitPk": "id",
                "connection": [
                  {
                    "table": [
                      "test"
                    ],
                    "jdbcUrl": [
                      "jdbc:mysql://localhost:3306/datax1?useUnicode=true&characterEncoding=utf-8"
                    ]
                  }
                ]
              }
            },
            "writer": {
              "name": "mysqlwriter",
              "parameter": {
                "writeMode": "insert",
                "username": "root",
                "password": "****",
                "column": [
                  "orderId",
                  "status",
                  "settle"
                ],
                "session": [
                  "set session sql_mode='ANSI'"
                ],
                "preSql": [
                  "delete from test"
                ],
                "connection": [
                  {
                    "jdbcUrl": "jdbc:mysql://localhost:3306/datax2?useUnicode=true&characterEncoding=utf-8",
                    "table": [
                      "test"
                    ]
                  }
                ]
              }
            }
          }
        ]
      }
    }

6. Write the data synchronization job code
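The article does not include the job code for this step. As a rough sketch of one piece of it, the class below builds the command-line style arguments that DataX's standalone launcher is normally given (`-mode standalone -jobid -1 -job <file>`). The class name `DataxLauncher`, the method `buildArgs`, and the default path `job/test.json` are hypothetical names introduced here for illustration only.

```java
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper, not part of the original article.
final class DataxLauncher {

    /** Builds the arguments for a standalone DataX run of a single job file. */
    static String[] buildArgs(Path jobFile) {
        return new String[] {
            "-mode", "standalone",
            "-jobid", "-1",
            "-job", jobFile.toString()
        };
    }

    public static void main(String[] args) {
        // "job/test.json" is an assumed location for the file written in step 5.
        Path jobFile = Paths.get(args.length > 0 ? args[0] : "job/test.json");
        String[] dataxArgs = buildArgs(jobFile);
        // Print the arguments so they can be inspected before wiring in DataX.
        System.out.println(String.join(" ", dataxArgs));
    }
}
```

Inside an XXL-JOB handler, such an array would presumably be passed to `com.alibaba.datax.core.Engine.entry(String[])` after the `datax.home` system property has been pointed at a directory containing the DataX `conf` and `plugin` folders. How the handler itself is declared depends on the xxl-job-core version in use, so that part is left out here.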

7. Start the distributed scheduling management platform

8. Add an executor that matches the configuration of the datax-integration project

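9. In the job management section, select the newly created executor and add a scheduled job

In the JobHandler field, enter the job information from the code written in step 6. Fill in the remaining parameters and save.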

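10. Run the job once and check the synchronization result

The check confirms that the data has been synchronized from the production database to the operations database.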
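One simple way to make this check repeatable is to compare the row counts of the test table in datax1 and datax2 over JDBC. The sketch below assumes the MySQL driver is on the classpath, reuses the JDBC URLs and the root user from the DataX configuration file, and reads the password from an environment variable named `DB_PASSWORD`; the class `SyncCheck` and that variable name are hypothetical. Equal row counts are only a coarse signal, not a full reconciliation.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;

// Hypothetical helper, not part of the original article.
final class SyncCheck {

    /** Builds the count query. The table name is a trusted constant here, never user input. */
    static String countSql(String table) {
        return "SELECT COUNT(*) FROM " + table;
    }

    /** Opens a connection, counts the rows of one table, and closes everything again. */
    static long countRows(String jdbcUrl, String user, String password, String table)
            throws SQLException {
        try (Connection conn = DriverManager.getConnection(jdbcUrl, user, password);
             Statement stmt = conn.createStatement();
             ResultSet rs = stmt.executeQuery(countSql(table))) {
            rs.next(); // COUNT(*) always returns exactly one row
            return rs.getLong(1);
        }
    }

    public static void main(String[] args) throws SQLException {
        String password = System.getenv("DB_PASSWORD"); // assumed environment variable
        long source = countRows("jdbc:mysql://localhost:3306/datax1", "root", password, "test");
        long target = countRows("jdbc:mysql://localhost:3306/datax2", "root", password, "test");
        System.out.println("datax1.test rows: " + source);
        System.out.println("datax2.test rows: " + target);
        System.out.println(source == target ? "Row counts match" : "Row counts differ");
    }
}
```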