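Deploying a Two-Node Kubernetes Cluster with kubeadm, Plus the Dashboard

Server configuration

CentOS 7.2 on VMware, 2 GB RAM and 2 CPU cores per VM

192.168.200.128 master (add more masters for high availability)

192.168.200.132 node (more nodes can be added)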

1. Basic environment configuration

1.1 Configure host mappings

First, edit /etc/hosts on all nodes:

[root@master ~]# vi /etc/hosts

192.168.200.128 master

192.168.200.132 node

1.2 Configure the firewall and SELinux

[root@master ~]# systemctl stop firewalld && systemctl disable firewalld

[root@master ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

Then reboot the machine.

1.3 Disable swap

[root@master ~]# swapoff -a && sed -i 's|^/dev/mapper/centos-swap|#&|' /etc/fstab

1.4 Configure time synchronization

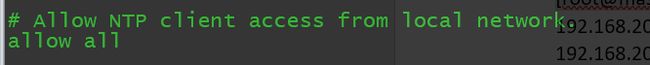

[root@master ~]# yum install -y chrony

On the master node, comment out the default upstream servers:

[root@master ~]# sed -i 's/^server/#&/' /etc/chrony.conf

[root@master ~]# systemctl enable chronyd && systemctl restart chronyd

[root@master ~]# timedatectl set-ntp true

Then, on the node, point chrony at the master as the upstream NTP server:

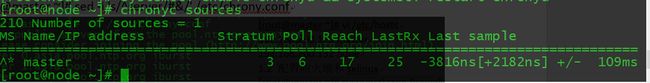

[root@node ~]# echo server 192.168.200.128 iburst >> /etc/chrony.conf
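Because `>>` appends unconditionally, running the step above a second time leaves a duplicate `server` line in the file. A minimal sketch of an idempotent variant follows. `add_chrony_server` is a hypothetical helper name, and its optional second argument exists only so the function can be tried against a scratch file.

```shell
#!/usr/bin/env bash
# Append a chrony "server" line only if the exact line is not already present.
# add_chrony_server is an illustrative helper, not part of chrony itself.
add_chrony_server() {
    local server="$1"
    local conf="${2:-/etc/chrony.conf}"
    local line="server ${server} iburst"
    # -q: quiet, -x: match the whole line, -F: treat the pattern as a fixed string
    if ! grep -qxF "$line" "$conf"; then
        echo "$line" >> "$conf"
    fi
}

# On the node:
#   add_chrony_server 192.168.200.128
```

Once chronyd has been restarted, `chronyc sources` lists the sources it is using. Note also that chronyd only answers clients matched by an `allow` directive, so the master's /etc/chrony.conf would likely need a line such as `allow 192.168.200.0/24` (the subnet is an assumption about this lab), plus `local stratum 10` if the master has no upstream of its own.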

[root@node ~]# systemctl enable chronyd && systemctl restart chronyd

1.5 Configure IP forwarding

Run on all nodes:

[root@master ~]# cat << EOF | tee /etc/sysctl.d/k8s.conf
> net.ipv4.ip_forward=1
> net.bridge.bridge-nf-call-ip6tables=1
> net.bridge.bridge-nf-call-iptables=1
> EOF

[root@node ~]# modprobe br_netfilter

[root@node ~]# sysctl -p /etc/sysctl.d/k8s.conf

1.6 Configure IPVS

Perform on all nodes. Create /etc/sysconfig/modules/ipvs.modules with the following content:

[root@master ~]# cat /etc/sysconfig/modules/ipvs.modules
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4

Then load the modules and check that the required kernel modules are all present:

[root@master ~]# chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

[root@master ~]# yum install -y ipset ipvsadm

1.7 Install Docker

Perform on all nodes:

[root@master ~]# yum install -y wget

[root@master ~]# wget -O /etc/yum.repos.d/CentOS-Base.repo

[root@master ~]# yum -y install yum-utils

[root@master ~]# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@master ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@master ~]# yum install docker-ce-18.09.6 docker-ce-cli-18.09.6 containerd.io -y

[root@master ~]# mkdir -p /etc/docker[root@master ~]# tee /etc/docker/daemon.json <<- 'EOF'

{

"registry-mirrors" :["https://5twf62k1.mirror.aliyuncs.com"],

"exec-opts":["native.cgroupdriver=systemd"]

}

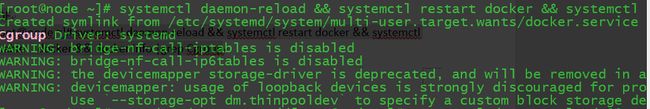

EOF[root@node ~]# systemctl daemon-reload && systemctl restart docker && systemctl enable docker && docker info |grep Cgroup 2安装k8s集群
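Before installing the Kubernetes packages, it may be worth confirming on every node that Docker really picked up the `systemd` cgroup driver from daemon.json, since the kubelet and Docker need to agree on the driver. The sketch below turns the `docker info | grep Cgroup` eyeball check into a pass/fail check. `require_systemd_cgroup` is a hypothetical helper, and it takes the driver name as an argument so it can be exercised without a Docker daemon.

```shell
#!/usr/bin/env bash
# Succeed only when the reported cgroup driver is "systemd".
# require_systemd_cgroup is an illustrative helper, not a Docker command.
require_systemd_cgroup() {
    local driver="$1"
    if [ "$driver" = "systemd" ]; then
        echo "cgroup driver OK: ${driver}"
    else
        echo "unexpected cgroup driver: '${driver}' (expected systemd)" >&2
        return 1
    fi
}

# On a node with Docker running:
#   require_systemd_cgroup "$(docker info --format '{{.CgroupDriver}}')"
```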

2.1 Configure the Kubernetes repository

Run on all nodes:

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

2.2 Install kubeadm

Run on all nodes:

[root@master ~]# yum install kubelet-1.14.1 kubeadm-1.14.1 kubectl-1.14.1 -y

[root@master ~]# systemctl enable kubelet && systemctl start kubelet

2.3 Initialize Kubernetes
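`kubeadm init` runs preflight checks and by default refuses to continue while swap is active, so after the reboot it can help to confirm that the /etc/fstab change from section 1.3 actually held. A minimal sketch, assuming the usual layout of /proc/swaps (one header line, then one line per active swap area). `swap_is_off` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Return success when no swap area is active.
# /proc/swaps has one header line; every further non-empty line is an active swap area.
swap_is_off() {
    local swaps_file="${1:-/proc/swaps}"
    awk 'NR > 1 && NF > 0 { active = 1 } END { exit (active ? 1 : 0) }' "$swaps_file"
}

# On each node:
#   swap_is_off && echo "swap is off" || echo "swap is still on"
```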

On the master node:

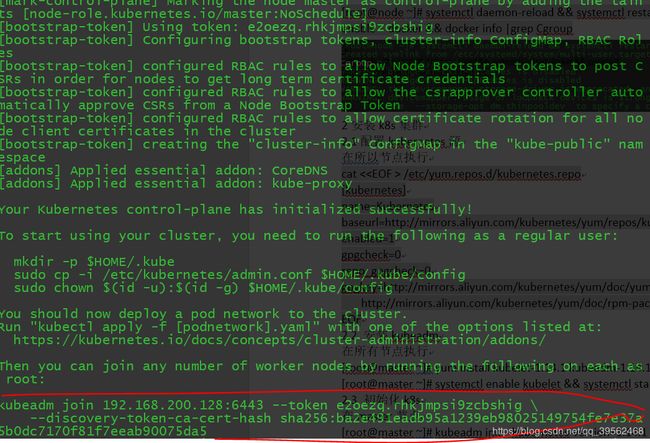

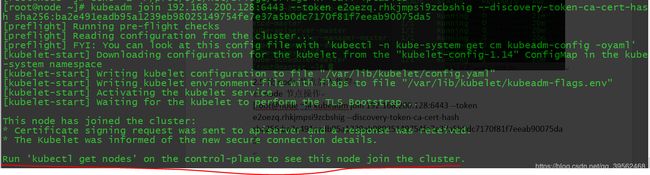

[root@master ~]# kubeadm init --kubernetes-version=1.14.1 --apiserver-advertise-address 192.168.200.128 --pod-network-cidr=10.244.0.0/21 --image-repository=registry.aliyuncs.com/google_containers

Note that the part of the output marked in red is the command a node uses to join the cluster. Be sure to save it!

kubeadm join 192.168.200.128:6443 --token e2oezq.rhkjmpsi9zcbshig \

--discovery-token-ca-cert-hash sha256:ba2e491eadb95a1239eb98025149754fe7e37a5b0dc7170f81f7eeab90075da5
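The token in this command does not last forever: kubeadm bootstrap tokens expire after 24 hours by default. If the saved command is lost or has expired, the hash part can be recomputed from the cluster CA certificate on the master. The sketch below wraps the openssl pipeline given in the kubeadm documentation. `ca_cert_hash` is a hypothetical helper name, and the pipeline assumes an RSA CA key, which is what kubeadm generates by default.

```shell
#!/usr/bin/env bash
# Print the value for --discovery-token-ca-cert-hash: the SHA-256 digest of the
# CA certificate's public key (DER-encoded), prefixed with "sha256:".
ca_cert_hash() {
    local ca_cert="${1:-/etc/kubernetes/pki/ca.crt}"
    local digest
    digest=$(openssl x509 -pubkey -noout -in "$ca_cert" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //')
    printf 'sha256:%s\n' "$digest"
}

# On the master:
#   ca_cert_hash
```

Alternatively, `kubeadm token create --print-join-command` on the master prints a complete, fresh join command, and `kubeadm token list` shows when existing tokens expire.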

kubectl会默认在执行的用户home目录下面的.kube目录下寻找config文件配置kubectl工具

[root@master ~]# mkdir -p $HOME/.kube

[root@master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

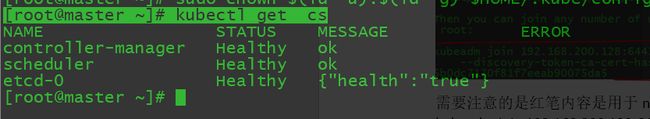

[root@master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config检查集群状态

[root@master ~]# kubectl get cs2.4 配置k8s网络

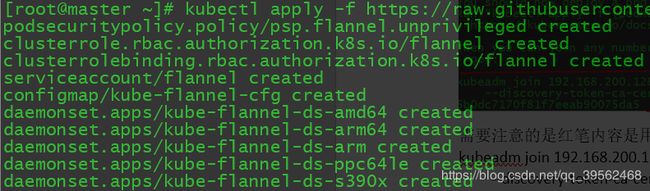

在master节点 部署flannel网络

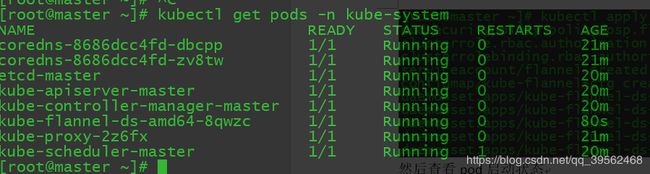

[root@master ~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml然后查看pod启动状态

[root@master ~]# kubectl get pods -n kube-system2.5使node节点加入集群
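Before running the join, it can help to confirm on the master that the flannel and CoreDNS pods have actually reached Running, rather than scanning the table by eye. A small filter, assuming the default column layout of `kubectl get pods` (NAME, READY, STATUS, ...). `not_running_pods` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Read `kubectl get pods --no-headers` output on stdin and print the names of
# pods whose STATUS column is neither Running nor Completed.
not_running_pods() {
    awk '$3 != "Running" && $3 != "Completed" { print $1 }'
}

# On the master:
#   kubectl get pods -n kube-system --no-headers | not_running_pods
# Empty output means every pod in the namespace is up.
```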

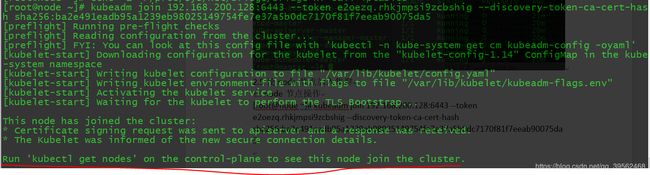

Perform on the node:

[root@node ~]# kubeadm join 192.168.200.128:6443 --token e2oezq.rhkjmpsi9zcbshig --discovery-token-ca-cert-hash sha256:ba2e491eadb95a1239eb98025149754fe7e37a5b0dc7170f81f7eeab90075da5

Note: I ran into the following error when joining the node:

[ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables contents are not set to 1

Fix: run echo "1" > /proc/sys/net/bridge/bridge-nf-call-iptables
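Writing to /proc takes effect immediately but is lost at the next reboot, so it is worth checking that the three values section 1.5 is meant to set are really in place. The sketch below reads them back. `check_k8s_sysctls` is a hypothetical helper name, and the optional argument lets it run against a directory other than /proc/sys for a dry run.

```shell
#!/usr/bin/env bash
# Report whether the kernel settings kubeadm expects are set to 1.
# Returns non-zero if any of them is missing or has another value.
check_k8s_sysctls() {
    local proc_root="${1:-/proc/sys}"
    local key path status=0
    for key in net/ipv4/ip_forward \
               net/bridge/bridge-nf-call-iptables \
               net/bridge/bridge-nf-call-ip6tables; do
        path="${proc_root}/${key}"
        if [ -r "$path" ] && [ "$(cat "$path")" = "1" ]; then
            echo "ok: ${key}"
        else
            echo "NOT set to 1: ${key}"
            status=1
        fi
    done
    return "$status"
}

# On each node:
#   check_k8s_sysctls
```

If any line reports a problem, `modprobe br_netfilter` followed by `sysctl --system` re-applies every file under /etc/sysctl.d, including k8s.conf.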

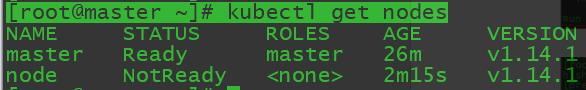

在master节点查看节点情况

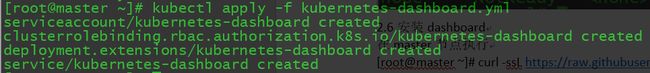

[root@master ~]# kubectl get nodes2.6安装dashboard

在master节点执行

[root@master ~]# kubectl apply -f kubernetes-dashboard.yamlps:群内有大佬给我说他自己做了个yaml可以看看。

wget http://down.i4t.com/k8s-passwd-dashboard.yaml| kubectl apply -f k8s-passwd-dashboard.yaml

这里感谢一下大佬https://i4t.com/3819.html

本次实验未用大佬的,我把我使用的单独复制出来:

[root@master ~]# cat kubernetes-dashboard.yaml
# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# Configuration to deploy release version of the Dashboard UI compatible with
# Kubernetes 1.8.
#
# Example usage: kubectl create -f

# ------------------- Dashboard Secret ------------------- #

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kube-system
type: Opaque

---
# ------------------- Dashboard Service Account ------------------- #

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system

---
# ------------------- Dashboard Role & Role Binding ------------------- #

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
rules:
  # Allow Dashboard to create 'kubernetes-dashboard2-key-holder' secret.
- apiGroups: [""]
  resources: ["secrets"]
  verbs: ["create"]
  # Allow Dashboard to create 'kubernetes-dashboard2-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["create"]
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
- apiGroups: [""]
  resources: ["secrets"]
  resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]
  verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard2-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  resourceNames: ["kubernetes-dashboard-settings"]
  verbs: ["get", "update"]
  # Allow Dashboard to get metrics from heapster.
- apiGroups: [""]
  resources: ["services"]
  resourceNames: ["heapster"]
  verbs: ["proxy"]
- apiGroups: [""]
  resources: ["services/proxy"]
  resourceNames: ["heapster", "http:heapster:", "https:heapster:"]
  verbs: ["get"]

---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard-minimal
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

---
# ------------------- Dashboard Deployment ------------------- #

kind: Deployment
apiVersion: apps/v1beta2
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
      - name: kubernetes-dashboard
        image: mirrorgooglecontainers/kubernetes-dashboard-amd64:v1.10.0
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          - --auto-generate-certificates
          - --authentication-mode=basic
          # Uncomment the following line to manually specify Kubernetes API server Host
          # If not specified, Dashboard will attempt to auto discover the API server and connect
          # to it. Uncomment only if the default does not work.
          # - --apiserver-host=http://my-address:port
        volumeMounts:
        - name: kubernetes-dashboard-certs
          mountPath: /certs
          # Create on-disk volume to store exec logs
        - mountPath: /tmp
          name: tmp-volume
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: kubernetes-dashboard-certs
        secret:
          secretName: kubernetes-dashboard-certs
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule

---
# ------------------- Dashboard Service ------------------- #

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  type: NodePort
  ports:
    - port: 80
      targetPort: 8443
      nodePort: 30000
  selector:
    k8s-app: kubernetes-dashboard

Next, create an admin user for the dashboard:

[root@master ~]# vi dashboard-adminuser.yaml

[root@master ~]# kubectl create -f dashboard-adminuser.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: admin-user

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: admin-user

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin

subjects:

- kind: ServiceAccount

name: admin-user

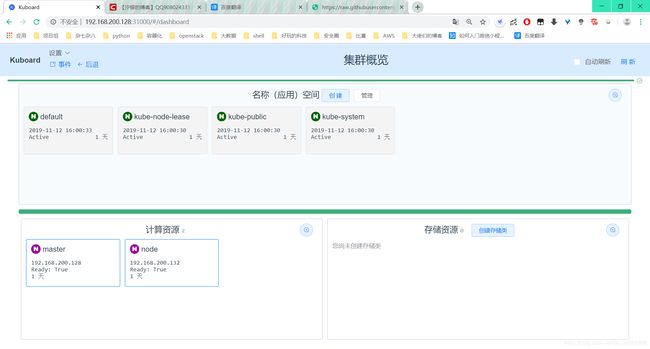

namespace: kube-system然后安装下kuboard.yaml

[root@master ~]# kubectl apply -f kuboard.yaml

[root@master ~]# cat kuboard.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kuboard
  namespace: kube-system
  annotations:
    k8s.eip.work/displayName: kuboard
    k8s.eip.work/ingress: "true"
    k8s.eip.work/service: NodePort
    k8s.eip.work/workload: kuboard
  labels:
    k8s.eip.work/layer: monitor
    k8s.eip.work/name: kuboard
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s.eip.work/layer: monitor
      k8s.eip.work/name: kuboard
  template:
    metadata:
      labels:
        k8s.eip.work/layer: monitor
        k8s.eip.work/name: kuboard
    spec:
      containers:
      - name: kuboard
        image: eipwork/kuboard:latest
        imagePullPolicy: IfNotPresent
---
apiVersion: v1
kind: Service
metadata:
  name: kuboard
  namespace: kube-system
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    targetPort: 80
    nodePort: 31000
  selector:
    k8s.eip.work/layer: monitor
    k8s.eip.work/name: kuboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kuboard-user
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kuboard-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kuboard-user
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kuboard-viewer
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kuboard-viewer
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: view
subjects:
- kind: ServiceAccount
  name: kuboard-viewer
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kuboard-viewer-node
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node
subjects:
- kind: ServiceAccount
  name: kuboard-viewer
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kuboard-viewer-pvp
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:persistent-volume-provisioner
subjects:
- kind: ServiceAccount
  name: kuboard-viewer
  namespace: kube-system
---
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: kuboard
  namespace: kube-system
  annotations:
    nginx.org/websocket-services: "kuboard"
    nginx.com/sticky-cookie-services: "serviceName=kuboard srv_id expires=1h path=/"
spec:
  rules:
  - host: kuboard.cn
    http:
      paths:
      - path: /
        backend:
          serviceName: kuboard
          servicePort: http

Then get the login token:

kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep kuboard-user | awk '{print $1}')
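The describe output mixes the token with the secret's name, type and other data fields, while only the value after `token:` is pasted into the login form. A small filter for pulling out just that value, assuming the usual `token:      <value>` line that `kubectl describe secret` prints for a service account token. `extract_token` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Read `kubectl describe secret` output on stdin and print only the token value.
extract_token() {
    awk '$1 == "token:" { print $2 }'
}

# On the master:
#   kubectl -n kube-system describe secret \
#       "$(kubectl -n kube-system get secret | grep kuboard-user | awk '{print $1}')" \
#       | extract_token
```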

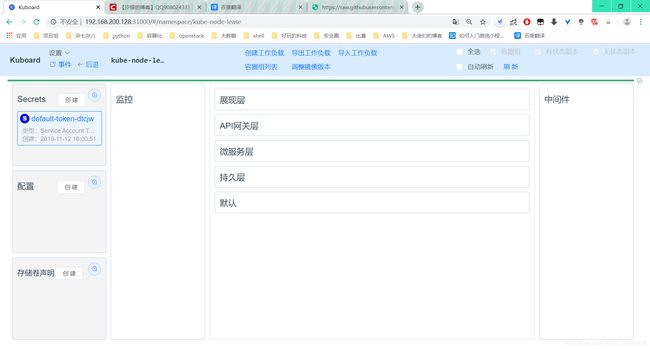

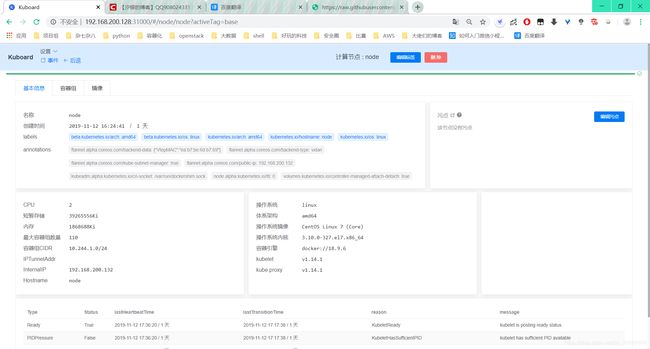

Open master:31000 in a browser and log in to access the interface.
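Port 31000 comes from the `nodePort` field of the kuboard Service in kuboard.yaml. If that value is ever changed, the address can be derived from the live Service rather than remembered. A minimal sketch follows. `kuboard_url` is a hypothetical helper name, and it assumes `kubectl` is already configured as in section 2.3.

```shell
#!/usr/bin/env bash
# Print the Kuboard URL for a given host, using the NodePort currently
# assigned to the kuboard Service in the kube-system namespace.
kuboard_url() {
    local host="$1"
    local port
    port=$(kubectl -n kube-system get service kuboard \
        -o jsonpath='{.spec.ports[0].nodePort}')
    echo "http://${host}:${port}"
}

# On the master:
#   kuboard_url master
```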