Kubernetes setup, part 21: Filebeat + ELK log monitoring

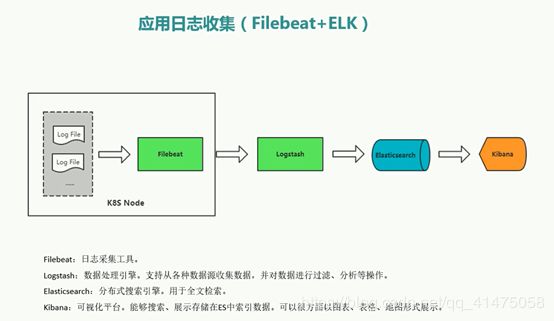

1、ELK流程图

2. Create another VM, 10.0.0.107, to host the ELK stack

Download the packages and extract them

[root@elk-107 ~]# mkdir /home/elk

[root@elk-107 ~]# cd /home/elk/

wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.1.1.tar.gz

wget https://artifacts.elastic.co/downloads/kibana/kibana-6.1.1-linux-x86_64.tar.gz

wget https://artifacts.elastic.co/downloads/logstash/logstash-6.1.1.tar.gz

ls | xargs -i tar zxvf {}

3. Install the Java runtime

yum install -y java-1.8.0-openjdk

4. Elasticsearch refuses to run as root, so create a dedicated elk user and run the stack as that user

useradd elk

chown -R elk:elk /home/elk/

su - elk

5. Start Elasticsearch as the elk user (-d runs it in the background as a daemon)

/home/elk/elasticsearch-6.1.1/bin/elasticsearch -d

[root@elk-107 ~]# netstat -tlunp | grep 9200

tcp6 0 0 127.0.0.1:9200 :::* LISTEN 2339/java

tcp6 0 0 ::1:9200 :::* LISTEN 2339/java
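The port can be listening a little before Elasticsearch actually answers requests, so a readiness check is handy before moving on. A minimal sketch, assuming `curl` is installed; `wait_for_es` is a hypothetical helper name, while `/_cluster/health` is the standard Elasticsearch cluster health API.

```shell
#!/usr/bin/env bash
# wait_for_es (hypothetical helper): poll the Elasticsearch cluster health API
# until it answers or the attempts run out.
# Assumes curl is installed; defaults to the local node started above.
wait_for_es() {
  local url="${1:-http://localhost:9200}"
  local attempts="${2:-30}"
  local i
  for ((i = 1; i <= attempts; i++)); do
    # -s: no progress output; -f: treat HTTP errors (>= 400) as failures
    if curl -sf "${url}/_cluster/health?pretty"; then
      return 0
    fi
    sleep 1
  done
  echo "no answer from ${url} after ${attempts} attempts" >&2
  return 1
}
```

Usage: `wait_for_es` checks the local node with the defaults; `wait_for_es http://localhost:9200 60` waits up to about a minute.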

6. Edit the Kibana configuration file

cd /home/elk/kibana-6.1.1-linux-x86_64/

vim config/kibana.yml

[root@elk-107 kibana-6.1.1-linux-x86_64]# grep -Ev "^$|^[#;]" config/kibana.yml

server.port: 5601
server.host: "0.0.0.0"
elasticsearch.url: "http://localhost:9200"

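server.port: Kibana listens on port 5601.

server.host: set to 0.0.0.0 so that Kibana can be opened from a browser on another machine later.

elasticsearch.url: Kibana connects to Elasticsearch at http://localhost:9200.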

7. Start Kibana

nohup /home/elk/kibana-6.1.1-linux-x86_64/bin/kibana &

9. Create the Logstash pipeline rules that receive, filter, and forward the logs

vim /home/elk/logstash-6.1.1/config/logstash.conf

Logstash receives the data from Filebeat, classifies each event by its tags, and then assigns an index per class, e.g. nginx-access-%{+YYYY.MM.dd}. Kibana later finds the logs by this index.

[root@elk-107 logstash-6.1.1]# cat /home/elk/logstash-6.1.1/config/logstash.conf

input {
  beats {
    port => 5044
  }
}

output {
  if "nginx" in [tags] {
    elasticsearch {
      hosts => "localhost:9200"
      index => "nginx-access-%{+YYYY.MM.dd}"
    }
  }
  if "wp-nginx" in [tags] {
    elasticsearch {
      hosts => "localhost:9200"
      index => "wp-nginx-access-%{+YYYY.MM.dd}"
    }
  }
  if "tomcat" in [tags] {
    elasticsearch {
      hosts => "localhost:9200"
      index => "tomcat-catalina-%{+YYYY.MM.dd}"
    }
  }
  stdout { codec => rubydebug }
}
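The output section routes purely on tags. Because `[tags]` is an array, `"nginx" in [tags]` tests for an exact element, so events tagged `wp-nginx` do not also land in `nginx-access-*`; and because the three `if` blocks are independent (not `else if`), an event carrying two of these tags would be written to both indices. The hypothetical shell helper below only mirrors that routing for illustration; it is not part of Logstash, and it takes the day as an argument, whereas Logstash derives it from the event's `@timestamp` in UTC.

```shell
#!/usr/bin/env bash
# index_for_tags (hypothetical helper, illustration only): print the
# Elasticsearch index name(s) the output section above would use for an event
# with the given tags. First argument: the day as YYYY.MM.dd.
index_for_tags() {
  local day="$1"
  shift
  local tag
  for tag in "$@"; do
    # Exact matches only, like `"nginx" in [tags]` on an array field.
    case "$tag" in
      nginx)    echo "nginx-access-${day}" ;;
      wp-nginx) echo "wp-nginx-access-${day}" ;;
      tomcat)   echo "tomcat-catalina-${day}" ;;
      *)        ;;  # no branch matches: the event is only printed by stdout
    esac
  done
}
```

Usage: `index_for_tags "$(date -u +%Y.%m.%d)" wp-nginx` prints today's wp-nginx index name.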

10. Start Logstash. It opens port 5044 to receive data from Filebeat (port 9600 is its local monitoring API)

/home/elk/logstash-6.1.1/bin/logstash -f /home/elk/logstash-6.1.1/config/logstash.conf &

[root@elk-107 logstash-6.1.1]# netstat -tlunp | grep 5044

tcp6 0 0 :::5044 :::* LISTEN 2896/java

[root@elk-107 logstash-6.1.1]# netstat -tlunp | grep 9600

tcp6 0 0 127.0.0.1:9600 :::* LISTEN 2896/java
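When logstash.conf is edited later, its syntax can be checked without starting the pipeline; Logstash 6.x provides the `--config.test_and_exit` flag (short form `-t`) for this. A minimal sketch; the helper name is hypothetical and the default paths are the ones used in this setup.

```shell
#!/usr/bin/env bash
# check_logstash_conf (hypothetical helper): validate a pipeline file with
# Logstash's --config.test_and_exit flag instead of starting the pipeline.
check_logstash_conf() {
  local ls_home="${1:-/home/elk/logstash-6.1.1}"
  local conf="${2:-${ls_home}/config/logstash.conf}"
  if [ ! -x "${ls_home}/bin/logstash" ]; then
    echo "logstash binary not found under ${ls_home}" >&2
    return 1
  fi
  if [ ! -r "${conf}" ]; then
    echo "cannot read ${conf}" >&2
    return 1
  fi
  # Exit status 0 means the configuration parsed successfully.
  "${ls_home}/bin/logstash" -f "${conf}" --config.test_and_exit
}
```

Run it as the elk user (`check_logstash_conf`) before restarting Logstash, so a typo does not take the pipeline down.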

To start the services at boot, make /etc/rc.d/rc.local executable:

chmod +x /etc/rc.d/rc.local

then append these lines to it, starting Elasticsearch first because Kibana and Logstash connect to it:

sudo -u elk /home/elk/elasticsearch-6.1.1/bin/elasticsearch -d >/dev/null 2>&1

sudo -u elk nohup /home/elk/kibana-6.1.1-linux-x86_64/bin/kibana >/tmp/kibana.log 2>&1 &

sudo -u elk nohup /home/elk/logstash-6.1.1/bin/logstash -f /home/elk/logstash-6.1.1/config/logstash.conf >/tmp/logstash.log 2>&1 &

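11. On the k8s master, create Filebeat. Logs with three tags are defined here: nginx and tomcat are for the test below, and wp-nginx is for the logs of the LNMP environment set up earlier.

The host's log directory /app-logs is mounted into the container at the same path, /app-logs, and Filebeat matches the logs in the corresponding subdirectories according to its rules.

After collecting the data, Filebeat pushes it to port 5044 of Logstash on the ELK machine 10.0.0.107 (configured above); Logstash then classifies the events by tag and assigns the index:

output.logstash:
  hosts: ['10.0.0.107:5044']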

mkdir elk

cd elk

vim filebeat-to-logstash.yaml

[root@k8s-master-101 elk]# cat filebeat-to-logstash.yaml

---
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-config
  namespace: kube-system
data:
  filebeat.yml: |-
    filebeat.prospectors:
    - type: log
      paths:
        - /app-logs/www-nginx/*.log
      tags: ["nginx"]
      fields_under_root: true
      fields:
        level: info
    - type: log
      paths:
        - /app-logs/wp-nginx/*.log
      tags: ["wp-nginx"]
      fields_under_root: true
      fields:
        level: info
    - type: log
      paths:
        - /app-logs/www-tomcat/catalina.out
        - /app-logs/www-tomcat/localhost_access_log*.txt
      multiline:
        pattern: '^\d+-\d+-\d+ \d+:\d+:\d+'
        negate: true
        match: after
      # exclude_lines: ['^DEBUG']
      # include_lines: ['^ERR','^WARN']
      tags: ["tomcat"]
      fields_under_root: true
      fields:
        level: info
    # processors:
    # - drop_fields:
    #     fields: ["beat.hostname","beat.name","beat.version","offset","prospector.type"]
    output.logstash:
      hosts: ['10.0.0.107:5044']
---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: filebeat
  namespace: kube-system
  labels:
    logs: filebeat
spec:
  template:
    metadata:
      labels:
        logs: filebeat
    spec:
      terminationGracePeriodSeconds: 30
      containers:
      - name: filebeat
        image: docker.elastic.co/beats/filebeat:6.1.1
        args: [
          "-c", "/usr/share/filebeat.yml",
          "-e",
        ]
        resources:
          limits:
            memory: 500Mi
          requests:
            cpu: 100m
            memory: 200Mi
        volumeMounts:
        - name: config
          mountPath: /usr/share/filebeat.yml
          subPath: filebeat.yml
        - name: data
          mountPath: /usr/share/filebeat/data
        - name: app-logs
          mountPath: /app-logs
        - name: timezone
          mountPath: /etc/localtime
      volumes:
      - name: config
        configMap:
          name: filebeat-config
      - name: data
        emptyDir: {}
      - name: app-logs
        hostPath:
          path: /app-logs
      - name: timezone
        hostPath:
          path: /etc/localtime

kubectl create -f filebeat-to-logstash.yaml

12. Pull the image on each node in advance; the pull sometimes fails, so retry a few times if it does

docker pull docker.elastic.co/beats/filebeat:6.1.1
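Since the pull sometimes fails, a small retry wrapper saves re-running the command by hand. A minimal sketch; `retry` and the `RETRY_DELAY` variable are hypothetical names, not part of Docker.

```shell
#!/usr/bin/env bash
# retry (hypothetical helper): run a command up to N times, pausing
# RETRY_DELAY seconds (default 5) between failed attempts.
retry() {
  local attempts="$1"
  shift
  local i
  for ((i = 1; i <= attempts; i++)); do
    if "$@"; then
      return 0
    fi
    echo "attempt ${i}/${attempts} failed: $*" >&2
    if ((i < attempts)); then
      sleep "${RETRY_DELAY:-5}"
    fi
  done
  return 1
}
```

Usage: `retry 5 docker pull docker.elastic.co/beats/filebeat:6.1.1`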

13. The log directories must also be created on each node in advance

mkdir -p /app-logs/{www-nginx,www-tomcat,wp-nginx}

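14. On the image registry, build an nginx:v2 image and pull it on the nodes in advance; the earlier LNMP image did not set up the server directly, so a new image is needed. Building it is not covered here.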

15. On the master, create an example deployment to test log collection

vim nginx-example.yaml

Mount the log directory created on the host into the container's log directory. Because Filebeat mounts the same host directory, it can read these logs.

[root@k8s-master-101 elk]# cat nginx-example.yaml

---
apiVersion: v1
kind: Service
metadata:
  name: www-nginx
spec:
  ports:
  - port: 80
  selector:
    app: www-nginx
---
apiVersion: apps/v1beta2
kind: Deployment
metadata:
  name: www-nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: www-nginx
  template:
    metadata:
      labels:
        app: www-nginx
    spec:
      containers:
      - name: nginx
        image: 10.0.0.106:5000/nginx:v2
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
        volumeMounts:
        - name: nginx-logs
          mountPath: /usr/local/nginx/logs
        - name: timezone
          mountPath: /etc/localtime
      volumes:
      - name: nginx-logs
        hostPath:
          path: /app-logs/www-nginx
          type: Directory
      - name: timezone
        hostPath:
          path: /etc/localtime

kubectl create -f nginx-example.yaml
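To have something to look at, send a few requests to the Service so nginx writes access-log lines into /app-logs/www-nginx on the nodes. A sketch, assuming it runs on a host that has both a working kubectl and a route to the Service's ClusterIP (for example a node running kube-proxy), with `curl` available; the helper name is hypothetical.

```shell
#!/usr/bin/env bash
# hit_www_nginx (hypothetical helper): send N requests (default 20) to the
# www-nginx Service so the pods write access-log entries.
hit_www_nginx() {
  local count="${1:-20}"
  local ip i
  ip="$(kubectl get svc www-nginx -o jsonpath='{.spec.clusterIP}')" || return 1
  if [ -z "$ip" ]; then
    echo "could not read the ClusterIP of svc/www-nginx" >&2
    return 1
  fi
  for ((i = 1; i <= count; i++)); do
    # Discard the response body; only the server-side access-log line matters.
    curl -s -o /dev/null "http://${ip}/"
  done
}
```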

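16. Check the Filebeat logs to see that it has started watching the files. Filebeat runs in the kube-system namespace, so pass -n kube-system (the pod name suffix differs on each cluster):

kubectl -n kube-system logs filebeat-5qs9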
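Because Filebeat runs as a DaemonSet, there is one pod per node, each with a generated name. A sketch that prints the recent log lines of every Filebeat pod, selecting them by the `logs=filebeat` label from the manifest above; the helper name is hypothetical.

```shell
#!/usr/bin/env bash
# filebeat_logs (hypothetical helper): print the last N log lines (default 20)
# of every Filebeat pod, selected by the logs=filebeat label of the DaemonSet.
filebeat_logs() {
  local lines="${1:-20}"
  local ns="kube-system"
  local pod
  for pod in $(kubectl -n "$ns" get pods -l logs=filebeat \
      -o jsonpath='{.items[*].metadata.name}'); do
    echo "===== ${pod} ====="
    kubectl -n "$ns" logs --tail="$lines" "$pod"
  done
}
```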

17. The console where Logstash was started (its stdout rubydebug output) also shows the incoming events

18. In Kibana, enter a pattern that matches the collected log indices

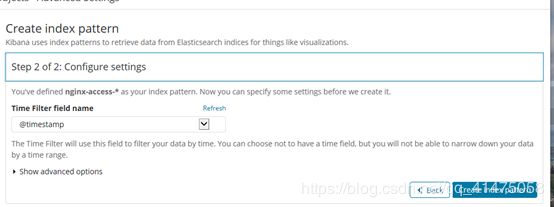
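Before creating the index pattern, it can help to confirm on the ELK host that the daily indices exist. A sketch using the Elasticsearch `_cat/indices` API; the index name patterns come from logstash.conf above, and the helper name is hypothetical.

```shell
#!/usr/bin/env bash
# list_log_indices (hypothetical helper): show the indices written by the
# Logstash output section (nginx-access-*, wp-nginx-access-*, tomcat-catalina-*).
list_log_indices() {
  local es="${1:-http://localhost:9200}"
  # ?v prints a header row; -f makes curl fail on HTTP errors
  curl -sf "${es}/_cat/indices/nginx-access-*,wp-nginx-access-*,tomcat-catalina-*?v"
}
```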

19. Choose the timestamp field (@timestamp) as the time filter and create the index pattern

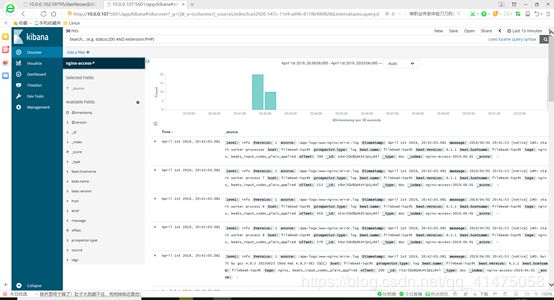

20. The logs then show up in Kibana

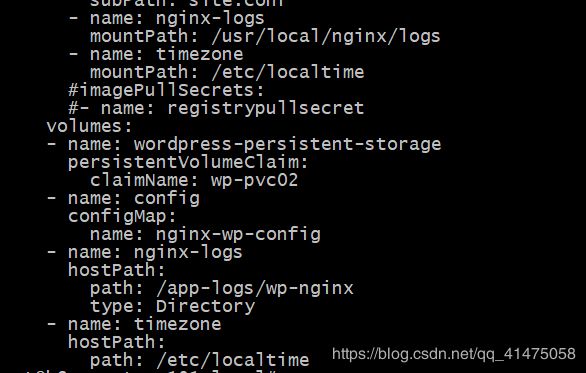

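21. Modify the nginx Deployment from the earlier LNMP part to add a log volume (so its logs land in /app-logs/wp-nginx, the directory the wp-nginx prospector watches), apply it, and then set up the log view in Kibana.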

kubectl apply -f nginx-deployment.yaml