TensorFlow Learning Notes

A first try with TensorFlow

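```python
import tensorflow as tf  # TensorFlow 1.x API
import numpy as np

# Create data: y = 0.1 * x + 0.3
x_data = np.random.rand(100).astype(np.float32)
y_data = x_data * 0.1 + 0.3

# Initialize Weight randomly in [-1, 1) and biases to 0
Weight = tf.Variable(tf.random_uniform([1], -1.0, 1.0))
biases = tf.Variable(tf.zeros([1]))

# Hypothesis, loss (mean squared error), optimizer and variable initializer
y = Weight * x_data + biases
loss = tf.reduce_mean(tf.square(y - y_data))
optimizer = tf.train.GradientDescentOptimizer(0.5)
train = optimizer.minimize(loss)
init = tf.global_variables_initializer()  # replaces the deprecated tf.initialize_all_variables()

# Create a session and run the initializer
sess = tf.Session()
sess.run(init)

# Training loop: print Weight and biases every 20 steps (they approach 0.1 and 0.3)
for step in range(201):
    sess.run(train)
    if step % 20 == 0:
        print(step, sess.run(Weight), sess.run(biases))
```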
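The optimizer above repeatedly moves Weight and biases against the gradient of the mean squared error. As a minimal plain-Python sketch of that update rule (not TensorFlow's implementation; fit_line is a hypothetical helper, while the data rule y = 0.1x + 0.3, the learning rate 0.5 and the 201 steps are taken from the example), full-batch gradient descent looks like this:

```python
import random

def fit_line(xs, ys, lr=0.5, steps=201):
    """Fit y = w * x + b by gradient descent on the mean squared error (hypothetical helper)."""
    w, b = random.uniform(-1.0, 1.0), 0.0  # same initialization as Weight and biases above
    n = len(xs)
    for _ in range(steps):
        # Residuals of the current prediction
        err = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradients of mean(err ** 2) with respect to w and b
        grad_w = 2.0 / n * sum(e * x for e, x in zip(err, xs))
        grad_b = 2.0 / n * sum(err)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

random.seed(0)
xs = [random.random() for _ in range(100)]
ys = [x * 0.1 + 0.3 for x in xs]
w, b = fit_line(xs, ys)
print(round(w, 3), round(b, 3))  # values close to 0.1 and 0.3
```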

Matrix multiplication and printing the result

```python
import tensorflow as tf

matrix1 = tf.constant([[3, 3]])    # shape (1, 2)
matrix2 = tf.constant([[2], [2]])  # shape (2, 1)

# Matrix multiplication; the NumPy equivalent is np.dot(matrix1, matrix2)
product = tf.matmul(matrix1, matrix2)  # shape (1, 1): [[12]]

# Method 1: create a session and close it explicitly
sess = tf.Session()
result = sess.run(product)
print(result)
sess.close()

# Method 2: a with block closes the session automatically
with tf.Session() as sess:
    result2 = sess.run(product)
    print(result2)
```
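Matrix multiplication is not commutative, so the operand order decides the shape of the result. A plain-Python sketch (the matmul helper here is illustrative, not TensorFlow's) shows that matrix1 × matrix2 gives the 1×1 result [[12]], while the reversed order gives a 2×2 matrix:

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    assert len(a[0]) == len(b), "inner dimensions must match"
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

matrix1 = [[3, 3]]    # 1 x 2
matrix2 = [[2], [2]]  # 2 x 1
print(matmul(matrix1, matrix2))  # [[12]]
print(matmul(matrix2, matrix1))  # [[6, 6], [6, 6]]
```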

Defining a variable, adding, and updating it

```python
import tensorflow as tf

state = tf.Variable(0, name="counter")
# print(state.name)  # -> counter:0
one = tf.constant(1)

new_value = tf.add(state, one)
update = tf.assign(state, new_value)  # write new_value back into state

# Whenever you define a Variable, you must run this initializer before using it
init = tf.global_variables_initializer()

with tf.Session() as sess:
    sess.run(init)
    for _ in range(3):
        sess.run(update)
        print(sess.run(state))  # prints 1, 2, 3
```

placeholder

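A placeholder is given its value only at run time: the value is supplied through feed_dict when sess.run is called.

tf.placeholder takes several arguments. The first is the data type of the data it will hold, most often tf.float32; the next is the shape of the data. For example, to hold a 1×2 matrix, use shape=[1, 2]. A None dimension, as in [None, 1], accepts any size, typically the batch size.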

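```python
# The first dimension is None, so the batch size is decided when data is fed
xs = tf.placeholder(tf.float32, [None, 1])
ys = tf.placeholder(tf.float32, [None, 1])

# Values are supplied at run time (train_step, x_data and y_data come from the full example below)
sess.run(train_step, feed_dict={xs: x_data, ys: y_data})
```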
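The "build first, feed values later" idea can be illustrated without TensorFlow. The classes Placeholder and Mul and the function run below are hypothetical, a toy stand-in for the graph and sess.run rather than how TensorFlow is implemented:

```python
class Placeholder:
    """Stands in for a value that is only known when the graph is run (illustrative class)."""
    def __init__(self, name):
        self.name = name

class Mul:
    """A deferred multiplication node: nothing is computed when it is created."""
    def __init__(self, a, b):
        self.a, self.b = a, b

def run(node, feed_dict):
    """Evaluate a node, taking placeholder values from feed_dict (hypothetical mini session)."""
    if isinstance(node, Placeholder):
        if node not in feed_dict:
            raise KeyError("You must feed a value for placeholder " + node.name)
        return feed_dict[node]
    if isinstance(node, Mul):
        return run(node.a, feed_dict) * run(node.b, feed_dict)
    return node  # a plain constant

xs = Placeholder("xs")
double = Mul(xs, 2)          # built once, before any data exists
print(run(double, {xs: 3}))   # 6
print(run(double, {xs: 10}))  # 20
```

As with a real placeholder, evaluating the node without feeding xs fails instead of silently using a default.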

Initializing variables

```python
init = tf.global_variables_initializer()  # formerly tf.initialize_all_variables(), now deprecated
```

Building a neural network

```python
import tensorflow as tf
import numpy as np
import matplotlib.pyplot as plt

def add_layer(inputs, in_size, out_size, activation_function=None):
    weight = tf.Variable(tf.random_normal([in_size, out_size]))
    biases = tf.Variable(tf.zeros([1, out_size]) + 0.1)
    Wx_plus_b = tf.matmul(inputs, weight) + biases
    if activation_function is None:
        outputs = Wx_plus_b
    else:
        outputs = activation_function(Wx_plus_b)
    return outputs

# Input data: y = x^2 - 0.5 plus Gaussian noise
x_data = np.linspace(-1, 1, 300)[:, np.newaxis]  # shape (300, 1)
noise = np.random.normal(0, 0.05, x_data.shape)
y_data = np.square(x_data) - 0.5 + noise

xs = tf.placeholder(tf.float32, [None, 1])
ys = tf.placeholder(tf.float32, [None, 1])

# Hidden layer (10 ReLU units) and output layer
l1 = add_layer(xs, 1, 10, activation_function=tf.nn.relu)
prediction = add_layer(l1, 10, 1, activation_function=None)

# Loss function, minimized with gradient descent
loss = tf.reduce_mean(tf.reduce_sum(tf.square(ys - prediction), axis=[1]))
train_step = tf.train.GradientDescentOptimizer(0.1).minimize(loss)

# Initialize variables
init = tf.global_variables_initializer()
sess = tf.Session()
sess.run(init)

# Scatter plot of the data, updated interactively
fig = plt.figure()
ax = fig.add_subplot(1, 1, 1)
ax.scatter(x_data, y_data, s=10)
plt.ion()
plt.show()

# Training
lines = None
for i in range(1000):
    sess.run(train_step, feed_dict={xs: x_data, ys: y_data})
    if i % 50 == 0:
        print(sess.run(loss, feed_dict={xs: x_data, ys: y_data}))
        # Remove the previous prediction curve before drawing a new one
        if lines is not None:
            lines[0].remove()
        prediction_value = sess.run(prediction, feed_dict={xs: x_data})
        # Draw the prediction curve
        lines = ax.plot(x_data, prediction_value, "r-", lw=5)
        plt.pause(0.1)
```

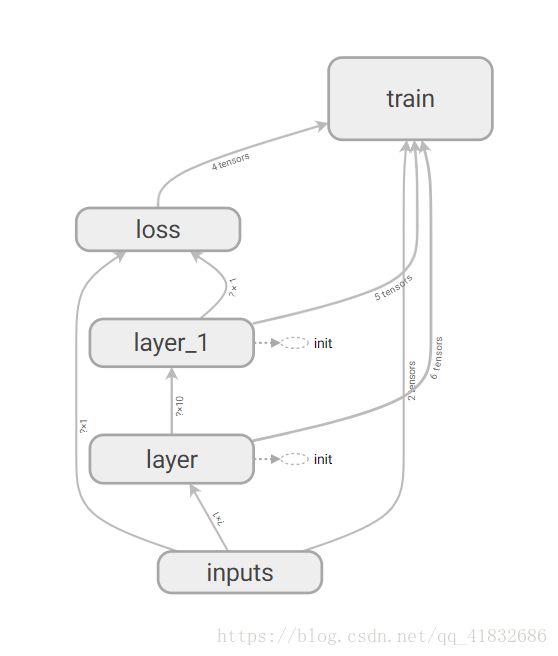
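To make the shapes and the loss concrete, here is a plain-Python sketch of the same 1-10-1 forward pass without training. Assumptions: random N(0, 1) weights, biases of 0.1 and noise-free targets; add_layer and loss here are illustrative re-implementations, not the TensorFlow code:

```python
import random

def relu(v):
    return max(0.0, v)

def add_layer(inputs, weight, biases, activation=None):
    """Forward pass of one dense layer: activation(inputs @ weight + biases).

    inputs: batch x in_size rows; weight: in_size x out_size; biases: out_size values.
    """
    out = []
    for row in inputs:
        z = [sum(row[k] * weight[k][j] for k in range(len(row))) + biases[j]
             for j in range(len(biases))]
        out.append([activation(v) for v in z] if activation else z)
    return out

def loss(ys, prediction):
    """Mean over examples of the per-example sum of squared errors."""
    return sum(sum((y - p) ** 2 for y, p in zip(yr, pr))
               for yr, pr in zip(ys, prediction)) / len(ys)

random.seed(1)
x_data = [[-1 + 2 * i / 299] for i in range(300)]  # like np.linspace(-1, 1, 300)[:, np.newaxis]
y_data = [[x[0] ** 2 - 0.5] for x in x_data]         # noise-free target
W1 = [[random.gauss(0, 1) for _ in range(10)]]       # 1 x 10
b1 = [0.1] * 10
W2 = [[random.gauss(0, 1)] for _ in range(10)]       # 10 x 1
b2 = [0.1]
l1 = add_layer(x_data, W1, b1, activation=relu)     # 300 x 10, all values >= 0
prediction = add_layer(l1, W2, b2)                   # 300 x 1
print(len(prediction), len(prediction[0]), loss(y_data, prediction))
```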

TensorBoard: a visualization tool for neural networks

```python
import tensorflow as tf
import numpy as np

def add_layer(inputs, in_size, out_size, activation_function=None):
    # Name scopes group the layer's ops so TensorBoard draws them as one node
    with tf.name_scope('layer'):
        with tf.name_scope('Weight'):
            weight = tf.Variable(tf.random_normal([in_size, out_size]), name='W')
        with tf.name_scope('Biases'):
            biases = tf.Variable(tf.zeros([1, out_size]) + 0.1, name='B')
        with tf.name_scope('Wx_plus_b'):
            Wx_plus_b = tf.add(tf.matmul(inputs, weight), biases)
        if activation_function is None:
            output = Wx_plus_b
        else:
            output = activation_function(Wx_plus_b)
        return output

x_data = np.linspace(-1, 1, 300)[:, np.newaxis]
noise = np.random.normal(0, 0.05, x_data.shape)
y_data = np.square(x_data) - 0.5 + noise

with tf.name_scope('inputs'):
    xs = tf.placeholder(tf.float32, [None, 1], name='x_input')
    ys = tf.placeholder(tf.float32, [None, 1], name='y_input')

h1 = add_layer(xs, 1, 10, activation_function=tf.nn.relu)
prediction = add_layer(h1, 10, 1, activation_function=None)

with tf.name_scope('loss'):
    loss = tf.reduce_mean(tf.reduce_sum(tf.square(ys - prediction), axis=[1]))
with tf.name_scope('train'):
    train_step = tf.train.GradientDescentOptimizer(0.1).minimize(loss)

init = tf.global_variables_initializer()
sess = tf.Session()
# Write the graph to logs/ so TensorBoard can display it
writer = tf.summary.FileWriter('logs/', sess.graph)
sess.run(init)
```

Running the program creates a file named events.out… in the logs folder.

Then open cmd and enter tensorboard --logdir logs; it prints a URL.

Open that URL in a browser to view the graph.

MNIST handwritten digit recognition

- Using the softmax activation function and the cross-entropy loss function

```python
import numpy as np
import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets("MNIST_data/", one_hot=True)

def add_layer(inputs, in_size, out_size, activation_function=None):
    Weight = tf.Variable(tf.random_normal([in_size, out_size]))
    biases = tf.Variable(tf.zeros([1, out_size]) + 0.1)
    Wx_plus_b = tf.matmul(inputs, Weight) + biases
    if activation_function is None:
        outputs = Wx_plus_b
    else:
        outputs = activation_function(Wx_plus_b)
    return outputs

def compute_accuracy(v_xs, v_ys):
    # Compare classes in NumPy so no new ops are added to the graph on every call
    y_pre = sess.run(prediction, feed_dict={xs: v_xs})
    correct_prediction = np.equal(np.argmax(y_pre, 1), np.argmax(v_ys, 1))
    return np.mean(correct_prediction.astype(np.float32))

xs = tf.placeholder(tf.float32, [None, 784])  # 28*28 pixels
ys = tf.placeholder(tf.float32, [None, 10])   # one-hot labels

# Softmax output layer
prediction = add_layer(xs, 784, 10, activation_function=tf.nn.softmax)

# Cross-entropy summed over the batch (the same objective as minimizing the per-example vector);
# clipping avoids log(0)
cross_entropy = -tf.reduce_sum(ys * tf.log(tf.clip_by_value(prediction, 1e-10, 1.0)))
train_step = tf.train.GradientDescentOptimizer(0.01).minimize(cross_entropy)

sess = tf.Session()
sess.run(tf.global_variables_initializer())

for i in range(1000):
    batch_xs, batch_ys = mnist.train.next_batch(100)
    sess.run(train_step, feed_dict={xs: batch_xs, ys: batch_ys})
    if i % 50 == 0:
        print(compute_accuracy(mnist.test.images, mnist.test.labels))
```
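The softmax, cross-entropy and accuracy computations can be written out in plain Python to show what the clipping guards against. This is a sketch with illustrative helper names, not TensorFlow code; tf.nn.softmax_cross_entropy_with_logits and tf.losses.softmax_cross_entropy combine the first two steps in a numerically stable way on the logits instead:

```python
import math

def softmax(logits):
    """Numerically stable softmax: subtract the largest logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(one_hot, probs, eps=1e-10):
    """-sum(y * log(p)); clipping p to [eps, 1] avoids log(0) = -inf."""
    return -sum(y * math.log(min(max(p, eps), 1.0)) for y, p in zip(one_hot, probs))

def argmax(v):
    return max(range(len(v)), key=v.__getitem__)

def accuracy(prob_rows, one_hot_rows):
    """Fraction of rows whose predicted class equals the labelled class."""
    hits = sum(argmax(p) == argmax(y) for p, y in zip(prob_rows, one_hot_rows))
    return hits / len(prob_rows)

p = softmax([2.0, 1.0, 0.1])
print([round(v, 3) for v in p])               # [0.659, 0.242, 0.099]
print(round(cross_entropy([1, 0, 0], p), 3))  # 0.417
```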

- Using a convolutional neural network

```python
import numpy as np
import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets('MNIST_data', one_hot=True)

def compute_accuracy(test_x, test_y):
    # keep_prob=1 disables dropout during evaluation; the comparison is done in NumPy
    test_prediction = sess.run(prediction, feed_dict={xs: test_x, keep_prob: 1})
    if_correct = np.equal(np.argmax(test_prediction, 1), np.argmax(test_y, 1))
    return np.mean(if_correct.astype(np.float32))

def weight_variable(shape):
    initial = tf.truncated_normal(shape, stddev=0.1)
    return tf.Variable(initial)

def bias_variable(shape):
    initial = tf.constant(0.1, shape=shape)
    return tf.Variable(initial)

def conv2d(x, W):
    # Stride 1; SAME padding keeps height and width unchanged
    return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')

def max_pool_2x2(x):
    # 2x2 window with stride 2 halves height and width
    return tf.nn.max_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

xs = tf.placeholder(tf.float32, [None, 784])
ys = tf.placeholder(tf.float32, [None, 10])
keep_prob = tf.placeholder(tf.float32)
x_image = tf.reshape(xs, [-1, 28, 28, 1])

# conv1 layer: 5x5 kernel, 1 input channel, 32 output channels
W_conv1 = weight_variable([5, 5, 1, 32])
b_conv1 = bias_variable([32])
h_conv1 = tf.nn.relu(conv2d(x_image, W_conv1) + b_conv1)  # output 28*28*32
h_pool1 = max_pool_2x2(h_conv1)                           # output 14*14*32

# conv2 layer: 5x5 kernel, 32 input channels, 64 output channels
W_conv2 = weight_variable([5, 5, 32, 64])
b_conv2 = bias_variable([64])
h_conv2 = tf.nn.relu(conv2d(h_pool1, W_conv2) + b_conv2)  # output 14*14*64
h_pool2 = max_pool_2x2(h_conv2)                           # output 7*7*64

# fc1 layer (fully connected)
W_fc1 = weight_variable([7*7*64, 1024])
b_fc1 = bias_variable([1024])
h_pool2_flat = tf.reshape(h_pool2, [-1, 7*7*64])
h_fc1 = tf.nn.relu(tf.matmul(h_pool2_flat, W_fc1) + b_fc1)
h_fc1_drop = tf.nn.dropout(h_fc1, keep_prob)

# fc2 layer (output)
W_fc2 = weight_variable([1024, 10])
b_fc2 = bias_variable([10])
prediction = tf.nn.softmax(tf.matmul(h_fc1_drop, W_fc2) + b_fc2)

# Training: mean cross-entropy over the batch, clipped to avoid log(0)
cross_entropy = tf.reduce_mean(
    -tf.reduce_sum(ys * tf.log(tf.clip_by_value(prediction, 1e-10, 1.0)), axis=[1]))
train_step = tf.train.AdamOptimizer(0.001).minimize(cross_entropy)

sess = tf.Session()
init = tf.global_variables_initializer()
sess.run(init)

for i in range(1000):
    batch_xs, batch_ys = mnist.train.next_batch(100)
    sess.run(train_step, feed_dict={xs: batch_xs, ys: batch_ys, keep_prob: 0.5})
    if i % 50 == 0:
        print(compute_accuracy(mnist.test.images, mnist.test.labels))
```
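The shape comments follow from TensorFlow's padding rules: with padding='SAME' the output size is ceil(input / stride), and with 'VALID' it is ceil((input - kernel + 1) / stride). A small plain-Python check of why the flattened size is 7*7*64 (the helper names are illustrative):

```python
import math

def same_output_size(size, stride):
    """Output height/width with padding='SAME': ceil(size / stride), whatever the kernel size."""
    return math.ceil(size / stride)

def valid_output_size(size, kernel, stride):
    """Output height/width with padding='VALID': ceil((size - kernel + 1) / stride)."""
    return math.ceil((size - kernel + 1) / stride)

size = 28
size = same_output_size(size, 1)  # conv1, stride 1 -> 28
size = same_output_size(size, 2)  # pool1, stride 2 -> 14
size = same_output_size(size, 1)  # conv2 -> 14
size = same_output_size(size, 2)  # pool2 -> 7
print(size, size * size * 64)     # 7 3136
```

With 'VALID' padding instead, the first 5x5 convolution alone would shrink 28 to 24, and the fully connected layer would need a different input size.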

- A CNN on TensorFlow 1.11, with t-SNE dimensionality reduction to visualize the hidden layer

```python
import numpy as np
import tensorflow as tf
import matplotlib.pyplot as plt
from matplotlib import cm
from sklearn.manifold import TSNE
from tensorflow.examples.tutorials.mnist import input_data

# Load TensorFlow's bundled MNIST dataset with one-hot labels
mnist = input_data.read_data_sets('./mnist', one_hot=True)

# Hyperparameters
learning_rate = 0.02
batch_size = 50
train_size = 5000  # number of training steps (mini-batches), not epochs
plot_num = 500     # number of test points shown in the t-SNE plot

# Dataset sizes and shapes
print("train image shape", mnist.train.images.shape)
print("train image labels", mnist.train.labels.shape)
print("test image shape", mnist.test.images.shape)
print("test image labels", mnist.test.labels.shape)

# Show one sample
plt.imshow(mnist.train.images[0].reshape((28, 28)), cmap="gray")
plt.title("%i" % np.argmax(mnist.train.labels[0]))
plt.show()

# input_data already scales pixels to [0, 1], so no division by 255 is needed
x = tf.placeholder(tf.float32, [None, 28*28])
y = tf.placeholder(tf.float32, [None, 10])
is_training = tf.placeholder_with_default(False, shape=())  # dropout is active only when True
image = tf.reshape(x, [-1, 28, 28, 1])

## CNN
# Convolution layers
conv1 = tf.layers.conv2d(inputs=image, filters=16, kernel_size=5, strides=1, padding="same", activation=tf.nn.relu)  # -> 28,28,16
pool1 = tf.layers.max_pooling2d(conv1, pool_size=2, strides=2)            # -> 14,14,16
conv2 = tf.layers.conv2d(pool1, 32, 5, 1, "same", activation=tf.nn.relu)  # -> 14,14,32
pool2 = tf.layers.max_pooling2d(conv2, 2, 2)                              # -> 7,7,32
flat = tf.reshape(pool2, [-1, 7*7*32])
hidden1 = tf.layers.dense(flat, 64)
# Dropout reduces overfitting; tf.layers.dropout only drops units when training is True
hidden1_dropout = tf.layers.dropout(hidden1, rate=0.5, training=is_training)
output = tf.layers.dense(hidden1_dropout, 10)

loss = tf.losses.softmax_cross_entropy(onehot_labels=y, logits=output)
train_op = tf.train.AdamOptimizer(learning_rate).minimize(loss)
# Accuracy of the fed batch (tf.metrics.accuracy would keep a running average over all calls)
correct = tf.equal(tf.argmax(y, 1), tf.argmax(output, 1))
accuracy = tf.reduce_mean(tf.cast(correct, tf.float32))

sess = tf.Session()
sess.run(tf.global_variables_initializer())

# Show the 2-D embedding, each point drawn as its digit label
def plot_labels(two_dim, labels):
    plt.cla()
    X, Y = two_dim[:, 0], two_dim[:, 1]
    for px, py, s in zip(X, Y, labels):
        c = cm.rainbow(int(255 * s / 9))
        plt.text(px, py, s, backgroundcolor=c, fontsize=9)
    plt.xlim(X.min(), X.max())
    plt.ylim(Y.min(), Y.max())
    plt.title('Visualize last layer')
    plt.show()
    plt.pause(0.01)

plt.ion()
for step in range(train_size):
    batch_x, batch_y = mnist.train.next_batch(batch_size)
    _, loss_ = sess.run([train_op, loss], {x: batch_x, y: batch_y, is_training: True})
    if step % 50 == 0:
        accuracy_test, hidden1_dropout_ = sess.run([accuracy, hidden1_dropout], {x: mnist.test.images, y: mnist.test.labels})
        accuracy_train = sess.run(accuracy, {x: batch_x, y: batch_y})
        print("step:", step, "| loss:%.4f" % loss_, "| accuracy_train:%.4f" % accuracy_train, "| accuracy_test:%.4f" % accuracy_test)
        # Reduce the 64-D hidden features of the first plot_num test images to 2-D (slow)
        # (n_iter is called max_iter in newer scikit-learn releases)
        tsne = TSNE(perplexity=30, n_components=2, init='pca', n_iter=5000)
        two_dim = tsne.fit_transform(hidden1_dropout_[:plot_num, :])
        labels = np.argmax(mnist.test.labels, axis=1)[:plot_num]
        plot_labels(two_dim, labels)
plt.ioff()
```
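Both tf.nn.dropout(x, keep_prob) and tf.layers.dropout(x, rate, training) implement inverted dropout: during training each unit is zeroed with probability rate (= 1 - keep_prob) and the survivors are scaled up so the expected activation is unchanged, while at inference the input passes through untouched. That is why evaluation should run with dropout off (keep_prob=1, or training=False). A plain-Python sketch of the idea, with an illustrative dropout helper:

```python
import random

def dropout(values, rate, training):
    """Inverted dropout: while training, zero each value with probability `rate` and scale
    the survivors by 1 / (1 - rate); at inference return the input unchanged."""
    if not training:
        return list(values)
    keep = 1.0 - rate
    return [v / keep if random.random() < keep else 0.0 for v in values]

random.seed(0)
hidden = [1.0] * 10000
print(dropout(hidden, 0.5, training=False)[:3])  # [1.0, 1.0, 1.0]
dropped = dropout(hidden, 0.5, training=True)
print(sum(dropped) / len(dropped))                # close to 1.0
```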

- Implementing MNIST with an RNN (LSTM)

```python
import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data

# One-hot encoding turns each digit label into a vector such as [0,0,0,0,1,0,0,0,0,0] to match the network output
mnist = input_data.read_data_sets('MNIST_data', one_hot=True)

## Hyperparameters
learn_rate = 0.001       # learning rate
training_iters = 100000  # total number of training samples to process
batch_size = 128         # samples per mini-batch
n_inputs = 28            # each time step reads one image row, i.e. 28 pixels
n_steps = 28             # 28 time steps, one per image row
n_hidden_units = 128     # number of hidden units in the LSTM cell
n_classes = 10           # 10 output classes

# Placeholders hold the data that is fed in at run time
x = tf.placeholder(tf.float32, [None, n_steps, n_inputs])
y = tf.placeholder(tf.float32, [None, n_classes])

weights = {
    "in": tf.Variable(tf.random_normal([n_inputs, n_hidden_units])),
    "out": tf.Variable(tf.random_normal([n_hidden_units, n_classes]))
}
biases = {
    "in": tf.Variable(tf.constant(0.1, shape=[n_hidden_units, ])),
    "out": tf.Variable(tf.constant(0.1, shape=[n_classes, ]))
}

def RNN(X, weights, biases):
    ## Input layer: project every row to n_hidden_units features
    X = tf.reshape(X, [-1, n_inputs])                       # 3-D to 2-D: [128*28, 28]
    X_in = tf.matmul(X, weights['in']) + biases['in']       # [128*28, 128]
    X_in = tf.reshape(X_in, [-1, n_steps, n_hidden_units])  # back to 3-D: [128, 28, 128]

    ## LSTM cell
    lstm_cell = tf.nn.rnn_cell.BasicLSTMCell(n_hidden_units, forget_bias=1.0, state_is_tuple=True)
    ## The LSTM state is a tuple (c_state, h_state): the cell state and the hidden (output) state
    _init_state = lstm_cell.zero_state(batch_size, dtype=tf.float32)  # initial state, all zeros
    # dynamic_rnn loops over the time steps; time_major=False means inputs are [batch, steps, features]
    output, states = tf.nn.dynamic_rnn(lstm_cell, X_in, initial_state=_init_state, time_major=False)

    ## Method 1: use states[1], the final hidden state h
    result = tf.matmul(states[1], weights["out"]) + biases["out"]
    ## Method 2 (overrides method 1): outputs[-1], the last time step's output, equal to states[1] here
    outputs = tf.unstack(tf.transpose(output, [1, 0, 2]))  # list of n_steps tensors of shape [batch, n_hidden_units]
    result = tf.matmul(outputs[-1], weights["out"]) + biases["out"]
    return result

## Training
pred = RNN(x, weights, biases)
# Softmax plus cross-entropy as the loss
cost = tf.reduce_sum(tf.nn.softmax_cross_entropy_with_logits(logits=pred, labels=y))
train_op = tf.train.AdamOptimizer(learn_rate).minimize(cost)  # Adam optimizer

## Accuracy
correct = tf.equal(tf.argmax(pred, 1), tf.argmax(y, 1))
accuracy = tf.reduce_mean(tf.cast(correct, tf.float32))

init = tf.global_variables_initializer()  # initialize all variables

with tf.Session() as sess:
    sess.run(init)
    step = 0
    while step * batch_size < training_iters:
        batch_xs, batch_ys = mnist.train.next_batch(batch_size)
        batch_xs = batch_xs.reshape([batch_size, n_steps, n_inputs])  # reshape to [128, 28, 28]
        sess.run([train_op], feed_dict={x: batch_xs, y: batch_ys})
        if step % 20 == 0:
            # Accuracy on the current training batch
            print(sess.run(accuracy, feed_dict={x: batch_xs, y: batch_ys}))
        step += 1
```
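What one LSTM time step computes, and why states is a (c, h) pair, can be seen in a scalar sketch (hidden size 1). It follows the BasicLSTMCell equations: input, forget and output gates through a sigmoid, a tanh candidate value, and forget_bias added to the forget gate. The weight dicts and the lstm_step name are illustrative assumptions, not TensorFlow's API:

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, W, U, b, forget_bias=1.0):
    """One step of a scalar LSTM cell; W, U, b hold input weight, recurrent weight and
    bias for the gates 'i', 'f', 'o' and the candidate 'g'. Returns the new (c, h)."""
    pre = {k: W[k] * x + U[k] * h_prev + b[k] for k in "ifog"}
    i = sigmoid(pre["i"])                # input gate
    f = sigmoid(pre["f"] + forget_bias)  # forget gate, biased towards keeping memory
    o = sigmoid(pre["o"])                # output gate
    g = math.tanh(pre["g"])              # candidate cell value
    c = f * c_prev + i * g               # new cell state
    h = o * math.tanh(c)                 # new hidden state, which is also the cell's output
    return c, h

zeros = {k: 0.0 for k in "ifog"}
# With all-zero weights the forget gate is sigmoid(forget_bias), so part of c_prev survives
c, h = lstm_step(0.0, 0.0, 1.0, zeros, zeros, zeros)
print(c, h)
```

Because the output at each step is h itself, the last element of the outputs sequence and the h part of the final state hold the same values.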

Counting test tubes: Hough circle detection plus a CNN

```python
import os
import glob
import cv2
import numpy as np
import tensorflow as tf

# Parameters
test_image = "172648-014.bmp"
learning_rate = 0.0001
train_size = 350  # number of training steps
batch_size = 50
train_data_path = "train_data"
test_data_path = "test_data"
img_num = 2       # crops are saved as test2.jpg, test3.jpg, ...; test1.jpg is kept
n_classes = 2
batch_step = 0

# Read the image and detect edges with the Canny operator
img = cv2.imread(os.path.join('tupian', test_image))
img_yuan = cv2.resize(img, (1024, 544))  # resized original colour image
img = cv2.cvtColor(img_yuan, cv2.COLOR_BGR2GRAY)
img = cv2.Canny(img, 50, 130)

# Detect circles with the Hough transform
circles = cv2.HoughCircles(img, cv2.HOUGH_GRADIENT, dp=1, minDist=40, param1=220, param2=25, minRadius=5, maxRadius=40)
if circles is None:
    raise SystemExit("No circles found")

# Before saving the crops, clear the folder (test1.jpg is kept)
for fileName in glob.glob(os.path.join(test_data_path, '*')):
    if os.path.basename(fileName) != "test1.jpg":
        os.remove(fileName)

# Crop, save and mark every detected circle
h, w = img.shape[:2]
img_rect = img.copy()
for circle in circles[0]:
    x, y, r = int(circle[0]), int(circle[1]), int(circle[2])
    # Crop before drawing so no rectangle ends up in a saved crop; clamp the box to the image
    img_save = img[max(y - r, 0):min(y + r, h), max(x - r, 0):min(x + r, w)]
    img_save = cv2.resize(img_save, (64, 64))
    cv2.imwrite(os.path.join(test_data_path, "test" + str(img_num) + ".jpg"), img_save)
    cv2.rectangle(img_rect, (x - r, y - r), (x + r, y + r), 255)
    cv2.rectangle(img_yuan, (x - r, y - r), (x + r, y + r), (0, 0, 255))
    img_num += 1

cv2.imshow("ssi", img_yuan)
cv2.imshow("sss", img_rect)
print("Press any key to continue")
cv2.waitKey()
```
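Clamping matters because a negative slice start counts from the end of the array, so for a circle near the top or left edge img[y-r:y+r, x-r:x+r] can come back empty, and cv2.resize fails on an empty image. A plain-Python sketch of a clamped crop box (crop_box is an illustrative helper; 1024×544 matches the resized image):

```python
def crop_box(x, y, r, width, height):
    """Square box around a circle (centre x, y, radius r), clamped to the image.

    Returns (top, bottom, left, right) for slicing img[top:bottom, left:right].
    """
    top, bottom = max(y - r, 0), min(y + r, height)
    left, right = max(x - r, 0), min(x + r, width)
    return top, bottom, left, right

print(crop_box(500, 300, 20, 1024, 544))  # (280, 320, 480, 520)
print(crop_box(10, 5, 20, 1024, 544))     # (0, 25, 0, 30)

row = list(range(10))
print(row[-3:3])  # []: the negative start wraps around to index 7, leaving an empty slice
```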

```python
# Read the test crops (every image in test_data except test1.jpg), one flattened row per crop
def get_test_images(test_data_path):
    files = sorted(f for f in os.listdir(test_data_path) if f != "test1.jpg")
    images = [cv2.imread(os.path.join(test_data_path, f)).reshape([1, 64*64*3]) for f in files]
    return np.vstack(images)

# Read all training images once; the label is the first character of the file name
# (1 = tube, 0 = not a tube), e.g. "1train76.jpg" -> 1
def load_train_data(train_data_path):
    images, labels = [], []
    for file in os.listdir(train_data_path):
        img = cv2.imread(os.path.join(train_data_path, file))
        images.append(img.reshape([64*64*3]))
        labels.append(int(file.split("t")[0]))
    return np.array(images, dtype=np.float32), np.array(labels)

# Draw a random mini-batch and one-hot encode its labels in NumPy
# (calling tf.one_hot here would add new ops to the graph on every step)
def get_batch(images, labels, batch_size):
    idx = np.random.choice(len(images), batch_size, replace=False)
    return images[idx], np.eye(n_classes)[labels[idx]]

# Placeholders
xs = tf.placeholder(tf.float32, [None, 64*64*3])
ys = tf.placeholder(tf.float32, [None, n_classes])
image = tf.reshape(xs, [-1, 64, 64, 3])

## CNN: classify whether a detected circle is a real tube or a false circle
conv1 = tf.layers.conv2d(inputs=image, filters=16, kernel_size=5, strides=1, padding="same", activation=tf.nn.relu)  # ->64,64,16
pool1 = tf.layers.max_pooling2d(conv1, pool_size=2, strides=2)            # ->32,32,16
conv2 = tf.layers.conv2d(pool1, 32, 5, 1, "same", activation=tf.nn.relu)  # ->32,32,32
pool2 = tf.layers.max_pooling2d(conv2, 2, 2)                              # ->16,16,32
flat = tf.reshape(pool2, [-1, 16*16*32])
hidden1 = tf.layers.dense(flat, 64)
output = tf.layers.dense(hidden1, n_classes)

loss = tf.losses.softmax_cross_entropy(onehot_labels=ys, logits=output)
train_op = tf.train.AdamOptimizer(learning_rate).minimize(loss)
# Accuracy of the fed batch (tf.metrics.accuracy would report a running average instead)
accuracy = tf.reduce_mean(tf.cast(tf.equal(tf.argmax(ys, 1), tf.argmax(output, 1)), tf.float32))

sess = tf.Session()
sess.run(tf.global_variables_initializer())

# Training
train_images, train_labels = load_train_data(train_data_path)
test_images = get_test_images(test_data_path)
for step in range(train_size):
    train_image, train_label = get_batch(train_images, train_labels, batch_size)
    sess.run(train_op, {xs: train_image, ys: train_label})
    loss_, accuracy_ = sess.run([loss, accuracy], {xs: train_image, ys: train_label})
    print("step:%d" % step, "| loss:%.4f" % loss_, "| accuracy:%.4f" % accuracy_)
    if step % 50 == 0:
        output_ = sess.run(output, {xs: test_images})
        # A crop counts as a tube when the "tube" logit (column 1) is the larger one
        tube_count = int(np.sum(output_[:, 1] > output_[:, 0]))
        print("Number of test tubes: %d" % tube_count)
```
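tf.metrics.accuracy returns a (value, update_op) pair whose local variables accumulate counts across every sess.run call. Taking [1] and running it on successive training and test batches therefore reports a running average over everything seen so far, not the accuracy of the current batch. A plain-Python sketch of the difference (both names are illustrative):

```python
class StreamingAccuracy:
    """Running accuracy over every batch seen so far, like the update op of tf.metrics.accuracy."""
    def __init__(self):
        self.correct = 0  # these counters play the role of the metric's local variables
        self.total = 0

    def update(self, predictions, labels):
        self.correct += sum(p == l for p, l in zip(predictions, labels))
        self.total += len(labels)
        return self.correct / self.total

def batch_accuracy(predictions, labels):
    """Accuracy of this batch only."""
    return sum(p == l for p, l in zip(predictions, labels)) / len(labels)

metric = StreamingAccuracy()
print(metric.update([0, 0, 0, 0], [1, 1, 1, 1]))   # early batch, all wrong: 0.0
print(metric.update([1, 1, 1, 1], [1, 1, 1, 1]))   # later batch, all right, yet 0.5 (running)
print(batch_accuracy([1, 1, 1, 1], [1, 1, 1, 1]))  # 1.0 for that same batch
```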

CAPTCHA recognition:

```python
import os
import glob
import cv2
import numpy as np
import tensorflow as tf

img_num = 1
learning_rate = 0.001
train_path = "train/"
test_path = "test/"
train_data = "train_data/"
test_data = "test_data/"
first_num = 0
test_image = test_path + "test1.png"
step_num = 10000
batch_size = 50

# Blur, convert to grayscale, binarize
def pro_img(img1):
    img1 = cv2.blur(img1, (17, 17))  # 17x17 box blur
    img1 = cv2.cvtColor(img1, cv2.COLOR_BGR2GRAY)
    ret, img1 = cv2.threshold(img1, 127, 255, cv2.THRESH_BINARY)
    return img1

# Binarize only
def pro_img_(img1):
    ret, img1 = cv2.threshold(img1, 127, 255, cv2.THRESH_BINARY)
    return img1

# Upscale to 100x100, blur and binarize, then shrink to 10x10 and binarize again
def pro(img):
    img = cv2.resize(img, (100, 100))
    img = pro_img(img)
    img = cv2.resize(img, (10, 10))
    img = pro_img_(img)
    return img

# If the whole first column is dark, whiten the first three columns (removes a black left border)
def rm_black(img2):
    j = 0
    for i in range(10):
        if img2[i, 0] < 127:
            j += 1
    if j > 9:
        img2[0:10, 0:3] = 255
    return img2

# Clear test_data, then cut the test CAPTCHA into 5 character images, each 10 pixels wide
for fileName in glob.glob(test_data + '*'):
    os.remove(fileName)

img4 = cv2.imread(test_image)
img4 = img4[3:13, :]  # keep rows 3-12, where the characters are
for i in range(5):
    # The first window starts at column 0; later windows start one column before first_num
    start = first_num if i == 0 else first_num - 1
    img = pro(img4[:, start:start + 10])
    cv2.imwrite(test_data + "test" + str(img_num) + ".jpg", img)
    first_num += 9
    img_num += 1

# Clear train_data, then preprocess every training image; its label is the part of the name before "_"
for fileName in glob.glob(train_data + '*'):
    os.remove(fileName)

for file in os.listdir(train_path):
    img = cv2.imread(train_path + file)
    img_label = file.split("_")
    img = img[3:13, :]
    img = pro(img)
    img = rm_black(img)
    cv2.imwrite(train_data + img_label[0] + "train" + str(img_num) + ".jpg", img)
    img_num += 1
```
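The slicing loop for the test image reduces to a fixed list of column windows, each 10 pixels wide with starts 9 pixels apart, every window after the first shifted one column left. A plain-Python sketch that lists them, assuming the 5-character, 10-pixel layout used in the code (char_windows is an illustrative helper):

```python
def char_windows(n_chars=5, width=10, step=9):
    """Column ranges used to slice characters out of the CAPTCHA strip.

    The first window starts at column 0; each later one starts one column before
    first_num (a multiple of step), so neighbouring windows overlap.
    """
    windows = []
    first_num = 0
    for i in range(n_chars):
        start = first_num if i == 0 else first_num - 1
        windows.append((start, start + width))
        first_num += step
    return windows

print(char_windows())  # [(0, 10), (8, 18), (17, 27), (26, 36), (35, 45)]
```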

```python
# Read all preprocessed training images once; the label is the digit before "train" in the name
def load_train(train_data):
    images, labels = [], []
    for file in os.listdir(train_data):
        img = cv2.imread(train_data + file)
        images.append(img.reshape([10 * 10 * 3]))
        labels.append(int(file.split("t")[0]))  # e.g. "5train23.jpg" -> 5
    return np.array(images, dtype=np.float32), np.array(labels)

# Random mini-batch with NumPy one-hot labels (10 digit classes)
def get_train(images, labels):
    idx = np.random.choice(len(images), batch_size, replace=False)
    return images[idx], np.eye(10)[labels[idx]]

# Read test1.jpg ... test5.jpg in numeric order so predictions follow the CAPTCHA left to right
def get_test(test_data):
    files = sorted(os.listdir(test_data), key=lambda f: int(f[len("test"):-len(".jpg")]))
    return np.vstack([cv2.imread(test_data + f).reshape([1, 10 * 10 * 3]) for f in files])

xs = tf.placeholder(tf.float32, [None, 10 * 10 * 3])
ys = tf.placeholder(tf.float32, [None, 10])
image = tf.reshape(xs, [-1, 10, 10, 3])

conv1 = tf.layers.conv2d(inputs=image, filters=16, kernel_size=5, strides=1, padding="same",
                         activation=tf.nn.relu)  # ->10,10,16
pool1 = tf.layers.max_pooling2d(conv1, pool_size=2, strides=2)            # ->5,5,16
conv2 = tf.layers.conv2d(pool1, 32, 5, 1, "same", activation=tf.nn.relu)  # ->5,5,32
flat = tf.reshape(conv2, [-1, 5 * 5 * 32])
hidden1 = tf.layers.dense(flat, 64)
output = tf.layers.dense(hidden1, 10)

loss = tf.losses.softmax_cross_entropy(onehot_labels=ys, logits=output)
train_op = tf.train.AdamOptimizer(learning_rate).minimize(loss)
# Accuracy of the fed batch (tf.metrics.accuracy would report a running average instead)
accuracy = tf.reduce_mean(tf.cast(tf.equal(tf.argmax(ys, 1), tf.argmax(output, 1)), tf.float32))

sess = tf.Session()
sess.run(tf.global_variables_initializer())

train_images, train_labels = load_train(train_data)
test_images = get_test(test_data)
for step in range(step_num):
    train_image, train_label = get_train(train_images, train_labels)
    sess.run(train_op, {xs: train_image, ys: train_label})
    loss_, accuracy_ = sess.run([loss, accuracy], {xs: train_image, ys: train_label})
    print("step:%d" % step, "| loss:%.4f" % loss_, "| accuracy:%.4f" % accuracy_)
    if step % 50 == 0:
        output_ = sess.run(output, {xs: test_images})
        # Predicted digit for each character, via NumPy instead of a new tf.argmax op each time
        output_ = np.argmax(output_, 1)
        print(output_)
```
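Label encoding and the final readout need no TensorFlow ops at all; in TF 1.x graph mode, calling tf.one_hot or tf.argmax inside the training loop adds new nodes to the graph on every call. A plain-Python sketch of both steps (one_hot and decode are illustrative helpers; decode assumes the rows are already in left-to-right character order):

```python
def one_hot(labels, depth):
    """One-hot encode integer labels, the same result as np.eye(depth)[labels]."""
    return [[1.0 if j == label else 0.0 for j in range(depth)] for label in labels]

def decode(logit_rows):
    """Turn per-character logits into the predicted CAPTCHA string (argmax of each row)."""
    return "".join(str(max(range(len(row)), key=row.__getitem__)) for row in logit_rows)

print(one_hot([3, 0], 4))                          # [[0.0, 0.0, 0.0, 1.0], [1.0, 0.0, 0.0, 0.0]]
print(decode([[0.1, 2.0, 0.3], [1.5, 0.2, 0.1]]))  # 10
```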