Python: scraping novels with Scrapy and saving them as JSON

The novel site we scrape today: https://www.hongxiu.com/all?gender=2&catId=-1

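Create the project from a terminal: scrapy startproject hongxiu

Then change into the project directory: cd hongxiu

Next, generate the spider, giving it a name and the domain to crawl: scrapy genspider book hongxiu.com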

Run it (the argument is the spider name, book, not the project name): scrapy crawl book

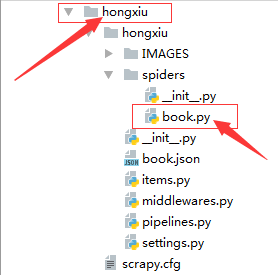

Once created, the project looks like this:

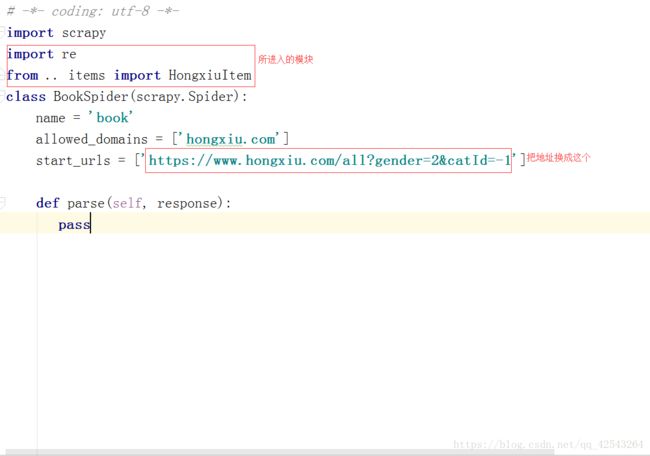

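Then open book.py, change the start URL, and import the modules we need (re and HongxiuItem, as in the complete listing at the end of this post).

Next, we go to the page and extract the links we want: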

    def parse(self, response):
        # Get the href of every category link
        type_list = response.xpath('//ul[@type="category"]//a/@href').extract()
        # Drop the first link
        del type_list[0]
        for type in type_list:
            # Join the relative link onto the site root
            url = 'https://www.hongxiu.com' + type
            # Pull the category id out of the query string
            split = re.compile(r'.*?catId=(.*?)&.*?', re.S)
            catId = re.findall(split, url)
            yield scrapy.Request(url=url, meta={'type': catId[0]}, callback=self.get_content_with_type_url)
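The regular expression above only matches when another parameter follows catId in the query string; otherwise findall returns an empty list and catId[0] fails. As a hedged alternative, the sketch below reads the parameter with the standard library's urllib.parse instead. The helper name extract_cat_id and the sample URLs are assumptions for illustration, not part of the original spider.

```python
from urllib.parse import urlparse, parse_qs


def extract_cat_id(url):
    """Return the catId query parameter of url, or None if it is absent."""
    query = urlparse(url).query
    # parse_qs maps each parameter name to a list of its values
    values = parse_qs(query).get('catId')
    return values[0] if values else None


# Hypothetical category links: catId last, and catId followed by more parameters
print(extract_cat_id('https://www.hongxiu.com/all?gender=2&catId=30020'))
print(extract_cat_id('https://www.hongxiu.com/all?pageNum=1&catId=30020&isFinish=-1'))
```

In parse, the returned value could be passed in meta in place of catId[0], with a None check before the request is yielded.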

Because there are so many novels, we only fetch the links for the first ten pages of each category:

    def get_content_with_type_url(self, response):
        catId = response.meta['type']
        # Pages 1 through 10
        for page_num in range(1, 11):
            url = 'https://www.hongxiu.com/all?pageNum=' + str(page_num) + '&pageSize=10&gender=2&catId=' + catId + '&isFinish=-1&isVip=-1&size=-1&updT=-1&orderBy=0'
            # print(url)
            yield scrapy.Request(url=url, callback=self.get_book_with_url)

Get the detail-page link of each novel:

    def get_book_with_url(self, response):
        # Relative links to the detail pages of the books on this list page
        detail_list = response.xpath('//div[@class="book-info"]/h3/a/@href').extract()
        for book_detail in detail_list:
            url = 'https://www.hongxiu.com' + book_detail
            yield scrapy.Request(url=url, callback=self.get_detail_with_url)

Get each novel's title and category, author, favorites, clicks, word count, and synopsis:

    def get_detail_with_url(self, response):
        # Category, taken from the breadcrumb navigation
        type = response.xpath('//div[@class="crumbs-nav center1020"]/span/a[2]/text()').extract_first()
        print(type)
        name = response.xpath('//div[@class="book-info"]/h1/em/text()').extract_first()
        print(name)
        author = response.xpath('//div[@class="book-info"]/h1/a/text()').extract_first()
        print(author)
        # Each statistic is split across a <span> and an <em>; join the two parts
        total = (response.xpath('//p[@class="total"]/span/text()').extract_first() +
                 response.xpath('//p[@class="total"]/em/text()').extract_first())
        print(total)
        love = (response.xpath('//p[@class="total"]/span[2]/text()').extract_first() +
                response.xpath('//p[@class="total"]/em[2]/text()').extract_first())
        print(love)
        click = (response.xpath('//p[@class="total"]/span[3]/text()').extract_first() +
                 response.xpath('//p[@class="total"]/em[3]/text()').extract_first())
        print(click)
        # The synopsis is a list of text nodes
        introduce = response.xpath('//p[@class="intro"]/text()').extract()
        print(introduce)
        # Cover image URL: the src has no scheme, so prepend one
        url = 'http:' + response.xpath('//div[@class="book-img"]//img/@src').extract_first()
        url = url.replace('\r', '')
        print(url)

Then we open items.py and edit it:

    # -*- coding: utf-8 -*-

    # Define here the models for your scraped items
    #
    # See documentation in:
    # https://doc.scrapy.org/en/latest/topics/items.html

    import scrapy


    class HongxiuItem(scrapy.Item):
        # define the fields for your item here like:
        # name = scrapy.Field()
        type = scrapy.Field()
        name = scrapy.Field()
        author = scrapy.Field()
        total = scrapy.Field()
        love = scrapy.Field()
        click = scrapy.Field()
        introduce = scrapy.Field()
        url = scrapy.Field()
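The total, love and click fields are each built in get_detail_with_url by concatenating two extract_first() results. extract_first() returns None when an XPath matches nothing, and adding None to a string raises a TypeError, so a small guard may be worth having. The sketch below is one way to do it; join_parts is a hypothetical helper and the sample values are made up for illustration.

```python
def join_parts(*parts):
    """Concatenate text fragments, treating None as an empty string."""
    return ''.join(part for part in parts if part is not None)


# Both fragments present
print(join_parts('12.5', '万字'))
# First fragment missing, as when an XPath matches nothing
print(repr(join_parts(None, '万字')))
```

Scrapy's extract_first() also accepts a default argument, for example extract_first(default=''), which has a similar effect at the call site.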

Next, back in book.py, at the end of get_detail_with_url:

item = HongxiuItem()

item['type'] = type

item['name'] = name

item['author'] = author

item['total'] = total

item['love'] = love

item['click'] = click

item['introduce'] = introduce

item['url'] = [url]

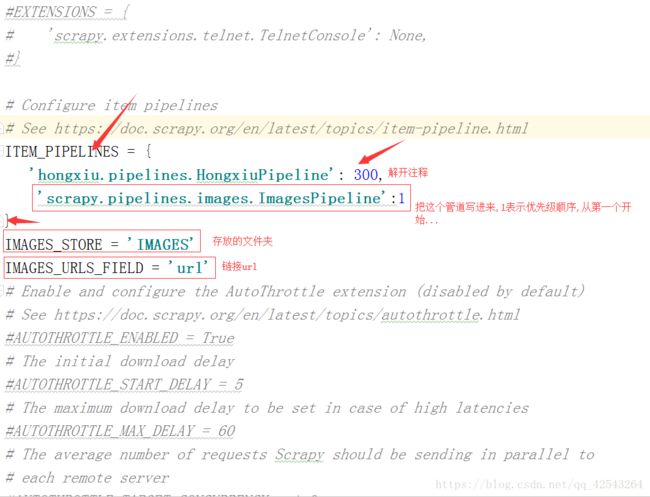

yield item然后我们进入pipelines.py

    # -*- coding: utf-8 -*-

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html

    import codecs
    import json


    class HongxiuPipeline(object):

        def __init__(self):
            self.file = codecs.open(filename='book.json', mode='w', encoding='utf-8')
            # Open a wrapping object so the finished file is valid JSON
            self.file.write('{"book_list":[')
            self.first_item = True

        def process_item(self, item, spider):
            # Write the separator before every item except the first,
            # so there is no trailing comma to trim when the spider closes
            if not self.first_item:
                self.file.write(',\n')
            self.first_item = False
            # ensure_ascii=False keeps Chinese text readable in the file
            self.file.write(json.dumps(dict(item), ensure_ascii=False))
            return item

        def close_spider(self, spider):
            # Close the list and the wrapping object
            self.file.write(']}')
            self.file.close()

Below is today's complete code in book.py:

# -*- coding: utf-8 -*-

import scrapy

import re

from .. items import HongxiuItem

class BookSpider(scrapy.Spider):

name = 'book'

allowed_domains = ['hongxiu.com']

start_urls = ['https://www.hongxiu.com/all?gender=2&catId=-1']

def parse(self, response):

type_list = response.xpath('//ul[@type="category"]//a/@href').extract()

del type_list[0]

for type in type_list:

url = 'https://www.hongxiu.com' + type

split = re.compile(r'.*?catId=(.*?)&.*?',re.S)

catId = re.findall(split,url)

yield scrapy.Request(url=url,meta={'type':catId[0]},callback=self.get_content_with_type_url)

def get_content_with_type_url(self,response):

#https://www.hongxiu.com

# /all?pageNum=1&pageSize=10&gender=2&catId=30020&isFinish=-1&

# isVip=-1&size=-1&updT=-1&orderBy=0

catId = response.meta['type']

for page_num in range(1,11):

url = 'https://www.hongxiu.com/all?pageNum=' + str(page_num) + '&pageSize=10&gender=2&catId=' + catId + '&isFinish=-1&isVip=-1&size=-1&updT=-1&orderBy=0'

# print(url)

yield scrapy.Request(url=url,callback=self.get_book_with_url)

def get_book_with_url(self,response):

detail_list = response.xpath('//div[@class="book-info"]/h3/a/@href').extract()

for book_detail in detail_list:

url = 'https://www.hongxiu.com' + book_detail

yield scrapy.Request(url=url,callback=self.get_detail_with_url)

def get_detail_with_url(self,response):

type = response.xpath('//div[@class="crumbs-nav center1020"]/span/a[2]/text()').extract_first()

print(type)

name = response.xpath('//div[@class="book-info"]/h1/em/text()').extract_first()

print(name)

author = response.xpath('//div[@class="book-info"]/h1/a/text()').extract_first()

print(author)

total = response.xpath('//p[@class="total"]/span/text()').extract_first() +\

response.xpath('//p[@class="total"]/em/text()').extract_first()

print(total)

love = response.xpath('//p[@class="total"]/span[2]/text()').extract_first() +\

response.xpath('//p[@class="total"]/em[2]/text()').extract_first()

print(love)

click = response.xpath('//p[@class="total"]/span[3]/text()').extract_first() +\

response.xpath('//p[@class="total"]/em[3]/text()').extract_first()

print(click)

introduce = response.xpath('//p[@class="intro"]/text()').extract()

print(introduce)

url = 'http:' + response.xpath('//div[@class="book-img"]//img/@src').extract_first()

url = url.replace('\r','')

print(url)

item = HongxiuItem()

item['type'] = type

item['name'] = name

item['author'] = author

item['total'] = total

item['love'] = love

item['click'] = click

item['introduce'] = introduce

item['url'] = [url]

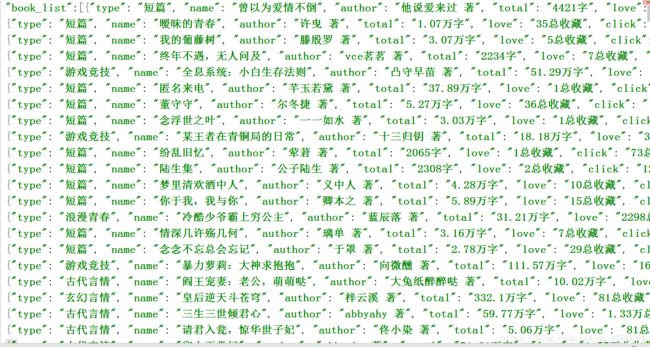

yield item运行结果是如下:

If anyone has a better approach, leave a comment below and let's discuss...