Keras implementation of U-Net, R2U-Net, Attention U-Net and Attention R2U-Net


The code is on GitHub. Remember to give it a star!

My GitHub repository:

https://github.com/lixiaolei1982/Keras-Implementation-of-U-Net-R2U-Net-Attention-U-Net-Attention-R2U-Net.-

I. U-Net architecture:

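Advantages: the architecture is simple and easy to understand. It can be trained on very small datasets and still give good results, and it is widely used for biomedical image segmentation.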

Drawback: the network is relatively shallow, so it performs only moderately on multi-class segmentation.

Reference code:

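```python
from keras.layers import (Input, Conv2D, Dropout, MaxPooling2D,
                          UpSampling2D, Activation, concatenate)
from keras.models import Model


def unet(img_w, img_h, n_label, data_format='channels_first'):
    # Input layout and concatenation axis must both follow data_format
    # (TensorFlow may only support channels_first convolutions on GPU)
    if data_format == 'channels_first':
        inputs = Input((3, img_h, img_w))
        channel_axis = 1
    else:
        inputs = Input((img_h, img_w, 3))
        channel_axis = -1
    x = inputs
    depth = 4
    features = 64
    skips = []
    # Encoder: two 3x3 convs per level, keep the result as a skip connection,
    # then halve the resolution and double the number of filters
    for i in range(depth):
        x = Conv2D(features, (3, 3), activation='relu', padding='same', data_format=data_format)(x)
        x = Dropout(0.2)(x)
        x = Conv2D(features, (3, 3), activation='relu', padding='same', data_format=data_format)(x)
        skips.append(x)
        x = MaxPooling2D((2, 2), data_format=data_format)(x)
        features = features * 2
    # Bottleneck
    x = Conv2D(features, (3, 3), activation='relu', padding='same', data_format=data_format)(x)
    x = Dropout(0.2)(x)
    x = Conv2D(features, (3, 3), activation='relu', padding='same', data_format=data_format)(x)
    # Decoder: upsample, concatenate the matching skip, then two 3x3 convs
    for i in reversed(range(depth)):
        features = features // 2
        x = UpSampling2D(size=(2, 2), data_format=data_format)(x)
        x = concatenate([skips[i], x], axis=channel_axis)
        x = Conv2D(features, (3, 3), activation='relu', padding='same', data_format=data_format)(x)
        x = Dropout(0.2)(x)
        x = Conv2D(features, (3, 3), activation='relu', padding='same', data_format=data_format)(x)
    # 1x1 conv maps to n_label channels; sigmoid gives per-pixel, per-class probabilities
    conv6 = Conv2D(n_label, (1, 1), padding='same', data_format=data_format)(x)
    conv7 = Activation('sigmoid')(conv6)
    model = Model(inputs=inputs, outputs=conv7)
    # model.compile(optimizer=Adam(learning_rate=1e-5), loss=[focal_loss()], metrics=['accuracy', dice_coef])
    return model
```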
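You can see what the loops in unet() build by tracing the feature-map shapes and counting the convolution weights by hand. The pure-Python sketch below does this for depth = 4 and features = 64. The helpers unet_summary and conv_params are hypothetical and are not part of the repository. The count assumes the layers exactly as listed above: every Conv2D has a bias, and Dropout, pooling and upsampling have no weights.

```python
def conv_params(k, c_in, c_out):
    """Number of weights plus biases in a k x k Conv2D layer."""
    return k * k * c_in * c_out + c_out


def unet_summary(img_w, img_h, n_label, in_ch=3, depth=4, features=64):
    """Trace (channels, height, width) after each level of unet() and count
    its trainable parameters. Dropout, pooling and upsampling add no weights."""
    if img_w % 2 ** depth or img_h % 2 ** depth:
        raise ValueError(f"img_w and img_h must be divisible by {2 ** depth}")
    shapes, params, skip_channels = [], 0, []
    h, w, c_in = img_h, img_w, in_ch
    for _ in range(depth):                          # encoder level: two 3x3 convs
        params += conv_params(3, c_in, features) + conv_params(3, features, features)
        skip_channels.append(features)
        shapes.append((features, h, w))
        h, w, c_in = h // 2, w // 2, features       # 2x2 max pooling
        features *= 2
    params += conv_params(3, c_in, features) + conv_params(3, features, features)
    shapes.append((features, h, w))                 # bottleneck
    c_in = features
    for skip_ch in reversed(skip_channels):         # decoder level
        features //= 2
        h, w = h * 2, w * 2                         # 2x2 upsampling
        # the first conv sees skip channels + upsampled channels after concatenation
        params += conv_params(3, skip_ch + c_in, features) + conv_params(3, features, features)
        c_in = features
        shapes.append((features, h, w))
    params += conv_params(1, c_in, n_label)         # final 1x1 conv
    return shapes, params


shapes, params = unet_summary(256, 256, n_label=1)
print(shapes[4])   # (1024, 16, 16) at the bottleneck
print(params)      # 31378945
```

With a 256x256 input and one output class, this gives about 31.4 million parameters. Most of them sit in the 512- and 1024-filter levels. The figure can be cross-checked against model.count_params() on the Keras model. The divisibility check reflects the four 2x2 poolings: if they do not divide the input evenly, the upsampled maps no longer match the skip connections in size.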

II. R2U-Net

Recurrent Residual Convolutional Neural Network based on U-Net (R2U-Net) for Medical Image Segmentation.

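Advantages: adds residual connections and recurrent convolutions to U-Net. This deepens the network while keeping gradients from vanishing. It is used for medical image segmentation and segments better than U-Net.

Drawback: the model size and parameter count are far larger than U-Net's.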

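```python
from keras.layers import Input, Conv2D, MaxPooling2D, Activation
from keras.models import Model

# rec_res_block() and up_and_concate() are helpers that are not shown in this
# post; see the GitHub repository linked above.


def r2_unet(img_w, img_h, n_label, data_format='channels_first'):
    if data_format == 'channels_first':
        inputs = Input((3, img_h, img_w))
    else:
        inputs = Input((img_h, img_w, 3))
    x = inputs
    depth = 4
    features = 64
    skips = []
    # Encoder: U-Net's plain double conv is replaced by a recurrent residual block
    for i in range(depth):
        x = rec_res_block(x, features, data_format=data_format)
        skips.append(x)
        x = MaxPooling2D((2, 2), data_format=data_format)(x)
        features = features * 2
    x = rec_res_block(x, features, data_format=data_format)
    # Decoder: up_and_concate presumably upsamples x and concatenates skips[i]
    for i in reversed(range(depth)):
        features = features // 2
        x = up_and_concate(x, skips[i], data_format=data_format)
        x = rec_res_block(x, features, data_format=data_format)
    conv6 = Conv2D(n_label, (1, 1), padding='same', data_format=data_format)(x)
    conv7 = Activation('sigmoid')(conv6)
    model = Model(inputs=inputs, outputs=conv7)
    # model.compile(optimizer=Adam(learning_rate=1e-6), loss=[dice_coef_loss], metrics=['accuracy', dice_coef])
    return model
```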
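rec_res_block itself is not shown in this post. As an illustration only, here is a NumPy sketch of a recurrent residual block in the spirit of R2U-Net. It is not the repository's implementation. The 1x1 input projection, the two stacked recurrent conv layers, t = 2 and the exact recurrence O_0 = ReLU(W_f*x + b), O_k = ReLU(W_f*x + W_r*O_{k-1} + b) are all assumptions. The names conv3x3_same, recurrent_conv, rec_res_block_np and init_params are hypothetical.

```python
import numpy as np


def conv3x3_same(x, w, b):
    """3x3 'same' convolution (cross-correlation, as in Conv2D), channels_first.
    x: (C_in, H, W), w: (C_out, C_in, 3, 3), b: (C_out,)."""
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))             # zero padding
    out = np.zeros((w.shape[0], h, wd))
    for dy in range(3):
        for dx in range(3):
            patch = xp[:, dy:dy + h, dx:dx + wd]         # shifted view, (C_in, H, W)
            out += np.einsum('oi,ihw->ohw', w[:, :, dy, dx], patch)
    return out + b[:, None, None]


def recurrent_conv(x, wf, wr, b, t=2):
    """Recurrent conv layer: O_0 = ReLU(Wf*x + b), O_k = ReLU(Wf*x + Wr*O_{k-1} + b).
    The same weights wf and wr are reused at every step."""
    feed = conv3x3_same(x, wf, b)                        # feed-forward term, computed once
    o = np.maximum(feed, 0.0)
    for _ in range(t):
        o = np.maximum(feed + conv3x3_same(o, wr, np.zeros_like(b)), 0.0)
    return o


def rec_res_block_np(x, p, t=2):
    """Hypothetical recurrent residual block: 1x1 projection, two recurrent
    conv layers, and an identity shortcut around them."""
    proj = np.einsum('oi,ihw->ohw', p['w1x1'], x)        # match the output channel count
    y = recurrent_conv(proj, p['wf1'], p['wr1'], p['b1'], t)
    y = recurrent_conv(y, p['wf2'], p['wr2'], p['b2'], t)
    return proj + y                                      # residual addition


def init_params(c_in, c_out, rng, scale=0.1):
    """Random weights for rec_res_block_np (illustration only)."""
    def kernel():
        return scale * rng.standard_normal((c_out, c_out, 3, 3))
    return {'w1x1': scale * rng.standard_normal((c_out, c_in)),
            'wf1': kernel(), 'wr1': kernel(), 'b1': np.zeros(c_out),
            'wf2': kernel(), 'wr2': kernel(), 'b2': np.zeros(c_out)}


rng = np.random.default_rng(0)
x = rng.standard_normal((3, 16, 16))
y = rec_res_block_np(x, init_params(3, 8, rng))
print(y.shape)  # (8, 16, 16)
```

In this sketch, the recurrence makes each layer effectively deeper and widens its receptive field while reusing the same two kernels. The shortcut `proj + y` gives gradients a path around the convolutions. Each recurrent layer holds two 3x3 kernels (wf and wr) instead of one, which is consistent with the larger model size noted above.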

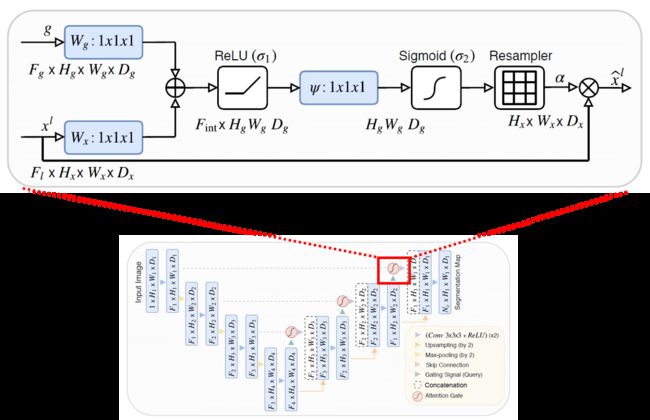

III. Attention U-Net

Attention U-Net: Learning Where to Look for the Pancreas

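Advantages: adds attention gates to U-Net, which makes the skip connections more selective.

Drawback: the network is no deeper than U-Net.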

```python
from keras.layers import Input, Conv2D, Dropout, MaxPooling2D, Activation
from keras.models import Model

# attention_up_and_concate() is a helper that is not shown in this post;
# see the GitHub repository linked above.


def att_unet(img_w, img_h, n_label, data_format='channels_first'):
    if data_format == 'channels_first':
        inputs = Input((3, img_h, img_w))
    else:
        inputs = Input((img_h, img_w, 3))
    x = inputs
    depth = 4
    features = 64
    skips = []
    # Encoder: same as unet()
    for i in range(depth):
        x = Conv2D(features, (3, 3), activation='relu', padding='same', data_format=data_format)(x)
        x = Dropout(0.2)(x)
        x = Conv2D(features, (3, 3), activation='relu', padding='same', data_format=data_format)(x)
        skips.append(x)
        x = MaxPooling2D((2, 2), data_format=data_format)(x)
        features = features * 2
    # Bottleneck
    x = Conv2D(features, (3, 3), activation='relu', padding='same', data_format=data_format)(x)
    x = Dropout(0.2)(x)
    x = Conv2D(features, (3, 3), activation='relu', padding='same', data_format=data_format)(x)
    # Decoder: as its name suggests, attention_up_and_concate upsamples x,
    # passes skips[i] through an attention gate, and concatenates the two
    for i in reversed(range(depth)):
        features = features // 2
        x = attention_up_and_concate(x, skips[i], data_format=data_format)
        x = Conv2D(features, (3, 3), activation='relu', padding='same', data_format=data_format)(x)
        x = Dropout(0.2)(x)
        x = Conv2D(features, (3, 3), activation='relu', padding='same', data_format=data_format)(x)
    conv6 = Conv2D(n_label, (1, 1), padding='same', data_format=data_format)(x)
    conv7 = Activation('sigmoid')(conv6)
    model = Model(inputs=inputs, outputs=conv7)
    # model.compile(optimizer=Adam(learning_rate=1e-5), loss=[focal_loss()], metrics=['accuracy', dice_coef])
    return model
```
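attention_up_and_concate is not shown either. The additive attention gate described in the Attention U-Net paper computes a per-pixel coefficient α = σ(ψᵀ ReLU(W_xᵀ x + W_gᵀ g + b_g) + b_ψ) and multiplies the skip features x by α. The NumPy sketch below makes several assumptions, and it is not the repository's code:

- W_x, W_g and ψ are 1x1 convolutions, written as plain channel matrices.
- The gating signal g is first upsampled to the skip's resolution.
- The upsampled features and the gated skip are then concatenated.

All function names here are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def upsample2x(g):
    """Nearest-neighbour 2x upsampling of a (C, H, W) array, like UpSampling2D((2, 2))."""
    return g.repeat(2, axis=1).repeat(2, axis=2)


def attention_gate(x, g, wx, wg, wpsi, bg=0.0, bpsi=0.0):
    """Additive attention gate using 1x1 convolutions (channel-mixing matrices).
    x: skip features (Cx, H, W); g: gating signal (Cg, H, W) with the same H, W.
    wx: (Ci, Cx), wg: (Ci, Cg), wpsi: (Ci,). Returns the gated x and alpha."""
    theta_x = np.einsum('ic,chw->ihw', wx, x)
    phi_g = np.einsum('ic,chw->ihw', wg, g)
    f = np.maximum(theta_x + phi_g + bg, 0.0)                 # ReLU of the summed projections
    alpha = sigmoid(np.einsum('i,ihw->hw', wpsi, f) + bpsi)   # one coefficient per pixel
    return x * alpha[None, :, :], alpha


def attention_up_and_concat_np(down, skip, wx, wg, wpsi):
    """Hypothetical NumPy analogue of attention_up_and_concate:
    upsample the coarser features, gate the skip with them, then concatenate."""
    up = upsample2x(down)
    gated, _ = attention_gate(skip, up, wx, wg, wpsi)
    return np.concatenate([up, gated], axis=0)                # channels_first concat


rng = np.random.default_rng(0)
down = rng.standard_normal((16, 4, 4))      # coarser decoder features
skip = rng.standard_normal((8, 8, 8))       # encoder skip connection
out = attention_up_and_concat_np(down, skip,
                                 0.1 * rng.standard_normal((4, 8)),
                                 0.1 * rng.standard_normal((4, 16)),
                                 0.1 * rng.standard_normal(4))
print(out.shape)  # (24, 8, 8)
```

Because α is computed per pixel, the gate scales skip features toward zero wherever the coarser decoder features deem the region irrelevant. In practice, this is what "more selective skip connections" means. The gate adds only a few 1x1 convolutions, which is why the network is no deeper than U-Net.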

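IV. Attention R2U-Net: integration of two recent advanced works (R2U-Net + Attention U-Net)

Advantages: adds residual connections and recurrent convolutions to U-Net, which deepens the network while keeping gradients from vanishing. It also adds attention gates, so the network learns to focus on the useful features.

Drawback: the model size and parameter count are far larger than U-Net's.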

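```python
from keras.layers import Input, Conv2D, MaxPooling2D, Activation
from keras.models import Model

# rec_res_block() and attention_up_and_concate() are helpers that are not shown
# in this post; see the GitHub repository linked above.


def att_r2_unet(img_w, img_h, n_label, data_format='channels_first'):
    if data_format == 'channels_first':
        inputs = Input((3, img_h, img_w))
    else:
        inputs = Input((img_h, img_w, 3))
    x = inputs
    depth = 4
    features = 64
    skips = []
    # Encoder: recurrent residual blocks, as in r2_unet()
    for i in range(depth):
        x = rec_res_block(x, features, data_format=data_format)
        skips.append(x)
        x = MaxPooling2D((2, 2), data_format=data_format)(x)
        features = features * 2
    x = rec_res_block(x, features, data_format=data_format)
    # Decoder: attention-gated skip connections, as in att_unet()
    for i in reversed(range(depth)):
        features = features // 2
        x = attention_up_and_concate(x, skips[i], data_format=data_format)
        x = rec_res_block(x, features, data_format=data_format)
    conv6 = Conv2D(n_label, (1, 1), padding='same', data_format=data_format)(x)
    conv7 = Activation('sigmoid')(conv6)
    model = Model(inputs=inputs, outputs=conv7)
    # model.compile(optimizer=Adam(learning_rate=1e-6), loss=[dice_coef_loss], metrics=['accuracy', dice_coef])
    return model
```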
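The commented-out compile calls refer to focal_loss, dice_coef and dice_coef_loss, which are also not defined in this post. For reference, the NumPy sketch below shows commonly used definitions:

- The Dice coefficient 2|A∩B| / (|A| + |B|), with a smoothing term.
- The Dice loss, taken as 1 − Dice. Some Keras code uses −Dice instead.
- The binary focal loss of Lin et al., with γ = 2 and α = 0.25 as assumed defaults.

These are illustrative and may differ from the repository's functions. For example, the original calls focal_loss() as a factory. To train with them, you would need to rewrite them with Keras/TensorFlow tensor ops.

```python
import numpy as np


def dice_coef(y_true, y_pred, smooth=1.0):
    """Soft Dice coefficient over all pixels; smooth avoids division by zero."""
    y_true = np.asarray(y_true, dtype=float).ravel()
    y_pred = np.asarray(y_pred, dtype=float).ravel()
    intersection = np.sum(y_true * y_pred)
    return (2.0 * intersection + smooth) / (np.sum(y_true) + np.sum(y_pred) + smooth)


def dice_coef_loss(y_true, y_pred):
    """Dice loss as 1 - Dice (assumed form)."""
    return 1.0 - dice_coef(y_true, y_pred)


def focal_loss(y_true, y_pred, gamma=2.0, alpha=0.25, eps=1e-7):
    """Mean binary focal loss: -alpha_t * (1 - p_t)**gamma * log(p_t)."""
    y_true = np.asarray(y_true, dtype=float)
    p = np.clip(np.asarray(y_pred, dtype=float), eps, 1.0 - eps)
    p_t = np.where(y_true == 1, p, 1.0 - p)              # probability of the true class
    alpha_t = np.where(y_true == 1, alpha, 1.0 - alpha)  # class-balancing weight
    return float(np.mean(-alpha_t * (1.0 - p_t) ** gamma * np.log(p_t)))


print(dice_coef([1, 0, 1, 1], [1, 0, 0, 1]))  # (2*2 + 1) / (3 + 2 + 1) = 5/6
```

With gamma = 0 the focal loss reduces to an alpha-weighted binary cross-entropy. Larger gamma down-weights pixels that are already classified confidently, which helps when the foreground occupies only a small part of the image.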