Flutter Plugin Development


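Contents

- What problem do Flutter plugins solve
- How communication works
- Supported data types
- step1 Create the plugin project
- How MethodChannel interaction works
- step2 Write the API and the platform implementations
- Flutter plugin example
  - step2.1 Define the API
  - step2.2 Implement the Android API
  - step2.3 Implement the iOS API
  - step2.4 Call the plugin from Flutter
- Calling native code from Flutter with arguments
- Receiving Flutter's data on the platform side
- Returning results from the platform to Flutter
- How the platform calls Flutter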

What problem do Flutter plugins solve

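Flutter is a cross-platform framework: one codebase runs on both Android and iOS. Some features, however, need a different implementation on each platform, such as requesting permissions or calling third-party APIs. This is where a Flutter plugin helps. A plugin contains an API defined in Dart plus a separate implementation for Android and for iOS, so the app calls one unified API on both platforms.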

How communication works

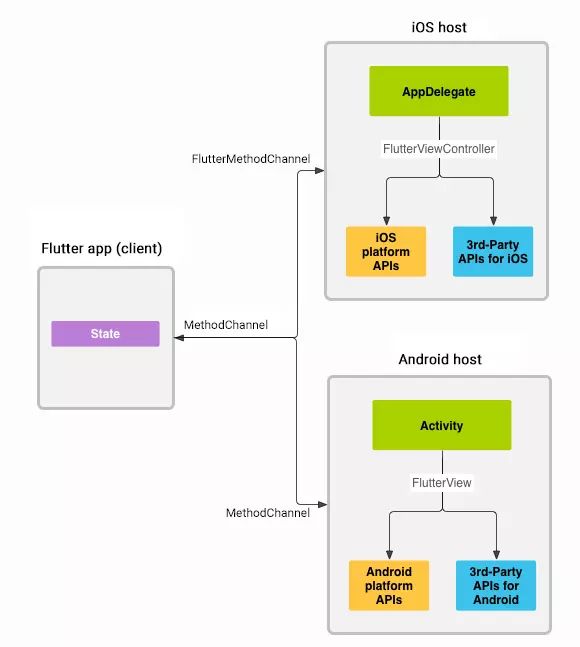

Messages are passed between the client (the UI) and the host (the platform) over platform channels, as shown in the figure.

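On the Flutter side, MethodChannel lets you send messages that correspond to method calls. On the platform side, MethodChannel on Android and FlutterMethodChannel on iOS receive those method calls and send back results. This lets you build platform plugins with very little boilerplate code.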
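Platform-channel messages and replies are passed asynchronously: the caller does not block while the other side works, and the result arrives later. As a rough mental model only, here is a self-contained Java sketch that imitates that request/reply shape with a `CompletableFuture`. `AsyncChannelDemo`, `Handler` and its `invokeMethod` are hypothetical names chosen for this illustration. They are not the Flutter API.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

/**
 * Hypothetical in-process model of a method channel's request/reply shape.
 * Not Flutter code: the caller gets a future back, and the handler answers later.
 */
class AsyncChannelDemo {

    /** Receives a call and completes the reply when its work is done. */
    interface Handler {
        void onCall(String method, Object arguments, CompletableFuture<Object> reply);
    }

    // Stands in for the platform thread; the caller never waits on it directly.
    private final ExecutorService platformThread = Executors.newSingleThreadExecutor();
    private Handler handler;

    void setHandler(Handler handler) {
        this.handler = handler;
    }

    /** Caller side: returns immediately with a future for the eventual result. */
    CompletableFuture<Object> invokeMethod(String method, Object arguments) {
        CompletableFuture<Object> reply = new CompletableFuture<>();
        platformThread.execute(() -> handler.onCall(method, arguments, reply));
        return reply;
    }

    void shutdown() {
        platformThread.shutdown();
    }

    public static void main(String[] args) {
        AsyncChannelDemo channel = new AsyncChannelDemo();
        channel.setHandler((method, arguments, reply) -> {
            if ("getPlatformVersion".equals(method)) {
                reply.complete("Java " + System.getProperty("java.version"));
            } else {
                reply.completeExceptionally(new UnsupportedOperationException(method));
            }
        });
        // join() blocks only for this demo; UI code would attach a callback instead
        System.out.println(channel.invokeMethod("getPlatformVersion", null).join());
        channel.shutdown();
    }
}
```

The future models the Dart `Future` that `invokeMethod` returns. The single-thread executor stands in for "the other side", so the caller's thread stays free.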

Supported data types

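Since the two sides need to communicate, two questions naturally come up:

What data types can a MethodChannel carry?

How do Dart types map to Android and iOS types?

The answer is the following table: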

| Dart | Android | iOS |
|---|---|---|
| null | null | nil (NSNull when nested) |
| bool | java.lang.Boolean | NSNumber numberWithBool: |
| int | java.lang.Integer | NSNumber numberWithInt: |
| int if 32 bits not enough | java.lang.Long | NSNumber numberWithLong: |
| double | java.lang.Double | NSNumber numberWithDouble: |
| String | java.lang.String | NSString |
| Uint8List | byte[] | FlutterStandardTypedData typedDataWithBytes: |
| Int32List | int[] | FlutterStandardTypedData typedDataWithInt32: |
| Int64List | long[] | FlutterStandardTypedData typedDataWithInt64: |
| Float64List | double[] | FlutterStandardTypedData typedDataWithFloat64: |
| List | java.util.ArrayList | NSArray |
| Map | java.util.HashMap | NSDictionary |
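In practice the two `int` rows mean that an Android handler cannot assume every Dart `int` arrives as `Integer`. The sketch below imitates that rule in plain Java. `IntMappingDemo`, `toPlatformInt` and `readAsLong` are hypothetical names, and the code is not the codec's source.

```java
/**
 * Illustrative only: mirrors the table rule "int -> java.lang.Integer,
 * or java.lang.Long if 32 bits are not enough". Not the Flutter codec itself.
 */
class IntMappingDemo {

    /** Returns the boxed Java type that a Dart int with this value maps to. */
    static Object toPlatformInt(long dartValue) {
        if (dartValue >= Integer.MIN_VALUE && dartValue <= Integer.MAX_VALUE) {
            return Integer.valueOf((int) dartValue); // fits in 32 bits
        }
        return Long.valueOf(dartValue); // needs 64 bits
    }

    /** Safe read on the platform side: go through Number instead of casting to Integer. */
    static long readAsLong(Object value) {
        return ((Number) value).longValue();
    }

    public static void main(String[] args) {
        long[] samples = {42L, 2147483647L, 2147483648L, -5_000_000_000L};
        for (long v : samples) {
            Object mapped = toPlatformInt(v);
            System.out.println(v + " -> " + mapped.getClass().getSimpleName()
                    + " (read back as " + readAsLong(mapped) + ")");
        }
    }
}
```

Reading numbers through `Number` avoids the alternative, a direct cast to `Integer`, which throws a `ClassCastException` when a large value arrives as `Long`.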

step1 Create the plugin project

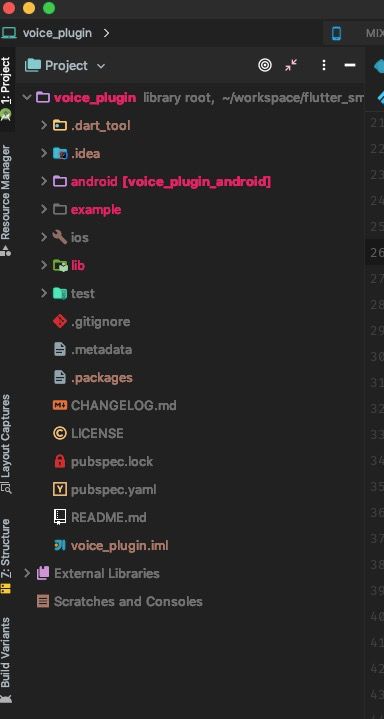

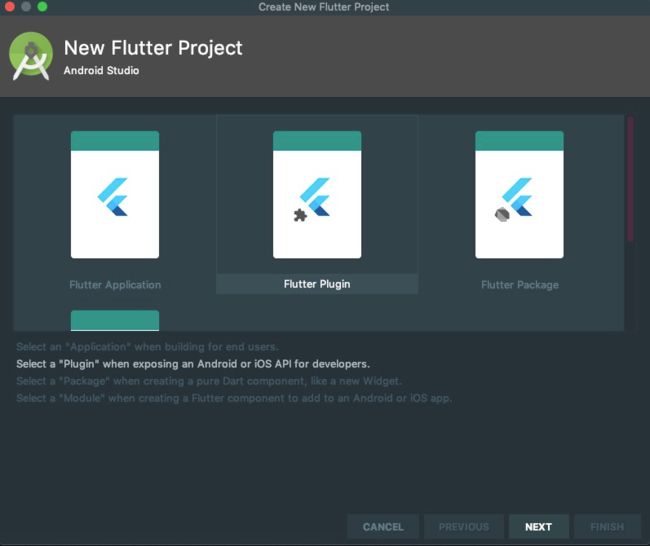

In the IDE choose File -> New -> New Flutter Project, select Flutter Plugin, give it a name and click Next through the remaining steps.

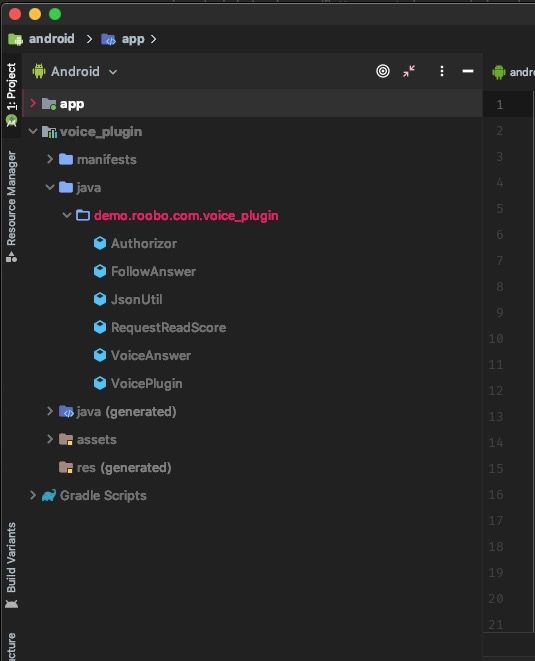

This creates a plugin named voice_plugin.

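Three files matter most:

- lib/voice_plugin.dart: the API definition
- android/src/main/java/demo/roobo/com/voice_plugin/VoicePlugin.java: the Android implementation
- ios/Classes/VoicePlugin.m: the iOS implementation (with the Swift template, VoicePlugin.m only forwards registration to SwiftVoicePlugin, which holds the logic)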

How MethodChannel interaction works

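All interaction goes through MethodChannel, which handles communication between Dart and native code. Here, voice_plugin is the channel's name. Flutter locates the matching MethodChannel on the platform only through this exact name, and that lookup is what connects Flutter to the platform. Each platform must therefore register a MethodChannel with the same name, voice_plugin.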

lib/voice_plugin.dart

```dart
import 'dart:async';

import 'package:flutter/services.dart';

class VoicePlugin {
  static const MethodChannel _channel = const MethodChannel('voice_plugin');

  static Future<String> get platformVersion async {
    final String version = await _channel.invokeMethod('getPlatformVersion');
    return version;
  }
}
```

On Android, register a channel named voice_plugin:

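```java
package demo.roobo.com.voice_plugin;

import android.util.Log;

import io.flutter.plugin.common.MethodCall;
import io.flutter.plugin.common.MethodChannel;
import io.flutter.plugin.common.MethodChannel.MethodCallHandler;
import io.flutter.plugin.common.MethodChannel.Result;
import io.flutter.plugin.common.PluginRegistry.Registrar;

public class VoicePlugin implements MethodCallHandler {
    private static final String TAG = "VoicePlugin";

    /** Plugin registration (v1 Android embedding); the name must match the Dart side. */
    public static void registerWith(Registrar registrar) {
        final MethodChannel channel = new MethodChannel(registrar.messenger(), "voice_plugin");
        channel.setMethodCallHandler(new VoicePlugin());
        Log.d(TAG, "voice_plugin channel registered");
    }

    @Override
    public void onMethodCall(MethodCall call, Result result) {
        switch (call.method) {
            case "getPlatformVersion":
                result.success("Android " + android.os.Build.VERSION.RELEASE);
                break;
            default:
                result.notImplemented();
                break;
        }
    }
}
```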

On iOS, register the channel named voice_plugin:

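```swift
import Flutter
import UIKit

public class SwiftVoicePlugin: NSObject, FlutterPlugin {
    public static func register(with registrar: FlutterPluginRegistrar) {
        let channel = FlutterMethodChannel(name: "voice_plugin", binaryMessenger: registrar.messenger())
        let instance = SwiftVoicePlugin()
        registrar.addMethodCallDelegate(instance, channel: channel)
    }

    public func handle(_ call: FlutterMethodCall, result: @escaping FlutterResult) {
        switch call.method {
        case "getPlatformVersion":
            result("iOS " + UIDevice.current.systemVersion)
        default:
            // Same as Android's result.notImplemented()
            result(FlutterMethodNotImplemented)
        }
    }
}
```

The Objective-C entry point (ios/Classes/VoicePlugin.m) only forwards registration to the Swift class:

```objc
#import "VoicePlugin.h"
#if __has_include(<voice_plugin/voice_plugin-Swift.h>)
#import <voice_plugin/voice_plugin-Swift.h>
#else
#import "voice_plugin-Swift.h"
#endif

@implementation VoicePlugin
+ (void)registerWithRegistrar:(NSObject<FlutterPluginRegistrar>*)registrar {
  [SwiftVoicePlugin registerWithRegistrar:registrar];
}
@end
```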

At this point the bridge between Flutter and native code is in place.
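The only thing linking the Dart side to the platform side is the channel name string. The following toy Java model of that lookup assumes a simple map from names to handlers. `ChannelRegistryDemo` and its methods are hypothetical. Only the error message is modelled on the `MissingPluginException` text in Flutter's `invokeMethod`.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.BiFunction;

/**
 * Hypothetical model of how a channel NAME routes a call: both sides must
 * use the same string ("voice_plugin" in this article).
 */
class ChannelRegistryDemo {

    static class MissingPluginException extends RuntimeException {
        MissingPluginException(String message) {
            super(message);
        }
    }

    private final Map<String, BiFunction<String, Object, Object>> handlers = new HashMap<>();

    /** Platform side: register a handler under a channel name. */
    void register(String channelName, BiFunction<String, Object, Object> handler) {
        handlers.put(channelName, handler);
    }

    /** Dart side (simulated): find the handler by channel name, or fail. */
    Object invoke(String channelName, String method, Object arguments) {
        BiFunction<String, Object, Object> handler = handlers.get(channelName);
        if (handler == null) {
            throw new MissingPluginException(
                    "No implementation found for method " + method + " on channel " + channelName);
        }
        return handler.apply(method, arguments);
    }

    public static void main(String[] args) {
        ChannelRegistryDemo registry = new ChannelRegistryDemo();
        registry.register("voice_plugin", (method, arguments) ->
                "getPlatformVersion".equals(method) ? "Android (simulated)" : null);
        System.out.println(registry.invoke("voice_plugin", "getPlatformVersion", null));
        try {
            // A typo in the channel name means nothing is found
            registry.invoke("voice-plugin", "getPlatformVersion", null);
        } catch (MissingPluginException e) {
            System.out.println(e.getMessage());
        }
    }
}
```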

step2 Write the API and the platform implementations

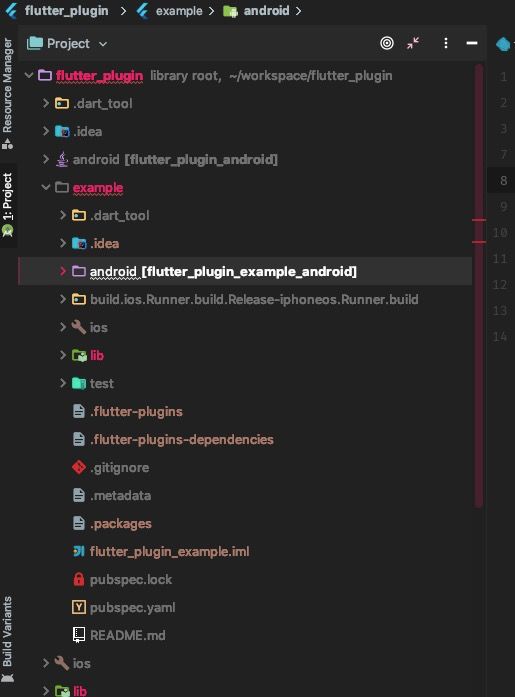

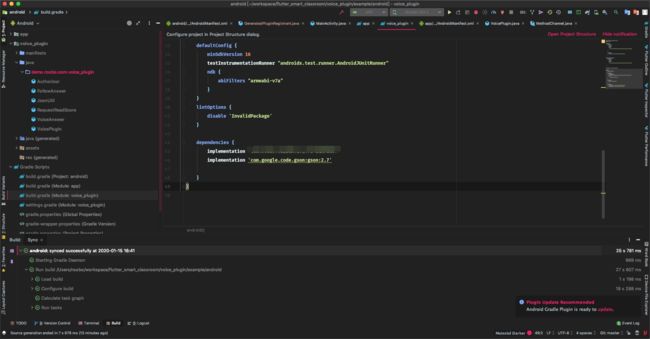

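When you start writing platform code, you will notice there is no code completion, and even package imports cannot be resolved. The IDE shows a hint that platform-specific code should be edited with that folder opened as its own project. Click the link.

After opening the Android project you will see two modules:

- app: the Android app of the plugin's example project, useful for testing communication between Android and Dart
- voice_plugin: where the Android side of the plugin API is implemented

This works like any other Android project: you can add dependencies with Gradle and write Java or Kotlin code.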

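First, define the features you need in lib/voice_plugin.dart of the plugin project, for example register, getRead, getASR and platformVersion.

Then, in onMethodCall of android/src/main/java/demo/roobo/com/voice_plugin/VoicePlugin.java, intercept these calls. Read their arguments, implement the feature and return a value.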

Pass arguments from Dart like this:

```dart
static Future<void> register(String sn, String publicKey) async {
  Map<String, Object> map = {"sn": sn, "publicKey": publicKey};
  await _channel.invokeMethod('register', map);
}
```

Read and handle them on Android like this:

```java
private void registerAndInit(MethodCall call, Result result) {
    String sn = call.argument("sn");
    String publicKey = call.argument("publicKey");
    Log.d("voicePlugin", "sn: " + sn + " pbk: " + publicKey);
    init(mContext, sn, publicKey, result);
}
```

Return data to Flutter like this. The Result object is created by the Flutter framework and handed to onMethodCall(MethodCall call, Result result):

```java
result.success(answer.Data.pronunciation + "");
```
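To make `call.argument("sn")` less opaque, here is a simplified stand-in for `MethodCall` that only handles `Map` arguments. `SimpleMethodCall` and `readRegisterArgs` are hypothetical names. The real `io.flutter.plugin.common.MethodCall` is more general, so treat this as a sketch of the idea rather than its implementation.

```java
import java.util.HashMap;
import java.util.Map;

/**
 * Simplified stand-in for MethodCall, written only to show how
 * call.argument("sn") pulls a value out of the Map that Dart sent.
 */
class ArgumentDemo {

    static final class SimpleMethodCall {
        final String method;
        final Object arguments;

        SimpleMethodCall(String method, Object arguments) {
            this.method = method;
            this.arguments = arguments;
        }

        /** Value for key when arguments is a Map; null when there are no arguments. */
        @SuppressWarnings("unchecked")
        <T> T argument(String key) {
            if (arguments == null) {
                return null;
            }
            if (arguments instanceof Map) {
                return (T) ((Map<?, ?>) arguments).get(key);
            }
            throw new ClassCastException("arguments is not a Map: " + arguments.getClass());
        }
    }

    /** Mirrors registerAndInit: read both keys and reject missing values. */
    static String readRegisterArgs(SimpleMethodCall call) {
        String sn = call.argument("sn");
        String publicKey = call.argument("publicKey");
        if (sn == null || publicKey == null) {
            throw new IllegalArgumentException("sn and publicKey are required");
        }
        return sn + "/" + publicKey;
    }

    public static void main(String[] args) {
        Map<String, Object> map = new HashMap<>();
        map.put("sn", "SN-001");
        map.put("publicKey", "pk");
        System.out.println(readRegisterArgs(new SimpleMethodCall("register", map)));
    }
}
```

The generic return type is why `String sn = call.argument("sn");` needs no explicit cast. The cast happens at the assignment, so a wrongly typed value fails there.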

Flutter plugin example

step2.1 Define the API

```dart
import 'dart:async';

import 'package:flutter/services.dart';

class VoicePlugin {
  static const MethodChannel _channel = const MethodChannel('voice_plugin');

  static Future<String> get platformVersion async {
    final String version = await _channel.invokeMethod('getPlatformVersion');
    return version;
  }

  static Future<void> register(String sn, String publicKey) async {
    Map<String, Object> map = {"sn": sn, "publicKey": publicKey};
    await _channel.invokeMethod('register', map);
  }

  static Future<String> get getASR async {
    final String result = await _channel.invokeMethod('getASR');
    return result;
  }

  static Future<String> getRead(String qa) async {
    Map<String, Object> map = {"qa": qa};
    final String result = await _channel.invokeMethod('getRead', map);
    return result;
  }
}
```

step2.2 Implement the Android API

```java
package demo.roobo.com.voice_plugin;

import android.content.Context;
import android.util.Log;

import com.google.gson.Gson;

import io.flutter.plugin.common.MethodCall;
import io.flutter.plugin.common.MethodChannel;
import io.flutter.plugin.common.MethodChannel.MethodCallHandler;
import io.flutter.plugin.common.MethodChannel.Result;
import io.flutter.plugin.common.PluginRegistry.Registrar;

// Imports for the Roobo VUI SDK classes (VUISDK, IRooboRecognizeEngine, SDKConstant, ...),
// JsonUtil, RequestReadScore and the VoiceAnswer / FollowAnswer models are omitted here.

public class VoicePlugin implements MethodCallHandler {
    private static final String TAG = "VoicePlugin";
    private static Context mContext;

    /** Plugin registration (v1 Android embedding). */
    public static void registerWith(Registrar registrar) {
        mContext = registrar.context();
        final MethodChannel channel = new MethodChannel(registrar.messenger(), "voice_plugin");
        channel.setMethodCallHandler(new VoicePlugin());
        Log.d(TAG, "registerWith: context initialised");
    }

    @Override
    public void onMethodCall(MethodCall call, Result result) {
        switch (call.method) {
            case "getPlatformVersion":
                result.success("Android " + android.os.Build.VERSION.RELEASE);
                break;
            case "register":
                registerAndInit(call, result);
                break;
            case "getASR":
                onASRClick(result);
                break;
            case "getRead":
                onReadClick(call, result);
                break;
            default:
                result.notImplemented();
                break;
        }
    }

    private void registerAndInit(MethodCall call, Result result) {
        String sn = call.argument("sn");
        String publicKey = call.argument("publicKey");
        Log.d(TAG, "sn: " + sn + " pbk: " + publicKey);
        init(mContext, sn, publicKey, result);
    }

    public void onASRClick(final Result result) {
        // Speech recognition through the third-party SDK
        IRooboRecognizeEngine engine = VUISDK.getInstance().getRecognizeEngine();
        engine.stopListening();
        engine.setParameter(SDKConstant.Parameter.PARAMERER_AI_ON_OFF, "off");
        engine.setCloudResultListener(new OnCloudResultListener() {
            @Override
            public void onResult(int i, String s) {
                Log.d(TAG, "onASRResult() called with: i = [" + i + "], s = [" + s + "]");
                VoiceAnswer voiceAnswer = new Gson().fromJson(s, VoiceAnswer.class);
                // Reply to Flutter. A call may be answered only once; if the SDK
                // calls back on a worker thread, post this to the main thread first.
                result.success(voiceAnswer.text);
                VUISDK.getInstance().getRecognizeEngine().stopListening();
            }

            @Override
            public void onError(SDKError sdkError) {
                Log.d(TAG, "onError() called with: sdkError = [" + sdkError + "]");
                // Without a reply the Dart Future would never complete.
                // Error codes ("ASR_ERROR" etc.) are arbitrary strings chosen here.
                result.error("ASR_ERROR", String.valueOf(sdkError), null);
            }
        });
        engine.setOnVadListener(new OnVADListener() {
            @Override
            public void onBeginOfSpeech() {
                Log.d(TAG, "onBeginOfSpeech() called");
            }

            @Override
            public void onEndOfSpeech() {
                Log.d(TAG, "onEndOfSpeech() called");
            }

            @Override
            public void onCancelOfSpeech() {
                Log.d(TAG, "onCancelOfSpeech() called");
            }

            @Override
            public void onAudioData(byte[] bytes) {
            }
        });
        engine.startListening();
    }

    public void onReadClick(MethodCall call, final Result result) {
        String qa = call.argument("qa");
        IRooboRecognizeEngine engine = VUISDK.getInstance().getRecognizeEngine();
        engine.stopListening();
        engine.setParameter(SDKConstant.Parameter.PARAMERER_ORAL_TESTING, "on");
        engine.setParameter(SDKConstant.Parameter.PARAMERER_AI_CONTEXTS, "");
        engine.setCloudResultListener(new OnCloudResultListener() {
            @Override
            public void onResult(int i, String s) {
                Log.d(TAG, "onReadResult() called with: i = [" + i + "], s = [" + s + "]");
                FollowAnswer answer = new Gson().fromJson(s, FollowAnswer.class);
                result.success(answer.Data.pronunciation + "");
                VUISDK.getInstance().getRecognizeEngine().stopListening();
            }

            @Override
            public void onError(SDKError sdkError) {
                Log.d(TAG, "onError() called with: sdkError = [" + sdkError + "]");
                result.error("READ_ERROR", String.valueOf(sdkError), null);
            }
        });
        engine.setOnVadListener(new OnVADListener() {
            @Override
            public void onBeginOfSpeech() {
                Log.d(TAG, "onBeginOfSpeech() called");
            }

            @Override
            public void onEndOfSpeech() {
                Log.d(TAG, "onEndOfSpeech() called");
            }

            @Override
            public void onCancelOfSpeech() {
                Log.d(TAG, "onCancelOfSpeech() called");
            }

            @Override
            public void onAudioData(byte[] bytes) {
            }
        });
        engine.setParameter(
                SDKConstant.Parameter.PARAMERER_ORAL_TESTING_EX,
                JsonUtil.toJsonString(new RequestReadScore(1, qa)));
        engine.startListening();
    }

    private static void init(Context context, String sn, String publicKey, final Result result) {
        if (VUISDK.getInstance().hasInited()) {
            Log.d(TAG, "init: has inited");
            result.success("succeed");
            return;
        }
        SDKRequiredInfo info = new SDKRequiredInfo();
        info.setDeviceID(sn);
        info.setAgentID("xxxxxxxxxxxxxxxxxx");
        info.setPublicKey(publicKey);
        info.setAgentToken("xxxxxxxxxxxxxxxxxxxxxxxxx");
        ISDKSettings settings = VUISDK.getInstance().getSettings();
        settings.setTokenType(AIParamInfo.TOKEN_MODE_INNER);
        settings.setEnv(SDKConstant.EnvType.ENV_TEST);
        settings.setLogLevel(SDKConstant.LogLevelType.LOG_LEVEL_VERBOSE);
        VUISDK.getInstance().init(context, info, new ISDKInitListener() {
            @Override
            public void onSuccess() {
                Log.d(TAG, "onSuccess() called");
                IRooboRecognizeEngine engine = VUISDK.getInstance().getRecognizeEngine();
                engine.setEngineEnable(SDKConstant.Engine.ENGINE_LOCAL_REC, false);
                engine.setEngineEnable(SDKConstant.Engine.ENGINE_VAD, false);
                engine.setEngineEnable(SDKConstant.Engine.ENGINE_WAKEUP, false);
                // Report success only after initialisation has actually finished
                result.success("succeed");
            }

            @Override
            public void onFailed(SDKError sdkError) {
                Log.d(TAG, "onFailed() called with: sdkError = [" + sdkError + "]");
                result.error("INIT_FAILED", String.valueOf(sdkError), null);
            }
        });
    }
}
```
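Both onASRClick and onReadClick answer Flutter from SDK callbacks, and each channel call can be answered only once. One way to make that explicit is a wrapper that forwards only the first reply and drops later ones. Here is a sketch under that assumption. `Reply` is a simplified stand-in for `MethodChannel.Result`, and `OnceReplyDemo.once` is a hypothetical helper, not part of Flutter.

```java
import java.util.concurrent.atomic.AtomicBoolean;

/**
 * Hypothetical guard for async SDK callbacks: only the first success/error
 * reaches the wrapped reply; duplicate or late callbacks are dropped.
 */
class OnceReplyDemo {

    interface Reply {
        void success(Object value);

        void error(String code, String message, Object details);
    }

    /** Wraps a Reply so that only the first success/error call is forwarded. */
    static Reply once(Reply delegate) {
        AtomicBoolean done = new AtomicBoolean(false);
        return new Reply() {
            @Override
            public void success(Object value) {
                if (done.compareAndSet(false, true)) {
                    delegate.success(value);
                }
            }

            @Override
            public void error(String code, String message, Object details) {
                if (done.compareAndSet(false, true)) {
                    delegate.error(code, message, details);
                }
            }
        };
    }

    public static void main(String[] args) {
        Reply reply = once(new Reply() {
            @Override
            public void success(Object value) {
                System.out.println("success: " + value);
            }

            @Override
            public void error(String code, String message, Object details) {
                System.out.println("error: " + code);
            }
        });
        reply.success("first ASR text");              // forwarded
        reply.success("second ASR text");             // dropped
        reply.error("ASR_ERROR", "late error", null); // dropped
    }
}
```

`AtomicBoolean.compareAndSet` keeps the guard correct even if the SDK fires callbacks from different threads.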

step2.3 Implement the iOS API

Implement the same methods for iOS, just as on Android:

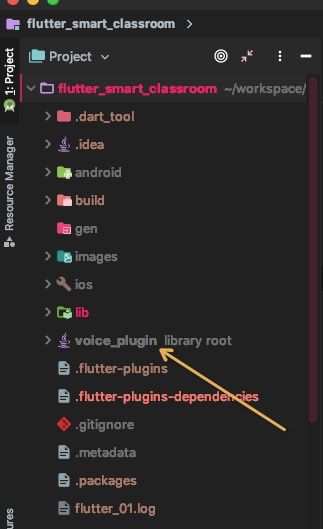

step2.4 Call the plugin from Flutter

Add the dependency to the app's pubspec.yaml:

```yaml
dependencies:
  flutter:
    sdk: flutter
  # The following adds the Cupertino Icons font to your application.
  # Use with the CupertinoIcons class for iOS style icons.
  cupertino_icons: ^0.1.2
  dio: ^3.0.0
  shared_preferences: ^0.5.4+6
  permission_handler: ^4.0.0
  voice_plugin:
    path: voice_plugin
```

Import the package, wrap the plugin calls and use them:

```dart
import 'package:voice_plugin/voice_plugin.dart';

Future<String> getRead(String qa) async {
  return await VoicePlugin.getRead(qa);
}

Future<String> getASR() async {
  return await VoicePlugin.getASR;
}

// Call sites (msg, NetHelper and context come from the surrounding app code)
getRead(msg.extra.question).then((str) {
  NetHelper.post(
      action: msg.action,
      session: msg.session,
      answer: str,
      hostId: msg.hostId);
  Navigator.pop(context);
});

getASR().then((str) {
  NetHelper.post(
      action: msg.action,
      session: msg.session,
      answer: str,
      hostId: msg.hostId);
  Navigator.pop(context);
});
```

Calling native code from Flutter with arguments

```dart
static Future<void> register(String sn, String publicKey) async {
  await _channel.invokeMethod('register', {"sn": sn, "publicKey": publicKey});
}
```

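We pack the incoming parameters into a Map and pass it to invokeMethod. The first argument of invokeMethod is the method name, register, which the native side uses to identify the call. The second argument is the data to send to native code. Here is the source of invokeMethod: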

```dart
@optionalTypeArgs
Future<T> invokeMethod<T>(String method, [ dynamic arguments ]) async {
  assert(method != null);
  final ByteData result = await binaryMessenger.send(
    name,
    codec.encodeMethodCall(MethodCall(method, arguments)),
  );
  if (result == null) {
    throw MissingPluginException('No implementation found for method $method on channel $name');
  }
  final T typedResult = codec.decodeEnvelope(result);
  return typedResult;
}
```

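The second parameter is dynamic, so can we pass any type at all? Syntactically nothing stops us, but in practice only the types supported by the channel's codec can be sent, namely the types in the mapping table above. Anything else fails with an error at runtime when the codec tries to encode it. The same rule applies to return values, that is, to data the platform sends back to Flutter. Keep this rule in mind.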
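A hypothetical pre-flight check makes this rule concrete: it walks a value and accepts only the types from the mapping table. `CodecTypeCheck.isSupported` is an illustrative helper, not part of `StandardMessageCodec`, and it deliberately sticks to the table's types.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/**
 * Hypothetical check: does a value contain only the types listed in the
 * mapping table? A sketch of the rule, not the codec itself.
 */
class CodecTypeCheck {

    static boolean isSupported(Object value) {
        if (value == null
                || value instanceof Boolean
                || value instanceof Integer
                || value instanceof Long
                || value instanceof Double
                || value instanceof String
                || value instanceof byte[]
                || value instanceof int[]
                || value instanceof long[]
                || value instanceof double[]) {
            return true;
        }
        if (value instanceof List) {
            for (Object item : (List<?>) value) {
                if (!isSupported(item)) {
                    return false;
                }
            }
            return true;
        }
        if (value instanceof Map) {
            for (Map.Entry<?, ?> e : ((Map<?, ?>) value).entrySet()) {
                if (!isSupported(e.getKey()) || !isSupported(e.getValue())) {
                    return false;
                }
            }
            return true;
        }
        return false; // e.g. a custom model class such as VoiceAnswer
    }

    public static void main(String[] args) {
        Map<String, Object> ok = new HashMap<>();
        ok.put("sn", "SN-001");
        ok.put("scores", new ArrayList<>(Arrays.asList(1, 2, 3)));
        System.out.println(isSupported(ok));           // true
        System.out.println(isSupported(new Object())); // false
    }
}
```

This is also why onASRClick in step2.2 sends voiceAnswer.text, a String, instead of the VoiceAnswer object itself.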

Receiving Flutter's data on the platform side

```java
@Override
public void onMethodCall(MethodCall call, Result result) {
    switch (call.method) {
        case "getPlatformVersion":
            result.success("Android " + android.os.Build.VERSION.RELEASE);
            break;
        case "register":
            registerAndInit(call, result);
            break;
        case "getASR":
            onASRClick(result);
            break;
        case "getRead":
            onReadClick(call, result);
            break;
        default:
            result.notImplemented();
            break;
    }
}
```

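call.method is the method name, and we match on it to decide what to run. When call.method equals "register", Flutter is calling register and we carry out the corresponding work.

If call.method matches nothing, report back through result that the method is not implemented: result.notImplemented(). The Flutter side then receives a MissingPluginException for that call.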
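The switch can equally be written as a lookup table from method name to handler, with notImplemented() as the fallback. This Java sketch of that alternative assumes a simplified `Result` interface mirroring the three methods of `MethodChannel.Result`. `DispatchDemo` and the `getTTS` method name are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.BiConsumer;

/**
 * Sketch of onMethodCall dispatch via a lookup table instead of a switch.
 * Result is a simplified stand-in for MethodChannel.Result.
 */
class DispatchDemo {

    interface Result {
        void success(Object value);

        void error(String code, String message, Object details);

        void notImplemented();
    }

    private final Map<String, BiConsumer<Object, Result>> handlers = new HashMap<>();

    DispatchDemo() {
        handlers.put("getPlatformVersion", (args, result) -> result.success("Android (simulated)"));
        handlers.put("register", (args, result) -> result.success("succeed"));
    }

    /** Same contract as onMethodCall: every call ends in one Result method. */
    void onMethodCall(String method, Object arguments, Result result) {
        BiConsumer<Object, Result> handler = handlers.get(method);
        if (handler == null) {
            result.notImplemented(); // the Dart side then sees a MissingPluginException
            return;
        }
        handler.accept(arguments, result);
    }

    public static void main(String[] args) {
        Result printing = new Result() {
            public void success(Object value) { System.out.println("success: " + value); }

            public void error(String code, String message, Object details) { System.out.println("error: " + code); }

            public void notImplemented() { System.out.println("notImplemented"); }
        };
        DispatchDemo plugin = new DispatchDemo();
        plugin.onMethodCall("getPlatformVersion", null, printing);
        plugin.onMethodCall("getTTS", null, printing); // hypothetical, unregistered method
    }
}
```

A table scales a little better than a growing switch once a plugin exposes many methods. Both forms behave the same.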

iOS works much the same way:

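```objc
- (void)handleMethodCall:(FlutterMethodCall *)call result:(FlutterResult)result {
    if ([@"registerApp" isEqualToString:call.method]) {
        [_fluwxWXApiHandler registerApp:call result:result];
        return;
    }
    // Any other method name is reported as not implemented
    result(FlutterMethodNotImplemented);
}
```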

If the method does not exist, reply with result(FlutterMethodNotImplemented);

The data is in call.arguments. On Android its declared type is java.lang.Object, and its actual type corresponds to whatever Flutter sent. If it is a Map, read individual values like this:

```java
String sn = call.argument("sn");
String publicKey = call.argument("publicKey");
```

The same on iOS:

```objc
NSString *sn = call.arguments[@"sn"];
NSString *publicKey = call.arguments[@"publicKey"];
```

Returning results from the platform to Flutter

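Every call from Flutter also delivers a parameter named result. Through it the platform can answer Flutter in three ways:

- success: the call succeeded
- error: an error occurred
- notImplemented: the method is not implemented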

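On Android:

```java
// Alternatives: answer each incoming call with exactly one of these
result.success("succeed");
result.error("invalid sn", "are you sure your sn is correct ?", sn);
```

On iOS:

```objc
result(@"succeed");
result([FlutterError errorWithCode:@"invalid sn" message:@"are you sure your sn is correct ?" details:sn]);
```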
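To show what each of the three replies becomes on the Dart side (the awaited value, a `PlatformException`, or a `MissingPluginException`), here is a small Java model. The exception classes only imitate the Dart classes of the same name, and `RecordingResult` and `resolve` are hypothetical helpers.

```java
/**
 * Hypothetical model of how each Result call surfaces in Dart:
 * success -> awaited value, error -> PlatformException,
 * notImplemented -> MissingPluginException.
 */
class OutcomeDemo {

    static class PlatformException extends RuntimeException {
        final String code;
        final Object details;

        PlatformException(String code, String message, Object details) {
            super(message);
            this.code = code;
            this.details = details;
        }
    }

    static class MissingPluginException extends RuntimeException {
        MissingPluginException(String message) {
            super(message);
        }
    }

    /** Records the reply a platform handler sent through Result. */
    static class RecordingResult {
        private String kind; // "success", "error" or "notImplemented"
        private Object value;
        private String code;
        private String message;

        void success(Object value) {
            this.kind = "success";
            this.value = value;
        }

        void error(String code, String message, Object details) {
            this.kind = "error";
            this.code = code;
            this.message = message;
            this.value = details;
        }

        void notImplemented() {
            this.kind = "notImplemented";
        }

        /** What the awaiting Dart code would observe for this reply. */
        Object resolve(String method) {
            if (kind == null) {
                throw new IllegalStateException("no reply yet: the Dart Future is still pending");
            }
            switch (kind) {
                case "success":
                    return value;
                case "error":
                    throw new PlatformException(code, message, value);
                default:
                    throw new MissingPluginException("No implementation found for method " + method);
            }
        }
    }

    public static void main(String[] args) {
        RecordingResult result = new RecordingResult();
        result.error("invalid sn", "are you sure your sn is correct ?", "SN-001");
        try {
            result.resolve("register");
        } catch (PlatformException e) {
            System.out.println(e.code + " / " + e.getMessage() + " / " + e.details);
        }
    }
}
```

The code, message and details passed to result.error are exactly what Dart code can inspect when it catches the exception.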

How the platform calls Flutter

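If the plugin's work is heavy, or its result arrives through an asynchronous callback, the Result object is a poor way to return it. WeChat sharing is an example: when sharing finishes, we may want to send the share result back to Flutter. WeChat delivers these callbacks asynchronously, however, so the platform has to call into Flutter itself.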

The mechanism is the same: native code also holds a MethodChannel.

Android (Kotlin):

```kotlin
val channel = MethodChannel(registrar.messenger(), "xxx")
```

iOS (Swift):

```swift
let channel = FlutterMethodChannel(name: "xxx", binaryMessenger: registrar.messenger())
```

Once we have the MethodChannel, we call invokeMethod on it with a method name and the data:

Android (Kotlin):

```kotlin
val result = mapOf(
    errStr to response.errStr,
    WechatPluginKeys.TRANSACTION to response.transaction,
    type to response.type,
    errCode to response.errCode,
    openId to response.openId,
    WechatPluginKeys.PLATFORM to WechatPluginKeys.ANDROID
)
channel?.invokeMethod("onShareResponse", result)
```

iOS (Objective-C):

```objc
NSDictionary *result = @{
    description: messageResp.description == nil ? @"" : messageResp.description,
    errStr: messageResp.errStr == nil ? @"" : messageResp.errStr,
    errCode: @(messageResp.errCode),
    type: messageResp.type == nil ? @2 : @(messageResp.type),
    country: messageResp.country == nil ? @"" : messageResp.country,
    lang: messageResp.lang == nil ? @"" : messageResp.lang,
    fluwxKeyPlatform: fluwxKeyIOS
};
[methodChannel invokeMethod:@"onShareResponse" arguments:result];
```

Native code calls Flutter exactly the way Flutter calls native code: through a MethodChannel, by invoking a method by name and passing data.
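Because both directions use the same mechanism, one channel can be pictured as two ends. Each end installs a handler for calls coming from the other end. Here is a toy Java sketch of that symmetry. `TwoWayChannelDemo` and `Endpoint` are hypothetical and unrelated to the actual Flutter API.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BiConsumer;

/**
 * Hypothetical model of one named channel with two ends: each end handles
 * calls from the other end, and either end can invoke.
 */
class TwoWayChannelDemo {

    static final class Endpoint {
        private Endpoint peer;
        private BiConsumer<String, Object> handler = (method, args) -> { };

        void setMethodCallHandler(BiConsumer<String, Object> handler) {
            this.handler = handler;
        }

        /** Deliver a call to the handler installed on the other end. */
        void invokeMethod(String method, Object arguments) {
            peer.handler.accept(method, arguments);
        }
    }

    /** Connects two endpoints that share one channel name; index 0 is Dart, 1 is the platform. */
    static Endpoint[] connect() {
        Endpoint dart = new Endpoint();
        Endpoint platform = new Endpoint();
        dart.peer = platform;
        platform.peer = dart;
        return new Endpoint[] {dart, platform};
    }

    public static void main(String[] args) {
        Endpoint[] ends = connect();
        Endpoint dart = ends[0];
        Endpoint platform = ends[1];
        List<String> log = new ArrayList<>();
        dart.setMethodCallHandler((m, a) -> log.add("dart got " + m + " " + a));
        platform.setMethodCallHandler((m, a) -> log.add("platform got " + m));

        dart.invokeMethod("register", null);                   // Dart -> platform
        platform.invokeMethod("onShareResponse", "errCode=0"); // platform -> Dart (hypothetical payload)
        log.forEach(System.out::println);
    }
}
```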

Likewise, Flutter receives native calls the same way native code receives Flutter calls, by setting a method call handler on the channel:

```dart
final MethodChannel _channel = const MethodChannel('XXX')
  ..setMethodCallHandler(_handler);

Future<dynamic> _handler(MethodCall methodCall) {
  if ("onShareResponse" == methodCall.method) {
    _responseController.add(WeChatResponse(methodCall.arguments, WeChatResponseType.SHARE));
  }
  return Future.value(true);
}
```

The one small difference is that on the Flutter side we use a Stream:

```dart
StreamController<WeChatResponse> _responseController =
    new StreamController.broadcast();

Stream<WeChatResponse> get response => _responseController.stream;
```

A Stream is not required, but it makes listening to callback data much easier:

```dart
response.listen((data) {
  // do work
});
```
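A broadcast StreamController lets several listeners observe one stream of callbacks. For readers coming from the Android side, roughly the same idea in plain Java is a listener list. `ResponseHub` is a hypothetical name, and the code is an analogy, not Flutter code.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.function.Consumer;

/**
 * Java analogue of a broadcast StreamController: one producer (the channel
 * handler) fans each event out to every current listener.
 */
class ResponseHub<T> {

    private final List<Consumer<T>> listeners = new CopyOnWriteArrayList<>();

    /** Like stream.listen(...): returns a handle that cancels this subscription. */
    Runnable listen(Consumer<T> listener) {
        listeners.add(listener);
        return () -> listeners.remove(listener);
    }

    /** Like _responseController.add(...): deliver the event to every listener. */
    void add(T event) {
        for (Consumer<T> listener : listeners) {
            listener.accept(event);
        }
    }

    public static void main(String[] args) {
        ResponseHub<String> responses = new ResponseHub<>();
        Runnable cancelLogger = responses.listen(r -> System.out.println("logger: " + r));
        responses.listen(r -> System.out.println("ui: " + r));

        responses.add("share ok");    // both listeners
        cancelLogger.run();
        responses.add("share again"); // only the ui listener
    }
}
```

One difference: this sketch calls listeners synchronously inside add, while a Dart StreamController created without `sync: true` delivers events asynchronously.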