Integrating WebRTC into OBS


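- 1 Preface

- 1.1 Purpose

- 1.2 Goal

- 1.3 Versions

- 1.3.1 OBS version

- 1.3.2 WebRTC version

- 2 Main text

- 2.1 Building OBS

- 2.2 Building WebRTC

- 2.3 Developing the OBS output plugin

- 2.3.1 Custom output stream

- 2.3.2 Registering the custom output stream

- 2.3.3 Custom streaming service

- 2.3.4 Registering the custom streaming service

- 2.3.5 Bridging the custom output stream to the custom streaming service

- 2.3.6 Adding the custom streaming service to the settings UI

- 2.3.7 Getting the custom streaming service's parameters

- 2.4 Feeding YUV video data into WebRTC

- 2.4.1 Implementation

- 2.4.2 Some details

- 2.5 Feeding PCM audio data into WebRTC

- 2.5.1 Implementation

- 2.5.2 Some details

- 2.6 Wrapping the OBS encoders for WebRTC

- 2.6.1 Implementation

- 2.6.2 Some details

- 2.6.3 Generating keyframes

- 2.6.3.1 SPS/PPS handling

- 2.6.3.2 When to send keyframes

- 2.6.3.3 Forcing a keyframe with x264

- 2.6.3.3 Forcing a keyframe with Intel QSV

- 2.6.3.4 AMD

- 3 Code location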

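1 Preface

1.1 Purpose

Just for fun.

1.2 Goal

Make OBS capable of real-time interaction.

1.3 Versions

1.3.1 OBS version

21.0.3.

1.3.2 WebRTC version

M66.

2 Main text

2.1 Building OBS

Omitted. This is straightforward: follow any of the online tutorials, check out the branch containing 21.0.3, and build. Other branches should also work, possibly with minor differences.

2.2 Building WebRTC

Omitted. It is somewhat more involved, but anyone determined to do it can get it to build.

2.3 Developing the OBS output plugin

The first step is to get hold of OBS's output, both audio and video, which means modifying the obs-outputs plugin. The default output stream of obs-outputs is "rtmp_output"; here we write a new output stream called "bee_output".

2.3.1 Custom output stream

Create bee-stream.c, define an obs_output_info structure, and implement the following callbacks from it: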

struct obs_output_info bee_output_info = {

.id = "bee_output",

.flags = OBS_OUTPUT_AV |

OBS_OUTPUT_SERVICE |

OBS_OUTPUT_MULTI_TRACK,

.encoded_video_codecs = "h264",

.encoded_audio_codecs = "aac",

.get_name = bee_stream_getname, // name of the output stream

.create = bee_stream_create, // create the stream

.destroy = bee_stream_destroy, // destroy the stream

.start = bee_stream_start, // start streaming

.stop = bee_stream_stop, // stop streaming

.raw_video = bee_receive_video, // callback that receives YUV video data

.raw_audio = bee_receive_audio, // callback that receives PCM audio data

.get_defaults = bee_stream_defaults, // set default parameters

.get_properties = bee_stream_properties, // return the stream's properties

.get_total_bytes = bee_stream_total_bytes_sent, // total bytes sent; optional

.get_congestion = bee_stream_congestion, // congestion level; optional

.get_dropped_frames = bee_stream_dropped_frames, // number of dropped frames; optional

.update = bee_update // update settings

};

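What this "stream" actually is is up to the developer: it can be any object you define that encapsulates all the data and operations of one streaming session. Once bee_stream_create returns it, OBS passes it back as a parameter to every subsequent callback, so OBS effectively keeps the stream context on the developer's behalf.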
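The context-passing convention described above can be illustrated without any OBS headers. The following is only a minimal sketch: DemoStream and the demo_stream_* functions are hypothetical stand-ins invented for this illustration, not the real BeeStream or OBS API.

```cpp
#include <cassert>
#include <string>

// Hypothetical per-stream state. The real BeeStream in bee-stream.c is not
// shown in full, so every field here is an assumption.
struct DemoStream {
    std::string room;
    bool active = false;
    int frames_received = 0;
};

// Plays the role of .create: allocate the context and hand it to the host.
void *demo_stream_create(const char *room) {
    assert(room != nullptr);
    DemoStream *stream = new DemoStream();
    stream->room = room;
    return stream;
}

// Plays the role of .start: the host passes the same pointer back as `data`.
bool demo_stream_start(void *data) {
    DemoStream *stream = static_cast<DemoStream *>(data);
    stream->active = true;
    return true;
}

// Plays the role of .raw_video: frames are counted only while streaming.
void demo_stream_receive_video(void *data) {
    DemoStream *stream = static_cast<DemoStream *>(data);
    if (stream->active) {
        ++stream->frames_received;
    }
}

// Plays the role of .stop.
void demo_stream_stop(void *data) {
    static_cast<DemoStream *>(data)->active = false;
}

// Plays the role of .destroy: the callback that created the context frees it.
void demo_stream_destroy(void *data) {
    delete static_cast<DemoStream *>(data);
}
```

The host only ever sees a void pointer, so the plugin is free to change the layout of its context without touching the host.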

2.3.2 Registering the custom output stream

In obs-outputs.c:

……

extern struct obs_output_info rtmp_output_info;

extern struct obs_output_info null_output_info;

extern struct obs_output_info flv_output_info;

extern struct obs_output_info bee_output_info; // added

……

bool obs_module_load(void)

{

#ifdef _WIN32

WSADATA wsad;

WSAStartup(MAKEWORD(2, 2), &wsad);

#endif

obs_register_output(&rtmp_output_info);

obs_register_output(&null_output_info);

obs_register_output(&flv_output_info);

obs_register_output(&bee_output_info); // added

#if COMPILE_FTL

obs_register_output(&ftl_output_info);

#endif

return true;

}

这样,obs-outputs插件加载的时候这个输出流就注册好了。

2.3.3 自定义推流服务

要启用刚才定义的bee_output输出流,需要想办法指定使用这个输出流,写死是个办法,但是不灵活,最好由界面指定,这样就能够兼容RTMP输出流和自定义的输出流。

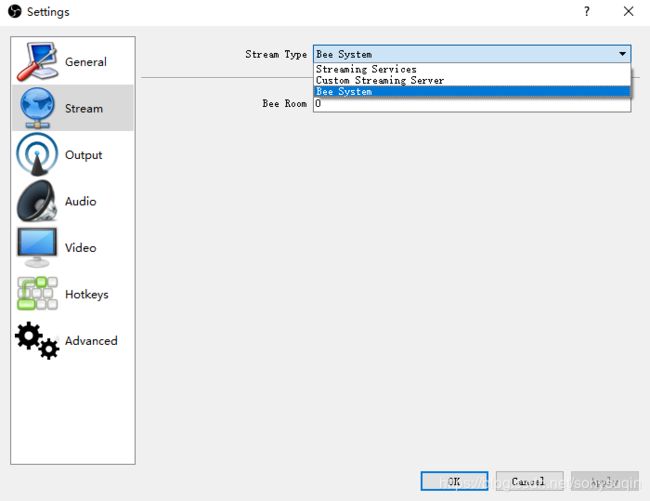

由于OBS默认只输出RTMP流,我们期望在【设置-Stream-Stream Type】的RTMP服务列表中增加一项”Bee System“,另外设置一个”Bee Room“参数,作为WebRTC的输入房间名,使用我们自己的推流服务。

增加一个rtmp-services-bee.c,定义一个obs_service_info结构,并实现obs_service_info结构中的以下方法:

struct obs_service_info rtmp_bee_service = {

.id = "rtmp_bee", // streaming service id

.get_name = rtmp_bee_name, // service name

.create = rtmp_bee_create, // create the service

.destroy = rtmp_bee_destroy, // destroy the service

.update = rtmp_bee_update, // update the service settings

.get_properties = rtmp_bee_properties, // return the service's properties

.get_url = rtmp_bee_url, // return the URL; unused

.get_room = rtmp_bee_room // return the configured room name

};

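The service created by rtmp_bee_create is a custom object that can hold arbitrary custom data; a pointer to it is passed as a parameter to all subsequent callbacks. In rtmp_bee_create we can read the room name configured in the UI from the parameters and store it in this custom service. Note that get_room is a new member: the original obs_service_info has no such field. It must return the room name stored in our custom service, and it is called when we start streaming.

We have now defined a custom streaming service that can retrieve the room name configured in the UI.

2.3.4 Registering the custom streaming service

In rtmp-services-main.c: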

……

extern struct obs_service_info rtmp_common_service;

extern struct obs_service_info rtmp_custom_service;

extern struct obs_service_info rtmp_bee_service; // added

……

bool obs_module_load(void)

{

init_twitch_data();

dstr_copy(&module_name, "rtmp-services plugin (libobs ");

dstr_cat(&module_name, obs_get_version_string());

dstr_cat(&module_name, ")");

proc_handler_t *ph = obs_get_proc_handler();

proc_handler_add(ph, "void twitch_ingests_refresh(int seconds)",

refresh_callback, NULL);

#if !defined(_WIN32) || CHECK_FOR_SERVICE_UPDATES

char *local_dir = obs_module_file("");

char *cache_dir = obs_module_config_path("");

if (cache_dir) {

update_info = update_info_create(

RTMP_SERVICES_LOG_STR,

module_name.array,

RTMP_SERVICES_URL,

local_dir,

cache_dir,

confirm_service_file, NULL);

}

load_twitch_data();

bfree(local_dir);

bfree(cache_dir);

#endif

obs_register_service(&rtmp_common_service);

obs_register_service(&rtmp_custom_service);

obs_register_service(&rtmp_bee_service); // added

return true;

}

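With this in place, our custom streaming service is loaded when the rtmp-services plugin loads.

2.3.5 Bridging the custom output stream to the custom streaming service

In window-basic-main-outputs.cpp: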

bool SimpleOutput::StartStreaming(obs_service_t *service)

{

if (!Active())

SetupOutputs();

/* --------------------- */

// added

const char *type = obs_service_get_output_type(service);

// service points to our custom streaming service, so this returns the id we defined: "rtmp_bee".

std::string typeCheck = obs_service_get_type(service);

if (!type) {

if (typeCheck.find("bee") != std::string::npos) {

type = "bee_output"; // our service id was found, so switch to bee_output, the custom output stream defined earlier.

} else {

type = "rtmp_output"; // otherwise fall back to the default RTMP output stream.

}

}

……
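The selection rule in the snippet above can be isolated into a small pure function, which makes it easy to check outside OBS. This is a sketch only: select_output_type is a hypothetical helper name, and its two parameters stand for the values returned by obs_service_get_output_type and obs_service_get_type.

```cpp
#include <cassert>
#include <string>

// service_output_type: value from obs_service_get_output_type(), may be null.
// service_id: value from obs_service_get_type(), for example "rtmp_bee".
// Returns the id of the output that should handle the stream.
std::string select_output_type(const char *service_output_type,
                               const std::string &service_id) {
    assert(!service_id.empty());
    // A service that names its own output type always wins.
    if (service_output_type != nullptr) {
        return service_output_type;
    }
    // Our custom service id contains "bee", so route it to the custom output.
    if (service_id.find("bee") != std::string::npos) {
        return "bee_output";
    }
    // Everything else keeps the default RTMP output.
    return "rtmp_output";
}
```

Matching on the substring "bee" follows the original code; comparing against the exact id "rtmp_bee" would be stricter if other services might contain that substring.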

2.3.6 Adding the custom streaming service to the settings UI

In window-basic-auto-config.cpp:

AutoConfigStreamPage::AutoConfigStreamPage(QWidget *parent)

: QWizardPage (parent),

ui (new Ui_AutoConfigStreamPage)

{

ui->setupUi(this);

ui->bitrateLabel->setVisible(false);

ui->bitrate->setVisible(false);

ui->streamType->addItem(obs_service_get_display_name("rtmp_common"));

ui->streamType->addItem(obs_service_get_display_name("rtmp_custom"));

ui->streamType->addItem(obs_service_get_display_name("rtmp_bee")); // added: one more list entry

……

bool AutoConfigStreamPage::validatePage()

{

OBSData service_settings = obs_data_create();

obs_data_release(service_settings);

wiz->customServer = ui->streamType->currentIndex() == 1;

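const char *serverType = NULL;

// Switch on the selected index itself: wiz->customServer is a bool and can never equal 2.
switch (ui->streamType->currentIndex()) {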

case 0: serverType = "rtmp_common";

break;

case 1: serverType = "rtmp_custom";

break;

case 2: serverType = "rtmp_bee"; // added: selecting the third list entry returns our custom streaming service.

break;

default: blog(LOG_ERROR, "streamType do not exist");

break;

}

After these steps we can select the custom streaming service from the UI, and the output stream is set to the custom output stream.
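The mapping from list position to service id is easy to get wrong when entries are added, so it can help to keep it in one table. The following is a minimal sketch under that assumption; service_id_for_index is a hypothetical helper, and the order of the table must match the order of the addItem calls.

```cpp
#include <cassert>
#include <string>

// Maps the index of the selected "Stream Type" entry to a service id.
// Returns nullptr for an index that has no entry, so the caller can log
// the error instead of using an uninitialized pointer.
const char *service_id_for_index(int index) {
    static const char *const kServiceIds[] = {
        "rtmp_common",  // index 0
        "rtmp_custom",  // index 1
        "rtmp_bee",     // index 2, the custom streaming service
    };
    const int count =
        static_cast<int>(sizeof(kServiceIds) / sizeof(kServiceIds[0]));
    if (index < 0 || index >= count) {
        return nullptr;
    }
    return kServiceIds[index];
}
```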

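2.3.7 Getting the custom streaming service's parameters

We added a "Bee Room" parameter to the settings UI of the custom streaming service. It is used inside the custom output stream but maintained inside the custom streaming service, so a little wrapping is needed to reach it from the output stream.

Add a function to obs.h:

EXPORT const char *obs_service_get_room(const obs_service_t *service);

Implement it in obs-service.c: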

const char *obs_service_get_room(const obs_service_t *service)

{

if (!obs_service_valid(service, "obs_service_get_room"))

return NULL;

if (!service->info.get_room) return NULL;

return service->info.get_room(service->context.data); // get_room is the callback we implemented in the custom streaming service.

}

Now, in bee-stream.c, the implementation file of the custom output stream, the parameter can be fetched directly via obs_output_get_service and obs_service_get_room:

void *bee_stream_create(obs_data_t *settings, obs_output_t *output) {

……

const obs_service_t *service = obs_output_get_service(output);

const char *room = obs_service_get_room(service);

……
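The optional-callback dispatch used by obs_service_get_room can be sketched on its own. All types below (DemoServiceInfo, DemoService, BeeServiceData) are hypothetical simplifications made up for illustration; they only mirror the shape of the OBS structures, not their real definitions.

```cpp
#include <cassert>
#include <string>

// Simplified callback table: get_room is optional and may be null,
// just as most services do not implement it.
struct DemoServiceInfo {
    const char *id;
    const char *(*get_room)(void *data);
};

// Simplified service object: callback table plus the private context.
struct DemoService {
    DemoServiceInfo info;
    void *data;
};

// Null-safe accessor: returns nullptr when the service is missing or
// does not implement get_room, otherwise forwards to the callback.
const char *demo_service_get_room(const DemoService *service) {
    if (service == nullptr || service->info.get_room == nullptr) {
        return nullptr;
    }
    return service->info.get_room(service->data);
}

// Private context of the custom service, holding the room from the UI.
struct BeeServiceData {
    std::string room;
};

// The custom service's get_room callback.
const char *bee_get_room(void *data) {
    return static_cast<BeeServiceData *>(data)->room.c_str();
}
```

Because the accessor returns nullptr for services without get_room, a caller such as bee_stream_create should check the result before using it.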

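To sum up, we implemented the custom output stream bee_output and the custom streaming service rtmp_bee, modified the UI, and added an interface, so we can now take over OBS's output and configure our own streaming service and parameters. What remains is adapting OBS's output to WebRTC.

2.4 Feeding YUV video data into WebRTC

If you want to use OBS's encoders for better performance, skip straight to section 2.6.

On Windows, WebRTC's default encoder is OpenH264, whose performance is too low for game streaming at 1080p/30fps.

2.4.1 Implementation

Initially I did not consider encoding and fed YUV straight to WebRTC. The default format is NV12, which WebRTC has to convert to I420 before processing. The YUV data can be passed in by deriving from WebRTC's AdaptedVideoTrackSource class, which is the common approach on Android/iOS, mainly because it supports rotation and cropping; the same approach is used here.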
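To make the NV12 to I420 step concrete, here is a minimal standalone sketch of the plane layout change. It is not the WebRTC scaler used in the listing that follows: nv12_to_i420 and I420Frame are hypothetical names, and the sketch assumes a tightly packed buffer with even width and height and no scaling or cropping.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Tightly packed I420 frame: separate Y, U and V planes.
struct I420Frame {
    int width = 0;
    int height = 0;
    std::vector<uint8_t> y;
    std::vector<uint8_t> u;
    std::vector<uint8_t> v;
};

// Converts NV12 (a Y plane followed by one interleaved UV plane) to I420.
// The Y plane is copied unchanged; the UV plane is split into U and V.
I420Frame nv12_to_i420(const uint8_t *nv12, int width, int height) {
    assert(nv12 != nullptr);
    assert(width > 0 && height > 0 && width % 2 == 0 && height % 2 == 0);

    I420Frame out;
    out.width = width;
    out.height = height;

    const size_t y_size = static_cast<size_t>(width) * height;
    const size_t chroma_size = y_size / 4;  // each chroma plane is (w/2) x (h/2)

    out.y.assign(nv12, nv12 + y_size);
    out.u.resize(chroma_size);
    out.v.resize(chroma_size);

    const uint8_t *uv = nv12 + y_size;
    for (size_t i = 0; i < chroma_size; ++i) {
        out.u[i] = uv[2 * i];      // NV12 stores U first
        out.v[i] = uv[2 * i + 1];  // then V (NV21 would be the reverse)
    }
    return out;
}
```

The total size stays width * height * 3 / 2 bytes in both formats; only the arrangement of the chroma samples changes.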

The code follows:

win_video_track_source.h

#ifndef __WIN_VIDEO_TRACK_SOURCE_H__

#define __WIN_VIDEO_TRACK_SOURCE_H__

#include "bee/base/bee_define.h"

#include "webrtc/rtc_base/asyncinvoker.h"

#include "webrtc/rtc_base/checks.h"

#include "webrtc/rtc_base/thread_checker.h"

#include "webrtc/rtc_base/timestampaligner.h"

#include "webrtc/common_video/include/i420_buffer_pool.h"

#include "webrtc/common_video/libyuv/include/webrtc_libyuv.h"

#include "webrtc/media/base/adaptedvideotracksource.h"

#include "webrtc/api/videosourceproxy.h"

namespace bee {

class WinVideoTrackSource : public rtc::AdaptedVideoTrackSource {

public:

WinVideoTrackSource(rtc::Thread* signaling_thread, bool is_screencast = true);

~WinVideoTrackSource();

public:

bool is_screencast() const override { return is_screencast_; }

// Indicates that the encoder should denoise video before encoding it.

// If it is not set, the default configuration is used which is different

// depending on video codec.

rtc::Optional<bool> needs_denoising() const override {

return rtc::Optional<bool>(false);

}

void SetState(SourceState state);

SourceState state() const override { return state_; }

bool remote() const override { return false; }

void OnByteBufferFrameCaptured(

const void* frame_data,

int length,

int width,

int height,

int rotation,

int64_t timestamp_ns);

void OnOutputFormatRequest(int width, int height, int fps);

static rtc::scoped_refptr<webrtc::VideoTrackSourceInterface> create(

rtc::Thread *signaling_thread,

rtc::Thread *worker_thread,

int32_t width,

int32_t height,

int32_t fps);

private:

rtc::Thread* signaling_thread_;

rtc::AsyncInvoker invoker_;

rtc::ThreadChecker camera_thread_checker_;

SourceState state_;

rtc::VideoBroadcaster broadcaster_;

rtc::TimestampAligner timestamp_aligner_;

webrtc::NV12ToI420Scaler nv12toi420_scaler_;

webrtc::I420BufferPool buffer_pool_;

const bool is_screencast_;

};

} // namespace bee

#endif // __WIN_VIDEO_TRACK_SOURCE_H__

win_video_track_source.cpp

#include "win_video_track_source.h"

#include "service/bee_entrance.h"

#include

namespace {

// MediaCodec wants resolution to be divisible by 2.

const int kRequiredResolutionAlignment = 2;

}

namespace bee {

WinVideoTrackSource::WinVideoTrackSource(rtc::Thread* signaling_thread, bool is_screencast)

: AdaptedVideoTrackSource(kRequiredResolutionAlignment),

signaling_thread_(signaling_thread),

is_screencast_(is_screencast) {

camera_thread_checker_.DetachFromThread();

}

WinVideoTrackSource::~WinVideoTrackSource() {

}

void WinVideoTrackSource::SetState(SourceState state) {

if (signaling_thread_ == NULL) {

return;

}

if (rtc::Thread::Current() != signaling_thread_) {

invoker_.AsyncInvoke(

RTC_FROM_HERE, signaling_thread_,

rtc::Bind(&WinVideoTrackSource::SetState, this, state));

return;

}

if (state_ != state) {

state_ = state;

FireOnChanged();

}

}

void WinVideoTrackSource::OnByteBufferFrameCaptured(

const void* frame_data,

int length,

int width,

int height,

int rotation,

int64_t timestamp_ns) {

RTC_DCHECK(camera_thread_checker_.CalledOnValidThread());

RTC_DCHECK(rotation == 0 || rotation == 90 || rotation == 180 || rotation == 270);

int64_t camera_time_us = timestamp_ns / rtc::kNumNanosecsPerMicrosec;

int64_t translated_camera_time_us = timestamp_aligner_.TranslateTimestamp(camera_time_us, rtc::TimeMicros());

int adapted_width;

int adapted_height;

int crop_width;

int crop_height;

int crop_x;

int crop_y;

if (!AdaptFrame(

width,

height,

camera_time_us,

&adapted_width,

&adapted_height,

&crop_width,

&crop_height,

&crop_x,

&crop_y)) {

return;

}

const uint8_t* y_plane = static_cast<const uint8_t*>(frame_data);

const uint8_t* uv_plane = y_plane + width * height;

const int uv_width = (width + 1) / 2;

RTC_CHECK_GE(length, width * height + 2 * uv_width * ((height + 1) / 2));

// Can only crop at even pixels.

crop_x &= ~1;

crop_y &= ~1;

// Crop just by modifying pointers.

y_plane += width * crop_y + crop_x;

uv_plane += uv_width * crop_y + crop_x;

rtc::scoped_refptr<webrtc::I420Buffer> buffer = buffer_pool_.CreateBuffer(adapted_width, adapted_height);

nv12toi420_scaler_.NV12ToI420Scale(

y_plane,

width,

uv_plane,

uv_width * 2,

crop_width,

crop_height,

buffer->MutableDataY(),

buffer->StrideY(),

buffer->MutableDataU(), //OBS uses NV12, not NV21.

buffer->StrideU(),

buffer->MutableDataV(),

buffer->StrideV(),

buffer->width(),

buffer->height());

OnFrame(webrtc::VideoFrame(buffer, static_cast<webrtc::VideoRotation>(rotation), translated_camera_time_us));

}

void WinVideoTrackSource::OnOutputFormatRequest(int width, int height, int fps) {

cricket::VideoFormat format(width, height, cricket::VideoFormat::FpsToInterval(fps), cricket::FOURCC_NV12);

video_adapter()->OnOutputFormatRequest(format);

}

rtc::scoped_refptr<webrtc::VideoTrackSourceInterface> WinVideoTrackSource::create(

rtc::Thread *signaling_thread,

rtc::Thread *worker_thread,

int32_t width,

int32_t height,

int32_t fps) {

rtc::scoped_refptr<webrtc::VideoTrackSourceInterface> proxy_source;

do {

if (signaling_thread == NULL || worker_thread == NULL) {

break;

}

auto source(new rtc::RefCountedObject<WinVideoTrackSource>(signaling_thread));

source->OnOutputFormatRequest(width, height, fps);

source->SetState(webrtc::MediaSourceInterface::kLive);

proxy_source = webrtc::VideoTrackSourceProxy::Create(signaling_thread, worker_thread, source);

} while (0);

return proxy_source;

}

} // namespace bee

When creating the VideoTrack, simply pass in this WinVideoTrackSource object.

rtc::scoped_refptr<webrtc::PeerConnectionFactoryInterface> factory = get_peer_connection_factory();

rtc::scoped_refptr<webrtc::MediaStreamInterface> stream = factory->CreateLocalMediaStream(kStreamLabel);

rtc::scoped_refptr<webrtc::VideoTrackInterface> video_track = factory->CreateVideoTrack(kVideoLabel, win_video_track_source);

stream->AddTrack(video_track);

peer_connection_->AddStream(stream);

In the bee_receive_video callback of the custom output stream in bee-stream.c, simply pass in the YUV data.

static void bee_receive_video(void *data, struct video_data *frame) {

BeeStream *stream = (BeeStream*)data;

int width = (int)obs_output_get_width(stream->obs_output);

int height = (int)obs_output_get_height(stream->obs_output);

int len = width * height * 3 / 2;

……

// Note: what follows is pseudocode. Calling C++ from C needs some glue in practice; this only shows how the data is passed.

win_video_track_source->OnByteBufferFrameCaptured(

(unsigned char*)frame->data[0],

len,

width,

height,

0,

frame->timestamp);

}

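2.4.2 Some details

- One thing to watch: is_screencast of AdaptedVideoTrackSource must return true, which guarantees that WebRTC will not adjust the resolution automatically. This is explained in another article of mine, 《WebRTC的QP、分辨率自动调整》 (Automatic QP and resolution adjustment in WebRTC).

2.5 Feeding PCM audio data into WebRTC

2.5.1 Implementation

Feeding PCM audio into WebRTC works a little differently from feeding YUV video: you need a custom AudioDeviceModule rather than an AudioTrackSource, and you pass that AudioDeviceModule in when calling CreatePeerConnectionFactory.

Note that deriving directly from AudioDeviceModule would require overriding every recording and playback method. To keep things simple, we derive from the default Windows implementation AudioDeviceModuleImpl (itself derived from AudioDeviceModule), keep all the playback methods, and override only the recording methods. This lets us feed in PCM from OBS without rewriting the playback logic.

The code follows: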

audio_device_module_wrapper.h

#ifndef __AUDIO_DEVICE_MODULE_WRAPPER_H__

#define __AUDIO_DEVICE_MODULE_WRAPPER_H__

#include

#include "bee/base/bee_define.h"

#include "webrtc/modules/audio_device/audio_device_impl.h"

#include "webrtc/rtc_base/refcountedobject.h"

#include "webrtc/rtc_base/criticalsection.h"

#include "webrtc/rtc_base/checks.h"

using webrtc::AudioDeviceBuffer;

using webrtc::AudioDeviceGeneric;

using webrtc::AudioDeviceModule;

using webrtc::AudioTransport;

using webrtc::kAdmMaxDeviceNameSize;

using webrtc::kAdmMaxGuidSize;

using webrtc::kAdmMaxFileNameSize;

namespace bee {

class AudioDeviceModuleWrapper

: public rtc::RefCountedObject<webrtc::AudioDeviceModuleImpl> {

public:

AudioDeviceModuleWrapper(const AudioLayer audioLayer);

virtual ~AudioDeviceModuleWrapper() override;

// Creates an ADM.

static rtc::scoped_refptr<AudioDeviceModuleWrapper> Create(

const AudioLayer audio_layer = kPlatformDefaultAudio);

// Full-duplex transportation of PCM audio

virtual int32_t RegisterAudioCallback(AudioTransport* audioCallback);

// Device enumeration

virtual int16_t RecordingDevices() { return 0; }

virtual int32_t RecordingDeviceName(uint16_t index,

char name[kAdmMaxDeviceNameSize],

char guid[kAdmMaxGuidSize]) { return 0; }

// Device selection

virtual int32_t SetRecordingDevice(uint16_t index) { return 0; }

virtual int32_t SetRecordingDevice(WindowsDeviceType device) { return 0; }

// Audio transport initialization

virtual int32_t RecordingIsAvailable(bool* available) { return 0; }

virtual int32_t InitRecording();

virtual bool RecordingIsInitialized() const;

// Audio transport control

virtual int32_t StartRecording();

virtual int32_t StopRecording();

virtual bool Recording() const;

// Audio mixer initialization

virtual int32_t InitMicrophone() { return 0; }

virtual bool MicrophoneIsInitialized() const { return 0; }

// Microphone volume controls

virtual int32_t MicrophoneVolumeIsAvailable(bool* available) { return 0; }

virtual int32_t SetMicrophoneVolume(uint32_t volume) { return 0; }

virtual int32_t MicrophoneVolume(uint32_t* volume) const { return 0; }

virtual int32_t MaxMicrophoneVolume(uint32_t* maxVolume) const { return 0; }

virtual int32_t MinMicrophoneVolume(uint32_t* minVolume) const { return 0; }

// Microphone mute control

virtual int32_t MicrophoneMuteIsAvailable(bool* available) { return 0; }

virtual int32_t SetMicrophoneMute(bool enable) { return 0; }

virtual int32_t MicrophoneMute(bool* enabled) const { return 0; }

// Stereo support

virtual int32_t StereoRecordingIsAvailable(bool* available) const;

virtual int32_t SetStereoRecording(bool enable);

virtual int32_t StereoRecording(bool* enabled) const { return 0; }

// Only supported on Android.

virtual bool BuiltInAECIsAvailable() const { return false; }

virtual bool BuiltInAGCIsAvailable() const { return false; }

virtual bool BuiltInNSIsAvailable() const { return false; }

// Enables the built-in audio effects. Only supported on Android.

virtual int32_t EnableBuiltInAEC(bool enable) { return 0; }

virtual int32_t EnableBuiltInAGC(bool enable) { return 0; }

virtual int32_t EnableBuiltInNS(bool enable) { return 0; }

static bool IsEnabled() { return enabled_; }

static BeeErrorCode SetInputParam(int32_t channels, int32_t sample_rate, int32_t sample_size);

void OnPCMData(uint8_t* data, size_t samples_per_channel);

public:

bool init_ = false;

bool recording_ = false;

bool rec_is_initialized_ = false;

rtc::CriticalSection crit_sect_;

AudioTransport *audio_transport_ = NULL;

uint8_t pending_[640 * 2 * 2];

size_t pending_length_ = 0;

static bool input_param_set_;

static int32_t channels_;

static int32_t sample_rate_;

static int32_t sample_size_;

static bool enabled_;

};

} // namespace bee

#endif // __AUDIO_DEVICE_MODULE_WRAPPER_H__

audio_device_module_wrapper.cpp

#include "platform/win32/audio_device_module_wrapper.h"

#include "service/bee_entrance.h"

namespace bee {

bool AudioDeviceModuleWrapper::input_param_set_ = false;

int32_t AudioDeviceModuleWrapper::channels_ = 0;

int32_t AudioDeviceModuleWrapper::sample_rate_ = 0;

int32_t AudioDeviceModuleWrapper::sample_size_ = 0;

bool AudioDeviceModuleWrapper::enabled_ = false;

AudioDeviceModuleWrapper::AudioDeviceModuleWrapper(const AudioLayer audioLayer)

: rtc::RefCountedObject<webrtc::AudioDeviceModuleImpl>(audioLayer) {

enabled_ = true;

}

AudioDeviceModuleWrapper::~AudioDeviceModuleWrapper() {

}

rtc::scoped_refptr<AudioDeviceModuleWrapper> AudioDeviceModuleWrapper::Create(const AudioLayer audio_layer) {

rtc::scoped_refptr<AudioDeviceModuleWrapper> audio_device(new rtc::RefCountedObject<AudioDeviceModuleWrapper>(audio_layer));

// Ensure that the current platform is supported.

if (audio_device->CheckPlatform() == -1) {

return nullptr;

}

// Create the platform-dependent implementation.

if (audio_device->CreatePlatformSpecificObjects() == -1) {

return nullptr;

}

// Ensure that the generic audio buffer can communicate with the platform

// specific parts.

if (audio_device->AttachAudioBuffer() == -1) {

return nullptr;

}

// audio_device->Init();

return audio_device;

}

int32_t AudioDeviceModuleWrapper::RegisterAudioCallback(AudioTransport* audioCallback) {

audio_transport_ = audioCallback; //Custom record transport.

return AudioDeviceModuleImpl::RegisterAudioCallback(audioCallback);

}

int32_t AudioDeviceModuleWrapper::InitRecording() {

rec_is_initialized_ = true;

return 0;

}

bool AudioDeviceModuleWrapper::RecordingIsInitialized() const {

return rec_is_initialized_;

}

int32_t AudioDeviceModuleWrapper::StartRecording() {

if (!rec_is_initialized_) {

return -1;

}

recording_ = true;

return 0;

}

int32_t AudioDeviceModuleWrapper::StopRecording() {

recording_ = false;

return 0;

}

bool AudioDeviceModuleWrapper::Recording() const {

return recording_;

}

int32_t AudioDeviceModuleWrapper::StereoRecordingIsAvailable(bool* available) const {

*available = false;

return 0;

}

int32_t AudioDeviceModuleWrapper::SetStereoRecording(bool enable) {

if (!enable) {

return 0;

}

return -1;

}

BeeErrorCode AudioDeviceModuleWrapper::SetInputParam(int32_t channels, int32_t sample_rate, int32_t sample_size) {

BeeErrorCode ret = kBeeErrorCode_Success;

do {

if (!enabled_) {

ret = kBeeErrorCode_Invalid_State;

break;

}

if (!input_param_set_) {

channels_ = channels;

sample_rate_ = sample_rate;

sample_size_ = sample_size;

input_param_set_ = true;

} else if (channels != channels_ || sample_rate != sample_rate_ || sample_size != sample_size_) {

ret = kBeeErrorCode_Invalid_Param;

}

} while (0);

return ret;

}

void AudioDeviceModuleWrapper::OnPCMData(uint8_t* data, size_t samples_per_channel) {

crit_sect_.Enter();

if (!audio_transport_) {

crit_sect_.Leave(); // release the lock before the early return

return;

}

crit_sect_.Leave();

//This info is set on the stream before starting capture

size_t channels = channels_;

size_t sample_rate = sample_rate_;

size_t sample_size = sample_size_;

//Get chunk for 10ms

size_t chunk = (sample_rate / 100);

size_t i = 0;

uint32_t level = 0; // initialized because it is passed by reference below

//Check if we had pending

if (pending_length_) {

//Copy the missing ones

i = chunk - pending_length_;

//Copy

memcpy(pending_ + pending_length_ * sample_size * channels, data, i * sample_size * channels);

//Add sent

audio_transport_->RecordedDataIsAvailable(pending_, chunk, sample_size * channels, channels, sample_rate, 0, 0, 0, 0, level);

//No pending

pending_length_ = 0;

}

//Send all full chunks possible

while ( i + chunk < samples_per_channel) {

//Send them

audio_transport_->RecordedDataIsAvailable(data + i * sample_size * channels, chunk, sample_size * channels, channels, sample_rate, 0, 0, 0, 0, level);

//Inc sent

i += chunk;

}

//If there are missing ones

if (i != samples_per_channel) {

//Calculate pending

pending_length_ = samples_per_channel - i;

//Copy to pending buffer

memcpy(pending_, data + i*sample_size*channels, pending_length_ * sample_size * channels);

}

}

} // namespace bee

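Create the PeerConnectionFactory:

// Hold the ADM in a scoped_refptr so it is not released when the temporary returned by Create() goes away.
rtc::scoped_refptr<AudioDeviceModuleWrapper> adm = AudioDeviceModuleWrapper::Create();

rtc::scoped_refptr<webrtc::PeerConnectionFactoryInterface> factory =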

webrtc::CreatePeerConnectionFactory(

network_thread_.get(),

worker_thread_.get(),

signaling_thread_.get(),

adm,

audio_encoder_factory,

audio_decoder_factory,

video_encoder_factory,

video_decoder_factory);

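In the bee_receive_audio callback of the custom output stream in bee-stream.c, simply pass in the PCM data.

static void bee_receive_audio(void *data, struct audio_data *frame) {

// Note: what follows is pseudocode. Calling C++ from C needs some glue in practice; this only shows how the data is passed.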

adm->OnPCMData((unsigned char*)frame->data[0], frame->frames);

}

2.5.2 Some details

- WebRTC accepts only 10 ms of data per call; the size of that chunk can be computed from the sample rate, sample size, and channel count.
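The 10 ms rule can be sketched as a small re-chunking helper. This is an illustration under stated assumptions, not the OnPCMData implementation above: PcmChunker and bytes_per_10ms are hypothetical names, the input is assumed to be interleaved PCM, and the sink callback stands in for the call to RecordedDataIsAvailable.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <utility>
#include <vector>

// Size in bytes of one 10 ms chunk of interleaved PCM.
size_t bytes_per_10ms(int sample_rate, int channels, int bytes_per_sample) {
    assert(sample_rate >= 100 && channels > 0 && bytes_per_sample > 0);
    return static_cast<size_t>(sample_rate / 100) * channels * bytes_per_sample;
}

// Buffers incoming PCM of arbitrary length and emits exact 10 ms chunks.
class PcmChunker {
public:
    using Sink = std::function<void(const uint8_t *chunk, size_t bytes)>;

    PcmChunker(int sample_rate, int channels, int bytes_per_sample, Sink sink)
        : chunk_bytes_(bytes_per_10ms(sample_rate, channels, bytes_per_sample)),
          sink_(std::move(sink)) {}

    // Appends new data, delivers every complete chunk, keeps the remainder.
    void push(const uint8_t *data, size_t bytes) {
        pending_.insert(pending_.end(), data, data + bytes);
        size_t offset = 0;
        while (pending_.size() - offset >= chunk_bytes_) {
            sink_(pending_.data() + offset, chunk_bytes_);
            offset += chunk_bytes_;
        }
        pending_.erase(pending_.begin(),
                       pending_.begin() + static_cast<std::ptrdiff_t>(offset));
    }

    // Bytes waiting for the next push to complete a chunk.
    size_t pending_bytes() const { return pending_.size(); }

private:
    size_t chunk_bytes_;
    Sink sink_;
    std::vector<uint8_t> pending_;
};
```

For example, 48 kHz stereo 16-bit audio gives 480 * 2 * 2 = 1920 bytes per chunk, so a 1024-frame block (4096 bytes) yields two chunks and leaves 256 bytes pending. A growable buffer is used here instead of a fixed array so that an unexpectedly small or large input block cannot overrun it.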

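2.6 Wrapping the OBS encoders for WebRTC

OBS supports the software encoder x264 as well as hardware encoders adapted to various graphics cards. Any of them outperforms the current version of OpenH264. When I first encoded with WebRTC's OpenH264, neither the bitrate nor the frame rate met the requirement, and the visible result was stutter. After switching to the OBS encoders the result was acceptable and fairly smooth.

2.6.1 Implementation

Having WebRTC use an external encoder is also simple: as in section 2.5, pass a custom encoder factory when calling CreatePeerConnectionFactory. This requires a custom encoder and a custom encoder factory, which is also the common way hardware encoding and decoding are implemented on Android/iOS.

Again, the code follows: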

bee-obs-video-encoder.h

#ifndef __BEE_OBS_VIDEO_ENCODER_H__

#define __BEE_OBS_VIDEO_ENCODER_H__

#include "obs.hpp"

#include "util/util.hpp"

extern "C" {

#include "obs-internal.h"

}

#include "webrtc/media/engine/webrtcvideoencoderfactory.h"

#include "webrtc/api/video_codecs/video_encoder.h"

#include "webrtc/common_video/h264/h264_bitstream_parser.h"

#include "webrtc/system_wrappers/include/clock.h"

#include

#include

namespace bee_obs {

///////////////////////////////////BeeObsVideoEncoder///////////////////////////////////////

class IOService;

class BeeObsVideoEncoder : public webrtc::VideoEncoder {

public:

BeeObsVideoEncoder();

virtual ~BeeObsVideoEncoder();

public:

bool open(const ConfigFile& config);

bool close();

bool start();

bool stop();

void obs_encode(struct video_data *frame);

//WebRTC interfaces.

virtual int32_t InitEncode(

const webrtc::VideoCodec* codec_settings,

int32_t number_of_cores,

size_t max_payload_size) override;

virtual int32_t RegisterEncodeCompleteCallback(

webrtc::EncodedImageCallback* callback) override;

virtual int32_t Release() override;

virtual int32_t Encode(

const webrtc::VideoFrame& frame,

const webrtc::CodecSpecificInfo* codec_specific_info,

const std::vector<webrtc::FrameType>* frame_types) override;

virtual int32_t SetChannelParameters(

uint32_t packet_loss,

int64_t rtt) override;

private:

bool start_internal(obs_encoder_t *encoder);

bool stop_internal(

obs_encoder_t *encoder,

void(*new_packet)(void *param, struct encoder_packet *packet),

void *param);

void configure_encoder(const ConfigFile& config);

static void new_encoded_packet(void *param, struct encoder_packet *packet);

void on_new_encoded_packet(struct encoder_packet *packet);

void load_streaming_preset_h264(const char *encoderId);

void update_streaming_settings_amd(obs_data_t *settings, int bitrate);

size_t get_callback_idx(

const struct obs_encoder *encoder,

void(*new_packet)(void *param, struct encoder_packet *packet),

void *param);

void obs_encoder_actually_destroy(obs_encoder_t *encoder);

void free_audio_buffers(struct obs_encoder *encoder);

//WebRTC interface implement.

void InitEncodeOnCodecThread(

int32_t width,

int32_t height,

int32_t target_bitrate,

int32_t fps,

std::shared_ptr<std::promise<int32_t> > promise);

void RegisterEncodeCompleteCallbackOnCodecThread(

webrtc::EncodedImageCallback* callback,

std::shared_ptr<std::promise<int32_t> > promise);

void ReleaseOnCodecThread(

std::shared_ptr<std::promise<int32_t> > promise);

void EncodeOnCodecThread(

const webrtc::VideoFrame& frame,

const webrtc::FrameType frame_type,

const int64_t frame_input_time_ms);

//OBS encoder implement.

void do_encode(

struct obs_encoder *encoder,

struct encoder_frame *frame,

std::shared_ptr > promise);

void send_packet(

struct obs_encoder *encoder,

struct encoder_callback *cb, struct encoder_packet *packet);

void send_first_video_packet(

struct obs_encoder *encoder,

struct encoder_callback *cb,

struct encoder_packet *packet);

void send_idr_packet(

struct obs_encoder *encoder,

struct encoder_callback *cb,

struct encoder_packet *packet);

bool get_sei(

const struct obs_encoder *encoder,

uint8_t **sei,

size_t *size);

private:

OBSEncoder h264_encoder_;

std::shared_ptr<IOService> io_service_;

webrtc::EncodedImageCallback* callback_ = NULL;

bool opened = false;

bool started = false;

bool last_encode_error = false;

int32_t width_ = 0;

int32_t height_ = 0;

int32_t target_bitrate_ = 0;

int32_t fps_ = 0;

webrtc::H264BitstreamParser h264_bitstream_parser_;

struct InputFrameInfo {

InputFrameInfo(

int64_t encode_start_time,

int32_t frame_timestamp,

int64_t frame_render_time_ms,

webrtc::VideoRotation rotation)

: encode_start_time(encode_start_time),

frame_timestamp(frame_timestamp),

frame_render_time_ms(frame_render_time_ms),

rotation(rotation) {}

// Time when video frame is sent to encoder input.

const int64_t encode_start_time;

// Input frame information.

const int32_t frame_timestamp;

const int64_t frame_render_time_ms;

const webrtc::VideoRotation rotation;

};

std::list<InputFrameInfo> input_frame_infos_;

int32_t output_timestamp_; // Last output frame timestamp from |input_frame_infos_|.

int64_t output_render_time_ms_; // Last output frame render time from |input_frame_infos_|.

webrtc::VideoRotation output_rotation_; // Last output frame rotation from |input_frame_infos_|.

webrtc::Clock* const clock_;

const int64_t delta_ntp_internal_ms_;

bool send_key_frame_ = false;

};

////////////////////////////////////BeeObsVideoEncoderFactory//////////////////////////////////////

class BeeObsVideoEncoderFactory : public cricket::WebRtcVideoEncoderFactory {

public:

BeeObsVideoEncoderFactory();

~BeeObsVideoEncoderFactory();

public:

webrtc::VideoEncoder* CreateVideoEncoder(const cricket::VideoCodec& codec) override;

const std::vector<cricket::VideoCodec>& supported_codecs() const override;

void DestroyVideoEncoder(webrtc::VideoEncoder* encoder) override;

//void set_encoder_configure(const ConfigFile &basic_config) { basic_config_ = basic_config; }

private:

std::vector<cricket::VideoCodec> supported_codecs_;

ConfigFile basic_config_;

};

} // namespace bee_obs

#endif // #ifndef __BEE_OBS_VIDEO_ENCODER_H__

bee-obs-video-encoder.cpp

#include "bee-obs-video-encoder.h"

#include "bee-io-service.h"

#include "bee-proxy.h"

#include "util/config-file.h"

#include "util/darray.h"

#include "obs-avc.h"

#include "webrtc/media/base/h264_profile_level_id.h"

#include "webrtc/rtc_base/timeutils.h"

#include "webrtc/modules/video_coding/include/video_codec_interface.h"

#include "webrtc/modules/include/module_common_types.h"

#include "webrtc/common_video/h264/h264_common.h"

#define SIMPLE_ENCODER_X264_LOWCPU "x264_lowcpu"

#define SIMPLE_ENCODER_QSV "qsv"

#define SIMPLE_ENCODER_NVENC "nvenc"

#define SIMPLE_ENCODER_AMD "amd"

const size_t kFrameDiffThresholdMs = 350;

const int kMinKeyFrameInterval = 6;

namespace bee_obs {

BeeObsVideoEncoder::BeeObsVideoEncoder()

: clock_(webrtc::Clock::GetRealTimeClock()),

delta_ntp_internal_ms_(clock_->CurrentNtpInMilliseconds() - clock_->TimeInMilliseconds()) {

}

BeeObsVideoEncoder::~BeeObsVideoEncoder() {

}

bool BeeObsVideoEncoder::open(const ConfigFile& config) {

const char *encoder_name = config_get_string(config, "SimpleOutput", "StreamEncoder");

if (encoder_name == NULL) {

return false;

}

//Search and load encoder.

if (strcmp(encoder_name, SIMPLE_ENCODER_QSV) == 0) {

load_streaming_preset_h264("obs_qsv11");

} else if (strcmp(encoder_name, SIMPLE_ENCODER_AMD) == 0) {

load_streaming_preset_h264("amd_amf_h264");

} else if (strcmp(encoder_name, SIMPLE_ENCODER_NVENC) == 0) {

load_streaming_preset_h264("ffmpeg_nvenc");

} else {

load_streaming_preset_h264("obs_x264");

}

//Configure encoder.

configure_encoder(config);

//Initialize encoder.

if (!obs_encoder_initialize(h264_encoder_)) {

return false;

}

opened = true;

return true;

}

bool BeeObsVideoEncoder::close() {

if (h264_encoder_ != NULL) {

obs_encoder_release(h264_encoder_);

}

opened = false;

return true;

}

bool BeeObsVideoEncoder::start() {

bool ret = true;

do {

if (h264_encoder_ == NULL) {

ret = false;

break;

}

obs_encoder_t *encoder = h264_encoder_;

pthread_mutex_lock(&encoder->init_mutex);

ret = start_internal(encoder);

pthread_mutex_unlock(&encoder->init_mutex);

} while (0);

return ret;

}

bool BeeObsVideoEncoder::stop() {

bool ret = true;

do {

if (h264_encoder_ == NULL) {

ret = false;

break;

}

obs_encoder_t *encoder = h264_encoder_;

pthread_mutex_lock(&encoder->init_mutex);

bool destroyed = stop_internal(encoder, new_encoded_packet, this);

if (!destroyed) {

pthread_mutex_unlock(&encoder->init_mutex);

}

} while (0);

return ret;

}

//WebRTC interfaces.

int32_t BeeObsVideoEncoder::InitEncode(

const webrtc::VideoCodec* codec_settings,

int32_t number_of_cores,

size_t max_payload_size) {

io_service_.reset(new IOService);

io_service_->start();

int32_t ret = 0;

std::shared_ptr<std::promise<int32_t> > promise(new std::promise<int32_t>);

io_service_->ios()->post(

boost::bind(&BeeObsVideoEncoder::InitEncodeOnCodecThread,

this,

codec_settings->width,

codec_settings->height,

codec_settings->targetBitrate,

codec_settings->maxFramerate,

promise));

std::future<int32_t> future = promise->get_future();

ret = future.get();

return ret;

}

int32_t BeeObsVideoEncoder::RegisterEncodeCompleteCallback(

webrtc::EncodedImageCallback* callback) {

std::shared_ptr<std::promise<int32_t> > promise(new std::promise<int32_t>);

io_service_->ios()->post(

boost::bind(&BeeObsVideoEncoder::RegisterEncodeCompleteCallbackOnCodecThread,

this,

callback,

promise));

std::future<int32_t> future = promise->get_future();

int32_t ret = future.get();

return ret;

}

int32_t BeeObsVideoEncoder::Release() {

std::shared_ptr<std::promise<int32_t> > promise(new std::promise<int32_t>);

io_service_->ios()->post(

boost::bind(&BeeObsVideoEncoder::ReleaseOnCodecThread,

this,

promise));

std::future<int32_t> future = promise->get_future();

int32_t ret = future.get();

if (io_service_ != NULL) {

io_service_->stop();

io_service_.reset();

}

return ret;

}

int32_t BeeObsVideoEncoder::Encode(

const webrtc::VideoFrame& frame,

const webrtc::CodecSpecificInfo* codec_specific_info,

const std::vector<webrtc::FrameType>* frame_types) {

//blog(LOG_INFO, "@@@ Encode");

if (last_encode_error) {

return -1;

}

io_service_->ios()->post(

boost::bind(&BeeObsVideoEncoder::EncodeOnCodecThread,

this,

frame,

frame_types->front(),

rtc::TimeMillis()));

return 0;

}

int32_t BeeObsVideoEncoder::SetChannelParameters(

uint32_t packet_loss,

int64_t rtt) {

return 0;

}

bool BeeObsVideoEncoder::start_internal(obs_encoder_t *encoder) {

struct encoder_callback cb = { false, new_encoded_packet, this };

bool first = false;

if (!encoder->context.data) {

return false;

}

pthread_mutex_lock(&encoder->callbacks_mutex);

first = (encoder->callbacks.num == 0);

size_t idx = get_callback_idx(encoder, new_encoded_packet, this);

if (idx == DARRAY_INVALID) {

da_push_back(encoder->callbacks, &cb);

}

pthread_mutex_unlock(&encoder->callbacks_mutex);

if (first) {

encoder->cur_pts = 0;

os_atomic_set_bool(&encoder->active, true);

}

started = true;

return true;

}

bool BeeObsVideoEncoder::stop_internal(

obs_encoder_t *encoder,

void(*new_packet)(void *param, struct encoder_packet *packet),

void *param) {

bool last = false;

size_t idx;

pthread_mutex_lock(&encoder->callbacks_mutex);

idx = get_callback_idx(encoder, new_packet, param);

if (idx != DARRAY_INVALID) {

da_erase(encoder->callbacks, idx);

last = (encoder->callbacks.num == 0);

}

pthread_mutex_unlock(&encoder->callbacks_mutex);

started = false;

if (last) {

obs_encoder_shutdown(encoder);

os_atomic_set_bool(&encoder->active, false);

encoder->initialized = false;

if (encoder->destroy_on_stop) {

pthread_mutex_unlock(&encoder->init_mutex);

obs_encoder_actually_destroy(encoder);

return true;

}

}

return false;

}

void BeeObsVideoEncoder::configure_encoder(const ConfigFile& config) {

obs_data_t *h264Settings = obs_data_create();

int videoBitrate = config_get_uint(config, "SimpleOutput", "VBitrate");

bool advanced = config_get_bool(config, "SimpleOutput", "UseAdvanced");

bool enforceBitrate = config_get_bool(config, "SimpleOutput", "EnforceBitrate");

const char *custom = config_get_string(config, "SimpleOutput", "x264Settings");

const char *encoder = config_get_string(config, "SimpleOutput", "StreamEncoder");

const char *presetType = NULL;

const char *preset = NULL;

if (strcmp(encoder, SIMPLE_ENCODER_QSV) == 0) {

presetType = "QSVPreset";

} else if (strcmp(encoder, SIMPLE_ENCODER_AMD) == 0) {

presetType = "AMDPreset";

update_streaming_settings_amd(h264Settings, videoBitrate);

} else if (strcmp(encoder, SIMPLE_ENCODER_NVENC) == 0) {

presetType = "NVENCPreset";

} else {

presetType = "Preset";

}

preset = config_get_string(config, "SimpleOutput", presetType);

obs_data_set_string(h264Settings, "rate_control", "CBR");

obs_data_set_int(h264Settings, "bitrate", videoBitrate);

//Add by HeZhen, disable b-frames

obs_data_set_int(h264Settings, "bf", 0);

//Add by HeZhen, default 250 frames' time.

obs_data_set_int(h264Settings, "keyint_sec", 10);

//Added by HeZhen so that QSV outputs the first frame immediately.

obs_data_set_int(h264Settings, "async_depth", 1);

if (advanced) {

obs_data_set_string(h264Settings, "preset", preset);

obs_data_set_string(h264Settings, "x264opts", custom);

}

if (advanced && !enforceBitrate) {

obs_data_set_int(h264Settings, "bitrate", videoBitrate);

}

video_t *video = obs_get_video();

enum video_format format = video_output_get_format(video);

if (format != VIDEO_FORMAT_NV12 && format != VIDEO_FORMAT_I420) {

obs_encoder_set_preferred_video_format(h264_encoder_, VIDEO_FORMAT_NV12);

}

obs_encoder_update(h264_encoder_, h264Settings);

obs_data_release(h264Settings);

obs_encoder_set_video(h264_encoder_, video);

}

void BeeObsVideoEncoder::new_encoded_packet(void *param, struct encoder_packet *packet) {

BeeObsVideoEncoder *encoder = (BeeObsVideoEncoder*)param;

if (encoder != NULL) {

encoder->on_new_encoded_packet(packet);

}

}

void BeeObsVideoEncoder::on_new_encoded_packet(struct encoder_packet *packet) {

if (!input_frame_infos_.empty()) {

const InputFrameInfo& frame_info = input_frame_infos_.front();

output_timestamp_ = frame_info.frame_timestamp;

output_render_time_ms_ = frame_info.frame_render_time_ms;

output_rotation_ = frame_info.rotation;

input_frame_infos_.pop_front();

}

int64_t render_time_ms = packet->dts_usec / rtc::kNumMicrosecsPerMillisec;

// Local time in webrtc time base.

int64_t current_time_us = clock_->TimeInMicroseconds();

int64_t current_time_ms = current_time_us / rtc::kNumMicrosecsPerMillisec;

// In some cases, e.g., when the frame from decoder is fed to encoder,

// the timestamp may be set to the future. As the encoding pipeline assumes

// capture time to be less than present time, we should reset the capture

// timestamps here. Otherwise there may be issues with RTP send stream.

if (packet->dts_usec > current_time_us) {

packet->dts_usec = current_time_us;

}

// Capture time may come from clock with an offset and drift from clock_.

int64_t capture_ntp_time_ms;

if (render_time_ms != 0) {

capture_ntp_time_ms = render_time_ms + delta_ntp_internal_ms_;

} else {

capture_ntp_time_ms = current_time_ms + delta_ntp_internal_ms_;

}

// Convert NTP time, in ms, to RTP timestamp.

const int kMsToRtpTimestamp = 90;

uint32_t timeStamp = kMsToRtpTimestamp * static_cast<uint32_t>(capture_ntp_time_ms);

// Extract payload.

size_t payload_size = packet->size;

uint8_t* payload = packet->data;

const webrtc::VideoCodecType codec_type = webrtc::kVideoCodecH264;

webrtc::EncodedImageCallback::Result callback_result(webrtc::EncodedImageCallback::Result::OK);

if (callback_) {

std::unique_ptr<webrtc::EncodedImage> image(new webrtc::EncodedImage(payload, payload_size, payload_size));

image->_encodedWidth = width_;

image->_encodedHeight = height_;

//image->capture_time_ms_ = packet->dts_usec / 1000;

//image->_timeStamp = image->capture_time_ms_ * 90;

image->capture_time_ms_ = render_time_ms;

image->_timeStamp = timeStamp;

image->rotation_ = output_rotation_;

image->_frameType = (packet->keyframe ? webrtc::kVideoFrameKey : webrtc::kVideoFrameDelta);

image->_completeFrame = true;

webrtc::CodecSpecificInfo info;

memset(&info, 0, sizeof(info));

info.codecType = codec_type;

if (packet->keyframe) {

blog(LOG_INFO, "Key frame");

}

// Generate a header describing a single fragment.

webrtc::RTPFragmentationHeader header;

memset(&header, 0, sizeof(header));

h264_bitstream_parser_.ParseBitstream(payload, payload_size);

int qp;

if (h264_bitstream_parser_.GetLastSliceQp(&qp)) {

//current_acc_qp_ += qp;

image->qp_ = qp;

}

// For H.264 search for start codes.

const std::vector<webrtc::H264::NaluIndex> nalu_idxs = webrtc::H264::FindNaluIndices(payload, payload_size);

if (nalu_idxs.empty()) {

blog(LOG_ERROR, "Start code is not found!");

blog(LOG_ERROR,

"Data: %02x %02x %02x %02x %02x %02x",

image->_buffer[0],

image->_buffer[1],

image->_buffer[2],

image->_buffer[3],

image->_buffer[4],

image->_buffer[5]);

//ProcessHWErrorOnCodecThread(true /* reset_if_fallback_unavailable */);

//return false;

return;

}

header.VerifyAndAllocateFragmentationHeader(nalu_idxs.size());

for (size_t i = 0; i < nalu_idxs.size(); i++) {

header.fragmentationOffset[i] = nalu_idxs[i].payload_start_offset;

header.fragmentationLength[i] = nalu_idxs[i].payload_size;

header.fragmentationPlType[i] = 0;

header.fragmentationTimeDiff[i] = 0;

}

callback_result = callback_->OnEncodedImage(*image, &info, &header);

}

}

void BeeObsVideoEncoder::load_streaming_preset_h264(const char *encoderId) {

h264_encoder_ = obs_video_encoder_create(encoderId, "bee_h264_stream", nullptr, nullptr);

if (!h264_encoder_) {

throw "Failed to create h264 streaming encoder (simple output)";

}

obs_encoder_release(h264_encoder_);

}

void BeeObsVideoEncoder::update_streaming_settings_amd(obs_data_t *settings, int bitrate) {

// Static Properties

obs_data_set_int(settings, "Usage", 0);

obs_data_set_int(settings, "Profile", 100); // High

// Rate Control Properties

obs_data_set_int(settings, "RateControlMethod", 3);

obs_data_set_int(settings, "Bitrate.Target", bitrate);

obs_data_set_int(settings, "FillerData", 1);

obs_data_set_int(settings, "VBVBuffer", 1);

obs_data_set_int(settings, "VBVBuffer.Size", bitrate);

// Picture Control Properties

obs_data_set_double(settings, "KeyframeInterval", 2.0);

obs_data_set_int(settings, "BFrame.Pattern", 0);

}

size_t BeeObsVideoEncoder::get_callback_idx(

const struct obs_encoder *encoder,

void(*new_packet)(void *param, struct encoder_packet *packet),

void *param) {

for (size_t i = 0; i < encoder->callbacks.num; i++) {

struct encoder_callback *cb = encoder->callbacks.array + i;

if (cb->new_packet == new_packet && cb->param == param)

return i;

}

return DARRAY_INVALID;

}

void BeeObsVideoEncoder::obs_encoder_actually_destroy(obs_encoder_t *encoder) {

if (encoder) {

blog(LOG_DEBUG, "encoder '%s' destroyed", encoder->context.name);

free_audio_buffers(encoder);

if (encoder->context.data)

encoder->info.destroy(encoder->context.data);

da_free(encoder->callbacks);

pthread_mutex_destroy(&encoder->init_mutex);

pthread_mutex_destroy(&encoder->callbacks_mutex);

obs_context_data_free(&encoder->context);

if (encoder->owns_info_id)

bfree((void*)encoder->info.id);

bfree(encoder);

}

}

void BeeObsVideoEncoder::free_audio_buffers(struct obs_encoder *encoder) {

for (size_t i = 0; i < MAX_AV_PLANES; i++) {

circlebuf_free(&encoder->audio_input_buffer[i]);

bfree(encoder->audio_output_buffer[i]);

encoder->audio_output_buffer[i] = NULL;

}

}

void BeeObsVideoEncoder::InitEncodeOnCodecThread(

int32_t width,

int32_t height,

int32_t target_bitrate,

int32_t fps,

std::shared_ptr<std::promise<int32_t> > promise) {

int32_t ret = 0;

do {

width_ = width;

height_ = height;

target_bitrate_ = target_bitrate;

fps_ = fps;

input_frame_infos_.clear();

const ConfigFile &basic_config = BeeProxy::instance()->get_basic_configure();

if (!open(basic_config)) {

ret = -1;

break;

}

if (!start()) {

ret = -1;

break;

}

BeeProxy::instance()->set_video_encoder(this);

} while (0);

if (promise != NULL) {

promise->set_value(ret);

}

}

void BeeObsVideoEncoder::RegisterEncodeCompleteCallbackOnCodecThread(

webrtc::EncodedImageCallback* callback,

std::shared_ptr<std::promise<int32_t> > promise) {

callback_ = callback;

if (promise != NULL) {

promise->set_value(0);

}

}

void BeeObsVideoEncoder::ReleaseOnCodecThread(std::shared_ptr<std::promise<int32_t> > promise) {

BeeProxy::instance()->set_video_encoder(NULL);

if (started) {

stop();

}

if (opened) {

close();

}

if (promise != NULL) {

promise->set_value(0);

}

}

void BeeObsVideoEncoder::EncodeOnCodecThread(

const webrtc::VideoFrame& frame,

const webrtc::FrameType frame_type,

const int64_t frame_input_time_ms) {

if (frame_type != webrtc::kVideoFrameDelta) {

send_key_frame_ = true;

}

//Just store timestamp, real encoding is bypassed.

input_frame_infos_.emplace_back(

frame_input_time_ms,

frame.timestamp(),

frame.render_time_ms(),

frame.rotation());

}

static const char *receive_video_name = "receive_video";

void BeeObsVideoEncoder::obs_encode(

struct video_data *frame) {

//blog(LOG_INFO, "@@@ obs_encode");

profile_start(receive_video_name);

do {

struct obs_encoder *encoder = h264_encoder_;

struct obs_encoder *pair = encoder->paired_encoder;

struct encoder_frame enc_frame;

if (!encoder->first_received && pair) {

if (!pair->first_received ||

pair->first_raw_ts > frame->timestamp) {

break;

}

}

memset(&enc_frame, 0, sizeof(struct encoder_frame));

for (size_t i = 0; i < MAX_AV_PLANES; i++) {

enc_frame.data[i] = frame->data[i];

enc_frame.linesize[i] = frame->linesize[i];

}

if (!encoder->start_ts) {

encoder->start_ts = frame->timestamp;

}

enc_frame.frames = 1;

enc_frame.pts = encoder->cur_pts;

std::shared_ptr<std::promise<int32_t> > promise(new std::promise<int32_t>);

io_service_->ios()->post(boost::bind(&BeeObsVideoEncoder::do_encode, this, encoder, &enc_frame, promise));

std::future<int32_t> future = promise->get_future();

future.get();

encoder->cur_pts += encoder->timebase_num;

} while (0);

profile_end(receive_video_name);

}

static const char *do_encode_name = "do_encode";

void BeeObsVideoEncoder::do_encode(

struct obs_encoder *encoder,

struct encoder_frame *frame,

std::shared_ptr<std::promise<int32_t> > promise) {

//blog(LOG_INFO, "@@@ do_encode");

profile_start(do_encode_name);

if (!encoder->profile_encoder_encode_name) {

encoder->profile_encoder_encode_name = profile_store_name(obs_get_profiler_name_store(), "encode(%s)", encoder->context.name);

}

struct encoder_packet pkt = { 0 };

bool received = false;

bool success;

pkt.timebase_num = encoder->timebase_num;

pkt.timebase_den = encoder->timebase_den;

pkt.encoder = encoder;

if (send_key_frame_) {

pkt.keyframe = true;

send_key_frame_ = false;

}

profile_start(encoder->profile_encoder_encode_name);

success = encoder->info.encode(encoder->context.data, frame, &pkt, &received);

profile_end(encoder->profile_encoder_encode_name);

if (!success) {

last_encode_error = true;

blog(LOG_ERROR, "Error encoding with encoder '%s'", encoder->context.name);

goto error;

}

if (received) {

if (!encoder->first_received) {

encoder->offset_usec = packet_dts_usec(&pkt);

encoder->first_received = true;

}

/* we use system time here to ensure sync with other encoders,

* you do not want to use relative timestamps here */

pkt.dts_usec = encoder->start_ts / 1000 + packet_dts_usec(&pkt) - encoder->offset_usec;

pkt.sys_dts_usec = pkt.dts_usec;

pthread_mutex_lock(&encoder->callbacks_mutex);

for (size_t i = encoder->callbacks.num; i > 0; i--) {

struct encoder_callback *cb;

cb = encoder->callbacks.array + (i - 1);

send_packet(encoder, cb, &pkt);

}

pthread_mutex_unlock(&encoder->callbacks_mutex);

}

error:

profile_end(do_encode_name);

if (promise != NULL) {

promise->set_value(0);

}

}

void BeeObsVideoEncoder::send_packet(

struct obs_encoder *encoder,

struct encoder_callback *cb,

struct encoder_packet *packet) {

/* include SEI in first video packet */

if (encoder->info.type == OBS_ENCODER_VIDEO && !cb->sent_first_packet) {

send_first_video_packet(encoder, cb, packet);

} else if (packet->keyframe) {

//Added by HeZhen. WebRTC limitation: every key frame must begin with SPS/PPS.

send_idr_packet(encoder, cb, packet);

} else {

cb->new_packet(cb->param, packet);

}

}

void BeeObsVideoEncoder::send_first_video_packet(

struct obs_encoder *encoder,

struct encoder_callback *cb,

struct encoder_packet *packet) {

struct encoder_packet first_packet;

DARRAY(uint8_t) data;

uint8_t *sei;

size_t size;

/* always wait for first keyframe */

if (!packet->keyframe) {

return;

}

da_init(data);

//Add sps/pps first, modified by HeZhen.

uint8_t *header;

obs_encoder_get_extra_data(encoder, &header, &size);

da_push_back_array(data, header, size);

/* SEI is optional: append it only when the encoder provides one, so the

* SPS/PPS header is kept and `data` is freed on every path */

if (get_sei(encoder, &sei, &size) && sei && size) {

da_push_back_array(data, sei, size);

}

da_push_back_array(data, packet->data, packet->size);

first_packet = *packet;

first_packet.data = data.array;

first_packet.size = data.num;

cb->new_packet(cb->param, &first_packet);

cb->sent_first_packet = true;

da_free(data);

}

void BeeObsVideoEncoder::send_idr_packet(

struct obs_encoder *encoder,

struct encoder_callback *cb,

struct encoder_packet *packet) {

DARRAY(uint8_t) data;

da_init(data);

uint8_t *header;

size_t size;

obs_encoder_get_extra_data(encoder, &header, &size);

da_push_back_array(data, header, size);

da_push_back_array(data, packet->data, packet->size);

struct encoder_packet idr_packet;

idr_packet = *packet;

idr_packet.data = data.array;

idr_packet.size = data.num;

cb->new_packet(cb->param, &idr_packet);

da_free(data);

}

bool BeeObsVideoEncoder::get_sei(

const struct obs_encoder *encoder,

uint8_t **sei,

size_t *size) {

if (encoder->info.get_sei_data) {

return encoder->info.get_sei_data(encoder->context.data, sei, size);

} else {

return false;

}

}

///////////////////////////////////BeeObsVideoEncoderFactory///////////////////////////////////////

BeeObsVideoEncoderFactory::BeeObsVideoEncoderFactory() {

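//WebRTC's built-in encoder advertises 42e01f in the SDP, i.e. kProfileConstrainedBaseline + level 3.1.

//Janus negotiates only one codec, so this must be set to 42e01f, otherwise the external encoder cannot be enabled.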

cricket::VideoCodec codec(cricket::kH264CodecName);

const webrtc::H264::ProfileLevelId profile(webrtc::H264::kProfileConstrainedBaseline, webrtc::H264::kLevel3_1);

std::string s = *webrtc::H264::ProfileLevelIdToString(profile);

codec.SetParam(cricket::kH264FmtpProfileLevelId, *webrtc::H264::ProfileLevelIdToString(profile));

codec.SetParam(cricket::kH264FmtpLevelAsymmetryAllowed, "1");

codec.SetParam(cricket::kH264FmtpPacketizationMode, "1");

supported_codecs_.push_back(codec);

}

BeeObsVideoEncoderFactory::~BeeObsVideoEncoderFactory() {

}

webrtc::VideoEncoder* BeeObsVideoEncoderFactory::CreateVideoEncoder(const cricket::VideoCodec& codec) {

return new BeeObsVideoEncoder();

}

const std::vector<cricket::VideoCodec>& BeeObsVideoEncoderFactory::supported_codecs() const {

return supported_codecs_;

}

void BeeObsVideoEncoderFactory::DestroyVideoEncoder(webrtc::VideoEncoder* encoder) {

delete encoder;

}

} // namespace bee_obs
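The WebRTC-facing methods above (InitEncode, RegisterEncodeCompleteCallback, Release) all follow one pattern: post the real work to the codec thread, then block on a future until it finishes. The sketch below shows that pattern in isolation. It is only an illustration: `TaskThread` and `RunSync` are hypothetical names, and a plain `std::thread` queue stands in for the boost-based IOService used in the real code.

```cpp
#include <condition_variable>
#include <cstdint>
#include <functional>
#include <future>
#include <memory>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>

// Hypothetical stand-in for IOService: one worker thread that runs
// posted tasks in FIFO order.
class TaskThread {
 public:
  TaskThread() : done_(false), worker_([this] { Run(); }) {}

  ~TaskThread() {
    {
      std::lock_guard<std::mutex> lock(mutex_);
      done_ = true;
    }
    cv_.notify_one();
    worker_.join();
  }

  void Post(std::function<void()> task) {
    {
      std::lock_guard<std::mutex> lock(mutex_);
      tasks_.push(std::move(task));
    }
    cv_.notify_one();
  }

 private:
  void Run() {
    for (;;) {
      std::function<void()> task;
      {
        std::unique_lock<std::mutex> lock(mutex_);
        cv_.wait(lock, [this] { return done_ || !tasks_.empty(); });
        if (tasks_.empty()) return;  // shutting down and nothing left to run
        task = std::move(tasks_.front());
        tasks_.pop();
      }
      task();  // run outside the lock so tasks may post further tasks
    }
  }

  std::mutex mutex_;
  std::condition_variable cv_;
  std::queue<std::function<void()> > tasks_;
  bool done_;
  std::thread worker_;  // declared last so it starts after the other members
};

// Runs `work` on the task thread and blocks the caller until it returns,
// mirroring the promise/future hand-off used by InitEncode() above.
int32_t RunSync(TaskThread& thread, std::function<int32_t()> work) {
  auto promise = std::make_shared<std::promise<int32_t> >();
  std::future<int32_t> future = promise->get_future();
  thread.Post([promise, work] { promise->set_value(work()); });
  return future.get();
}
```

Because the caller blocks in `future.get()`, it is safe to hand the worker pointers to stack data, which is what obs_encode() relies on when it passes `&enc_frame` to do_encode(). The trade-off is that calling `RunSync` from the worker thread itself would deadlock.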

2.6.2 Some details

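- B-frames must be disabled. WebRTC does not support B-frames, in order to keep latency low; the cost is a higher bitrate. If they are left enabled, the picture jumps back and forth;

obs_data_set_int(h264Settings, "bf", 0);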

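- x264 may emit filler packets. WebRTC's H.264 NALU parser reports errors on them, but they are harmless and can be ignored;

- WebRTC's built-in encoder advertises the capability 42e01f in the SDP, i.e. kProfileConstrainedBaseline + level 3.1. Janus (which this setup uses) negotiates only one codec, so the profile supported by the custom encoder factory must be set to 42e01f, otherwise the external encoder cannot be enabled;

- The OBS encoder is not called from WebRTC's encode callback here. Instead it is called directly in the raw_video callback of the custom OBS output stream, which effectively bypasses WebRTC and saves some data conversion and copying (WebRTC converts incoming NV12 data to I420 internally, so using the OBS encoder from inside WebRTC would require converting back to NV12).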
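To make the 42e01f value from the list above less opaque, here is a small sketch that splits a profile-level-id into its three bytes. The struct and function names are hypothetical and this is not WebRTC's own parser; it ignores special cases such as level 1b.

```cpp
#include <cctype>
#include <cstdint>
#include <cstdlib>
#include <string>

// The three bytes of an H.264 profile-level-id, written as six hex digits.
struct ProfileLevelId {
  uint8_t profile_idc;  // 0x42 = 66 = Baseline
  uint8_t profile_iop;  // constraint_set flags
  uint8_t level_idc;    // level times ten, e.g. 31 = level 3.1
};

// Parses a six-digit hex string; returns false on malformed input.
bool ParseProfileLevelId(const std::string& s, ProfileLevelId* out) {
  if (s.size() != 6) return false;
  for (char c : s) {
    if (!std::isxdigit(static_cast<unsigned char>(c))) return false;
  }
  const unsigned long v = std::strtoul(s.c_str(), nullptr, 16);
  out->profile_idc = static_cast<uint8_t>((v >> 16) & 0xff);
  out->profile_iop = static_cast<uint8_t>((v >> 8) & 0xff);
  out->level_idc = static_cast<uint8_t>(v & 0xff);
  return true;
}

// Constrained Baseline is profile_idc 66 with constraint_set1_flag (0x40) set.
bool IsConstrainedBaseline(const ProfileLevelId& id) {
  return id.profile_idc == 0x42 && (id.profile_iop & 0x40) != 0;
}
```

For "42e01f" this yields profile_idc 0x42, profile_iop 0xe0 and level_idc 31, which is Constrained Baseline at level 3.1, the same pair the factory passes to ProfileLevelIdToString.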

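2.6.3 Keyframe generation

If keyframes are not handled properly, the first frame may take a long time to appear, or the stream may fail to decode and play at all.

2.6.3.1 SPS/PPS handling

- SPS/PPS must be prepended to every I-frame, otherwise WebRTC cannot decode the stream;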

void BeeObsVideoEncoder::send_idr_packet(

struct obs_encoder *encoder,

struct encoder_callback *cb,

struct encoder_packet *packet) {

DARRAY(uint8_t) data;

da_init(data);

uint8_t *header;

size_t size;

obs_encoder_get_extra_data(encoder, &header, &size); //this is the SPS and PPS

da_push_back_array(data, header, size);

da_push_back_array(data, packet->data, packet->size);

struct encoder_packet idr_packet;

idr_packet = *packet;

idr_packet.data = data.array;

idr_packet.size = data.num;

cb->new_packet(cb->param, &idr_packet);

da_free(data);

}
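The effect of send_idr_packet can be checked outside OBS with a few lines of standard C++. The sketch below assumes both the extra data and the packet are Annex B formatted (start-code delimited), as the code above treats them. `PrependHeader` and `NalTypes` are hypothetical helper names, not OBS or WebRTC functions.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Returns header + idr as one buffer, the same concatenation that
// send_idr_packet builds with DARRAY.
std::vector<uint8_t> PrependHeader(const std::vector<uint8_t>& header,
                                   const std::vector<uint8_t>& idr) {
  std::vector<uint8_t> out;
  out.reserve(header.size() + idr.size());
  out.insert(out.end(), header.begin(), header.end());
  out.insert(out.end(), idr.begin(), idr.end());
  return out;
}

// Lists the nal_unit_type of every NAL unit in an Annex B buffer.
// Matching on 00 00 01 also covers the 4-byte form 00 00 00 01.
std::vector<uint8_t> NalTypes(const std::vector<uint8_t>& buf) {
  std::vector<uint8_t> types;
  for (size_t i = 0; i + 3 < buf.size(); ++i) {
    if (buf[i] == 0 && buf[i + 1] == 0 && buf[i + 2] == 1) {
      types.push_back(buf[i + 3] & 0x1f);  // low 5 bits of the NAL header
      i += 2;                              // skip the rest of the start code
    }
  }
  return types;
}
```

A keyframe packet that is ready for WebRTC should list types 7 (SPS), 8 (PPS) and then 5 (IDR slice), possibly with a type 6 (SEI) in between for the first packet.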

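2.6.3.2 When to send keyframes

The first frame must be a keyframe. Beyond that, the encoder has to respond to WebRTC's keyframe requests at any time, so that WebRTC can control the stream.

//This is the logic from the WebRTC Android build (M57), which limits how often keyframes are sent.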

bool send_key_frame = false;

++frames_received_since_last_key_;

int64_t now_ms = rtc::TimeMillis();

if (last_frame_received_ms_ != -1 &&

(now_ms - last_frame_received_ms_) > kFrameDiffThresholdMs) {

if (frames_received_since_last_key_ > kMinKeyFrameInterval) {

send_key_frame = true;

}

frames_received_since_last_key_ = 0;

}

last_frame_received_ms_ = now_ms;

//First-frame case

if (first_frame_) {

send_key_frame = true;

first_frame_ = false;

}

//request_key_frame_ holds the most recent keyframe request from WebRTC's encode callback.

send_key_frame = request_key_frame_ || send_key_frame;

request_key_frame_ = false;

struct encoder_packet pkt = { 0 };

bool received = false;

bool success;

pkt.timebase_num = encoder->timebase_num;

pkt.timebase_den = encoder->timebase_den;

pkt.encoder = encoder;

pkt.keyframe = send_key_frame;

pkt.force_keyframe = true;

……
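The decision logic in the fragment above can be pulled into a small class and exercised without an encoder. This is a sketch only: `KeyFrameDecider` is a hypothetical name, and the two constants are assumed values, since the fragment does not show them.

```cpp
#include <cstdint>

// Decides, per input frame, whether the encoder should be asked for a keyframe.
class KeyFrameDecider {
 public:
  // now_ms: arrival time of the frame.
  // requested: true if WebRTC asked for a keyframe since the last call.
  bool OnFrame(int64_t now_ms, bool requested) {
    bool send_key_frame = false;
    ++frames_received_since_last_key_;
    // After a long gap between frames, send a keyframe, but only if enough
    // frames have arrived since the counter was last reset.
    if (last_frame_received_ms_ != -1 &&
        (now_ms - last_frame_received_ms_) > kFrameDiffThresholdMs) {
      if (frames_received_since_last_key_ > kMinKeyFrameInterval) {
        send_key_frame = true;
      }
      frames_received_since_last_key_ = 0;
    }
    last_frame_received_ms_ = now_ms;
    // The very first frame is always a keyframe.
    if (first_frame_) {
      send_key_frame = true;
      first_frame_ = false;
    }
    // An explicit request (FIR/PLI) always wins.
    return send_key_frame || requested;
  }

 private:
  static constexpr int64_t kFrameDiffThresholdMs = 350;  // assumed value
  static constexpr int kMinKeyFrameInterval = 6;         // assumed value
  int64_t last_frame_received_ms_ = -1;
  int frames_received_since_last_key_ = 0;
  bool first_frame_ = true;
};
```

Passing the clock value in as a parameter, rather than calling rtc::TimeMillis() inside, keeps the class testable. The returned flag is what would be assigned to pkt.keyframe.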

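2.6.3.3 Forcing keyframes with x264

OBS's default x264 encoder cannot produce a keyframe on demand; it emits keyframes according to the keyframe interval set when the encoder is initialized. To handle WebRTC keyframe requests such as FIR and PLI, the encoder has to be modified to set the i_type parameter at encode time: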

if (packet->keyframe && packet->force_keyframe) {

pic.i_type = X264_TYPE_KEYFRAME;

}

ret = x264_encoder_encode(obsx264->context, &nals, &nal_count,

(frame ? &pic : NULL), &pic_out);

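2.6.3.3 Forcing keyframes with Intel QSV

My machine has Intel integrated graphics and uses QSV for hardware encoding. By default QSV cannot produce a keyframe on demand either; it emits keyframes according to the keyframe interval set when the encoder is initialized. To handle WebRTC keyframe requests such as FIR and PLI, the encoder has to be modified to set the FrameType parameter at encode time: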

mfxEncodeCtrl EncodeCtrl;

memset(&EncodeCtrl, 0, sizeof(mfxEncodeCtrl));

EncodeCtrl.FrameType = MFX_FRAMETYPE_I | MFX_FRAMETYPE_REF | MFX_FRAMETYPE_IDR;

sts = m_pmfxENC->EncodeFrameAsync(&EncodeCtrl, pSurface,

&m_pTaskPool[nTaskIdx].mfxBS,

&m_pTaskPool[nTaskIdx].syncp);

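In addition, the "async_depth" parameter must be set to 1 when configuring the encoder. The default is 4, in which case the first EncodeFrameAsync call returns MFX_ERR_MORE_DATA and the encoder waits for several input frames before it outputs any encoded data.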

obs_data_set_int(h264Settings, "async_depth", 1);

2.6.3.4 AMD

Not tested.

3 Code repository

The code will be uploaded to GitHub when time permits.