基于Java的阿里妈妈数据抓取技术

基于Java的阿里妈妈数据抓取技术

前言:

对于需要登录的网站爬虫最大的困难就是需要登录,然后才能获取到数据,如微博,阿里妈妈,webqq等。之前也有看过使用浏览器登录到网站后直接从浏览器中获取cookie的文章,这不失为一种解决方案,但是当cookie失效时就需要再次获取,比较麻烦,那有没有能有自动登录,然后在爬取数据的技术呢,这就是本文研究的重点,好啦不扯淡了,开始进入正题吧。

技术要点:java + Phantomjs+httpclient

思路:

1.需要借助一些工具获取登录的cookie,在做后续操作,这里就使用phantomjs来获取,其实phantomjs就是一个没有界面的浏览器,它提供了一些js的接口操作该浏览器,http://phantomjs.org/download.html这个链接就是该浏览器的链接,大家需要可以在这里下载。

2.由于phantom也可以直接发送post,get请求,但是解析起来比较慢,所以这里就只是在登录时使用该插件来获取cookie然后使用Java的httpclient来发送请求获取数据。

引入maven库:

<dependency>

<groupId>net.sourceforge.htmlunitgroupId>

<artifactId>htmlunitartifactId>

<version>2.15version>

dependency>

<dependency>

<groupId>org.apache.httpcomponentsgroupId>

<artifactId>httpclientartifactId>

<version>4.5.4version>

dependency>

<dependency>

<groupId>org.seleniumhq.seleniumgroupId>

<artifactId>selenium-javaartifactId>

<version>2.53.0version>

dependency>

<dependency>

<groupId>com.codebornegroupId>

<artifactId>phantomjsdriverartifactId>

<version>1.2.1version>

<exclusions>

<exclusion>

<groupId>org.seleniumhq.seleniumgroupId>

<artifactId>selenium-remote-driverartifactId>

exclusion>

<exclusion>

<groupId>org.seleniumhq.seleniumgroupId>

<artifactId>selenium-javaartifactId>

exclusion>

exclusions>

dependency>

编写页面处理的工具类

/***

* 页面操作的工具类

*

* @author admin

*

*/

public class PageUtils {

public static PhantomJSDriver driver = null;

public static String PhantomPath = "E:/phantomjs/bin/phantomjs.exe";

//页面中的cookie

public static Set

//页面中的token

public static String token=null;

// 初始化phantom先关的操作

static {

// 设置必要参数

DesiredCapabilities dcaps = new DesiredCapabilities();

// ssl证书支持

dcaps.setCapability("acceptSslCerts", true);

// 截屏支持

dcaps.setCapability("takesScreenshot", true);

// css搜索支持

dcaps.setCapability("cssSelectorsEnabled", true);

// js支持

dcaps.setJavascriptEnabled(true);

// 驱动支持

dcaps.setCapability(PhantomJSDriverService.PHANTOMJS_EXECUTABLE_PATH_PROPERTY, PhantomPath);

// 创建无界面浏览器对象

driver = new PhantomJSDriver(dcaps);

}

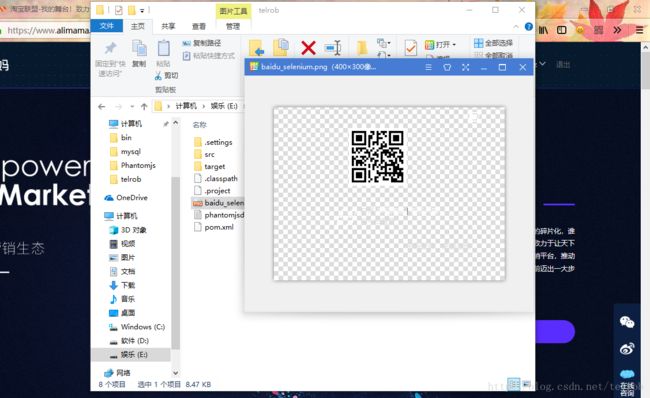

public static boolean loadLogin(String loginUrl, String mainUrl) throws Exception {

try {

driver.get(loginUrl);

File scrFile = ((TakesScreenshot) driver).getScreenshotAs(OutputType.FILE);

File file = new File("baidu_selenium.png");

FileUtils.copyFile(scrFile, file);

System.out.println("二维码已经保存为:" + file.getAbsolutePath());

System.out.println("请打开淘宝扫描该图片登录");

String current = "";

while (!current.equals(mainUrl)) {

current = driver.getCurrentUrl();

Thread.sleep(2000);

}

System.out.println("登录成功");

return true;

} catch (Exception e) {

//当出现异常时关闭

//driver.close();

//driver.quit();

return false;

}

}

//开启一个线程,定时访问页面,防止页面太久没有操作导致session超时

public static void keepCookies() {

new Thread() {

public void run() {

//淘宝联盟页面

String url="http://pub.alimama.com/myunion.htm";

while(true) {

try {

driver.get(url);

cookies=driver.manage().getCookies();

Thread.sleep(8*1000);

} catch (InterruptedException e) {

}

}

};

}.start();

}

//获取token

public static String getToken() {

//等待cookie中有数据

while(cookies==null) {

try {

Thread.sleep(1*1000);

} catch (InterruptedException e) {

e.printStackTrace();

}

}

for(Cookie cook:cookies) {

String name=cook.getName();

if(name!=null&&name.equals("_tb_token_")) {

return token= cook.getValue();

}

}

return token;

}

/**

* 封装get请求

* @param url

* @return

*/

public static String httpGet(String url) {

try {

//获取cookie字符串

String cookieStr="";

for(Cookie cookie:cookies) {

cookieStr+=cookie.getName()+"="+cookie.getValue()+";";

}

// 根据地址获取请求

HttpGet request = new HttpGet(url);//这里发送get请求

//添加请求头

request.addHeader("Pragma", "no-cache");

//设置浏览器类型

request.addHeader("User-Agent", "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/538.1 (KHTML, like Gecko) PhantomJS/2.1.1 Safari/538.1");

request.addHeader("Accept-Language", "zh-CN,en,*");

//设置cookie

request.addHeader("Cookie", cookieStr);

// 获取当前客户端对象

HttpClient httpClient = HttpClients.custom().build();

// 通过请求对象获取响应对象

HttpResponse response = httpClient.execute(request);

if (response.getStatusLine().getStatusCode() == HttpStatus.SC_OK) {

return EntityUtils.toString(response.getEntity(),"utf-8");

}

} catch (Exception e) {

e.printStackTrace();

}

return null;

}

/**

* 封装post请求

* @param url

* @return

*/

public static String httpPost(String url) {

try {

//获取cookie字符串

String cookieStr="";

for(Cookie cookie:cookies) {

cookieStr+=cookie.getName()+"="+cookie.getValue()+";";

}

// 根据地址获取请求

HttpPost request = new HttpPost(url);//这里发送get请求

//添加请求头

request.addHeader("Pragma", "no-cache");

//设置浏览器类型

request.addHeader("User-Agent", "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/538.1 (KHTML, like Gecko) PhantomJS/2.1.1 Safari/538.1");

request.addHeader("Accept-Language", "zh-CN,en,*");

//设置cookie

request.addHeader("Cookie", cookieStr);

// 获取当前客户端对象

HttpClient httpClient = HttpClients.custom().build();

// 通过请求对象获取响应对象

HttpResponse response = httpClient.execute(request);

if (response.getStatusLine().getStatusCode() == HttpStatus.SC_OK) {

return EntityUtils.toString(response.getEntity(),"utf-8");

}

} catch (Exception e) {

e.printStackTrace();

}

return null;

}

/**

* 获取导购推广位

* @param time

* @param pvid

* @param token

* @return

*/

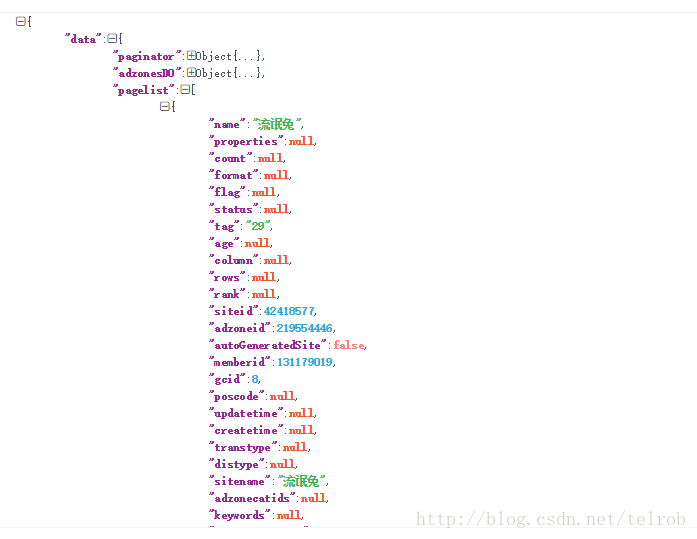

public static String getShoppingGuide(String pvid,String token) {

String url="https://pub.alimama.com/common/adzone/adzoneManage.json?"

+ "spm=a219t.7900221/1.1998910419.dbb742793.5bcb8cdcYsmdCb&"

+ "tab=3&"

+ "toPage=1&"

+ "perPageSize=40&"

+ "gcid=8&"

+ "t="+getCirrentTime()+"&"

+ "pvid="+pvid+"&"

//"pvid=60_59.172.110.203_862_1517744052508&"

+ "_tb_token_="+token+"&"

+ "_input_charset=utf-8";

return httpGet(url);

}

//获取当前的时间戳

public static long getCirrentTime() {

return System.currentTimeMillis();

}

/**

* 获取1000以内的随机数

* @return

*/

public static int getRandom() {

Random random=new Random();

int result=(int)(random.nextFloat()*1000);

return result;

}

}

编写主类:

public class Test {

public static Gson gson=new Gson();

public static void main(String[] args) {

//阿里妈妈录页面

String taobao="https://login.taobao.com/member/login.jhtml?style=mini&newMini2=true&css_style=alimama_index&from=alimama&redirectURL=http://www.alimama.com&full_redirect=true&disableQuickLogin=true";

//阿里妈妈主页面

String loginUrl="https://www.alimama.com/index.htm";

//获取个人信息的接口

String userUrl="http://pub.alimama.com/common/getUnionPubContextInfo.json";

try {

PageUtils.loadLogin(taobao, loginUrl);

PageUtils.keepCookies();

String token=PageUtils.getToken();

System.out.println("token:"+token);

String result=PageUtils.httpGet(userUrl);

LoginInfo info=gson.fromJson(result, LoginInfo.class);

System.out.println("获取个人信息:"+info);

//格式60_59.172.110.203_862_1517744052508

String pvid="60"

+"_"+info.getData().getIp()

+"_"+PageUtils.getRandom()

+"_"+PageUtils.getCirrentTime();

//获取导购推广位

String shopping=PageUtils.getShoppingGuide(pvid,PageUtils.getToken());

System.out.println("导购位信息:"+shopping);

} catch (Exception e) {

e.printStackTrace();

}

}

效果展示:

总结:通过本次爬虫也对http中cookie有了一定的了解,同时一些细节没有直接展示出来,但是代码中会有对一些躺过的坑作出解释,希望能够帮助到大家。