[CEPH] Getting Started with Object Storage: Concepts, Basic Commands, and Source Code Analysis

Contents

Essential differences between the three storage types

Why object storage?

Core concepts

User

Bucket

Object

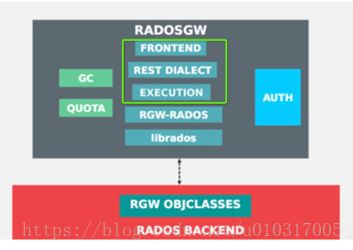

Architecture

HTTP-RGW IO path

RGW-RADOS IO stack

FRONTEND

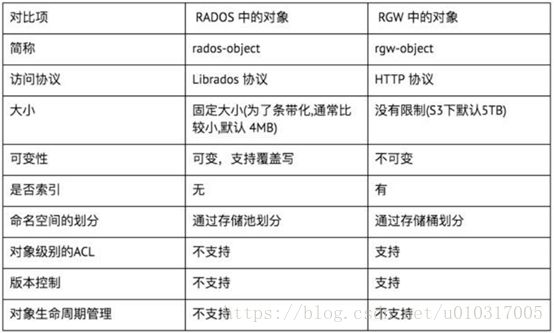

RADOS objects vs. RGW objects

What each pool is for

.rgw.root

.rgw.control

.rgw

.rgw.gc

.users.uid

.users

.rgw.buckets.index

.rgw.buckets

Basic commands

List all users

Show user information

Create a user

Create a subuser

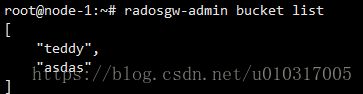

List all buckets

List objects in a bucket

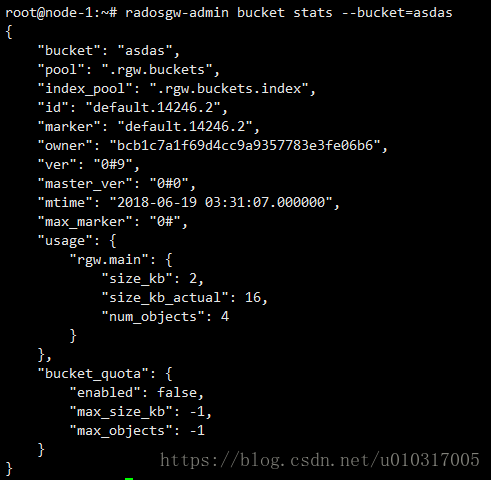

Show bucket information

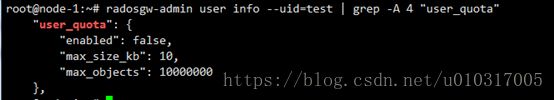

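Show quota

Update quota

Enable/disable quota

Full command usage

Analysis of the object upload flow

Resolving the operation

The concrete operation

Operation pre-processing

Operation execution

Selecting the processor

Checking quota

Processor handles the data

Processor finalization

Operation finalization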

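Essential differences between the three storage types

Object storage: essentially a key-value store in the usual sense. Its interface consists of simple GET, PUT and DEL operations plus some extensions. Common object storage offerings include Qiniu, Upyun, OpenStack Swift and Amazon S3. Its performance sits between that of the other two types.

Block storage: this interface usually comes as a QEMU driver or a kernel module, and it has to implement the Linux block device interface or the block driver interface provided by QEMU. Implementations include Sheepdog, AWS EBS, QingCloud's cloud disks, Alibaba Cloud's Pangu, and Ceph's RBD (RBD is Ceph's block storage interface); disk arrays can be used to increase speed. This kind of storage reads and writes quickly but is poorly suited to sharing.

File storage: usually means an interface that supports POSIX. It is the same kind of thing as a traditional file system such as Ext4, except that distributed storage adds parallelism, for example Ceph's CephFS (CephFS is Ceph's file storage interface). File-like storage with non-POSIX interfaces, such as GFS and HDFS, is sometimes placed in this category as well. This kind of storage reads and writes relatively slowly but is easy to share.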
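The object storage interface described first in this list boils down to a whole-object key-value API. The sketch below is a toy in-memory model; the class name `ToyObjectStore` is hypothetical and is not an RGW, S3 or Swift type. It only illustrates the GET/PUT/DEL semantics: a PUT replaces the entire object, with no partial in-place write as on a block device.

```cpp
#include <map>
#include <optional>
#include <string>

// Toy model of an object store interface (hypothetical class, illustration only).
// Real services such as S3 or Swift add buckets, metadata, auth and HTTP on top.
class ToyObjectStore {
public:
    // PUT replaces the whole object; there is no partial, in-place write.
    void put(const std::string& key, const std::string& data) { objects_[key] = data; }

    // GET returns the whole object, or nothing if the key is absent.
    std::optional<std::string> get(const std::string& key) const {
        auto it = objects_.find(key);
        if (it == objects_.end()) return std::nullopt;
        return it->second;
    }

    // DEL removes the object; returns false if it did not exist.
    bool del(const std::string& key) { return objects_.erase(key) > 0; }

private:
    std::map<std::string, std::string> objects_;
};
```

Overwriting a key with `put` simply swaps in the new value, which is why object stores are a natural fit for write-once, read-many data.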

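Why object storage?

First, a file consists of attributes (the metadata, such as the file's size, modification time and storage path) and content (hereafter simply "data").

A file system such as FAT32, for example, stores a file's data as a linked list of clusters: each entry in the file allocation table points to the next cluster of the file.

Object storage, by contrast, separates the metadata out. The control node is called the metadata server (a server plus object storage management software). It mainly stores object attributes, chiefly the information about which distributed servers an object's data has been spread across. The other distributed servers, which store the data, are called OSDs and are responsible for the data part of files. When a user accesses an object, it first contacts the metadata server, which only reports which OSDs hold the object. If it reports that file A is stored on the three OSDs B, C and D, the user then reads the data directly from those three OSD servers.

Because the three OSDs send data at the same time, the transfer is faster, and the more OSD servers there are, the larger this speed-up becomes. This is how object storage achieves fast reads and writes.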
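The read path just described (ask the metadata server where the pieces live, then read from several OSDs at once) can be sketched with `std::async`. Everything here is hypothetical: the `MetadataServer` map, the in-memory `Osd` maps and the fixed striping are stand-ins. Note also that Ceph's RADOS computes object placement with the CRUSH algorithm rather than querying a central metadata server; the sketch follows the generic model in the text.

```cpp
#include <future>
#include <map>
#include <string>
#include <vector>

// One stripe of an object: which OSD holds it and under which key.
struct StripeLocation {
    int osd_id;
    std::string stripe_key;
};

// Hypothetical in-memory "OSD": stripe_key -> bytes.
using Osd = std::map<std::string, std::string>;

// Hypothetical metadata server: object name -> ordered stripe locations.
using MetadataServer = std::map<std::string, std::vector<StripeLocation>>;

// Look up where the stripes live, fetch them concurrently, reassemble in order.
std::string read_object(const MetadataServer& mds, const std::vector<Osd>& osds,
                        const std::string& name) {
    const auto& locations = mds.at(name);  // step 1: ask the metadata server
    std::vector<std::future<std::string>> pending;
    for (const auto& loc : locations) {
        // step 2: one concurrent read per stripe, each from its own OSD
        pending.push_back(std::async(std::launch::async, [&osds, loc] {
            return osds.at(loc.osd_id).at(loc.stripe_key);
        }));
    }
    std::string result;
    for (auto& f : pending) result += f.get();  // step 3: concatenate in order
    return result;
}
```

With three OSDs each holding one stripe of object `A`, the three reads overlap in time, which is the speed-up the paragraph above describes.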

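On the other hand, object storage software has its own dedicated file system, so to the outside world an OSD behaves like a file server. There is therefore no difficulty in sharing files, and the file-sharing problem is solved as well.

Object storage thus combines the strengths of block storage and file storage.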

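Core concepts

User

The consumer of the object storage service and the owner of buckets.

Bucket

A container that holds objects.

Object

The file a user actually uploads.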
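The three concepts nest: a user owns buckets, and a bucket contains objects. A minimal data-model sketch; all type, field and function names are illustrative and are not RGW's internal structures. As a simplification, bucket names are unique only per user here, whereas S3 requires them to be unique across all users.

```cpp
#include <map>
#include <string>

struct Object {                                   // what the user actually uploaded
    std::string data;
    std::map<std::string, std::string> metadata;  // e.g. content type
};

struct Bucket {                                   // container for objects, owned by one user
    std::string owner_uid;
    std::map<std::string, Object> objects;        // object name -> object
};

struct User {                                     // consumer of the service, owner of buckets
    std::string uid;
    std::map<std::string, Bucket> buckets;        // bucket name -> bucket
};

// Create a bucket owned by `user`; fails if the user already has that name.
bool create_bucket(User& user, const std::string& name) {
    return user.buckets.emplace(name, Bucket{user.uid, {}}).second;
}

// Store an object inside one of the user's buckets.
bool put_object(User& user, const std::string& bucket, const std::string& key,
                const std::string& data) {
    auto it = user.buckets.find(bucket);
    if (it == user.buckets.end()) return false;   // no such bucket
    it->second.objects[key] = Object{data, {}};
    return true;
}
```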

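Architecture

HTTP-RGW IO path

RGW-RADOS IO stack

FRONTEND

- Civetweb (an embeddable HTTP server library written in C, with a C++ wrapper)
- Loadgen (for testing only; it does not handle data IO)
- FCGI (FastCGI; runs behind Apache through its FastCGI module)
- Others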
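Which frontend runs is chosen by the `rgw_frontends` option, for example `civetweb port=7480`. The main() function quoted in the upload-flow section splits that option on commas, takes the framework name from each entry, and reads `port` with a default of 80. A standalone sketch of that parsing, assuming a simple space-separated `key=value` format; `FrontendConfig` and `parse_frontend` are hypothetical names, not the real `RGWFrontendConfig` API.

```cpp
#include <map>
#include <sstream>
#include <string>

// Hypothetical stand-in for one parsed rgw_frontends entry.
struct FrontendConfig {
    std::string framework;                      // "civetweb", "fastcgi", ...
    std::map<std::string, std::string> params;  // key=value pairs after the name

    // Same idea as get_val("port", 80, &port): fall back to a default.
    int get_int(const std::string& key, int def) const {
        auto it = params.find(key);
        return it == params.end() ? def : std::stoi(it->second);
    }
};

// Parse "framework key1=val1 key2=val2" (space separated).
FrontendConfig parse_frontend(const std::string& entry) {
    FrontendConfig cfg;
    std::istringstream in(entry);
    in >> cfg.framework;
    std::string token;
    while (in >> token) {
        auto eq = token.find('=');
        if (eq == std::string::npos) cfg.params[token] = "";  // bare flag
        else cfg.params[token.substr(0, eq)] = token.substr(eq + 1);
    }
    return cfg;
}
```

For instance, `parse_frontend("civetweb port=7480")` gives framework `civetweb` and port 7480, while a bare `fastcgi` entry falls back to port 80.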

RADOS objects vs. RGW objects

What each pool is for

Object storage uses two kinds of pool: pools that hold metadata, such as .rgw.buckets.index and .users.uid, and the pool that holds data, .rgw.buckets.

.rgw.root

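Region and zone information.

.rgw.control

When RGW starts up, it creates a number of objects in the control pool for watch-notify. Their main purpose is to keep data consistent when one zone is served by several RGW instances with caching enabled. The mechanism relies on the object watch-notify feature provided by librados: when data is updated, the other RGW instances are notified to refresh their caches. The RGW cache will be covered in a separate article.

.rgw

Bucket attributes such as the container name, ownership information (project ID + project name) and read/write permissions.

.rgw.gc

RGW usually deletes the data of large files in the background; this pool records the file objects waiting to be deleted.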
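Deferred deletion means that deleting an object only records its data chunks as GC entries, and a background pass removes them once a grace period has passed; `gc list` and `gc process` in the radosgw-admin help further down expose this. A toy model with hypothetical names and an integer clock in place of real time; it mirrors the help text's behavior of listing only expired entries unless `--include-all` is given.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// One pending deletion: a data chunk plus the time after which it may go.
struct GcEntry {
    std::string chunk_oid;
    long expire_at;  // seconds on a toy clock
};

class ToyGc {
public:
    explicit ToyGc(long min_wait) : min_wait_(min_wait) {}

    // Called when an object is deleted: defer removal of its data chunks.
    void defer(const std::vector<std::string>& chunks, long now) {
        for (const auto& c : chunks) queue_.push_back({c, now + min_wait_});
    }

    // Like `gc list`: only expired entries by default, or all of them.
    std::vector<GcEntry> list(long now, bool include_all = false) const {
        std::vector<GcEntry> out;
        for (const auto& e : queue_)
            if (include_all || e.expire_at <= now) out.push_back(e);
        return out;
    }

    // Like `gc process`: purge expired entries, return how many were removed.
    std::size_t process(long now) {
        std::size_t before = queue_.size();
        std::vector<GcEntry> keep;
        for (const auto& e : queue_)
            if (e.expire_at > now) keep.push_back(e);
        queue_.swap(keep);
        return before - queue_.size();
    }

private:
    long min_wait_;
    std::vector<GcEntry> queue_;
};
```

Deferring the work keeps the DELETE request itself fast, since removing many data chunks of a large object can take a while.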

.users.uid

User IDs; by default a user ID is the project ID.

.users

User information.

.rgw.buckets.index

The bucket index; index objects are named in the form .dir.
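Conceptually, a bucket index is a sorted map from object name to a small entry (size, ETag and so on), which is what makes prefix- and marker-based listing cheap. In RGW the index lives in the omap of the index objects and is updated through a RADOS object class; the sketch below only models the sorted-map idea, and its entry fields and function names are assumptions.

```cpp
#include <cstddef>
#include <cstdint>
#include <map>
#include <string>
#include <vector>

// Illustrative index entry; field names are assumptions.
struct IndexEntry {
    std::uint64_t size;
    std::string etag;
};

using BucketIndex = std::map<std::string, IndexEntry>;  // kept sorted by name

// Return up to max_keys names that start with `prefix` and sort after `marker`.
std::vector<std::string> list_objects(const BucketIndex& index, const std::string& prefix,
                                      const std::string& marker, std::size_t max_keys) {
    std::vector<std::string> out;
    // Start at whichever comes later: the prefix, or just past the marker.
    auto it = (marker.empty() || marker < prefix) ? index.lower_bound(prefix)
                                                  : index.upper_bound(marker);
    for (; it != index.end() && out.size() < max_keys; ++it) {
        if (it->first.compare(0, prefix.size(), prefix) != 0) break;  // left the prefix range
        out.push_back(it->first);
    }
    return out;
}
```

Paging works by passing the last name of one page as the `marker` of the next call.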

.rgw.buckets

All objects in the containers, together with object names, ACLs and similar information.

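Basic commands

List all users

Show user information

Create a user

Create a subuser

Create a Swift subuser

List all buckets

List objects in a bucket

Show bucket information

Show quota

Update quota

Enable/disable quota

Full command usage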

```text
usage: radosgw-admin [options...]
commands:
  user create                create a new user
  user modify                modify user
  user info                  get user info
  user rm                    remove user
  user suspend               suspend a user
  user enable                re-enable user after suspension
  user check                 check user info
  user stats                 show user stats as accounted by quota subsystem
  caps add                   add user capabilities
  caps rm                    remove user capabilities
  subuser create             create a new subuser
  subuser modify             modify subuser
  subuser rm                 remove subuser
  key create                 create access key
  key rm                     remove access key
  bucket list                list buckets
  bucket link                link bucket to specified user
  bucket unlink              unlink bucket from specified user
  bucket stats               returns bucket statistics
  bucket rm                  remove bucket
  bucket check               check bucket index
  object rm                  remove object
  object unlink              unlink object from bucket index
  quota set                  set quota params
  quota enable               enable quota
  quota disable              disable quota
  region get                 show region info
  regions list               list all regions set on this cluster
  region set                 set region info (requires infile)
  region default             set default region
  region-map get             show region-map
  region-map set             set region-map (requires infile)
  zone get                   show zone cluster params
  zone set                   set zone cluster params (requires infile)
  zone list                  list all zones set on this cluster
  pool add                   add an existing pool for data placement
  pool rm                    remove an existing pool from data placement set
  pools list                 list placement active set
  policy                     read bucket/object policy
  log list                   list log objects
  log show                   dump a log from specific object or (bucket + date
                             + bucket-id)
  log rm                     remove log object
  usage show                 show usage (by user, date range)
  usage trim                 trim usage (by user, date range)
  temp remove                remove temporary objects that were created up to
                             specified date (and optional time)
  gc list                    dump expired garbage collection objects (specify
                             --include-all to list all entries, including unexpired)
  gc process                 manually process garbage
  metadata get               get metadata info
  metadata put               put metadata info
  metadata rm                remove metadata info
  metadata list              list metadata info
  mdlog list                 list metadata log
  mdlog trim                 trim metadata log
  bilog list                 list bucket index log
  bilog trim                 trim bucket index log (use start-marker, end-marker)
  datalog list               list data log
  datalog trim               trim data log
  opstate list               list stateful operations entries (use client_id,
                             op_id, object)
  opstate set                set state on an entry (use client_id, op_id, object, state)
  opstate renew              renew state on an entry (use client_id, op_id, object)
  opstate rm                 remove entry (use client_id, op_id, object)
  replicalog get             get replica metadata log entry
  replicalog update          update replica metadata log entry
  replicalog delete          delete replica metadata log entry
options:
   --uid=                    user id
   --subuser=                subuser name
   --access-key=             S3 access key
   --email=
   --secret=                 specify secret key
   --gen-access-key          generate random access key (for S3)
   --gen-secret              generate random secret key
   --key-type=               key type, options are: swift, s3
   --temp-url-key[-2]=       temp url key
   --access=                 Set access permissions for sub-user, should be one
                             of read, write, readwrite, full
   --display-name=
   --max_buckets             max number of buckets for a user
   --system                  set the system flag on the user
   --bucket=
   --pool=
   --object=
```

Analysis of the object upload flow

The entry point is in rgw_main.cc, line 1014:

```cpp
/*
 * start up the RADOS connection and then handle HTTP messages as they come in
 */
int main(int argc, const char **argv)
{
  // dout() messages will be sent to stderr, but FCGX wants messages on stdout
  // Redirect stderr to stdout.
  TEMP_FAILURE_RETRY(close(STDERR_FILENO));
  if (TEMP_FAILURE_RETRY(dup2(STDOUT_FILENO, STDERR_FILENO) < 0)) {
    int err = errno;
    cout << "failed to redirect stderr to stdout: " << cpp_strerror(err)
         << std::endl;
    return ENOSYS;
  }

  /* alternative default for module */
  vector<const char *> def_args;
  def_args.push_back("--debug-rgw=1/5");
  def_args.push_back("--keyring=$rgw_data/keyring");
  def_args.push_back("--log-file=/var/log/radosgw/$cluster-$name.log");

  vector<const char *> args;
  argv_to_vec(argc, argv, args);
  env_to_vec(args);
  global_init(&def_args, args, CEPH_ENTITY_TYPE_CLIENT, CODE_ENVIRONMENT_DAEMON,
              CINIT_FLAG_UNPRIVILEGED_DAEMON_DEFAULTS);

  for (std::vector<const char *>::iterator i = args.begin(); i != args.end(); ++i) {
    if (ceph_argparse_flag(args, i, "-h", "--help", (char*)NULL)) {
      usage();
      return 0;
    }
  }

  check_curl();

  if (g_conf->daemonize) {
    global_init_daemonize(g_ceph_context, 0);
  }

  // Watchdog: C_InitTimeout fires if start-up exceeds rgw_init_timeout.
  Mutex mutex("main");
  SafeTimer init_timer(g_ceph_context, mutex);
  init_timer.init();
  mutex.Lock();
  init_timer.add_event_after(g_conf->rgw_init_timeout, new C_InitTimeout);
  mutex.Unlock();

  common_init_finish(g_ceph_context);
  rgw_tools_init(g_ceph_context);
  rgw_init_resolver();
  curl_global_init(CURL_GLOBAL_ALL);
  FCGX_Init();

  // Set up the RADOS-backed store used by every request handler.
  int r = 0;
  RGWRados *store = RGWStoreManager::get_storage(g_ceph_context,
      g_conf->rgw_enable_gc_threads, g_conf->rgw_enable_quota_threads);
  if (!store) {
    mutex.Lock();
    init_timer.cancel_all_events();
    init_timer.shutdown();
    mutex.Unlock();

    derr << "Couldn't init storage provider (RADOS)" << dendl;
    return EIO;
  }

  r = rgw_perf_start(g_ceph_context);

  rgw_rest_init(g_ceph_context, store->region);

  mutex.Lock();
  init_timer.cancel_all_events();
  init_timer.shutdown();
  mutex.Unlock();

  if (r)
    return 1;

  rgw_user_init(store);
  rgw_bucket_init(store->meta_mgr);
  rgw_log_usage_init(g_ceph_context, store);

  // Register a REST manager for each API listed in rgw_enable_apis.
  RGWREST rest;

  list<string> apis;
  bool do_swift = false;

  get_str_list(g_conf->rgw_enable_apis, apis);

  map<string, bool> apis_map;
  for (list<string>::iterator li = apis.begin(); li != apis.end(); ++li) {
    apis_map[*li] = true;
  }

  if (apis_map.count("s3") > 0)
    rest.register_default_mgr(set_logging(new RGWRESTMgr_S3));

  if (apis_map.count("swift") > 0) {
    do_swift = true;
    swift_init(g_ceph_context);
    rest.register_resource(g_conf->rgw_swift_url_prefix, set_logging(new RGWRESTMgr_SWIFT));
  }

  if (apis_map.count("swift_auth") > 0)
    rest.register_resource(g_conf->rgw_swift_auth_entry, set_logging(new RGWRESTMgr_SWIFT_Auth));

  if (apis_map.count("admin") > 0) {
    RGWRESTMgr_Admin *admin_resource = new RGWRESTMgr_Admin;
    admin_resource->register_resource("usage", new RGWRESTMgr_Usage);
    admin_resource->register_resource("user", new RGWRESTMgr_User);
    admin_resource->register_resource("bucket", new RGWRESTMgr_Bucket);

    /*Registering resource for /admin/metadata */
    admin_resource->register_resource("metadata", new RGWRESTMgr_Metadata);
    admin_resource->register_resource("log", new RGWRESTMgr_Log);
    admin_resource->register_resource("opstate", new RGWRESTMgr_Opstate);
    admin_resource->register_resource("replica_log", new RGWRESTMgr_ReplicaLog);
    admin_resource->register_resource("config", new RGWRESTMgr_Config);
    rest.register_resource(g_conf->rgw_admin_entry, admin_resource);
  }

  OpsLogSocket *olog = NULL;

  if (!g_conf->rgw_ops_log_socket_path.empty()) {
    olog = new OpsLogSocket(g_ceph_context, g_conf->rgw_ops_log_data_backlog);
    olog->init(g_conf->rgw_ops_log_socket_path);
  }

  r = signal_fd_init();
  if (r < 0) {
    derr << "ERROR: unable to initialize signal fds" << dendl;
    exit(1);
  }

  init_async_signal_handler();
  register_async_signal_handler(SIGHUP, sighup_handler);
  register_async_signal_handler(SIGTERM, handle_sigterm);
  register_async_signal_handler(SIGINT, handle_sigterm);
  register_async_signal_handler(SIGUSR1, handle_sigterm);
  sighandler_alrm = signal(SIGALRM, godown_alarm);

  // Parse rgw_frontends (comma separated), defaulting to fastcgi.
  list<string> frontends;
  get_str_list(g_conf->rgw_frontends, ",", frontends);
  multimap<string, RGWFrontendConfig *> fe_map;
  list<RGWFrontendConfig *> configs;
  if (frontends.empty()) {
    frontends.push_back("fastcgi");
  }
  for (list<string>::iterator iter = frontends.begin(); iter != frontends.end(); ++iter) {
    string& f = *iter;

    RGWFrontendConfig *config = new RGWFrontendConfig(f);
    int r = config->init();
    if (r < 0) {
      cerr << "ERROR: failed to init config: " << f << std::endl;
      return EINVAL;
    }

    configs.push_back(config);

    string framework = config->get_framework();
    fe_map.insert(pair<string, RGWFrontendConfig *>(framework, config));
  }

  // Create and start one frontend per configured framework.
  list<RGWFrontend *> fes;

  for (multimap<string, RGWFrontendConfig *>::iterator fiter = fe_map.begin(); fiter != fe_map.end(); ++fiter) {
    RGWFrontendConfig *config = fiter->second;
    string framework = config->get_framework();
    RGWFrontend *fe;
    if (framework == "fastcgi" || framework == "fcgi") {
      RGWProcessEnv fcgi_pe = { store, &rest, olog, 0 };

      fe = new RGWFCGXFrontend(fcgi_pe, config);
    } else if (framework == "civetweb" || framework == "mongoose") {
      int port;
      config->get_val("port", 80, &port);

      RGWProcessEnv env = { store, &rest, olog, port };

      fe = new RGWMongooseFrontend(env, config);
    } else if (framework == "loadgen") {
      int port;
      config->get_val("port", 80, &port);

      RGWProcessEnv env = { store, &rest, olog, port };

      fe = new RGWLoadGenFrontend(env, config);
    } else {
      dout(0) << "WARNING: skipping unknown framework: " << framework << dendl;
      continue;
    }
    dout(0) << "starting handler: " << fiter->first << dendl;
    int r = fe->init();
    if (r < 0) {
      derr << "ERROR: failed initializing frontend" << dendl;
      return -r;
    }
    fe->run();

    fes.push_back(fe);
  }

  // Block until a shutdown signal arrives, then tear everything down.
  wait_shutdown();

  derr << "shutting down" << dendl;

  for (list<RGWFrontend *>::iterator liter = fes.begin(); liter != fes.end(); ++liter) {
    RGWFrontend *fe = *liter;
    fe->stop();
  }

  for (list<RGWFrontend *>::iterator liter = fes.begin(); liter != fes.end(); ++liter) {
    RGWFrontend *fe = *liter;
    fe->join();
    delete fe;
  }

  for (list<RGWFrontendConfig *>::iterator liter = configs.begin(); liter != configs.end(); ++liter) {
    RGWFrontendConfig *fec = *liter;
    delete fec;
  }

  unregister_async_signal_handler(SIGHUP, sighup_handler);
  unregister_async_signal_handler(SIGTERM, handle_sigterm);
  unregister_async_signal_handler(SIGINT, handle_sigterm);
  unregister_async_signal_handler(SIGUSR1, handle_sigterm);
  shutdown_async_signal_handler();

  if (do_swift) {
    swift_finalize();
  }

  rgw_log_usage_finalize();

  delete olog;

  RGWStoreManager::close_storage(store);

  rgw_tools_cleanup();
  rgw_shutdown_resolver();
  curl_global_cleanup();

  rgw_perf_stop(g_ceph_context);

  dout(1) << "final shutdown" << dendl;
  g_ceph_context->put();

  signal_fd_finalize();

  return 0;
}
```

Every operation is handled in rgw_main.cc, line 537:

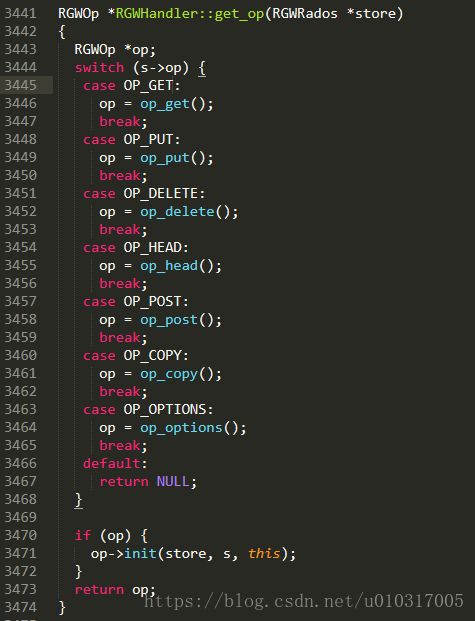
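The per-request flow traced in the rest of this section is: the handler picks an op (`get_op`), then the op runs `pre_exec()`, `execute()` and `complete()` in that order. A simplified sketch of just that control flow; `ToyOp`, `ToyPutObj` and `process_request_sketch` are hypothetical names, and the real request processing also performs authentication and permission checks between choosing the op and calling `pre_exec()`.

```cpp
#include <string>
#include <vector>

// Hypothetical op interface modeled on the three hooks named in this section.
class ToyOp {
public:
    virtual ~ToyOp() = default;
    virtual void pre_exec() = 0;   // preparation before the main work
    virtual void execute() = 0;    // the actual work (e.g. store the object)
    virtual void complete() = 0;   // send the response back to the client
};

// Records the order in which the hooks are called.
class ToyPutObj : public ToyOp {
public:
    explicit ToyPutObj(std::vector<std::string>& trace) : trace_(trace) {}
    void pre_exec() override { trace_.push_back("pre_exec"); }
    void execute() override { trace_.push_back("execute"); }
    void complete() override { trace_.push_back("complete"); }

private:
    std::vector<std::string>& trace_;
};

// Drive one op through its lifecycle in a fixed order.
void process_request_sketch(ToyOp& op) {
    // (authentication and permission checks would happen here)
    op.pre_exec();
    op.execute();
    op.complete();
}
```

Because every concrete op (put, get, delete, ...) implements the same hooks, the request loop never needs to know which operation it is running.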

解析动作

op = handler->get_op(store);

rgw_op.cc line 3441

定义在rgw_op.h line 1018

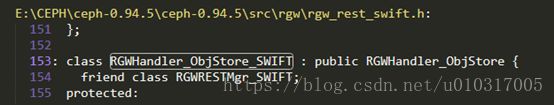

这边我们取返回对象为RGWPutObj_ObjStore_SWIFT对象继续跟踪
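Conceptually, `get_op()` dispatches on the HTTP method, and on whether the URL addresses a bucket or an object, to build the matching op object; a Swift PUT on an object is the case this article follows. A hedged sketch of that dispatch; the enum and the returned labels are illustrative only and are not RGW's class names.

```cpp
#include <string>

enum class HttpMethod { Get, Put, Delete, Head };

// Which op a request maps to depends on the method and on whether the
// URL names an object (".../bucket/object") or only a bucket.
std::string select_op(HttpMethod method, bool has_object) {
    switch (method) {
    case HttpMethod::Get:    return has_object ? "GetObj" : "ListBucket";
    case HttpMethod::Put:    return has_object ? "PutObj" : "CreateBucket";
    case HttpMethod::Delete: return has_object ? "DeleteObj" : "DeleteBucket";
    case HttpMethod::Head:   return has_object ? "StatObj" : "StatBucket";
    }
    return "Unknown";
}
```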

The concrete operation

rgw_rest_swift.h

rgw_rest.h

Operation pre-processing

op->pre_exec();

rgw_op.cc

Operation execution

op->execute();

rgw_op.cc, line 1722

```cpp
void RGWPutObj::execute()
{
  RGWPutObjProcessor *processor = NULL;
  char supplied_md5_bin[CEPH_CRYPTO_MD5_DIGESTSIZE + 1];
  char supplied_md5[CEPH_CRYPTO_MD5_DIGESTSIZE * 2 + 1];
  char calc_md5[CEPH_CRYPTO_MD5_DIGESTSIZE * 2 + 1];
  unsigned char m[CEPH_CRYPTO_MD5_DIGESTSIZE];
  MD5 hash;
  bufferlist bl, aclbl;
  map<string, bufferlist> attrs;
  int len;
  map<string, string>::iterator iter;
  bool multipart;

  bool need_calc_md5 = (obj_manifest == NULL);

  perfcounter->inc(l_rgw_put);
  ret = -EINVAL;
  if (s->object.empty()) {
    goto done;
  }

  ret = get_params();
  if (ret < 0)
    goto done;

  ret = get_system_versioning_params(s, &olh_epoch, &version_id);
  if (ret < 0) {
    goto done;
  }

  // Client-supplied Content-MD5: base64-decode it and keep a hex copy for later.
  if (supplied_md5_b64) {
    need_calc_md5 = true;

    ldout(s->cct, 15) << "supplied_md5_b64=" << supplied_md5_b64 << dendl;
    ret = ceph_unarmor(supplied_md5_bin, &supplied_md5_bin[CEPH_CRYPTO_MD5_DIGESTSIZE + 1],
                       supplied_md5_b64, supplied_md5_b64 + strlen(supplied_md5_b64));
    ldout(s->cct, 15) << "ceph_armor ret=" << ret << dendl;
    if (ret != CEPH_CRYPTO_MD5_DIGESTSIZE) {
      ret = -ERR_INVALID_DIGEST;
      goto done;
    }

    buf_to_hex((const unsigned char *)supplied_md5_bin, CEPH_CRYPTO_MD5_DIGESTSIZE, supplied_md5);
    ldout(s->cct, 15) << "supplied_md5=" << supplied_md5 << dendl;
  }

  if (!chunked_upload) { /* with chunked upload we don't know how big is the upload.
                            we also check sizes at the end anyway */
    ret = store->check_quota(s->bucket_owner.get_id(), s->bucket,
                             user_quota, bucket_quota, s->content_length);
    if (ret < 0) {
      goto done;
    }
  }

  if (supplied_etag) {
    strncpy(supplied_md5, supplied_etag, sizeof(supplied_md5) - 1);
    supplied_md5[sizeof(supplied_md5) - 1] = '\0';
  }

  // Atomic or multipart processor, depending on the request.
  processor = select_processor(*(RGWObjectCtx *)s->obj_ctx, &multipart);

  ret = processor->prepare(store, NULL);
  if (ret < 0)
    goto done;

  do {
    bufferlist data;
    len = get_data(data);
    if (len < 0) {
      ret = len;
      goto done;
    }
    if (!len)
      break;

    /* do we need this operation to be synchronous? if we're dealing with an object with immutable
     * head, e.g., multipart object we need to make sure we're the first one writing to this object
     */
    bool need_to_wait = (ofs == 0) && multipart;

    bufferlist orig_data;

    if (need_to_wait) {
      orig_data = data;
    }

    ret = put_data_and_throttle(processor, data, ofs, (need_calc_md5 ? &hash : NULL), need_to_wait);
    if (ret < 0) {
      if (!need_to_wait || ret != -EEXIST) {
        ldout(s->cct, 20) << "processor->thottle_data() returned ret=" << ret << dendl;
        goto done;
      }

      ldout(s->cct, 5) << "NOTICE: processor->throttle_data() returned -EEXIST, need to restart write" << dendl;

      /* restore original data */
      data.swap(orig_data);

      /* restart processing with different oid suffix */
      dispose_processor(processor);
      processor = select_processor(*(RGWObjectCtx *)s->obj_ctx, &multipart);

      string oid_rand;
      char buf[33];
      gen_rand_alphanumeric(store->ctx(), buf, sizeof(buf) - 1);
      oid_rand.append(buf);

      ret = processor->prepare(store, &oid_rand);
      if (ret < 0) {
        ldout(s->cct, 0) << "ERROR: processor->prepare() returned " << ret << dendl;
        goto done;
      }

      ret = put_data_and_throttle(processor, data, ofs, NULL, false);
      if (ret < 0) {
        goto done;
      }
    }

    ofs += len;
  } while (len > 0);

  // Unless chunked, the body must be exactly Content-Length bytes.
  if (!chunked_upload && ofs != s->content_length) {
    ret = -ERR_REQUEST_TIMEOUT;
    goto done;
  }
  s->obj_size = ofs;
  perfcounter->inc(l_rgw_put_b, s->obj_size);

  // Second quota check, now with the real object size.
  ret = store->check_quota(s->bucket_owner.get_id(), s->bucket,
                           user_quota, bucket_quota, s->obj_size);
  if (ret < 0) {
    goto done;
  }

  if (need_calc_md5) {
    processor->complete_hash(&hash);
    hash.Final(m);

    buf_to_hex(m, CEPH_CRYPTO_MD5_DIGESTSIZE, calc_md5);
    etag = calc_md5;

    if (supplied_md5_b64 && strcmp(calc_md5, supplied_md5)) {
      ret = -ERR_BAD_DIGEST;
      goto done;
    }
  }

  policy.encode(aclbl);

  attrs[RGW_ATTR_ACL] = aclbl;
  if (obj_manifest) {
    bufferlist manifest_bl;
    string manifest_obj_prefix;
    string manifest_bucket;

    char etag_buf[CEPH_CRYPTO_MD5_DIGESTSIZE];
    char etag_buf_str[CEPH_CRYPTO_MD5_DIGESTSIZE * 2 + 16];

    manifest_bl.append(obj_manifest, strlen(obj_manifest) + 1);
    attrs[RGW_ATTR_USER_MANIFEST] = manifest_bl;
    user_manifest_parts_hash = &hash;
    string prefix_str = obj_manifest;
    int pos = prefix_str.find('/');
    if (pos < 0) {
      ldout(s->cct, 0) << "bad user manifest, missing slash separator: " << obj_manifest << dendl;
      goto done;
    }

    manifest_bucket = prefix_str.substr(0, pos);
    manifest_obj_prefix = prefix_str.substr(pos + 1);

    hash.Final((byte *)etag_buf);
    buf_to_hex((const unsigned char *)etag_buf, CEPH_CRYPTO_MD5_DIGESTSIZE, etag_buf_str);

    ldout(s->cct, 0) << __func__ << ": calculated md5 for user manifest: " << etag_buf_str << dendl;

    etag = etag_buf_str;
  }
  if (supplied_etag && etag.compare(supplied_etag) != 0) {
    ret = -ERR_UNPROCESSABLE_ENTITY;
    goto done;
  }
  bl.append(etag.c_str(), etag.size() + 1);
  attrs[RGW_ATTR_ETAG] = bl;

  for (iter = s->generic_attrs.begin(); iter != s->generic_attrs.end(); ++iter) {
    bufferlist& attrbl = attrs[iter->first];
    const string& val = iter->second;
    attrbl.append(val.c_str(), val.size() + 1);
  }

  rgw_get_request_metadata(s->cct, s->info, attrs);

  ret = processor->complete(etag, &mtime, 0, attrs, if_match, if_nomatch);

done:
  dispose_processor(processor);
  perfcounter->tinc(l_rgw_put_lat,
                    (ceph_clock_now(s->cct) - s->time));
}
```
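The Content-MD5 handling at the top of execute() base64-decodes the header value (`ceph_unarmor`), requires exactly 16 bytes (otherwise `-ERR_INVALID_DIGEST`), and hex-encodes the result (`buf_to_hex`) so it can later be compared with the computed MD5 via `strcmp`. A self-contained sketch of those two conversions; `base64_decode`, `to_hex` and `supplied_md5_hex` are hypothetical helpers, not Ceph's functions.

```cpp
#include <optional>
#include <string>
#include <vector>

// Value of one base64 character, or -1 if it is not in the alphabet.
static int b64_value(char c) {
    if (c >= 'A' && c <= 'Z') return c - 'A';
    if (c >= 'a' && c <= 'z') return c - 'a' + 26;
    if (c >= '0' && c <= '9') return c - '0' + 52;
    if (c == '+') return 62;
    if (c == '/') return 63;
    return -1;
}

// Decode standard base64 with '=' padding; nullopt on an invalid character.
std::optional<std::vector<unsigned char>> base64_decode(const std::string& in) {
    std::vector<unsigned char> out;
    unsigned int buffer = 0;
    int bits = 0;
    for (char c : in) {
        if (c == '=') break;                      // padding ends the data
        int v = b64_value(c);
        if (v < 0) return std::nullopt;
        buffer = (buffer << 6) | static_cast<unsigned int>(v);
        bits += 6;
        if (bits >= 8) {                          // a full byte is available
            bits -= 8;
            out.push_back(static_cast<unsigned char>((buffer >> bits) & 0xFF));
            buffer &= (1u << bits) - 1;           // keep only the leftover bits
        }
    }
    return out;
}

// Lower-case hex, the form in which execute() compares digests.
std::string to_hex(const std::vector<unsigned char>& bytes) {
    static const char digits[] = "0123456789abcdef";
    std::string out;
    for (unsigned char b : bytes) {
        out.push_back(digits[b >> 4]);
        out.push_back(digits[b & 0x0F]);
    }
    return out;
}

// Mirror of the Content-MD5 check: the header must decode to exactly 16 bytes.
std::optional<std::string> supplied_md5_hex(const std::string& content_md5_b64) {
    auto raw = base64_decode(content_md5_b64);
    if (!raw || raw->size() != 16) return std::nullopt;  // ~ -ERR_INVALID_DIGEST
    return to_hex(*raw);
}
```

For an empty body, clients send `Content-MD5: 1B2M2Y8AsgTpgAmY7PhCfg==`, which this sketch turns into `d41d8cd98f00b204e9800998ecf8427e`, the hex MD5 of the empty string.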

Selecting the processor

This is the processor the upload path uses to distinguish an atomic upload from a multipart upload.

rgw_op.cc

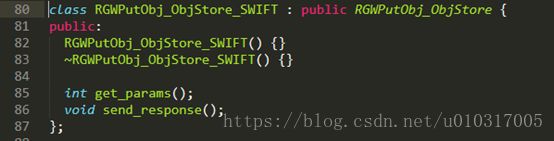

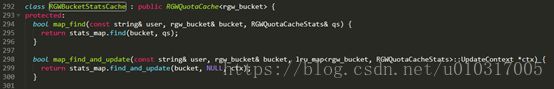
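select_processor() returns either an atomic processor (the whole object is one logical upload) or a multipart processor (the request carries one part of a multipart upload), and reports which one through its `bool *` argument. A hedged sketch, assuming the decision hinges on whether the request has a non-empty `uploadId` query parameter, as S3 part uploads (`?partNumber=N&uploadId=...`) do; all class names here are hypothetical.

```cpp
#include <map>
#include <memory>
#include <string>
#include <utility>

// Hypothetical processor interface.
class PutProcessor {
public:
    virtual ~PutProcessor() = default;
    virtual std::string kind() const = 0;
};

class AtomicProcessor : public PutProcessor {
public:
    std::string kind() const override { return "atomic"; }
};

class MultipartProcessor : public PutProcessor {
public:
    MultipartProcessor(std::string upload_id, int part_num)
        : upload_id_(std::move(upload_id)), part_num_(part_num) {}
    std::string kind() const override {
        return "multipart " + upload_id_ + " part " + std::to_string(part_num_);
    }

private:
    std::string upload_id_;
    int part_num_;
};

// Query-string arguments of the request, e.g. {"uploadId": "...", "partNumber": "3"}.
using QueryArgs = std::map<std::string, std::string>;

std::unique_ptr<PutProcessor> select_processor(const QueryArgs& args, bool* multipart) {
    auto up = args.find("uploadId");
    *multipart = (up != args.end() && !up->second.empty());
    if (!*multipart) return std::make_unique<AtomicProcessor>();
    auto pn = args.find("partNumber");
    int part = (pn == args.end()) ? 0 : std::stoi(pn->second);
    return std::make_unique<MultipartProcessor>(up->second, part);
}
```

The multipart flag matters later in execute(): only for the first write of a multipart part does the loop wait synchronously and retry with a random oid suffix on `-EEXIST`.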

Checking quota

rgw_op.cc


store is defined in rgw_op.h.

quota_handler is set up in int RGWRados::init_complete() (rgw_rados.h, line 1547).


rgw_quota.h
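execute() checks quota twice: before reading the body, using Content-Length (skipped for chunked uploads, whose size is unknown up front), and again after the data has arrived, using the real object size. The check itself compares current usage plus the new object against both the bucket quota and the bucket owner's user quota. A sketch assuming that a negative limit means "unlimited", as with `-1` for radosgw-admin's max size / max objects settings; the struct and function names are hypothetical, and the real handler returns an error code instead of a bool.

```cpp
#include <cstdint>

// Illustrative quota settings; a negative limit means "no limit".
struct Quota {
    bool enabled = false;
    std::int64_t max_size_bytes = -1;
    std::int64_t max_objects = -1;
};

// Current usage as tracked by the quota subsystem.
struct Usage {
    std::int64_t size_bytes = 0;
    std::int64_t num_objects = 0;
};

// Would adding one object of `new_size` bytes exceed this quota?
bool exceeds(const Quota& q, const Usage& u, std::int64_t new_size) {
    if (!q.enabled) return false;
    if (q.max_objects >= 0 && u.num_objects + 1 > q.max_objects) return true;
    if (q.max_size_bytes >= 0 && u.size_bytes + new_size > q.max_size_bytes) return true;
    return false;
}

// The write is allowed only if both the bucket and the user quota permit it.
bool check_quota(const Quota& user_q, const Usage& user_u,
                 const Quota& bucket_q, const Usage& bucket_u, std::int64_t new_size) {
    return !exceeds(bucket_q, bucket_u, new_size) && !exceeds(user_q, user_u, new_size);
}
```

The second check with the real size is what keeps chunked uploads honest, since their size was unknown when the first check was skipped.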

Processor handles the data
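The data loop in execute() reads the body piece by piece, hands each piece to the processor at the running offset `ofs`, stops at the first empty read, and, when a Content-Length was declared, fails with `-ERR_REQUEST_TIMEOUT` if the byte count does not match. A standalone sketch of that loop; `ChunkSource`, `receive_body` and `PutResult` are hypothetical, and the "processor" here just appends to a string (no throttling or retry).

```cpp
#include <cstddef>
#include <cstdint>
#include <string>
#include <utility>
#include <vector>

// Hypothetical body reader: returns the next chunk, or "" at end of body.
class ChunkSource {
public:
    explicit ChunkSource(std::vector<std::string> chunks) : chunks_(std::move(chunks)) {}
    std::string next() { return pos_ < chunks_.size() ? chunks_[pos_++] : std::string(); }

private:
    std::vector<std::string> chunks_;
    std::size_t pos_ = 0;
};

enum class PutResult { Ok, RequestTimeout };

// Read until an empty chunk, writing each chunk at the running offset.
// A negative content_length models a chunked upload of unknown size.
PutResult receive_body(ChunkSource& src, std::int64_t content_length, std::string& stored) {
    std::int64_t ofs = 0;
    while (true) {
        std::string data = src.next();
        if (data.empty()) break;                 // len == 0: body finished
        stored.append(data);                     // "put data" at offset ofs == stored.size()
        ofs += static_cast<std::int64_t>(data.size());
    }
    if (content_length >= 0 && ofs != content_length)
        return PutResult::RequestTimeout;        // body did not match Content-Length
    return PutResult::Ok;
}
```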

Processor finalization

rgw_op.cc


rgw_rados.cc

write_meta

rgw_rados.cc
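processor->complete() ends in write_meta, which writes the head object together with the attributes collected in `attrs` (ETag, ACL, user metadata) and records the object in the bucket index. A hedged in-memory sketch of the ordering, assuming a two-phase index update (prepare, write the head object, then complete or cancel) so that a failed write never leaves a broken entry visible in listings; all names are hypothetical, and the attribute keys "etag"/"acl" stand in for RGW's real attribute names.

```cpp
#include <map>
#include <string>

using Attrs = std::map<std::string, std::string>;  // stand-in for the xattr map

struct ToyBucket {
    std::map<std::string, Attrs> head_objects;     // object name -> head attrs
    std::map<std::string, std::string> index;      // committed entries: name -> etag
    std::map<std::string, int> pending;            // in-flight writes per name
};

// Two-phase metadata write. `head_write_ok` simulates the result of the
// head-object write; the caller must supply an "etag" attribute.
bool write_meta(ToyBucket& b, const std::string& name, const Attrs& attrs, bool head_write_ok) {
    ++b.pending[name];                             // phase 1: prepare the index entry
    bool ok = head_write_ok;
    if (ok) {
        b.head_objects[name] = attrs;              // head object with ETag/ACL attrs
        b.index[name] = attrs.at("etag");          // phase 2: complete, now listable
    }
    if (--b.pending[name] == 0) b.pending.erase(name);  // complete or cancel clears it
    return ok;
}
```

On failure only the pending marker is dropped, so an overwrite that fails leaves the previous version's index entry and attributes untouched.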

Update quota

For the detailed logic, download the resource at the following link and open it with Visio:

https://download.csdn.net/download/u010317005/10507538

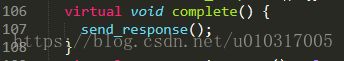

Operation finalization

op->complete();

rgw_op.h

Ceph version used: 0.94.5