Another way to schedule Spark jobs in Hue/Oozie

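At work we use Hue to configure Oozie workflow scheduling and the dependencies between tasks. The input of our Spark job is a table produced by Hive SQL. Because of several constraints in our environment, configuring the Spark job ran into many problems and it would not execute correctly. Oozie supports configuring and running both Spark jobs and shell jobs, so I tried the approaches below.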

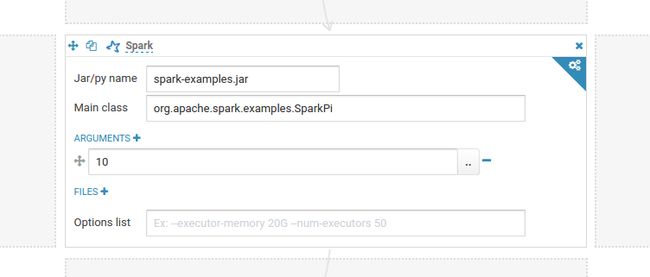

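1. Use the Spark program component. The version we currently run is too old to allow adding runtime parameters.

The official site gives an up-to-date example: http://gethue.com/how-to-schedule-spark-jobs-with-spark-on-yarn-and-oozie/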

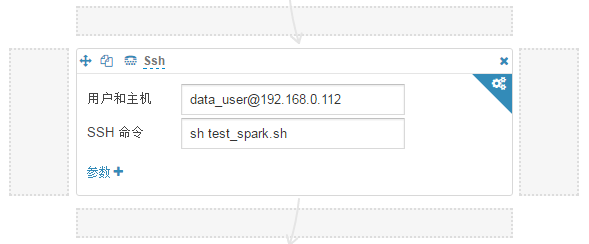

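2. Use the SSH component to connect to the machine where test_spark.sh is deployed and run it there.

Because of an environment configuration problem, this failed with the error "ssh-base.sh not present", which I have not yet resolved: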

2017-01-03 20:22:07,354 WARN ActionStartXCommand:523 - SERVER[m-hadoop] USER[xubingchuan] GROUP[-] TOKEN[] APP[test_ssh_shell] JOB[0052820-161122132240169-oozie-oozi-W] ACTION[0052820-161122132240169-oozie-oozi-W@ssh-8f58] Error starting action [ssh-8f58]. ErrorType [TRANSIENT], ErrorCode [FNF], Message [FNF: Required Local file /var/tmp/oozie/oozie-oozi5514069982911677039.dir/ssh/ssh-base.sh not present.]
org.apache.oozie.action.ActionExecutorException: FNF: Required Local file /var/tmp/oozie/oozie-oozi5514069982911677039.dir/ssh/ssh-base.sh not present.
    at org.apache.oozie.action.ssh.SshActionExecutor.execute(SshActionExecutor.java:572)
    at org.apache.oozie.action.ssh.SshActionExecutor.start(SshActionExecutor.java:206)
    at org.apache.oozie.command.wf.ActionStartXCommand.execute(ActionStartXCommand.java:250)
    at org.apache.oozie.command.wf.ActionStartXCommand.execute(ActionStartXCommand.java:64)
    at org.apache.oozie.command.XCommand.call(XCommand.java:286)
    at org.apache.oozie.service.CallableQueueService$CompositeCallable.call(CallableQueueService.java:321)
    at org.apache.oozie.service.CallableQueueService$CompositeCallable.call(CallableQueueService.java:250)
    at org.apache.oozie.service.CallableQueueService$CallableWrapper.run(CallableQueueService.java:175)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)
    at java.lang.Thread.run(Thread.java:745)
Caused by: java.io.IOException: Required Local file /var/tmp/oozie/oozie-oozi5514069982911677039.dir/ssh/ssh-base.sh not present.
    at org.apache.oozie.action.ssh.SshActionExecutor.setupRemote(SshActionExecutor.java:367)
    at org.apache.oozie.action.ssh.SshActionExecutor$1.call(SshActionExecutor.java:208)
    at org.apache.oozie.action.ssh.SshActionExecutor$1.call(SshActionExecutor.java:206)
    at org.apache.oozie.action.ssh.SshActionExecutor.execute(SshActionExecutor.java:550)
    ... 10 more

3. Use the shell component to directly run test_spark.sh and its related resource files, which have been uploaded to HDFS.

test_spark.sh, input.sql and test_spark.jar were all uploaded to the /user/data_user/recommend directory. The content of test_spark.sh is as follows:

spark-submit --class com.test.bigdata.TestSpark \
    --master yarn \
    --deploy-mode cluster \
    --driver-memory 4g \
    --executor-memory 2g \
    --executor-cores 1 \
    test_spark.jar \
    input.sql 100
rc=$?
if [[ $rc != 0 ]]; then
    echo "spark task: $0 failed,please check......"
    exit 1
fi
echo "end run spark: $(date "+%Y-%m-%d %H:%M:%S")"

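Running it produced various permission errors: test_spark.sh is executed by the yarn user of the AM, but once the job is actually submitted the user changes to the Oozie user.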

17/01/03 19:11:46 INFO Client: Application report for application_1479210500211_308279 (state: ACCEPTED)

17/01/03 19:11:47 INFO Client: Application report for application_1479210500211_308279 (state: FAILED)

17/01/03 19:11:47 INFO Client:

client token: N/A

diagnostics: Application application_1479210500211_308279 failed 2 times due to AM Container for appattempt_1479210500211_308279_000002 exited with exitCode: -1000

For more detailed output, check application tracking page:http://m-hadoop:8088/cluster/app/application_1479210500211_308279Then, click on links to logs of each attempt.

Diagnostics: Permission denied: user=xubingchuan, access=EXECUTE, inode="/user/yarn/.sparkStaging/application_1479210500211_308279/__spark_conf__497763855254140178.zip":yarn:hdfs:drwx------

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.check(FSPermissionChecker.java:319)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkTraverse(FSPermissionChecker.java:259)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:205)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:190)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:1771)

at org.apache.hadoop.hdfs.server.namenode.FSDirStatAndListingOp.getFileInfo(FSDirStatAndListingOp.java:108)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getFileInfo(FSNamesystem.java:3866)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.getFileInfo(NameNodeRpcServer.java:1076)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.getFileInfo(ClientNamenodeProtocolServerSideTranslatorPB.java:843)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:616)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:969)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2151)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2147)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1657)

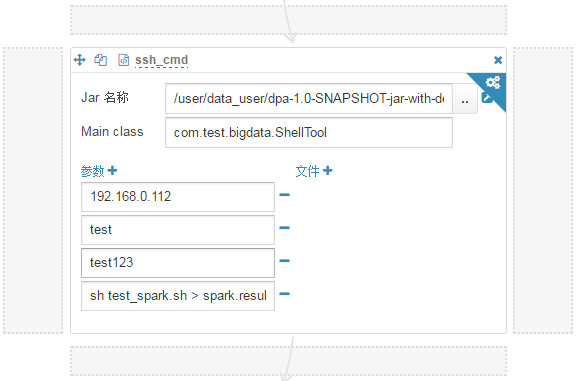

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2145)最后在没有权限修改相关配置的情况下,发现可以oozie可以正常调度Java程序,在程序中使用ssh远程执行shell脚本的方式模拟了oozie中ssh组件的功能

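public class ShellTool {

    // Entry point scheduled by Oozie. Expects four arguments: ip, user, password, command.
    public static void main(String[] args) {
        verify(args);
        String ip = args[0];
        String user = args[1];
        String passwd = args[2];
        String cmd = args[3];
        // ShellRunner (not shown in this post) runs cmd on the remote host over ssh.
        int status = ShellRunner.callRemoteShell(ip, user, passwd, cmd, null);
        if (status != 0) {
            // Exit with a non-zero code so that Oozie marks the action as failed.
            System.exit(1);
        }
    }

    // Prints the received arguments and exits unless exactly four were passed.
    private static void verify(String[] args) {
        if (args == null || args.length != 4) {
            System.out.println("ShellTool args are invalid:");
            if (args != null) {
                for (String arg : args) {
                    System.out.println(" " + arg);
                }
            } else {
                System.out.println(" args is null");
            }
            System.exit(1);
        }
    }
}

With this approach, the program, running as the yarn user of the AM, connects to a remote server and runs the shell script above there, which ultimately launches the Spark job.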
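The ShellRunner helper called by ShellTool is not shown in this post. As a rough illustration of the idea only, the sketch below runs a command on a remote host by invoking the system ssh client through ProcessBuilder. It is not the author's implementation: the class name SshCommandSketch and its methods are hypothetical, it assumes an OpenSSH client on the PATH, and it assumes key-based login rather than the password argument that ShellTool passes.

```java
import java.io.IOException;
import java.util.Arrays;
import java.util.List;

/**
 * Hypothetical stand-in for the ShellRunner helper, which the post does not show.
 * Assumes an OpenSSH "ssh" client on the PATH and key-based login (no password).
 */
final class SshCommandSketch {

    /** Builds the argument list for: ssh -o BatchMode=yes user@host command */
    static List<String> buildSshCommand(String host, String user, String command) {
        // BatchMode=yes makes ssh fail instead of prompting for a password,
        // which matters because a scheduled job has no terminal to answer a prompt.
        return Arrays.asList("ssh", "-o", "BatchMode=yes", user + "@" + host, command);
    }

    /** Runs a local command, forwards its output to this process, and returns its exit code. */
    static int run(List<String> command) throws IOException, InterruptedException {
        Process process = new ProcessBuilder(command)
                .inheritIO()   // remote stdout/stderr end up in the launcher log
                .start();
        return process.waitFor();
    }

    /** Returns the exit code reported by ssh, or 255 if ssh could not be started. */
    static int callRemoteShell(String host, String user, String command) {
        try {
            return run(buildSshCommand(host, user, command));
        } catch (IOException e) {
            return 255;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return 255;
        }
    }
}
```

A caller would treat the result like ShellTool treats status above: any non-zero value from SshCommandSketch.callRemoteShell(host, user, "sh test_spark.sh") leads to System.exit(1). ssh passes through the exit status of the remote command and uses 255 for its own errors, which is why the sketch reuses 255 when ssh cannot be started.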

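Corrections to any mistakes in this post, and solutions to the unresolved errors described above, are very welcome. Let's help each other improve!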