Visual Odometry | A Review of Visual Odometry

Author's GitHub: https://github.com/MichaelBeechan

Author's CSDN blog: https://blog.csdn.net/u011344545

This post on GitHub: https://github.com/MichaelBeechan/Visual-Odometry-Review (forks and stars welcome)

OF-VO: Robust and Efficient Stereo Visual Odometry Using Points and Feature Optical Flow

Code: https://github.com/MichaelBeechan/MyStereoLibviso2 (forks and stars welcome)

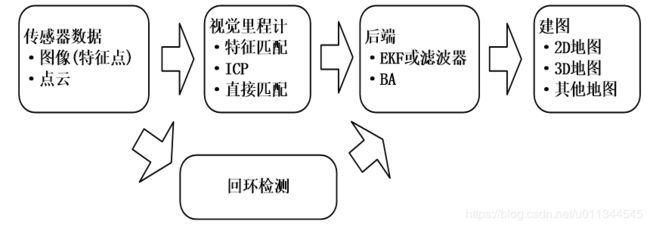

SLAM framework

Open-source RGB-D SLAM

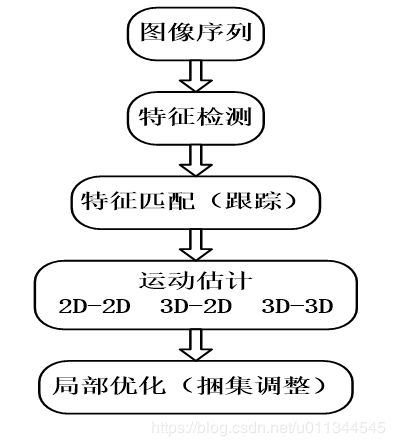

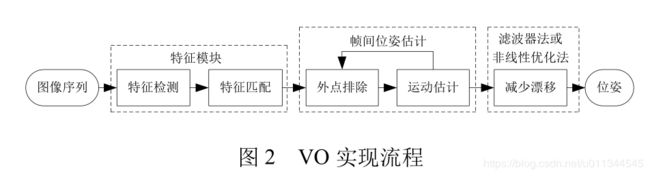

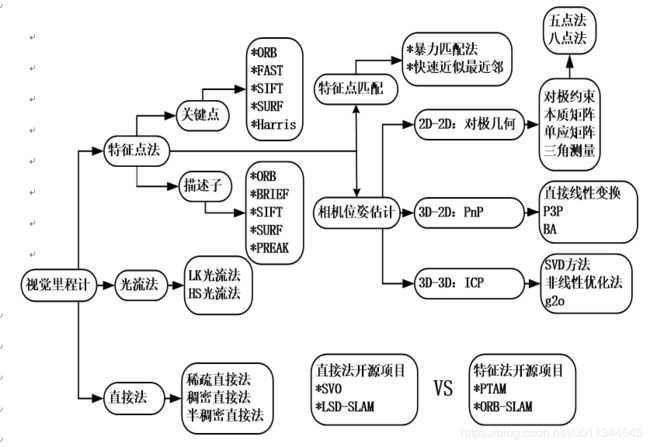

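SLAM consists of two main parts: a front end and a back end. The front end is visual odometry (VO), which roughly estimates the camera motion from the information in adjacent images and gives the back end a good initial value. Depending on whether features are extracted, VO methods fall into two classes: feature-based methods and direct methods, which do not use feature points. Feature-based VO runs stably and is relatively insensitive to illumination changes and dynamic objects.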
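To make the feature-based idea concrete, here is a minimal sketch of the motion-estimation step under simplifying assumptions that are not from the original post: the camera moves in a plane, keypoints have already been detected and matched between two adjacent frames (so `src[i]` corresponds to `dst[i]`), and there are no outlier matches. Under these assumptions the rotation and translation between the frames have a closed-form least-squares solution (a 2D version of Kabsch/Procrustes alignment). The function name `estimate_rigid_2d` is hypothetical. Real stereo or RGB-D pipelines solve the 3D analogue and usually wrap it in RANSAC to reject bad matches.

```python
import math


def estimate_rigid_2d(src, dst):
    """Least-squares rigid motion (rotation + translation) mapping src onto dst.

    Assumption: src and dst are lists of matched (x, y) keypoints, outlier-free,
    with src[i] corresponding to dst[i]. Returns (theta, tx, ty) such that
    dst[i] ~= R(theta) * src[i] + (tx, ty).
    """
    n = len(src)
    if n != len(dst) or n < 2:
        raise ValueError("need at least two matched point pairs")

    # Centroids of both point sets
    cxs = sum(p[0] for p in src) / n
    cys = sum(p[1] for p in src) / n
    cxd = sum(p[0] for p in dst) / n
    cyd = sum(p[1] for p in dst) / n

    # Sum dot and cross products of the centred point pairs
    s_dot = 0.0
    s_cross = 0.0
    for (xs, ys), (xd, yd) in zip(src, dst):
        ax, ay = xs - cxs, ys - cys
        bx, by = xd - cxd, yd - cyd
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx

    # The angle maximising sum(b . R a) is atan2(cross, dot)
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)

    # Translation maps the rotated source centroid onto the destination centroid
    tx = cxd - (c * cxs - s * cys)
    ty = cyd - (s * cxs + c * cys)
    return theta, tx, ty


if __name__ == "__main__":
    # Hypothetical matches: rotate by 10 degrees, then shift by (2, -1)
    true_theta = math.radians(10.0)
    pts = [(0.0, 0.0), (4.0, 1.0), (2.0, 5.0), (-3.0, 2.0)]
    moved = [
        (math.cos(true_theta) * x - math.sin(true_theta) * y + 2.0,
         math.sin(true_theta) * x + math.cos(true_theta) * y - 1.0)
        for x, y in pts
    ]
    theta, tx, ty = estimate_rigid_2d(pts, moved)
    print(math.degrees(theta), tx, ty)
```

In this planar case no iterative solver is needed: the optimal angle is just `atan2` of the summed cross and dot products of the centred matches, and the translation follows from the centroids.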

Visual odometry framework
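One common way to picture the VO pipeline is as dead reckoning: each new frame yields a motion estimate relative to the previous frame, and these increments are chained to obtain the camera trajectory. The sketch below shows only that chaining step, assuming planar poses `(x, y, theta)` and relative motions expressed in the previous frame's body coordinates; `compose` and `integrate` are hypothetical helper names, not part of any library listed in this post.

```python
import math


def compose(pose, delta):
    """Apply a relative motion `delta` (in the current body frame) to a global `pose`.

    Both are (x, y, theta) tuples with theta in radians (planar assumption).
    """
    x, y, th = pose
    dx, dy, dth = delta
    c, s = math.cos(th), math.sin(th)
    # Rotate the body-frame translation into the world frame, then add it
    new_x = x + c * dx - s * dy
    new_y = y + s * dx + c * dy
    # Wrap the heading back into (-pi, pi]
    new_th = math.atan2(math.sin(th + dth), math.cos(th + dth))
    return (new_x, new_y, new_th)


def integrate(relative_motions, start=(0.0, 0.0, 0.0)):
    """Chain frame-to-frame motion estimates into a trajectory."""
    trajectory = [start]
    for delta in relative_motions:
        trajectory.append(compose(trajectory[-1], delta))
    return trajectory


if __name__ == "__main__":
    # Hypothetical increments: move 1 m forward, then turn left 90 degrees, four times
    steps = [(1.0, 0.0, math.pi / 2)] * 4
    for pose in integrate(steps):
        print(tuple(round(v, 3) for v in pose))
```

Because every increment is composed onto the previous pose, small errors in each relative estimate accumulate into drift over time, which is why the VO front end only supplies initial values that the SLAM back end later refines.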

Main research approaches in visual odometry

Development of visual odometry

Major visual odometry code and papers

SVO: Fast Semi-Direct Monocular Visual Odometry

Paper: http://rpg.ifi.uzh.ch/docs/ICRA14_Forster.pdf

Code: https://github.com/uzh-rpg/rpg_svo

Robust Odometry Estimation for RGB-D Cameras

Real-Time Visual Odometry from Dense RGB-D Images

Paper: http://www.cs.nuim.ie/research/vision/data/icra2013/Whelan13icra.pdf

Code: https://github.com/tum-vision/dvo

Parallel Tracking and Mapping for Small AR Workspaces

Paper: https://cse.sc.edu/~yiannisr/774/2015/ptam.pdf

http://www.robots.ox.ac.uk/ActiveVision/Papers/klein_murray_ismar2007/klein_murray_ismar2007.pdf

Code: https://github.com/Oxford-PTAM/PTAM-GPL

ORBSLAM

Code: https://github.com/raulmur/ORB_SLAM2

https://github.com/raulmur/ORB_SLAM

A ROS Implementation of the Mono-Slam Algorithm

Paper: https://www.researchgate.net/publication/269200654_A_ROS_Implementation_of_the_Mono-Slam_Algorithm

Code: https://github.com/rrg-polito/mono-slam

DTAM: Dense tracking and mapping in real-time

Paper: https://ieeexplore.ieee.org/document/6126513

Code: https://github.com/anuranbaka/OpenDTAM

LSD-SLAM: Large-Scale Direct Monocular SLAM

Paper: http://pdfs.semanticscholar.org/c13c/b6dfd26a1b545d50d05b52c99eb87b1c82b2.pdf

https://vision.in.tum.de/research/vslam/lsdslam

Code: https://github.com/tum-vision/lsd_slam

MSCKF_VIO: Robust Stereo Visual Inertial Odometry for Fast Autonomous Flight

Paper: https://arxiv.org/abs/1712.00036

Code: https://github.com/KumarRobotics/msckf_vio

LIBVISO2: C++ Library for Visual Odometry 2

Project page: http://www.cvlibs.net/software/libviso/

Code: https://github.com/srv/viso2

Stereo Visual SLAM for Mobile Robots Navigation

A constant-time SLAM back-end in the continuum between global mapping and submapping: application to visual stereo SLAM

Paper: http://mapir.uma.es/famoreno/papers/thesis/FAMD_thesis.pdf

Code: https://github.com/famoreno/stereo-vo

Combining Edge Images and Depth Maps for Robust Visual Odometry

Robust Edge-based Visual Odometry using Machine-Learned Edges(REVO)

Paper: https://graz.pure.elsevier.com/

Code: https://github.com/fabianschenk/REVO

HKUST Aerial Robotics Group

VINS-Mono: A Robust and Versatile Monocular Visual-Inertial State Estimator

Paper: https://arxiv.org/pdf/1708.03852.pdf

Code: https://github.com/HKUST-Aerial-Robotics/VINS-Mono

VINS-Fusion: Online Temporal Calibration for Monocular Visual-Inertial Systems

Paper: https://arxiv.org/pdf/1808.00692.pdf

Code: https://github.com/HKUST-Aerial-Robotics/VINS-Fusion

Monocular Visual-Inertial State Estimation for Mobile Augmented Reality

Paper: https://ieeexplore.ieee.org/document/8115400

Code: https://github.com/HKUST-Aerial-Robotics/VINS-Mobile

VINet: Visual-Inertial Odometry as a Sequence-to-Sequence Learning Problem

Paper: https://arxiv.org/abs/1701.08376

Code: https://github.com/HTLife/VINet

Computer Vision Group, Department of Informatics, Technical University of Munich (TUM)

DSO: Direct Sparse Odometry

Code: https://github.com/JingeTu/StereoDSO

Visual-Inertial DSO: https://vision.in.tum.de/research/vslam/vi-dso

DVSO: https://vision.in.tum.de/research/vslam/dvso

DSO with Loop-closure and Sim(3) pose graph optimization: https://vision.in.tum.de/research/vslam/ldso

Stereo odometry based on careful feature selection and tracking

Paper: https://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=7324219

Code: https://github.com/Mayankm96/Stereo-Odometry-SOFT

OKVIS: Open Keyframe-based Visual-Inertial SLAM

Code: https://github.com/gaoxiang12/okvis

Trifo-VIO: Robust and Efficient Stereo Visual Inertial Odometry using Points and Lines

Paper: https://arxiv.org/pdf/1803.02403.pdf

Code: https://github.com/UMiNS/Trifocal-tensor-VIO

PL-VIO: Tightly-Coupled Monocular Visual–Inertial Odometry Using Point and Line Features

Paper: https://www.mdpi.com/1424-8220/18/4/1159/html

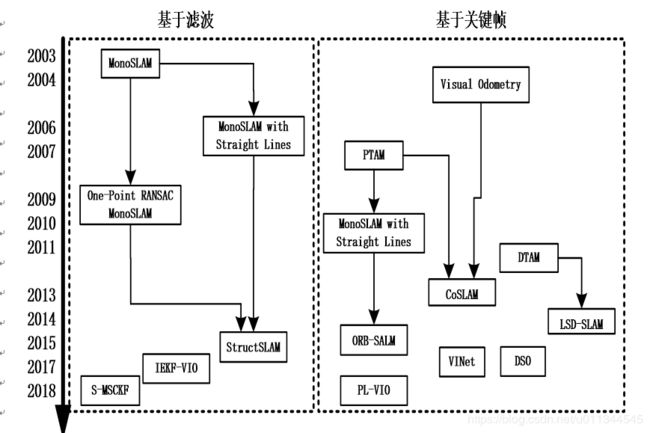

A Review of Visual-Inertial Simultaneous Localization and Mapping from Filtering-Based and Optimization-Based Perspectives: a survey article on visual-inertial navigation