几种半监督的python实现(标签传播、半监督Kmeans、自训练)

半监督学习:综合利用有类标的数据和没有类标的数据,来生成合适的分类函数。它是一类可以自动地利用未标记的数据来提升学习性能的算法。

一、LabelPropagation和LabelSpreading

(1)标记传播算法:

优点:概念清晰

缺点:存储开销大,难以直接处理大规模数据;而且对于新的样本加入,需要对原图重构并进行标记传播

(2)迭代式标记传播算法:

输入:有标记样本集Dl,未标记样本集Du,构图参数δ,折中参数α

输出:未标记样本的预测结果y

步骤:

1)计算W

2)基于W构造标记传播矩阵S

3)根据公式初始化F<0>

4)t=0

5)迭代,迭代终止条件是F收敛至F*:

F

t=t+1

6)构造未标记样本的预测结果yi

7)输出结果y

python在 sklearn 里提供了两个标签传播模型:LabelPropagation 和 LabelSpreading,其中后者是前者的正则化形式。W 的计算方式提供了 rbf 与 knn。

LabelPropagation和LabelSpreading的实现代码如下:

import numpy as np

import matplotlib.pyplot as plt

from sklearn import metrics

from sklearn import datasets

from sklearn.semi_supervised import LabelPropagation,LabelSpreading

def load_data():

digits=datasets.load_digits()

rng=np.random.RandomState(0)

index=np.arange(len(digits.data))

rng.shuffle(index)

X=digits.data[index]

Y=digits.target[index]

n_labeled_points=int(len(Y)/10)

unlabeled_index=np.arange(len(Y))[n_labeled_points:]

return X,Y,unlabeled_index

def test_LabelPropagation(*data):

X,Y,unlabeled_index=data

Y_train=np.copy(Y)

Y_train[unlabeled_index]=-1

cls=LabelPropagation(max_iter=100,kernel='rbf',gamma=0.1)

cls.fit(X,Y_train)

print("Accuracy:%f"%cls.score(X[unlabeled_index],Y[unlabeled_index]))

def test_LabelSpreading(*data):

X,Y,unlabeled_index=data

Y_train=np.copy(Y)

Y_train[unlabeled_index]=-1

cls=LabelSpreading(max_iter=100,kernel='rbf',gamma=0.1)

cls.fit(X,Y_train)

predicted_labels=cls.transduction_[unlabeled_index]

true_labels=Y[unlabeled_index]

print("Accuracy:%f"%metrics.accuracy_score(true_labels,predicted_labels))

X,Y,unlabeled_index=load_data()

#test_LabelPropagation(X,Y,unlabeled_index)

test_LabelSpreading(X,Y,unlabeled_index)

LabelPropagation实验结果:Accuracy:0.948084

可见预测的准确率还是挺高的。

LabelSpreading类似于LabelPropagation,但是使用基于normalized graph Laplacian and soft clamping的距离矩阵

实验结果:Accuracy:0.972806

预测效果也很不错

还有手动实现标签传播的python写法,可以参考:

聚类——标签传播算法以及Python实现

动手实践标签传播算法

二、半监督K-means聚类

半监督K-means的初始聚类中心的选择是根据有标签数据而定的,聚类个数=类别个数,初始聚类中心=各个类样本的均值(其余步骤和标准的K-means没有差别)。

# -*- coding: utf-8 -*-

import numpy as np

def distEclud(vecA, vecB):

'''

输入:向量A和B

输出:A和B间的欧式距离

'''

return np.sqrt(sum(np.power(vecA - vecB, 2)))

def newCent(L):

'''

输入:有标签数据集L

输出:根据L确定初始聚类中心

'''

centroids = []

label_list = np.unique(L[:,-1])

for i in label_list:

L_i = L[(L[:,-1])==i]

cent_i = np.mean(L_i,0)

centroids.append(cent_i[:-1])

return np.array(centroids)

def semi_kMeans(L, U, distMeas=distEclud, initial_centriod=newCent):

'''

输入:有标签数据集L(最后一列为类别标签)、无标签数据集U(无类别标签)

输出:聚类结果

'''

dataSet = np.vstack((L[:,:-1],U))#合并L和U

label_list = np.unique(L[:,-1])

k = len(label_list) #L中类别个数

m = np.shape(dataSet)[0]

clusterAssment = np.zeros(m)#初始化样本的分配

centroids = initial_centriod(L)#确定初始聚类中心

clusterChanged = True

while clusterChanged:

clusterChanged = False

for i in range(m):#将每个样本分配给最近的聚类中心

minDist = np.inf; minIndex = -1

for j in range(k):

distJI = distMeas(centroids[j,:],dataSet[i,:])

if distJI < minDist:

minDist = distJI; minIndex = j

if clusterAssment[i] != minIndex: clusterChanged = True

clusterAssment[i] = minIndex

return clusterAssment

L =np.array([[1.0, 4.2 ,1],

[1.3, 4.0 , 1],

[1.0, 4.0 , 1],

[1.5, 4.3 , 1],

[2.0, 4.0 , 0],

[2.3, 3.7 , 0],

[4.0, 1.0 , 0]])

#L的最后一列是类别标签

U =np.array([[1.4, 5.0],

[1.3, 5.4],

[2.0, 5.0],

[4.0, 2.0],

[5.0, 1.0],

[5.0, 2.0]])

clusterResult = semi_kMeans(L,U)

三、自训练半监督

自训练是一种增量算法(Incremental Algorithm),它既不需要像基于图的半监督学习方法一样构造复杂的图模型,也不需要像协同训练一样基于特定的假设条件。自训练方法只需要一个分类模型,少量的有标记样本和大量的无标记样本,就可以完成复杂的半监督学习任务。

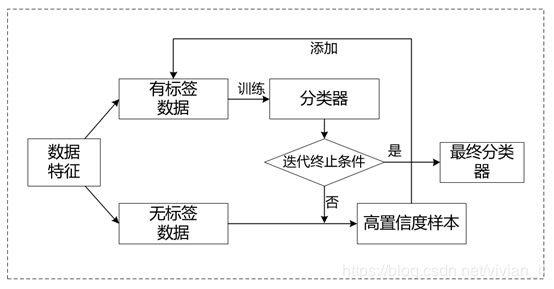

自训练算法首先利用少量的有标注的样本训练得到一个原始分类器,然后用这个基础的原始分类器不断地预测大量的无标注数据,并从中选取可信度较高的数据,再把这些数据添加到训练集中,以不断更新训练集并对基础分类器重新训练,直到满足停止条件,得到具有最高分类精度和最强的泛化性的最终分类器。训练流程如图所示:

下面以XGBoost作为基分类器为例,自己写一个自训练半监督算法:

数据全部是有标注的,从中随机挑选一定比例(ratio)的数据丢弃标注,作为无标注数据。

import argparse

import pandas as pd

import numpy as np

from sklearn.model_selection import train_test_split,GridSearchCV

from sklearn.preprocessing import StandardScaler

from xgboost import XGBClassifier

from sklearn import metrics

from sklearn.metrics import accuracy_score,roc_auc_score,f1_score

from sklearn.externals import joblib

import warnings

warnings.filterwarnings("ignore")

parser = argparse.ArgumentParser(description='BMD prediction')

parser.add_argument('--classifier-name', type=str, default='xgboost', metavar='S',

help='model name')

parser.add_argument('--dataset', type=str, default='../data/newdata/largedata.csv', metavar='DATASET',

help='dataset path')

parser.add_argument('--feature-columns', nargs='+', default=['sex','year','height','weight'],

help='columns of features')

parser.add_argument('--label-column', type=str, default='label',

help='column of label')

parser.add_argument('--cat-numbers', type=int, default=2,

help='Number of categories')

parser.add_argument('--model-name', type=str, default='xgboost', choices=['xgboost','svm'],

help='name of classifier')

parser.add_argument('--save-path', type=str, default='../model/classifiers/xgboost_label_gridSearch.joblib.dat',

help='path of saved model')

parser.add_argument('--grid-search', type=bool, default=True,

help='adjust parameters with grid search')

def read_data(args):

path = args.dataset

data = pd.read_csv(path,encoding='gbk')

# 获取label列非空的行

for i in range(len(data)):

if np.isnan(data[args.label_column][i]):

data = data.drop(i)

# data.to_csv('data.csv')

# data.info()

return data

def split(args,data):

features = data[args.feature_columns]

label = data[args.label_column]

print(args.feature_columns)

print(args.label_column)

X_train, X_test, y_train, y_test = train_test_split(features,label,test_size=0.2, random_state=77)

ss = StandardScaler()

X_train = ss.fit_transform(X_train)

X_test = ss.transform(X_test)

return X_train,X_test,y_train,y_test

def selfTraining(args, X_train, X_test, y_train, y_test):

ratio = 0.1 # 缺失值比例

rng = np.random.RandomState(10) # 产生一个随机状态种子

YSemi_train = np.copy(y_train)

YSemi_train[rng.rand(len(y_train)) < ratio] = -1 # rng.rand()返回一个或一组服从“0~1”均匀分布的随机样本值

unlabeledX = X_train[YSemi_train == -1, :]

YTrue = y_train[YSemi_train == -1]

idx = np.where(YSemi_train != -1)[0] # np.where返回一个(array([ 3, 10, 12, ..., 8042, 8050, 8053], dtype=int64),)

labeledX = X_train[idx, :]

labeledY = YSemi_train[idx]

model = XGBClassifier(

learning_rate=0.02,

n_estimators=90,

max_depth=4,

min_child_weight=1,

gamma=0,

subsample=1,

colsample_bytree=1,

objective='binary:logistic',

nthread=4,

scale_pos_weight=1,

seed=27

)

# 全监督

clf = model.fit(X_train, y_train)

score = accuracy_score(y_train, clf.predict(X_train))

test_score = accuracy_score(y_test, clf.predict(X_test)) # 测试集准确率

auc_score = roc_auc_score(y_test, clf.predict(X_test))

f1 = f1_score(y_test, clf.predict(X_test))

print("Training data size=", len(y_train), "Labeld accuracy= %.4f" % score, " , Unlabeled ratio=", 0,

"ACC: %.4f" % test_score, 'AUC: %.4f' % auc_score, 'F1-score: %.4f' % f1)

# 半监督

clf = model.fit(labeledX, labeledY)

unlabeledY = clf.predict(unlabeledX)

print(unlabeledY)

unlabeledProb = clf.predict_proba(unlabeledX).max(axis=1) # 预测为0和1的置信度,取大的

print(unlabeledProb)

ratioInitial = 1 - (len(labeledY) / len(y_train))

score = accuracy_score(labeledY, clf.predict(labeledX))

test_score = accuracy_score(y_test, clf.predict(X_test))

auc_score = roc_auc_score(y_test, clf.predict(X_test))

f1 = f1_score(y_test, clf.predict(X_test))

print("iteration=",0, "Training data size=", len(labeledY), "Labeld accuracy=: %.4f" % score, " , Unlabeled ratio=: %.4f" % ratioInitial, "ACC: %.4f" % test_score, 'AUC: %.4f' % auc_score, 'F1-score: %.4f' % f1)

max_iter = 500

probThreshold = 0.8

unlabeledXOrg = np.copy(unlabeledX)

YTrueOrg = np.copy(YTrue)

rr = []

it = []

i = 0

repeat = 1

while (i < max_iter and score > 0.01 and repeat<=10):

lastscore = score

ratio = 1 - (len(labeledY) / len(y_train))

rr.append(ratio)

it.append(i)

labelidx = np.where(unlabeledProb > probThreshold)[0]

unlabelidx = np.where(unlabeledProb <= probThreshold)[0]

labeledX = np.vstack((labeledX, unlabeledX[labelidx, :])) # 按照行顺序把数组给堆叠起来

labeledY = np.hstack((labeledY, unlabeledY[labelidx]))

unlabeledX = unlabeledX[unlabelidx, :]

YTrue = y_train[unlabelidx]

clf = model.fit(labeledX, labeledY)

score = accuracy_score(labeledY, clf.predict(labeledX))

test_score = accuracy_score(y_test, clf.predict(X_test))

auc_score = roc_auc_score(y_test, clf.predict(X_test))

f1 = f1_score(y_test, clf.predict(X_test))

print("iteration=",i+1, "Training data size=", len(labeledY), "Labeld accuracy= %.4f" % score, " , Unlabeled ratio= %.4f" % ratio, "ACC: %.4f" % test_score, 'AUC: %.4f' % auc_score, 'F1-score: %.4f' % f1)

unlabeledY = clf.predict(unlabeledX)

unlabeledProb = clf.predict_proba(unlabeledX).max(axis=1)

i += 1

if lastscore == score:

repeat += 1

else:

repeat = 1

def main(args):

data = read_data(args)

X_train, X_test, y_train, y_test = split(args,data)

selfTraining(args, np.array(X_train), np.array(X_test), np.array(y_train), np.array(y_test))

if __name__ == '__main__':

main(parser.parse_args())

参考网址:

python大战机器学习——半监督学习

sklearn半监督学习

半监督K均值聚类python代码