Collect logs with Filebeat, ship them to Kafka, filter nginx logs with Logstash, and use Kibana to display a map of nginx client IP distribution

Pipeline:

nginx log -----> filebeat (collection) -----> kafka (streaming) -----> logstash (filtering) -----> elasticsearch (storage) -----> kibana (display)

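Only the configuration of each component is covered here. For deployment and installation of each component, please refer to the official documentation.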

Environment:

CentOS 7

nginx 1.4

Filebeat, Logstash, Elasticsearch, Kibana 6.2

1. Configure the nginx log format

The nginx log format below is for reference only. Set the log format according to your own business needs.

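http {
    log_format main '$remote_addr - [$time_local] $request_method "$uri" "$query_string" '
                    '$status $body_bytes_sent "$http_referer" $upstream_status '
                    '"$http_user_agent" ';

    # write the access log that Filebeat reads using this format
    access_log /var/log/nginx/access.log main;
}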
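To see what Filebeat will actually ship, it helps to render one line in this format. A minimal Python sketch, assuming made-up request values (203.0.113.7 is a documentation-only address); a variable that is not set, such as a missing Referer header, is rendered as "-", which is what nginx writes in that case:

```python
# Minimal sketch: lay out one access-log line the way log_format "main" does.
# All request values in `sample` are hypothetical.


def render_main(v: dict) -> str:
    """Render request variables in the order and quoting of log_format main."""
    def g(name: str) -> str:
        # nginx writes "-" for a variable that is not set
        # (no query string, no Referer header, no upstream, ...)
        return v.get(name, "-")

    return (
        f'{g("remote_addr")} - [{g("time_local")}] {g("request_method")} '
        f'"{g("uri")}" "{g("query_string")}" '
        f'{g("status")} {g("body_bytes_sent")} "{g("http_referer")}" {g("upstream_status")} '
        f'"{g("http_user_agent")}" '  # the format ends with a trailing space
    )


sample = {
    "remote_addr": "203.0.113.7",
    "time_local": "10/May/2018:14:02:11 +0800",
    "request_method": "GET",
    "uri": "/search",
    "query_string": "q=nginx%20geoip",
    "status": "200",
    "body_bytes_sent": "512",
    "upstream_status": "200",
    "http_user_agent": "curl/7.29.0",
}  # no "http_referer" key: the request carried no Referer header

if __name__ == "__main__":
    print(render_main(sample))
```

Lines like this are what the grok pattern later in this section has to match, including the literal "-" placeholders.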

Online grok debugger:

http://grok.qiexun.net/

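Reference pattern (the two numbers after the query string are $status and $body_bytes_sent, so they are named response and bytes):

%{IPORHOST:clientip} - \[%{HTTPDATE:timestamp}\] %{WORD:verb} "%{URIPATH:uri}" "-" %{NUMBER:response} %{NUMBER:bytes} "%{GREEDYDATA:http_referrer}" %{BASE10NUM:upstream_status} "%{GREEDYDATA:user_agent}"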

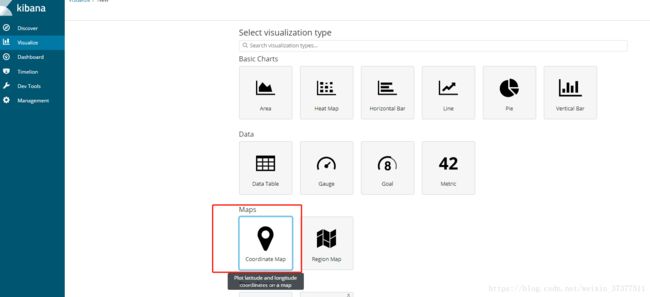

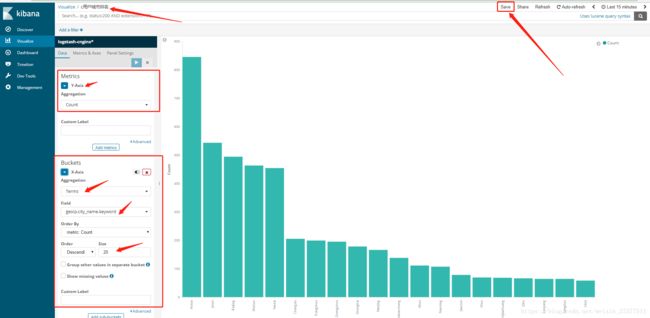

2. Configure Filebeat and Kibana

Edit the Filebeat configuration file filebeat.yml:

vim /etc/filebeat/filebeat.yml

filebeat.prospectors:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  fields:
    log_topics: cnginx

# ...... (middle part omitted)

# Output to Kafka
output.kafka:
  hosts: ["172.17.78.197:9092","172.17.78.198:9092","172.17.78.199:9092"]
  topic: '%{[fields][log_topics]}'
  partition.round_robin:
    reachable_only: false
  required_acks: 1
  compression: gzip
  max_message_bytes: 1000000
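The topic setting takes the Kafka topic name from each event's own fields, so `fields: log_topics: cnginx` on the prospector routes nginx lines to the `cnginx` topic that Logstash subscribes to. A simplified Python imitation of that field lookup (not Filebeat's implementation; it handles only the plain `%{[a][b]}` form used here):

```python
import re

# Matches a reference such as %{[fields][log_topics]}; group 1 is "[fields][log_topics]"
_REF = re.compile(r"%\{((?:\[[^\]]+\])+)\}")


def resolve(template: str, event: dict) -> str:
    """Replace each %{[a][b]...} reference with the value at event["a"]["b"]..."""
    def lookup(m):
        value = event
        for key in re.findall(r"\[([^\]]+)\]", m.group(1)):
            value = value[key]  # raises KeyError if the field is missing
        return str(value)

    return _REF.sub(lookup, template)


if __name__ == "__main__":
    # Hypothetical event as published by the prospector above
    event = {
        "message": '203.0.113.7 - [10/May/2018:14:02:11 +0800] GET "/search" ...',
        "fields": {"log_topics": "cnginx"},
    }
    print(resolve("%{[fields][log_topics]}", event))
```

Because the topic comes from the event, further prospectors with a different `log_topics` value can share the same `output.kafka` block.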

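Then edit the Kibana configuration file (vim /etc/kibana/kibana.yml) and append the AMap (Gaode) tile configuration at the end:

tilemap.url: 'http://webrd02.is.autonavi.com/appmaptile?lang=zh_cn&size=1&scale=1&style=7&x={x}&y={y}&z={z}'

style=7 is the road map and style=6 is satellite imagery. After changing the setting, delete the optimize/bundles folder under the Kibana installation directory so that Kibana rebuilds its bundles on the next start.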
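Kibana fills `{x}`, `{y}` and `{z}` in tilemap.url with the column, row and zoom level of each tile it requests. The sketch below shows the standard Web Mercator ("XYZ" / slippy-map) tiling arithmetic behind those placeholders, assuming AMap follows that common scheme; making `style` a parameter is an illustrative addition, not something Kibana does.

```python
import math

# Template from tilemap.url above, with style turned into a parameter (our addition):
# style=7 road map, style=6 satellite imagery.
TEMPLATE = ("http://webrd02.is.autonavi.com/appmaptile?lang=zh_cn&size=1&scale=1"
            "&style={style}&x={x}&y={y}&z={z}")


def lonlat_to_tile(lon: float, lat: float, z: int) -> tuple:
    """Web Mercator tile (x, y) containing a point at zoom level z.

    Valid for -180 <= lon < 180 and |lat| below the Mercator cut-off (~85.05).
    """
    n = 2 ** z  # tiles per axis at this zoom level
    x = int((lon + 180.0) / 360.0 * n)
    y = int((1.0 - math.asinh(math.tan(math.radians(lat))) / math.pi) / 2.0 * n)
    return x, y


def tile_url(lon: float, lat: float, z: int, style: int = 7) -> str:
    """URL of the tile covering (lon, lat) at zoom z."""
    x, y = lonlat_to_tile(lon, lat, z)
    return TEMPLATE.format(style=style, x=x, y=y, z=z)


if __name__ == "__main__":
    # Road-map tile at zoom 10 around central Beijing (approximate coordinates)
    print(tile_url(116.40, 39.91, 10))
```

At zoom 0 the whole world is a single tile (0, 0); each zoom step doubles the number of tiles along each axis.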

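3. Configure Logstash

Download the GeoIP database:

wget http://geolite.maxmind.com/download/geoip/database/GeoLite2-City.mmdb.gz
gzip -d GeoLite2-City.mmdb.gz
mv GeoLite2-City.mmdb /etc/logstash/conf.d/   # the path used by the geoip filter's database option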
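The same step as a Python sketch: decompress GeoLite2-City.mmdb.gz (the file the wget line fetches) to the path the geoip filter reads, then check that the result really is a MaxMind DB file. The marker check relies on the MaxMind DB format specification (the metadata section starts with `\xab\xcd\xefMaxMind.com` and is at most 128 KiB); the temp-directory `demo()` stands in for the real download.

```python
import gzip
import shutil
import tempfile
from pathlib import Path

# Start of the metadata section of every .mmdb file (MaxMind DB format spec);
# the metadata section, marker included, is at most 128 KiB at the end of the file.
MMDB_MARKER = b"\xab\xcd\xefMaxMind.com"
METADATA_MAX = 128 * 1024


def gunzip(src: Path, dest: Path) -> Path:
    """Equivalent of `gzip -d` plus `mv`: stream-decompress src into dest."""
    dest.parent.mkdir(parents=True, exist_ok=True)
    with gzip.open(src, "rb") as fin, open(dest, "wb") as fout:
        shutil.copyfileobj(fin, fout)  # streamed, not loaded into memory at once
    return dest


def looks_like_mmdb(path: Path) -> bool:
    """Cheap sanity check: is the MaxMind metadata marker near the end of the file?"""
    with open(path, "rb") as f:
        f.seek(0, 2)  # jump to the end to learn the file size
        f.seek(max(0, f.tell() - METADATA_MAX))
        return MMDB_MARKER in f.read()


def demo() -> bool:
    """Round-trip a fake .mmdb.gz in a temp dir (stand-in for the real download)."""
    with tempfile.TemporaryDirectory() as tmp:
        gz = Path(tmp) / "GeoLite2-City.mmdb.gz"
        with gzip.open(gz, "wb") as f:
            f.write(b"\x00" * 16 + MMDB_MARKER + b"\x00" * 16)
        out = gunzip(gz, Path(tmp) / "conf.d" / "GeoLite2-City.mmdb")
        return looks_like_mmdb(out)


if __name__ == "__main__":
    print(demo())
```

On the real server the call would be `gunzip(Path("GeoLite2-City.mmdb.gz"), Path("/etc/logstash/conf.d/GeoLite2-City.mmdb"))`.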

Define a custom Logstash pattern:

mkdir -p /usr/local/logstash/patterns
vi /usr/local/logstash/patterns/nginx

with the following content:

URIPARM1 [A-Za-z0-9$.+!*'|(){},~@#%&/=:;_?\-\[\]]*

NGINXACCESS %{IPORHOST:clientip} \- \[%{HTTPDATE:timestamp}\] %{WORD:verb} \"%{URIPATH:uri}\" \"%{URIPARM1:param}\" %{NUMBER:response} %{NUMBER:bytes} \"%{GREEDYDATA:http_referrer}\" %{BASE10NUM:upstream_status} \"%{GREEDYDATA:user_agent}\"
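A grok pattern is a regular expression with named captures, so its behaviour can be checked offline. The Python sketch below expands `%{NAME:field}` into `(?P<field>...)` using simplified stand-ins for the stock patterns (not the exact definitions shipped with Logstash; only URIPARM1 is copied from the file above). In the sketch, the two numbers after the query string are named response and bytes, because log_format main writes $status and $body_bytes_sent there.

```python
import re

# Simplified stand-ins for the grok patterns (the real IPORHOST, HTTPDATE,
# NUMBER, ... are stricter); URIPARM1 is the custom pattern defined above.
PATTERNS = {
    "IPORHOST": r"\S+",
    "HTTPDATE": r"\d{2}/\w{3}/\d{4}:\d{2}:\d{2}:\d{2} [+-]\d{4}",
    "WORD": r"\b\w+\b",
    "URIPATH": r"(?:/[A-Za-z0-9$.+!*'(){},~:;=@#%&_\-]*)+",
    "URIPARM1": r"[A-Za-z0-9$.+!*'|(){},~@#%&/=:;_?\-\[\]]*",
    "NUMBER": r"[+-]?\d+(?:\.\d+)?",
    "BASE10NUM": r"[+-]?\d+(?:\.\d+)?",
    "GREEDYDATA": r".*",
}

NGINXACCESS = (
    r'%{IPORHOST:clientip} \- \[%{HTTPDATE:timestamp}\] %{WORD:verb} '
    r'\"%{URIPATH:uri}\" \"%{URIPARM1:param}\" %{NUMBER:response} %{NUMBER:bytes} '
    r'\"%{GREEDYDATA:http_referrer}\" %{BASE10NUM:upstream_status} \"%{GREEDYDATA:user_agent}\"'
)


def grok_to_regex(pattern: str) -> str:
    """Expand each %{NAME:field} into a named group (?P<field>...)."""
    return re.sub(r"%\{(\w+):(\w+)\}",
                  lambda m: "(?P<%s>%s)" % (m.group(2), PATTERNS[m.group(1)]),
                  pattern)


NGINX_RE = re.compile(grok_to_regex(NGINXACCESS))


def parse(line: str):
    """Extracted fields, or None where Logstash would tag _grokparsefailure."""
    m = NGINX_RE.match(line)
    return m.groupdict() if m else None


if __name__ == "__main__":
    line = ('203.0.113.7 - [10/May/2018:14:02:11 +0800] GET "/search" '
            '"q=nginx%20geoip" 200 512 "-" 200 "curl/7.29.0" ')
    print(parse(line))
```

One consequence worth knowing: BASE10NUM does not match "-", so a request served without an upstream (nginx logs $upstream_status as "-") fails this pattern, both here and in Logstash.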

Install the geoip plugin:

logstash-filter-geoip looks up the country and city information for an IP address.

/usr/share/logstash/bin/logstash-plugin install logstash-filter-geoip

Create the Logstash pipeline configuration:

vim /etc/logstash/conf.d/nginx.conf

input {
  kafka {
    codec => "json"
    topics => ["cnginx"]
    consumer_threads => 2
    enable_auto_commit => true
    auto_commit_interval_ms => "1000"
    bootstrap_servers => "172.17.78.197:9092,172.17.78.198:9092,172.17.78.199:9092"
    auto_offset_reset => "latest"
    group_id => "xqxlog"
  }
}

filter {
  if [fields][log_topics] == "cnginx" {
    grok {
      patterns_dir => "/usr/local/logstash/patterns"
      match => { "message" => "%{NGINXACCESS}" }
      remove_field => ["message"]   # only applied when the match succeeds
    }
    date {
      match => [ "timestamp" , "dd/MMM/yyyy:HH:mm:ss Z" ]
    }
    urldecode {
      all_fields => true
    }
    geoip {
      source => "clientip"
      target => "geoip"
      database => "/etc/logstash/conf.d/GeoLite2-City.mmdb"
      add_field => ["[geoip][coordinates]","%{[geoip][longitude]}"]   # add field coordinates; first value is the longitude
      add_field => ["[geoip][coordinates]","%{[geoip][latitude]}"]    # second value is the latitude, giving [lon, lat]
    }
    mutate {
      convert => [ "[geoip][coordinates]", "float"]   # convert the coordinate values to floats
    }
  }
}

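output {
  if [fields][log_topics] == "cnginx" {
    elasticsearch {
      hosts => ["172.17.32.90:15029","172.17.32.91:15029","172.17.32.92:15029"]
      index => "logstash-cnginx-%{+YYYY.MM.dd}"
      # The index name must start with "logstash-": the default index template
      # installed by this output only applies to logstash-* indices, and it is
      # what maps geoip.location as geo_point. Without it, Kibana finds no
      # geo_point field when you create the map.
    }
  }
}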
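What the filter section does to one parsed event can be sketched in Python. This is an illustration under stated assumptions, not Logstash code: `geo` is a hypothetical stand-in for the geoip lookup result, and the Joda date pattern is mapped by hand to `strptime` directives. Two details are worth seeing concretely: `%{+YYYY.MM.dd}` formats @timestamp in UTC, so with +0800 logs a request before 08:00 local time lands in the previous day's index, and the geo_point array order is [lon, lat].

```python
from datetime import datetime, timezone
from urllib.parse import unquote


def to_timestamp(httpdate: str) -> datetime:
    """date filter: Joda "dd/MMM/yyyy:HH:mm:ss Z" ~ strptime "%d/%b/%Y:%H:%M:%S %z".

    %b expects English month abbreviations (C/English locale assumed).
    The result is normalised to UTC, as @timestamp is stored.
    """
    return datetime.strptime(httpdate, "%d/%b/%Y:%H:%M:%S %z").astimezone(timezone.utc)


def index_name(ts_utc: datetime) -> str:
    """elasticsearch output: "logstash-cnginx-%{+YYYY.MM.dd}" is built from UTC @timestamp."""
    return "logstash-cnginx-" + ts_utc.strftime("%Y.%m.%d")


def coordinates(longitude, latitude) -> list:
    """The two add_field entries plus mutate/convert: a float array [lon, lat].

    Longitude first, the array order Elasticsearch expects for geo_point values.
    """
    return [float(longitude), float(latitude)]


def apply_filters(event: dict, geo: dict) -> dict:
    """Run one grok'ed event through the date, urldecode and geoip steps.

    `geo` is a hypothetical lookup result supplied by the caller instead of
    a query against GeoLite2-City.mmdb.
    """
    out = {k: unquote(v) if isinstance(v, str) else v for k, v in event.items()}  # urldecode all_fields
    out["@timestamp"] = to_timestamp(event["timestamp"])
    out["geoip"] = dict(geo, coordinates=coordinates(geo["longitude"], geo["latitude"]))
    return out


if __name__ == "__main__":
    event = {"clientip": "203.0.113.7",
             "timestamp": "10/May/2018:07:30:00 +0800",
             "param": "q=nginx%20geoip"}
    geo = {"longitude": 116.3883, "latitude": 39.9289}  # made-up lookup result
    e = apply_filters(event, geo)
    print(e["param"], e["geoip"]["coordinates"], index_name(e["@timestamp"]))
```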

Start Kafka, Filebeat, Logstash, Elasticsearch and Kibana.

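Log in to Kibana and create an index pattern under Management (for example logstash-cnginx-*, matching the index name set in the Logstash output).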
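Whether a daily index gets the geo_point mapping depends only on its name matching the template's wildcard. A tiny Python check of that rule, assuming the `logstash-*` pattern used by the default template of the Logstash elasticsearch output in 6.x, and using `logstash-cnginx-*` as the (hypothetical) index pattern entered in Kibana:

```python
from fnmatch import fnmatchcase

# Wildcard of the default Logstash index template (maps geoip.location as geo_point)
TEMPLATE_PATTERN = "logstash-*"
# Hypothetical Kibana index pattern for this pipeline
KIBANA_PATTERN = "logstash-cnginx-*"


def gets_geo_mapping(index: str) -> bool:
    """True if a newly created index would pick up the default Logstash template."""
    return fnmatchcase(index, TEMPLATE_PATTERN)


if __name__ == "__main__":
    for name in ["logstash-cnginx-2018.05.10", "cnginx-2018.05.10"]:
        print(name, gets_geo_mapping(name), fnmatchcase(name, KIBANA_PATTERN))
```

Templates are applied only when an index is created, so renaming the output index affects the next daily index, not ones that already exist.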