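MTCNN + face_recognition Real-Time Face Recognition (Part 2): Training on Your Own Data / Multi-Process Real-Time Video Face Recognition

Introduction

Building on the previous post, this article trains the face-feature model used for recognition and then applies it to recognizing faces in still images and in real-time video. The article is code-heavy. If you find mistakes or anything that could be optimized, please point it out in the comments. Thanks!

Without further ado, here's the code! Seeing it run and produce real results is worth a thousand words!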

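Contents

1. Preparing the face recognition dataset

2. Face recognition in photos and generating the gallery face-feature model

3. Real-time face recognition on a video stream

4. Project summary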

I. Preparing the face recognition dataset

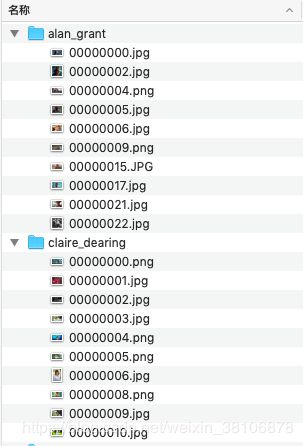

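The dataset uses the folder structure shown in the figure above:

Each folder is named after a person (name or ID) and contains a few photos of that person (about 3 to 5 is enough). The figure shows only two people's folders to illustrate the structure; in practice you can add as many people as you need.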
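Before training, it can help to check that every person folder actually contains enough images. The sketch below is a hypothetical helper (`check_dataset` and its `min_images` threshold are my own, not part of the project). It only assumes the layout described above, one sub-folder per person, and treats `.jpg`, `.jpeg` and `.png` files as images.

```python
import os

IMAGE_EXTS = ('.jpg', '.jpeg', '.png')


def check_dataset(train_dir, min_images=3):
    """Return {person: image_count} and warn about folders with too few images.

    Hypothetical helper: assumes one sub-folder per person, named by name or ID.
    """
    counts = {}
    for person in sorted(os.listdir(train_dir)):
        person_dir = os.path.join(train_dir, person)
        if not os.path.isdir(person_dir):
            continue  # ignore stray files at the top level
        images = [f for f in os.listdir(person_dir) if f.lower().endswith(IMAGE_EXTS)]
        counts[person] = len(images)
        if len(images) < min_images:
            print("warning: {} has only {} image(s)".format(person, len(images)))
    return counts
```

For example, `check_dataset('../faceid/main/LFW')` prints a warning for each person with fewer than three photos and returns the per-person counts.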

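II. Extracting face features to build the feature library

1. Building the feature library for recognizing faces in photos

Here is the code. The extracted face features are 128-dimensional.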

train_pic_knn_classifer.py

```python
# coding: utf-8
# author: hxy
'''
Face localization: MTCNN
Face feature model: KNN
Uses the face_recognition module (install with pip)
'''
import math
import os
import pickle
import time

import face_recognition
from face_recognition.face_recognition_cli import image_files_in_folder
from sklearn import neighbors

# MTCNN modules and models
from training.mtcnn_model import P_Net, R_Net, O_Net
from tools.loader import TestLoader
from detection.MtcnnDetector import MtcnnDetector
from detection.detector import Detector
from detection.fcn_detector import FcnDetector


def net(stage):
    """Build the MTCNN cascade, loading the highest-epoch checkpoint of each stage."""
    detectors = [None, None, None]
    if stage in ['pnet', 'rnet', 'onet']:
        modelPath = '../model/pnet/'
        # checkpoints are named 'pnet-<epoch>.index'; b[5:-6] extracts <epoch>
        a = [b[5:-6] for b in os.listdir(modelPath) if b.startswith('pnet-') and b.endswith('.index')]
        maxEpoch = max(map(int, a))  # auto-match the model with the largest epoch
        modelPath = os.path.join(modelPath, "pnet-%d" % maxEpoch)
        print("Use PNet model: %s" % modelPath)
        detectors[0] = FcnDetector(P_Net, modelPath)
    if stage in ['rnet', 'onet']:
        modelPath = '../model/rnet/'
        a = [b[5:-6] for b in os.listdir(modelPath) if b.startswith('rnet-') and b.endswith('.index')]
        maxEpoch = max(map(int, a))
        modelPath = os.path.join(modelPath, "rnet-%d" % maxEpoch)
        print("Use RNet model: %s" % modelPath)
        detectors[1] = Detector(R_Net, 24, 1, modelPath)
    if stage in ['onet']:
        modelPath = '../model/onet/'
        a = [b[5:-6] for b in os.listdir(modelPath) if b.startswith('onet-') and b.endswith('.index')]
        maxEpoch = max(map(int, a))
        modelPath = os.path.join(modelPath, "onet-%d" % maxEpoch)
        print("Use ONet model: %s" % modelPath)
        detectors[2] = Detector(O_Net, 48, 1, modelPath)
    return detectors


def train(mtcnnDetector, train_dir, model_save_path=None, n_neighbors=None, knn_algo='ball_tree', verbose=False):
    X = []  # 128-d face encodings
    y = []  # labels: the person's folder name
    # Loop through each person in the training set
    for class_dir in os.listdir(train_dir):
        if not os.path.isdir(os.path.join(train_dir, class_dir)):
            continue
        # Loop through each training image for the current person
        for img_path in image_files_in_folder(os.path.join(train_dir, class_dir)):
            image = face_recognition.load_image_file(img_path)  # RGB array
            testDatas = TestLoader([img_path])
            # Note the box handling: MTCNN gives (x1, y1, x2, y2, ...), whereas
            # face_recognition expects (top, right, bottom, left); the box is also padded
            allBoxes, _ = mtcnnDetector.detect_face(testDatas)
            face_location = []
            for box in allBoxes[0]:
                x1, y1, x2, y2 = (int(v) for v in box[:4])
                face_location.append((y1 - 10, x2 + 12, y2 + 10, x1 - 12))
            print(face_location)
            if len(face_location) != 1:
                # Skip images that contain no face or more than one face
                if verbose:
                    print("Image {} not suitable for training: {}".format(
                        img_path, "Didn't find a face" if len(face_location) < 1 else "Found more than one face"))
            else:
                # Add the face encoding for the current image to the training set
                X.append(face_recognition.face_encodings(image, known_face_locations=face_location, num_jitters=6)[0])
                y.append(class_dir)

    # Determine how many neighbors to use for weighting in the KNN classifier
    if n_neighbors is None:
        n_neighbors = int(round(math.sqrt(len(X))))
        if verbose:
            print("Chose n_neighbors automatically:", n_neighbors)

    # Create and train the KNN classifier (closer neighbors get larger weights)
    knn_clf = neighbors.KNeighborsClassifier(n_neighbors=n_neighbors, algorithm=knn_algo, weights='distance')
    knn_clf.fit(X, y)

    # Save the trained KNN classifier
    if model_save_path is not None:
        with open(model_save_path, 'wb') as f:
            pickle.dump(knn_clf, f)
    return knn_clf


if __name__ == "__main__":
    # Select the GPU before net() creates any TensorFlow session
    os.environ["CUDA_VISIBLE_DEVICES"] = '0'
    start = time.time()
    print("Start Training classifier...")
    detectors = net('onet')
    mtcnnDetector = MtcnnDetector(detectors=detectors, min_face_size=40, threshold=[0.9, 0.6, 0.7])
    classifier = train(mtcnnDetector, "../faceid/main/LFW",
                       model_save_path="LFW_test_classifier_model.clf", n_neighbors=2)
    end = time.time()
    print("Training complete! Time cost: {} s".format(int(end - start)))
```

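Here is a rough outline of how the code works:

MTCNN locates each face, and the face location is passed to face_recognition's `face_encodings` function, which extracts a 128-dimensional feature vector for that face. A KNN classifier is then trained on these vectors and saved as a `.clf` file.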
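The post stops at saving the `.clf` file. One common way to use such a model, and the approach taken by the KNN example that ships with face_recognition, is to look up the distance to the nearest training encoding and report the face as unknown when that distance exceeds a threshold; with the saved sklearn model this means calling `knn_clf.kneighbors(encodings, n_neighbors=1)` before `knn_clf.predict(encodings)`. The sketch below reproduces that decision rule in plain Python so it runs without sklearn. The function name `predict_with_threshold` and the 0.6 threshold are my assumptions, not the author's code; the inverse-distance vote mirrors `weights='distance'`.

```python
import math
from collections import defaultdict


def predict_with_threshold(X, y, query, n_neighbors=2, distance_threshold=0.6):
    """Distance-weighted KNN vote that rejects faces far from every known face.

    X: list of encodings, y: list of labels, query: one encoding.
    Returns a label or 'Stranger'. The threshold is an assumed value, not tuned.
    """
    dists = sorted((math.dist(x, query), label) for x, label in zip(X, y))
    nearest = dists[:n_neighbors]
    if nearest[0][0] > distance_threshold:
        return 'Stranger'  # even the closest known face is too far away
    votes = defaultdict(float)
    exact = [label for d, label in nearest if d == 0]
    if exact:
        for label in exact:  # zero distance: exact matches take all the weight
            votes[label] += 1.0
    else:
        for d, label in nearest:
            votes[label] += 1.0 / d  # closer neighbors count more
    return max(votes, key=votes.get)
```

Without the threshold, KNN always returns one of the known names, so a stranger would be labeled as whoever happens to be closest.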

2. Building the feature library for recognizing faces in video

encoding_faces_mtcnn.py

```python
# -*- coding: utf-8 -*-
# Author: hxy
'''
Generates a xxx.pickle file used for face recognition in video
'''
# import the necessary packages
import os
import pickle

import cv2
import face_recognition
from imutils import paths

# MTCNN modules and models
from training.mtcnn_model import P_Net, R_Net, O_Net
from tools.loader import TestLoader
from detection.MtcnnDetector import MtcnnDetector
from detection.detector import Detector
from detection.fcn_detector import FcnDetector


def net(stage):
    """Build the MTCNN cascade, loading the highest-epoch checkpoint of each stage."""
    detectors = [None, None, None]
    if stage in ['pnet', 'rnet', 'onet']:
        modelPath = '../model/pnet/'
        a = [b[5:-6] for b in os.listdir(modelPath) if b.startswith('pnet-') and b.endswith('.index')]
        maxEpoch = max(map(int, a))  # auto-match the model with the largest epoch
        modelPath = os.path.join(modelPath, "pnet-%d" % maxEpoch)
        print("Use PNet model: %s" % modelPath)
        detectors[0] = FcnDetector(P_Net, modelPath)
    if stage in ['rnet', 'onet']:
        modelPath = '../model/rnet/'
        a = [b[5:-6] for b in os.listdir(modelPath) if b.startswith('rnet-') and b.endswith('.index')]
        maxEpoch = max(map(int, a))
        modelPath = os.path.join(modelPath, "rnet-%d" % maxEpoch)
        print("Use RNet model: %s" % modelPath)
        detectors[1] = Detector(R_Net, 24, 1, modelPath)
    if stage in ['onet']:
        modelPath = '../model/onet/'
        a = [b[5:-6] for b in os.listdir(modelPath) if b.startswith('onet-') and b.endswith('.index')]
        maxEpoch = max(map(int, a))
        modelPath = os.path.join(modelPath, "onet-%d" % maxEpoch)
        print("Use ONet model: %s" % modelPath)
        detectors[2] = Detector(O_Net, 48, 1, modelPath)
    return detectors


def train(mtcnnDetector):
    print("[INFO] quantifying faces...")
    imagePaths = list(paths.list_images('../faceid/datasets/train'))
    knownEncodings = []
    knownNames = []
    # loop over the image paths
    for (i, imagePath) in enumerate(imagePaths):
        print("[INFO] processing image {}/{}".format(i + 1, len(imagePaths)))
        # extract the person name from the image path (its parent folder)
        name = imagePath.split(os.path.sep)[-2]
        # load the input image and convert it from BGR (OpenCV ordering)
        # to RGB, the ordering dlib / face_recognition expect
        image = cv2.imread(imagePath)
        print(imagePath)
        rgb = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
        testDatas = TestLoader([imagePath])
        # Note the box handling: MTCNN (x1, y1, x2, y2) -> padded (top, right, bottom, left)
        allBoxes, _ = mtcnnDetector.detect_face(testDatas)
        face_location = []
        for box in allBoxes[0]:
            x1, y1, x2, y2 = (int(v) for v in box[:4])
            face_location.append((y1 - 10, x2 + 12, y2 + 10, x1 - 12))
        # compute the facial embedding for each detected face
        encodings = face_recognition.face_encodings(rgb, face_location, num_jitters=6)
        for encoding in encodings:
            knownEncodings.append(encoding)
            knownNames.append(name)

    # dump the facial encodings + names to disk
    print("[INFO] serializing encodings...")
    data = {"encodings": knownEncodings, "names": knownNames}
    with open('mtcnn_3_video_classifier.pickle', "wb") as f:
        f.write(pickle.dumps(data))


if __name__ == '__main__':
    # Select the GPU before net() creates any TensorFlow session
    os.environ["CUDA_VISIBLE_DEVICES"] = '0'
    detectors = net('onet')
    mtcnnDetector = MtcnnDetector(detectors=detectors, min_face_size=24, threshold=[0.9, 0.6, 0.7])
    train(mtcnnDetector)
```

The logic is the same as in the previous script and should be fairly easy to follow.
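The step both scripts share, and the one the code comments warn about, is converting MTCNN's `(x1, y1, x2, y2)` box into the `(top, right, bottom, left)` tuple that `face_recognition.face_encodings` expects, with 10 px of vertical and 12 px of horizontal padding. Near the image border that padding can produce negative or out-of-range coordinates. The helper below is a sketch of the same conversion with clamping added; the name `mtcnn_box_to_css` and the clamping itself are my additions, not part of the original scripts.

```python
def mtcnn_box_to_css(box, img_w, img_h, pad_x=12, pad_y=10):
    """Convert an MTCNN box (x1, y1, x2, y2, ...) into face_recognition's
    (top, right, bottom, left) order, padding it and clamping it to the image."""
    x1, y1, x2, y2 = (int(v) for v in box[:4])
    top = max(0, y1 - pad_y)
    right = min(img_w, x2 + pad_x)
    bottom = min(img_h, y2 + pad_y)
    left = max(0, x1 - pad_x)
    return (top, right, bottom, left)
```

In the training loop this would replace the inline tuple, e.g. `face_location.append(mtcnn_box_to_css(box, image.shape[1], image.shape[0]))`, where `image.shape` is `(height, width, channels)`.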

Note: when running the two scripts above, pay attention to the paths from which each file and model is loaded.
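The `net()` function finds the newest checkpoint by slicing file names (`b[5:-6]`) and uses paths such as `'../model/pnet/'`, which are resolved against the current working directory, so the scripts only work when launched from the right folder. A slightly more robust variant is sketched below. `latest_checkpoint` and `model_dir` are hypothetical helpers; they assume the `<stage>-<epoch>.index` naming the original code relies on and a `model/` folder one level above the script.

```python
import os
import re


def latest_checkpoint(model_path, prefix):
    """Return '<model_path>/<prefix>-<N>' for the largest N among '<prefix>-<N>.index' files."""
    pattern = re.compile(r'^{}-(\d+)\.index$'.format(re.escape(prefix)))
    epochs = [int(m.group(1)) for m in map(pattern.match, os.listdir(model_path)) if m]
    if not epochs:
        raise FileNotFoundError('no {}-<epoch>.index file in {}'.format(prefix, model_path))
    return os.path.join(model_path, '{}-{}'.format(prefix, max(epochs)))


def model_dir(stage, script_file):
    """'../model/<stage>' resolved relative to the script, not the working directory."""
    return os.path.join(os.path.dirname(os.path.abspath(script_file)), '..', 'model', stage)
```

Inside a script, `latest_checkpoint(model_dir('pnet', __file__), 'pnet')` would give the same path as the original P-Net lookup, regardless of where the script is launched from.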

III. Real-time face recognition on a video stream

Here is the code straight away; it should be fairly self-explanatory.

mtcnn_recognize_video.py

```python
# -*- coding: utf-8 -*-
# author: lxz-hxy
'''
Real-time face recognition with multiple processes:
MTCNN face localization + face_recognition
'''
import os
import gc
import time
import pickle
import logging
import multiprocessing as mp
from multiprocessing import Process, Manager

import numpy as np
import cv2
import face_recognition
import tensorflow as tf

# MTCNN modules
from training.mtcnn_model import P_Net, R_Net, O_Net
from tools.loader import TestLoader
from detection.MtcnnDetector import MtcnnDetector
from detection.detector import Detector
from detection.fcn_detector import FcnDetector


def logset():
    logging.basicConfig(level=logging.INFO, format='%(asctime)s -%(filename)s:%(lineno)d - %(levelname)s - %(message)s')


def net(stage):
    """Build the MTCNN cascade, loading the highest-epoch checkpoint of each stage."""
    detectors = [None, None, None]
    if stage in ['pnet', 'rnet', 'onet']:
        modelPath = '/home/lxz/project/faceid/main/tmp/model/pnet/'
        a = [b[5:-6] for b in os.listdir(modelPath) if b.startswith('pnet-') and b.endswith('.index')]
        maxEpoch = max(map(int, a))  # auto-match the model with the largest epoch
        modelPath = os.path.join(modelPath, "pnet-%d" % maxEpoch)
        logging.info("Use PNet model: %s" % modelPath)
        detectors[0] = FcnDetector(P_Net, modelPath)
    if stage in ['rnet', 'onet']:
        modelPath = '/home/lxz/project/faceid/main/tmp/model/rnet/'
        a = [b[5:-6] for b in os.listdir(modelPath) if b.startswith('rnet-') and b.endswith('.index')]
        maxEpoch = max(map(int, a))
        modelPath = os.path.join(modelPath, "rnet-%d" % maxEpoch)
        logging.info("Use RNet model: %s" % modelPath)
        detectors[1] = Detector(R_Net, 24, 1, modelPath)
    if stage in ['onet']:
        modelPath = '/home/lxz/project/faceid/main/tmp/model/onet/'
        a = [b[5:-6] for b in os.listdir(modelPath) if b.startswith('onet-') and b.endswith('.index')]
        maxEpoch = max(map(int, a))
        modelPath = os.path.join(modelPath, "onet-%d" % maxEpoch)
        logging.info("Use ONet model: %s" % modelPath)
        detectors[2] = Detector(O_Net, 48, 1, modelPath)
    return detectors


def receive(stack):
    """Producer: read frames from the camera and append them to the shared list."""
    logging.info('[INFO]Receive the video..........')
    top = 500  # buffer length at which old frames are discarded
    # rtsp = 'rtsp://admin:*****************'
    cap = cv2.VideoCapture(0)  # 0 = default webcam; pass an RTSP URL for an IP camera
    try:
        while True:
            ret, frame = cap.read()
            # frame = cv2.resize(frame, (int(frame.shape[1]/2), int(frame.shape[0]/2)))
            if ret:
                stack.append(frame)
                if len(stack) >= top:
                    logging.info("stack full, begin to collect it......")
                    del stack[:450]  # keep only the 50 newest frames
                    gc.collect()
    finally:
        cap.release()


def recognize(stack):
    """Consumer: take the newest frame, detect faces with MTCNN, encode and match them."""
    logging.info("[INFO]:Starting video stream...")
    # gallery encodings + names (the pickle produced by the encoding script)
    with open('/home/lxz/project/faceid/alignment.pickle', "rb") as f:
        data = pickle.loads(f.read())
    detectors = net('onet')
    mtcnnDetector = MtcnnDetector(detectors=detectors, min_face_size=60, threshold=[0.9, 0.6, 0.7])
    logging.info('MTCNN/KNN model loaded successfully!')
    while True:
        if len(stack) > 20:
            boxes = []
            frame = stack.pop()  # newest frame
            image = np.array(frame)
            allBoxes, _ = mtcnnDetector.detect_video(image)
            for box in allBoxes:
                x_1, y_1, x_2, y_2 = (int(v) for v in box[:4])
                # padded (top, right, bottom, left), same as during training
                boxes.append((y_1 - 10, x_2 + 12, y_2 + 10, x_1 - 12))
            logging.debug(boxes)
            start = time.time()
            # num_jitters: how many times each face is re-sampled; larger values
            # are somewhat more accurate but slower
            rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)  # face_recognition expects RGB
            encodings = face_recognition.face_encodings(rgb, boxes, num_jitters=6)
            end = time.time()
            logging.info('[INFO]:Encoding faces took {:.1f} ms'.format((end - start) * 1000))
            names = []
            for encoding in encodings:
                # max distance for two faces to count as a match (library default is 0.6; lower is stricter)
                matches = face_recognition.compare_faces(data['encodings'], encoding, tolerance=0.35)
                name = 'Stranger'
                if True in matches:
                    matchesidx = [i for (i, b) in enumerate(matches) if b]
                    counts = {}
                    for i in matchesidx:
                        name = data['names'][i]
                        counts[name] = counts.get(name, 0) + 1
                    name = max(counts, key=counts.get)  # majority vote over matches
                logging.debug(name)
                names.append(name)
            # Draw detection boxes + recognition results (colors are BGR)
            for ((top, right, bottom, left), name) in zip(boxes, names):
                cv2.rectangle(frame, (left, top), (right, bottom), (0, 0, 255), 2)
                if name == 'Stranger':
                    cv2.putText(frame, name, (left, top - 5), cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 255), 2)
                else:
                    print(name)
                    cv2.putText(frame, name, (left, top - 5), cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 255, 0), 2)
            cv2.imshow('Recognize-no-alignment', frame)
            if cv2.waitKey(1) & 0xFF == ord('q'):
                break
    cv2.destroyAllWindows()


if __name__ == '__main__':
    logset()
    # Set before any CUDA context exists; child processes inherit the environment
    os.environ["CUDA_VISIBLE_DEVICES"] = '1'
    # TF1 session with on-demand GPU memory (this only affects the parent process)
    config = tf.ConfigProto()
    config.gpu_options.allow_growth = True
    session = tf.Session(config=config)
    # 'spawn' starts each child in a fresh interpreter instead of forking
    mp.set_start_method('spawn')
    p = Manager().list()  # frame buffer shared between the two processes
    p1 = Process(target=receive, args=(p,))
    p2 = Process(target=recognize, args=(p,))
    p1.start()
    p2.start()
    p2.join()       # recognize() returns when 'q' is pressed
    p1.terminate()  # then stop the camera reader, which loops forever
```
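The matching step inside `recognize()` deserves a closer look. `face_recognition.compare_faces` marks a gallery encoding as a match when its Euclidean distance to the query encoding is at most `tolerance`; the script then takes a majority vote over the names of all matching gallery encodings. The same rule in plain Python is sketched below (the function name `match_name` is hypothetical). With `tolerance=0.35`, much stricter than the library default of 0.6, false matches become rarer but more known faces may come out as `'Stranger'`.

```python
import math
from collections import Counter


def match_name(known_encodings, known_names, encoding, tolerance=0.35):
    """Majority vote among gallery encodings within `tolerance` (Euclidean) of `encoding`."""
    matches = [math.dist(k, encoding) <= tolerance for k in known_encodings]
    votes = Counter(name for name, ok in zip(known_names, matches) if ok)
    if not votes:
        return 'Stranger'  # nothing in the gallery is close enough
    return votes.most_common(1)[0][0]
```

Because every gallery image of a person can vote, people with more photos in the dataset get a slight advantage in close calls.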

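Note:

**I wrote this code for quick testing; the logic is the same as for recognizing faces in photos. The difference is that here we process a video stream (a laptop webcam or an RTSP stream): OpenCV splits the stream into individual frames, which are then fed to the algorithm. Reading frames and recognizing faces run in two separate processes that share a frame buffer.**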
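The two processes form a simple producer/consumer pair: `receive()` keeps appending frames to a shared list and trims it once it grows too long, while `recognize()` always pops the newest frame, so recognition works on the most recent image instead of falling behind the live stream. The buffer policy can be factored out as below. `push_frame` and `take_latest` are hypothetical names; with the defaults they reproduce the original `top = 500` / `del stack[:450]` behavior, and they work on a plain list as well as on a `Manager().list()` proxy.

```python
def push_frame(buffer, frame, max_len=500, keep=50):
    """Append a frame; once the buffer reaches max_len, drop all but the newest `keep`."""
    buffer.append(frame)
    if len(buffer) >= max_len:
        del buffer[:len(buffer) - keep]  # oldest frames are at the front


def take_latest(buffer, min_len=1):
    """Pop the newest frame, or return None if fewer than min_len frames are buffered."""
    if len(buffer) < min_len:
        return None
    return buffer.pop()
```

`recognize()` would call `take_latest(stack, min_len=21)` to match its `len(stack) > 20` check. Dropping stale frames trades completeness for latency, which is usually the right choice for a live display.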

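IV. Project summary

1. This small face recognition project is a demo I built when I was first learning about face recognition, and it helped me gain some understanding of the field. These two posts do not explain the face detection and recognition algorithms themselves: I have not studied them deeply enough yet and do not want to mislead anyone.

2. All the code in this post will be uploaded to my GitHub. Given my limited experience, parts of the code draw on other resources.

3. Several parameters in the code (for example `min_face_size`, `num_jitters`, the matching `tolerance` and the KNN `n_neighbors`) can be tuned to improve recognition accuracy; feel free to experiment with them. Some face alignment code is not shown here and will be uploaded to GitHub later.

4. I finally finished the face recognition posts at the tail end of the holiday, haha!

5. If this post helped you, a like is appreciated! If you find shortcomings, please point them out. Let's learn and improve together!

All code for this project on GitHub: https://github.com/YingXiuHe/MTCNN-for-Face-Recognition

Stars are welcome, haha~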