Installing and Deploying an ELK Analytics Cluster to Collect and Display System Logs

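A large system is usually deployed as a distributed architecture, with different service modules running on different servers. When a problem occurs, you usually have to use the key information it exposes to track down the specific server and service module. A centralized logging system makes locating problems much more efficient.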

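A complete centralized logging system needs the following capabilities:

- Collection: gather log data from many kinds of sources

- Transport: deliver log data reliably to the central system

- Storage: persist the log data

- Analysis: support analysis through a UI

- Alerting: provide error reporting and monitoring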

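What complete log data is used for

- Information lookup: search the logs to locate the relevant bug and find a fix.

- Service diagnosis: aggregate and analyze the logs to understand server load and service health, and to find slow requests worth optimizing.

- Data analysis: if the logs are structured, they can be analyzed further to compute and aggregate meaningful information, for example finding the top 10 products users are interested in based on the product IDs in requests.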

ELK download links

ELK official site: https://www.elastic.co/

Elasticsearch download: https://www.elastic.co/downloads/elasticsearch

Elasticsearch installation guide: https://www.elastic.co/guide/en/elasticsearch/reference/6.4/install-elasticsearch.html

Kibana download: https://www.elastic.co/downloads/kibana

Kibana installation guide: https://www.elastic.co/guide/en/kibana/6.4/install.html

Logstash download: https://www.elastic.co/downloads/logstash

Logstash installation guide: https://www.elastic.co/guide/en/logstash/6.4/installing-logstash.html

Beats download: https://www.elastic.co/downloads/beats

Beats installation guide: https://www.elastic.co/guide/en/beats/libbeat/6.4/getting-started.html

ELK架构

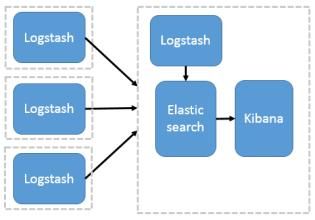

架构一:

此架构由Logstash分布于各个节点上搜集相关日志、数据,并经过分析、过滤后发送给远端服务器上的Elasticsearch进行存储。Elasticsearch将数据以分片的形式压缩存储并提供多种API供用户查询,操作。用户亦可以更直观的通过配置Kibana Web方便的对日志查询,并根据数据生成报表。

架构二:

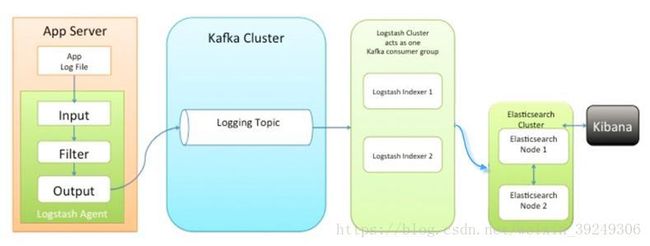

各个节点上的Logstash先将数据或日志传递给Kafka(或者Redis),并将队列中消息或数据间接传递给Logstash,Logstash过滤、分析后将数据传递给Elasticsearch远端服务器进行存储。最后由Kibana将日志和数据呈现给用户。因为引入了Kafka(或者Redis),所以即使远端Logstash server因故障停止运行,数据将会先被存储下来,从而避免数据丢失。
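
The buffering idea behind architecture 2 can be illustrated with a small Python sketch. This is only a toy model, not how Kafka or Redis work internally: the `Broker` class, the `drain` function, and the `indexed` list (standing in for Elasticsearch) are hypothetical names introduced for illustration.

```python
from collections import deque


class Broker:
    """Stand-in for Kafka/Redis: an in-memory FIFO buffer (illustrative only)."""

    def __init__(self):
        self._queue = deque()

    def publish(self, event):
        # Shippers append events regardless of whether anyone is consuming.
        self._queue.append(event)

    def consume(self):
        # Returns None when the buffer is empty.
        return self._queue.popleft() if self._queue else None

    def __len__(self):
        return len(self._queue)


def drain(broker, indexed):
    """Indexer side: move every buffered event into the 'indexed' store."""
    while True:
        event = broker.consume()
        if event is None:
            return indexed
        indexed.append(event)


broker = Broker()
indexed = []  # stand-in for Elasticsearch

# Shippers keep publishing while the central indexer is down.
for line in ["log line 1", "log line 2", "log line 3"]:
    broker.publish(line)
buffered_while_down = len(broker)

# The indexer comes back and catches up on everything that was buffered.
drain(broker, indexed)
print(buffered_while_down, indexed)
```

Nothing is lost while the consumer is offline because the producers only ever talk to the queue; the consumer simply resumes from the backlog.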

Architecture 3:

Beats replaces Logstash as the log shipper on the nodes. It is more flexible, uses fewer resources, and scales better.

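Introduction to ELK

ELK is an acronym for three open-source projects: Elasticsearch, Logstash, and Kibana. Logstash is an ETL tool that collects log data from each machine, transforms and processes it, and writes it to Elasticsearch for storage. Elasticsearch is a distributed search and analytics engine that stores the data and serves near real-time queries. Kibana is a data visualization service that queries Elasticsearch based on user actions and presents the results as charts.

Elasticsearch

An open-source distributed search engine built on Lucene that provides three main functions: collecting, analyzing, and storing data.

Search engine library: Lucene

A search application generally consists of two parts. The first is the indexing chain, which acquires the raw content, builds documents, stores the data, and indexes the documents. The second is the search component, which accepts user requests, builds a programmable query through the user interface, executes the query, and returns the results to the user.

The well-known open-source library Lucene serves as the indexing component and provides the core indexing and search modules of a search application.

Lucene is a high-performance, scalable information retrieval (IR) library. It is a mature, free, open-source search library written in Java and released under the Apache License.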

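How Elasticsearch and Lucene work, logically

- Acquire content

Lucene does not provide any component for crawling content. Other components collect the data to be searched, and the collected data is then turned into small units called documents.

- Build documents

The raw data is converted into small units (documents) that the search engine can work with. A document can be given a boost while it is built; the larger the boost, the more likely the document is to be returned. The boost can be set before the document is built or afterwards.

- Analyze documents

The search engine cannot search raw text directly. The text must first be broken into independent atomic elements, a process called document analysis.

- Index documents

During indexing, documents are added to the index so that they can be queried. Queries rely on the large set of APIs that Lucene provides. The main classes are:

IndexSearcher: the entry point for searching an index

Query and its subclasses

TopDocs: holds the top-scoring hits of a query (the top 10 in this description)

ScoreDoc: a single hit, identified by its document ID and score

- User search interface

The UI (user interface) is a major component of a search engine. Users interact with the engine through it; it takes the user's search request and converts it into a suitable query format for the engine.

- Build the query

A search request is usually submitted from the browser to the search engine server as an HTML form or an Ajax request. A component such as a query parser therefore has to convert the request into the query object format that the search engine uses.

- Run the query

Once the query is built, the index is searched and the documents that match the query are returned, sorted as the request specifies.

- Present the results

The sorted documents are presented to the user in an intuitive and economical way.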
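
The analyze, index, and search steps above can be sketched with a toy inverted index in Python. This is a deliberately simplified illustration and not Lucene's implementation: the `analyze`, `build_index`, and `search` functions and the sample documents are assumptions made for this example, and there is no scoring or ranking.

```python
import re
from collections import defaultdict


def analyze(text):
    """Split text into lowercase terms (a very crude analyzer)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs):
    """Map each term to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in analyze(text):
            index[term].add(doc_id)
    return index


def search(index, query):
    """Return the ids of documents that contain every query term, sorted."""
    terms = analyze(query)
    if not terms:
        return []
    hits = set.intersection(*(index.get(term, set()) for term in terms))
    return sorted(hits)


docs = {
    1: "Accepted password for root from 192.168.50.1",
    2: "New session 3 of user root",
    3: "Started Session 3 of user root",
}
index = build_index(docs)
print(search(index, "session root"))
```

The key point is that the query never scans the raw text: it only looks up terms in the structure that was built at indexing time.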

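Basic concepts

- Index

An index is a collection, or container, of documents with similar characteristics. It is identified by its name, and documents are created, read, updated, and deleted through that name. It is loosely comparable to a database in a relational system.

- Type

A logical partition inside an index; one or more types are defined according to the user's needs. It is loosely comparable to a table in a relational database.

- Document

A container or collection of one or more fields, represented as JSON.

- Mapping

Raw content must be analyzed before it is stored as a document, and the mapping defines how that analysis is done, for example how text is tokenized and which words are filtered out.

- Cluster

A set of one or more nodes that together store all the data. A cluster is identified by its cluster name, which nodes also use to join it. It provides redundancy, so the service as a whole stays available when one or several nodes fail.

- Node

A single running instance of Elasticsearch. It can store data and take part in the cluster's indexing and search operations.

- Shards and replicas

Sharding splits an index into several underlying physical indices, each of which can store data; each of these physical indices is called a shard.

Each shard is internally a fully functional, independent index. In the 6.x releases used here, the default is 5 shards per index.

There are two kinds of shard: primary and replica.

Primary shards store the documents. Every new index is created with 5 primary shards by default. The number can be set through configuration before the index is created, but once the index exists, the number of primary shards cannot be changed.

A replica shard is a copy of a primary shard. Replicas provide data redundancy and improve search performance.

By default each primary shard has one replica, but more can be configured, and the number can be changed dynamically. Elasticsearch adjusts the replica count on its own only when `index.auto_expand_replicas` is configured for the index.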
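
To make the shard arithmetic concrete, here is a small Python sketch that builds the JSON bodies one would typically send when creating an index (`PUT /<index>`) and when changing only the replica count later (`PUT /<index>/_settings`). The helper names are hypothetical and nothing is sent to a cluster; the sketch only shows which setting is fixed at creation time and how the total shard count follows from the two numbers.

```python
import json


def index_settings(primaries=5, replicas=1):
    """Body for creating an index; the primary count cannot be changed afterwards."""
    return {"settings": {"number_of_shards": primaries,
                         "number_of_replicas": replicas}}


def replica_update(replicas):
    """Body for changing only the replica count of an existing index."""
    return {"index": {"number_of_replicas": replicas}}


def total_shards(primaries, replicas):
    """Every primary has `replicas` copies, so total = primaries * (1 + replicas)."""
    return primaries * (1 + replicas)


print(json.dumps(index_settings(), indent=2))
print(json.dumps(replica_update(2)))
print(total_shards(5, 1))
```

With the defaults described above (5 primaries, 1 replica each), an index occupies 10 shards in total across the cluster.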

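System plugins

Data queries

Logstash

Logstash is mainly a tool for collecting, parsing, and filtering logs, and it supports a large number of ways to ingest data. It is typically deployed in a client/server arrangement: the client is installed on each host whose logs need to be collected, and the server filters and modifies the logs received from the nodes and then forwards them to Elasticsearch.

How Logstash works

Logstash collects, processes, and outputs logs through a pipeline.

Event processing in Logstash has three stages: input -> filter -> output. Logstash is a tool that receives, processes, and forwards logs. It supports system logs, web server logs, error logs, and application logs; in short, any kind of log that can be emitted.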

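Input: brings data into Logstash

- file: reads from files on the file system

- syslog: listens for syslog messages on port 514

- redis: reads from a Redis service

- beats: reads events sent by Filebeat

Filter: intermediate processing that operates on the data

- grok: parses arbitrary text. Grok is the most important Logstash plugin. Its main job is to turn plain text strings into structured data, using regular expressions. It ships with more than 120 built-in patterns.

- mutate: transforms fields, for example removing, replacing, modifying, or renaming them.

- drop: discards some events so that they are not processed any further.

- clone: copies an event; fields can be added or removed in the process.

- geoip: adds geographic information (used for graphical display in Kibana).

Output: outputs are the final stage of the Logstash pipeline. An event can pass through multiple outputs, and once all outputs have run, the event has completed its life cycle.

- elasticsearch: stores data efficiently and makes it easy to query.

- file: writes event data to a file.

- graphite: sends event data to Graphite, a tool for storing and graphing metrics.

Codecs: codecs are stream-based filters that can be configured as part of an input or an output. They make it easy to split up incoming data that has already been serialized.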
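
The input -> filter -> output flow can be mimicked in a few lines of Python. This is a conceptual sketch only and not Logstash code: the stage functions are hypothetical, and the regular expression stands in for a grok pattern under the assumption that lines look like `<program>[<pid>]: <message>`.

```python
import re

# A grok-like pattern written as a plain regex with named groups.
# Assumption: the "<program>[<pid>]: <message>" layout is just an example.
LINE = re.compile(r"^(?P<program>[\w-]+)\[(?P<pid>\d+)\]: (?P<message>.*)$")


def input_stage(lines):
    """input: turn each raw line into an event dictionary."""
    for line in lines:
        yield {"raw": line}


def filter_stage(events):
    """filter: parse fields (like grok), skip unparsable lines (like drop),
    and convert a field type (like mutate)."""
    for event in events:
        match = LINE.match(event["raw"])
        if match is None:
            continue  # comparable to the drop filter
        event.update(match.groupdict())
        event["pid"] = int(event["pid"])  # comparable to a mutate conversion
        del event["raw"]
        yield event


def output_stage(events):
    """output: collect events; a real output would send them to Elasticsearch."""
    return list(events)


raw_lines = [
    "sshd[1615]: Accepted password for root",
    "this line does not match and is dropped",
]
events = output_stage(filter_stage(input_stage(raw_lines)))
print(events)
```

Because each stage is a generator, events stream through one at a time, which is roughly how a pipeline keeps memory use independent of the total log volume.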

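Kibana

Kibana provides a friendly web interface for analyzing the logs handled by Logstash and Elasticsearch. It helps you aggregate, analyze, and search important log data.

Beats includes six tools:

Packetbeat (collects network traffic data)

Topbeat (collects CPU and memory usage and similar data at the system, process, and file system level)

Filebeat (collects file data)

Winlogbeat (collects Windows event log data)

Metricbeat (collects metrics from systems and services; a lightweight way to ship system and service statistics)

Auditbeat (collects Linux audit framework data and monitors file integrity)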

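Installing and deploying ELK

Two installation methods are used: tarball installation and RPM installation.

1. Single-node deployment: tarball installation

Preparing the environment

OS: CentOS 7.0, with the firewall and SELinux disabled.

Elasticsearch is written in Java, so Java 1.8 or later must be installed on the node.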

[root@localhost ~]# wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.5.4.tar.gz

[root@localhost ~]# tar -zxf elasticsearch-6.5.4.tar.gz

[root@localhost ~]# cd elasticsearch-6.5.4

Edit the configuration file

[root@localhost ~]# vim /home/elasticsearch/elasticsearch-6.5.4/config/elasticsearch.yml

cluster.name: my-application

node.name: node-1

network.host: 192.168.50.146

http.port: 9200

[root@localhost ~]# ./bin/elasticsearch

Startup throws an exception, and a check shows that port 9200 is not listening:

OpenJDK 64-Bit Server VM warning: If the number of processors is expected to increase from one, then you should configure the number of parallel GC threads appropriately using -XX:ParallelGCThreads=N

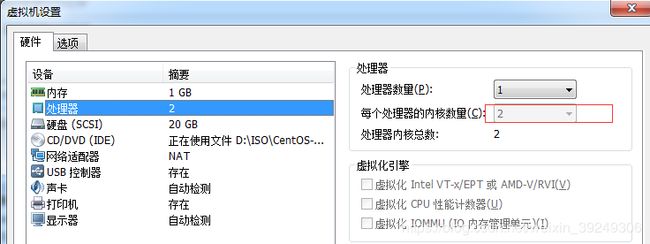

Setting the virtual machine's CPU core count to 2 makes the warning above go away.

[2018-12-26T10:40:01,203][WARN ][o.e.b.ElasticsearchUncaughtExceptionHandler] [unknown] uncaught exception in thread [main]

org.elasticsearch.bootstrap.StartupException: java.lang.RuntimeException: can not run elasticsearch as root

Elasticsearch refuses to run as root, so a separate user has to be created to start the service.

[root@localhost ~]# mv elasticsearch-6.5.4 /home/elasticsearch/

[root@localhost ~]# groupadd elasticsearch

[root@localhost ~]# useradd -g elasticsearch elasticsearch

[root@localhost ~]# id elasticsearch

uid=1000(elasticsearch) gid=1000(elasticsearch) groups=1000(elasticsearch)

The new user must own the files, otherwise startup fails:

[root@localhost elasticsearch]# chown elasticsearch:elasticsearch elasticsearch-6.5.4 -R

[root@localhost elasticsearch]# su elasticsearch

[elasticsearch@localhost ~]$ cd elasticsearch-6.5.4/

[elasticsearch@localhost elasticsearch-6.5.4]$ ./bin/elasticsearch

Starting it again reports these errors:

[1]: max number of threads [794] for user [elasticsearch] is too low, increase to at least [4096]

[2]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]

Fix for error 1:

Make sure the configuration file ends with the following lines:

[root@localhost ~]# cat /etc/security/limits.d/20-nproc.conf

# Default limit for number of user's processes to prevent

# accidental fork bombs.

# See rhbz #432903 for reasoning.

* soft nproc 4096

root soft nproc unlimited

Then extend the configuration file so that it contains:

[root@localhost ~]# cat /etc/security/limits.d/20-nproc.conf

* soft nofile 65536

* hard nofile 131072

* soft nproc 4096

* hard nproc 4096

Fix for error 2 (add the line below to /etc/sysctl.conf, then run sysctl -p as root to apply it):

[root@localhost ~]# cat /etc/sysctl.conf

vm.max_map_count=262144
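
When several bootstrap checks fail at once, it can help to pull the current and required values out of the error text. The following Python sketch does that for messages shaped like the two shown above. The regular expression and the `parse_checks` helper are assumptions based only on those two lines, so other check messages may not match.

```python
import re

# Matches lines such as:
# "[2]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]"
CHECK = re.compile(
    r"^\[\d+\]: (?P<what>.+?) \[(?P<current>\d+)\]"
    r".*increase to at least \[(?P<required>\d+)\]"
)


def parse_checks(log_text):
    """Return (description, current value, required value) for each failed check."""
    results = []
    for line in log_text.splitlines():
        match = CHECK.match(line.strip())
        if match:
            results.append(
                (match["what"], int(match["current"]), int(match["required"]))
            )
    return results


sample = """
[1]: max number of threads [794] for user [elasticsearch] is too low, increase to at least [4096]
[2]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]
"""
for what, current, required in parse_checks(sample):
    print("{}: {} -> {}".format(what, current, required))
```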

Start the service again

[root@localhost ~]# cd /home/elasticsearch/elasticsearch-6.5.4/bin/

[root@localhost bin]# su elasticsearch

[elasticsearch@localhost bin]$ ./elasticsearch

[root@localhost ~]# curl http://192.168.50.146:9200

{

"name" : "node-1",

"cluster_name" : "my-application",

"cluster_uuid" : "hka16viUTAKyk2zKJeC5fg",

"version" : {

"number" : "6.5.4",

"build_flavor" : "default",

"build_type" : "tar",

"build_hash" : "d2ef93d",

"build_date" : "2018-12-17T21:17:40.758843Z",

"build_snapshot" : false,

"lucene_version" : "7.5.0",

"minimum_wire_compatibility_version" : "5.6.0",

"minimum_index_compatibility_version" : "5.0.0"

},

"tagline" : "You Know, for Search"

}

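For these exceptions and how to fix them, see the blog post "Installing ElasticSearch".

2. Distributed deployment: RPM installation

Preparing the environment

OS: CentOS 7.0, with the firewall and SELinux disabled.

Host 36 is the master node; hosts 37 and 38 are data nodes.

| Hostname and IP address | Installed software |
|---|---|
| server36: 192.168.50.136 | elasticsearch 6.4, kibana 6.4 |
| server37: 192.168.50.137 | elasticsearch 6.4, logstash 6.4 |
| server38: 192.168.50.138 | elasticsearch 6.4 |

Elasticsearch is written in Java, so Java must be installed on every node.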

[root@server36 ~]# java -version

openjdk version "1.8.0_181"

OpenJDK Runtime Environment (build 1.8.0_181-b13)

OpenJDK 64-Bit Server VM (build 25.181-b13, mixed mode)

Install on the master node:

[root@server36 ~]# rpm -ivh elasticsearch-6.4.0.rpm

[root@server36 ~]# rpm -ivh kibana-6.4.0-x86_64.rpm

Install on data node 1:

[root@server6 ~]# rpm -ivh elasticsearch-6.4.0.rpm

[root@server6 ~]# rpm -ivh logstash-6.4.0.rpm

Install on data node 2:

[root@server38 ~]# rpm -ivh elasticsearch-6.4.0.rpm

Elasticsearch configuration files:

[root@server36 ~]# ll /etc/elasticsearch/

total 28

-rw-rw----. 1 root elasticsearch 207 Sep 26 16:10 elasticsearch.keystore

-rw-rw----. 1 root elasticsearch 3009 Aug 18 07:23 jvm.options

-rw-rw----. 1 root elasticsearch 6380 Aug 18 07:23 log4j2.properties

Edit the configuration file on the master node:

[root@server36 ~]# vim /etc/elasticsearch/elasticsearch.yml

Cluster name:

cluster.name: my-ELK

Node name and roles:

node.name: master

node.master: true

node.data: false

Data directory:

path.data: /var/lib/elasticsearch

Log directory:

path.logs: /var/log/elasticsearch

Whether to lock the process memory (left commented out here):

#bootstrap.memory_lock: true

IP address the node listens on after startup:

network.host: 192.168.50.136

Default HTTP port:

http.port: 9200

Hosts used to discover the cluster's nodes:

discovery.zen.ping.unicast.hosts: ["192.168.50.136", "192.168.50.137", "192.168.50.138"]

Copy the modified file to the other nodes with scp:

[root@server36 ~]# scp /etc/elasticsearch/elasticsearch.yml root@192.168.50.137:/etc/elasticsearch/

root@192.168.50.137's password:

elasticsearch.yml 100% 2923 1.6MB/s 00:00

[root@server36 ~]# scp /etc/elasticsearch/elasticsearch.yml root@192.168.50.138:/etc/elasticsearch/

root@192.168.50.138's password:

elasticsearch.yml 100% 2923 2.7MB/s 00:00

On data node 1, change the following:

Node name and roles:

node.name: data-node1

node.master: false

node.data: true

network.host: 192.168.50.137

On data node 2, change the following:

Node name and roles:

node.name: data-node2

node.master: false

node.data: true

network.host: 192.168.50.138
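
Since the three files differ only in the node name, roles, and listen address, they could also be generated from one template instead of being copied and edited by hand. The Python sketch below is one possible way to do that. The `NODES` list and the `render` function are hypothetical; the setting names and values are the ones used in this walkthrough.

```python
CLUSTER = "my-ELK"
SEEDS = ["192.168.50.136", "192.168.50.137", "192.168.50.138"]

# One entry per node; only these four values differ between the files.
NODES = [
    {"name": "master", "ip": "192.168.50.136", "master": True, "data": False},
    {"name": "data-node1", "ip": "192.168.50.137", "master": False, "data": True},
    {"name": "data-node2", "ip": "192.168.50.138", "master": False, "data": True},
]


def render(node):
    """Return the elasticsearch.yml text for one node."""
    hosts = ", ".join('"{}"'.format(ip) for ip in SEEDS)
    lines = [
        "cluster.name: {}".format(CLUSTER),
        "node.name: {}".format(node["name"]),
        "node.master: {}".format(str(node["master"]).lower()),
        "node.data: {}".format(str(node["data"]).lower()),
        "path.data: /var/lib/elasticsearch",
        "path.logs: /var/log/elasticsearch",
        "network.host: {}".format(node["ip"]),
        "http.port: 9200",
        "discovery.zen.ping.unicast.hosts: [{}]".format(hosts),
    ]
    return "\n".join(lines) + "\n"


for node in NODES:
    print("# --- {} ---".format(node["name"]))
    print(render(node))
```

Generating the files keeps the shared settings, such as the cluster name and the discovery host list, identical on every node, which is what allows the nodes to find each other.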

Start the cluster nodes, beginning with the master node:

[root@server36 ~]# systemctl start elasticsearch.service

[root@server36 ~]# systemctl status elasticsearch.service # check the status

[root@server36 ~]# systemctl enable elasticsearch.service

Then start the data nodes one by one:

[root@server37 ~]# systemctl start elasticsearch.service

[root@server37 ~]# systemctl status elasticsearch.service

[root@server37 ~]# systemctl enable elasticsearch.service

[root@server38 ~]# systemctl start elasticsearch.service

[root@server38 ~]# systemctl status elasticsearch.service

[root@server38 ~]# systemctl enable elasticsearch.service

If the service does not start, check the logs:

[root@server36 ~]# tail -f /var/log/messages

[root@server36 ~]# ls /var/log/elasticsearch/

gc.log.0.current my-ELK_deprecation.log my-ELK_index_search_slowlog.log

my-ELK_access.log my-ELK_index_indexing_slowlog.log my-ELK.log

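Check that the service ports are listening. Port 9300 is used for communication between cluster nodes (the transport layer), and port 9200 serves HTTP requests from clients.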

[root@server36 ~]# netstat -lnpt | grep java

tcp6 0 0 :::9200 :::* LISTEN 9550/java

tcp6 0 0 :::9300 :::* LISTEN 9550/java

[root@server36 ~]# ps aux | grep elasticsearch

elastic+ 9550 2.1 64.8 3240592 647164 ? Ssl 17:32 0:25 /bin/java -Xms1g -Xmx1g -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -XX:+AlwaysPreTouch -Xss1m -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djna.nosys=true -XX:-OmitStackTraceInFastThrow -Dio.netty.noUnsafe=true -Dio.netty.noKeySetOptimization=true -Dio.netty.recycler.maxCapacityPerThread=0 -Dlog4j.shutdownHookEnabled=false -Dlog4j2.disable.jmx=true -Djava.io.tmpdir=/tmp/elasticsearch.vVmOUXxj -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/var/lib/elasticsearch -XX:ErrorFile=/var/log/elasticsearch/hs_err_pid%p.log -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintTenuringDistribution -XX:+PrintGCApplicationStoppedTime -Xloggc:/var/log/elasticsearch/gc.log -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=32 -XX:GCLogFileSize=64m -Des.path.home=/usr/share/elasticsearch -Des.path.conf=/etc/elasticsearch -Des.distribution.flavor=default -Des.distribution.type=rpm -cp /usr/share/elasticsearch/lib/* org.elasticsearch.bootstrap.Elasticsearch -p /var/run/elasticsearch/elasticsearch.pid --quiet

elastic+ 9598 0.0 0.0 72136 704 ? Sl 17:32 0:00 /usr/share/elasticsearch/modules/x-pack-ml/platform/linux-x86_64/bin/controller

root 9701 0.0 0.0 112704 976 pts/0 R+ 17:53 0:00 grep --color=auto elasticsearch

The services and ports on the other nodes are also fine.

Check cluster health

[root@server36 ~]# curl -XGET 'http://192.168.50.136:9200/_cluster/health?pretty'

{

"cluster_name" : "ELK",

"status" : "green", # green means the cluster is healthy

"timed_out" : false,

"number_of_nodes" : 3, # three nodes in total

"number_of_data_nodes" : 2, # two data nodes

"active_primary_shards" : 0,

"active_shards" : 0,

"relocating_shards" : 0,

"initializing_shards" : 0,

"unassigned_shards" : 0,

"delayed_unassigned_shards" : 0,

"number_of_pending_tasks" : 0,

"number_of_in_flight_fetch" : 0,

"task_max_waiting_in_queue_millis" : 0,

"active_shards_percent_as_number" : 100.0

}
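
The same health check can be scripted. The Python sketch below fetches `/_cluster/health` with the standard library and turns the response into a list of problems. The function names are hypothetical, and `fetch_health` needs a reachable cluster, so the demonstration at the bottom uses a hand-written sample dictionary with the field names from the output above.

```python
import json
from urllib.request import urlopen


def fetch_health(base_url):
    """GET /_cluster/health and decode the JSON body (needs a reachable cluster)."""
    with urlopen(base_url + "/_cluster/health", timeout=5) as resp:
        return json.load(resp)


def health_problems(health, expected_nodes):
    """Return a list of human-readable problems; an empty list means healthy."""
    problems = []
    if health["status"] != "green":
        problems.append("status is " + health["status"])
    if health["number_of_nodes"] != expected_nodes:
        problems.append("expected {} nodes, found {}".format(
            expected_nodes, health["number_of_nodes"]))
    if health["unassigned_shards"] > 0:
        problems.append("{} unassigned shards".format(health["unassigned_shards"]))
    return problems


# Sample values taken from the response shown above.
sample = {"status": "green", "number_of_nodes": 3, "unassigned_shards": 0}
print(health_problems(sample, expected_nodes=3))
```

Against the cluster in this walkthrough, one would call `health_problems(fetch_health("http://192.168.50.136:9200"), 3)`.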

Check the nodes in the cluster

[root@server36 ~]# curl -XGET 'http://192.168.50.136:9200/_cat/nodes'

192.168.50.136 10 93 6 0.88 0.58 0.25 mi * master (the asterisk marks the elected master)

192.168.50.138 12 92 6 0.04 0.14 0.07 di - data-node2

192.168.50.137 10 90 7 0.23 0.25 0.11 di - data-node1
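
The `_cat/nodes` output is plain whitespace-separated text, so it is easy to post-process. The Python sketch below assumes the default column order for this Elasticsearch version (ip, heap.percent, ram.percent, cpu, the three load averages, node.role, master, name), which matches the sample above; the helper names are hypothetical.

```python
# Assumed default column order of GET /_cat/nodes, matching the output above.
COLUMNS = ["ip", "heap.percent", "ram.percent", "cpu", "load_1m",
           "load_5m", "load_15m", "node.role", "master", "name"]


def parse_cat_nodes(text):
    """Turn each output line into a dict keyed by column name."""
    rows = []
    for line in text.strip().splitlines():
        fields = line.split()
        if len(fields) < len(COLUMNS):
            continue  # skip blank or truncated lines
        rows.append(dict(zip(COLUMNS, fields)))
    return rows


def elected_master(rows):
    """Return the name of the node marked with '*' in the master column."""
    for row in rows:
        if row["master"] == "*":
            return row["name"]
    return None


sample = """
192.168.50.136 10 93 6 0.88 0.58 0.25 mi * master
192.168.50.138 12 92 6 0.04 0.14 0.07 di - data-node2
192.168.50.137 10 90 7 0.23 0.25 0.11 di - data-node1
"""
rows = parse_cat_nodes(sample)
print(elected_master(rows))
print([row["name"] for row in rows if "d" in row["node.role"]])
```

In the role column, `m` marks a master-eligible node, `d` a data node, and `i` an ingest node, which is why the master shows `mi` and the data nodes show `di`.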

Check the detailed cluster state

[root@server36 ~]# curl -XGET 'http://192.168.50.136:9200/_cluster/state/nodes?pretty'

{

"cluster_name" : "ELK",

"compressed_size_in_bytes" : 9581,

"cluster_uuid" : "5ngUJ2q-S7WfQFaHAVvDhA",

"nodes" : {

"qA-0RcdDQy2vOOXK-64Zxw" : {

"name" : "master",

"ephemeral_id" : "a0I-6I2dRKOX935IlN95tQ",

"transport_address" : "192.168.50.136:9300",

"attributes" : {

"ml.machine_memory" : "1021906944",

"xpack.installed" : "true",

"ml.max_open_jobs" : "20",

"ml.enabled" : "true"

}

},

"VOfo7P6WQHWxx118ryjDJQ" : {

"name" : "data-node2",

"ephemeral_id" : "1Va2eWFKQgK042Dg7WjKeQ",

"transport_address" : "192.168.50.138:9300",

"attributes" : {

"ml.machine_memory" : "1021906944",

"ml.max_open_jobs" : "20",

"xpack.installed" : "true",

"ml.enabled" : "true"

}

},

"Si8PXyDARyGoGTcIxqKwNQ" : {

"name" : "data-node1",

"ephemeral_id" : "NXTrnH-mR_KCk7Yii0hn3w",

"transport_address" : "192.168.50.137:9300",

"attributes" : {

"ml.machine_memory" : "1910050816",

"ml.max_open_jobs" : "20",

"xpack.installed" : "true",

"ml.enabled" : "true"

}

}

}

}

The cluster is now up and running.

Installing Kibana

[root@server36 ~]# rpm -ivh kibana-6.4.0-x86_64.rpm

Edit the configuration file

[root@server36 ~]# vim /etc/kibana/kibana.yml

#server.port: 5601 # listening port (default)

server.host: "192.168.50.136" # IP address of the server running Kibana

# The URL of the Elasticsearch instance to use for all your queries.

elasticsearch.url: "http://192.168.50.136:9200" # Elasticsearch address to connect to

logging.dest: /var/log/kibana.log # path of the Kibana log file

Create the log file

[root@server36 ~]# touch /var/log/kibana.log

[root@server36 ~]# chmod 777 /var/log/kibana.log

[root@server36 ~]# ll /var/log/kibana.log

-rwxrwxrwx. 1 root root 0 Sep 27 17:14 /var/log/kibana.log

Start Kibana (for example, with systemctl start kibana) and check the listening port

[root@server36 ~]# netstat -lnpt | grep :5601

tcp 0 0 192.168.50.136:5601 0.0.0.0:* LISTEN 1308/node

Installing Logstash

[root@server6 ~]# rpm -ivh logstash-6.4.0.rpm

Do not start the service right after installation. First configure Logstash to collect syslog messages:

[root@server6 ~]# vim /etc/logstash/conf.d/syslog.conf

input {

syslog {

type => "system-syslog"

port => 10514

}

}

output {

stdout {

codec => rubydebug

}

}

Check the configuration file for errors

[root@server6 bin]# ./logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/syslog.conf --config.test_and_exit

OpenJDK 64-Bit Server VM warning: If the number of processors is expected to increase from one, then you should configure the number of parallel GC threads appropriately using -XX:ParallelGCThreads=N

Sending Logstash logs to /var/log/logstash which is now configured via log4j2.properties

[2018-09-27T17:33:41,534][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

Configuration OK # the configuration file is valid

[2018-09-27T17:34:27,275][INFO ][logstash.runner ] Using config.test_and_exit mode. Config Validation Result: OK. Exiting Logstash

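--path.settings specifies the directory that holds Logstash's settings files

-f specifies the path of the pipeline configuration file to check

--config.test_and_exit tells Logstash to exit once the check is done

Configure rsyslog to forward logs to the Logstash server's IP address and listening port

[root@server37 bin]# vim /etc/rsyslog.conf

# remote host is: name/ip:port, e.g. 192.168.0.1:514, port optional

*.* @@192.168.50.137:10514

Apply the change

[root@server6 bin]# systemctl restart rsyslog

Start Logstash with the configuration file specified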

[root@server37 ~]# cd /usr/share/logstash/bin/

[root@server37 bin]# ./logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/syslog.conf

OpenJDK 64-Bit Server VM warning: If the number of processors is expected to increase from one, then you should configure the number of parallel GC threads appropriately using -XX:ParallelGCThreads=N

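# The terminal stays here, because the configuration file sends the output to the current terminal

Open a new window and check port 10514

[root@server37 ~]# netstat -lntp |grep 10514

tcp6 0 0 :::10514 :::* LISTEN 1572/java

Check whether log events are printed: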

[root@server37 ~]# cd /usr/share/logstash/bin/

[root@server37 bin]# ./logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/syslog.conf

Sending Logstash logs to /var/log/logstash which is now configured via log4j2.properties

{

"facility" => 10,

"program" => "sshd",

"type" => "system-syslog",

"logsource" => "server6",

"priority" => 86,

"pid" => "1615",

"@timestamp" => 2018-09-27T09:46:11.000Z,

"facility_label" => "security/authorization",

"severity" => 6,

"severity_label" => "Informational",

"timestamp" => "Sep 27 17:46:11",

"message" => "Accepted password for root from 192.168.50.1 port 54705 ssh2\n",

"host" => "192.168.50.137",

"@version" => "1"

}

{

"facility" => 5,

"program" => "rsyslogd",

"type" => "system-syslog",

"logsource" => "server6",

"priority" => 46,

"@timestamp" => 2018-09-27T09:46:11.000Z,

"facility_label" => "syslogd",

"severity" => 6,

"severity_label" => "Informational",

"timestamp" => "Sep 27 17:46:11",

"message" => "action 'action 7' resumed (module 'builtin:omfwd') [v8.24.0 try http://www.rsyslog.com/e/2359 ]\n",

"host" => "192.168.50.137",

"@version" => "1"

}

{

"facility" => 5,

"program" => "rsyslogd",

"type" => "system-syslog",

"logsource" => "server6",

"priority" => 46,

"@timestamp" => 2018-09-27T09:46:11.000Z,

"facility_label" => "syslogd",

"severity" => 6,

"severity_label" => "Informational",

"timestamp" => "Sep 27 17:46:11",

"message" => "action 'action 7' resumed (module 'builtin:omfwd') [v8.24.0 try http://www.rsyslog.com/e/2359 ]\n",

"host" => "192.168.50.137",

"@version" => "1"

}

{

"facility" => 3,

"program" => "systemd",

"type" => "system-syslog",

"logsource" => "server6",

"priority" => 30,

"@timestamp" => 2018-09-27T09:46:12.000Z,

"facility_label" => "system",

"severity" => 6,

"severity_label" => "Informational",

"timestamp" => "Sep 27 17:46:12",

"message" => "Started Session 3 of user root.\n",

"host" => "192.168.50.137",

"@version" => "1"

}

{

"facility" => 10,

"program" => "sshd",

"type" => "system-syslog",

"logsource" => "server6",

"priority" => 86,

"pid" => "1615",

"@timestamp" => 2018-09-27T09:46:12.000Z,

"facility_label" => "security/authorization",

"severity" => 6,

"severity_label" => "Informational",

"timestamp" => "Sep 27 17:46:12",

"message" => "pam_unix(sshd:session): session opened for user root by (uid=0)\n",

"host" => "192.168.50.137",

"@version" => "1"

}

{

"facility" => 4,

"program" => "systemd-logind",

"type" => "system-syslog",

"logsource" => "server6",

"priority" => 38,

"@timestamp" => 2018-09-27T09:46:12.000Z,

"facility_label" => "security/authorization",

"severity" => 6,

"severity_label" => "Informational",

"timestamp" => "Sep 27 17:46:12",

"message" => "New session 3 of user root.\n",

"host" => "192.168.50.137",

"@version" => "1"

}

{

"facility" => 3,

"program" => "systemd",

"type" => "system-syslog",

"logsource" => "server6",

"priority" => 30,

"@timestamp" => 2018-09-27T09:46:12.000Z,

"facility_label" => "system",

"severity" => 6,

"severity_label" => "Informational",

"timestamp" => "Sep 27 17:46:12",

"message" => "Starting Session 3 of user root.\n",

"host" => "192.168.50.137",

"@version" => "1"

}
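
In the events above, the `priority` field is related to `facility` and `severity` by the standard syslog formula, priority = facility * 8 + severity. The short Python sketch below checks this against the values printed by Logstash; the function names are chosen for illustration.

```python
def decode_priority(priority):
    """Split a syslog priority value into (facility, severity)."""
    return divmod(priority, 8)


def encode_priority(facility, severity):
    """Combine facility and severity into a syslog priority value."""
    return facility * 8 + severity


# Priority values taken from the events shown above.
for priority in (86, 46, 30, 38):
    facility, severity = decode_priority(priority)
    print(priority, "-> facility", facility, "severity", severity)
```

For example, the sshd event has priority 86, which decodes to facility 10 (security/authorization) and severity 6 (Informational), matching its `facility` and `severity` fields.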

The test succeeded. Now edit the configuration file so that the logs are sent to the Elasticsearch server:

[root@server37 ~]# vim /etc/logstash/conf.d/syslog.conf

input {

syslog {

type => "system-syslog"

port => 10514

}

}

output {

elasticsearch {

hosts => ["192.168.50.136:9200"] # send output to the cluster node that also runs Kibana

index => "system-syslog-%{+YYYY.MM}" # index name, one index per month

}

}
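
The index name is built from the event timestamp, so each month's events land in their own index; in Logstash the date part is written as `%{+YYYY.MM}` and is evaluated against the event's `@timestamp`, which is kept in UTC. The Python sketch below reproduces that naming rule to show what to expect. The `monthly_index` function is a hypothetical helper, not part of Logstash.

```python
from datetime import datetime, timedelta, timezone


def monthly_index(prefix, timestamp):
    """Return '<prefix>-YYYY.MM' using the event time converted to UTC."""
    utc_time = timestamp.astimezone(timezone.utc)
    return "{}-{}".format(prefix, utc_time.strftime("%Y.%m"))


# Timestamp of one of the sample events shown earlier.
event_time = datetime(2018, 9, 27, 9, 46, 11, tzinfo=timezone.utc)
print(monthly_index("system-syslog", event_time))

# An event shortly after local midnight on 1 October (UTC+8) is still
# September in UTC, so it goes into the September index.
late_event = datetime(2018, 10, 1, 0, 30, tzinfo=timezone(timedelta(hours=8)))
print(monthly_index("system-syslog", late_event))
```

Monthly indices keep the number of indices small; a daily pattern would create more, smaller indices that are easier to expire individually.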

Check the configuration file for errors

[root@server37 ~]# cd /usr/share/logstash/bin/

[root@server37 bin]# ./logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/syslog.conf --config.test_and_exit

OpenJDK 64-Bit Server VM warning: If the number of processors is expected to increase from one, then you should configure the number of parallel GC threads appropriately using -XX:ParallelGCThreads=N

Sending Logstash logs to /var/log/logstash which is now configured via log4j2.properties

[2018-09-27T19:04:42,260][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

Configuration OK

[2018-09-27T19:04:44,912][INFO ][logstash.runner ] Using config.test_and_exit mode. Config Validation Result: OK. Exiting Logstash

Everything is OK. Start the Logstash service, then check the process and the listening ports:

[root@server37 ~]# systemctl start logstash

[root@server37 ~]# ps aux | grep logstash

logstash 1877 9.7 7.9 3150576 148516 ? SNsl 19:09 0:01 /bin/java -Xms1g -Xmx1g -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djruby.compile.invokedynamic=true -Djruby.jit.threshold=0 -XX:+HeapDumpOnOutOfMemoryError -Djava.security.egd=file:/dev/urandom -cp /usr/share/logstash/logstash-core/lib/jars/animal-sniffer-annotations-1.14.jar:/usr/share/logstash/logstash-core/lib/jars/commons-codec-1.11.jar:/usr/share/logstash/logstash-core/lib/jars/commons-compiler-3.0.8.jar:/usr/share/logstash/logstash-core/lib/jars/error_prone_annotations-2.0.18.jar:/usr/share/logstash/logstash-core/lib/jars/google-java-format-1.1.jar:/usr/share/logstash/logstash-core/lib/jars/gradle-license-report-0.7.1.jar:/usr/share/logstash/logstash-core/lib/jars/guava-22.0.jar:/usr/share/logstash/logstash-core/lib/jars/j2objc-annotations-1.1.jar:/usr/share/logstash/logstash-core/lib/jars/jackson-annotations-2.9.5.jar:/usr/share/logstash/logstash-core/lib/jars/jackson-core-2.9.5.jar:/usr/share/logstash/logstash-core/lib/jars/jackson-databind-2.9.5.jar:/usr/share/logstash/logstash-core/lib/jars/jackson-dataformat-cbor-2.9.5.jar:/usr/share/logstash/logstash-core/lib/jars/janino-3.0.8.jar:/usr/share/logstash/logstash-core/lib/jars/jruby-complete-9.1.13.0.jar:/usr/share/logstash/logstash-core/lib/jars/jsr305-1.3.9.jar:/usr/share/logstash/logstash-core/lib/jars/log4j-api-2.9.1.jar:/usr/share/logstash/logstash-core/lib/jars/log4j-core-2.9.1.jar:/usr/share/logstash/logstash-core/lib/jars/log4j-slf4j-impl-2.9.1.jar:/usr/share/logstash/logstash-core/lib/jars/logstash-core.jar:/usr/share/logstash/logstash-core/lib/jars/org.eclipse.core.commands-3.6.0.jar:/usr/share/logstash/logstash-core/lib/jars/org.eclipse.core.contenttype-3.4.100.jar:/usr/share/logstash/logstash-core/lib/jars/org.eclipse.core.expressions-3.4.300.jar:/usr/share/logstash/logstash-core/lib/jars/org.eclipse.core.filesystem-1.3.100.
jar:/usr/share/logstash/logstash-core/lib/jars/org.eclipse.core.jobs-3.5.100.jar:/usr/share/logstash/logstash-core/lib/jars/org.eclipse.core.resources-3.7.100.jar:/usr/share/logstash/logstash-core/lib/jars/org.eclipse.core.runtime-3.7.0.jar:/usr/share/logstash/logstash-core/lib/jars/org.eclipse.equinox.app-1.3.100.jar:/usr/share/logstash/logstash-core/lib/jars/org.eclipse.equinox.common-3.6.0.jar:/usr/share/logstash/logstash-core/lib/jars/org.eclipse.equinox.preferences-3.4.1.jar:/usr/share/logstash/logstash-core/lib/jars/org.eclipse.equinox.registry-3.5.101.jar:/usr/share/logstash/logstash-core/lib/jars/org.eclipse.jdt.core-3.10.0.jar:/usr/share/logstash/logstash-core/lib/jars/org.eclipse.osgi-3.7.1.jar:/usr/share/logstash/logstash-core/lib/jars/org.eclipse.text-3.5.101.jar:/usr/share/logstash/logstash-core/lib/jars/slf4j-api-1.7.25.jar org.logstash.Logstash --path.settings /etc/logstash

root 1903 0.0 0.0 112704 972 pts/0 R+ 19:10 0:00 grep --color=auto logstash

[root@server37 ~]# netstat -lnpt |grep :9600

tcp6 0 0 127.0.0.1:9600 :::* LISTEN 1877/java

[root@server37 ~]# netstat -lnpt |grep :10514

tcp6 0 0 :::10514 :::* LISTEN 1877/java

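If the Logstash process is running after startup but ports 9600 and 10514 are not being listened on, see this blog post for a fix: http://blog.51cto.com/zero01/2082794

Here port 9600 is bound to the loopback address 127.0.0.1, which cannot be reached from other hosts. Change the configuration file:

[root@server37 ~]# vim /etc/logstash/logstash.yml

http.host: "192.168.50.137"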

[root@server37 ~]# systemctl restart logstash

[root@server37 ~]# netstat -lnpt |grep 9600

tcp6 0 0 192.168.50.137:9600 :::* LISTEN 1965/java

Back on the Kibana server, list the indices to check for the log index:

[root@server36 ~]# curl '192.168.50.136:9200/_cat/indices?v'

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

green open system-syslog-2018.09 DInusYjkTS2PL5yur-DQwg 5 1 1 0 24.4kb 12.2kb

The index is present, so Logstash and the Elasticsearch cluster are communicating correctly.

Get detailed information about a specific index

[root@server36 ~]# curl -XCET '192.168.50.136:9200/system-syslog-2018.09?pretty'

{

"error" : {

"root_cause" : [

{

"type" : "illegal_argument_exception",

"reason" : "Unexpected http method: CET"

}

],

"type" : "illegal_argument_exception",

"reason" : "Unexpected http method: CET"

},

"status" : 400

}

The request above fails only because the HTTP method was mistyped as CET. The same request with GET works:

[root@server36 ~]# curl -XGET '192.168.50.136:9200/system-syslog-2018.09?pretty'

{

"system-syslog-2018.09" : {

"aliases" : { },

"mappings" : {

"doc" : {

"properties" : {

"@timestamp" : {

"type" : "date"

},

"@version" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"facility" : {

"type" : "long"

},

"facility_label" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"host" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"logsource" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"message" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"pid" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"priority" : {

"type" : "long"

},

"program" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"severity" : {

"type" : "long"

},

"severity_label" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"timestamp" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"type" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

}

}

}

},

"settings" : {

"index" : {

"creation_date" : "1538048829786",

"number_of_shards" : "5",

"number_of_replicas" : "1",

"uuid" : "DInusYjkTS2PL5yur-DQwg",

"version" : {

"created" : "6040099"

},

"provided_name" : "system-syslog-2018.09"

}

}

}

}

Command to delete an index

curl -XDELETE '192.168.50.136:9200/system-syslog-2018.09'

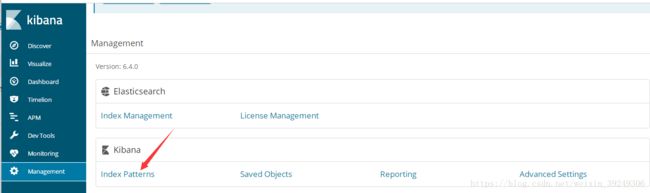

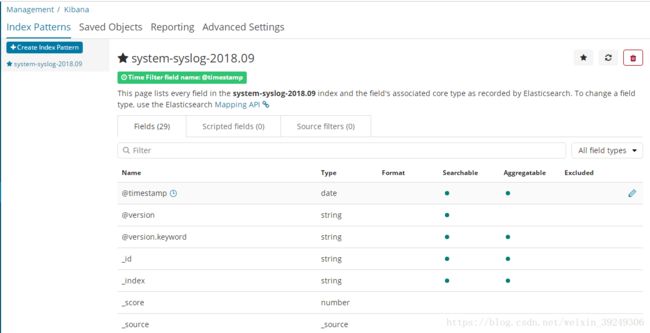

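Configuring the index pattern in the Kibana web interface

Open the Kibana web interface, select Management, and click Index Patterns to start creating an index pattern.

Enter the name of the index that was created and click Next step.

Select @timestamp from the drop-down list and click Create index pattern.

The page after creation completes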

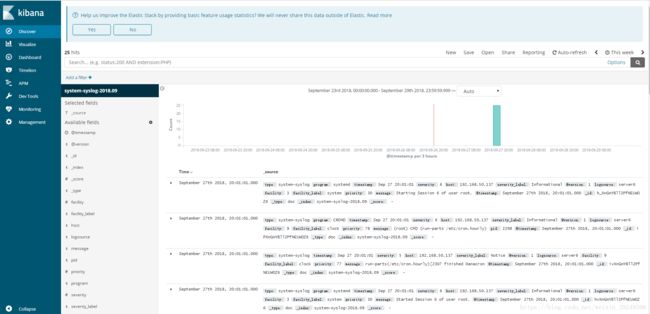

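Viewing the index data as a chart

Click Discover. If the page looks like the figure below, there are no logs within the currently selected time range (Kibana 6.x defaults to the last 15 minutes).

Click the time picker in the upper-right corner and choose a suitable time range until the chart appears.

Here I chose the last week.

If still no data appears, see this blog post for a fix: http://blog.51cto.com/zero01/2082794

Or see the ELKstack Chinese guide: https://elkguide.elasticsearch.cn/

The log data shown is the content of /var/log/messages. This completes the setup: Logstash collects the system logs and sends them to the Elasticsearch server, and Kibana displays them.

Kibana user guide: https://www.elastic.co/guide/cn/kibana/current/index.html

Checking Kibana's status:

Open 192.168.50.136:5601/status to view the server status page, which shows the server's resource usage and the list of installed plugins.

Collecting nginx logs with Logstash: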